Abstract

Personal tracking technologies allow sensing of the physical activity carried out by people. The data flows collected with these sensors call for big data techniques to support data collection, integration and analysis, aimed at providing personalized support when learning motor skills through varied multisensorial feedback. In particular, this paper focuses on vibrotactile feedback as it can take advantage of the haptic sense when supporting the physical interaction to be learnt. Although each user has different needs, personalization issues are hardly taken into account when providing this vibrotactile support; instead, the same response is delivered to each and every user of the system. The challenge here is how to design vibrotactile user interfaces for adaptive learning of motor skills. The TORMES methodology is proposed to facilitate the elicitation of this personalized support. The resulting systems are expected to dynamically adapt to each individual user’s needs by monitoring, comparing and, when appropriate, correcting in a personalized way how the user should move when practicing a predefined movement, for instance, when performing a sport technique or playing a musical instrument.

1. Introduction

Society is becoming more and more virtual due to the online capabilities provided by current information and communication technologies in varied ways and flavors (e.g., learning systems, social networks, banking, shopping, entertainment, etc.). Benefits include removing temporal and spatial barriers, but usually entail physical inactivity.

Nevertheless, technology can foster physicality in virtual reality scenarios, providing a feeling of immersion, presence, engagement and so on. For instance, the field of exergames, which entice users to learn motions (e.g., dance moves) or to exercise through gamification techniques, has massively expanded in recent years, supported by commercial products such as Nintendo Wii, PlayStation Move or Xbox Kinect. These systems rely on motion sensing technology that tracks physical reactions using inertial sensors (e.g., gyroscopes and accelerometers) in a handheld controller (when available) to detect movement in three dimensions, and/or optical sensing technology that can be used to sense the controller position or to directly track the gestures made by the user’s body.

While the use of these products for exergaming can train the execution of predefined movements and is helpful for people not engaged in physical activity during their leisure time, it does not seem sufficient for achieving moderate intensity health exercise [1]. Nonetheless, these technological advances in the entertainment domain have provided fertile ground to develop applications for other domains, such as physical therapy learning systems [2]. They have also been suggested to support motor skills learning [3]. This paper discusses the need for personalizing the learning of motor skills and the value of vibrotactile feedback to provide this support.

2. Background

The training process for motor skills acquisition can follow the learning-by-doing approach [4]. This active interaction between action and perception within the user environment can generate the so-called enactive knowledge that is acquired by doing [5] and is stored in the form of motor responses [6]. Enactive interfaces support the transmission of knowledge obtained through action and can provide feedback to the user through diverse senses, such as when playing a musical instrument [7]. In fact, and in addition to playing a musical instrument, there are many human activities that involve enactive knowledge and require physical practice to be learnt, such as dancing, handwriting, hand drawing, training for surgery, improving the technique in sports and martial arts, learning sign language, etc. In order to perform these activities with mastery, the person needs to practice by repeating the movements involved over and over until the appropriate way to carry them out is learnt [8], that is, she needs to train her motor skills (i.e., conscious body movements involving muscular activity).

Thus, there is a need to guide and correct the user’s movements till her ideal movement (i.e., the best way this person can perform the movement considering her particular physical features) is achieved. In fact, since each person has different goals, physical condition and motor skills, this training should be done in a personalized way, considering her own body build, corporal features and specific physical abilities, her current performance and the particularities of the motor skills to be learnt [9]. This means that each person should receive the advice and support that is most appropriate for her individual characteristics in her current context, which might be very different from the advice and support required by another person in a different context. In order to elicit appropriate psychomotor support, user data driven methodologies are needed. The TORMES methodology [10], which combines user centered design methods with data mining techniques, has been proposed to support the elicitation of the personalized support required when learning motor skills by guiding qualitative analysis on quantitative indicators. This adapted response needs to be triggered to the user through a given interface. The system should be able to select the most appropriate feedback modality (i.e., visual, auditory and haptic, or a combination of them) and their delivery should be personalized to the learner’s situation (e.g., attention-demanding phase, cognitive overload…) since there is not a unique best way to provide the sensorial feedback in motor learning [11].

In any case, haptic feedback is what distinguishes these systems from non-motor skills training systems, as it can be specifically applied when physical movements are involved in the learning process [12]. This kind of feedback can be classified into [13] (1) tactile stimulations (i.e., electrotactile, thermal and mechanical); (2) kinesthetic (including exoskeleton force-feedback systems); and (3) vibrotactile (i.e., vibrating components that deliver information through temporal parameters in the signal such as frequency, amplitude, waveform and duration). In fact, vibrotactile feedback is very useful in motor skills training to help learners avoid motion errors as it provides more subtle support [13].

Thus, this paper explores how vibrotactile feedback is to be provided in motion learning systems in terms of how the movement is monitored, compared and corrected. Findings discuss the lack of personalization support provided in the systems analyzed, and point to the application of the TORMES methodology to identify personalization opportunities when designing vibrotactile interfaces for interactive intelligent systems that can provide adaptive learning of motor skills.

3. Vibrotactile Feedback in Motor Skills Training Systems

Vibrotactile feedback can be provided at key moments through diverse tactile actuators (i.e., tactors) that can apply resistance, force, vibration, etc. By adjusting the density and frequency of the tactors, realistic surface sensations such as roughness, bumpiness, stickiness and so on can be created [14]. The goal can be either consolidating or correcting a movement. For instance, if the user has performed the movement accurately, she can receive a soft vibration as a reward; in turn, if she performs the movement incorrectly, the system can produce some force so that the user perceives resistance to that movement and thus has to try a different direction or way. Existing reviews show that vibrotactile devices are an appropriate means of providing immediate feedback for motor learning and augmentation [12]. By providing non-invasive stimulations to the surface of the skin, vibrotactile feedback does not hinder other tasks (e.g., speaking, seeing and hearing) and receives a high level of user acceptance [15] without the need to shift the visual attention [13].

In a previous work [9], seventeen systems that supported motor skills learning were reviewed. The six [16,17,18,19,20,21] that provide vibrotactile feedback are compiled in Table 1, which analyzes the information that is monitored (sensors used, processing approach, measures computed), how it is compared to detect errors (user, movement and context data used to diagnose errors) and what correction support (actuators used, feedback trigger and delivery) is provided. In addition, the table provides basic details of each system, such as the body part involved and the kind of activity carried out, and discusses the personalization issues considered in the system. The detail of the descriptions synthesized in Table 1 depends on the information available in the papers analyzed.

Table 1.

Details of the six motor skills training systems reported in [9] that deliver vibrotactile feedback.

The systems analyzed in Table 1 have been developed as a general solution (e.g., [16,20]) or to train motor skills in specific contexts, such as karate [17], tai-chi [18], snowboarding [19], and violin playing [21]. Most of the systems focus on a specific part of the body (arm and/or hands) as in [16,17,18,20]. The torso is also considered in [21]; [19] focuses on different parts across the body.

Regarding the monitoring, in most of the systems, movement was sensed with optical tracking techniques that detect markers placed on users’ body, using motion capture systems either with Vicon cameras [16,18] or infrared devices [17,20]. These techniques limit the data collection to the room where the tracking infrastructure is prepared. Nonetheless, there are two systems (by coincidence the same that consider several body parts) that collect movement data with wearable inertial sensing units worn directly on the body (ad hoc combination of sensors in [19] and a commercial inertial motion capture suit in [21]), without the need of any infrastructure in the room. In turn, the processing approach is carried out in most systems by the software provided by the motion capture system when used [16,17,20,21]. However, there are two systems that have implemented their own algorithms (a gesture recognition system with probabilistic neural networks and finite state machines in [18] and an empirical algorithm in [19]). Measures specified are either angles and positions [16,17,20] or forces [19].

The systems compare the current movement of the user with a reference, which usually is the movement carried out by another person with expertise in the performance of the movement [16,17,18,19,20]. Only [21] computes the ideal user movement and compares the performance against that reference. In any case, for the error diagnosis, systems carry out some movement modelling, with ad hoc algorithms that depend on the domain and kind of movement. User features are never modelled, although in the ideal individual path, [21] considers the user’s build, the way in which she is holding the violin and even the teacher’s pedagogy. The modelling of the context is only considered in [19].

Diverse vibration actuators are used to deliver corrections aimed at telling the user when she moves away from the desired position and/or movement trajectory (usually the signal is “on” until the movement is properly carried out) [16,17,20,21], or at indicating which part of the body should be moved [17,19]. Their number and placement depend on the type of movement to be corrected. The vibration signal can be binary [19,21], or can vary in amplitude and/or frequency [16,17,18,20]. In addition, [18] delivers multimodal feedback, complementing the tactile vibrations with a virtual reality graphical scenario and 3D sound feedback.
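The difference between a binary signal and one that varies in amplitude can be illustrated with a minimal sketch. The function names, the tolerance and saturation thresholds and the linear ramp are assumptions made only for illustration; they are not taken from the systems analyzed in Table 1.

```python
def binary_signal(error: float, tolerance: float) -> float:
    """Return 1.0 ("on") while the deviation exceeds the tolerance, else 0.0."""
    return 1.0 if abs(error) > tolerance else 0.0


def graded_signal(error: float, tolerance: float, saturation: float) -> float:
    """Return a vibration amplitude in [0, 1] that grows with the deviation.

    Hypothetical mapping: no vibration inside the tolerance margin, a linear
    ramp between tolerance and saturation, and full amplitude beyond it.
    """
    if saturation <= tolerance:
        raise ValueError("saturation must be greater than tolerance")
    magnitude = abs(error)
    if magnitude <= tolerance:
        return 0.0
    if magnitude >= saturation:
        return 1.0
    return (magnitude - tolerance) / (saturation - tolerance)
```

With a tolerance of 10 degrees and saturation at 30 degrees, a 20 degree deviation would trigger the binary signal at full strength but the graded signal at half amplitude, which is one way a tolerance margin could be customized per user.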

Although users’ features are not modelled, some personalization information is used when calibrating the system [16,18,19,21], dimensions are normalized before comparison [17,18], and tolerance margins can be customized [21]. In addition, [20] uses angle measures rather than absolute positions to be more tolerant of different users’ builds and body structures.
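Why angle measures are more tolerant of different body builds than absolute positions can be seen in a small sketch. The `joint_angle` helper and the marker coordinates are hypothetical and do not reproduce the computation in [20]; the point is only that scaling all marker positions by the same factor leaves the joint angle unchanged.

```python
import math


def joint_angle(a, b, c):
    """Angle in degrees at joint b, formed by the segments b->a and b->c."""
    u = [ai - bi for ai, bi in zip(a, b)]
    v = [ci - bi for ci, bi in zip(c, b)]
    norm_u = math.sqrt(sum(x * x for x in u))
    norm_v = math.sqrt(sum(x * x for x in v))
    if norm_u == 0 or norm_v == 0:
        raise ValueError("joint and neighbouring markers must not coincide")
    cosine = sum(x * y for x, y in zip(u, v)) / (norm_u * norm_v)
    # Clamp to guard against rounding slightly outside [-1, 1].
    return math.degrees(math.acos(max(-1.0, min(1.0, cosine))))


# Hypothetical shoulder, elbow and wrist markers (metres) for one user.
shoulder, elbow, wrist = (0.0, 0.0, 0.0), (0.3, 0.0, 0.0), (0.3, 0.25, 0.0)
# A user with limbs 20% longer holding the same posture.
taller = [tuple(1.2 * x for x in p) for p in (shoulder, elbow, wrist)]

angle_small = joint_angle(shoulder, elbow, wrist)
angle_tall = joint_angle(*taller)
```

Here the absolute wrist positions of the two users differ by several centimetres, while `angle_small` and `angle_tall` agree up to rounding.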

4. A Proposal for Personalized Vibrotactile Feedback to Support Motor Skills Learning

Systems for learning motor skills require that the physical actions carried out by the user when acquiring enactive knowledge are monitored, compared and, when needed, corrected [9]. Thus, there exists an interesting research path to develop personalized motion learning systems that provide vibrotactile feedback in the psychomotor learning domain (i.e., the learning domain dealing with motor skills learning). This domain is one of the three educational domains proposed by Bloom’s taxonomy of learning objectives [22], the other two being the cognitive and the affective domains. However, it has received little attention in the past, probably because the technological advances required to non-intrusively measure motion features with wearable devices had not been developed until recently.

Personalized motion learning systems pose several challenges for artificial intelligence engineers to design and develop the corresponding algorithms for the intelligent system, such as describing correct (expert) movements, diagnosing users’ movements, and deciding the feedback to provide to each specific user in each specific situation. Thus, there is an opportunity to explore how to take advantage of the information collected from users’ interactions to learn better ways of detecting human movements and personalizing the system’s response to help the user improve her physical performance. In particular, this research proposal addresses the challenge of how to design vibrotactile user interfaces for interactive intelligent systems that can support adaptive learning of motor skills.

Since data is collected from the human body, the vibrotactile support has to be provided along the so-called biocybernetic loop [23], which involves (1) data collection from various psychophysiological sensors; (2) real-time analysis of collected data; and (3) an adapted response triggered at the corresponding user interface. The first two (i.e., data collection and analysis) relate to monitoring the user’s actions, while the last one (i.e., delivering the adapted response), involves comparing and when appropriate correcting the user’s actions.
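The three stages of the loop can be sketched as follows. This is a minimal illustration under stated assumptions, not the formulation in [23]: the function names, the sample fields (`elbow_angle`, `heart_rate`), the reference angle and the 10 degree threshold are all hypothetical.

```python
from typing import Callable, Dict, Iterable, List, Optional

Sample = Dict[str, float]


def biocybernetic_loop(
    samples: Iterable[Sample],
    analyze: Callable[[Sample], Dict[str, float]],
    respond: Callable[[Dict[str, float]], Optional[str]],
) -> List[str]:
    """Run the three stages for every incoming sensor sample."""
    responses = []
    for sample in samples:                # (1) data collection
        indicators = analyze(sample)      # (2) real-time analysis
        response = respond(indicators)    # (3) adapted response, if any
        if response is not None:
            responses.append(response)
    return responses


REFERENCE_ELBOW_ANGLE = 90.0  # hypothetical reference value, in degrees


def analyze(sample: Sample) -> Dict[str, float]:
    """Monitoring: turn raw readings into indicators."""
    return {
        "angle_error": sample["elbow_angle"] - REFERENCE_ELBOW_ANGLE,
        "heart_rate": sample["heart_rate"],
    }


def respond(indicators: Dict[str, float]) -> Optional[str]:
    """Comparing and correcting: intervene only for large deviations."""
    return "vibrate" if abs(indicators["angle_error"]) > 10.0 else None


stream = [
    {"elbow_angle": 92.0, "heart_rate": 80.0},
    {"elbow_angle": 75.0, "heart_rate": 84.0},
    {"elbow_angle": 110.0, "heart_rate": 90.0},
]
triggered = biocybernetic_loop(stream, analyze, respond)
```

Passing the analysis and response steps as functions keeps monitoring separate from comparing and correcting, so either side could be replaced without changing the loop itself.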

Monitoring starts with collecting data from psychophysiological sensors. The optical sensing solutions and inertial tracking handheld controllers used in commercial exergame platforms as well as in the systems compiled in Table 1 are evolving (supported by the quantified-self movement) towards less intrusive and more precise and varied personal tracking solutions that allow monitoring of human activity from different perspectives (including but not restricted to movement) with wearable devices that integrate, among other kinds of sensors, inertial and physiological sensors on objects that people usually wear such as bracelets, watches, t-shirts, etc. [24].

These continuous data flows collected from varied wearable quantified-self devices arrive at great velocity and in high volumes, which calls for the application of big data processing techniques [25]. Such techniques can process in real time the physical measures compiled in the systems analyzed in Table 1, such as position, angles, force, etc., as well as other data of relevance, such as the physiological changes in the user. In fact, the concept of behavioral big data has been coined to refer to these very large and rich multidimensional datasets on human behaviors, actions and interactions, which have become available due to technological advances in measurement and storage and which pose technical challenges such as data access, analysis scalability, a quickly changing environment, etc. [26]. Data mining techniques aimed at discovering relevant patterns in datasets can be integrated into big data infrastructures, which raises additional open issues regarding distributed mining, time-evolving data and visualization, among others [27], at the data, model, or system levels [28]. In addition, progress in data analysis is moving the paradigm of big data to the concept of big service, where complex service ecosystems can speed up data processing, scale up with data volume, improve adaptability and extensibility despite data diversity and uncertainty, and turn raw, low-level data into actionable knowledge [29].

In order to deliver the adapted response, both comparing and correcting the user’s actions are required. Comparing requires the previous modelling of the user, the movement and the context. User modelling should include computing the relevant cognitive, affective and psychomotor issues from the user’s interaction, resulting in knowledge regarding the current user state and skill level, as well as the effect produced by the feedback on the user. No user modelling is reported in the systems analyzed in Table 1, but it is still a general demand when developing wearable personal systems that should adapt to users’ interaction [30]. In turn, movement modelling is reported in the analyzed systems as the result of training algorithms with the interactions carried out by expert users who can perform the movements with mastery. In addition, the context where the user is placed can also provide relevant information in order to deliver the vibrotactile support, as discussed, but not computed, in [19].

Once the modelling is done, the current performance is to be compared with the accurate one (i.e., learner versus expert interactions). Table 1 shows that ad hoc algorithms are implemented for error diagnosis, as they are closely related to the domain being trained and the particularities of the movements analyzed. This process is key to triggering the adapted vibrotactile response at the corresponding user interface. In the systems analyzed, vibrotactile support is usually limited to specific movements in certain body parts, and the user is either informed when moving away from the desired performance or subtly guided on how to move the corresponding body part. This information is transmitted by modifying the amplitude or frequency of the signal. However, except for [19], which carried out interviews with instructors to understand the errors made by users, and [21], which contacted professionals to select the locations where to put the tactors, no other efforts to design the support to be provided were reported in the papers analyzed. Furthermore, the need for personalizing this support is not even raised, with the exception of the attempt in [21] to define the users’ ideal movement. The personalization information that has been reported relates to considering users’ variations when monitoring and modelling (e.g., calibrating to build the user skeletal model [16,18,21], comparing normalized versions of movements [17,18], and using variables that do not depend much on users’ differences [20]).

However, as discussed in [9], the correcting action should take into account, among others, the specific user’s features (goal, physical condition, motor skills achieved…), the type of movement and the current context of the user, so that the appropriate psycho-educational support is delivered to the learner when needed (including cognitive, affective and psychomotor issues). As a result, even if two users make the same error, they may not receive the same response from the system because they may have different needs, goals, moods, etc. For instance, when the user is a novice, it might be better to be more supportive at first, and then decrease the support provided [21]. Nevertheless, it may also depend on the affective state [31]. If the user gets frustrated, the system may be more supportive so the user finds it easier to carry out the movement, while if she is bored, it may change the movement being practiced. In addition, guiding the learner by delivering haptic guidance when the movement performed does not reflect the reference movement might not be the most appropriate psycho-educational approach to achieve long-term learning, although it might help to increase motivation by contributing to short-term performance [8]. Delivering the information through different sensorial channels (as done in [18]) might also be appropriate in some scenarios, but not in others where the other channels are already used (as in [21]). A multisensorial support that can personalize the feedback in terms of light, sound, and/or vibration has been proposed in the AICARP approach to deal with stressful situations that are monitored with physiological sensors [32]. In addition, if a stronger haptic support is to be provided, forced guidance using exoskeletons might be considered, as proposed in the tangibREC approach [33].
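The idea that the same error may call for different responses can be sketched with a simple rule set. The labels, the tolerance value and the rules themselves are assumptions chosen to mirror the examples in the paragraph above; in practice such rules would have to be elicited with users rather than hard-coded.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class LearnerState:
    skill_level: str       # hypothetical labels: "novice" or "advanced"
    affective_state: str   # hypothetical labels: "neutral", "frustrated", "bored"


def decide_support(
    error_deg: float, learner: LearnerState, tolerance_deg: float = 10.0
) -> Optional[str]:
    """Choose a response for a movement error given the learner's state."""
    if learner.affective_state == "bored":
        # A bored learner may benefit from practicing a different movement.
        return "change_movement"
    if abs(error_deg) <= tolerance_deg:
        return None  # within tolerance: no intervention
    if learner.skill_level == "novice" or learner.affective_state == "frustrated":
        return "strong_guidance"  # more supportive response
    return "light_cue"  # reduced support for experienced, calm learners
```

For the same 20 degree error, a novice would receive `"strong_guidance"`, an advanced learner in a neutral state a `"light_cue"`, and a bored learner a change of movement.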

A case study on the defensive martial art of Aikido has been discussed elsewhere [34]. The idea behind Aikido is to learn techniques that use entering and turning movements to redirect the momentum of the opponent’s attack and end with a throw or joint lock. To progress in the martial art, learners watch how the teacher (sensei) defends against a specific attack and then practice in pairs the attack and the corresponding defensive technique. Even if the sensei performs the movement very slowly, it is not possible for the learner to perceive and assimilate all the issues to be taken into account when trying to replicate the movement (e.g., movement of feet, turn of hip, position of hand, relaxation of arms…), resulting in an incorrect performance by the learner as it differs from the sensei’s. In fact, years and years of practice are required to properly master the performance of the techniques. Moreover, if the learner’s partner is taller than the partner who attacked the sensei to show the technique, the technique cannot be performed in exactly the same way. For instance, if the sensei asks a taller person to attack her, she may need to make the turn wider so she can take the attacker’s center of gravity lower than with the previous person. In addition, the learner’s body build is different from the sensei’s, so even if the attacker were the same and the learner could perform exactly the same movements as the sensei, learner and sensei would not produce the same result on the attacker. Thus, the proper execution of the technique cannot be exactly the same as that of the sensei, but needs to be adapted to the users’ body builds (both the attacker, or uke, and the defender, or tori). So, users and movements need to be properly modelled, allowing the error diagnosis algorithms to take into account the individual user’s features when comparing the learner’s movements with the expert’s.
In addition, the particular context where the learner is placed needs to be modelled, too. Modelling the context implies many issues, such as the user’s affective state, the environment conditions (temperature, humidity…), the kind of session (learning a technique, improving the performance of a technique, free practice, preparing for a belt exam…), if the user has pain in some joint due to an incorrect previous execution, etc. With all this information, the system should identify the errors made by the user. However, not all errors should be corrected, and the correction should not always be the same one, nor the same one for everybody. Modelled information also needs to be taken into account when deciding the support to be provided.

For instance, when an inductive learner wearing a tuned Aikidogi (i.e., the Aikido training uniform) with inertial and physiological sensors as well as tactors attached to the sleeves practices the shomen-uchi striking attack for the first time, it might be a good option to guide the learner through the movement a couple of times by triggering vibrations on the part of the body that is to be moved and in the direction of the movement. Then, the system can stop the vibration and just monitor several tries of the user in order to identify the errors made (e.g., the arm stops before finishing the movement towards the partner’s head). As a result, the system can provide vibration support again, but this time focused on making the arm complete the movement trajectory. From the physical performance and the physiological information about her affective state, the system can identify when it is a good time to change the technique and practice another one, even though the learner still makes big errors with the first one.

This case study on Aikido can provide some insight into the different issues to be considered in order to design vibrotactile user interfaces for interactive intelligent systems that can support adaptive learning of motor skills. User centered design methodologies such as TORMES [10] can facilitate the elicitation of the personalized support to be provided from a psycho-educational perspective that takes into account cognitive, affective and psychomotor issues. In particular, TORMES combines user centered design methods and data mining techniques to qualitatively analyze quantitative information collected from the users’ interactions, iterating around the following four stages: identify the context of use, specify user requirements, create the design of the solution and evaluate the design against the requirements. The resulting personalized support is to be described in terms of a semantic model that covers what support is offered (action to be done), how and where the learner is informed about the support (modality), when and for whom the support is produced (learner features, interaction capabilities and context, restrictions, applicability conditions), why a support has been produced (rationale), and which features characterize the support (category, relevance, appropriateness, origin…).
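As an illustration only, the dimensions of such a semantic model could be captured in a small data structure. The class name, field names, field types and the example values below are assumptions and do not reproduce the actual TORMES schema in [10].

```python
from dataclasses import dataclass
from typing import Callable, Dict, List

Situation = Dict[str, str]  # hypothetical flat description of learner and context


@dataclass
class SupportDescription:
    action: str                                # what support is offered
    modality: str                              # how and where it is delivered
    applies_to: Callable[[Situation], bool]    # when and for whom it applies
    rationale: str                             # why the support is produced
    category: str = "correction"               # features characterizing it
    relevance: float = 1.0


def applicable(
    supports: List[SupportDescription], situation: Situation
) -> List[SupportDescription]:
    """Keep only the supports whose applicability conditions hold."""
    return [s for s in supports if s.applies_to(situation)]


# Hypothetical entry inspired by the Aikido example in the text.
guide_arm = SupportDescription(
    action="guide the arm through the complete strike trajectory",
    modality="vibration on the sleeve tactors",
    applies_to=lambda s: s.get("skill") == "novice" and s.get("session") == "learning",
    rationale="illustrative assumption: first attempts may benefit from guidance",
)
```

Keeping the applicability condition as part of each description means the decision step can filter candidate supports against the modelled user and context before choosing one.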

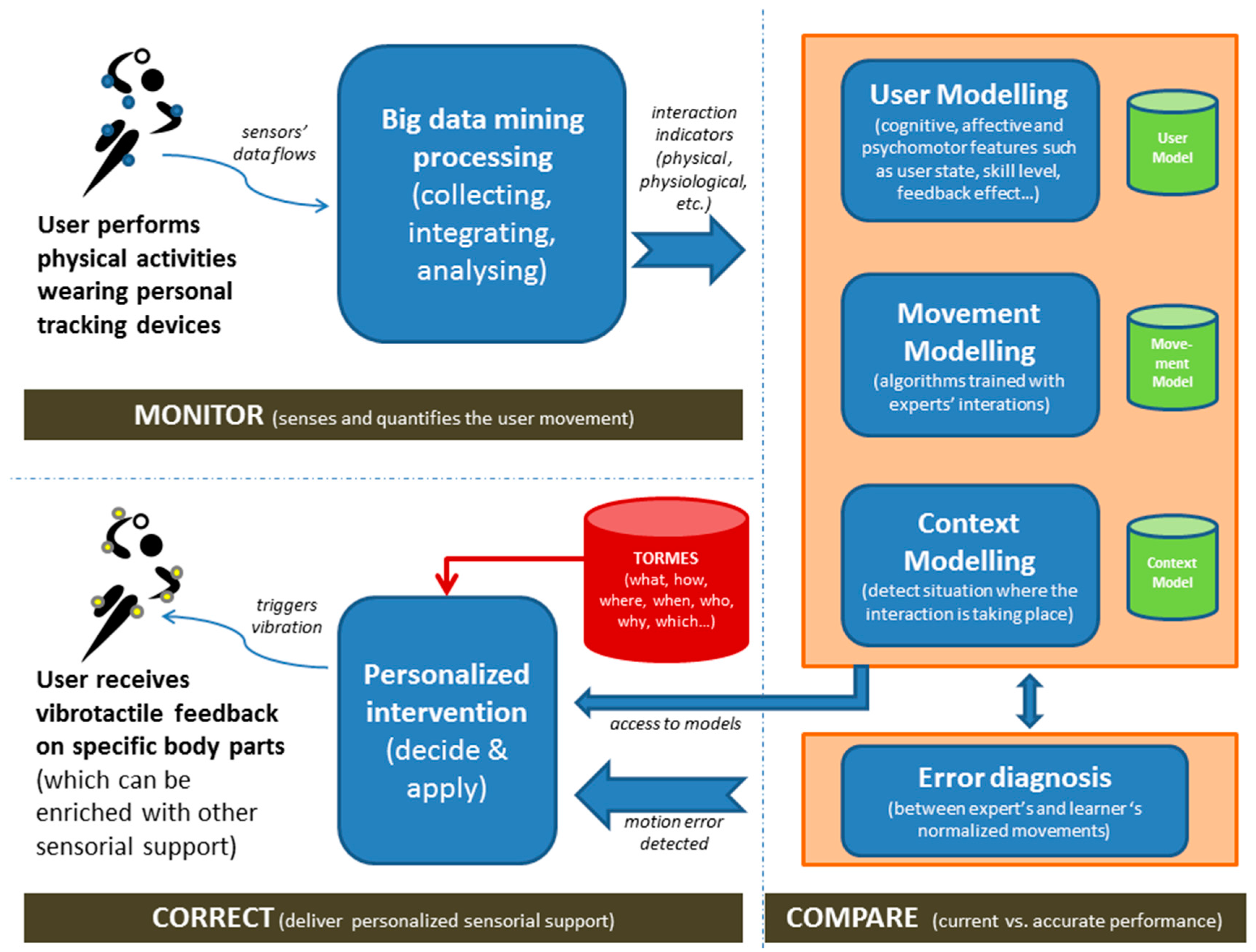

Figure 1 identifies several processing components that should enable the development of motor skills learning systems with personalized vibrotactile feedback. The three steps analyzed in the systems reported in Table 1 (and discussed in [9]) are clearly defined: monitoring, comparing and correcting. Monitoring deals with sensing and quantifying the users’ interactions. Data collected and processed should not only relate to movement; other information such as physiological data can be relevant to model the performance and other issues such as changes in the affective state. Comparing current versus accurate performance requires modelling of the user, movement and context before error diagnosis algorithms are run. When movement errors are detected, the system should decide (considering the modelled information about the user, movement and context) whether an intervention is to be provided or not. The decision algorithm is guided by the outcomes of the application of the TORMES methodology, resulting in the delivery, when appropriate, of a multisensorial adapted response, which, as discussed in Section 2, most probably will be vibrotactile feedback (or vibrotactile feedback enriched with another sensorial output).

Figure 1.

Schema of the three processing steps (monitoring, comparing and correcting) required to deliver vibrotactile personalized feedback for adaptive learning of motor skills.

As represented in Figure 1, wearable devices placed on the user (e.g., watches, gloves, t-shirts, bracelets, hats…) or on the instrument used by the user (e.g., guitar, golf club, oar…) can collect personal information about the performance of the movements in real time. To analyze the data collected, data mining algorithms can be integrated into big data processing techniques. As a result, interaction indicators that involve several kinds of issues, such as physical and physiological ones, are computed.

These indicators can be used to build and update the different models used by the system to provide personalization, namely those describing the user, the movement and the context. Appropriate algorithms are needed for the corresponding user, movement and context modelling. The knowledge generated in these modelling processes can allow a comparison of the current interaction with the appropriate movement, that is, the movement as it would be carried out by an expert with the current user’s features. For this comparison, the interaction features should be normalized before being compared.
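A minimal sketch of such a normalization, assuming that positions are scaled by a body dimension (here, arm length) and that both trajectories are sampled at the same instants, could look as follows. The function names, the choice of scale and the root mean square deviation measure are assumptions for illustration.

```python
import math
from typing import List, Sequence, Tuple

Point = Tuple[float, ...]


def normalize(trajectory: Sequence[Point], body_scale: float) -> List[Point]:
    """Express positions in units of a body dimension (e.g., arm length)."""
    if body_scale <= 0:
        raise ValueError("body_scale must be positive")
    return [tuple(c / body_scale for c in point) for point in trajectory]


def rms_deviation(a: Sequence[Point], b: Sequence[Point]) -> float:
    """Root mean square distance between two equally sampled trajectories."""
    if len(a) != len(b) or not a:
        raise ValueError("trajectories must be non-empty and of equal length")
    squared = sum(math.dist(p, q) ** 2 for p, q in zip(a, b))
    return math.sqrt(squared / len(a))


# Hypothetical hand trajectories (metres) for the same full arm extension.
expert = [(0.0, 0.0, 0.0), (0.3, 0.0, 0.0), (0.6, 0.0, 0.0)]        # arm length 0.60
learner = [(0.0, 0.0, 0.0), (0.375, 0.0, 0.0), (0.75, 0.0, 0.0)]    # arm length 0.75

raw_error = rms_deviation(expert, learner)
normalized_error = rms_deviation(normalize(expert, 0.6), normalize(learner, 0.75))
```

In this example the raw comparison reports a deviation of several centimetres although both users perform the same movement relative to their own arm length, whereas the normalized comparison reports a deviation close to zero.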

If this comparison shows motion errors, then the system should consider an intervention to correct them. This is the key step to providing the personalization and should be designed with the involvement of users, applying appropriate elicitation methodologies such as TORMES.

The resulting systems are expected to dynamically adapt to each individual user’s needs by monitoring, comparing and, when appropriate, correcting the way the user moves when practicing a predefined movement, for instance, when performing a sport technique or playing a musical instrument.

The approach proposed is in line with the attempts to move learning platforms to ecosystems of interacting learning tools that provide new opportunities for user adaptation and which take advantage of the new sensor data available [35].

5. Conclusions

Current advances in wearable technologies (such as intelligent bracelets, watches, t-shirts, etc.) can facilitate quantifying and describing the psychomotor activity and physiological status of a person while carrying out learning tasks that involve enactive knowledge and require the consolidation of motor tasks into memory through repetition towards accurate movements (e.g., playing a musical instrument, practicing a sport technique, using sign language, etc.). In addition, big data processing techniques have been suggested in order to support the processing of motor data collected from users through diverse wearable sources of heterogeneous information. By discussing some existing systems that provide vibrotactile feedback when training motor skills, this paper aims to open the field for researchers on learning personalization to integrate user, movement and context modelling techniques into systems that provide support for motor skills acquisition. Elicitation methodologies such as TORMES can serve to design the intervention strategy that is most appropriate for each user in each context, which is to be implemented with appropriate algorithms, hence personalizing the vibrotactile feedback delivered.

Conflicts of Interest

The author declares no conflict of interest.

© 2017 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).