A Distributed Execution Pipeline for Clustering Trajectories Based on a Fuzzy Similarity Relation

Abstract

1. Introduction

2. Literature

3. Background

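For a fuzzy relation $R$ on a set $X$ with membership function $\mu_R : X \times X \to [0, 1]$, the following properties are considered:

- (Reflexivity): $\mu_R(x, x) = 1$ for all $x \in X$;

- (Symmetry): $\mu_R(x, y) = \mu_R(y, x)$ for all $x, y \in X$;

- (max–min transitivity): $\mu_R(x, z) \geq \max_{y \in X} \min\big(\mu_R(x, y), \mu_R(y, z)\big)$ for all $x, z \in X$;

- (max–Δ transitivity): $\mu_R(x, z) \geq \max_{y \in X} \max\big(0, \mu_R(x, y) + \mu_R(y, z) - 1\big)$ for all $x, z \in X$;

- (max–prod transitivity): $\mu_R(x, z) \geq \max_{y \in X} \mu_R(x, y) \cdot \mu_R(y, z)$ for all $x, z \in X$.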
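
The properties above can be checked directly on a finite relation stored as a dense matrix. The sketch below is illustrative only: the object name `FuzzyProps` and the dense `Array[Array[Double]]` representation are assumptions, and the transitivity test takes the t-norm (min, the bounded product Δ, or the algebraic product) as a parameter. A small tolerance absorbs floating-point rounding in the Δ and product cases.

```scala
object FuzzyProps {
  // Dense representation (assumption): r(x)(y) is mu_R(x, y) in [0, 1].
  type Rel = Array[Array[Double]]
  type TNorm = (Double, Double) => Double

  val minT: TNorm = (a, b) => math.min(a, b)
  // Bounded product used by max–Δ transitivity: max(0, a + b - 1).
  val deltaT: TNorm = (a, b) => math.max(0.0, a + b - 1.0)
  val prodT: TNorm = (a, b) => a * b

  // Tolerance so that rounding in a + b - 1 or a * b does not cause false negatives.
  private val Eps = 1e-12

  def isReflexive(r: Rel): Boolean = r.indices.forall(x => r(x)(x) == 1.0)

  def isSymmetric(r: Rel): Boolean =
    r.indices.forall(x => r.indices.forall(y => r(x)(y) == r(y)(x)))

  // mu_R(x, z) >= max_y T(mu_R(x, y), mu_R(y, z)) holds iff it holds for every single y.
  def isTransitive(r: Rel, t: TNorm): Boolean =
    r.indices.forall { x =>
      r.indices.forall { z =>
        r.indices.forall(y => r(x)(z) + Eps >= t(r(x)(y), r(y)(z)))
      }
    }
}
```

Since $\min(a, b) \geq ab \geq \max(0, a + b - 1)$ for $a, b \in [0, 1]$, a max–min transitive relation is also max–prod and max–Δ transitive, which the first test matrix illustrates.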

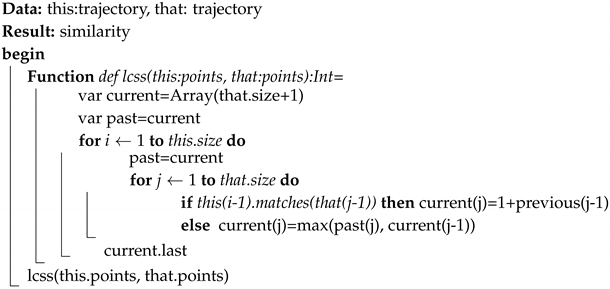

Algorithm 1: LCSS linear space algorithm.
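
Only the caption of Algorithm 1 survives. The sketch below illustrates the linear-space idea behind it. The LCSS length is computed by a dynamic program that keeps just two rows, so memory grows with the shorter trajectory rather than with the product of the two lengths. Several details are assumptions made for illustration: the `Point` type, the per-coordinate threshold `epsilon` that decides whether two points match, and normalising by the shorter trajectory length. The sketch also returns only the length. It does not recover the subsequence itself, which Hirschberg's full divide-and-conquer algorithm additionally produces.

```scala
object Lcss {
  // Hypothetical point type: coordinates in degrees.
  final case class Point(lat: Double, lon: Double)

  // Assumed matching rule: both coordinate differences are within epsilon.
  def matches(p: Point, q: Point, epsilon: Double): Boolean =
    math.abs(p.lat - q.lat) <= epsilon && math.abs(p.lon - q.lon) <= epsilon

  /** LCSS length using two DP rows of size min(n, m) + 1. */
  def lcssLength(a: IndexedSeq[Point], b: IndexedSeq[Point], epsilon: Double): Int = {
    // Iterate over the longer trajectory so the rows have the shorter length.
    val (outer, inner) = if (a.length >= b.length) (a, b) else (b, a)
    var prev = new Array[Int](inner.length + 1)
    var curr = new Array[Int](inner.length + 1)
    for (i <- 1 to outer.length) {
      curr(0) = 0
      for (j <- 1 to inner.length) {
        curr(j) =
          if (matches(outer(i - 1), inner(j - 1), epsilon)) prev(j - 1) + 1
          else math.max(prev(j), curr(j - 1))
      }
      val tmp = prev; prev = curr; curr = tmp // swap rows instead of reallocating
    }
    prev(inner.length)
  }

  /** Similarity in [0, 1], assuming normalisation by the shorter trajectory. */
  def similarity(a: IndexedSeq[Point], b: IndexedSeq[Point], epsilon: Double): Double =
    if (a.isEmpty || b.isEmpty) 0.0
    else lcssLength(a, b, epsilon).toDouble / math.min(a.length, b.length)
}
```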

4. Materials and Methods

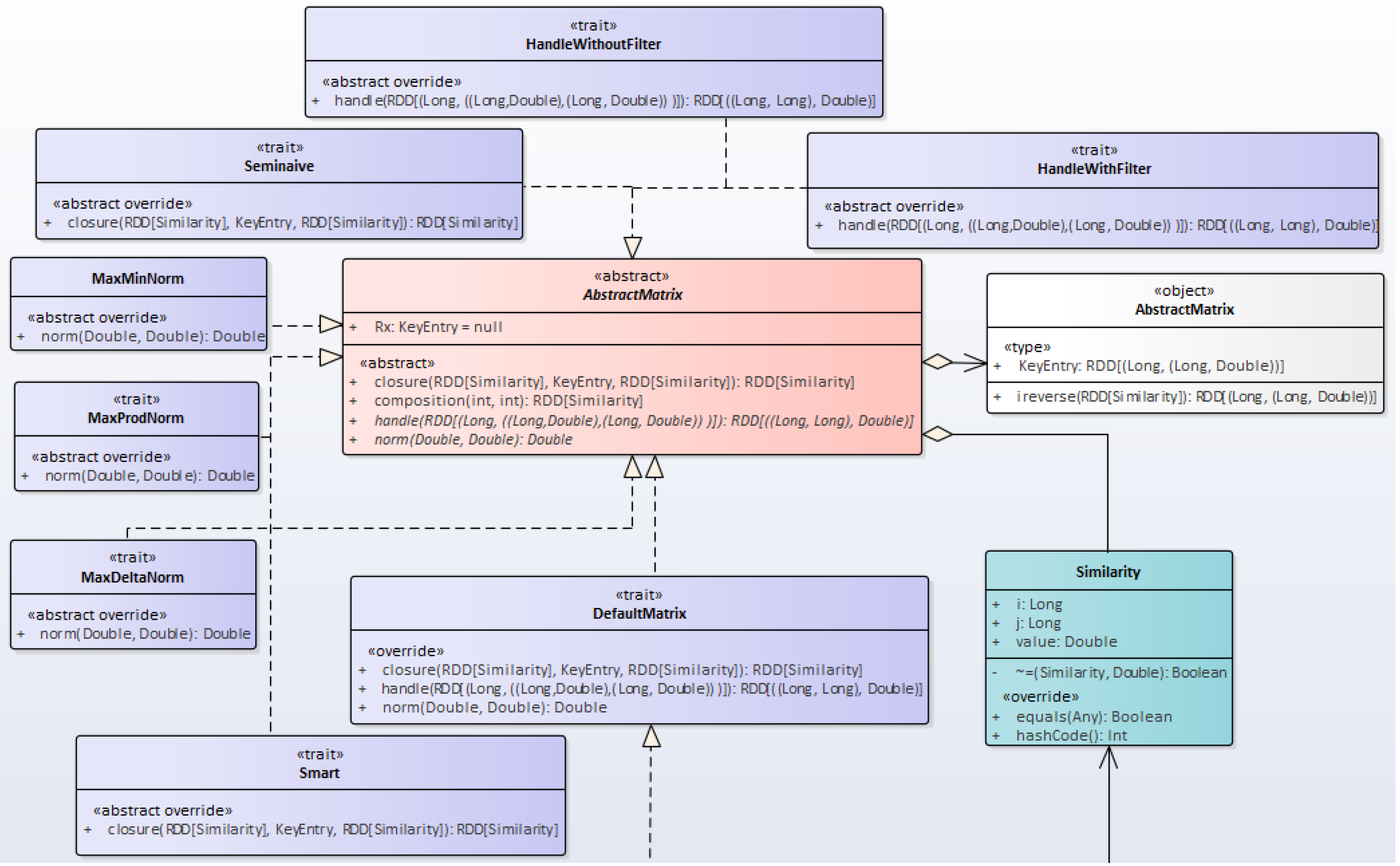

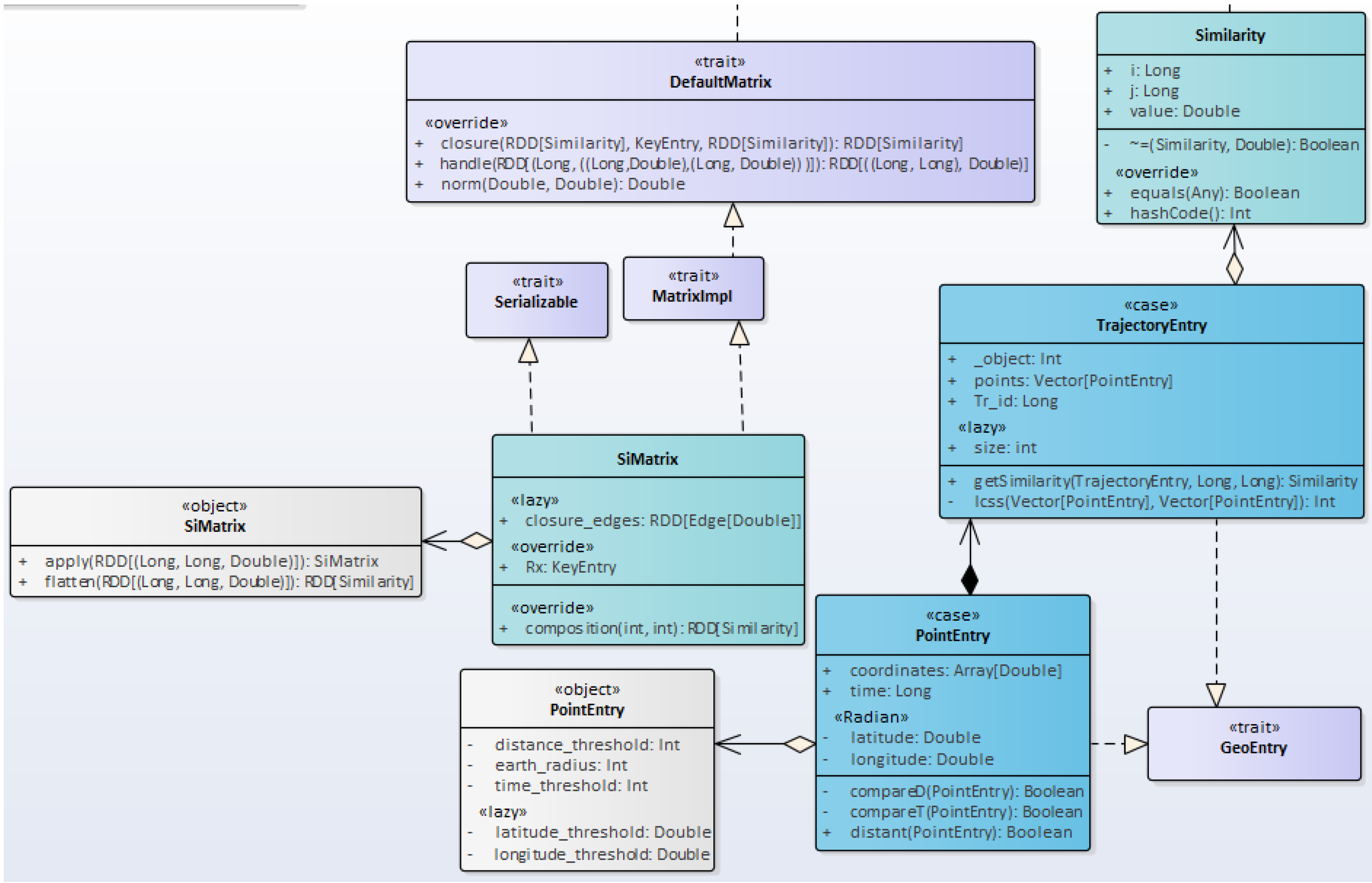

4.1. The Project’s Class Diagrams

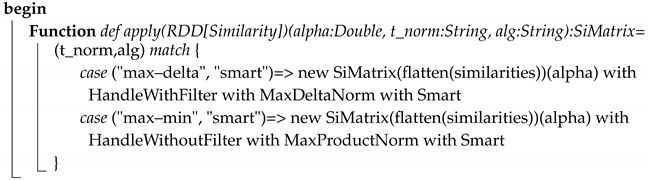

Algorithm 2: The SiMatrix companion object apply method.
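
The body of Algorithm 2 is not recoverable. The sketch below shows what a companion-object `apply` factory for a similarity matrix could look like. It assumes a dense n × n array and a user-supplied similarity function that returns values in [0, 1]. Because the relation is symmetric, only the upper triangle is evaluated, and the diagonal is set to 1 to enforce reflexivity. The class layout and both method signatures are hypothetical and do not reproduce the authors' SiMatrix.

```scala
// Hypothetical dense similarity matrix; values(i)(j) is the similarity of items i and j.
final class SiMatrix private (val values: Array[Array[Double]]) {
  def size: Int = values.length
  def apply(i: Int, j: Int): Double = values(i)(j)
}

object SiMatrix {
  /** Builds a reflexive, symmetric fuzzy similarity matrix from a similarity function. */
  def apply[T](items: IndexedSeq[T])(sim: (T, T) => Double): SiMatrix = {
    val n = items.length
    val m = Array.ofDim[Double](n, n)
    for (i <- 0 until n) {
      m(i)(i) = 1.0 // reflexivity
      for (j <- i + 1 until n) {
        val s = sim(items(i), items(j))
        require(s >= 0.0 && s <= 1.0, s"similarity out of [0, 1]: $s")
        m(i)(j) = s
        m(j)(i) = s // symmetry: only the upper triangle is computed
      }
    }
    new SiMatrix(m)
  }
}
```

With LCSS-based similarity, `sim` would be the normalised LCSS score between two trajectories, so the n(n − 1)/2 pairwise evaluations dominate the cost.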

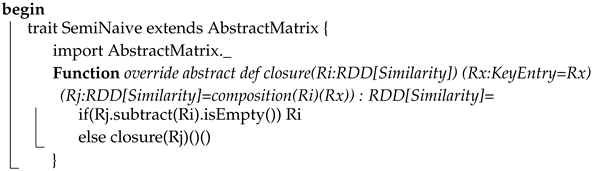

Algorithm 3: The SemiNaive trait.
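
Semi-naive evaluation is a standard transitive-closure strategy. In each round, only the tuples that are new since the previous round are joined with the base relation. The sketch below applies that idea to the max–min closure of a dense fuzzy relation, where "new" is interpreted as an entry whose membership degree strictly increased. It is not the authors' SemiNaive trait, and the names `SemiNaiveClosure`, `compose`, and `closure` are hypothetical.

```scala
object SemiNaiveClosure {
  type Matrix = Array[Array[Double]]

  /** Max–min composition: (a ∘ b)(i)(j) = max over k of min(a(i)(k), b(k)(j)). */
  def compose(a: Matrix, b: Matrix): Matrix = {
    val n = a.length
    Array.tabulate(n, n) { (i, j) =>
      var best = 0.0
      for (k <- 0 until n) best = math.max(best, math.min(a(i)(k), b(k)(j)))
      best
    }
  }

  /** Semi-naive max–min transitive closure; the input matrix is not modified. */
  def closure(r: Matrix): Matrix = {
    val n = r.length
    var t = r.map(_.clone())
    var delta = r.map(_.clone()) // round 1: every entry of r counts as new
    while (delta.exists(_.exists(_ > 0.0))) {
      // Only the improved entries (delta) are composed with the base relation r.
      val derived = compose(delta, r)
      val next = Array.tabulate(n, n)((i, j) => math.max(t(i)(j), derived(i)(j)))
      // Keep strictly improved memberships as the next delta, zero elsewhere.
      delta = Array.tabulate(n, n)((i, j) => if (next(i)(j) > t(i)(j)) next(i)(j) else 0.0)
      t = next
    }
    t
  }
}
```

The loop stops when no entry improves. The result is then closed under composition with `r`, so for a reflexive input it equals its own max–min square, which the test checks.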

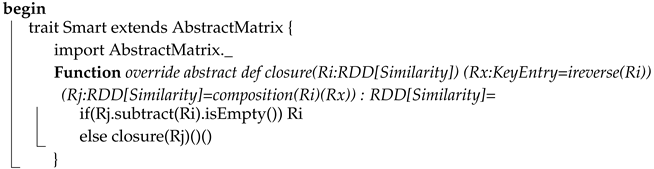

Algorithm 4: The Smart trait.
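
The Smart strategy for transitive closure is usually described as a logarithmic method. Instead of extending paths by one edge per round, it composes the partial closure with itself. The sketch below uses the simplest form of that idea, plain repeated squaring of a max–min relation. It is neither the exact Smart algorithm nor the authors' trait, and the object and method names are hypothetical. After k rounds the matrix accounts for all paths of length up to 2^k, so the number of rounds grows roughly with log2 of the longest path needed, plus one confirming round.

```scala
object SquaringClosure {
  type Matrix = Array[Array[Double]]

  /** Max–min composition: (a ∘ b)(i)(j) = max over k of min(a(i)(k), b(k)(j)). */
  def compose(a: Matrix, b: Matrix): Matrix = {
    val n = a.length
    Array.tabulate(n, n) { (i, j) =>
      var best = 0.0
      for (k <- 0 until n) best = math.max(best, math.min(a(i)(k), b(k)(j)))
      best
    }
  }

  /** Repeated squaring t := max(t, t ∘ t) until a fixpoint; returns (closure, rounds). */
  def closure(r: Matrix): (Matrix, Int) = {
    val n = r.length
    var t = r.map(_.clone())
    var rounds = 0
    var changed = true
    while (changed) {
      rounds += 1
      val sq = compose(t, t)
      val next = Array.tabulate(n, n)((i, j) => math.max(t(i)(j), sq(i)(j)))
      changed = (0 until n).exists(i => (0 until n).exists(j => next(i)(j) != t(i)(j)))
      t = next
    }
    (t, rounds)
  }
}
```

For a chain of five trajectories, two squaring rounds cover the longest path (four edges), and a third round confirms the fixpoint.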

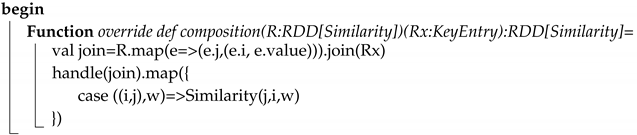

Algorithm 5: The overridden composition method in the SiMatrix class.
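
The body of the overridden composition method is not reproduced in the source. As a hedged illustration, the sketch below expresses max–T composition over a sparse relation as a join on the shared index k followed by a per-pair maximum. A distributed version could take this shape with Spark's `join` and `reduceByKey` on pair RDDs. The object name, the sparse map representation, and the t-norm parameter are assumptions. Plugging in min, the bounded product Δ, or the algebraic product gives the compositions behind the max–min, max–Δ, and max–prod transitivity properties.

```scala
object SparseComposition {
  // Sparse fuzzy relation (assumption): (row, col) -> membership; missing entries are 0.
  type Rel = Map[(Int, Int), Double]
  type TNorm = (Double, Double) => Double

  val minT: TNorm = (a, b) => math.min(a, b)
  val deltaT: TNorm = (a, b) => math.max(0.0, a + b - 1.0)
  val prodT: TNorm = (a, b) => a * b

  /** max–T composition: (r ∘ s)(i, j) = max over k of T(r(i, k), s(k, j)). */
  def compose(r: Rel, s: Rel, t: TNorm): Rel = {
    // Index s by its row k so each r(i, k) can be "joined" with the s(k, j) entries.
    val sByRow: Map[Int, Seq[(Int, Double)]] =
      s.toSeq
        .groupBy { case ((k, _), _) => k }
        .map { case (k, entries) => k -> entries.map { case ((_, j), v) => (j, v) } }
    val products = for {
      ((i, k), a) <- r.toSeq
      (j, b) <- sByRow.getOrElse(k, Seq.empty)
      v = t(a, b)
      if v > 0.0 // zero memberships are left implicit
    } yield ((i, j), v)
    // Per-pair maximum, the analogue of reduceByKey(math.max).
    products.groupBy(_._1).map { case (ij, vs) => ij -> vs.map(_._2).max }
  }
}
```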

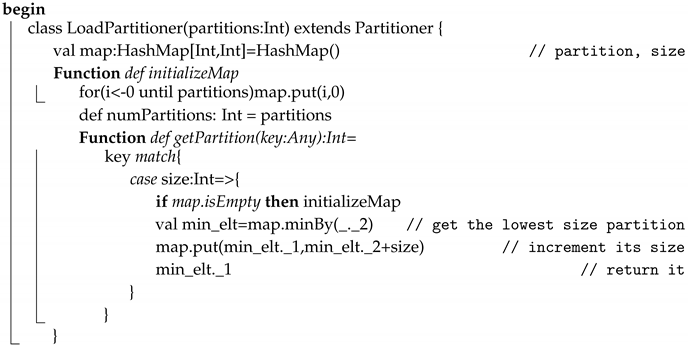

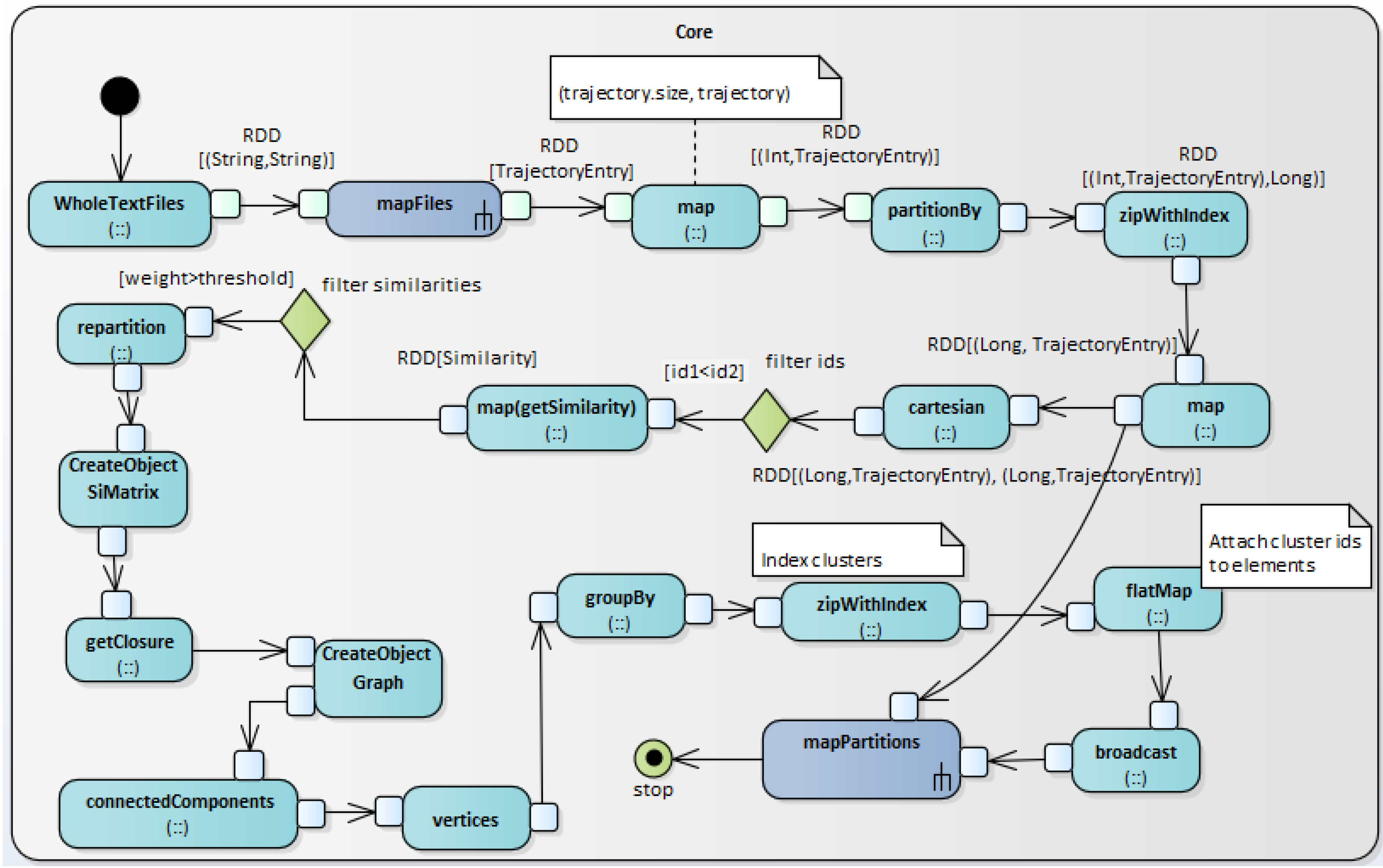

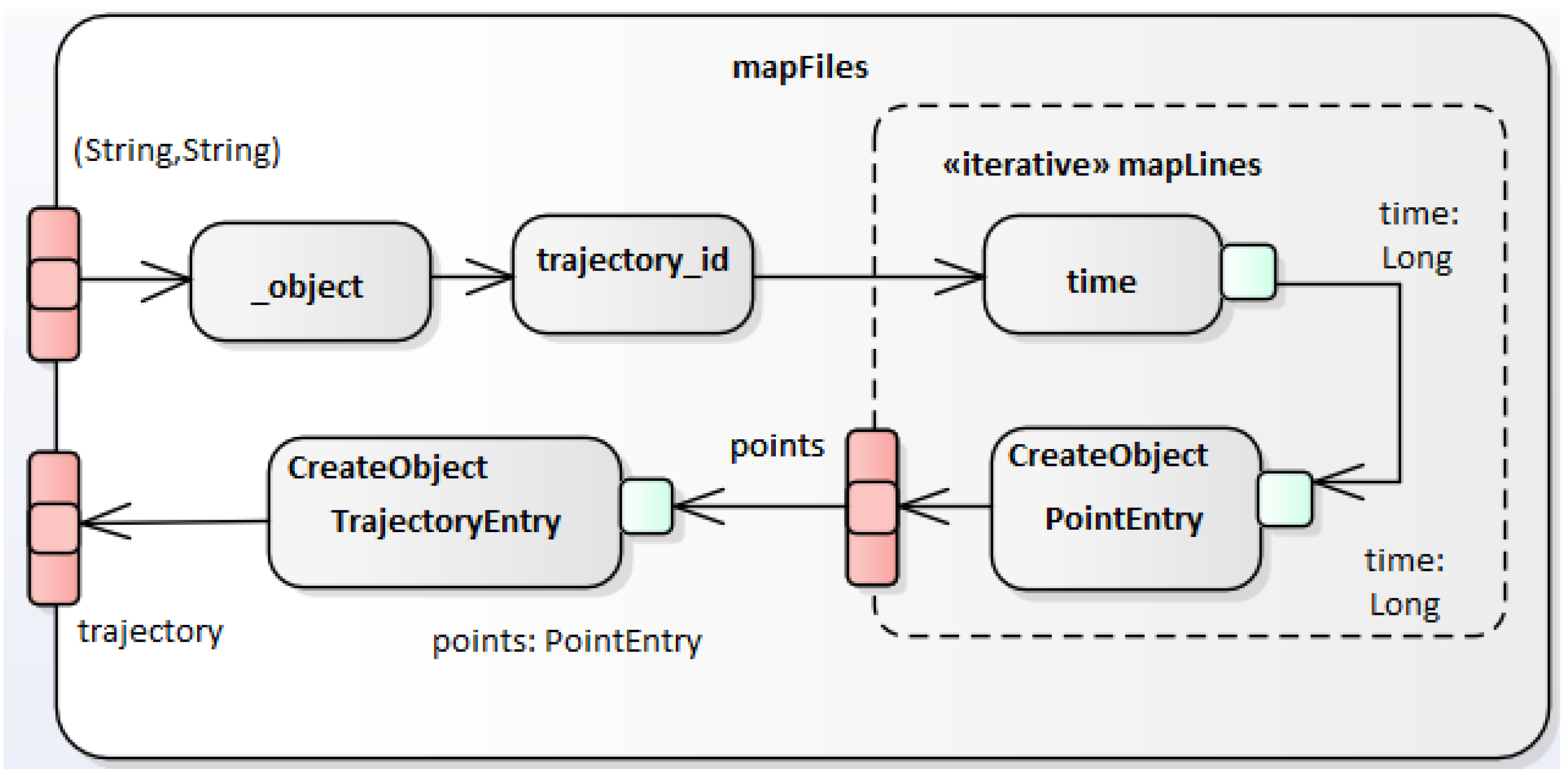

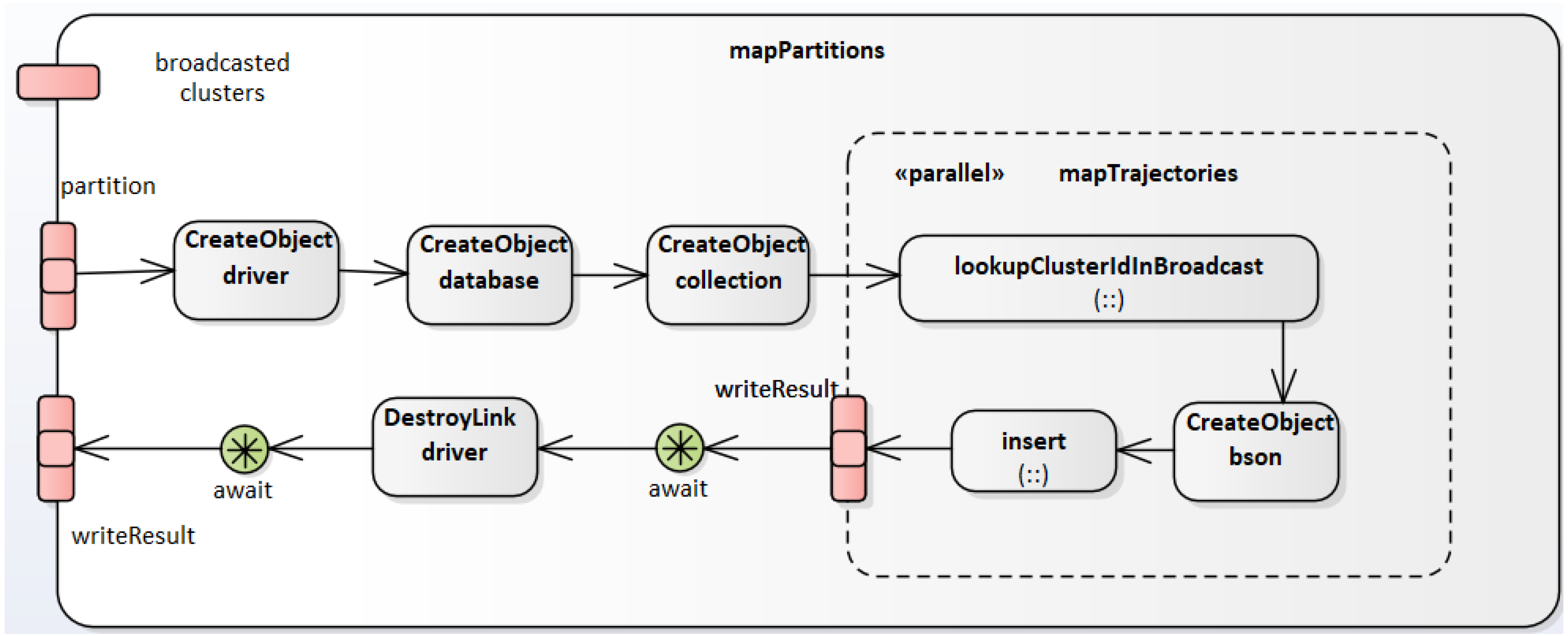

4.2. The Overall Execution Pipeline

Algorithm 6: The LoadPartitioner class.
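
In Spark, a custom partitioner extends the abstract class `org.apache.spark.Partitioner` and implements `numPartitions` and `getPartition(key: Any): Int`. The sketch below does not reproduce the authors' LoadPartitioner. It shows, in plain Scala, one hypothetical assignment that a `getPartition` implementation could look up. The assumption is that the similarity matrix is computed row by row over its upper triangle only, so row i carries n − 1 − i pair comparisons and early rows are much heavier than late ones. Contiguous row ranges are assigned so that each partition receives roughly an equal share of the pairs.

```scala
object LoadBalancedRows {
  /** Row i of an n x n symmetric matrix holds (n - 1 - i) upper-triangle pairs. */
  def rowLoad(n: Int, i: Int): Long = (n - 1 - i).toLong

  /** Hypothetical row -> partition table with roughly totalPairs / numPartitions per partition. */
  def assignRows(n: Int, numPartitions: Int): Array[Int] = {
    require(n >= 0 && numPartitions > 0, "n must be >= 0 and numPartitions > 0")
    val total = n.toLong * (n - 1) / 2
    val target = math.max(1L, (total + numPartitions - 1) / numPartitions) // ceil(total / p)
    val owner = new Array[Int](n)
    var part = 0
    var acc = 0L
    for (i <- 0 until n) {
      owner(i) = part
      acc += rowLoad(n, i)
      // Move on once this partition reaches its share; the last partition takes the rest.
      if (acc >= target && part < numPartitions - 1) { part += 1; acc = 0L }
    }
    owner
  }

  /** Pair comparisons per partition, for checking the balance. */
  def loads(n: Int, owner: Array[Int], numPartitions: Int): Array[Long] = {
    val out = new Array[Long](numPartitions)
    for (i <- 0 until n) out(owner(i)) += rowLoad(n, i)
    out
  }
}
```

For comparison, splitting 100 rows into four equal blocks of 25 would give the first partition 2175 of the 4950 pairs and the last only 300.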

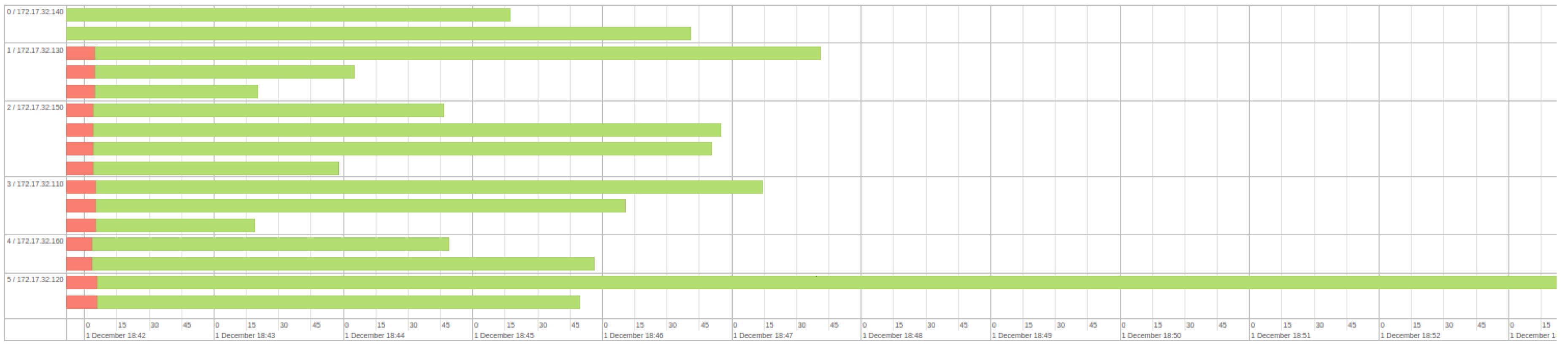

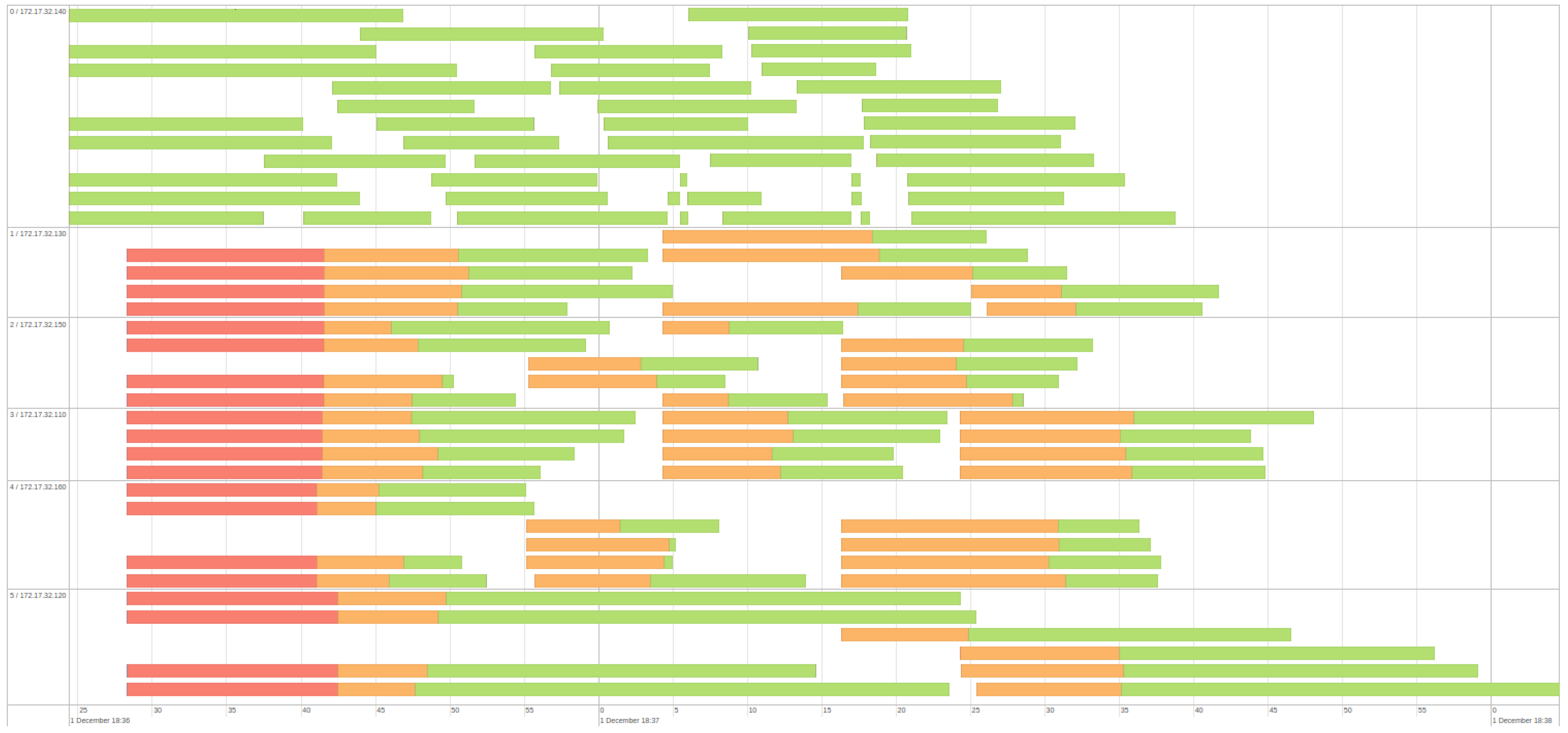

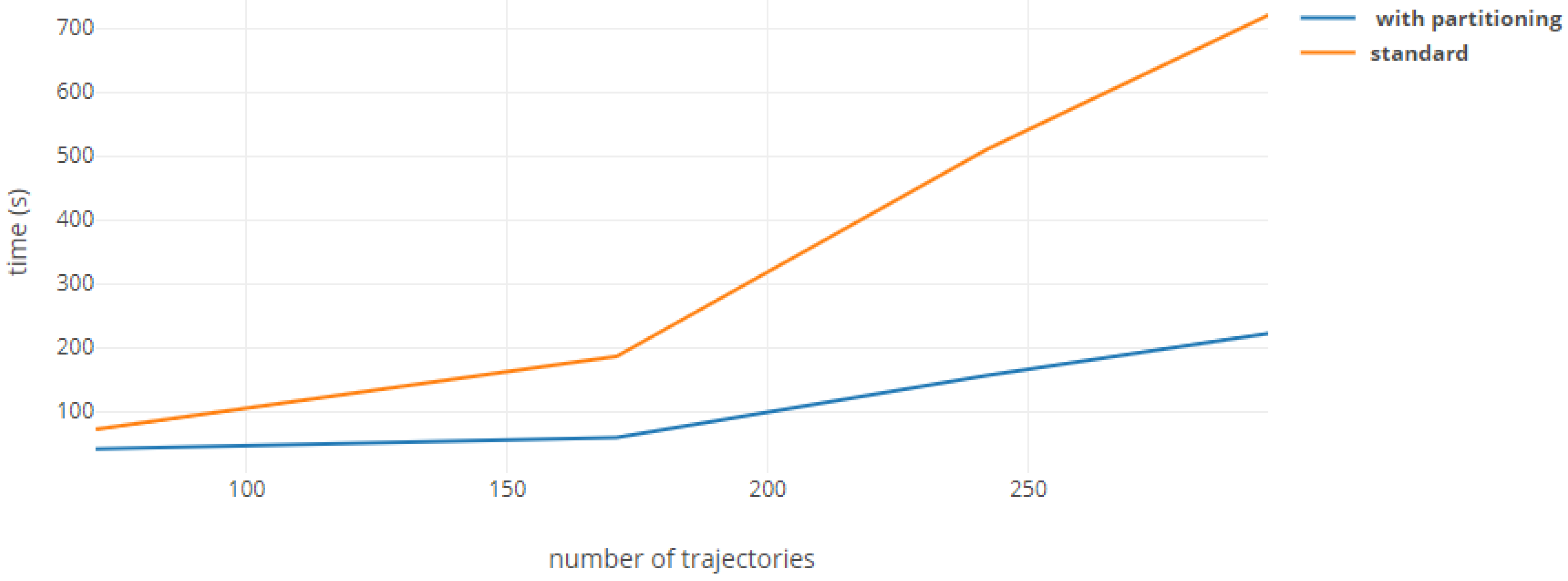

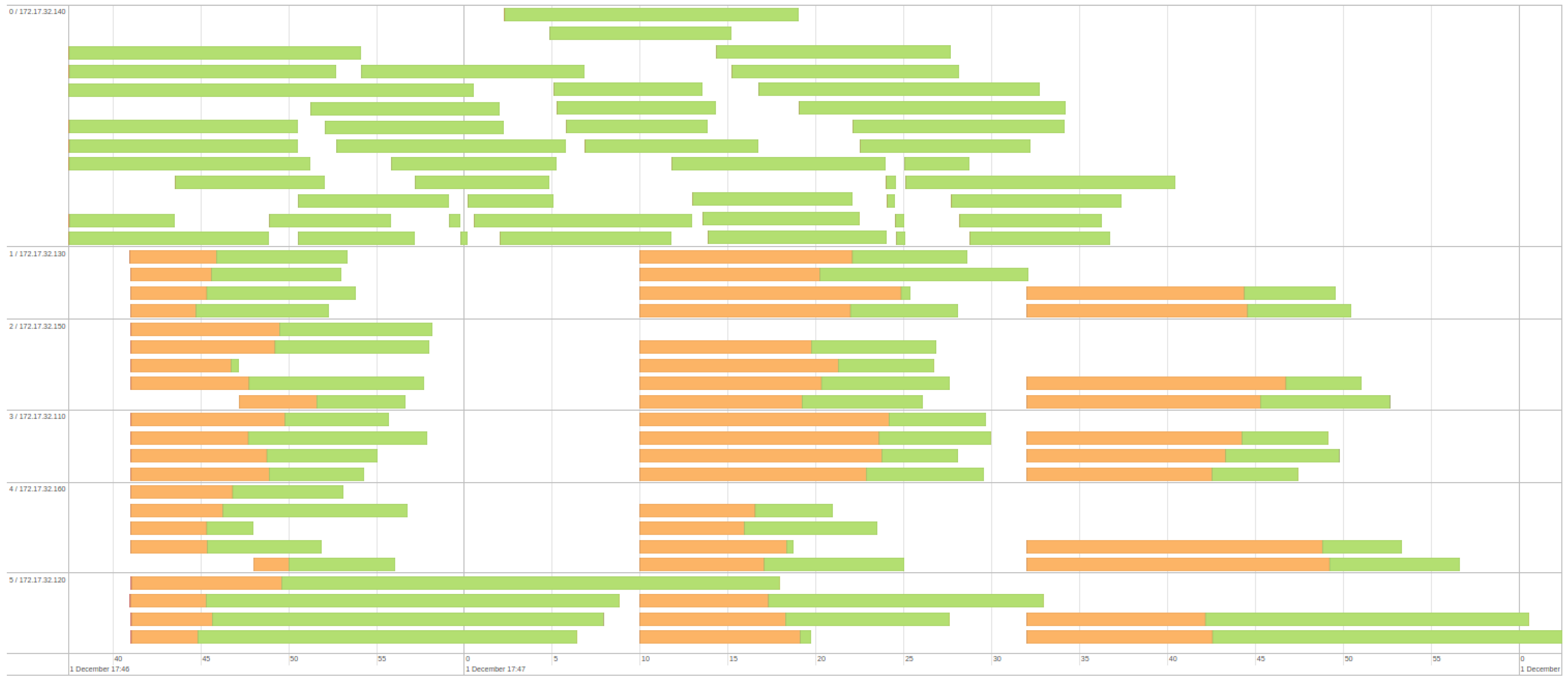

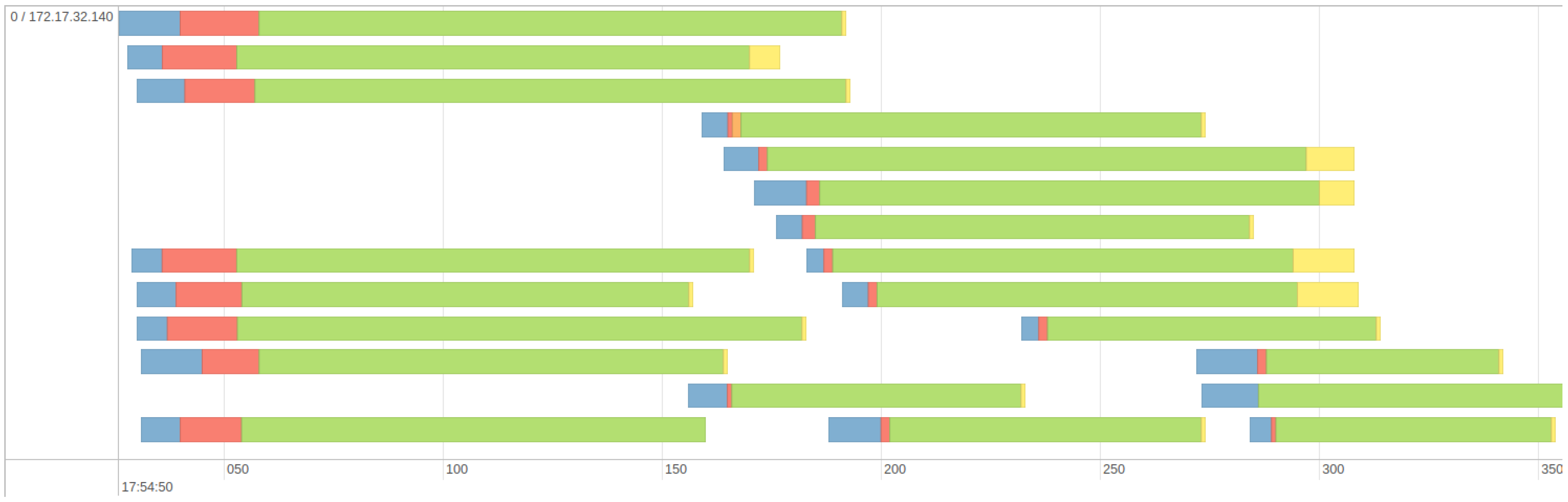

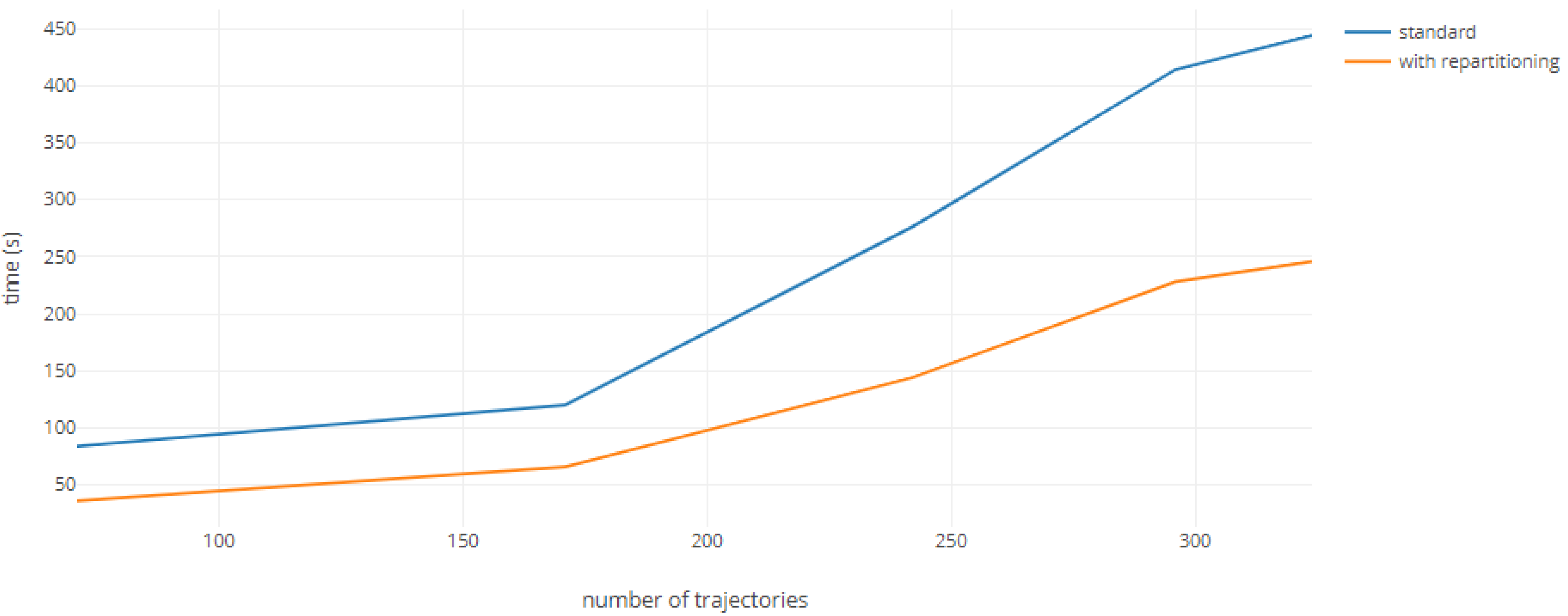

5. Results and Discussions

6. Conclusions

Computer Code

Author Contributions

Funding

Conflicts of Interest


© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Maguerra, S.; Boulmakoul, A.; Karim, L.; Badir, H. A Distributed Execution Pipeline for Clustering Trajectories Based on a Fuzzy Similarity Relation. Algorithms 2019, 12, 29. https://doi.org/10.3390/a12020029
