Parameter Optimization of Active Disturbance Rejection Controller Using Adaptive Differential Ant-Lion Optimizer

Abstract

1. Introduction

- A differential evolution strategy is introduced into ALO to increase the diversity of the population in each iteration, which strengthens the global exploration of the algorithm.

- A step-scaling method is integrated into ALO that changes the step size according to the iteration number, which helps balance exploration and exploitation.

- The DSALO algorithm is evaluated on four representative test functions and compared with other algorithms to demonstrate its efficiency.

- DSALO is applied to the parameter optimization problem of ADRC. The results indicate that DSALO can find better parameters.
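The differential evolution operators named in the first contribution can be illustrated with a minimal sketch. It assumes the classic DE/rand/1/bin scheme for minimization; the function name `differential_evolution_step`, the scale factor `f_scale`, and the crossover rate `cr` are illustrative choices, not values taken from this paper.

```python
import numpy as np

def differential_evolution_step(pop, fitness, objective, lower, upper,
                                f_scale=0.5, cr=0.9, rng=None):
    """One DE/rand/1/bin generation (minimization) over `pop` of shape (n, d).

    Assumed parameters: f_scale (mutation scale) and cr (crossover rate)
    are common defaults, not settings reported in the source.
    Requires at least four individuals.
    """
    rng = np.random.default_rng() if rng is None else rng
    n, d = pop.shape
    new_pop = pop.copy()
    new_fit = fitness.copy()
    for i in range(n):
        # Mutation: combine three distinct individuals other than i.
        candidates = [j for j in range(n) if j != i]
        a, b, c = rng.choice(candidates, size=3, replace=False)
        mutant = np.clip(pop[a] + f_scale * (pop[b] - pop[c]), lower, upper)
        # Binomial crossover: at least one dimension comes from the mutant.
        mask = rng.random(d) < cr
        mask[rng.integers(d)] = True
        trial = np.where(mask, mutant, pop[i])
        # Greedy selection: keep the trial only if it is not worse.
        trial_fit = objective(trial)
        if trial_fit <= new_fit[i]:
            new_pop[i] = trial
            new_fit[i] = trial_fit
    return new_pop, new_fit
```

Because selection is greedy, no individual gets worse from one generation to the next, while the difference vectors keep injecting variation into the population.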

2. Differential Step-Scaling Antlion Algorithm

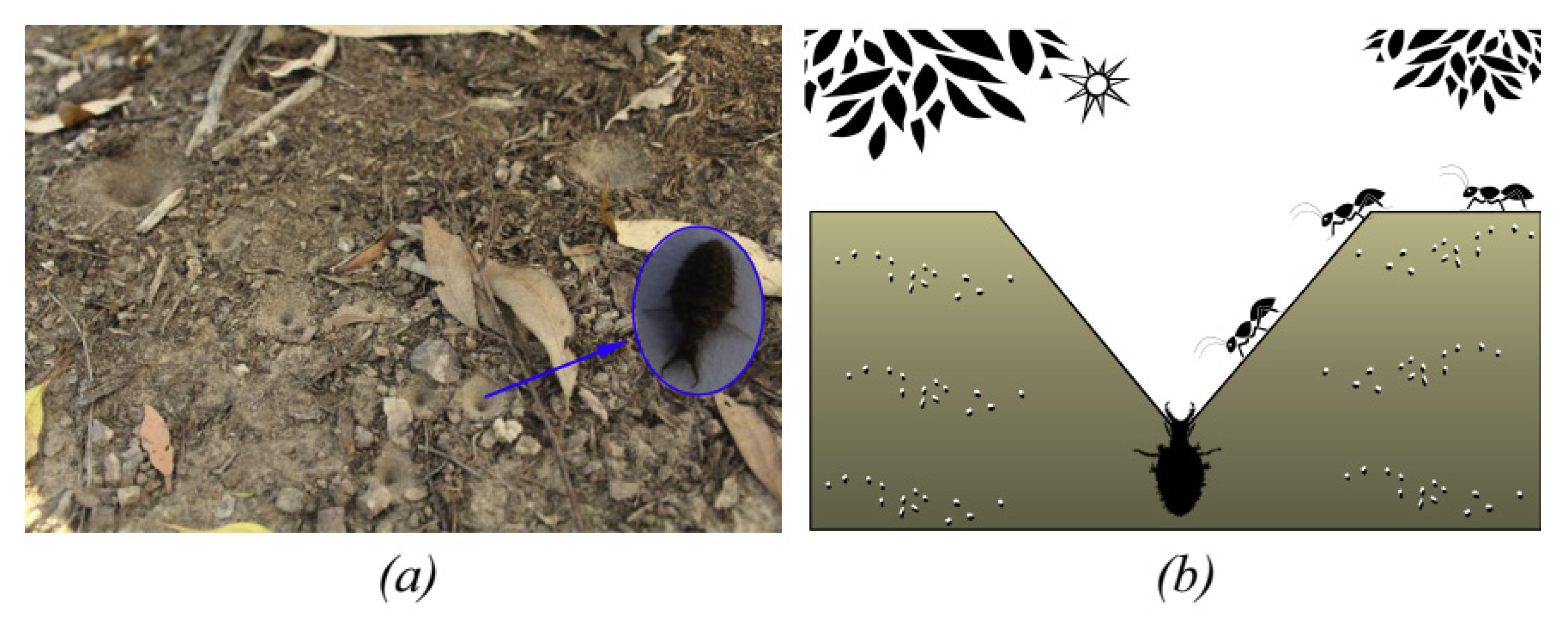

2.1. Antlion Algorithm

2.2. Differential Step-Scaling Ant-Lion Algorithm

2.3. Algorithm Idea and Specific Steps

| Algorithm 1 Pseudo-Code of DSALO |
|---|
| Initialize the first population of ants and antlions randomly |
| Calculate the fitness of ants and antlions |
| Find the best ant or antlion and set it as the initial elite antlion |
| While the maximum iteration is not reached |
| For each ant |
| Select an antlion using the roulette wheel |
| Update boundaries using Equations (12) and (13) |
| Make a random walk using Equation (1) |
| Normalize and update the position of the ant using Equations (9) and (16) |
| End for |
| Calculate the fitness of all ants |
| Replace an antlion if its corresponding ant becomes fitter |
| Apply the mutation, crossover, and selection operators to the antlions |
| Update the elite antlion |
| End while |
| Return the elite antlion |
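The control flow of Algorithm 1 can be sketched in Python as follows. This is a simplified illustration under several assumptions, not the authors' implementation: Equations (1), (9), (12), (13), and (16) are not reproduced in this text, so the trap shrinking, the min-max normalization, and the averaging of the two walks follow the commonly described ALO scheme; the linear `ratio` schedule standing in for step scaling is hypothetical; and `f_scale`, `cr`, and the walk length of 50 steps are arbitrary illustrative values.

```python
import numpy as np

def random_walk_position(center, lower, upper, ratio, steps, rng):
    """Walk around `center` inside a trap that shrinks as `ratio` grows."""
    # Shrink the boundaries and shift them around the selected antlion.
    lo = np.clip(center + lower / ratio, lower, upper)
    hi = np.clip(center + upper / ratio, lower, upper)
    # Cumulative sum of +/-1 steps, one independent walk per dimension.
    walk = np.cumsum(2 * (rng.random((steps, center.size)) > 0.5) - 1, axis=0)
    w_min, w_max = walk.min(axis=0), walk.max(axis=0)
    span = np.where(w_max > w_min, w_max - w_min, 1)
    # Min-max normalization of the final walk value into [lo, hi].
    return lo + (walk[-1] - w_min) * (hi - lo) / span

def dsalo_sketch(objective, lower, upper, dim, n_agents=20, max_iter=100,
                 f_scale=0.5, cr=0.9, seed=0):
    rng = np.random.default_rng(seed)
    antlions = rng.uniform(lower, upper, (n_agents, dim))
    fit = np.array([objective(x) for x in antlions])
    elite, elite_fit = antlions[fit.argmin()].copy(), fit.min()
    for t in range(1, max_iter + 1):
        # Hypothetical step scaling: traps tighten as iterations progress.
        ratio = 1.0 + 100.0 * t / max_iter
        # Roulette-wheel weights favouring fitter (lower-cost) antlions.
        weights = fit.max() - fit + 1e-12
        probs = weights / weights.sum()
        ants = np.empty_like(antlions)
        for i in range(n_agents):
            k = rng.choice(n_agents, p=probs)
            near_antlion = random_walk_position(antlions[k], lower, upper, ratio, 50, rng)
            near_elite = random_walk_position(elite, lower, upper, ratio, 50, rng)
            ants[i] = np.clip((near_antlion + near_elite) / 2, lower, upper)
        # Replace an antlion if its corresponding ant becomes fitter.
        ant_fit = np.array([objective(x) for x in ants])
        better = ant_fit < fit
        antlions[better], fit[better] = ants[better], ant_fit[better]
        # Mutation, crossover, and greedy selection (DE/rand/1/bin, in place).
        for i in range(n_agents):
            others = [j for j in range(n_agents) if j != i]
            a, b, c = rng.choice(others, size=3, replace=False)
            mutant = np.clip(antlions[a] + f_scale * (antlions[b] - antlions[c]), lower, upper)
            mask = rng.random(dim) < cr
            mask[rng.integers(dim)] = True
            trial = np.where(mask, mutant, antlions[i])
            trial_fit = objective(trial)
            if trial_fit <= fit[i]:
                antlions[i], fit[i] = trial, trial_fit
        # Update the elite antlion.
        if fit.min() < elite_fit:
            elite, elite_fit = antlions[fit.argmin()].copy(), fit.min()
    return elite, elite_fit
```

A call such as `dsalo_sketch(lambda x: float(np.sum(x ** 2)), -5.0, 5.0, dim=3)` returns the elite position and its fitness. The elite can only improve over iterations, because it is replaced only when a strictly fitter antlion appears.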

3. Performance Evaluation of Differential Step-Scaling Ant-Lion Algorithm

3.1. Algorithm Evaluation Criteria

3.2. Test Function

3.3. Analysis of Test Results

4. Parameter Optimization of ADRC

5. Conclusions and Future Perspectives

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Function | Search Range | Optimal Value |
|---|---|---|
| F1 | [−30, 30] | 0 |
| F2 | [−32, 32] | 0 |
| F3 | [−600, 600] | 0 |
| F4 | [−5.12, 5.12] | 0 |
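The functional expressions of F1 to F4 are not reproduced in the table above. As a hedged illustration only, the sketch below assumes they are the standard benchmarks conventionally used with these search ranges (Rosenbrock, Ackley, Griewank, and Rastrigin), each of which has a global minimum of 0. This mapping is an inference from the ranges, not something stated in the source.

```python
import numpy as np

def rosenbrock(x):
    """Assumed F1: minimum 0 at x = (1, ..., 1)."""
    x = np.asarray(x, dtype=float)
    return float(np.sum(100.0 * (x[1:] - x[:-1] ** 2) ** 2 + (x[:-1] - 1.0) ** 2))

def ackley(x):
    """Assumed F2: minimum 0 at the origin (up to floating-point rounding)."""
    x = np.asarray(x, dtype=float)
    n = x.size
    return float(-20.0 * np.exp(-0.2 * np.sqrt(np.sum(x ** 2) / n))
                 - np.exp(np.sum(np.cos(2.0 * np.pi * x)) / n) + 20.0 + np.e)

def griewank(x):
    """Assumed F3: minimum 0 at the origin."""
    x = np.asarray(x, dtype=float)
    i = np.arange(1, x.size + 1)
    return float(np.sum(x ** 2) / 4000.0 - np.prod(np.cos(x / np.sqrt(i))) + 1.0)

def rastrigin(x):
    """Assumed F4: minimum 0 at the origin."""
    x = np.asarray(x, dtype=float)
    return float(np.sum(x ** 2 - 10.0 * np.cos(2.0 * np.pi * x) + 10.0))

# Search ranges copied from the table; the function mapping is the assumption.
BENCHMARKS = {
    "F1": (rosenbrock, (-30.0, 30.0)),
    "F2": (ackley, (-32.0, 32.0)),
    "F3": (griewank, (-600.0, 600.0)),
    "F4": (rastrigin, (-5.12, 5.12)),
}
```

Each entry pairs an objective with its bounds, so an optimizer can be run over all four functions in a single loop.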

| Function | Algorithm | Mean Fitness | Standard Deviation | Maximum Fitness | Minimum Fitness |
|---|---|---|---|---|---|
| F1 | DSALO | 6.19 × 10⁻¹ | 1.37 × 10¹ | 2.27 | 1.71 × 10⁻³ |
| F1 | ALO | 4.43 × 10¹ | 6.83 × 10² | 8.99 × 10² | 9.32 |
| F1 | PSO | 3.73 × 10³ | 1.12 × 10¹ | 6.64 × 10³ | 8.93 × 10² |
| F1 | OEALO | 8.34 | 2.64 × 10¹ | 7.38 | 1.67 × 10⁻³ |
| F2 | DSALO | 5.28 × 10⁻¹⁵ | 9.46 × 10⁻³ | 4.89 × 10⁻¹⁴ | 8.73 × 10⁻¹⁶ |
| F2 | ALO | 2.65 × 10⁻⁵ | 5.34 × 10⁻² | 3.92 × 10⁻⁵ | 1.35 × 10⁻⁵ |
| F2 | PSO | 1.79 | 8.32 × 10⁻² | 3.17 | 1.01 |
| F2 | OEALO | 4.74 × 10⁻⁵ | 7.39 × 10⁻² | 1.44 × 10⁻⁵ | 8.39 × 10⁻⁶ |
| F3 | DSALO | 1.06 × 10⁻¹⁵ | 3.48 | 3.74 × 10⁻¹⁵ | 8.88 × 10⁻¹⁶ |
| F3 | ALO | 9.15 × 10⁻² | 6.83 × 10¹ | 4.7 × 10⁻¹ | 7.63 × 10⁻² |
| F3 | PSO | 4.36 × 10⁻² | 5.69 × 10⁻¹ | 7.97 × 10⁻² | 3.94 × 10⁻² |
| F3 | OEALO | 3.47 × 10⁻⁹ | 2.04 × 10⁻³ | 2.56 × 10⁻⁹ | 4.43 × 10⁻⁹ |
| F4 | DSALO | 3.39 × 10⁻⁴ | 2.71 × 10⁻¹ | 3.78 × 10⁻⁴ | 3.14 × 10⁻⁴ |
| F4 | ALO | 7.76 × 10⁻⁴ | 1.04 | 8.67 × 10⁻⁴ | 6.77 × 10⁻⁴ |
| F4 | PSO | 5.36 × 10⁻⁴ | 5.82 | 7.08 × 10⁻⁴ | 4.35 × 10⁻⁴ |
| F4 | OEALO | 3.57 × 10⁻⁴ | 3.85 × 10⁻¹ | 3.86 × 10⁻⁴ | 3.03 × 10⁻⁴ |
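Statistics of the kind reported in the table above are typically obtained by running each algorithm several times and summarizing the best fitness of each run. The helper below is a minimal sketch of that bookkeeping. The name `summarize_runs` and the use of the sample standard deviation (`ddof=1`) are assumptions, since the source does not state the number of runs or the estimator used.

```python
import numpy as np

def summarize_runs(final_fitness):
    """Summarize the best fitness values collected from independent runs."""
    values = np.asarray(final_fitness, dtype=float)
    return {
        "mean": float(values.mean()),
        # Sample standard deviation; ddof=1 is an assumed convention.
        "std": float(values.std(ddof=1)),
        "max": float(values.max()),
        "min": float(values.min()),
    }
```

For example, `summarize_runs([1.0, 2.0, 3.0, 4.0])` gives a mean of 2.5, a maximum of 4.0, and a minimum of 1.0.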

| Performance Indicator | Method | Index Number | | |
|---|---|---|---|---|
| IAE | DSALO | 2.6116 × 10³ | 1.1899 | 0.6133 |
| IAE | OEALO | 2.6776 × 10³ | 1.18026 | 0.57996 |
| IAE + overshoot | DSALO | 3.4860 × 10³ | 0.99298 | 0.54947 |
| IAE + overshoot | OEALO | 3.5029 × 10³ | 0.9577 | 0.5967 |
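The two performance indicators named in the table can be computed from a sampled closed-loop response. The sketch below uses the standard definition of the integral of absolute error (IAE), approximated with the trapezoidal rule. How the paper combines IAE with overshoot is not given in this text, so the additive form and the `weight` parameter in `iae_plus_overshoot` are assumptions for illustration.

```python
import numpy as np

def iae(t, reference, output):
    """Integral of absolute error, approximated with the trapezoidal rule."""
    t = np.asarray(t, dtype=float)
    error = np.abs(np.asarray(reference, dtype=float) - np.asarray(output, dtype=float))
    return float(np.sum(0.5 * (error[1:] + error[:-1]) * np.diff(t)))

def overshoot(setpoint, output):
    """Peak excursion of the output above a constant setpoint (0 if none)."""
    return max(0.0, float(np.max(output)) - float(setpoint))

def iae_plus_overshoot(t, reference, output, weight=1.0):
    """Assumed combined cost: IAE plus a weighted overshoot penalty."""
    reference = np.asarray(reference, dtype=float)
    return iae(t, reference, output) + weight * overshoot(reference[-1], output)
```

An optimizer would simulate the controlled plant for each candidate parameter set and use one of these values as its fitness, so a lower value means a better candidate.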

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Citation

Jin, Q.; Zhang, Y. Parameter Optimization of Active Disturbance Rejection Controller Using Adaptive Differential Ant-Lion Optimizer. Algorithms 2022, 15, 19. https://doi.org/10.3390/a15010019
