Abstract

Uncertainty or vagueness is usually used to reflect the limitations of human subjective judgment on practical problems. Conventionally, imprecise numbers, e.g., fuzzy and interval numbers, are used to cope with such issues. However, these imprecise numbers are hard for decision-makers to make decisions, and, therefore, many defuzzification methods have been proposed. In this paper, the information of the mean and spread/variance of imprecise data are used to defuzzify imprecise data via Mellin transform. We illustrate four numerical examples to demonstrate the proposed methods, and extend the method to the simple additive weighting (SAW) method. According to the results, our method can solve the problem of the inconsistency between the mean and spread, compared with the center of area (CoA) and bisector of area (BoA), and is easy and efficient for further applications.

1. Introduction

As we know, the real world is imperfect, imprecise, and uncertain. Hence, many theories and approaches, e.g., fuzzy sets, rough sets, and interval sets, have been proposed to reflect the vagueness of the real world. To reflect the human’s subjective uncertainty of linguistics, Professor Zadeh used fuzzy numbers to capture the uncertainty and the membership function to be the corresponding weights [1]. Usually, a fuzzy number is represented by a triangular fuzzy number (a1, a2, a3), where a1, a2, and a3 are the left, center, and right values of a fuzzy number, respectively. Indeed, we can use alternative forms, e.g., trapezoidal or Gaussian, to present fuzzy numbers.

However, in most practical situations, we still hope the information for decision-making is clear and crisp. Therefore, many defuzzification methods are proposed and used to transform imprecise values, e.g., interval or fuzzy numbers, into crisp values for decision-making. Note that defuzzifying imprecise data means using some functions to transform fuzzy or interval numbers into crisp values. Since imprecise data analysis, no matter interval or fuzzy numbers, is popular in many fields, e.g., multiobjective programming [2,3,4], data envelopment analysis (DEA) [5,6,7], and decision sciences [8,9,10], the defuzzification of imprecise numbers is a critical topic.

The problem of defuzzifying interval numbers is similar to that of defuzzifying fuzzy numbers. The main difference is that the y-axis of fuzzy numbers denotes the membership function, but the y-axis of interval numbers is the probability density function (pdf). The authors of [11] first proposed the concept of ranking fuzzy numbers using the mean and spread. However, it is inefficient to determine the better fuzzy number or interval data when one has a higher mean and a higher spread. Although the concept of coefficient of variance (CV) was provided in [12] to cope with the problem of inconsistency, it fails when there are crisp values, and it is hard to apply to follow-up multiple attribute decision-making (MADM) applications, such as simple additive weighting (SAW) or weight product method (WPM), due to the fact the original unit is changed. Hence, an easy and efficient algorithm to defuzzify imprecise numbers is a critical issue for practical applications.

In this paper, we first find the mean and spread of imprecise numbers via the Mellin transformation. Then, we derive the trade-off coefficient between the mean and spread. Finally, we develop the preference relation of imprecise numbers to rank them. In addition, we use four examples to demonstrate our concepts and extend our method to choose the best alternative using the SAW method. Based on the numerical results, our method can handle the problem of the inconsistency between the mean and the spread with respect to the center of area (CoA) and the bisector of area (BoA), and can be easily used in further applications.

The rest of this paper is organized as follows. Section 2 states the problem of the inconsistency between the mean and the spread. Section 3 derives the relation between the mean and the spread according to the utility theory. The detail of the Mellin transform is presented in Section 4. Four numerical examples are used in Section 5 to demonstrate our concept. Discussion is presented in Section 6, and the final section presents conclusions.

2. Statement of the Problem

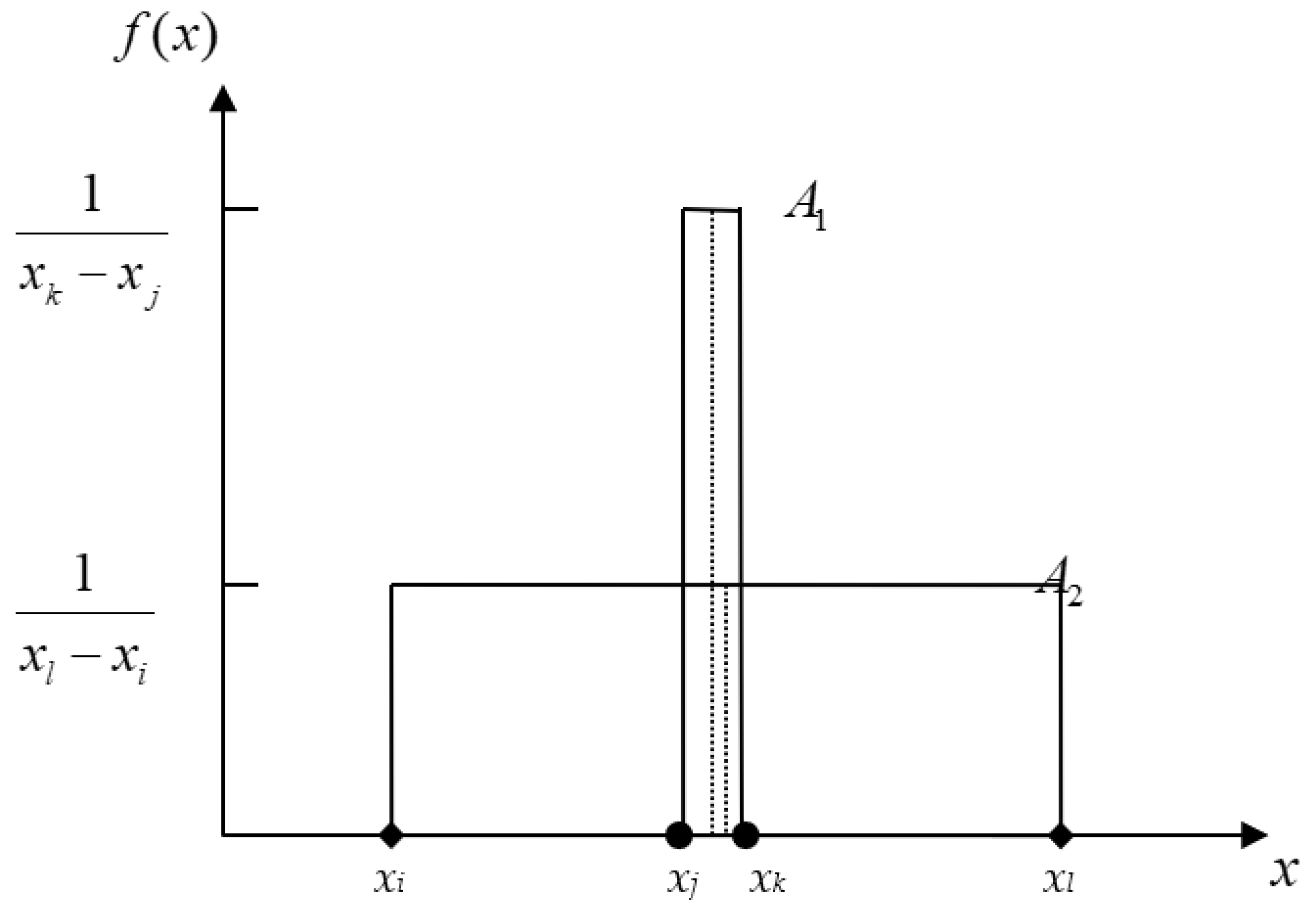

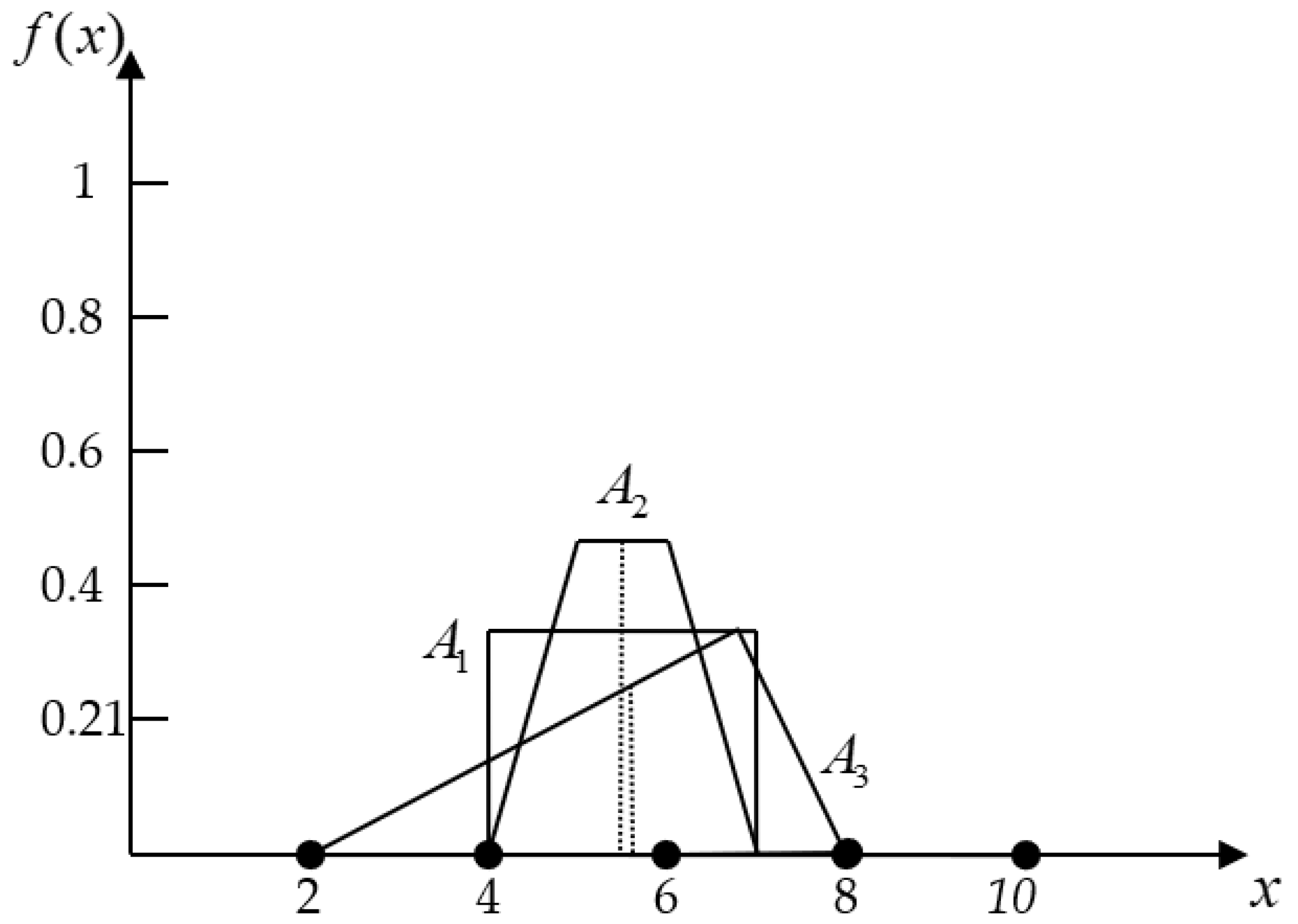

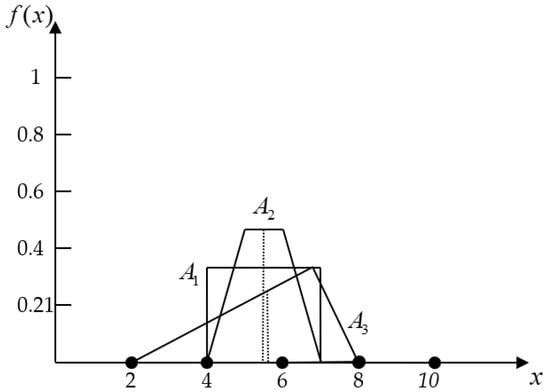

Assume there are two alternatives, and , and their means and spreads are , , and , , respectively. According to [10], the rules for ranking fuzzy numbers is if or if and . However, if we assume there are two imprecise numbers with uniform distributions, then the rules seem incorrect when there is a smaller mean and a smaller spread, as in Figure 1.

Figure 1.

The conflicting situation in precise data.

It is intuitive for a decision-maker to prefer over , even though has a higher mean than . That being said, the higher mean does not necessarily have the higher ranking order if the spread is too large, i.e., the mean and the spread comprise the trade-off relation. This situation is also called risk premium (RP), which represents the additional compensation required for assuming a higher risk, and is usually discussed in economic and financial management.

In addition, [12] proposed another criterion, called the CV index, to improve Lee and Li’s method [11] according to the following equation:

where denotes the spread and denotes the mean.

However, it is clear that the CV index fails when there are crisp values or the mean is zero. In addition, another shortcoming is that the result is difficult to interpret because the unit has changed. Hence, many researchers provided different methods to efficiently rank fuzzy numbers, e.g., [13,14,15,16,17], or interval numbers, e.g., [16,17].

Another practical issue is that ranking imprecise numbers is not enough for further applications. A decision-maker might like to derive the crisp values of alternatives [18]. Surely, plenty of defuzzification methods have been proposed to efficiently derive the crisp values from imprecise numbers, e.g., the center of sums method, the CoA method, the BoA method, the weighted average method, and different kinds of maxima methods. However, none of them can handle all the considered situations here, and we will compare our method with CoA and BoA to justify the proposed method. Here, we incorporate the concept of risk premium into our method and derive the trade-off coefficient between the mean and spread to rank and defuzzify imprecise numbers.

3. A Trade-Off between the Mean and Spread

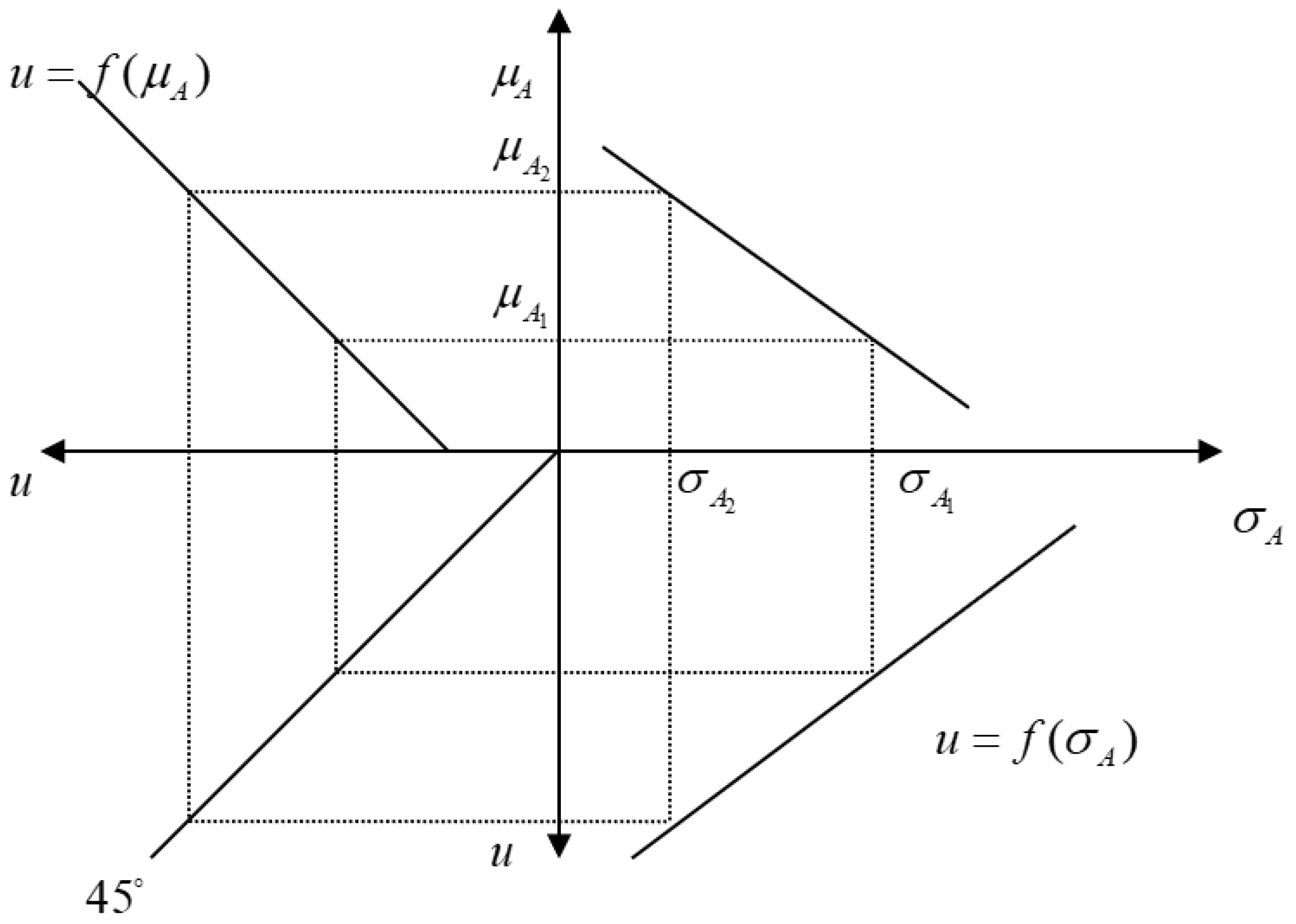

According to utility theory, it is intuitive that a higher mean () has a higher utility () and a lower spread (), correspondingly, has a higher utility. For simplicity, we assume the equations are linear as:

and

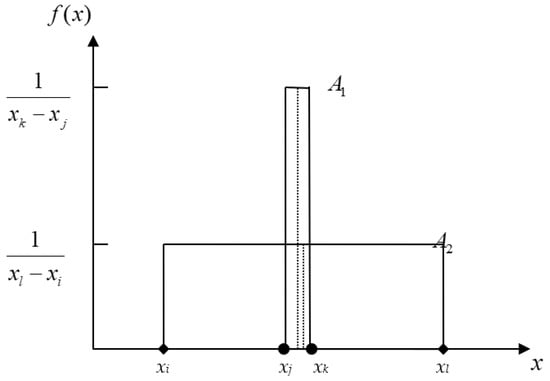

Next, we derive the relationship between the mean and spread according to our assumptions. Let two points be and then , and can be derived by Equations (2) and (3) to form the relationship between and , as shown in Figure 2.

Figure 2.

The relationship between the mean and the spread.

According to Figure 2, we know that having an alternative with a larger spread is the same as having a smaller mean and vice versa. Hence, the trade-off between the mean and the spread can be defined as:

where is an indifference ratio between the mean and the spread.

Next, we develop a criterion to incorporate the mean and the spread according to Equation (4). This is because if we can transform the spread to the mean, we can easily compare any imprecise data using the rule for the higher mean with the higher ranking order. Based on this concept, we can transform the difference between the spreads into the difference between the means and let:

Then, we can obtain the equation to be the criterion to rank the imprecise data as:

where denotes the preference difference between two imprecise numbers and = indicates the trade-off ratio between the mean and the spread.

Equation (7) indicates that the grades of the utility in are superior to , so we can conclude that

As the mean and spread are monotone functions, it is obvious that satisfies the transitive axiom (i.e., if and , then ). In addition, our simulation indicates is approximately equal to −0.25, and the method of our experiment is listed in Appendix A.

Let us consider the defuzzifying problem of the proposed method as follows. Let be a status quo alternative. The defuzzifying value of is its , and the defuzzifying value of can be derived as:

In addition, this paper uses the Mellin transform to calculate the mean and spread/variance in any distribution quickly. Next, we describe details of the Mellin transform, and link it to the nth moment to calculate the mean and spread.

4. An Application of the Mellin Transform

Given a random variable, the Mellin transform, of a pdf, , can be defined as:

Let be a measurable function on into and is a random variable. Then, some properties of the Mellin transform can be described, as shown in Table 1. For example, if then the scaling property can be expressed as:

Table 1.

The properties of the Mellin transform.

Given a continuous non-negative random variable, X, the nth moment of X is denoted by and is defined as:

For n = 1, the mean of X can be expressed as:

and the variance of X can be calculated by:

Since the relation between the nth moment and the Mellin transform of X can be linked by:

then the mean and the variance of X can be calculated by:

According to Equations (18) and (19), we can quickly calculate the mean and the spread in any distribution. In practice, the uniform, the triangular, and the trapezoidal distribution are usually used, and their Mellin transforms are summarized as shown in Table 2. More Mellin transforms in various probabilistic density functions can be found in [19].

Table 2.

Mellin transform of some probability density functions.

Based on Table 2, the mean and spread values can be efficiently derived by calculating and . Then, we can rank and defuzzify imprecise data using Equation (7). The following section uses four numerical examples to show the detailed procedures of the proposed method.

5. Numerical Examples

In this section, we demonstrate four examples to show how our method ranks imprecise data. The first three examples illustrate the way to rank various imprecise data. The last example demonstrates our approach to extending to the simple additive weighting method. The Mellin transform derives the values of the mean and spread, and the preference of objects is obtained by incorporating the variance into the mean value.

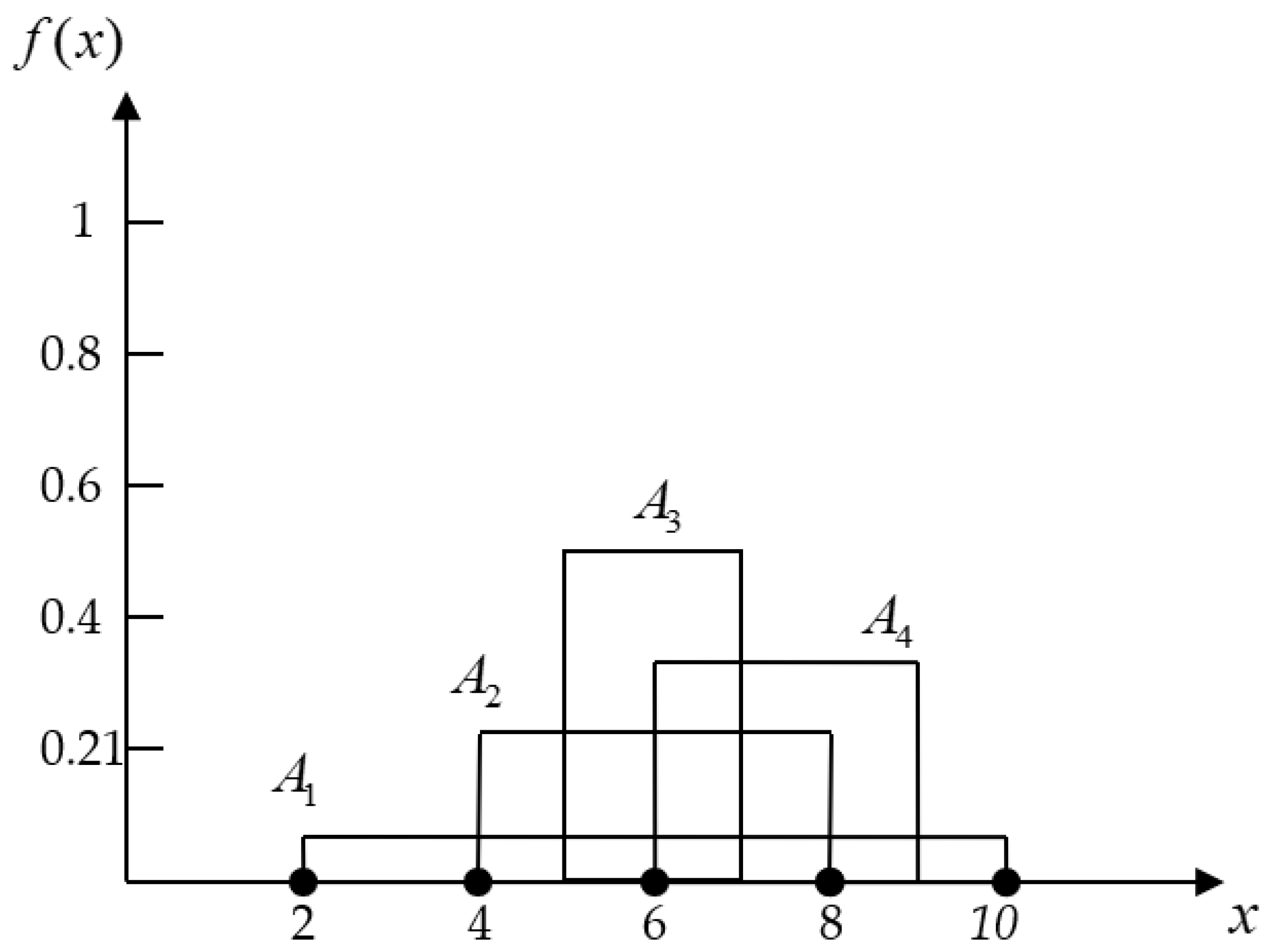

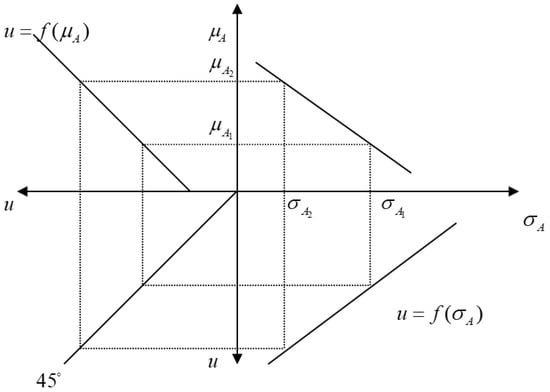

Example 1.

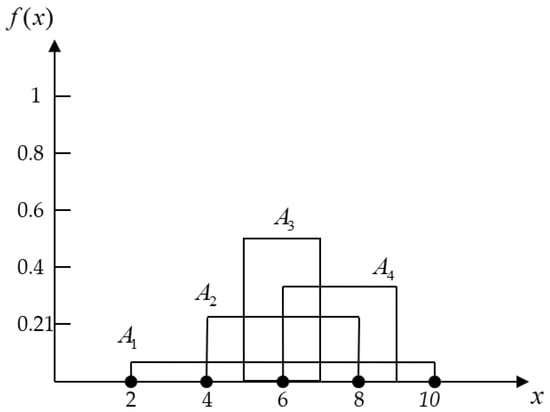

Let four alternatives using imprecise data be used to measure the preference where we do not know any information about our alternatives (i.e., assuming they have uniform distribution). Their imprecise preferences are A1 = (2, 10), A2 = (4, 8), A3 = (5, 7), and A4 = (6, 9), and these probability density functions are represented in Figure 3.

Figure 3.

The probability density function in the first example.

According to the Mellin transform, we can obtain:

then the differences in the alternatives’ total mean can be calculated as:

Based on the above result, we can conclude the ranking result is . We can set A1 as the status quo alternative and defuzzify other alternatives. The result is compared with CoA and Boa and presented, as shown in Table 3.

Table 3.

The comparison of defuzzifying approaches in Example 1.

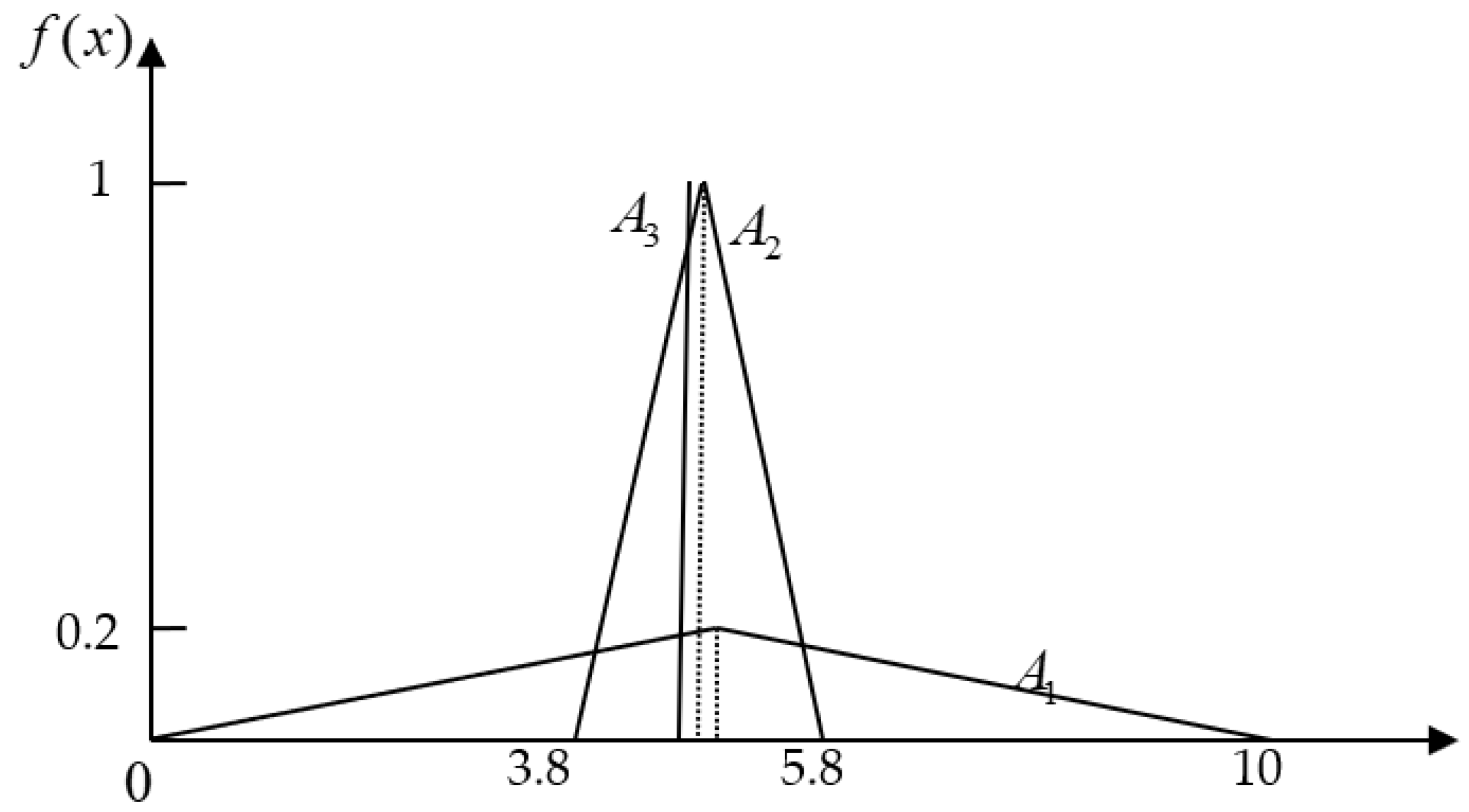

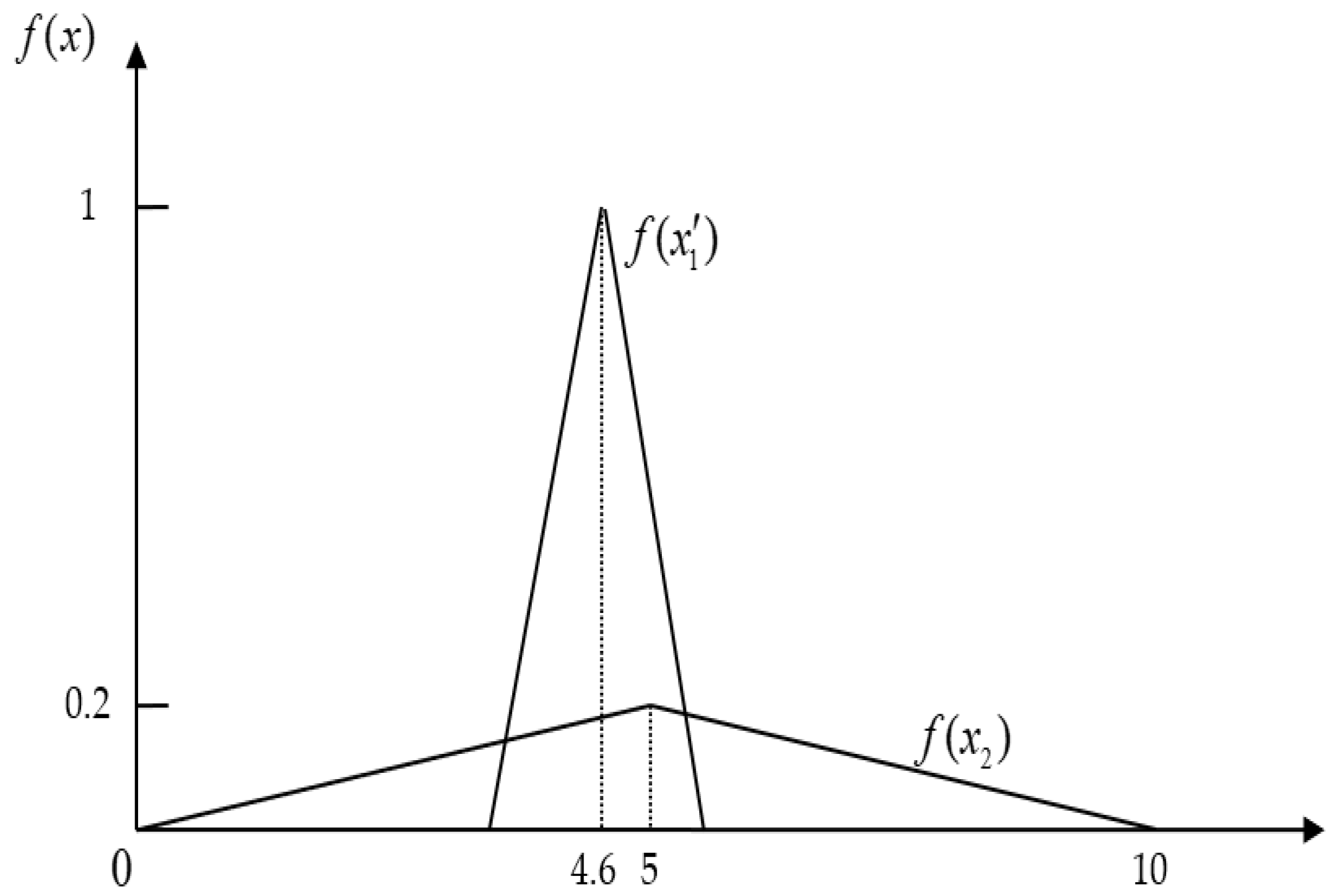

Example 2.

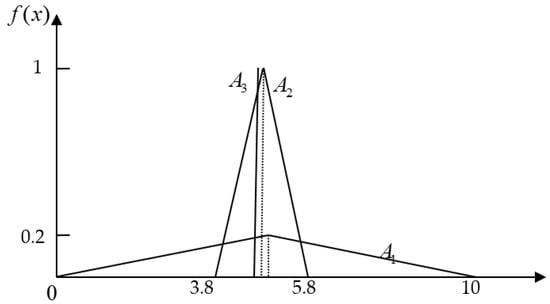

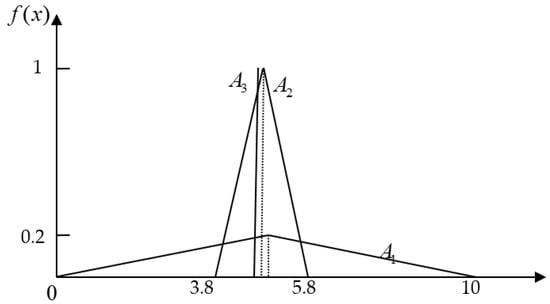

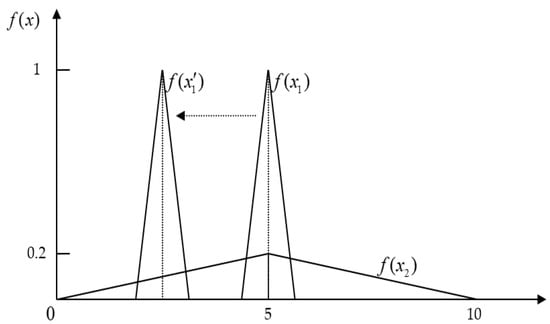

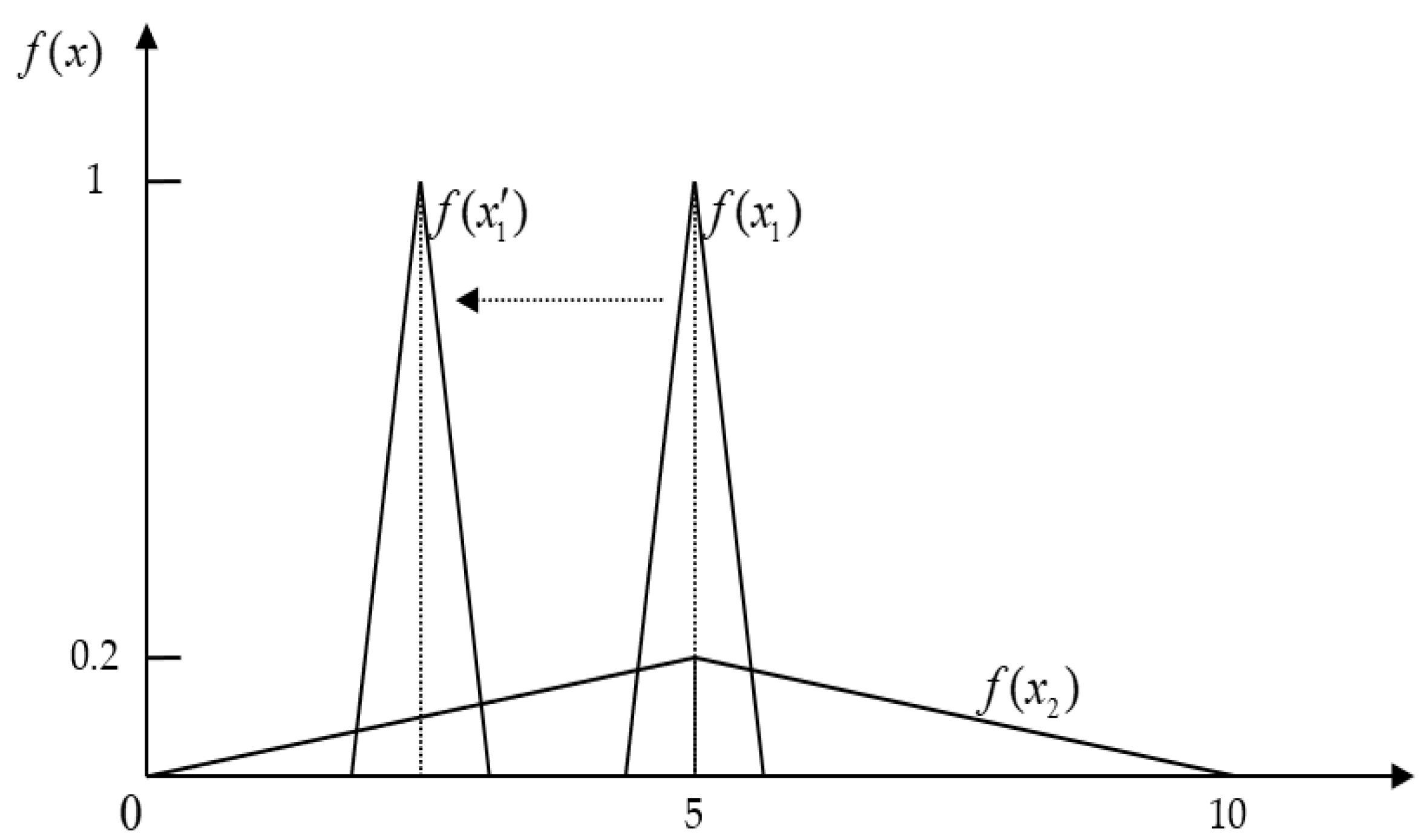

Given two imprecise data with triangular distributions, A1 = (0, 6, 10) and A2 = (3.8, 4.8, 5.8), and a crisp value A3 = 4.6, to present the measurement of the preference in A1, A2, and A3. Their probability density functions are listed in Figure 4.

Figure 4.

The probability density function in the second example.

By using the Mellin transform,

then the differences in the alternative’s total mean can be calculated as:

The above result indicates that the preference relation is , where A3 is the best choice. Then, we can compare the proposed method with CoA and Boa, as shown in Table 4.

Table 4.

The comparison of defuzzifying approaches in Example 2.

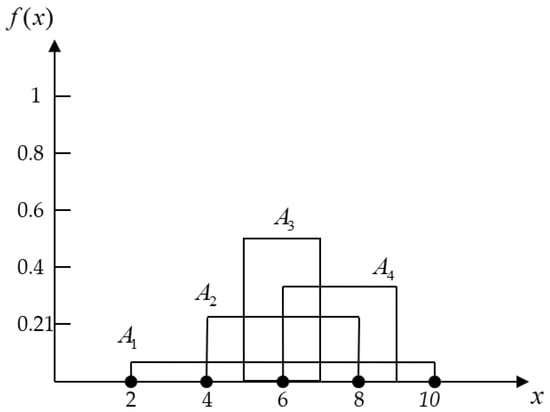

Example 3.

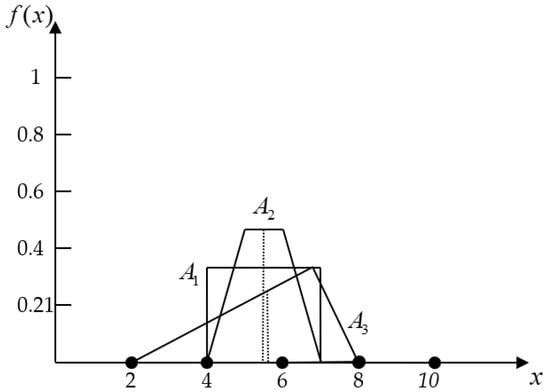

Assume three alternatives (A1, A2, and A3) using interval data to measure the preference rating, and of these alternatives, A2 and A3 are known to have trapezoidal and triangular distributions, respectively. Their scores are A1 = (4, 7), A2 = (4, 5, 6, 7), and A3 = (2, 7, 8), respectively, and the probability density functions are described in Figure 5.

Figure 5.

The probability density function in the third example.

By using the Mellin transform, the mean and the spread can be calculated as:

then the differences in the alternatives’ total mean can be calculated as:

The above result indicates that , and we can compare the proposed method with CoA and Boa, as shown in Table 5.

Table 5.

The comparison of defuzzifying approaches in Example 3.

Example 4.

In order to extend to further applications in MADM, we demonstrate a way to employ the SAW method using our concept. The SAW method can be expressed as:

whereis the value function of alternative, andandare weight and value functions of attribute, respectively. After a normalization process, the value of alternativecan be rewritten as:

Next, we can apply our concept to the simple additive weighting method according to follow equations:

and let:

Then,

whenmeanswhere, and vice versa.

Assume there are three alternatives described in Table 6, where each alternative is measured using interval data. In addition, we assume these alternatives satisfy the uniform distributions.

Table 6.

Data for evaluation in the fourth example.

Next, we need to normalize all alternatives, and linear normalization is most often used in the SAW method [20]. Therefore, in this example, we normalize the interval data according to the following equation:

where and are the interval boundary, and is the maximum value in jth attribute. Based on Equation (20), we can normalize the interval data in Table 7.

Table 7.

Data for evaluation in the fourth example after normalization.

After this normalization process, we can calculate the differences between the alternatives’ means as follows:

According to the results and , and the alternative is the best choice.

6. Discussions

One critical issue for imprecise numbers is to rank their order and defuzzify their values. This paper focuses on imprecise data, and uses the mean and spread to defuzzify and rank data. Although the CoA and BoA are widely used to defuzzify fuzzy numbers because of their simplicity, they can obtain wrong preferences in more complicated situations. According to the utility theory, the difference between the spreads can be transformed into the difference between the means by multiplying a specific ratio. Therefore, we can develop some equations to achieve our purpose here.

Our method has several characteristics when ranking imprecise numbers based on our numerical examples. First, when imprecise numbers have the same mean, the smaller spread has the higher-ranking preference. Second, the influence of the spread to certain utilities can clearly be reflected in the results, so it is not necessary to have the higher mean indicate the higher-ranking preference if the spread is too large. However, the trade-off between the mean and the spread is not mentioned in Lee and Li’s method [10]. Third, our method is suitable for both imprecise and crisp data or mixed data. Last, our method does not change the measurement unit, and the results can be interpreted more intuitively and easily applied to further areas of research.

The main concept of our method is that the difference in the utility should be larger than zero when one preference is larger than another. We use three examples and the SAW method to demonstrate our concept. Based on the results, our methods can rank the preferences correctly. However, the correctness of the proposed method highly depends on the choice of . Although we propose an experimental method to determine the parameter, other sophisticated ways should be considered in different applications or considerations. The choice of the parameter also provides the flexibility for decision-makers to reflect on the facing problems.

7. Conclusions

According to the probability theory, we can describe the characteristics of imprecise data depending on its mean and spread. These two criteria are also used to rank interval and fuzzy numbers. However, the mean and spread exist in a trade-off relationship, and it is not necessary to choose the alternative with the higher mean if the spread of the alternative is too large. In order to solve the problem of this inconsistency under the perspectives of the mean and the spread, the difference between the spread is transformed into the difference between the mean. According to the results of the preference difference between alternatives, we can rank the priority of the imprecise data. In addition, we also provide a method to derive the crisp value of an imprecise number.

There are several advantages to our proposed method. First, after transforming the spread, we can overcome the problem of being unable to determine the better ranking when one has a higher mean and spread. Second, no matter the imprecise data distribution, the mean and spread can quickly be calculated by the Mellin transform. Third, interpretation is easier and more intuitive because the unit is not changed. In addition, our method can be easily extended to other methods in MADM for further applications.

Author Contributions

Conceptualization, C.-Y.C. and J.-J.H.; methodology, J.-J.H.; writing—review and editing, C.-Y.C. and J.-J.H. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Not applicable.

Conflicts of Interest

The authors declare no conflict of interest.

Abbreviations

| Abbreviation | Definition |

| DEA | Data envelopment analysis |

| Probability density function | |

| CV | Coefficient of variance |

| MADM | Multiple attribute decision-making |

| SAW | Simple additive weighting |

| WPM | simple product weighting |

| CoA | Center of area |

| BoA | Bisector of area |

| RP | Risk premium |

| Symbol | |

| Alternative | |

| Mean | |

| Spread | |

| Utility function | |

| Indifference ratio between the mean and the spread | |

| Trade-off ratio between the mean and the spread | |

| Preference difference between two imprecise numbers | |

| Mellin transform | |

| [,] | Interval boundary |

Appendix A

Based on the above discussion, the trade-off relationship between the mean and the spread can be defined as:

where θ < 0 is an indifference ratio between the mean and the spread.

ΔμA = θΔσA

According to the equation, the mean and the spread have a trade-off of indifference ratio and have no relation to the shape of the alternative. Next, we simulate various kinds of situations to determine the indifference ratio.

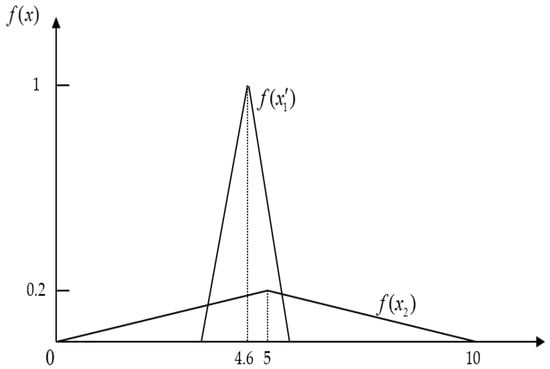

Two alternatives, and , measured using imprecise data, = (4, 6) and = (0, 10), with triangular distribution, respectively, and their probability density functions ( and ) are described in Figure A1. The mean and the spread of and can be calculated using the Mellin transform as:

Figure A1.

The concept of finding the indifference ratio.

Figure A1.

The concept of finding the indifference ratio.

It is obvious that (with the same mean and has the smaller spread), and then we move to the left by decreasing until the expert cannot figure out which one is better, i.e., we view in this situation. Based on our experiments, when the , then and . The results are described in Figure A2.

Figure A2.

The trade-off result where .

Figure A2.

The trade-off result where .

References

- Zadeh, L.A. Fuzzy Set Theory and Its Applications; University of California: Los Angeles, CA, USA, 1965; Volume 8, p. 338. [Google Scholar]

- Ishibuchi, H.; Tanaka, H. Multiobjective programming in optimization of the interval objective function. Eur. J. Oper. Res. 1990, 48, 219–225. [Google Scholar] [CrossRef]

- Shaocheng, T. Interval number and fuzzy number linear programmings. Fuzzy Sets Syst. 1994, 66, 301–306. [Google Scholar] [CrossRef]

- Sengupta, A.; Pal, T.K.; Chakraborty, D. Interpretation of inequality constraints involving interval coefficients and a solution to interval linear programming. Fuzzy Sets Syst. 2001, 119, 129–138. [Google Scholar] [CrossRef]

- Tavana, M.; Khalili-Damghani, K.; Arteaga, F.J.S.; Mahmoudi, R.; Hafezalkotob, A. Efficiency decomposition and measurement in two-stage fuzzy DEA models using a bargaining game approach. Comput. Ind. Eng. 2018, 118, 394–408. [Google Scholar] [CrossRef]

- Zhou, X.; Wang, Y.; Chai, J.; Wang, L.; Wang, S.; Lev, B. Sustainable supply chain evaluation: A dynamic double frontier network DEA model with interval type-2 fuzzy data. Inf. Sci. 2019, 504, 394–421. [Google Scholar] [CrossRef]

- Arana-Jiménez, M.; Sánchez-Gil, M.; Younesi, A.; Lozano, S. Integer interval DEA: An axiomatic derivation of the technology and an additive, slacks-based model. Fuzzy Sets Syst. 2021, 422, 83–105. [Google Scholar] [CrossRef]

- Akram, M.; Adeel, A. TOPSIS Approach for MAGDM Based on Interval-Valued Hesitant Fuzzy N-Soft Environment. Int. J. Fuzzy Syst. 2019, 21, 993–1009. [Google Scholar] [CrossRef]

- Tolga, A.C.; Parlak, I.B.; Castillo, O. Finite-interval-valued Type-2 Gaussian fuzzy numbers applied to fuzzy TODIM in a healthcare problem. Eng. Appl. Artif. Intell. 2020, 87, 103352. [Google Scholar] [CrossRef]

- Wu, L.; Gao, H.; Wei, C. VIKOR method for financing risk assessment of rural tourism projects under interval-valued intuitionistic fuzzy environment. J. Intell. Fuzzy Syst. 2019, 37, 2001–2008. [Google Scholar] [CrossRef]

- Lee, E.; Li, R.-J. Comparison of fuzzy numbers based on the probability measure of fuzzy events. Comput. Math. Appl. 1988, 15, 887–896. [Google Scholar] [CrossRef]

- Cheng, C.-H. A new approach for ranking fuzzy numbers by distance method. Fuzzy Sets Syst. 1998, 95, 307–317. [Google Scholar] [CrossRef]

- Ban, A.I.; Coroianu, L. Simplifying the Search for Effective Ranking of Fuzzy Numbers. IEEE Trans. Fuzzy Syst. 2014, 23, 327–339. [Google Scholar] [CrossRef]

- Chanas, S.; Zieliński, P. Ranking fuzzy interval numbers in the setting of random sets–further results. Inf. Sci. 1999, 117, 191–200. [Google Scholar] [CrossRef]

- Chanas, S.; Delgado, M.; Verdegay, J.; Vila, M. Ranking fuzzy interval numbers in the setting of random sets. Inf. Sci. 1993, 69, 201–217. [Google Scholar] [CrossRef]

- Moore, R.E. Interval Analysis; Prentice-Hall: Englewood Cliffs, NJ, USA, 1966; Volume 4, pp. 8–13. [Google Scholar]

- Sengupta, A.; Pal, T.K. On comparing interval numbers. Eur. J. Oper. Res. 2000, 127, 28–43. [Google Scholar] [CrossRef]

- Kundu, S. Min-transitivity of fuzzy leftness relationship and its application to decision making. Fuzzy Sets Syst. 1997, 86, 357–367. [Google Scholar] [CrossRef]

- Yoon, K. A probabilistic approach to rank complex fuzzy numbers. Fuzzy Sets Syst. 1996, 80, 167–176. [Google Scholar] [CrossRef]

- Yoon, K.P.; Huang, C.L. Multiple Attribute Decision Making: An Introduction, Sage University Paper Series on Quantitative Applications in the Social Sciences; Sage: Thousand Oaks, CA, USA, 1995. [Google Scholar]

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations. |

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).