Pose-Based Gait Analysis for Diagnosis of Parkinson’s Disease

Abstract

1. Introduction

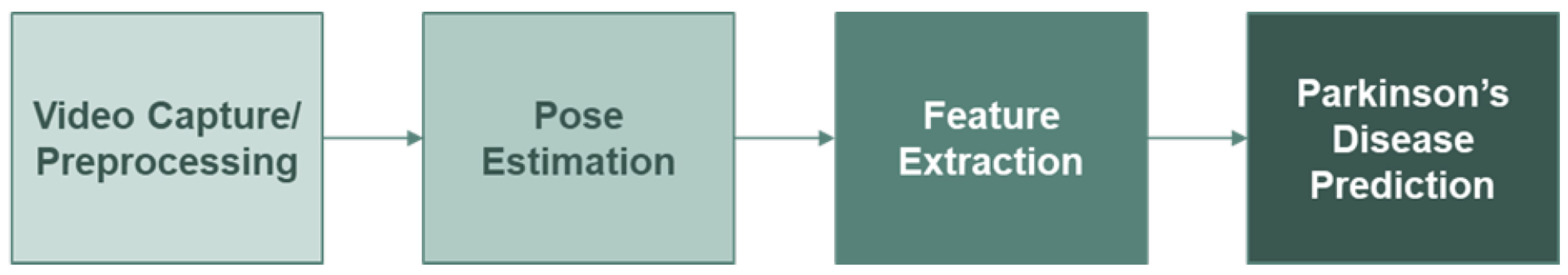

2. Methodology

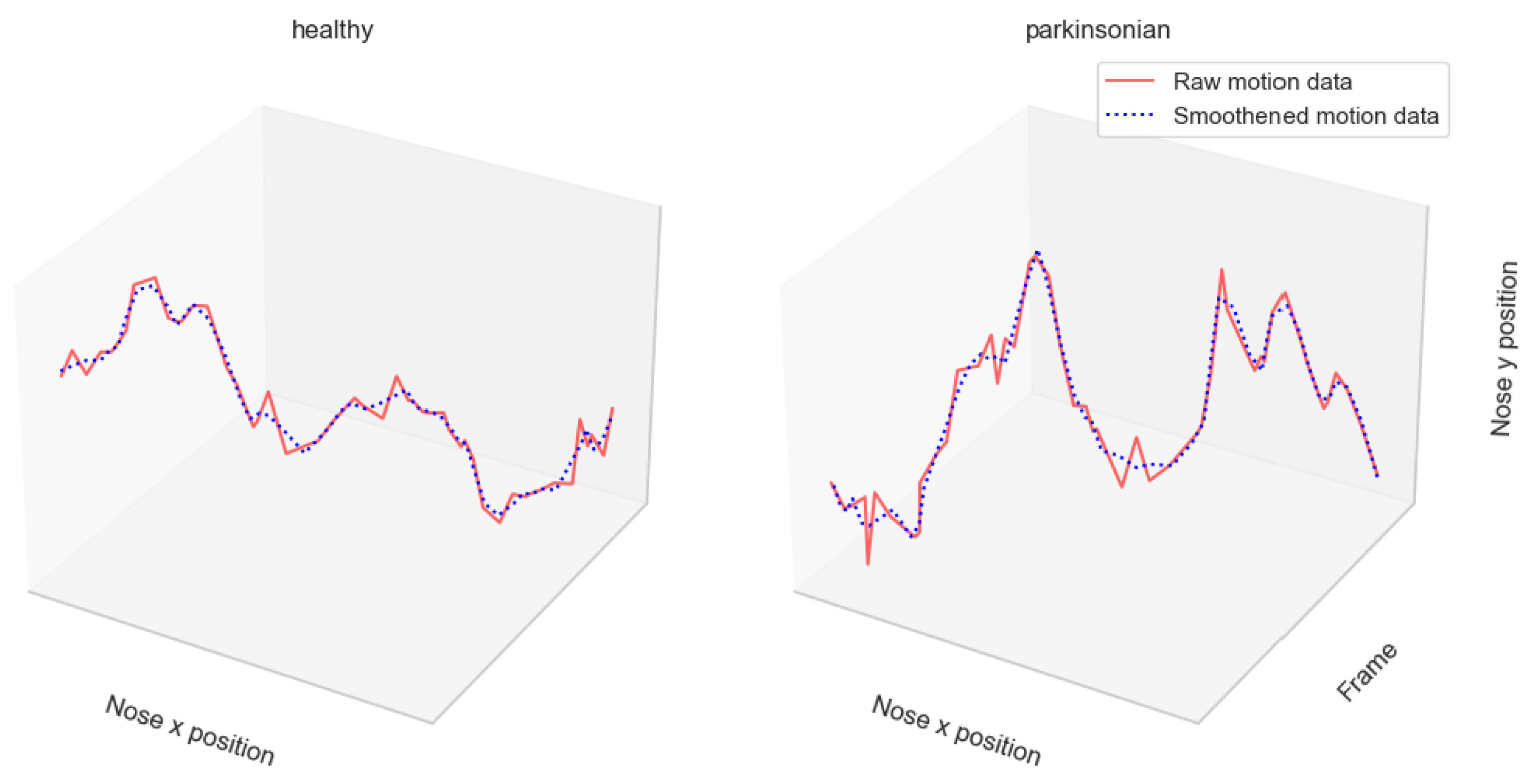

2.1. Video Capture and Preprocessing

2.2. Human Pose Estimation

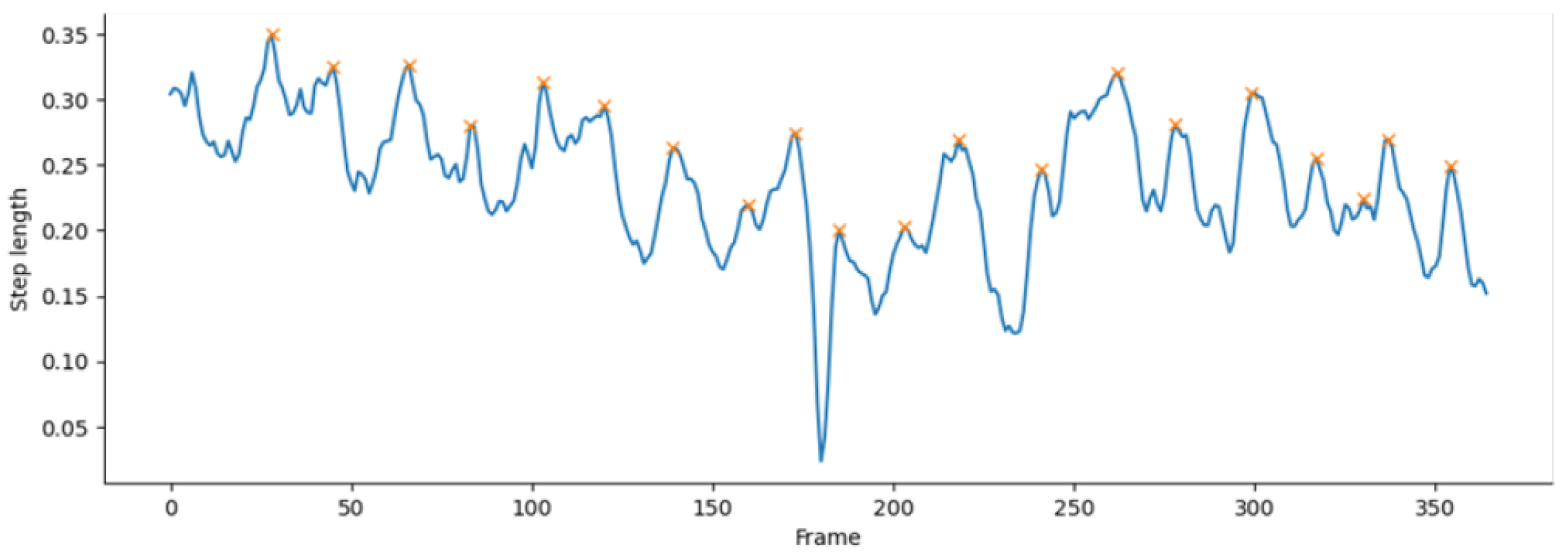

2.3. Feature Extraction

3. Experiments and Results

3.1. Experiment Setup

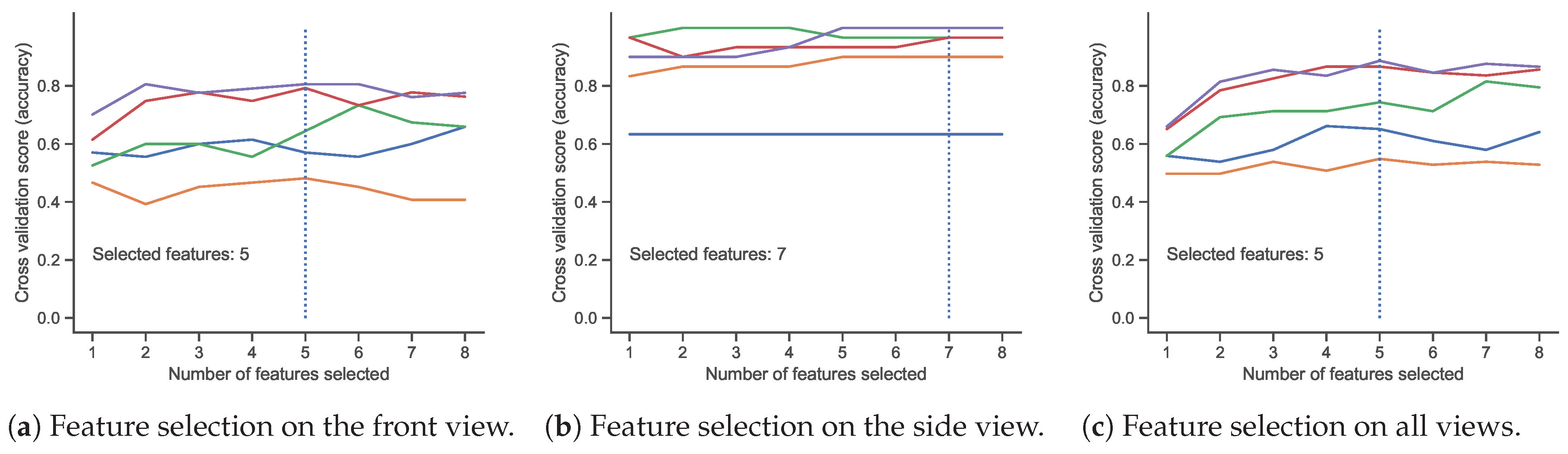

3.2. Feature Selection

3.3. Experimental Results

4. Conclusions and Future Work

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Meaning |
|---|---|
| 2D | 2-dimensional |
| PD | Parkinson’s disease |
| HPE | Human pose estimation |

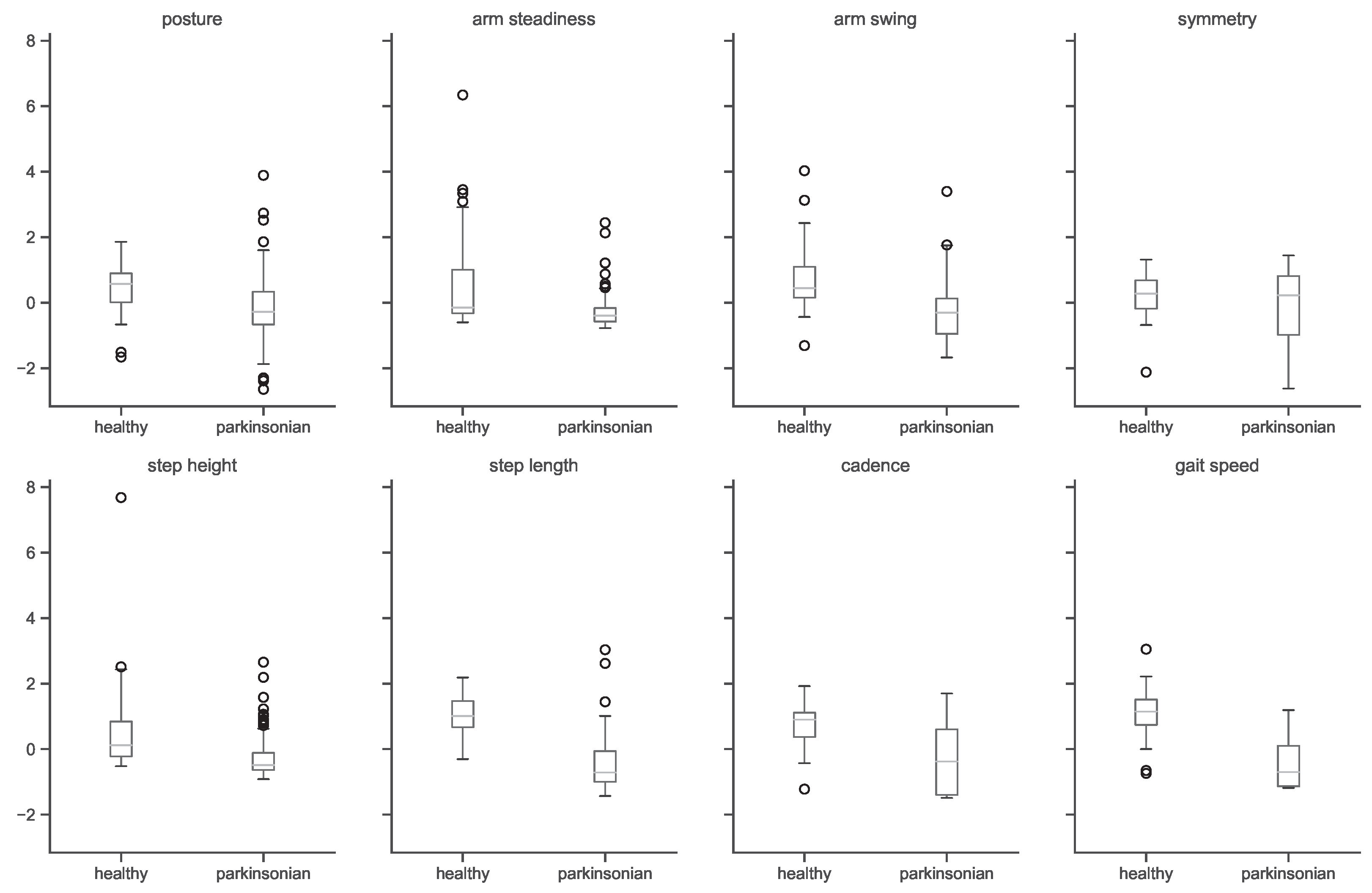

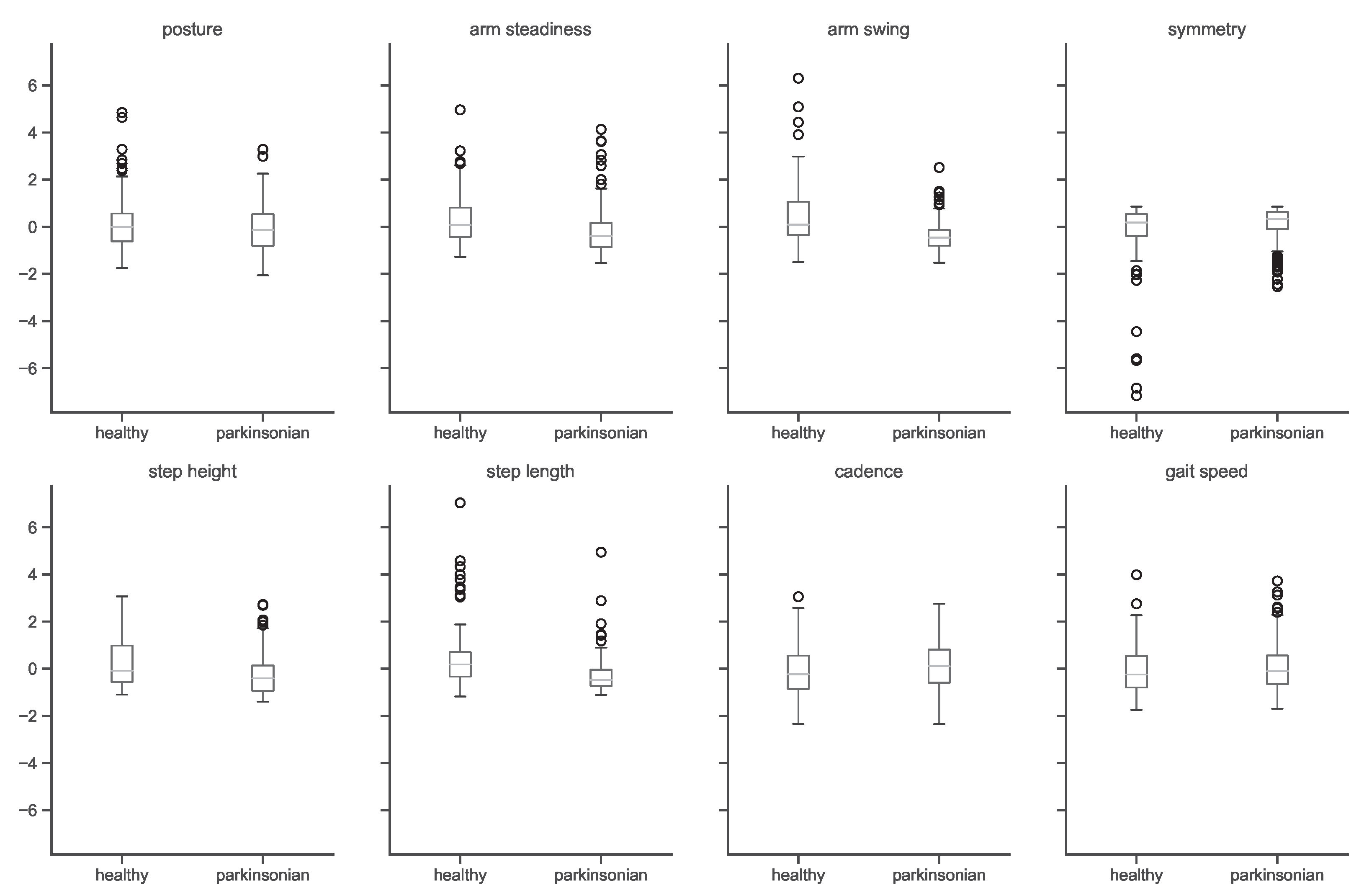

Appendix A. Features Analysis

References

- Morris, M.E.; Huxham, F.; McGinley, J.; Dodd, K.; Iansek, R. The biomechanics and motor control of gait in Parkinson disease. Clin. Biomech. 2001, 16, 459–470.
- Åström, F.; Koker, R. A parallel neural network approach to prediction of Parkinson’s Disease. Expert Syst. Appl. 2011, 38, 12470–12474.
- Postuma, R.B.; Montplaisir, J. Predicting Parkinson’s disease—Why, when, and how? Park. Relat. Disord. 2009, 15, S105–S109.
- Sadek, R.M.; Mohammed, S.A.; Abunbehan, A.R.K.; Ghattas, A.K.H.A.; Badawi, M.R.; Mortaja, M.N.; Abu-Nasser, B.S.; Abu-Naser, S.S. Parkinson’s Disease Prediction Using Artificial Neural Network. Int. J. Acad. Health Med. Res. (IJAHMR) 2019, 3, 1–8.
- di Biase, L.; Di Santo, A.; Caminiti, M.L.; De Liso, A.; Shah, S.A.; Ricci, L.; Di Lazzaro, V. Gait Analysis in Parkinson’s Disease: An Overview of the Most Accurate Markers for Diagnosis and Symptoms Monitoring. Sensors 2020, 20, 3529.
- Aderinola, T.B.; Connie, T.; Ong, T.S.; Yau, W.C.; Teoh, A.B.J. Learning Age From Gait: A Survey. IEEE Access 2021, 9, 100352–100368.
- Margiotta, N.; Avitabile, G.; Coviello, G. A wearable wireless system for gait analysis for early diagnosis of Alzheimer and Parkinson disease. In Proceedings of the 2016 5th International Conference on Electronic Devices, Systems and Applications (ICEDSA), Ras Al Khaimah, United Arab Emirates, 6–8 December 2016; pp. 1–4.
- Sivaranjini, S.; Sujatha, C.M. Deep learning based diagnosis of Parkinson’s disease using convolutional neural network. Multimed. Tools Appl. 2020, 79, 15467–15479.
- Zhao, H.; Tsai, C.C.; Zhou, M.; Liu, Y.; Chen, Y.L.; Huang, F.; Lin, Y.C.; Wang, J.J. Deep learning based diagnosis of Parkinson’s Disease using diffusion magnetic resonance imaging. Brain Imaging Behav. 2022, 16, 1749–1760.
- Oh, S.L.; Hagiwara, Y.; Raghavendra, U.; Yuvaraj, R.; Arunkumar, N.; Murugappan, M.; Acharya, U.R. A deep learning approach for Parkinson’s disease diagnosis from EEG signals. Neural Comput. Appl. 2020, 32, 10927–10933.
- Aghzal, M.; Mourhir, A. Early Diagnosis of Parkinson’s Disease based on Handwritten Patterns using Deep Learning. In Proceedings of the 2020 Fourth International Conference on Intelligent Computing in Data Sciences (ICDS), Fez, Morocco, 21–23 October 2020; pp. 1–6.
- Pereira, C.R.; Weber, S.A.T.; Hook, C.; Rosa, G.H.; Papa, J.P. Deep Learning-Aided Parkinson’s Disease Diagnosis from Handwritten Dynamics. In Proceedings of the 2016 29th SIBGRAPI Conference on Graphics, Patterns and Images (SIBGRAPI), Sao Paulo, Brazil, 4–7 October 2016; pp. 340–346, ISSN 2377-5416.
- Ali, M.R.; Myers, T.; Wagner, E.; Ratnu, H.; Dorsey, E.R.; Hoque, E. Facial expressions can detect Parkinson’s disease: Preliminary evidence from videos collected online. NPJ Digit. Med. 2021, 4, 129.
- Bandini, A.; Orlandi, S.; Escalante, H.J.; Giovannelli, F.; Cincotta, M.; Reyes-Garcia, C.A.; Vanni, P.; Zaccara, G.; Manfredi, C. Analysis of facial expressions in parkinson’s disease through video-based automatic methods. J. Neurosci. Methods 2017, 281, 7–20.
- Balaji, E.; Brindha, D.; Balakrishnan, R. Supervised machine learning based gait classification system for early detection and stage classification of Parkinson’s disease. Appl. Soft Comput. 2020, 94, 106494.
- Mehta, D.; Asif, U.; Hao, T.; Bilal, E.; von Cavallar, S.; Harrer, S.; Rogers, J. Towards Automated and Marker-Less Parkinson Disease Assessment: Predicting UPDRS Scores Using Sit-Stand Videos. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR) Workshops, Virtual, 19–25 June 2021; pp. 3841–3849.
- Ricciardi, C.; Amboni, M.; De Santis, C.; Improta, G.; Volpe, G.; Iuppariello, L.; Ricciardelli, G.; D’Addio, G.; Vitale, C.; Barone, P.; et al. Using gait analysis’ parameters to classify Parkinsonism: A data mining approach. Comput. Methods Programs Biomed. 2019, 180, 105033.
- Williams, S.; Relton, S.D.; Fang, H.; Alty, J.; Qahwaji, R.; Graham, C.D.; Wong, D.C. Supervised classification of bradykinesia in Parkinson’s disease from smartphone videos. Artif. Intell. Med. 2020, 110, 101966.
- Fang, H.S.; Xie, S.; Tai, Y.W.; Lu, C. RMPE: Regional Multi-person Pose Estimation. In Proceedings of the ICCV, Venice, Italy, 22–29 October 2017.
- Cao, Z.; Hidalgo Martinez, G.; Simon, T.; Wei, S.; Sheikh, Y.A. OpenPose: Realtime Multi-Person 2D Pose Estimation using Part Affinity Fields. IEEE Trans. Pattern Anal. Mach. Intell. 2021, 43, 172–186.
- Nakano, N.; Sakura, T.; Ueda, K.; Omura, L.; Kimura, A.; Iino, Y.; Fukashiro, S.; Yoshioka, S. Evaluation of 3D markerless motion capture accuracy using OpenPose with multiple video cameras. Front. Sport. Act. Living 2020, 2, 50.

| Feature | Related PD Symptom | Visual Perception | Computation |
|---|---|---|---|
| posture | hunched posture | nose-to-foot distance | mean * |
| arm steadiness | arm tremors | wrist steadiness | mean * |
| arm swing | reduced arm swing | nose-to-wrist distance | mean * |
| arm swing symmetry | arm swing asymmetry | uneven arm swings | mean |
| step height | reduced step height | ankle-to-ground distance | * |
| step | reduced step length | left-to-right ankle distance | mean |
| cadence | reduced cadence | lower step frequency | (see Figure 4) |
| speed | reduced gait speed | slower movement | |
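
The distance-based rows of the table above can be illustrated with a minimal sketch. It assumes each video has already been reduced to a `(T, 5, 2)` array of 2D keypoints in pixel coordinates. The keypoint index layout, the function names, the use of the ankle midpoint as the "foot", and the averaging of left and right wrists are all assumptions made for illustration and are not taken from the paper. Any normalization implied by the asterisks in the table is not reproduced here.

```python
import numpy as np

# Hypothetical keypoint indices; real pose estimators define their own layout.
NOSE, L_WRIST, R_WRIST, L_ANKLE, R_ANKLE = 0, 1, 2, 3, 4


def mean_distance(a, b):
    """Mean Euclidean distance between two (T, 2) keypoint tracks."""
    return float(np.linalg.norm(a - b, axis=1).mean())


def gait_features(kp):
    """Compute three distance-based features from kp of shape (T, 5, 2)."""
    nose = kp[:, NOSE]
    # Assumption: the "foot" position is the midpoint of the two ankles.
    feet = (kp[:, L_ANKLE] + kp[:, R_ANKLE]) / 2.0
    # Assumption: arm swing averages the left and right nose-to-wrist distances.
    arm_swing = 0.5 * (
        mean_distance(nose, kp[:, L_WRIST]) + mean_distance(nose, kp[:, R_WRIST])
    )
    return {
        "posture": mean_distance(nose, feet),            # nose-to-foot distance
        "arm_swing": arm_swing,                          # nose-to-wrist distance
        "step_length": mean_distance(kp[:, L_ANKLE], kp[:, R_ANKLE]),
    }
```

In practice the distances would likely need scaling by a body-size reference so that subjects filmed at different distances from the camera are comparable; that step is left out because the source does not specify it.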

| | Total | Front View: Healthy | Front View: Parkinsonian | Side View: Healthy | Side View: Parkinsonian |
|---|---|---|---|---|---|
| Number of subjects | 167 | 67 | 48 | 26 | 26 |
| After augmentation | 974 | 314 | 360 | 92 | 208 |

| Feature | Rank: Front | Rank: Side | Rank: All | Rank: Average | Selected: Front | Selected: Side | Selected: All |
|---|---|---|---|---|---|---|---|
| posture | 7 | 6 | 7 | 7 | × | ✓ | × |
| arm steadiness | 4 | 7 | 6 | 6 | ✓ | ✓ | ✓ |
| arm swing | 1 | 3 | 1 | 2 | ✓ | ✓ | ✓ |
| arm swing symmetry | 5 | 8 | 5 | 6 | × | × | × |
| step height | 3 | 4 | 3 | 3 | ✓ | ✓ | ✓ |
| step length | 2 | 2 | 2 | 2 | ✓ | ✓ | ✓ |
| cadence | 8 | 5 | 8 | 7 | × | ✓ | × |
| gait speed | 6 | 1 | 4 | 4 | ✓ | ✓ | ✓ |
| number of selected features | | | | | 5 | 7 | 5 |
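
The average-rank values in the table above are consistent with the mean of the front, side, and all-view ranks rounded to the nearest integer. This is an observation from the tabulated numbers, not a procedure stated in the text, and the function name below is hypothetical.

```python
# Per-feature ranks copied from the table: (front, side, all).
RANKS = {
    "posture": (7, 6, 7),
    "arm steadiness": (4, 7, 6),
    "arm swing": (1, 3, 1),
    "arm swing symmetry": (5, 8, 5),
    "step height": (3, 4, 3),
    "step length": (2, 2, 2),
    "cadence": (8, 5, 8),
    "gait speed": (6, 1, 4),
}


def average_rank(ranks):
    """Mean of the per-view ranks, rounded to the nearest integer (assumed rule)."""
    return round(sum(ranks) / len(ranks))


average_ranks = {name: average_rank(r) for name, r in RANKS.items()}
```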

| View | Train/Test | Features | Smooth | Acc | Healthy Precision | Healthy Recall | Healthy F1-Score | Parkinsonian Precision | Parkinsonian Recall | Parkinsonian F1-Score |
|---|---|---|---|---|---|---|---|---|---|---|
| Front | 505/169 | 8 | × | 95% | 0.95 | 0.95 | 0.95 | 0.95 | 0.95 | 0.95 |
| Front | 505/169 | 8 | ✓ | 95% | 0.95 | 0.95 | 0.95 | 0.95 | 0.95 | 0.95 |
| Front | 505/169 | 5 | × | 95% | 0.95 | 0.95 | 0.95 | 0.95 | 0.95 | 0.95 |
| Side | 225/75 | 8 | × | 97% | 1.00 | 0.92 | 0.96 | 0.96 | 1.00 | 0.98 |
| Side | 225/75 | 8 | ✓ | 97% | 1.00 | 0.92 | 0.96 | 0.96 | 1.00 | 0.98 |
| Side | 225/75 | 7 | × | 97% | 1.00 | 0.92 | 0.96 | 0.96 | 1.00 | 0.98 |
| All | 730/244 | 8 | × | 92% | 0.88 | 0.92 | 0.90 | 0.94 | 0.92 | 0.93 |
| All | 730/244 | 8 | ✓ | 93% | 0.90 | 0.94 | 0.92 | 0.96 | 0.93 | 0.94 |
| All | 730/244 | 5 | × | 93% | 0.88 | 0.94 | 0.91 | 0.96 | 0.92 | 0.94 |
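
As a reading aid for the results table above, the F1-score is the harmonic mean of precision and recall, $F_1 = 2PR / (P + R)$. The short sketch below recomputes it from the tabulated precision and recall values; the function name is chosen for illustration only.

```python
def f1_score(precision, recall):
    """Harmonic mean of precision and recall; 0.0 when both are zero."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# Side view, healthy class: precision 1.00 and recall 0.92 from the table.
side_healthy_f1 = round(f1_score(1.00, 0.92), 2)
# All views, 8 features, no smoothing, Parkinsonian class.
all_pd_f1 = round(f1_score(0.94, 0.92), 2)
```

Because the tabulated precision and recall are themselves rounded to two decimals, a recomputed F1 can occasionally differ from a reported value in the last digit.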

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Connie, T.; Aderinola, T.B.; Ong, T.S.; Goh, M.K.O.; Erfianto, B.; Purnama, B. Pose-Based Gait Analysis for Diagnosis of Parkinson’s Disease. Algorithms 2022, 15, 474. https://doi.org/10.3390/a15120474
