GRNN: Graph-Retraining Neural Network for Semi-Supervised Node Classification

Abstract

1. Introduction

2. Related Work

2.1. Graph-Based Semi-Supervised Classification

2.2. Graph Neural Networks

2.3. Graph Neural Networks with a Smoothing Model

3. Preliminaries

3.1. Notations and Problem Definition

3.2. The Classical Graph Neural Networks

3.3. Residual Learning

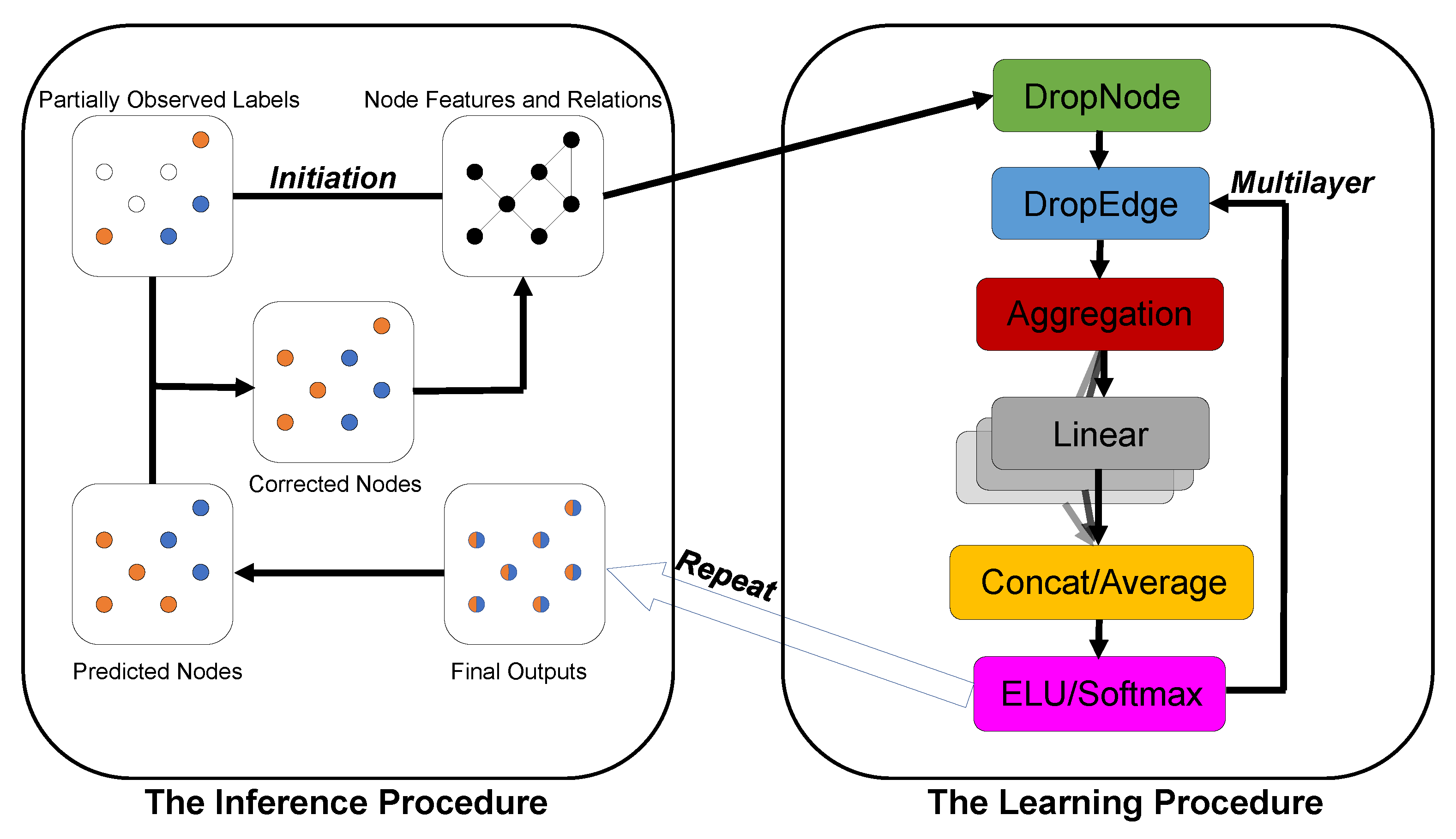

4. GRNN: Graph-Retraining Neural Network

4.1. The Benchmark Neural Network

4.2. The Smoothing Model

4.3. The Loss Function of GRNN

Algorithm 1. GRNN for semi-supervised node classification

- Initialization: ; ;
- repeat
  - Compute using Equation (4) with ;
  - for do
    - Update based on Equation (5) with ;
    - Update based on Equation (6);
  - end for
  - Compute (which is equivalent to ) using Equation (7);
- until the classification loss is minimized according to Equation (8);
- Compute using Equation (11);
- for do
  - repeat
    - Compute using Equation (4) with ;
    - for do
      - Update based on Equation (5) with ;
      - Update based on Equation (6);
    - end for
    - Compute (which is equivalent to ) using Equation (7);
  - until the classification loss is minimized according to Equation (20);
  - Update based on Equation (12);
- end for
- return (which is the subset of )
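The two-stage control flow of Algorithm 1 can be sketched in Python as follows. This is only an outline under stated assumptions: every callable (`train_benchmark`, `init_targets`, `retrain`, `update_targets`) and the parameter `num_rounds` are hypothetical stand-ins for the steps governed by Equations (4)–(8), (11), (20), and (12), and the equations themselves are not reproduced. Whether each retraining round continues from the previous model, and what exactly is returned, are also assumptions.

```python
from typing import Any, Callable, List, Tuple


def grnn_outline(
    train_benchmark: Callable[[], Any],
    init_targets: Callable[[Any], Any],
    retrain: Callable[[Any, Any], Any],
    update_targets: Callable[[Any, Any], Any],
    num_rounds: int,
) -> Tuple[Any, Any]:
    """Control flow only; every callable is a hypothetical stand-in."""
    # Stage 1: first repeat/until loop, stopped by the loss of Equation (8).
    model = train_benchmark()
    # Stand-in for the quantity computed with Equation (11).
    targets = init_targets(model)
    # Stage 2: outer for loop over retraining rounds.
    for _ in range(num_rounds):
        # Inner repeat/until loop, stopped by the loss of Equation (20).
        model = retrain(model, targets)
        # Stand-in for the update based on Equation (12).
        targets = update_targets(targets, model)
    return model, targets


# Toy usage: record the order in which the stages run.
trace: List[str] = []


def _train() -> int:
    trace.append("train")
    return 0


def _init(model: int) -> int:
    trace.append("init")
    return 0


def _retrain(model: int, targets: int) -> int:
    trace.append("retrain")
    return model + 1


def _update(targets: int, model: int) -> int:
    trace.append("update")
    return targets + 1


final_model, final_targets = grnn_outline(
    _train, _init, _retrain, _update, num_rounds=2
)
print(trace)
```

Passing the steps in as callables keeps the outline independent of any particular network or loss, so the same skeleton could wrap a different benchmark model.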

5. Experiments and Discussion

5.1. Datasets

5.2. Baseline Methods

5.2.1. The Citation Network and the Webpage Network

5.2.2. The Protein–Protein Interaction

5.3. Experimental Settings

5.3.1. The Citation Network

5.3.2. The Protein–Protein Interaction

5.3.3. The Citation Network with Fewer Training Samples

5.3.4. The Webpage Network

5.4. Result Analysis

6. Ablation Study

7. Discussion

8. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

1. Huang, G.; Liu, Z.; van der Maaten, L.; Weinberger, K.Q. Densely Connected Convolutional Networks. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition, CVPR 2017, Honolulu, HI, USA, 21–26 July 2017; pp. 2261–2269.

2. Liu, Q.; Zeng, Y.; Mokhosi, R.; Zhang, H. STAMP: Short-Term Attention/Memory Priority Model for Session-based Recommendation. In Proceedings of the 24th ACM SIGKDD International Conference on Knowledge Discovery & Data Mining, KDD 2018, London, UK, 19–23 August 2018; pp. 1831–1839.

3. Liu, Q.; Yu, F.; Wu, S.; Wang, L. A Convolutional Click Prediction Model. In Proceedings of the 24th ACM International Conference on Information and Knowledge Management, CIKM 2015, Melbourne, VIC, Australia, 19–23 October 2015; pp. 1743–1746.

4. Li, C.; Goldwasser, D. Encoding Social Information with Graph Convolutional Networks for Political Perspective Detection in News Media. In Proceedings of the 57th Conference of the Association for Computational Linguistics, ACL 2019, Florence, Italy, 28 July–2 August 2019; pp. 2594–2604.

5. Qiu, J.; Tang, J.; Ma, H.; Dong, Y.; Wang, K.; Tang, J. DeepInf: Social Influence Prediction with Deep Learning. In Proceedings of the 24th ACM SIGKDD International Conference on Knowledge Discovery & Data Mining, KDD 2018, London, UK, 19–23 August 2018; pp. 2110–2119.

6. Li, Q.; Han, Z.; Wu, X. Deeper Insights Into Graph Convolutional Networks for Semi-Supervised Learning. In Proceedings of the Thirty-Second AAAI Conference on Artificial Intelligence (AAAI-18), The 30th Innovative Applications of Artificial Intelligence (IAAI-18), and The 8th AAAI Symposium on Educational Advances in Artificial Intelligence (EAAI-18), New Orleans, LA, USA, 2–7 February 2018; pp. 3538–3545.

7. Hu, F.; Zhu, Y.; Wu, S.; Wang, L.; Tan, T. Hierarchical Graph Convolutional Networks for Semi-supervised Node Classification. In Proceedings of the Twenty-Eighth International Joint Conference on Artificial Intelligence, IJCAI 2019, Macao, China, 10–16 August 2019; pp. 4532–4539.

8. Hui, B.; Zhu, P.; Hu, Q. Collaborative Graph Convolutional Networks: Unsupervised Learning Meets Semi-Supervised Learning. In Proceedings of the Thirty-Fourth AAAI Conference on Artificial Intelligence, AAAI 2020, The Thirty-Second Innovative Applications of Artificial Intelligence Conference, IAAI 2020, The Tenth AAAI Symposium on Educational Advances in Artificial Intelligence, EAAI 2020, New York, NY, USA, 7–12 February 2020; pp. 4215–4222.

9. Diao, Z.; Wang, X.; Zhang, D.; Liu, Y.; Xie, K.; He, S. Dynamic Spatial-Temporal Graph Convolutional Neural Networks for Traffic Forecasting. In Proceedings of the Thirty-Third AAAI Conference on Artificial Intelligence, AAAI 2019, The Thirty-First Innovative Applications of Artificial Intelligence Conference, IAAI 2019, The Ninth AAAI Symposium on Educational Advances in Artificial Intelligence, EAAI 2019, Honolulu, HI, USA, 27 January–1 February 2019; pp. 890–897.

10. Han, Y.; Wang, S.; Ren, Y.; Wang, C.; Gao, P.; Chen, G. Predicting Station-Level Short-Term Passenger Flow in a Citywide Metro Network Using Spatiotemporal Graph Convolutional Neural Networks. ISPRS Int. J. Geo Inf. 2019, 8, 243.

11. Kipf, T.N.; Welling, M. Semi-Supervised Classification with Graph Convolutional Networks. In Proceedings of the 5th International Conference on Learning Representations, ICLR 2017, Toulon, France, 24–26 April 2017.

12. Velickovic, P.; Cucurull, G.; Casanova, A.; Romero, A.; Liò, P.; Bengio, Y. Graph Attention Networks. In Proceedings of the 6th International Conference on Learning Representations, ICLR 2018, Vancouver, BC, Canada, 30 April–3 May 2018.

13. Hamilton, W.L.; Ying, R.; Leskovec, J. Inductive representation learning on large graphs. In Proceedings of the 31st International Conference on Neural Information Processing Systems, Long Beach, CA, USA, 4–9 December 2017; pp. 1025–1035.

14. Gilmer, J.; Schoenholz, S.S.; Riley, P.F.; Vinyals, O.; Dahl, G.E. Neural Message Passing for Quantum Chemistry. In Proceedings of the 34th International Conference on Machine Learning, ICML 2017, Sydney, NSW, Australia, 6–11 August 2017; pp. 1263–1272.

15. Huang, Q.; He, H.; Singh, A.; Lim, S.; Benson, A.R. Combining Label Propagation and Simple Models out-performs Graph Neural Networks. In Proceedings of the 9th International Conference on Learning Representations, ICLR 2021, Virtual Event, 3–7 May 2021.

16. Shi, Y.; Huang, Z.; Feng, S.; Zhong, H.; Wang, W.; Sun, Y. Masked Label Prediction: Unified Message Passing Model for Semi-Supervised Classification. In Proceedings of the Thirtieth International Joint Conference on Artificial Intelligence, IJCAI 2021, Montreal, QC, Canada, 19–27 August 2021; pp. 1548–1554.

17. Dempster, A.P.; Laird, N.M.; Rubin, D.B. Maximum likelihood from incomplete data via the EM algorithm. J. R. Stat. Soc. Ser. B Methodol. 1977, 39, 1–22.

18. Qu, M.; Bengio, Y.; Tang, J. GMNN: Graph Markov Neural Networks. In Proceedings of the 36th International Conference on Machine Learning, ICML 2019, Long Beach, CA, USA, 9–15 June 2019; pp. 5241–5250.

19. Lafferty, J.D.; McCallum, A.; Pereira, F.C.N. Conditional Random Fields: Probabilistic Models for Segmenting and Labeling Sequence Data. In Proceedings of the Eighteenth International Conference on Machine Learning (ICML 2001), Williamstown, MA, USA, 28 June–1 July 2001; pp. 282–289.

20. Zhou, D.; Bousquet, O.; Lal, T.N.; Weston, J.; Schölkopf, B. Learning with Local and Global Consistency. In Proceedings of the Advances in Neural Information Processing Systems 16, NIPS 2003, Vancouver and Whistler, BC, Canada, 8–13 December 2003; pp. 321–328.

21. Zhu, X.; Ghahramani, Z.; Lafferty, J.D. Semi-Supervised Learning Using Gaussian Fields and Harmonic Functions. In Proceedings of the Twentieth International Conference on Machine Learning (ICML 2003), Washington, DC, USA, 21–24 August 2003; pp. 912–919.

22. Joachims, T. Transductive Learning via Spectral Graph Partitioning. In Proceedings of the Twentieth International Conference on Machine Learning (ICML 2003), Washington, DC, USA, 21–24 August 2003; pp. 290–297.

23. Wu, Z.; Pan, S.; Chen, F.; Long, G.; Zhang, C.; Yu, P.S. A Comprehensive Survey on Graph Neural Networks. IEEE Trans. Neural Netw. Learn. Syst. 2021, 32, 4–24.

24. Hammond, D.K.; Vandergheynst, P.; Gribonval, R. Wavelets on graphs via spectral graph theory. Appl. Comput. Harmon. Anal. 2011, 30, 129–150.

25. Bruna, J.; Zaremba, W.; Szlam, A.; LeCun, Y. Spectral Networks and Locally Connected Networks on Graphs. In Proceedings of the 2nd International Conference on Learning Representations, ICLR 2014, Banff, AB, Canada, 14–16 April 2014.

26. Defferrard, M.; Bresson, X.; Vandergheynst, P. Convolutional Neural Networks on Graphs with Fast Localized Spectral Filtering. In Proceedings of the Advances in Neural Information Processing Systems 29: Annual Conference on Neural Information Processing Systems 2016, Barcelona, Spain, 5–10 December 2016; pp. 3837–3845.

27. Monti, F.; Boscaini, D.; Masci, J.; Rodolà, E.; Svoboda, J.; Bronstein, M.M. Geometric Deep Learning on Graphs and Manifolds Using Mixture Model CNNs. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition, CVPR 2017, Honolulu, HI, USA, 21–26 July 2017; pp. 5425–5434.

28. Bai, L.; Jiao, Y.; Cui, L.; Hancock, E.R. Learning aligned-spatial graph convolutional networks for graph classification. In Proceedings of the Joint European Conference on Machine Learning and Knowledge Discovery in Databases, Würzburg, Germany, 16 September 2019; pp. 464–482.

29. Klicpera, J.; Bojchevski, A.; Günnemann, S. Predict then Propagate: Graph Neural Networks meet Personalized PageRank. In Proceedings of the 7th International Conference on Learning Representations, ICLR 2019, New Orleans, LA, USA, 6–9 May 2019.

30. Chiang, W.; Liu, X.; Si, S.; Li, Y.; Bengio, S.; Hsieh, C. Cluster-GCN: An Efficient Algorithm for Training Deep and Large Graph Convolutional Networks. In Proceedings of the 25th ACM SIGKDD International Conference on Knowledge Discovery & Data Mining, KDD 2019, Anchorage, AK, USA, 4–8 August 2019; pp. 257–266.

31. Wang, H.; Leskovec, J. Unifying Graph Convolutional Neural Networks and Label Propagation. arXiv 2020, arXiv:2002.06755.

32. Gong, M.; Zhou, H.; Qin, A.K.; Liu, W.; Zhao, Z. Self-Paced Co-Training of Graph Neural Networks for Semi-Supervised Node Classification. IEEE Trans. Neural Netw. Learn. Syst. 2022; Early Access.

33. He, K.; Zhang, X.; Ren, S.; Sun, J. Deep Residual Learning for Image Recognition. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition, CVPR 2016, Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

34. Rong, Y.; Huang, W.; Xu, T.; Huang, J. DropEdge: Towards Deep Graph Convolutional Networks on Node Classification. In Proceedings of the 8th International Conference on Learning Representations, ICLR 2020, Addis Ababa, Ethiopia, 26–30 April 2020.

35. Yang, C.; Liu, Z.; Zhao, D.; Sun, M.; Chang, E.Y. Network Representation Learning with Rich Text Information. In Proceedings of the Twenty-Fourth International Joint Conference on Artificial Intelligence, IJCAI 2015, Buenos Aires, Argentina, 25–31 July 2015; pp. 2111–2117.

36. Hu, W.; Fey, M.; Zitnik, M.; Dong, Y.; Ren, H.; Liu, B.; Catasta, M.; Leskovec, J. Open Graph Benchmark: Datasets for Machine Learning on Graphs. In Proceedings of the Advances in Neural Information Processing Systems 33: Annual Conference on Neural Information Processing Systems 2020, NeurIPS 2020, Virtual, 6–12 December 2020.

37. Sen, P.; Namata, G.; Bilgic, M.; Getoor, L.; Gallagher, B.; Eliassi-Rad, T. Collective Classification in Network Data. AI Mag. 2008, 29, 93–106.

38. Zitnik, M.; Leskovec, J. Predicting multicellular function through multi-layer tissue networks. Bioinformatics 2017, 33, i190–i198.

39. Perozzi, B.; Al-Rfou, R.; Skiena, S. DeepWalk: Online learning of social representations. In Proceedings of the 20th ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, KDD ’14, New York, NY, USA, 24–27 August 2014; pp. 701–710.

40. Zheng, R.; Chen, W.; Feng, G. Semi-supervised node classification via adaptive graph smoothing networks. Pattern Recognit. 2022, 124, 108492.

41. Yang, F.; Zhang, H.; Tao, S. Semi-supervised classification via full-graph attention neural networks. Neurocomputing 2022, 476, 63–74.

42. Wu, W.; Hu, G.; Yu, F. Ricci Curvature-Based Semi-Supervised Learning on an Attributed Network. Entropy 2021, 23, 292.

43. Xu, B.; Huang, J.; Hou, L.; Shen, H.; Gao, J.; Cheng, X. Label-Consistency based Graph Neural Networks for Semi-supervised Node Classification. In Proceedings of the 43rd International ACM SIGIR Conference on Research and Development in Information Retrieval, SIGIR 2020, Virtual Event, 25–30 July 2020; pp. 1897–1900.

44. Wu, F.; Souza, A.H., Jr.; Zhang, T.; Fifty, C.; Yu, T.; Weinberger, K.Q. Simplifying Graph Convolutional Networks. In Proceedings of the 36th International Conference on Machine Learning, ICML 2019, Long Beach, CA, USA, 9–15 June 2019; pp. 6861–6871.

45. Chen, M.; Wei, Z.; Huang, Z.; Ding, B.; Li, Y. Simple and Deep Graph Convolutional Networks. In Proceedings of the 37th International Conference on Machine Learning, ICML 2020, Virtual Event, 13–18 July 2020; pp. 1725–1735.

46. Liu, M.; Gao, H.; Ji, S. Towards Deeper Graph Neural Networks. In Proceedings of the KDD ’20: The 26th ACM SIGKDD Conference on Knowledge Discovery and Data Mining, Virtual Event, 23–27 August 2020; pp. 338–348.

47. Zheng, W.; Qian, F.; Zhao, S.; Zhang, Y. M-GWNN: Multi-granularity graph wavelet neural networks for semi-supervised node classification. Neurocomputing 2021, 453, 524–537.

48. Peng, L.; Hu, R.; Kong, F.; Gan, J.; Mo, Y.; Shi, X.; Zhu, X. Reverse Graph Learning for Graph Neural Network. IEEE Trans. Neural Netw. Learn. Syst. 2022; Early Access.

49. Grover, A.; Leskovec, J. node2vec: Scalable Feature Learning for Networks. In Proceedings of the 22nd ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, San Francisco, CA, USA, 13–17 August 2016; pp. 855–864.

50. Sun, K.; Lin, Z.; Zhu, Z. Multi-Stage Self-Supervised Learning for Graph Convolutional Networks on Graphs with Few Labeled Nodes. In Proceedings of the Thirty-Fourth AAAI Conference on Artificial Intelligence, AAAI 2020, The Thirty-Second Innovative Applications of Artificial Intelligence Conference, IAAI 2020, The Tenth AAAI Symposium on Educational Advances in Artificial Intelligence, EAAI 2020, New York, NY, USA, 7–12 February 2020; pp. 5892–5899.

51. Feng, Y.; You, H.; Zhang, Z.; Ji, R.; Gao, Y. Hypergraph Neural Networks. In Proceedings of the Thirty-Third AAAI Conference on Artificial Intelligence, AAAI 2019, The Thirty-First Innovative Applications of Artificial Intelligence Conference, IAAI 2019, The Ninth AAAI Symposium on Educational Advances in Artificial Intelligence, EAAI 2019, Honolulu, HI, USA, 27 January–1 February 2019; pp. 3558–3565.

52. Gao, Y.; Feng, Y.; Ji, S.; Ji, R. HGNN+: General Hypergraph Neural Networks. IEEE Trans. Pattern Anal. Mach. Intell. 2023, 45, 3181–3199.

53. Chen, C.; Liu, Y. A Survey on Hyperlink Prediction. arXiv 2022, arXiv:2207.02911.

54. Huang, J.; Yang, J. UniGNN: A Unified Framework for Graph and Hypergraph Neural Networks. In Proceedings of the Thirtieth International Joint Conference on Artificial Intelligence, IJCAI 2021, Virtual Event, 19–27 August 2021; pp. 2563–2569.

| Attributes | Cora | Citeseer | PubMed | Wiki | Arxiv | PPI |
|---|---|---|---|---|---|---|
| Nodes | 2708 | 3327 | 19,717 | 2405 | 169,343 | 56,944 (24 graphs) |
| Edges | 5429 | 4732 | 44,338 | 11,596 | 1,166,243 | 818,716 |
| Features | 1433 | 3703 | 500 | 4973 | 128 | 50 |
| Classes | 7 | 6 | 3 | 17 | 40 | 121 (multilabel) |
| Train Nodes | 140 | 120 | 60 | - | 90,941 | 44,906 (20 graphs) |
| Validation Nodes | 500 | 500 | 500 | - | 29,799 | 6514 (2 graphs) |
| Test Nodes | 1000 | 1000 | 1000 | - | 48,603 | 5514 (2 graphs) |
| Label Rate | 0.052 | 0.036 | 0.003 | - | 0.537 | 0.789 |
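The Label Rate row is consistent with dividing the number of training nodes by the total number of nodes. The short check below illustrates this reading; the formula is an assumption inferred from the table values, and Wiki is omitted because the table gives no fixed split for it.

```python
# (total nodes, training nodes) copied from the dataset table above.
datasets = {
    "Cora": (2708, 140),
    "Citeseer": (3327, 120),
    "PubMed": (19717, 60),
    "Arxiv": (169343, 90941),
    "PPI": (56944, 44906),
}

# Label rates as printed in the table.
reported = {
    "Cora": 0.052,
    "Citeseer": 0.036,
    "PubMed": 0.003,
    "Arxiv": 0.537,
    "PPI": 0.789,
}

# Assumed definition: label rate = training nodes / total nodes.
label_rates = {
    name: round(train / nodes, 3) for name, (nodes, train) in datasets.items()
}

# Compare each recomputed rate with the reported one.
matches = {
    name: abs(label_rates[name] - reported[name]) < 1e-9 for name in datasets
}

for name in datasets:
    print(f"{name}: computed {label_rates[name]:.3f}, reported {reported[name]:.3f}")
```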

| Model | Cora | Citeseer | PubMed |
|---|---|---|---|
| MLP | 55.1 | 46.5 | 71.4 |
| DeepWalk [39] | 67.2 | 43.2 | 65.3 |
| Chebyshev [26] | 81.2 | 69.8 | 74.4 |
| GCN [11] | 81.5 | 70.3 | 79.0 |
| GAT [12] | 83.0 | 72.5 | 79.0 |
| SGC [44] | 81.0 | 71.9 | 78.9 |
| GMNN [18] | 83.7 | 72.9 | 81.8 |
| DAGNN [46] | 84.4 | 73.3 | 80.5 |
| GCNII [45] | 85.5 | 73.4 | 80.2 |
| AGSN [40] | 83.8 | 74.5 | 79.9 |
| FGANN [41] | 83.2 | 72.4 | 79.2 |
| RCGCN [42] | 82.0 | 73.2 | - |
| M-GWNN [47] | 84.6 | 72.6 | 80.4 |
| LC-GAT [43] | 83.5 | 73.8 | 79.1 |
| H-GCN [7] | 84.5 | 72.8 | 79.8 |
| rGNN [48] | 83.89 | 73.35 | - |
| SPC-CNN [32] | 84.8 | 74.0 | 81.0 |
| GRNN-0 | 84.0 ± 0.7 | 71.0 ± 0.8 | 79.2 ± 0.5 |
| GRNN | 86.0 ± 0.4 | 74.5 ± 0.6 | 81.5 ± 0.5 |
| GCN+GRNN | 81.5 ± 0.6 | 75.2 ± 0.2 | 79.3 ± 0.1 |
| GCNII+GRNN | 85.8 ± 0.6 | 73.9 ± 0.5 | 81.4 ± 0.6 |
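The difference between the GRNN and GRNN-0 rows can be read directly off the table above. A small sketch of that arithmetic follows, using only the reported means and ignoring the ± spread; the variable names are illustrative.

```python
# Reported mean scores copied from the GRNN-0 and GRNN rows of the table above.
grnn_0 = {"Cora": 84.0, "Citeseer": 71.0, "PubMed": 79.2}
grnn = {"Cora": 86.0, "Citeseer": 74.5, "PubMed": 81.5}

# Per-dataset difference in points, rounded to the table's precision.
gains = {name: round(grnn[name] - grnn_0[name], 1) for name in grnn}

for name, gain in gains.items():
    print(f"{name}: +{gain:.1f} points")
```

Because the ± spreads are ignored, these differences say nothing about statistical significance; they only summarize the gap in the reported means.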

| Model | PPI |
|---|---|
| Random | 39.6 |
| MLP | 42.2 |
| GraphSAGE-GCN [13] | 50.0 |
| GraphSAGE-mean [13] | 59.8 |
| GraphSAGE-LSTM [13] | 61.2 |
| GraphSAGE-pool [13] | 60.0 |
| GAT [12] | 97.3 |
| GRNN-0 | 98.34 ± 0.05 |

| Methods / Label Rate | 0.5% | 1% | 2% | 3% | 4% | 5% |
|---|---|---|---|---|---|---|
| LP | 56.4 | 62.3 | 65.4 | 67.5 | 69.0 | 70.2 |
| GCN | 50.9 | 62.3 | 72.2 | 76.5 | 78.4 | 79.7 |
| Co-training [6] | 56.6 | 66.4 | 73.5 | 75.9 | 78.9 | 80.8 |
| Self-training [6] | 53.7 | 66.1 | 73.8 | 77.2 | 79.4 | 80.0 |
| Union [6] | 58.5 | 69.9 | 75.9 | 78.5 | 80.4 | 81.7 |
| InterSection [6] | 49.7 | 65.0 | 72.9 | 77.1 | 79.4 | 80.2 |
| MultiStage [50] | 61.1 | 63.7 | 74.4 | 76.1 | 77.2 | - |
| M3S [50] | 61.5 | 67.2 | 75.6 | 77.8 | 78.0 | - |
| GRNN-0 | 55.4 | 65.0 | 75.3 | 75.7 | 79.9 | 81.0 |
| GRNN | 63.0 | 69.2 | 79.2 | 80.2 | 82.4 | 82.8 |

| Methods / Label Rate | 0.5% | 1% | 2% | 3% | 4% | 5% |
|---|---|---|---|---|---|---|
| LP | 34.8 | 40.2 | 43.6 | 45.3 | 46.4 | 47.3 |
| GCN | 43.6 | 55.3 | 64.9 | 67.5 | 68.7 | 69.6 |
| Co-training [6] | 47.3 | 55.7 | 62.1 | 62.5 | 64.5 | 65.5 |
| Self-training [6] | 43.3 | 58.1 | 68.2 | 69.8 | 70.4 | 71.0 |
| Union [6] | 46.3 | 59.1 | 66.7 | 66.7 | 67.6 | 68.2 |
| InterSection [6] | 42.9 | 59.1 | 68.6 | 70.1 | 70.8 | 71.2 |
| MultiStage [50] | 53.0 | 57.8 | 63.8 | 68.0 | 69.0 | - |
| M3S [50] | 56.1 | 62.1 | 66.4 | 70.3 | 70.5 | - |
| GRNN-0 | 53.1 | 60.9 | 66.2 | 68.9 | 70.2 | 70.7 |
| GRNN | 60.0 | 66.0 | 69.4 | 70.8 | 71.5 | 71.7 |

| Methods / Label Rate | 0.03% | 0.05% | 0.1% | 0.3% |
|---|---|---|---|---|
| LP | 61.4 | 66.4 | 65.4 | 66.8 |
| GCN | 60.5 | 57.5 | 65.9 | 77.8 |
| Co-training [6] | 62.2 | 68.3 | 72.7 | 78.2 |
| Self-training [6] | 51.9 | 58.7 | 66.8 | 77.0 |
| Union [6] | 58.4 | 64.0 | 70.7 | 79.2 |
| InterSection [6] | 52.0 | 59.3 | 69.4 | 77.6 |
| MultiStage [50] | 57.4 | 64.3 | 70.2 | - |
| M3S [50] | 59.2 | 64.4 | 70.6 | - |
| GRNN-0 | 60.7 | 61.9 | 73.4 | 78.9 |
| GRNN | 65.3 | 68.8 | 77.3 | 81.6 |

| Methods / Train Nodes per Class (Label Rate) | 5 (3.5%) | 10 (7.0%) | 15 (10.0%) |
|---|---|---|---|
| GCN | 57.9 | 63.1 | 66.4 |
| GCNII | 60.1 | 66.2 | 70.5 |
| AGSN() [40] | 58.6 | 67.5 | 72.4 |
| AGSN() [40] | 59.5 | 70.1 | 74.4 |
| GRNN-0 | 60.1 | 65.0 | 67.5 |
| GRNN | 63.0 | 67.5 | 69.1 |
| GCN+GRNN | 61.5 | 65.9 | 68.2 |
| GCNII+GRNN | 62.4 | 69.7 | 72.2 |

| Model | Arxiv |
|---|---|
| MLP | 55.50 ± 0.23 |
| Node2vec | 70.07 ± 0.13 |
| GCN | 70.39 ± 0.26 |
| GCN+GRNN | 70.42 ± 0.42 |


© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Li, J.; Fan, S. GRNN: Graph-Retraining Neural Network for Semi-Supervised Node Classification. Algorithms 2023, 16, 126. https://doi.org/10.3390/a16030126
