Elevating Univariate Time Series Forecasting: Innovative SVR-Empowered Nonlinear Autoregressive Neural Networks

Abstract

1. Introduction

2. Methodology and Data

2.1. Proposed Forecasting Framework

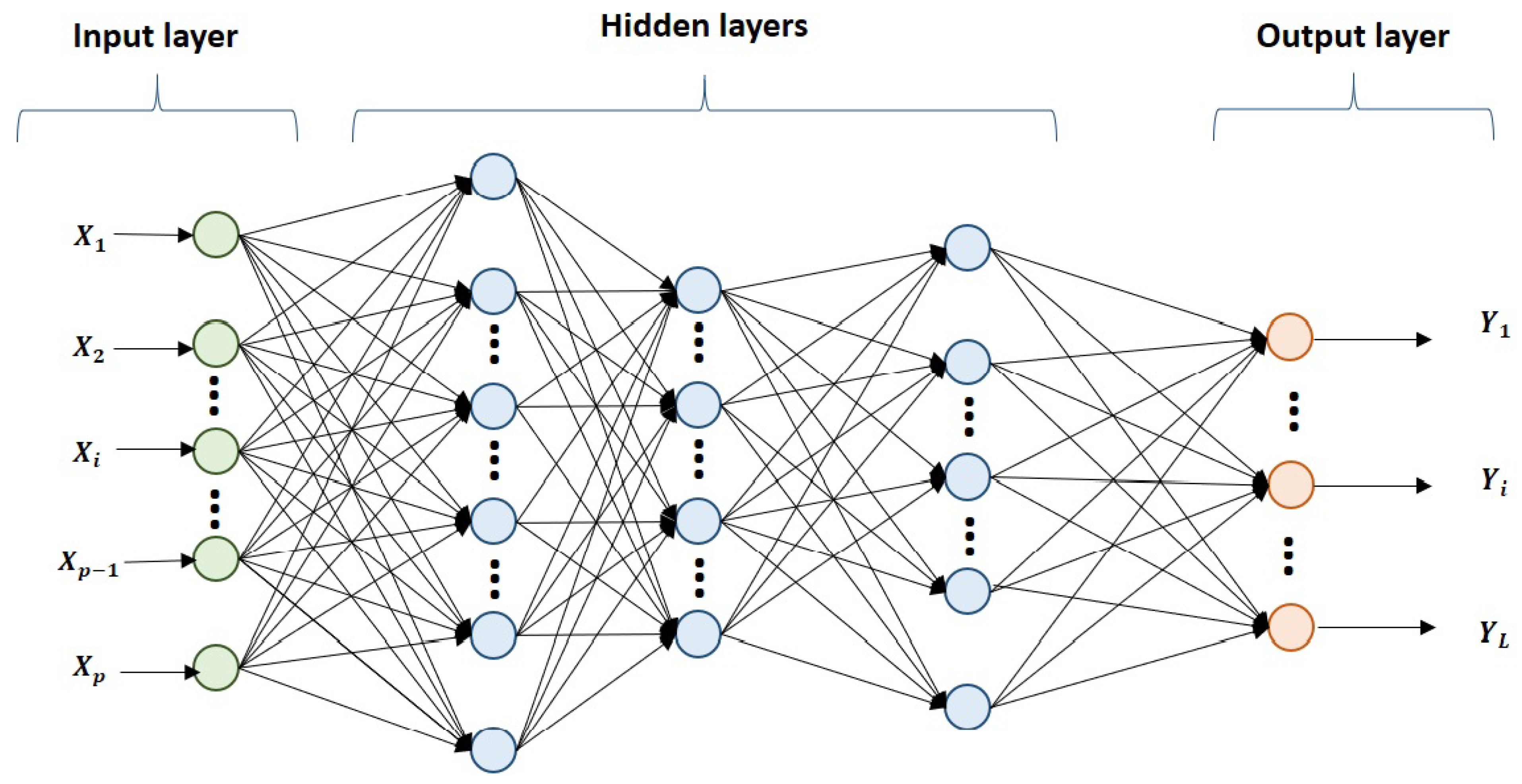

2.1.1. Multilayer Perceptron (MLP)

2.1.2. Determining the Nonlinear Autoregressive (NAR) Neural Networks

2.1.3. Determining the Support Vector Regression (SVR)

2.1.4. The Novel Hybrid NAR–SVR Model

2.2. Dataset Description

2.2.1. Agricultural Yield Datasets

2.2.2. COVID-19 Cases Datasets

2.2.3. Bitcoin Prices Dataset

3. Results

3.1. Berry Time Series Results

3.2. SARS-CoV-2 Time Series Results

3.3. Bitcoin Time Series Results

4. Discussion

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. Mehmood, Q.; Sial, M.; Riaz, M.; Shaheen, N. Forecasting the Production of Sugarcane Crop of Pakistan for the Year 2018–2030, Using Box-Jenkin’s Methodology. J. Anim. Plant Sci. 2019, 29, 1396–1401.

2. Jamil, R. Hydroelectricity consumption forecast for Pakistan using ARIMA modeling and supply-demand analysis for the year 2030. Renew. Energy 2020, 154, 1–10.

3. Selvaraj, J.J.; Arunachalam, V.; Coronado-Franco, K.V.; Romero-Orjuela, L.V.; Ramírez-Yara, Y.N. Time-series modeling of fishery landings in the Colombian Pacific Ocean using an ARIMA model. Reg. Stud. Mar. Sci. 2020, 39, 101477.

4. Wang, M. Short-term forecast of pig price index on an agricultural internet platform. Agribusiness 2019, 35, 492–497.

5. Petropoulos, F.; Spiliotis, E.; Panagiotelis, A. Model combinations through revised base rates. Int. J. Forecast. 2022, 39, 1477–1492.

6. Wegmüller, P.; Glocker, C. US weekly economic index: Replication and extension. J. Appl. Econom. 2023, 1–9.

7. Guizzardi, A.; Pons, F.M.E.; Angelini, G.; Ranieri, E. Big data from dynamic pricing: A smart approach to tourism demand forecasting. Int. J. Forecast. 2021, 37, 1049–1060.

8. García, J.R.; Pacce, M.; Rodrígo, T.; de Aguirre, P.R.; Ulloa, C.A. Measuring and forecasting retail trade in real time using card transactional data. Int. J. Forecast. 2021, 37, 1235–1246.

9. Sekadakis, M.; Katrakazas, C.; Michelaraki, E.; Kehagia, F.; Yannis, G. Analysis of the impact of COVID-19 on collisions, fatalities and injuries using time series forecasting: The case of Greece. Accid. Anal. Prev. 2021, 162, 106391.

10. Rostami-Tabar, B.; Ziel, F. Anticipating special events in Emergency Department forecasting. Int. J. Forecast. 2022, 38, 1197–1213.

11. Marvin, D.; Nespoli, L.; Strepparava, D.; Medici, V. A data-driven approach to forecasting ground-level ozone concentration. Int. J. Forecast. 2021, 38, 970–987.

12. Guo, H.; Zhang, D.; Liu, S.; Wang, L.; Ding, Y. Bitcoin price forecasting: A perspective of underlying blockchain transactions. Decis. Support Syst. 2021, 151, 113650.

13. Elalem, Y.K.; Maier, S.; Seifert, R.W. A machine learning-based framework for forecasting sales of new products with short life cycles using deep neural networks. Int. J. Forecast. 2022, in press.

14. Katris, C.; Kavussanos, M.G. Time series forecasting methods for the Baltic dry index. J. Forecast. 2021, 40, 1540–1565.

15. Salinas, D.; Flunkert, V.; Gasthaus, J.; Januschowski, T. DeepAR: Probabilistic forecasting with autoregressive recurrent networks. Int. J. Forecast. 2020, 36, 1181–1191.

16. Huber, J.; Stuckenschmidt, H. Daily retail demand forecasting using machine learning with emphasis on calendric special days. Int. J. Forecast. 2020, 36, 1420–1438.

17. Li, Y.; Bu, H.; Li, J.; Wu, J. The role of text-extracted investor sentiment in Chinese stock price prediction with the enhancement of deep learning. Int. J. Forecast. 2020, 36, 1541–1562.

18. Smyl, S.; Grace Hua, N. Machine learning methods for GEFCom2017 probabilistic load forecasting. Int. J. Forecast. 2019, 35, 1424–1431.

19. Chen, W.; Xu, H.; Jia, L.; Gao, Y. Machine learning model for Bitcoin exchange rate prediction using economic and technology determinants. Int. J. Forecast. 2021, 37, 28–43.

20. Calvo-Pardo, H.; Mancini, T.; Olmo, J. Granger causality detection in high-dimensional systems using feedforward neural networks. Int. J. Forecast. 2021, 37, 920–940.

21. Zhang, W.; Gong, X.; Wang, C.; Ye, X. Predicting stock market volatility based on textual sentiment: A nonlinear analysis. J. Forecast. 2021, 40, 1479–1500.

22. Saxena, H.; Aponte, O.; McConky, K.T. A hybrid machine learning model for forecasting a billing period’s peak electric load days. Int. J. Forecast. 2019, 35, 1288–1303.

23. Anggraeni, W.; Mahananto, F.; Sari, A.Q.; Zaini, Z.; Andri, K.B. Forecasting the Price of Indonesia’s Rice Using Hybrid Artificial Neural Network and Autoregressive Integrated Moving Average (Hybrid NNs-ARIMAX) with Exogenous Variables. Procedia Comput. Sci. 2019, 161, 677–686.

24. Wang, B.; Liu, P.; Chao, Z.; Junmei, W.; Chen, W.; Cao, N.; O’Hare, G.; Wen, F. Research on Hybrid Model of Garlic Short-term Price Forecasting based on Big Data. Comput. Mater. Contin. 2018, 57, 283–296.

25. Xu, S.; Chan, H.K.; Zhang, T. Forecasting the demand of the aviation industry using hybrid time series SARIMA-SVR approach. Transp. Res. Part E Logist. Transp. Rev. 2019, 122, 169–180.

26. Sujjaviriyasup, T.; Pitiruek, K. Hybrid ARIMA-support vector machine model for agricultural production planning. Appl. Math. Sci. 2013, 7, 2833–2840.

27. Ju, X.; Cheng, M.; Xia, Y.; Quo, F.; Tian, Y. Support Vector Regression and Time Series Analysis for the Forecasting of Bayannur’s Total Water Requirement. Procedia Comput. Sci. 2014, 31, 523–531.

28. Borrero, J.D.; Mariscal, J. Predicting Time Series Using an Automatic New Algorithm of the Kalman Filter. Mathematics 2022, 10, 2915.

29. Borrero, J.D.; Mariscal, J.; Vargas-Sánchez, A. A New Predictive Algorithm for Time Series Forecasting Based on Machine Learning Techniques: Evidence for Decision Making in Agriculture and Tourism Sectors. Stats 2022, 5, 1145–1158.

30. Khedmati, M.; Seifi, F.; Azizi, M. Time series forecasting of bitcoin price based on autoregressive integrated moving average and machine learning approaches. Int. J. Eng. 2020, 33, 1293–1303.

31. Chatterjee, A.; Gerdes, M.W.; Martinez, S.G. Statistical explorations and univariate timeseries analysis on COVID-19 datasets to understand the trend of disease spreading and death. Sensors 2020, 20, 3089.

32. Katris, C. A time series-based statistical approach for outbreak spread forecasting: Application of COVID-19 in Greece. Expert Syst. Appl. 2021, 166, 114077.

33. Tripathi, B.; Sharma, R.K. Modeling bitcoin prices using signal processing methods, bayesian optimization, and deep neural networks. Comput. Econ. 2022, 1–27.

34. Xu, X.; Zhang, Y. Corn cash price forecasting with neural networks. Comput. Electron. Agric. 2021, 184, 106120.

35. Fang, Y.; Guan, B.; Wu, S.; Heravi, S. Optimal forecast combination based on ensemble empirical mode decomposition for agricultural commodity futures prices. J. Forecast. 2020, 39, 877–886.

36. Šestanović, T.; Arnerić, J. Neural Network Structure Identification in Inflation Forecasting. J. Forecast. 2020, 40, 62–79.

37. Arrieta-Prieto, M.; Schell, K.R. Spatio-temporal probabilistic forecasting of wind power for multiple farms: A copula-based hybrid model. Int. J. Forecast. 2021, 38, 300–320.

38. Elshafei, B.; Pena, A.; Xu, D.; Ren, J.; Badger, J.; Pimenta, F.M.; Giddings, D.; Mao, X. A hybrid solution for offshore wind resource assessment from limited onshore measurements. Appl. Energy 2021, 298, 117245.

39. Alsumaiei, A.A.; Alrashidi, M.S. Hydrometeorological Drought Forecasting in Hyper-Arid Climates Using Nonlinear Autoregressive Neural Networks. Water 2020, 12, 2611.

40. Sarkar, M.R.; Julai, S.; Hossain, M.S.; Chong, W.T.; Rahman, M. A Comparative Study of Activation Functions of NAR and NARX Neural Network for Long-Term Wind Speed Forecasting in Malaysia. Math. Probl. Eng. 2019, 2019, 6403081.

41. Salam, M.A.; Yazdani, M.G.; Wen, F.; Rahman, Q.M.; Malik, O.A.; Hasan, S. Modeling and Forecasting of Energy Demands for Household Applications. Glob. Chall. 2020, 4, 1900065.

42. Doğan, E. Analysis of the relationship between LSTM network traffic flow prediction performance and statistical characteristics of standard and nonstandard data. J. Forecast. 2020, 39, 1213–1228.

43. Chandra, S.; Kumar, S.; Kumar, R. Forecasting of municipal solid waste generation using non-linear autoregressive (NAR) neural models. Waste Manag. 2021, 121, 206–214.

44. Saba, A.I.; Elsheikh, A.H. Forecasting the prevalence of COVID-19 outbreak in Egypt using nonlinear autoregressive artificial neural networks. Process Saf. Environ. Prot. 2020, 141, 1–8.

45. Khan, F.M.; Gupta, R. ARIMA and NAR based prediction model for time series analysis of COVID-19 cases in India. J. Saf. Sci. Resil. 2020, 1, 12–18.

46. Djedidi, O.; Djeziri, M.A.; Benmoussa, S. Remaining useful life prediction in embedded systems using an online auto-updated machine learning based modeling. Microelectron. Reliab. 2021, 119, 114071.

47. Sun, Z.; Li, K.; Li, Z. Prediction of Horizontal Displacement of Foundation Pit Based on NAR Dynamic Neural Network. IOP Conf. Ser. Mater. Sci. Eng. 2020, 782, 042032.

48. Chen, W.; Xu, H.; Chen, Z.; Jiang, M. A novel method for time series prediction based on error decomposition and nonlinear combination of forecasters. Neurocomputing 2021, 426, 85–103.

49. Habib, S.; Khan, M.M.; Abbas, F.; Ali, A.; Hashmi, K.; Shahid, M.U.; Bo, Q.; Tang, H. Risk Evaluation of Distribution Networks Considering Residential Load Forecasting with Stochastic Modeling of Electric Vehicles. Energy Technol. 2019, 7, 1900191.

50. Chen, E.Y.; Fan, J.; Zhu, X. Community network auto-regression for high-dimensional time series. J. Econom. 2022, 235, 1239–1256.

51. Shoaib, M.; Raja, M.A.Z.; Sabir, M.; Bukhari, A.; Alrabaiah, H.; Shah, Z.; Kumam, P.; Islam, S. A stochastic numerical analysis based on hybrid NAR-RBFs networks nonlinear SITR model for novel COVID-19 dynamics. Comput. Methods Programs Biomed. 2021, 202, 105973.

52. Zhu, B.; Zhang, J.; Wan, C.; Chevallier, J.; Wang, P. An evolutionary cost-sensitive support vector machine for carbon price trend forecasting. J. Forecast. 2023, 42, 741–755.

53. Wei, M.; Sermpinis, G.; Stasinakis, C. Forecasting and trading Bitcoin with machine learning techniques and a hybrid volatility/sentiment leverage. J. Forecast. 2023, 42, 852–871.

54. Du, K.L.; Swamy, M. Neural Networks and Statistical Learning; Oxford University Press: Oxford, UK, 2014; Chapter 04; pp. 86–132.

55. Chauvin, Y.; Rumelhart, D. Backpropagation: Theory, Architectures, and Applications; Psychology Press: London, UK, 1995.

56. Hornik, K. Approximation capabilities of multilayer feedforward networks. Neural Netw. 1991, 4, 251–257.

57. Leshno, M.; Lin, V.Y.; Pinkus, A.; Schocken, S. Multilayer feedforward networks with a nonpolynomial activation function can approximate any function. Neural Netw. 1993, 6, 861–867.

58. Huang, G.B.; Chen, L.; Siew, C. Universal Approximation Using Incremental Constructive Feedforward Networks With Random Hidden Nodes. IEEE Trans. Neural Netw. 2006, 17, 879–892.

59. Sharma, S.; Sharma, S.; Athaiya, A. Activation Functions in Neural Networks. Int. J. Eng. Appl. Sci. Technol. 2020, 4, 310–316.

60. Garces-Jimenez, A.; Castillo-Sequera, J.; del Corte Valiente, A.; Gómez, J.; González-Seco, E. Analysis of Artificial Neural Network Architectures for Modeling Smart Lighting Systems for Energy Savings. IEEE Access 2019, 7, 119881–119891.

61. De Cicco, A. The Fruit and Vegetable Sector in the EU—A Statistical Overview; EU: Brussels, Belgium, 2020.

62. Macori, G.; Gilardi, G.; Bellio, A.; Bianchi, D.; Gallina, S.; Vitale, N.; Gullino, M.; Decastelli, L. Microbiological Parameters in the Primary Production of Berries: A Pilot Study. Foods 2018, 7, 105.

63. Skrovankova, S.; Sumczynski, D.; Mlcek, J.E.A. Bioactive Compounds and Antioxidant Activity in Different Types of Berries. Int. J. Mol. Sci. 2015, 16, 24673–24706.

64. Petropoulos, F.; Makridakis, S.; Stylianou, N. COVID-19: Forecasting confirmed cases and deaths with a simple time-series model. Int. J. Forecast. 2020, 61, 439–452.

65. Aslam, M. Using the kalman filter with Arima for the COVID-19 pandemic dataset of Pakistan. Data Brief 2020, 31, 105854.

66. Benvenuto, D.; Giovanetti, M.; Vassallo, L.; Angeletti, S.; Ciccozzi, M. Application of the ARIMA model on the COVID-2019 epidemic dataset. Data Brief 2020, 29, 105340.

67. Bayyurt, L.; Bayyurt, B. Forecasting of COVID-19 Cases and Deaths Using ARIMA Models. medRxiv 2020.

68. Hernandez-Matamoros, A.; Fujita, H.; Hayashi, T.; Perez-Meana, H. Forecasting of COVID-19 per regions using ARIMA models and polynomial functions. Appl. Soft Comput. 2020, 96, 106610.

69. Koo, E.; Kim, G. Prediction of Bitcoin price based on manipulating distribution strategy. Appl. Soft Comput. 2021, 110, 107738.

70. Chen, Z.; Li, C.; Sun, W. Bitcoin price prediction using machine learning: An approach to sample dimension engineering. J. Comput. Appl. Math. 2020, 365, 112395.

71. Shu, M.; Zhu, W. Real-time prediction of Bitcoin bubble crashes. Phys. A Stat. Mech. Its Appl. 2020, 548, 124477.

72. Raju, S.M.; Tarif, A.M. Real-Time Prediction of BITCOIN Price using Machine Learning Techniques and Public Sentiment Analysis. arXiv 2020, arXiv:2006.14473.

| Strawberry Model | | MAE | RMSE |
|---|---|---|---|
| NAR | 0.875 | 108,818.00 | 218,035.20 |
| NAR–SVR | 0.897 | 96,192.39 | 198,001.30 |
| AR(1) | 0.805 | 112,350.30 | 272,086.80 |
| ARIMA(1,1,1) | 0.886 | 101,767.80 | 208,013.20 |
| SVR | 0.795 | 149,745.00 | 279,310.80 |
| Raspberry Model | | MAE | RMSE |
| NAR | 0.898 | 3963.26 | 6820.32 |
| NAR–SVR | 0.933 | 3094.87 | 5521.29 |
| AR(1) | 0.776 | 5667.00 | 10,132.67 |
| ARIMA(1,1,1) | 0.796 | 4204.36 | 9656.68 |
| SVR | 0.835 | 4663.82 | 8706.49 |
| Blueberry Model | | MAE | RMSE |
| NAR | 0.906 | 18,736.12 | 28,500.04 |
| NAR–SVR | 0.916 | 17,985.56 | 26,919.89 |
| AR(1) | 0.609 | 30,773.63 | 58,217.35 |
| ARIMA(1,1,1) | 0.914 | 18,207.08 | 27,282.07 |
| SVR | 0.572 | 34,628.62 | 60,854.12 |

| COVID-19 Cases in Spain | | MAE | RMSE |
|---|---|---|---|
| NAR | 0.297 | 11,039.31 | 16,740.69 |
| NAR–SVR | 0.648 | 7852.59 | 11,840.78 |
| AR(1) | 0.000 | 12,444.86 | 20,378.32 |
| ARIMA(1,1,1) | 0.155 | 12,752.84 | 18,358.76 |
| SVR | 0.242 | 10,911.04 | 17,388.00 |
| COVID-19 Cases in Italy | | MAE | RMSE |
| NAR | 0.583 | 2614.85 | 3235.67 |
| NAR–SVR | 0.727 | 1787.28 | 2619.95 |
| AR(1) | 0.585 | 2618.05 | 3229.73 |
| ARIMA(1,1,1) | 0.583 | 2608.97 | 3235.73 |
| SVR | 0.688 | 2223.86 | 2800.11 |
| COVID-19 Cases in Turkey | | MAE | RMSE |
| NAR | 0.966 | 971.63 | 1380.42 |
| NAR–SVR | 0.970 | 939.97 | 1332.71 |
| AR(1) | 0.953 | 1079.75 | 1637.19 |
| ARIMA(1,1,1) | 0.953 | 1146.95 | 1631.25 |
| SVR | 0.944 | 1382.90 | 1775.86 |

| Model | | MAE | RMSE |
|---|---|---|---|
| NAR | 0.952 | 1599.15 | 2093.40 |
| NAR–SVR | 0.953 | 1576.55 | 2082.80 |
| ARIMA(1,2,1) | 0.952 | 1590.71 | 2094.93 |
| SVR | 0.000 | 30,533.27 | 32,457.01 |
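The MAE and RMSE columns in the tables above are standard error metrics. As a minimal sketch of how such values are typically computed (the function names and the toy numbers below are illustrative assumptions, not the authors' code or data):

```python
import math

def mae(actual, predicted):
    """Mean absolute error: the average of |actual - predicted|."""
    if len(actual) != len(predicted) or not actual:
        raise ValueError("inputs must be non-empty and of equal length")
    return sum(abs(a - p) for a, p in zip(actual, predicted)) / len(actual)

def rmse(actual, predicted):
    """Root mean squared error: the square root of the mean squared difference."""
    if len(actual) != len(predicted) or not actual:
        raise ValueError("inputs must be non-empty and of equal length")
    mse = sum((a - p) ** 2 for a, p in zip(actual, predicted)) / len(actual)
    return math.sqrt(mse)

# Hypothetical toy series, NOT taken from the article's datasets.
actual = [10.0, 12.0, 14.0, 16.0]
predicted = [11.0, 12.0, 13.0, 18.0]

print(mae(actual, predicted))   # 1.0
print(rmse(actual, predicted))  # sqrt(1.5), roughly 1.2247
```

On the same set of errors, RMSE is never smaller than MAE because squaring weights large errors more heavily. This is consistent with the tables, where the largest value in each row sits in the last column.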

| Model | Our NAR–SVR | [68] |
|---|---|---|
| COVID-19 in Spain | | |
| COVID-19 in Italy | | |
| COVID-19 in Turkey | | |

| Model | Our | [72] | | |
|---|---|---|---|---|
| SARS-CoV-2 | NAR–SVR | ARIMA | LSTM (single feature) | LSTM (multifeature) |
| RMSE | | | | |

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Borrero, J.D.; Mariscal, J. Elevating Univariate Time Series Forecasting: Innovative SVR-Empowered Nonlinear Autoregressive Neural Networks. Algorithms 2023, 16, 423. https://doi.org/10.3390/a16090423
