Abstract

Quantum computing has opened up various opportunities for enhancing computational power in the coming decades. We can design algorithms inspired by the principles of quantum computing without implementing them on quantum computing infrastructure. In this paper, we present the quantum predator–prey algorithm (QPPA), which fuses the fundamentals of quantum computing and swarm optimization based on a predator–prey algorithm. Our results demonstrate the efficacy of QPPA in solving complex real-parameter optimization problems, with better accuracy than related algorithms in the literature. QPPA achieves rapid convergence for both relatively low- and high-dimensional optimization problems and outperforms selected traditional and advanced algorithms. This motivates the application of QPPA to real-world problems.

1. Introduction

Metaheuristic optimization algorithms [1] have gained attention as a promising alternative to traditional optimization methods such as linear programming, nonlinear programming, and dynamic programming [2,3]. Metaheuristic algorithms can explore large solution spaces efficiently and handle complex problems that are difficult to model mathematically or that have a large number of variables and constraints [4]. These algorithms solve optimization problems by mimicking natural processes such as biological evolution [5,6]. Prominent examples include genetic algorithms [6], which are inspired by biological evolution and natural selection, and particle swarm optimization, which is inspired by the behavior of a flock of birds [7]. Another widely used form of swarm optimization is ant colony optimization, based on the behavior of ants as they forage for food [8]. Metaheuristics repeatedly create new solutions through operators such as crossover and mutation and combine them to obtain better solutions over the course of evolution (optimization) [6]. Metaheuristics are typically gradient-free optimization methods and are hence suitable for models where gradient information is difficult to obtain, such as geoscientific models [9]. Since metaheuristic algorithms do not rely on gradient information, one of their major limitations is slow convergence; however, parallel implementations have been shown to partly address this limitation for certain types of problems [9,10,11].

Apart from biology and natural science, physics and mechanics have also inspired the design of metaheuristic optimization algorithms. Physics-based optimization algorithms are inspired by physical phenomena such as gravity, electricity, and magnetism [12,13]. These algorithms simulate the physical behavior of particles or agents in the search space to find optimal solutions. The gravitational search algorithm (GSA) [14] is based on the law of gravity and mass interactions, where agents are a collection of masses that interact with each other according to Newtonian laws of motion. GSA has the advantage of being fast and easy to implement, and it is also computationally efficient when compared to related algorithms. In the magnetic optimization algorithm (MOA) [15], each magnetic particle has a mass and a magnetic field determined by its fitness. The fitter magnetic particles are those with larger magnetic fields and higher masses. These particles are located in a lattice-like environment and exert attractive forces on their neighbors. The electromagnetism-like mechanism algorithm (EMO) uses an attraction–repulsion mechanism to move sample points toward optimality [16]. The chaos-based optimization algorithm (COA) [17] searches using nonlinear, discrete-time dynamical systems to optimize complex functions of multiple variables. It generates chaotic trajectories to systematically explore the search space in search of the global minima of intricate criterion functions, providing a dynamic strategy for nonlinear optimization challenges.

Quantum computing [18,19] has opened up various opportunities for enhancing computational power in the coming decades. Quantum computing has the potential to provide better infrastructure for artificial intelligence [20], but it also poses threats to cyber-security, since quantum computers with enhanced computational power are anticipated to break current encryption schemes in the future [21]. However, major challenges in the implementation of quantum computing remain [22]. Even with a functional implementation, the design of algorithms for quantum computing would need to be revisited [23]. There has been a growing trend in developing quantum-inspired optimization algorithms, such as the quantum genetic algorithm (QGA) [24], quantum particle swarm optimization (QPSO) [25], quantum teaching–learning-based optimization (QTLBO) [26], and the quantum marine predator algorithm (QMOA) [27]. These algorithms have shown promising results on optimization problems that are challenging for classical optimization algorithms. Quantum-inspired optimization algorithms are expected to have a significant impact on problems such as the knapsack problem [25] and related engineering problems [26].

In this study, the term “quantum” in the algorithm’s name reflects its inspiration from quantum mechanics, following a naming convention employed previously [24,25,26,27]; the algorithm is not intended for execution on real quantum computers. By drawing on quantum mechanics, we can design algorithms that do not need to wait for the development of quantum computing infrastructure. In this paper, we present the quantum predator–prey algorithm, which fuses the fundamentals of quantum computing and swarm optimization by featuring a predator–prey algorithm for real-parameter optimization. We evaluate our algorithm on fourteen benchmark real-parameter optimization problems and compare it with prominent metaheuristic algorithms from the literature. We also provide a statistical analysis and a sensitivity analysis of our results.

2. Related Work

The field of metaheuristic optimization has grown rapidly with inspiration from various processes in nature. Cat swarm optimization (CSO) is a population-based optimization technique that mimics the foraging behavior of cats as they search for prey [28]. The tunicate swarm algorithm (TSA) imitates the jet propulsion and swarm behaviors of tunicates during navigation and foraging [29]. Bee colony optimization (BCO) [30] models the actions of individuals in various decentralized systems and is applicable to complex combinatorial optimization problems. Bacterial foraging optimization (BFO) mimics how bacteria forage over a landscape of nutrients to perform parallel non-gradient optimization [31]. Artificial bee colony (ABC) [32] is inspired by the foraging behavior of honey bees and consists of three essential components: (1) employed foraging bees, (2) unemployed foraging bees, and (3) food sources. ABC is simple to implement and has efficient exploration capabilities, but it can become stuck in local optima and requires fine-tuning due to its sensitivity to hyperparameters [33,34].

Zervoudakis et al. [35] introduced the mayfly optimization algorithm (MA), where the swarm of individuals is divided into male and female mayflies, and each gender exhibits distinct swarm (population) updating behavior. In MA, individuals situated far from the best candidate or historical best trajectories move toward the optimal position at a reduced speed. Conversely, individuals close to the global best candidate or historical trajectories move at a faster pace, which slows down the convergence rate [36]. The firefly algorithm (FA) [37] mimics the flashing behavior of fireflies to solve complex optimization problems. It suffers from some drawbacks, such as a slow convergence rate, becoming stuck in local optima, and sensitivity to the choice of hyperparameters. The dragonfly algorithm (DA) [38] originates from the static and dynamic swarming behaviors of dragonflies in nature. It can solve complex and multi-objective optimization problems and can handle constraints while searching for diverse solutions.

Cuckoo search (CS) [39] is inspired by the brood parasitism of cuckoo species, which lay their eggs in the nests of other bird species. The grasshopper optimization algorithm (GOA) [40] is a multi-objective algorithm inspired by the navigation of grasshopper swarms, modeling the interactions of individuals in the swarm through an attraction force, a repulsion force, and a comfort zone. The whale optimization algorithm (WOA) [41] mimics the social behavior of humpback whales and is inspired by their bubble-net hunting strategy. The grey wolf optimizer (GWO) [42] mimics the leadership hierarchy and hunting mechanism of grey wolves in nature. Jaya [43] is a simple, parameter-less optimization algorithm designed for both constrained and unconstrained optimization. It does not require hyperparameter tuning, which makes it user-friendly. The sine cosine algorithm (SCA) [44] balances exploration and exploitation and has shown promising results across various domains.

In general, most of these metaheuristic optimization algorithms are easy to implement and gradient-free, which makes them suitable for problems where gradient information is theoretically unavailable or computationally difficult to obtain. They are population-based algorithms in which a pool of individuals competes and collaborates to form new solutions over time. However, they have drawbacks such as slow convergence, low precision, the need for hyperparameter tuning, and a limited ability to perform local search. The algorithms presented earlier focus on continuous-parameter optimization and can be extended to discrete-parameter and constrained optimization problems.

3. Quantum Predator–Prey Algorithm

We again note that our quantum predator–prey algorithm (QPPA) combines the principles of quantum computing and swarm-based optimization. QPPA has the potential to significantly reduce the computational time, i.e., the number of iterations required to find the optimal solution, making it a promising alternative in solving computationally intensive problems.

3.1. Algorithm

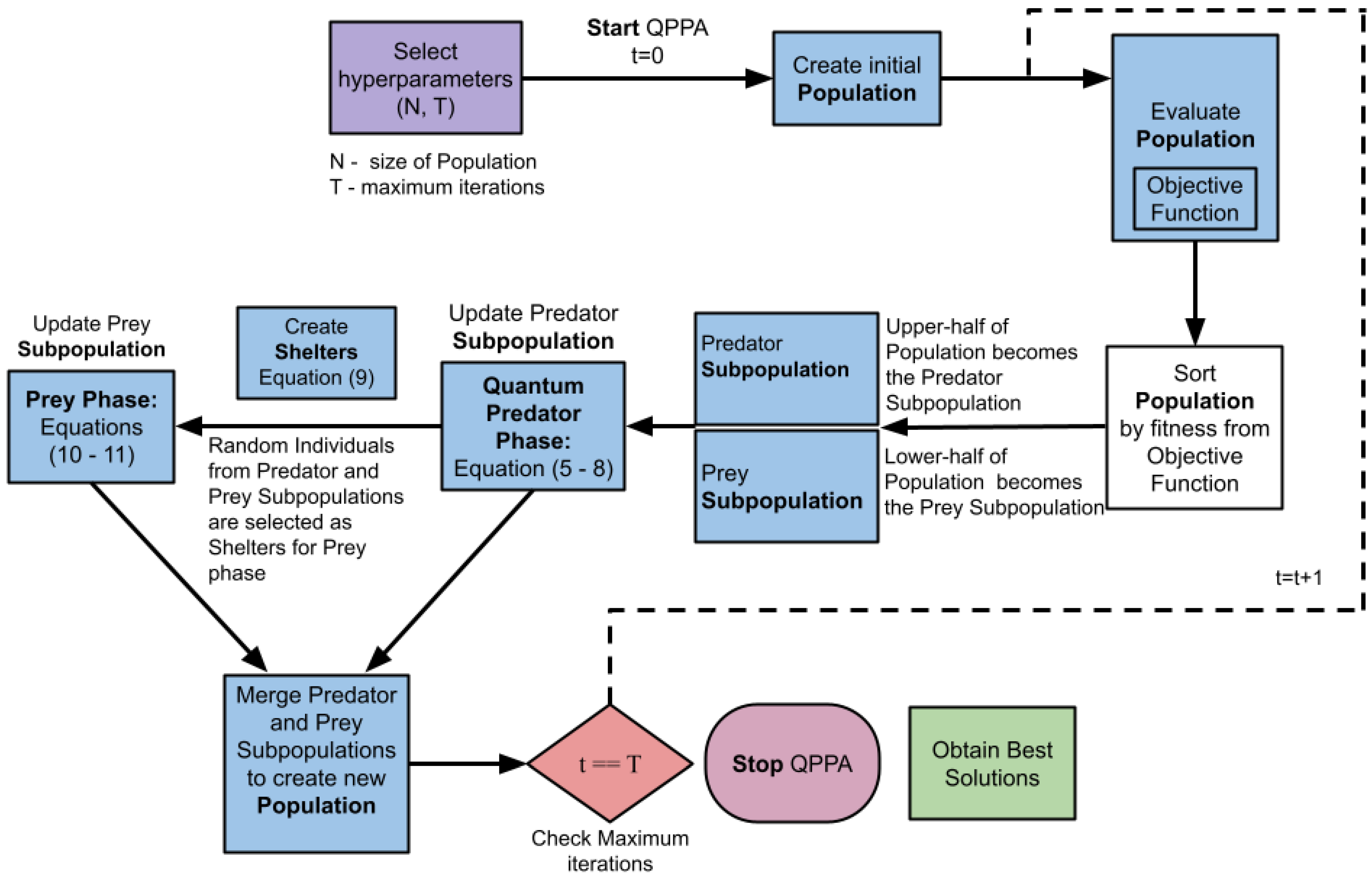

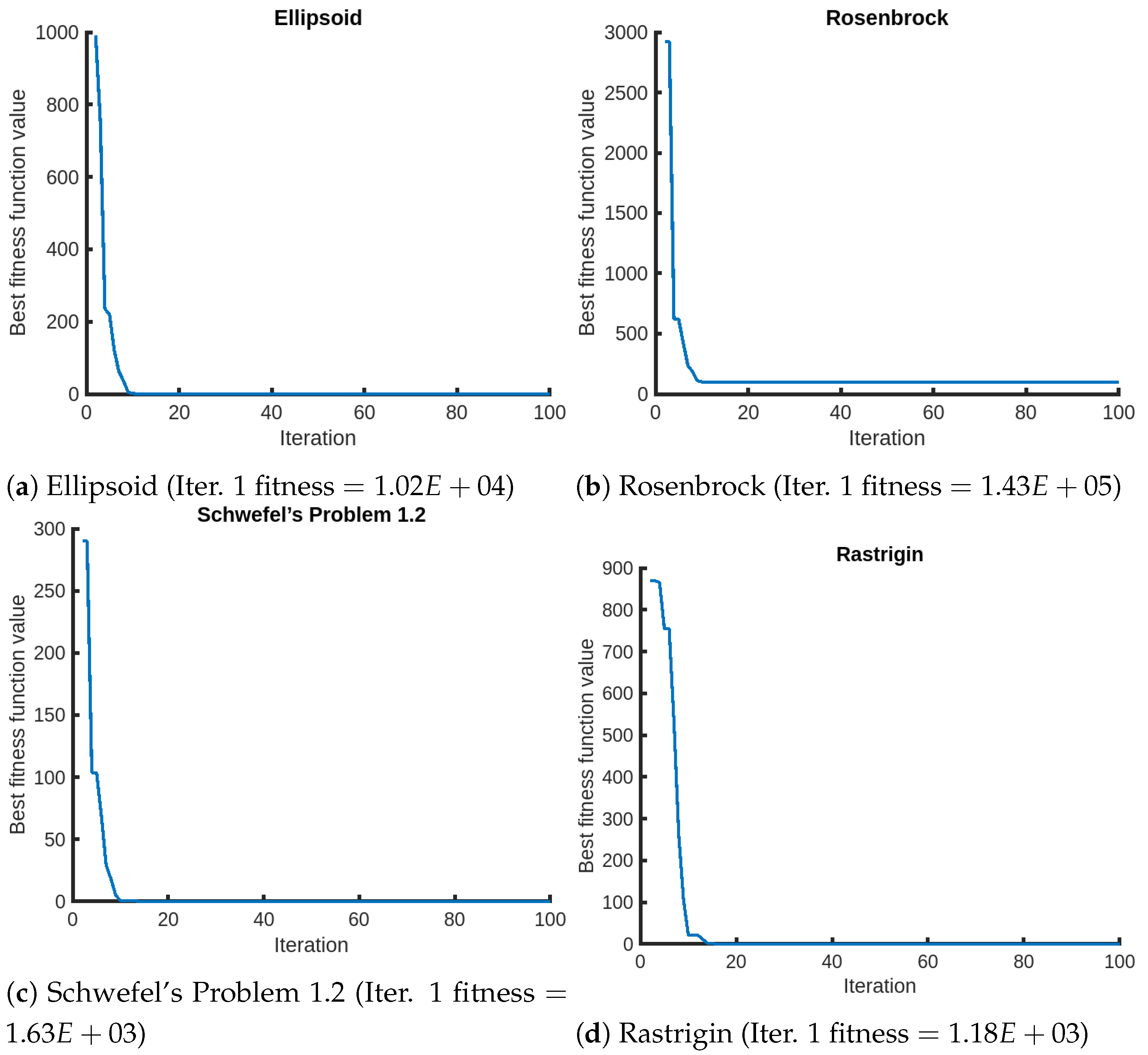

We present QPPA in the framework shown in Figure 1, which is further described in Algorithm 1, featuring a population with predator and prey subpopulations. The predator subpopulation represents a process that actively seeks to improve a solution. Similar to a predator hunting for prey in an ecological setting, the predator creates better solutions by exploiting the prey subpopulation. In the first phase of creating new individuals, we model the movement of the quantum predator toward the prey using Equation (7); Section 3.2 details how we derived it through Equations (14)–(32). Similar to an ecological setting where the prey tries to avoid capture, as shown in Figure 1, the prey hides in a shelter, which is represented as a transitional subpopulation that leads to further improvement.

Figure 1.

QPPA framework highlighting the different stages of the evolution process with predator and prey subpopulations.

We begin with a population X of N individuals with M decision variables (parameters) that are initialized randomly, as shown in Equation (1), where the entry in row i and column d of X signifies the d-th decision variable of the i-th member of the population.

We then select each individual from population X and compute the fitness score based on the objective function. We represent the set of fitness values for the entire population as shown in Equation (2).

We then sort population X in increasing order of fitness. The resulting sorted population matrix and sorted fitness vector are given in Equations (3) and (4), respectively.

We then divide the sorted population into a predator subpopulation and a prey subpopulation. The prey subpopulation consists of solutions with lower fitness values (Equation (5)), while the predator subpopulation consists of solutions with higher fitness values (Equation (6)), as highlighted in Algorithm 1 and Figure 1.
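The initialization, evaluation, sorting, and splitting steps above (Equations (1)–(6)) can be sketched in Python as follows. This is a minimal illustration, not the authors' implementation: it assumes uniform random initialization within box bounds, minimization of a sphere function as a stand-in objective, and a hypothetical parameter `n_prey` for the prey subpopulation size, since the subpopulation sizes are not specified here.

```python
import numpy as np


def sphere(x: np.ndarray) -> float:
    """Stand-in objective to minimize (not one of the Table 1 benchmarks)."""
    return float(np.sum(x ** 2))


def init_sort_split(objective, n_pop, dim, lb, ub, n_prey, rng):
    """Sketch of Equations (1)-(6): initialize, evaluate, sort, and split."""
    # Eq. (1), assumed uniform: N x M matrix of decision variables in [lb, ub)
    X = lb + rng.random((n_pop, dim)) * (ub - lb)
    # Eq. (2): fitness value of every individual
    fitness = np.array([objective(x) for x in X])
    # Eqs. (3)-(4): sort individuals and fitness values in increasing order
    order = np.argsort(fitness)
    X_sorted, fit_sorted = X[order], fitness[order]
    # Eqs. (5)-(6): prey take the lower fitness values, predators the higher ones
    prey = X_sorted[:n_prey]
    predators = X_sorted[n_prey:]
    return X_sorted, fit_sorted, prey, predators


rng = np.random.default_rng(seed=0)
X_sorted, fit_sorted, prey, predators = init_sort_split(
    sphere, n_pop=20, dim=10, lb=-100.0, ub=100.0, n_prey=10, rng=rng
)
print(fit_sorted[:3])
```

The split follows the text literally (prey hold the lower fitness values); the hypothetical `n_prey` is the only knob that controls the relative subpopulation sizes.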

Algorithm 1. Pseudocode of QPPA.

We now execute the quantum predator phase (Figure 1), where we update the predator subpopulation using Equation (7). This update implements the analogy in which individuals in the predator subpopulation chase individuals in the prey subpopulation. The quantum mechanism implies that an individual from the predator subpopulation can create two copies of itself to attack individuals from the prey subpopulation.

The constants employed in Equations (7) and (8) are random numbers within the range 0–1. In the new predator subpopulation, the subscript d denotes the d-th decision variable of the j-th individual; the same notation applies to the new prey subpopulation. Additionally, the shelter subpopulation hosts random individuals from X, selected by the j-th prey.


In the prey phase (Figure 1), the prey escape to the shelter subpopulation, which is formed by random individuals taken from the predator and prey subpopulations. The shelter subpopulation has the same size as the prey subpopulation, so that it provides a refuge from the predators for every individual in the prey subpopulation. This phase is mathematically modeled using Equations (11)–(13).
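A shelter subpopulation with one slot per prey individual, filled with randomly chosen members of the predator and prey subpopulations, might be built as below. This sketch does not reproduce Equations (11)–(13); the helper name `build_shelter` and the choice of sampling with replacement are assumptions for illustration.

```python
import numpy as np


def build_shelter(prey: np.ndarray, predators: np.ndarray, rng) -> np.ndarray:
    """Hypothetical helper: one random refuge per prey individual."""
    # Candidate pool: all members of the predator and prey subpopulations
    pool = np.vstack([predators, prey])
    # Draw one index per prey individual (with replacement, an assumption)
    idx = rng.integers(0, len(pool), size=len(prey))
    # Copy so later updates to the shelter do not alias the subpopulations
    return pool[idx].copy()


rng = np.random.default_rng(seed=1)
prey = rng.random((4, 3))
predators = rng.random((6, 3))
shelter = build_shelter(prey, predators, rng)
print(shelter.shape)  # (4, 3)
```

Matching the shelter size to the prey subpopulation means every prey individual has exactly one refuge, which is the property the text emphasizes.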


3.2. Quantum Formulation

We give further details of the equations that have been used to create new individuals from the population in QPPA through quantum formulation via the predator and prey subpopulations. We use the foundations of quantum mechanics to formulate the quantum predator phase shown in Algorithm 1 and Figure 1.

The wave function is obtained by solving the Schrödinger equation [45]:

V is the potential energy function, ℏ (h-bar) is Planck’s constant divided by 2π, and i is the imaginary unit.
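For reference, the standard one-dimensional, time-dependent Schrödinger equation, which we assume is the form intended here, reads as follows, with Ψ(x,t) the wave function and m the mass of the particle:

```latex
i\hbar\,\frac{\partial \Psi(x,t)}{\partial t}
  = -\frac{\hbar^{2}}{2m}\,\frac{\partial^{2} \Psi(x,t)}{\partial x^{2}}
  + V(x)\,\Psi(x,t)
```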

To find the probability of locating a particle at point x and time t, we compute $|\Psi(x,t)|^2$. Therefore, $|\Psi(x,t)|^2\,dx$ gives the probability of finding the particle between $x$ and $x+dx$ at time t. The method of separation of variables [] is used to solve the Schrödinger equation.

Separation of variables writes the wave function as the product of two functions, one dependent only on x and the other only on t, i.e., $\Psi(x,t) = \psi(x)\,\varphi(t)$. Therefore, the Schrödinger equation can be written as in Equation (16):

The second part of the above equation is dependent on x and independent of t and is therefore called the time-independent Schrödinger equation. The specification of the potential function is important to solve the Schrödinger equation. Some of the most common potential functions are the one-dimensional delta potential well, the harmonic oscillator, and the square potential well. In our algorithm, we use the delta potential function, and therefore we define the Dirac delta function as in Equation (17).

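$\delta(x-c)$ is a shifted delta function with the same properties, but with its spike at position c instead of at the origin. Multiplying $\delta(x-c)$ by an ordinary function $f(x)$ is the same as multiplying it by $f(c)$, i.e., $f(x)\,\delta(x-c) = f(c)\,\delta(x-c)$.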

Integrating both sides from −∞ to ∞, we obtain $\int_{-\infty}^{\infty} f(x)\,\delta(x-c)\,dx = f(c)$, where c is a constant.
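The sifting property can be checked numerically by replacing δ(x − c) with a narrow normalized Gaussian, a standard nascent delta function, and integrating with the trapezoidal rule. The width `sigma`, the integration window, and the test function cos(x) are arbitrary choices for this sketch.

```python
import math


def gaussian_delta(x: float, sigma: float) -> float:
    """Narrow normalized Gaussian used as an approximation of delta(x)."""
    return math.exp(-0.5 * (x / sigma) ** 2) / (sigma * math.sqrt(2.0 * math.pi))


def sift(f, c: float, sigma: float = 1e-3, half_width: float = 0.05, n: int = 20001) -> float:
    """Trapezoidal estimate of the integral of f(x) * delta_sigma(x - c)."""
    a = c - half_width
    h = 2.0 * half_width / (n - 1)
    total = 0.0
    for k in range(n):
        x = a + k * h
        weight = 0.5 if k in (0, n - 1) else 1.0  # trapezoid end-point weights
        total += weight * f(x) * gaussian_delta(x - c, sigma)
    return total * h


approx = sift(math.cos, c=0.7)
print(approx, math.cos(0.7))
```

As `sigma` shrinks, the estimate approaches f(c), which is exactly the statement that integrating f(x)δ(x − c) picks out the value of f at c.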

We consider a potential of the form given in Equation (20): $V(x) = -\alpha\,\delta(x)$, where α is a positive constant.

Substituting this potential into the time-independent Schrödinger equation, we obtain Equation (21):

which can be written in the form given in Equation (22):

where $k \equiv \sqrt{-2mE}/\hbar$.

This potential yields both bound states ($E<0$) and scattering states ($E>0$). In the region $x<0$, $V(x)=0$, and E is negative by assumption, so k is real and positive; hence, we obtain Equation (24).

In Equation (24), we set the coefficient of the $e^{-kx}$ term to 0 for the negative-x region, because that term would blow up as $x \to -\infty$.

In the region $x>0$, $V(x)=0$ as well, and we obtain Equation (25):

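Equation (25) does not include the $e^{kx}$ term because it would blow up as $x \to \infty$, so its coefficient is set to 0. Requiring ψ to be continuous at $x=0$, so that the coefficients in Equations (24) and (25) are equal, we obtain the general form $\psi(x) = B\,e^{-k|x|}$, where B is a constant.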

We assume that the particle is present in a one-dimensional space. We refer to point p as the local attractor that is at the center of the potential well. Equation (27) represents the potential energy of the particle in a one-dimensional delta potential well.

Let m denote the mass of the particle and . Below are the steps involved in integrating the Schrödinger equation over a small interval of half-width ε around the center of the well.

taking the limit as ε goes to 0,

We impose the normalization condition $\int_{-\infty}^{\infty} |\psi(x)|^2\,dx = 1$ to determine the constants:

where L is the characteristic length of the potential well; therefore, Equation (29) gives the normalized wave function.
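The following worked derivation sketches how the normalized bound state arises, assuming the well is written as V(x) = −α δ(x − p) with α > 0 (α is our notation, not necessarily the paper's). Integrating the time-independent equation across the well gives a jump in the derivative of ψ, which fixes k; normalization then fixes the constant, and setting L = 1/k yields a probability density whose values lie in (0, 1/L], consistent with the range stated for s in the next paragraph:

```latex
\psi(x) = B\,e^{-k|x-p|}, \qquad k = \frac{\sqrt{-2mE}}{\hbar}

\Delta\!\left(\frac{d\psi}{dx}\right)\Big|_{x=p} = -\frac{2m\alpha}{\hbar^{2}}\,\psi(p)
  \;\Rightarrow\; -2kB = -\frac{2m\alpha}{\hbar^{2}}\,B
  \;\Rightarrow\; k = \frac{m\alpha}{\hbar^{2}}, \qquad E = -\frac{m\alpha^{2}}{2\hbar^{2}}

\int_{-\infty}^{\infty} |\psi(x)|^{2}\,dx = \frac{|B|^{2}}{k} = 1
  \;\Rightarrow\; B = \sqrt{k}

L = \frac{1}{k} = \frac{\hbar^{2}}{m\alpha}: \qquad
\psi(x) = \frac{1}{\sqrt{L}}\,e^{-|x-p|/L}, \qquad
|\psi(x)|^{2} = \frac{1}{L}\,e^{-2|x-p|/L} \in \left(0, \tfrac{1}{L}\right]
```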

We assume that s is a random number in the range (0, 1/L) and s is the probability of the existence of a quantum body in a particular position p, as given in Equation

u is a random number in the interval (0,1).

where p is the local attractor, which defines a new random location between the best and current solutions for better diversification in each iteration, increasing the exploratory tendency of the optimization technique.

We combine Equations (30) and (31) to obtain the following equation for updating the positions of the particles.

The position variables in this update denote the solution before and after updating, respectively.
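Sampling a new position from the density |ψ|² = (1/L) e^{−2|x−p|/L} can be done by inverse-transform sampling: with u drawn uniformly from (0, 1), |x − p| = (L/2) ln(1/u), with the sign chosen at random. This is the update used in quantum-behaved PSO, and we assume, without confirmation from the text above, that the position update obtained from Equations (30) and (31) takes this form. The convex-combination form of the local attractor `p` and the fixed length `L` are hypothetical choices for illustration.

```python
import numpy as np


def local_attractor(best, current, rng):
    """Hypothetical attractor: random point between best and current solutions."""
    phi = rng.random(np.shape(best))
    return phi * best + (1.0 - phi) * current


def delta_well_update(p, L, rng):
    """Sample new positions from |psi|^2 = (1/L) exp(-2|x - p| / L)."""
    u = rng.random(np.shape(p))
    # rng.random draws from [0, 1); replace exact zeros to keep ln(1/u) finite
    u = np.where(u == 0.0, np.finfo(float).tiny, u)
    # Random sign: the density is symmetric about the attractor p
    sign = np.where(rng.random(np.shape(p)) < 0.5, -1.0, 1.0)
    return p + sign * 0.5 * L * np.log(1.0 / u)


rng = np.random.default_rng(seed=2)
best = np.zeros(5)
current = np.ones(5)
p = local_attractor(best, current, rng)
x_new = delta_well_update(p, L=0.5, rng=rng)
print(p, x_new)
```

Because ln(1/u) is exponentially distributed with mean 1, the mean distance |x − p| equals L/2, so L directly controls the step size: a larger L favors exploration, a smaller one exploitation around the attractor.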

4. Empirical Evaluation

4.1. Experimental Settings

We assess the efficacy of QPPA on selected real-parameter optimization benchmark problems and compare it with related algorithms from the literature. We first outline the experimental configuration used to evaluate the selected algorithms. We select fourteen benchmark objective functions, listed in Table 1 with their names, formulations, and search spaces. We compare QPPA with established optimization methods from the literature using the hyperparameters given in their original papers, as shown in Table 2. The one exception is PSO on the F7 objective function (HGBat) in Table 1, for which we fine-tune the hyperparameters in trial experiments.

Table 1.

Benchmark objective functions from the Congress on Evolutionary Computation (CEC) 2022.

Table 2.

Hyperparameters for the selected optimization algorithms from the literature.

In our algorithmic analysis, we investigate two different settings. In Setting 1, we focus on evaluating the algorithm’s efficiency and convergence behavior in a lower-dimensional solution space. Hence, in Setting 1, we use a population size (), maximum iterations (), and dimension (). In Setting 2, we maintain a consistent population size () and iteration count () and consider a much higher-dimensional space (). This choice allows us to showcase the algorithm’s scalability and robustness when solving more complex, high-dimensional optimization problems. By systematically varying these settings, we aim to comprehensively understand the algorithm’s strengths and limitations across a wide range of optimization scenarios.

4.2. Results

We report the performance of the respective optimization algorithms using two indicators: the mean fitness of the best (quasi-optimal) solutions and the standard deviation (st. dev) of the best solutions. We present the results in Table 3, which compares our QPPA with well-known optimization methods, including PSO [7], real-coded GA [6], GSA [14], SCA [44], Jaya [43], GWO [42], MA [35], and LSA [46]. The reported mean and standard deviation values are derived from five independent runs.

Table 3.

Results of optimization algorithms on objective functions in Setting 1 (). We highlight the best results in bold.

QPPA is the strongest performer in 12 out of 14 functions in Setting 1, as shown in Table 3. Its ability to adapt effectively and to balance the exploration of new regions with the exploitation of promising ones allows it to navigate complex search landscapes efficiently, leading to optimal or near-optimal solutions. Although MA stands out on problems such as HGBat, Katsuura, and Modified Schwefel, QPPA’s success across a diverse set of functions highlights its potential as a versatile optimization method.

We provide the results for the more complex case (Setting 2) in Table 4. In Setting 2, QPPA outperforms all other algorithms in 13 out of 14 functions; MA surpasses QPPA only on the Happycat function. These findings underscore the strong performance of QPPA, particularly on higher-dimensional problems.

Table 4.

Results of optimization algorithms on objective functions in Setting 2 (). We highlight the best results in bold.

We present a statistical analysis of the significance of QPPA’s results in Setting 1 using an unpaired t-test [47]. An unpaired t-test, also known as an independent t-test, compares the means of two unrelated groups to determine whether the difference between them is statistically significant or could have occurred by chance alone. Table 5 presents the p-values of the unpaired t-test between QPPA and each of the other algorithms, where we use a threshold of 0.02 to determine whether QPPA is significantly better. Based on these results, we find that QPPA performs better than PSO, Real-GA, GSA, SCA, Jaya, GWO, MA, and LSA.
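An unpaired (Student's) t-test on per-run results can be sketched with the Python standard library alone. The five-run samples below are hypothetical numbers, not results from Table 5; the test is assumed to be two-sided, and the critical value 2.896 is the standard t-table value for a two-sided 0.02 significance level with 8 degrees of freedom (5 + 5 − 2). Where SciPy is available, `scipy.stats.ttest_ind(a, b)` returns the statistic and a two-sided p-value directly.

```python
import math
import statistics


def pooled_t_statistic(a, b):
    """Student's unpaired two-sample t statistic (pooled variance) and its df."""
    na, nb = len(a), len(b)
    # statistics.variance uses the n - 1 (sample) denominator
    va, vb = statistics.variance(a), statistics.variance(b)
    pooled_var = ((na - 1) * va + (nb - 1) * vb) / (na + nb - 2)
    std_err = math.sqrt(pooled_var * (1.0 / na + 1.0 / nb))
    t = (statistics.mean(a) - statistics.mean(b)) / std_err
    return t, na + nb - 2


# Hypothetical best-fitness values from five runs each (minimization)
baseline = [1.20, 1.00, 1.10, 0.90, 1.00]
qppa = [0.10, 0.12, 0.09, 0.11, 0.10]

T_CRIT_TWO_SIDED_0_02_DF8 = 2.896  # from standard t-tables
t, df = pooled_t_statistic(baseline, qppa)
significant = df == 8 and abs(t) > T_CRIT_TWO_SIDED_0_02_DF8
print(f"t = {t:.2f}, df = {df}, significant = {significant}")
```

Comparing |t| against a tabulated critical value is equivalent to checking p < 0.02, so the sketch avoids a dependency on a t-distribution CDF at the cost of being tied to one degree-of-freedom value.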

Table 5.

p-values of the t-test comparing each algorithm with QPPA (e.g., PSO vs. QPPA) in Setting 1 (, , ).

Table 6 presents the iteration-wise outcomes of QPPA across the 14 objective functions, using the same parameters as Setting 2. We report the results at every 10% of the 1000 iterations (i.e., every 100 iterations). This breakdown provides insights into the convergence behavior of QPPA. For comparison, Table 7 reports the results for PSO under identical settings.

Table 6.

Iteration-wise results for QPPA in Setting 2 (, , ).

Table 7.

Iteration-wise results for PSO in Setting 2 (, , ).

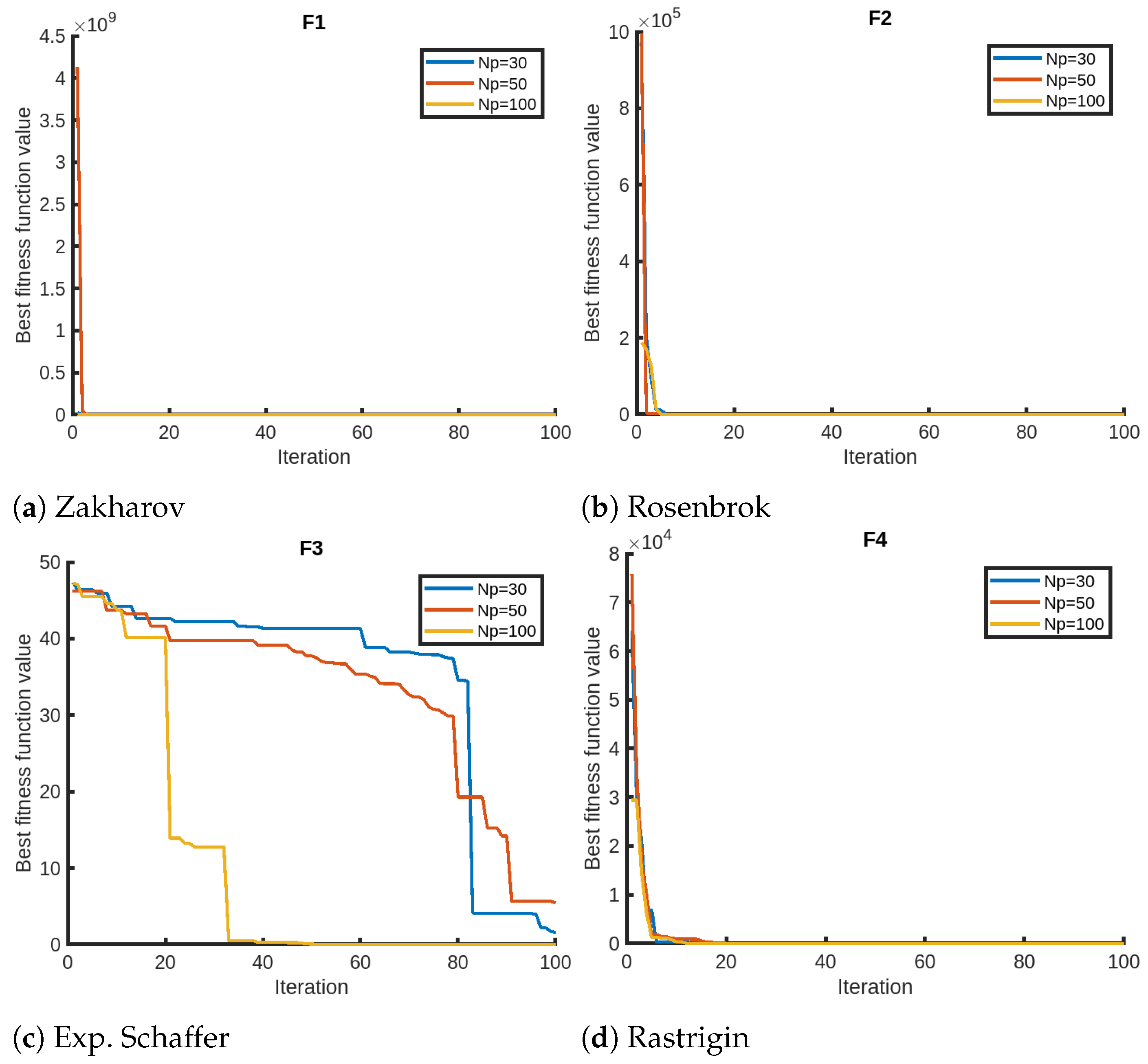

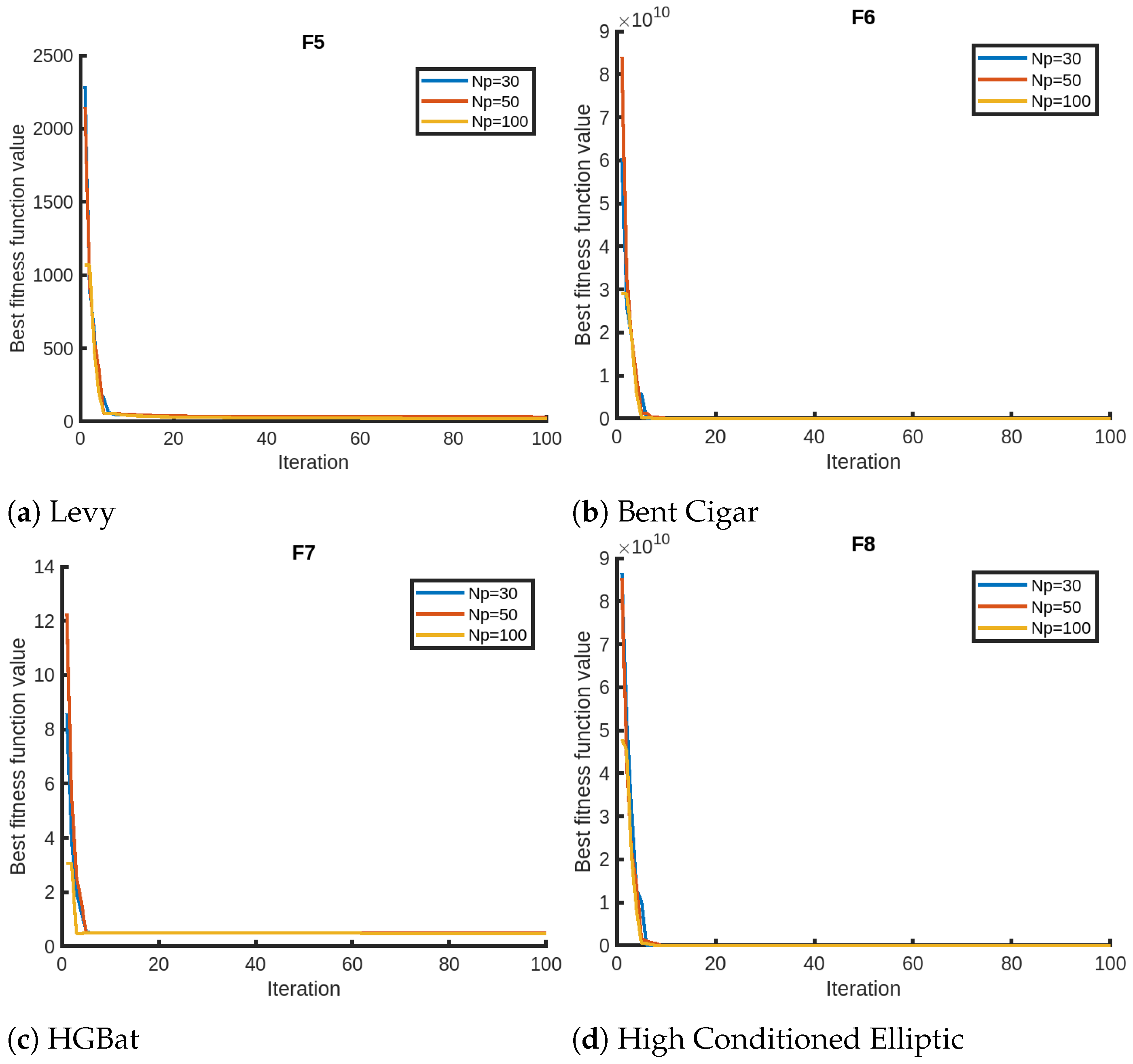

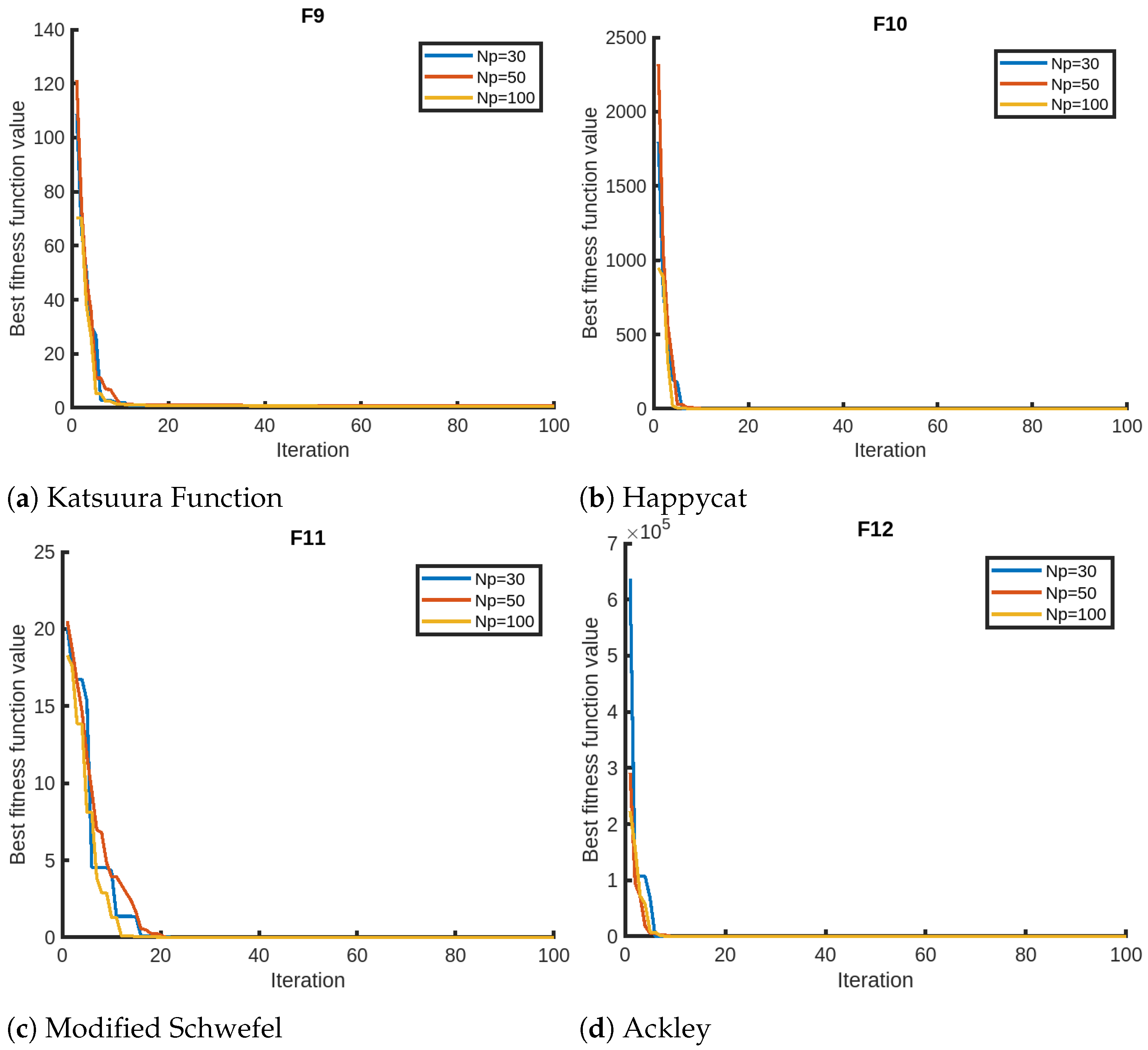

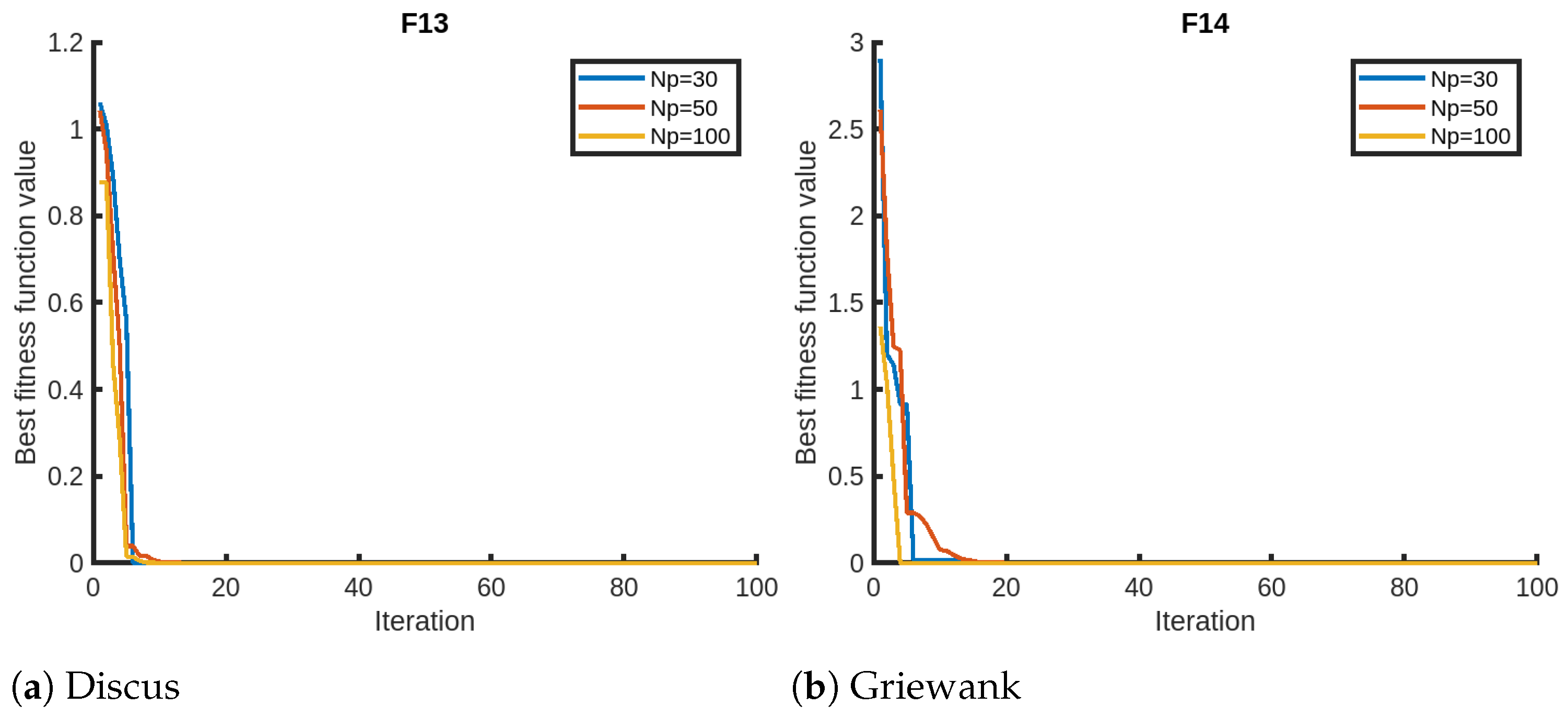

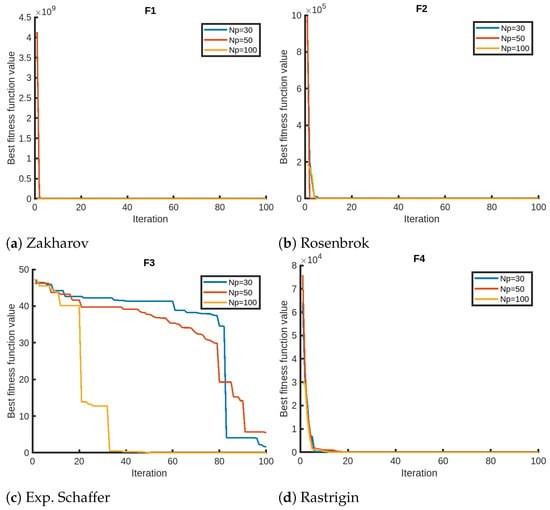

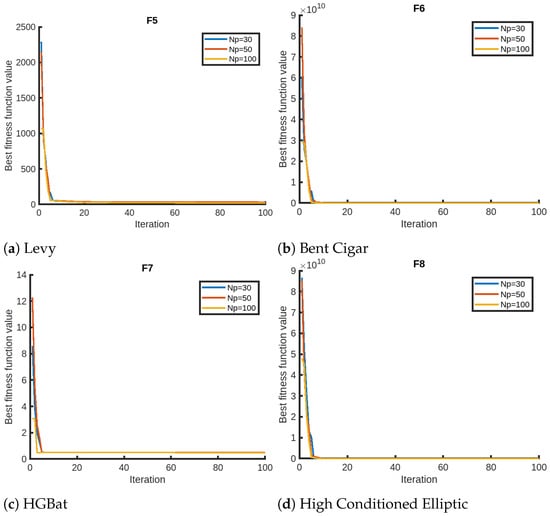

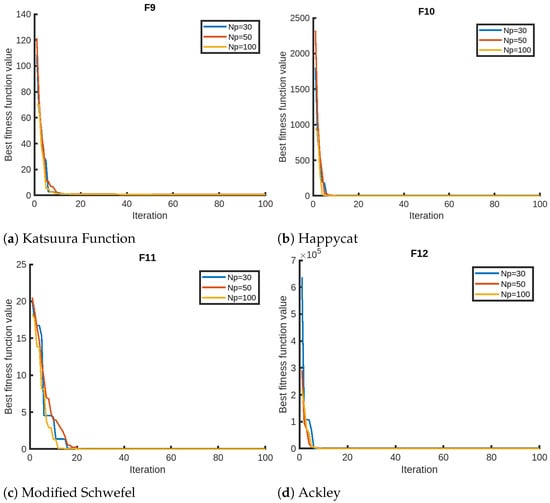

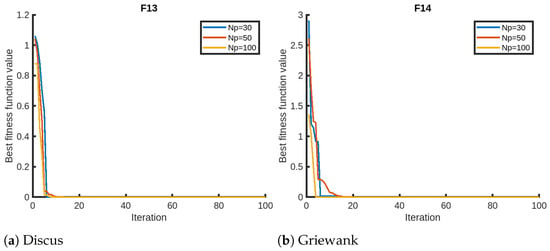

4.3. Sensitivity Analysis

We finally present a sensitivity analysis of QPPA for two parameters, the number of iterations and the population size, using Setting 1. We evaluate QPPA using , , and . For the sensitivity analysis on the maximum number of iterations, we assess the results for up to 100 iterations instead of 1000, because QPPA converges quickly. Figure 2 shows the sensitivity analysis for objective functions F1–F4, which suggests that the population size has a significant impact on the performance of QPPA. Specifically, increasing the population size from to , and leads to improved convergence (fitness). The algorithm takes longer than usual to converge for the Exp. Schaffer problem (F3). We observe a similar improvement in convergence with larger population sizes for the remaining objective functions, as shown in Figures 3–5. The Ackley function shows a slightly different trend, taking more iterations than the others to converge. We also note that the fitness values of HGBat are less than 1 and that functions such as Griewank have a smaller range of fitness values. Furthermore, Modified Schwefel has the highest fitness values, which do not fall below 3770. These differences arise from the properties of the fitness landscapes of the respective objective functions.

Figure 2.

Sensitivity analyses of QPPA for the population size ().

Figure 3.

Sensitivity analyses of QPPA for the population size ().

Figure 4.

Sensitivity analyses of QPPA for the population size ().

Figure 5.

Sensitivity analyses of QPPA for the population size ().

4.4. Further Comparisons

Cooperative coevolution [48] leverages a divide-and-conquer paradigm in evolutionary algorithms to decompose intricate problems into subcomponents (subpopulations) that collaborate and compete among themselves. Collaboration is implemented through fitness evaluations, since each subcomponent features only a partial solution. Cooperative coevolution has been prominent in global optimization [49] and neuroevolution [50,51]. There have been a number of extensions of the cooperative coevolution framework [52], including multi-island competitive cooperative coevolution (MICCC) [53], which uses a real-coded genetic algorithm in the subpopulations. Bali and Chandra [54] demonstrated significant improvements with MICCC when compared to earlier counterparts, and hence we use it to further compare our results for Setting 2 ().

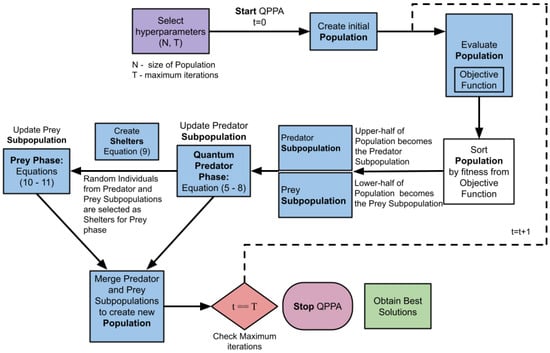

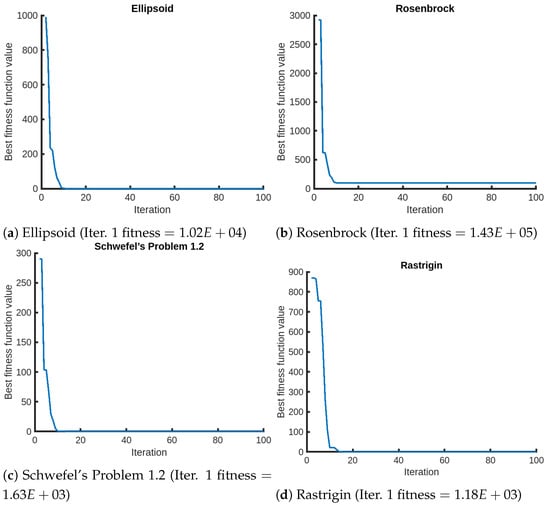

In Table 8, we present the results of QPPA against MICCC-6 and MICCC-7 on four benchmark functions, where 6 and 7 refer to the number of islands used for competition. We also include the number of iterations at which QPPA reaches the required minimum fitness threshold. These results show the rapid convergence of QPPA. In Figure 6, we present the convergence plots of QPPA for selected objective functions from the 2nd to the 100th iteration; the results of the first iteration, denoting the initial performance, are reported in the figure captions.

Table 8.

Optimization results compared to MICCC (, , ).

Figure 6.

Convergence graphs of QPPA for selected functions for Setting 2 ().

5. Discussion

In summary, QPPA outperforms the existing algorithms considered here, which we attribute to its incorporation of quantum principles and predator–prey dynamics. A key feature is the quantum predator mechanism, which enhances exploration and enables QPPA to escape local minima. For instance, Table 3 shows that QPPA finds the global minima for multiple functions, including Zakharov, Schaffer, Rastrigin, Bent Cigar, High Conditioned Elliptic, Discus, and Griewank. The convergence of QPPA can also be seen in Figures 2–5, where it takes approximately 10–20 iterations to converge for simpler problem instances and more iterations for larger problem instances (Figure 2). Note, however, that in specific cases (), as shown in Figure 2b for the Rosenbrock function, QPPA converges to a fitness value below 100 but remains in a local minimum for more than 1000 iterations (Table 6, F2). Table 8 reports results after 15,000 iterations, where QPPA achieves 5.37E+01 for Rosenbrock and MICCC achieves 3.47E+01. MICCC uses 1,500,000 function evaluations with a population size of 100, which is equivalent to 15,000 iterations (1,500,000/100). Hence, QPPA reaches a fitness of the same order as MICCC within a comparable iteration budget.

Although QPPA performs well in general, it has limitations in certain situations. In some cases, QPPA does not reach the performance of algorithms with more hyperparameters that have been specifically tuned for the problems. For example, as shown in Table 3, the mayfly optimization algorithm outperforms QPPA on the HGBat, Katsuura, and Modified Schwefel functions, suggesting that QPPA may not adapt well to certain problem domains.

In future work, QPPA can be extended by leveraging parallel computing, investigating hybridization strategies, and applying it to the training of machine learning models. Parallel computing techniques could enhance the efficiency and scalability of QPPA, enabling it to handle larger-scale optimization tasks [55,56], such as the same or similar functions in 1000 dimensions [64], for comparison with large-scale global optimization benchmarks from the literature [65]. There are also several promising directions for adapting QPPA to complex discrete-parameter optimization problems. Hybridizing QPPA with other metaheuristic algorithms could yield enhanced performance, as has been demonstrated for hybrid metaheuristic algorithms in the literature [57,58,59,60]. Finally, QPPA could be used to train machine learning models [61,62] such as deep learning models, and to automatically design network architectures via QPPA-based neuroevolution [63].

Metaheuristic optimization algorithms have drawn criticism in terms of novelty due to an overemphasis on renaming similar processes in nature [66]; e.g., there is not a significant difference between the firefly and dragonfly algorithms, and an objective evaluation of their properties remains to be performed. Rajwal et al. [67] reported that more than 500 new metaheuristic algorithms have been introduced in the past few decades. Moreover, many population-based metaheuristics have similar properties, and, over time, researchers have defined many benchmark problems with minimal changes [67]. Hence, it is important to take these factors into account when designing new algorithms, since they could simply be re-implementations of existing algorithms on newer optimization benchmarks. There has been less focus on parallel implementations and the open sharing of code and resources, which hinders progress in the field. In our study, we address some of these shortcomings through a comprehensive evaluation and the open sharing of our code and resources.

6. Conclusions

We demonstrate empirically that QPPA makes a significant contribution to the area of metaheuristic optimization. QPPA combines predator–prey interactions with principles of quantum computing, emulating the behavior of predators chasing prey. QPPA demonstrated superior performance compared to eight other algorithms across 14 benchmark optimization functions. Our results demonstrate that QPPA can reach global minima about 8–10 times faster than related algorithms for a wide range of problems. Additionally, it can handle high-dimensional optimization problems and provides better solutions than traditional optimization algorithms.

Author Contributions

Conceptualization, A.A.K. and A.A.K.; methodology, A.A.K. and S.H.; software, A.A.K. and S.H.; validation, A.A.K., S.H. and R.C.; formal analysis, A.A.K. and R.C.; investigation, A.A.K. and R.C.; code curation, A.A.K. and S.H.; writing—original draft preparation, A.A.K. and R.C.; writing—review and editing, A.A.K. and R.C.; visualization, A.A.K. and S.H.; supervision, R.C.; project administration, A.A.K. and R.C. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Data Availability Statement

Code and data are available via Github repository https://github.com/sydney-machine-learning/predator-prey-optimisation (accessed on 5 October 2023).

Conflicts of Interest

The authors declare no conflicts of interest.

References

1. Hussain, K.; Mohd Salleh, M.N.; Cheng, S.; Shi, Y. Metaheuristic research: A comprehensive survey. Artif. Intell. Rev. 2019, 52, 2191–2233.

2. Silveira, C.L.B.; Tabares, A.; Faria, L.T.; Franco, J.F. Mathematical optimization versus Metaheuristic techniques: A performance comparison for reconfiguration of distribution systems. Electr. Power Syst. Res. 2021, 196, 107272.

3. Halim, A.H.; Ismail, I.; Das, S. Performance assessment of the metaheuristic optimization algorithms: An exhaustive review. Artif. Intell. Rev. 2021, 54, 2323–2409.

4. Chand, S.; Rajesh, K.; Chandra, R. MAP-Elites based Hyper-Heuristic for the Resource Constrained Project Scheduling Problem. arXiv 2022, arXiv:2204.11162.

5. Storn, R.; Price, K. Differential evolution-a simple and efficient heuristic for global optimization over continuous spaces. J. Glob. Optim. 1997, 11, 341.

6. Mitchell, M. An Introduction to Genetic Algorithms; MIT Press: Cambridge, MA, USA, 1998.

7. Kennedy, J.; Eberhart, R. Particle swarm optimization. In Proceedings of the ICNN’95-International Conference on Neural Networks, Perth, WA, Australia, 27 November–1 December 1995; Volume 4, pp. 1942–1948.

8. Dorigo, M.; Birattari, M.; Stutzle, T. Ant colony optimization. IEEE Comput. Intell. Mag. 2006, 1, 28–39.

9. Chandra, R.; Sharma, Y.V. Surrogate-assisted distributed swarm optimisation for computationally expensive geoscientific models. Comput. Geosci. 2023, 27, 939–954.

10. Alba, E.; Luque, G.; Nesmachnow, S. Parallel metaheuristics: Recent advances and new trends. Int. Trans. Oper. Res. 2013, 20, 1–48.

11. Alba, E. Parallel Metaheuristics: A New Class of Algorithms; John Wiley & Sons: Hoboken, NJ, USA, 2005.

12. Siddique, N.; Adeli, H. Physics-based search and optimization: Inspirations from nature. Expert Syst. 2016, 33, 607–623.

13. Hashim, F.A.; Houssein, E.H.; Mabrouk, M.S.; Al-Atabany, W.; Mirjalili, S. Henry gas solubility optimization: A novel physics-based algorithm. Future Gener. Comput. Syst. 2019, 101, 646–667.

14. Rashedi, E.; Nezamabadi-Pour, H.; Saryazdi, S. GSA: A gravitational search algorithm. Inf. Sci. 2009, 179, 2232–2248.

15. Tayarani-N, M.H.; Akbarzadeh-T, M. Magnetic optimization algorithms a new synthesis. In Proceedings of the 2008 IEEE Congress on Evolutionary Computation (IEEE World Congress on Computational Intelligence), Hong Kong, China, 1–6 June 2008; pp. 2659–2664.

16. Birbil, Ş.İ.; Fang, S.C. An electromagnetism-like mechanism for global optimization. J. Glob. Optim. 2003, 25, 263–282.

17. Fiori, S.; Di Filippo, R. An improved chaotic optimization algorithm applied to a DC electrical motor modeling. Entropy 2017, 19, 665.

18. Steane, A. Quantum computing. Rep. Prog. Phys. 1998, 61, 117.

19. Preskill, J. Quantum computing in the NISQ era and beyond. Quantum 2018, 2, 79.

20. Dunjko, V.; Briegel, H.J. Machine learning & artificial intelligence in the quantum domain: A review of recent progress. Rep. Prog. Phys. 2018, 81, 074001.

21. Majot, A.; Yampolskiy, R. Global catastrophic risk and security implications of quantum computers. Futures 2015, 72, 17–26.

22. De Leon, N.P.; Itoh, K.M.; Kim, D.; Mehta, K.K.; Northup, T.E.; Paik, H.; Palmer, B.; Samarth, N.; Sangtawesin, S.; Steuerman, D.W. Materials challenges and opportunities for quantum computing hardware. Science 2021, 372, eabb2823.

23. Khan, A.A.; Ahmad, A.; Waseem, M.; Liang, P.; Fahmideh, M.; Mikkonen, T.; Abrahamsson, P. Software architecture for quantum computing systems—A systematic review. J. Syst. Softw. 2023, 201, 111682.

24. Malossini, A.; Blanzieri, E.; Calarco, T. Quantum genetic optimization. IEEE Trans. Evol. Comput. 2008, 12, 231–241.

25. Yang, S.; Wang, M.; Jiao, L. A quantum particle swarm optimization. In Proceedings of the 2004 Congress on Evolutionary Computation (IEEE Cat. No. 04TH8753), Portland, OR, USA, 19–23 June 2004; Volume 1, pp. 320–324.

26. Kaveh, A.; Kamalinejad, M.; Hamedani, K.B.; Arzani, H. Quantum Teaching-Learning-Based Optimization algorithm for sizing optimization of skeletal structures with discrete variables. Structures 2021, 32, 1798–1819.

27. Abd Elaziz, M.; Mohammadi, D.; Oliva, D.; Salimifard, K. Quantum marine predators algorithm for addressing multilevel image segmentation. Appl. Soft Comput. 2021, 110, 107598.

28. Chu, S.C.; Tsai, P.W.; Pan, J.S. Cat swarm optimization. In Proceedings of the PRICAI 2006: Trends in Artificial Intelligence: 9th Pacific Rim International Conference on Artificial Intelligence, Guilin, China, 7–11 August 2006; Proceedings 9. Springer: Berlin/Heidelberg, Germany, 2006; pp. 854–858.

29. Kaur, S.; Awasthi, L.K.; Sangal, A.; Dhiman, G. Tunicate Swarm Algorithm: A new bio-inspired based metaheuristic paradigm for global optimization. Eng. Appl. Artif. Intell. 2020, 90, 103541.

30. Teodorović, D. Bee colony optimization (BCO). In Innovations in Swarm Intelligence; Springer: Berlin/Heidelberg, Germany, 2009; pp. 39–60.

31. Passino, K.M. Bacterial foraging optimization. In Innovations and Developments of Swarm Intelligence Applications; IGI Global: Hershey, PA, USA, 2012; pp. 219–234.

32. Tereshko, V.; Loengarov, A. Collective decision making in honey-bee foraging dynamics. Comput. Inf. Syst. 2005, 9, 1.

33. Yazdani, D.; Meybodi, M.R. A novel artificial bee colony algorithm for global optimization. In Proceedings of the 2014 4th International Conference on Computer and Knowledge Engineering (ICCKE), Mashhad, Iran, 29–30 October 2014; pp. 443–448.

34. Pian, J.; Wang, G.; Li, B. An improved ABC algorithm based on initial population and neighborhood search. IFAC-PapersOnLine 2018, 51, 251–256.

35. Zervoudakis, K.; Tsafarakis, S. A mayfly optimization algorithm. Comput. Ind. Eng. 2020, 145, 106559.

36. Gao, Z.M.; Zhao, J.; Li, S.R.; Hu, Y.R. The improved mayfly optimization algorithm. J. Phys. Conf. Ser. 2020, 1684, 012077.

37. Johari, N.F.; Zain, A.M.; Noorfa, M.H.; Udin, A. Firefly algorithm for optimization problem. Appl. Mech. Mater. 2013, 421, 512–517.

38. Mirjalili, S. Dragonfly algorithm: A new meta-heuristic optimization technique for solving single-objective, discrete, and multi-objective problems. Neural Comput. Appl. 2016, 27, 1053–1073.

39. Gandomi, A.H.; Yang, X.S.; Alavi, A.H. Cuckoo search algorithm: A metaheuristic approach to solve structural optimization problems. Eng. Comput. 2013, 29, 17–35.

40. Mirjalili, S.Z.; Mirjalili, S.; Saremi, S.; Faris, H.; Aljarah, I. Grasshopper optimization algorithm for multi-objective optimization problems. Appl. Intell. 2018, 48, 805–820.

41. Mirjalili, S.; Lewis, A. The whale optimization algorithm. Adv. Eng. Softw. 2016, 95, 51–67.

42. Mirjalili, S.; Mirjalili, S.M.; Lewis, A. Grey wolf optimizer. Adv. Eng. Softw. 2014, 69, 46–61.

43. Rao, R. Jaya: A simple and new optimization algorithm for solving constrained and unconstrained optimization problems. Int. J. Ind. Eng. Comput. 2016, 7, 19–34.

44. Mirjalili, S. SCA: A sine cosine algorithm for solving optimization problems. Knowl.-Based Syst. 2016, 96, 120–133.

45. Berezin, F.A.; Shubin, M. The Schrödinger Equation; Springer Science & Business Media: Berlin/Heidelberg, Germany, 2012; Volume 66.

46. Shareef, H.; Ibrahim, A.A.; Mutlag, A.H. Lightning search algorithm. Appl. Soft Comput. 2015, 36, 315–333.

47. Heeren, T.; D’Agostino, R. Robustness of the two independent samples t-test when applied to ordinal scaled data. Stat. Med. 1987, 6, 79–90.

48. Potter, M.A.; De Jong, K.A. A cooperative coevolutionary approach to function optimization. In Proceedings of the International Conference on Parallel Problem Solving from Nature, Jerusalem, Israel, 9–14 October 1994; Springer: Berlin/Heidelberg, Germany, 1994; pp. 249–257.

49. Yang, Z.; Tang, K.; Yao, X. Large scale evolutionary optimization using cooperative coevolution. Inf. Sci. 2008, 178, 2985–2999.

50. Potter, M.A.; De Jong, K.A. Cooperative coevolution: An architecture for evolving coadapted subcomponents. Evol. Comput. 2000, 8, 1–29.

51. Chandra, R.; Ong, Y.S.; Goh, C.K. Co-evolutionary multi-task learning for dynamic time series prediction. Appl. Soft Comput. 2018, 70, 576–589.

52. Ma, X.; Li, X.; Zhang, Q.; Tang, K.; Liang, Z.; Xie, W.; Zhu, Z. A survey on cooperative co-evolutionary algorithms. IEEE Trans. Evol. Comput. 2018, 23, 421–441.

53. Bali, K.K.; Chandra, R. Multi-island competitive cooperative coevolution for real parameter global optimization. In Proceedings of the Neural Information Processing: 22nd International Conference, ICONIP 2015, Istanbul, Turkey, 9–12 November 2015; Proceedings Part III 22. Springer: Berlin/Heidelberg, Germany, 2015; pp. 127–136.

54. Bali, K.K.; Chandra, R. Scaling up multi-island competitive cooperative coevolution for real parameter global optimisation. In Proceedings of the AI 2015: Advances in Artificial Intelligence: 28th Australasian Joint Conference, Canberra, ACT, Australia, 30 November–4 December 2015; Proceedings 28. Springer: Berlin/Heidelberg, Germany, 2015; pp. 34–48.

55. Alba, E.; Tomassini, M. Parallelism and evolutionary algorithms. IEEE Trans. Evol. Comput. 2002, 6, 443–462.

56. Sudholt, D. Parallel evolutionary algorithms. In Springer Handbook of Computational Intelligence; Springer: Berlin/Heidelberg, Germany, 2015; pp. 929–959.

57. Das, S.; Abraham, A.; Konar, A. Particle swarm optimization and differential evolution algorithms: Technical analysis, applications and hybridization perspectives. In Advances of Computational Intelligence in Industrial Systems; Springer: Berlin/Heidelberg, Germany, 2008; pp. 1–38.

58. Fister, I.; Mernik, M.; Brest, J. Hybridization of evolutionary algorithms. arXiv 2013, arXiv:1301.0929.

59. Grosan, C.; Abraham, A. Hybrid evolutionary algorithms: Methodologies, architectures, and reviews. In Hybrid Evolutionary Algorithms; Springer: Berlin/Heidelberg, Germany, 2007; pp. 1–17.

60. Martínez-Estudillo, A.C.; Hervás-Martínez, C.; Martínez-Estudillo, F.J.; García-Pedrajas, N. Hybridization of evolutionary algorithms and local search by means of a clustering method. IEEE Trans. Syst. Man, Cybern. Part B (Cybernetics) 2006, 36, 534–545.

61. Squillero, G.; Tonda, A. Evolutionary algorithms and machine learning: Synergies, Challenges and Opportunities. In Proceedings of the GECCO 2020: Genetic and Evolutionary Computation Conference Companion, Cancún, Mexico, 8–12 July 2020; Association for Computing Machinery, Inc.: New York, NY, USA, 2020; pp. 1190–1205.

62. Ibrahim, O.A.S. Evolutionary Algorithms and Machine Learning Techniques for Information Retrieval. Ph.D. Thesis, University of Nottingham, Nottingham, UK, 2017.

63. Stanley, K.O.; Clune, J.; Lehman, J.; Miikkulainen, R. Designing neural networks through neuroevolution. Nat. Mach. Intell. 2019, 1, 24–35.

64. Jamil, M.; Yang, X.S. A literature survey of benchmark functions for global optimisation problems. Int. J. Math. Model. Numer. Optim. 2013, 4, 150–194.

65. Mahdavi, S.; Shiri, M.E.; Rahnamayan, S. Metaheuristics in large-scale global continues optimization: A survey. Inf. Sci. 2015, 295, 407–428.

66. Sörensen, K. Metaheuristics—The metaphor exposed. Int. Trans. Oper. Res. 2015, 22, 3–18.

67. Rajwar, K.; Deep, K.; Das, S. An exhaustive review of the metaheuristic algorithms for search and optimization: Taxonomy, applications, and open challenges. Artif. Intell. Rev. 2023, 56, 13187–13257.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).