Abstract

This paper proposes a new optimization algorithm for backpropagation (BP) neural networks that fuses integer-order and fractional-order differentiation. Although fractional-order differentiation has significant advantages in describing complex phenomena with long-term memory effects and nonlocality, its application in neural networks is often limited by a lack of physical interpretability and by inconsistencies with traditional models. To address these challenges, we propose a mixed integer-fractional (MIF) gradient descent algorithm for training neural networks. We also provide a detailed convergence analysis of the proposed algorithm. Finally, numerical experiments show that the new gradient descent algorithm not only speeds up the convergence of BP neural networks but also increases their classification accuracy.

1. Introduction

Integer-order differentiation is a powerful tool for solving problems involving rates of change. Originating in the work of Newton and Leibniz, it has evolved into a fundamental branch of calculus. It also has several desirable characteristics, including ease of understanding, reliable numerical stability, and physical solvability, which make it highly applicable in real-world situations [1].

Meanwhile, fractional-order differentiation is an extension of integer-order differentiation that can describe more complex phenomena, such as long-term memory effects and nonlocality [2]. In the 1960s, it was first used in the study of heat conduction and diffusion to describe how heat energy moves through materials [3]. Over time, fractional-order differentiation spread to numerous other fields. In recent years, many researchers have built physical models based on fractional-order differentiation that yield high-quality approximate solutions. For example, Matlob et al. [4] examined the application of fractional-order differential calculus to modeling viscoelastic systems. Dehestani et al. [5] introduced fractional-order Legendre–Laguerre functions and explored their applications in solving fractional partial differential equations. Yuxiao et al. [6] discussed a variable-order fractional grey model. Kuang et al. [7] applied fractional-order variable-gain supertwisting control to stabilize and control the wafer stages of photolithography systems. Liu et al. [8] presented a two-stage fractional dynamical system model for microbial batch processes. In addition, many optimal control problems involve fractional-order differentiation. For example, Wang et al. [9] established necessary optimality conditions and exact penalization techniques for constrained fractional optimal control problems. Bhrawy et al. [10] solved fractional optimal control problems using a Chebyshev–Legendre operational technique. Saxena [11] presented a load frequency control strategy using a fractional-order controller combined with reduced-order modeling. Mohammadi et al. [12] used Caputo–Fabrizio fractional modeling to analyze hearing loss due to the Mumps virus.

The emergence of neural networks has greatly improved our ability to analyze and process huge volumes of data and deal with nonlinearity [13]. The concept of neural networks originated in the 1950s when people first used computers to simulate human thinking processes. The earliest neural network had a simple structure comprising neuron models and connection weights. With advancements in computer technology and data processing, neural network research experienced a breakthrough in the 1990s. During that period, the backpropagation algorithm and deep learning were introduced to enhance the performance of neural networks [14].

In recent years, with the rapid development of technologies such as big data, high-performance computing, and deep learning, fractional-order differentiation has found wide application in neural networks [15]. For example, Xu et al. [16] developed a fractional-order neural network model with multiple delays, analyzed its characteristic equations using two different delays as bifurcation parameters, and demonstrated the impact of the fractional order on stability. Pakdaman et al. [17] used optimization methods to adjust the weights of artificial neural networks in order to solve various fractional-order equations, including equations with linear and nonlinear terms, ensuring that the approximate solutions satisfy the fractional-order equations. Asgharnia et al. [18] employed a radial basis function neural network-based fractional-order PID controller, resulting in improved performance and robustness. Further evidence that fractional-order differentiation contributes to neural networks and produces desirable results can be found in the works of Fei et al. [19], Zhang et al. [20], and Cao et al. [21]. Compared with integer-order models, these fractional-order neural networks exhibit high accuracy, fast convergence, and efficient memory usage. For BP neural networks, Bao et al. [22] and Han et al. [23] applied Caputo and Grünwald–Letnikov fractional-order differentiation, respectively, to investigate convergence performance, and achieved better results than with integer-order differentiation. However, relying solely on fractional-order differentiation often lacks a clear physical interpretation and can be inconsistent with many classical models.
It is therefore worth studying further how to integrate the advantages of integer-order and fractional-order differentiation in the gradient descent learning of neural networks, so as to improve their performance on classification problems; this is the motivation for the present research.

The main contribution of this paper is to incorporate fractional-order differentiation, which can describe memory effects and complex dynamic behavior, into the traditional integer-order gradient descent process, and to construct a novel BP neural network trained with the proposed MIF optimization algorithm. Experimental results demonstrate that the new neural network combines the advantages of both integer-order and fractional-order differentiation.

The remainder of this paper proceeds as follows: Section 2 proposes the novel MIF optimization algorithm for BP neural networks; this is followed by the corresponding convergence analysis in Section 3. Next, Section 4 illustrates the numerical experiments and the result analysis. Finally, Section 5 provides concluding remarks.

2. Method

2.1. Network Structure

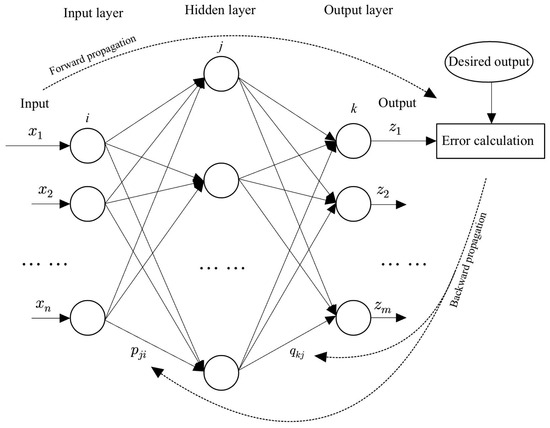

We employ a three-layer BP neural network, as illustrated in Figure 1. The input layer consists of n neurons, the hidden layer contains h neurons, and the output layer has m neurons. The network performs both forward propagation and backward propagation; forward propagation is the process by which the network learns features of the data. For a given sample, the input layer, the hidden layer, and the output layer each have a variable vector, and the sample has a desired output vector. Two weight matrices connect the layers: one between the input layer and the hidden layer, and one between the hidden layer and the output layer. The input and output of the neurons in the hidden layer are described by

and

where f is an activation function. The input and output of the output layer are given by

and

This yields the output of the neural network in every iteration. Now, consider a total of t input samples. For the d-th sample (d = 1, 2, …, t), the neural network model automatically corrects both weight matrices. The total error used to evaluate the network's performance is formulated as

where

and

The error computation above constitutes the forward propagation of the neural network model, and updating the weights by gradient descent on this error constitutes backpropagation. For the error at the k-th output neuron, the chain rule gives the partial derivative of the total error with respect to the hidden-to-output weights as

Similarly, the partial derivative of the total error with respect to the input-to-hidden weights is expressed by

Both weight matrices are then updated by

and

where l is the iteration number and η is the learning rate. The updated weights complete the backward propagation and are used in the next iteration.

Figure 1.

A three-layer BP neural network architecture.
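The forward pass, total error, chain-rule gradients, and gradient-descent update described in Section 2.1 can be sketched in NumPy as follows. The names V (input-to-hidden weights) and W (hidden-to-output weights), the squared-error form 0.5 · Σ_d Σ_k (d_k − y_k)², the Sigmoid activation, and the omission of bias terms are assumptions of this sketch, not necessarily the paper's exact formulation.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def forward(X, V, W):
    """Forward propagation for a batch X of shape (t, n).
    V: (n, h) input-to-hidden weights; W: (h, m) hidden-to-output weights."""
    H = sigmoid(X @ V)   # hidden-layer outputs, shape (t, h)
    Y = sigmoid(H @ W)   # output-layer outputs, shape (t, m)
    return H, Y


def total_error(X, D, V, W):
    """Total squared error over all t samples: 0.5 * sum_d sum_k (d_k - y_k)^2."""
    _, Y = forward(X, V, W)
    return 0.5 * float(np.sum((D - Y) ** 2))


def gradients(X, D, V, W):
    """Backpropagation: partial derivatives of the total error via the chain rule."""
    H, Y = forward(X, V, W)
    delta_out = (Y - D) * Y * (1.0 - Y)            # dE/d(output-layer input), (t, m)
    grad_W = H.T @ delta_out                        # dE/dW, (h, m)
    delta_hid = (delta_out @ W.T) * H * (1.0 - H)   # dE/d(hidden-layer input), (t, h)
    grad_V = X.T @ delta_hid                        # dE/dV, (n, h)
    return grad_V, grad_W


# Small stand-in sizes (the experiments in Section 4 use a 784-50-10 network).
rng = np.random.default_rng(0)
n, h, m, t = 4, 3, 2, 5
X = rng.random((t, n))
D = rng.random((t, m))
V = rng.normal(scale=0.5, size=(n, h))
W = rng.normal(scale=0.5, size=(h, m))

eta = 0.1                                          # learning rate
E_before = total_error(X, D, V, W)
grad_V, grad_W = gradients(X, D, V, W)
V_new, W_new = V - eta * grad_V, W - eta * grad_W  # integer-order gradient-descent update
E_after = total_error(X, D, V_new, W_new)
print(E_before, E_after)
```

A central finite-difference check on a single weight is a quick way to confirm that the chain-rule gradients match the error function they are derived from.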

2.2. Fractional Parameter Update

To help the neural network avoid becoming trapped in local optima during training, we incorporate a fractional-derivative parameter update method into the network [24]. In the existing literature, the Riemann–Liouville, Caputo, and Grünwald–Letnikov fractional derivatives are the three most commonly used types of fractional-order derivatives.

Definition 1.

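The Riemann–Liouville fractional-order derivative of f(x) is defined as

$$
{}_{a}^{RL}D_{x}^{\alpha}f(x)=\frac{1}{\Gamma(n-\alpha)}\frac{d^{n}}{dx^{n}}\int_{a}^{x}\frac{f(t)}{(x-t)^{\alpha-n+1}}\,dt,
$$

where α is a positive real number, n is the smallest integer greater than α (so that n − 1 ≤ α < n), and Γ(·) is the Gamma function.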

Definition 2.

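The Caputo fractional-order derivative of f(x) is defined as

$$
{}_{a}^{C}D_{x}^{\alpha}f(x)=\frac{1}{\Gamma(n-\alpha)}\int_{a}^{x}\frac{f^{(n)}(t)}{(x-t)^{\alpha-n+1}}\,dt,
$$

where α is a positive real number and n − 1 < α < n.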

Definition 3.

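The Grünwald–Letnikov fractional-order derivative of f(x) is defined as

$$
{}_{a}^{GL}D_{x}^{\alpha}f(x)=\lim_{h\to 0}\frac{1}{h^{\alpha}}\sum_{j=0}^{\left\lfloor (x-a)/h\right\rfloor}(-1)^{j}\binom{\alpha}{j}f(x-jh),
$$

where α is a positive real number.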
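The Grünwald–Letnikov form suggests a direct numerical approximation: truncate the limit at a small step h. The sketch below is our own illustration (the function name `gl_derivative` and the choice N = 10,000 steps are arbitrary). It checks the approximation against the known closed form x^(1−α)/Γ(2−α) for f(x) = x with lower terminal a = 0, where the Riemann–Liouville, Caputo, and Grünwald–Letnikov derivatives coincide because f(0) = 0 and 0 < α < 1.

```python
import math


def gl_derivative(f, x, alpha, a=0.0, N=10_000):
    """Truncated Grünwald–Letnikov approximation of the order-alpha derivative
    of f at x with lower terminal a, using N steps of size h = (x - a) / N."""
    h = (x - a) / N
    coeff = 1.0                                # (-1)^0 * binom(alpha, 0)
    total = coeff * f(x)
    for j in range(1, N + 1):
        coeff *= 1.0 - (alpha + 1.0) / j       # gives (-1)^j * binom(alpha, j)
        total += coeff * f(x - j * h)
    return total / h ** alpha


alpha = 0.5
approx = gl_derivative(lambda s: s, 1.0, alpha)
exact = 1.0 ** (1 - alpha) / math.gamma(2 - alpha)   # closed form for f(x) = x, a = 0
print(approx, exact)
```

For α = 1 the coefficients reduce to 1 and −1, so the sum becomes the backward difference (f(x) − f(x − h))/h, which recovers the ordinary first derivative as h → 0.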

We employ Caputo fractional-order differentiation in this paper for the following reasons. (1) The Caputo derivative has a clearer physical meaning: it can be regarded as first applying integer-order differentiation to f and then integrating the result with a weight. This weight can be viewed as a “memory effect”, in which the value of the function at time x is influenced by its values at earlier times t, with the influence decreasing as the time interval x − t grows [25]. (2) Initial conditions for the Caputo derivative take the same form as for integer-order derivatives: the initial value problem of a Caputo fractional-order equation is posed with conditions $f^{(k)}(a) = b_k$, $k = 0, 1, \dots, n-1$, all of which involve only integer-order derivatives. Since these initial values are known and no complicated fractional-order derivatives need to be computed, this greatly simplifies the solution of the problem [26]. (3) Compared with the other two definitions, the Caputo derivative greatly reduces the amount of computation when applied to neural networks. It is also closer to the integer-order derivative, which makes it more appropriate for the error calculation process of neural networks.
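Reason (2) can be made concrete with a standard textbook identity (added here for illustration, not taken from the paper). For n − 1 < α < n and a function f with n continuous derivatives, writing RL and C for the Riemann–Liouville and Caputo derivatives with lower terminal a:

$$
{}_{a}^{RL}D_{x}^{\alpha}f(x)={}_{a}^{C}D_{x}^{\alpha}f(x)+\sum_{k=0}^{n-1}\frac{f^{(k)}(a)}{\Gamma(k-\alpha+1)}\,(x-a)^{k-\alpha}.
$$

The correction terms involve only the integer-order initial values f^{(k)}(a). In particular, the Caputo derivative of a constant K is zero, as for an integer-order derivative, whereas its Riemann–Liouville derivative is K(x − a)^{−α}/Γ(1 − α).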

To reduce the computational complexity of the neural network, this paper uses a power function and computes its fractional derivative. For the power function, its n-th derivative is

Substituting this into the Caputo derivative in Definition 2 yields

This integral can be further simplified using the properties of the Gamma function. For the choice made here, p is an integer and the integration becomes relatively simple. We finally obtain the specific form of the fractional derivative of the power function used in this paper
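For reference, the Caputo derivative of a power function can be worked out in closed form. This illustration assumes f(x) = (x − c)^p with p > 0, lower terminal c, and 0 < α < 1 (so n = 1); these are assumptions of the illustration and may differ from the paper's exact choice. Substituting t = c + s(x − c) and using the Beta function B(p, 1 − α) = Γ(p)Γ(1 − α)/Γ(p + 1 − α):

$$
\begin{aligned}
{}_{c}^{C}D_{x}^{\alpha}(x-c)^{p}
&=\frac{1}{\Gamma(1-\alpha)}\int_{c}^{x}\frac{p\,(t-c)^{p-1}}{(x-t)^{\alpha}}\,dt
=\frac{p\,(x-c)^{p-\alpha}}{\Gamma(1-\alpha)}\int_{0}^{1}s^{p-1}(1-s)^{-\alpha}\,ds\\
&=\frac{p\,B(p,1-\alpha)}{\Gamma(1-\alpha)}\,(x-c)^{p-\alpha}
=\frac{\Gamma(p+1)}{\Gamma(p+1-\alpha)}\,(x-c)^{p-\alpha}.
\end{aligned}
$$

For p = 1 this gives (x − c)^{1−α}/Γ(2 − α), which, to our understanding, is the factor that fractional-order gradient methods for BP networks commonly multiply into the integer-order gradient.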

Therefore, the gradient is calculated by

Finally, combining the fractional-order gradient with the integer-order gradient according to Formulas (1)–(18), we obtain the weight updates of the neural network model

where τ is a parameter greater than zero.
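A minimal sketch of how an integer-order gradient and a Caputo-type fractional gradient might be combined in one weight update. It assumes that the fractional gradient is approximated by ∂E/∂w · |w − c|^(1−α)/Γ(2 − α) (the p = 1 power-function result), that the mixed step is ∂E/∂w plus τ times the fractional gradient, and that c is a fixed lower terminal. All three are assumptions for illustration and may differ from the paper's update; the function names are hypothetical.

```python
import math
import numpy as np


def fractional_scale(w, c, alpha):
    """Caputo derivative of (w - c) with respect to w, elementwise:
    |w - c|^(1 - alpha) / Gamma(2 - alpha), intended for 0 < alpha < 1.
    Using |w - c| to keep the power real is an assumption of this sketch."""
    return np.abs(w - c) ** (1.0 - alpha) / math.gamma(2.0 - alpha)


def weight_update(w, grad, c, eta, alpha, tau, mode="mif"):
    """One update of a weight array under the three schemes (assumed forms).

    grad -- integer-order gradient dE/dw
    c    -- lower terminal of the Caputo derivative (hypothetical choice)
    mode -- 'bp' (integer order), 'fbp' (fractional order) or 'mif' (mixed)
    """
    frac_grad = grad * fractional_scale(w, c, alpha)  # fractional-order gradient
    if mode == "bp":
        step = grad
    elif mode == "fbp":
        step = frac_grad
    elif mode == "mif":
        step = grad + tau * frac_grad                 # hypothetical tau-weighted mix
    else:
        raise ValueError(f"unknown mode: {mode}")
    return w - eta * step


w = np.array([0.5, -1.0])
grad = np.array([0.2, -0.4])
c = np.zeros_like(w)
w_mif = weight_update(w, grad, c, eta=0.1, alpha=0.5, tau=1.0)
print(w_mif)
```

With τ = 0 the mixed rule reduces to plain gradient descent, so the integer-order BP update is a special case of this sketch.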

2.3. Algorithms

This subsection presents the proposed MIFBP neural network (MIFBPNN) algorithm. To demonstrate its advantages, we compare it with the BP neural network (BPNN) and fractional-order BP neural network (FBPNN) algorithms. The three algorithms are given in Algorithms 1–3.

Algorithm 1. BPNN

Algorithm 2. FBPNN

Algorithm 3. MIFBPNN
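To see the three update rules side by side, the following self-contained sketch trains a tiny Sigmoid network on the XOR problem with each rule. The data, network size (two inputs plus a constant bias input, four hidden neurons, one output), η = 0.5, α = 0.9, τ = 1, and the lower terminal c = 0 are arbitrary illustrative choices. The fractional step is assumed to be ∂E/∂w · |w − c|^(1−α)/Γ(2 − α), and the mixed step adds τ times this to ∂E/∂w; this is not a reproduction of Algorithms 1–3.

```python
import math
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def frac_scale(w, c, alpha):
    # Caputo derivative of (w - c) w.r.t. w: |w - c|^(1 - alpha) / Gamma(2 - alpha).
    # Taking |w - c| (to keep the power real) is an assumption of this sketch.
    return np.abs(w - c) ** (1.0 - alpha) / math.gamma(2.0 - alpha)


def train(mode, epochs=2000, eta=0.5, alpha=0.9, tau=1.0, seed=0):
    """Full-batch training on XOR with update rule 'bp', 'fbp' or 'mif'
    (assumed forms); returns the total error recorded at each epoch."""
    rng = np.random.default_rng(seed)
    # The third input column is a constant 1 that acts as a hidden-layer bias.
    X = np.array([[0, 0, 1], [0, 1, 1], [1, 0, 1], [1, 1, 1]], dtype=float)
    D = np.array([[0], [1], [1], [0]], dtype=float)
    V = rng.normal(size=(3, 4))                     # input-to-hidden weights
    W = rng.normal(size=(4, 1))                     # hidden-to-output weights
    c_V, c_W = np.zeros_like(V), np.zeros_like(W)   # hypothetical fixed lower terminal c = 0
    losses = []
    for _ in range(epochs):
        # Forward propagation and total squared error.
        H = sigmoid(X @ V)
        Y = sigmoid(H @ W)
        losses.append(0.5 * float(np.sum((D - Y) ** 2)))
        # Backward propagation: integer-order gradients via the chain rule.
        delta_out = (Y - D) * Y * (1.0 - Y)
        delta_hid = (delta_out @ W.T) * H * (1.0 - H)
        grads = [(V, X.T @ delta_hid, c_V), (W, H.T @ delta_out, c_W)]
        for w, g, c in grads:
            if mode == "bp":
                step = g
            elif mode == "fbp":
                step = g * frac_scale(w, c, alpha)
            elif mode == "mif":
                step = g + tau * g * frac_scale(w, c, alpha)
            else:
                raise ValueError(f"unknown mode: {mode}")
            w -= eta * step                         # in-place update of V or W
    return losses


for mode in ("bp", "fbp", "mif"):
    losses = train(mode)
    print(f"{mode}: initial error {losses[0]:.4f}, final error {losses[-1]:.4f}")
```

Because both fractional factors are nonnegative, each rule moves every weight in the negative-gradient direction; the rules differ only in how the step is rescaled elementwise.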

3. Convergence Analysis

In this section, we prove the convergence of the MIFBPNN under the following assumptions.

Assumption 1.

The activation function f is a Sigmoid function.

Assumption 2.

Both weight matrices remain bounded during the MIFBPNN training process.

Assumption 3.

The learning rate η and the parameter τ are positive and bounded above.

We have the following main results.

Theorem 1.

Suppose that Assumptions 1–3 hold. Then the MIFBPNN training process is convergent.

Proof of Theorem 1.

The proof consists of two parts. First, we prove that the sequence of error function values is monotonic; then we show that it is bounded. To examine the monotonicity of the sequence, we compute

According to the Taylor expansion formula and (21), we obtain

where the Lagrange intermediate point lies between the expansion point and the evaluation point.

Moreover, we see that

where and

Then, substituting (22) into (21) yields

Applying the Taylor expansion formula again, we obtain

where, as before, the Lagrange intermediate point lies between the expansion point and the evaluation point. According to (20), (23), and (24), we have

where

For simplicity, we define

From (17), we obtain

Furthermore, it follows from (26), (27) and (32) that

where and

By using (2), (3), (18) and (19), we have

and

where

Thus, we obtain

where .

Next, let

where the vector is formed by stacking all weight parameters into a single column. Then, from (33)–(42), we obtain

Moreover, (24), (43) and (44) imply

Hence, from (27)–(46), we obtain

We note from (45) that

Using (46)–(52), we can rewrite this as follows:

Therefore, the error function sequence is monotonically decreasing. Next, since

we obtain the following condition on the learning rate:

We now prove that this sequence is also bounded. Let

According to (53)–(56) we obtain
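The two parts of the proof combine through the monotone convergence theorem. Writing E^l for the total error after the l-th iteration and \underline{E} for the lower bound (our notation, assumed for this summary):

$$
E^{l+1}\le E^{l}\ \text{and}\ E^{l}\ge\underline{E}\ \text{for all}\ l\ge 0
\quad\Longrightarrow\quad
\lim_{l\to\infty}E^{l}=E^{*}\ \text{exists, with}\ E^{*}\ge\underline{E}.
$$

For a sum-of-squares error, \underline{E} = 0 can be taken. This conclusion concerns the sequence of error values; convergence of the weight sequence itself would require a separate argument.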

4. Numerical Experiments

4.1. Experiment Preparation

In this section, we use the MNIST handwritten digit dataset to evaluate the efficiency of the MIFBPNN, and we run comparison experiments with the BPNN and FBPNN. The MNIST dataset contains 70,000 images of handwritten digits from 0 to 9; each image is 28 × 28 pixels and is represented as a 784 × 1 vector whose elements take values from 0 to 255. Based on these characteristics, we build a 784-50-10 neural network model (n = 784, h = 50, m = 10). In our experiments, a hidden layer of 50 neurons achieved high accuracy on the test set while greatly reducing the computational cost of the model. The activation function is the Sigmoid function, $f(x) = 1/(1 + e^{-x})$. We divide the dataset into 60,000 images for training and the remaining 10,000 images for testing. Convergence of a neural network does not mean that the network has completely learned all the features and patterns of the dataset; rather, it means that the network has reached a relatively stable state. To study this stable state, we use the following stopping condition: training stops when the test set accuracy grows by no more than 0.001 over 10 epochs. The number of epochs then indicates when the network reaches a stable state, which allows a better evaluation of the network. (The experiments were run on a PC running Windows, with an i7-11657G7 CPU and an M40 GPU.)
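The stopping condition admits more than one reading. This sketch assumes that test accuracy is checked every 10 epochs and compared with the previous check; the function name `stopping_epoch` and the accuracy values in the usage example are made up for illustration.

```python
def stopping_epoch(test_acc, window=10, min_gain=0.001):
    """Return the epoch at which training stops, or None if the rule never fires.

    test_acc[i] is the test-set accuracy (as a fraction) after epoch i + 1.
    Accuracy is checked every `window` epochs, and training stops at the first
    check where it has grown by no more than `min_gain` since the previous check.
    """
    checkpoints = test_acc[window - 1::window]   # accuracy after epochs 10, 20, 30, ...
    for k in range(1, len(checkpoints)):
        if checkpoints[k] - checkpoints[k - 1] <= min_gain:
            return (k + 1) * window
    return None


history = [0.90] * 10 + [0.95] * 10 + [0.9505] * 10   # made-up accuracy curve
print(stopping_epoch(history))  # prints 30
```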

4.2. Optimal Parameters Tuning

To compare the three models fairly, we use the same parameter settings for each model wherever they apply. The proposed MIFBPNN has three parameters: the learning rate η, the fractional order α, and the parameter τ. We first determined η from the BP neural network training results in Table 1 and α from the FBPNN training results in Table 2. We then determined τ from the MIFBPNN training results in Table 3.
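The tuning order described above amounts to a coordinate-wise search. A minimal sketch follows; `evaluate` is a hypothetical callback (train the named model, return its test accuracy), and the candidate grids and the toy scoring function are made up for illustration rather than taken from Tables 1–3.

```python
def tune_sequentially(evaluate, etas, alphas, taus):
    """Coordinate-wise parameter search following the order described above.

    evaluate(model, eta, alpha, tau) is a hypothetical callback that trains the
    named model ('bp', 'fbp' or 'mif') and returns its test-set accuracy;
    parameters a model does not use are passed as None.
    """
    # Step 1 (cf. Table 1): choose the learning rate for the plain BP network.
    eta = max(etas, key=lambda e: evaluate("bp", e, None, None))
    # Step 2 (cf. Table 2): fix eta and choose the fractional order for the FBPNN.
    alpha = max(alphas, key=lambda a: evaluate("fbp", eta, a, None))
    # Step 3 (cf. Table 3): fix eta and alpha and choose tau for the MIFBPNN.
    tau = max(taus, key=lambda t: evaluate("mif", eta, alpha, t))
    return eta, alpha, tau


def toy_accuracy(model, eta, alpha, tau):
    # Made-up smooth score peaking at eta=0.1, alpha=0.7, tau=0.5 (illustration only).
    score = 0.9 - (eta - 0.1) ** 2
    if alpha is not None:
        score -= (alpha - 0.7) ** 2
    if tau is not None:
        score -= (tau - 0.5) ** 2
    return score


best = tune_sequentially(toy_accuracy, [0.01, 0.1, 1.0], [0.5, 0.7, 0.9], [0.1, 0.5, 1.0])
print(best)  # (0.1, 0.7, 0.5)
```

Fixing earlier parameters before tuning later ones keeps the number of training runs additive rather than multiplicative, at the cost of possibly missing interactions between η, α, and τ.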

Table 1.

Test set accuracy of the BP neural network with various values of the learning rate η.

Table 2.

Test set accuracy of the FBPNN with a fixed learning rate η and various values of the fractional order α.

Table 3.

Test set accuracy of the MIFBPNN with fixed η and α and various values of τ.

4.3. Training for Different Training Sets’ Sizes

A larger training set provides more samples for the model to learn from, which can help improve its accuracy and generalizability. Here, we investigate the performance of the three neural networks with different training set sizes. The results in Table 4 show that a larger training set helps the model better capture patterns and features in the data.

Table 4.

Performance of different neural networks under optimal hyperparameters on various sizes of training sets.

Table 4 shows that, in terms of training accuracy and the number of epochs before training stops, the MIFBPNN performs best on training sets of all sizes, followed by the FBPNN. Notably, the change in the MIFBPNN's accuracy between training sets of 10,000 and 60,000 samples was the smallest, followed by that of the FBPNN. This suggests that the MIFBPNN generalizes better and has a stronger capacity for convergence and learning while maintaining high accuracy.

4.4. Training Performances of Different Neural Networks

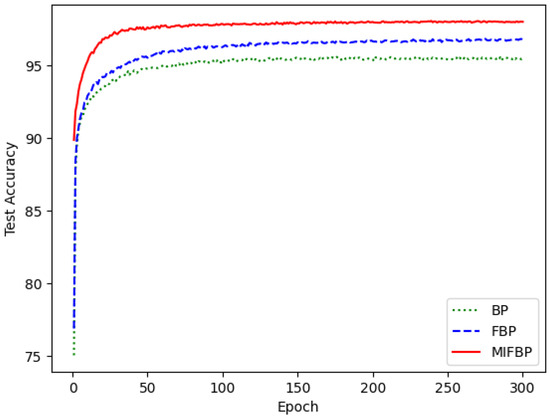

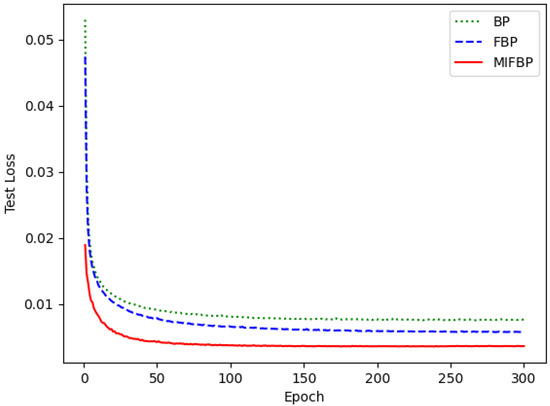

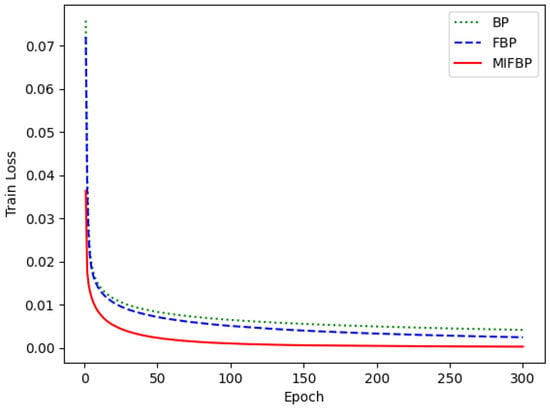

In this subsection, we demonstrate the performance of the three neural networks using the optimal parameters and a training dataset of 60,000. Figure 2 and Figure 3 depict the three neural networks’ test accuracy and test set loss performance, respectively, and Figure 4 depicts the three neural networks’ training set loss performance.

Figure 2.

Test accuracy performance.

Figure 3.

Test loss performance.

Figure 4.

Training loss performance.

As Figure 2 shows, the MIFBPNN has the highest test accuracy, followed by the FBPNN. In terms of convergence speed, the accuracy of the MIFBPNN and the FBPNN reaches a relatively stable state after about 50 and 80 epochs, respectively, whereas the BP neural network needs about 120 epochs. As Figure 3 and Figure 4 show, the MIFBPNN has the smallest loss and reaches its minimum loss fastest on both the training set and the test set.

Based on the above experimental results, we conclude that the MIFBPNN achieves high accuracy and fast convergence across various training set sizes and experimental conditions. In particular, on the largest training set (60,000 samples), the MIFBPNN not only achieved the highest accuracy (97.64%) but also required fewer training epochs (71) than the other two networks, demonstrating its strong learning and generalization abilities. Furthermore, the figures show that the MIFBPNN outperforms the BPNN and FBPNN in test accuracy, test loss, and training loss. In particular, the MIFBPNN reaches lower loss values faster, which is valuable for optimization and efficiency in practical applications. The MIFBPNN also performed well in the parameter tuning experiments: with η and α fixed and τ varied, the network performed best at the τ value identified in Table 3, with an accuracy of 97.64%. This result demonstrates the advantage of the MIFBPNN with respect to parameter sensitivity and highlights its flexibility and efficiency in practical applications.

However, this work has limitations: the MIF algorithm is applied only to the BPNN, and the parameters are tuned manually. Future work could apply the MIF algorithm to other neural networks and use heuristic algorithms to tune η, α, and τ. In addition, this article does not analyze the computational cost of the MIFBPNN. These issues are worth exploring in future work.

5. Conclusions

In this paper, we develop a new MIF gradient descent algorithm and use it to construct the MIFBP neural network. Our experiments demonstrate the superior performance of the MIFBP neural network on the standard MNIST handwritten digit dataset. For various training set sizes, the MIFBPNN outperforms the conventional BPNN and the FBPNN in accuracy, convergence speed, and generalization. In addition, under optimal parameter settings, the MIFBPNN achieves the highest accuracy and the lowest loss in both training and testing. The MIFBPNN has shown good classification performance on the MNIST dataset and may be applied to challenging pattern recognition and prediction tasks in various fields in the future.

Author Contributions

Y.Z.: conceptualization, methodology, software, validation, formal analysis, investigation, and writing—original draft preparation. H.X.: conceptualization, methodology, writing—review and editing, supervision, and funding acquisition. Y.L.: methodology, project administration, and funding acquisition. G.L.: writing—review and editing, and validation. L.Z.: methodology, formal analysis, and investigation. C.T.: methodology, software, and validation. Y.W.: conceptualization and supervision. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by an Australian Research Council linkage grant (LP160100528), an innovative connection grant (ICG002517), and an industry grant (SE67504).

Data Availability Statement

The raw data supporting the conclusions of this article will be made available by the authors on request.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Edwards, C.J. The Historical Development of the Calculus; Springer Science & Business Media: Berlin/Heidelberg, Germany, 2012.

- Oldham, K.; Spanier, J. The Fractional Calculus Theory and Applications of Differentiation and Integration to Arbitrary Order; Academic Press: Cambridge, MA, USA, 1974.

- Hahn, D.W.; Özisik, M.N. Heat Conduction; John Wiley & Sons: Hoboken, NJ, USA, 2012.

- Matlob, M.A.; Jamali, Y. The concepts and applications of fractional order differential calculus in modeling of viscoelastic systems: A primer. Crit. Rev. Biomed. Eng. 2019, 47, 249–276.

- Dehestani, H.; Ordokhani, Y.; Razzaghi, M. Fractional-order Legendre–Laguerre functions and their applications in fractional partial differential equations. Appl. Math. Comput. 2018, 336, 433–453.

- Yuxiao, K.; Shuhua, M.; Yonghong, Z. Variable order fractional grey model and its application. Appl. Math. Model. 2021, 97, 619–635.

- Kuang, Z.; Sun, L.; Gao, H.; Tomizuka, M. Practical fractional-order variable-gain supertwisting control with application to wafer stages of photolithography systems. IEEE/ASME Trans. Mechatronics 2021, 27, 214–224.

- Liu, C.; Yi, X.; Feng, Y. Modelling and parameter identification for a two-stage fractional dynamical system in microbial batch process. Nonlinear Anal. Model. Control 2022, 27, 350–367.

- Wang, S.; Li, W.; Liu, C. On necessary optimality conditions and exact penalization for a constrained fractional optimal control problem. Optim. Control Appl. Methods 2022, 43, 1096–1108.

- Bhrawy, A.H.; Ezz-Eldien, S.S.; Doha, E.H.; Abdelkawy, M.A.; Baleanu, D. Solving fractional optimal control problems within a Chebyshev–Legendre operational technique. Int. J. Control 2017, 90, 1230–1244.

- Saxena, S. Load frequency control strategy via fractional-order controller and reduced-order modeling. Int. J. Electr. Power Energy Syst. 2019, 104, 603–614.

- Mohammadi, H.; Kumar, S.; Rezapour, S.; Etemad, S. A theoretical study of the Caputo–Fabrizio fractional modeling for hearing loss due to Mumps virus with optimal control. Chaos Solitons Fractals 2021, 144, 110668.

- Aldrich, C. Exploratory Analysis of Metallurgical Process Data with Neural Networks and Related Methods; Elsevier: Amsterdam, The Netherlands, 2002.

- Bhattacharya, U.; Parui, S.K. Self-adaptive learning rates in backpropagation algorithm improve its function approximation performance. In Proceedings of the ICNN’95-International Conference on Neural Networks, Perth, WA, Australia, 27 November–1 December 1995; IEEE: Piscataway, NJ, USA, 1995; Volume 5, pp. 2784–2788.

- Niu, H.; Chen, Y.; West, B.J. Why do big data and machine learning entail the fractional dynamics? Entropy 2021, 23, 297.

- Xu, C.; Liao, M.; Li, P.; Guo, Y.; Xiao, Q.; Yuan, S. Influence of multiple time delays on bifurcation of fractional-order neural networks. Appl. Math. Comput. 2019, 361, 565–582.

- Pakdaman, M.; Ahmadian, A.; Effati, S.; Salahshour, S.; Baleanu, D. Solving differential equations of fractional order using an optimization technique based on training artificial neural network. Appl. Math. Comput. 2017, 293, 81–95.

- Asgharnia, A.; Jamali, A.; Shahnazi, R.; Maheri, A. Load mitigation of a class of 5-MW wind turbine with RBF neural network based fractional-order PID controller. ISA Trans. 2020, 96, 272–286.

- Fei, J.; Wang, H.; Fang, Y. Novel neural network fractional-order sliding-mode control with application to active power filter. IEEE Trans. Syst. Man Cybern. Syst. 2021, 52, 3508–3518.

- Zhang, Y.; Xiao, M.; Cao, J.; Zheng, W.X. Dynamical bifurcation of large-scale-delayed fractional-order neural networks with hub structure and multiple rings. IEEE Trans. Syst. Man Cybern. Syst. 2020, 52, 1731–1743.

- Cao, J.; Stamov, G.; Stamova, I.; Simeonov, S. Almost periodicity in impulsive fractional-order reaction–diffusion neural networks with time-varying delays. IEEE Trans. Cybern. 2020, 51, 151–161.

- Bao, C.; Pu, Y.; Zhang, Y. Fractional-order deep backpropagation neural network. Comput. Intell. Neurosci. 2018, 2018, 7361628.

- Han, X.; Dong, J. Applications of fractional gradient descent method with adaptive momentum in BP neural networks. Appl. Math. Comput. 2023, 448, 127944.

- Prasad, K.; Krushna, B. Positive solutions to iterative systems of fractional order three-point boundary value problems with Riemann–Liouville derivative. Fract. Differ. Calc. 2015, 5, 137–150.

- Hymavathi, M.; Ibrahim, T.F.; Ali, M.S.; Stamov, G.; Stamova, I.; Younis, B.; Osman, K.I. Synchronization of fractional-order neural networks with time delays and reaction-diffusion terms via pinning control. Mathematics 2022, 10, 3916.

- Wu, Z.; Zhang, X.; Wang, J.; Zeng, X. Applications of fractional differentiation matrices in solving Caputo fractional differential equations. Fractal Fract. 2023, 7, 374.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).