Enhancing Program Synthesis with Large Language Models Using Many-Objective Grammar-Guided Genetic Programming

Abstract

:1. Introduction

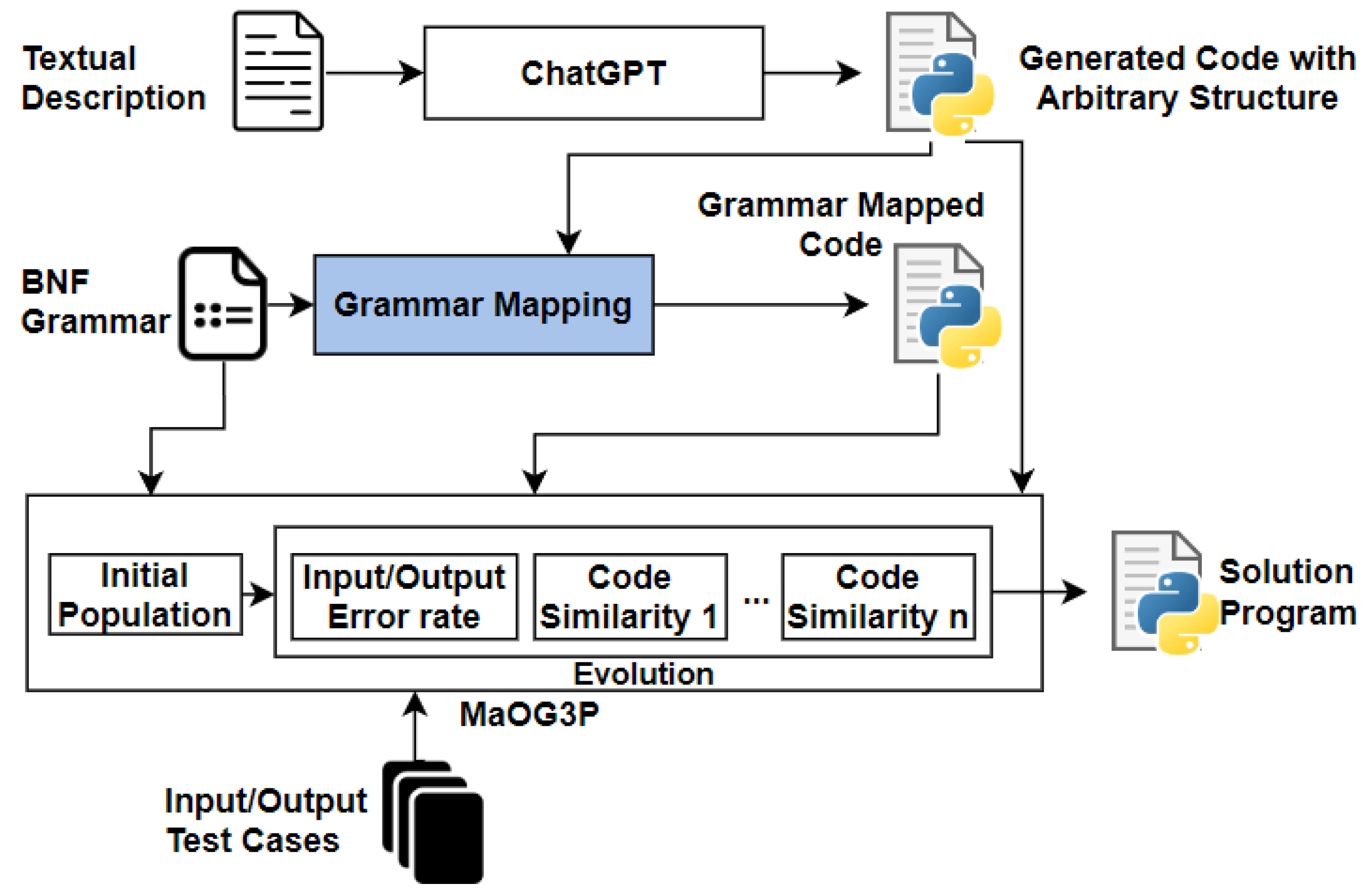

- We show that while our considered LLM (i.e., ChatGPT) successfully generates correct programs for most tasks in a well-known program synthesis benchmark, it struggles to generate programs that obey the predefined grammars.

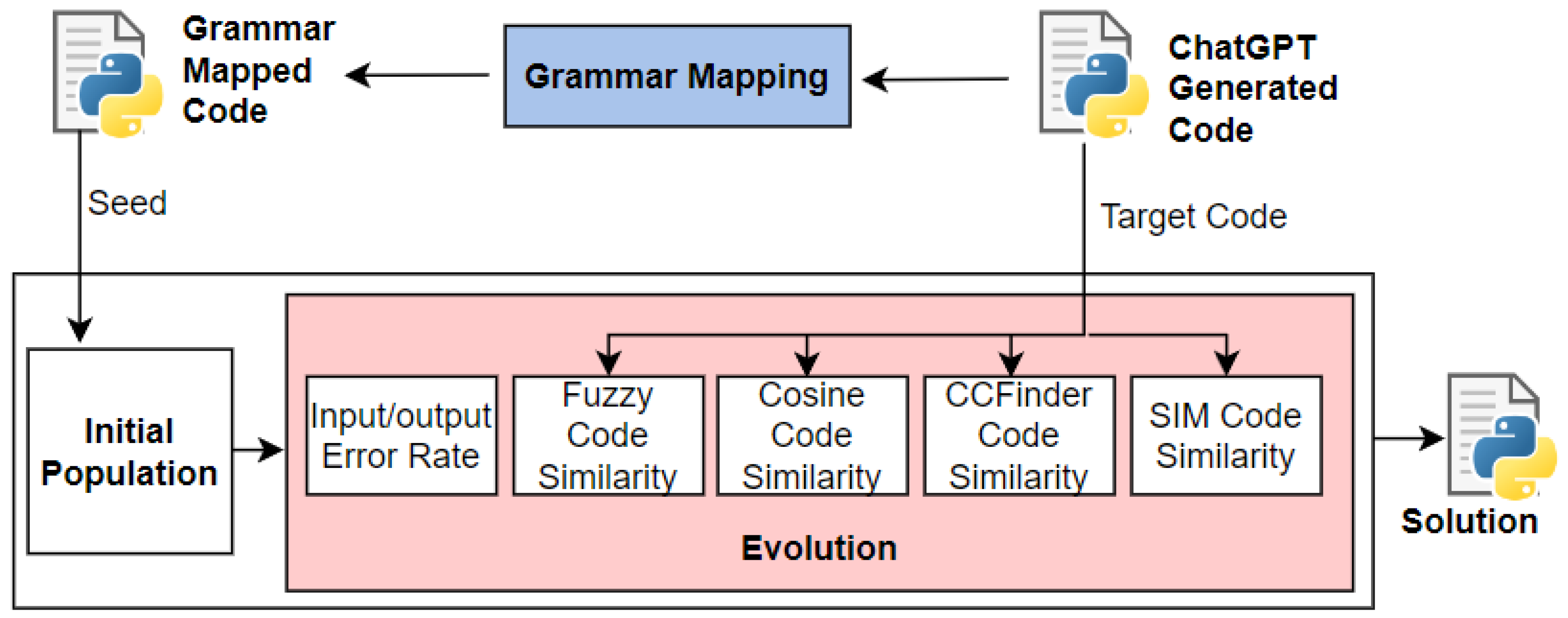

- We propose an evolutionary approach, i.e., Many-Objective Grammar-Guided Genetic Programming (MaOG3P), that exploits the LLM-generated program in two parts while guaranteeing that any evolved programs adhere to the predefined grammar:

- –

- Leveraging many-objective similarity measures towards the LLM-generated code to guide the search process throughout the evolution.

- –

- Mapping the LLM-generated program to another program that obeys the predefined grammar while maintaining as much information as possible and using it as a seed in the initial population.

2. Background and Related Work

2.1. Genetic Programming

2.2. Grammar-Guided Genetic Programming

2.3. Large Language Models

2.4. Approaches for Detecting Program Similarity

2.4.1. FuzzyWuzzy

2.4.2. Cosine

- Preprocessing and tokenisation: During this phase, we eliminate extraneous structural elements and tokenise the source code.

- Construct frequency vector: We compute the token frequencies and store them in a vector representing term frequencies.

- Similarity score calculation: As shown in Equation (1), we calculate the similarity score between two source programs by applying the cosine similarity function between two frequency vectors (denoted as vectors and ) from the previous step.

2.4.3. CCFinder

- Given our goal of generating a similarity score for two code snippets, we divide the length of the cloned code by the length of the source code:where represents the longest code clone between the code snippets a and b, while denotes the length of the code snippet a in terms of the number of characters.

- Instead of employing the suffix-tree matching algorithm, we calculate the length of the common tokens between two code snippets in a matrix form. Each token sequence corresponds to a dimension in this matrix.

- We also removed the reporting step of code clones. This decision was made because reporting the line number is no longer essential for our purposes.

2.4.4. SIM

3. Proposed Approach

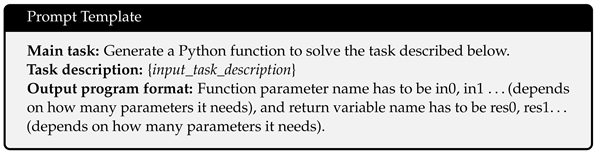

3.1. LLM Code Generation

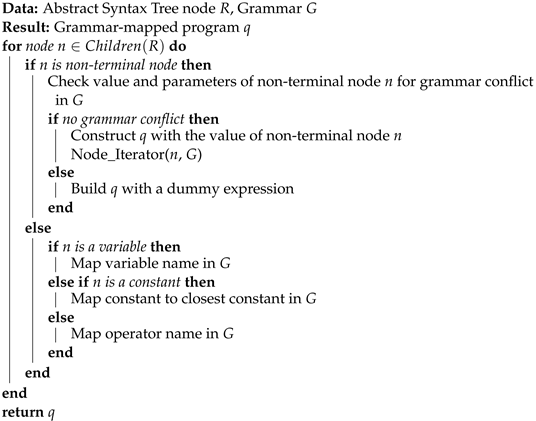

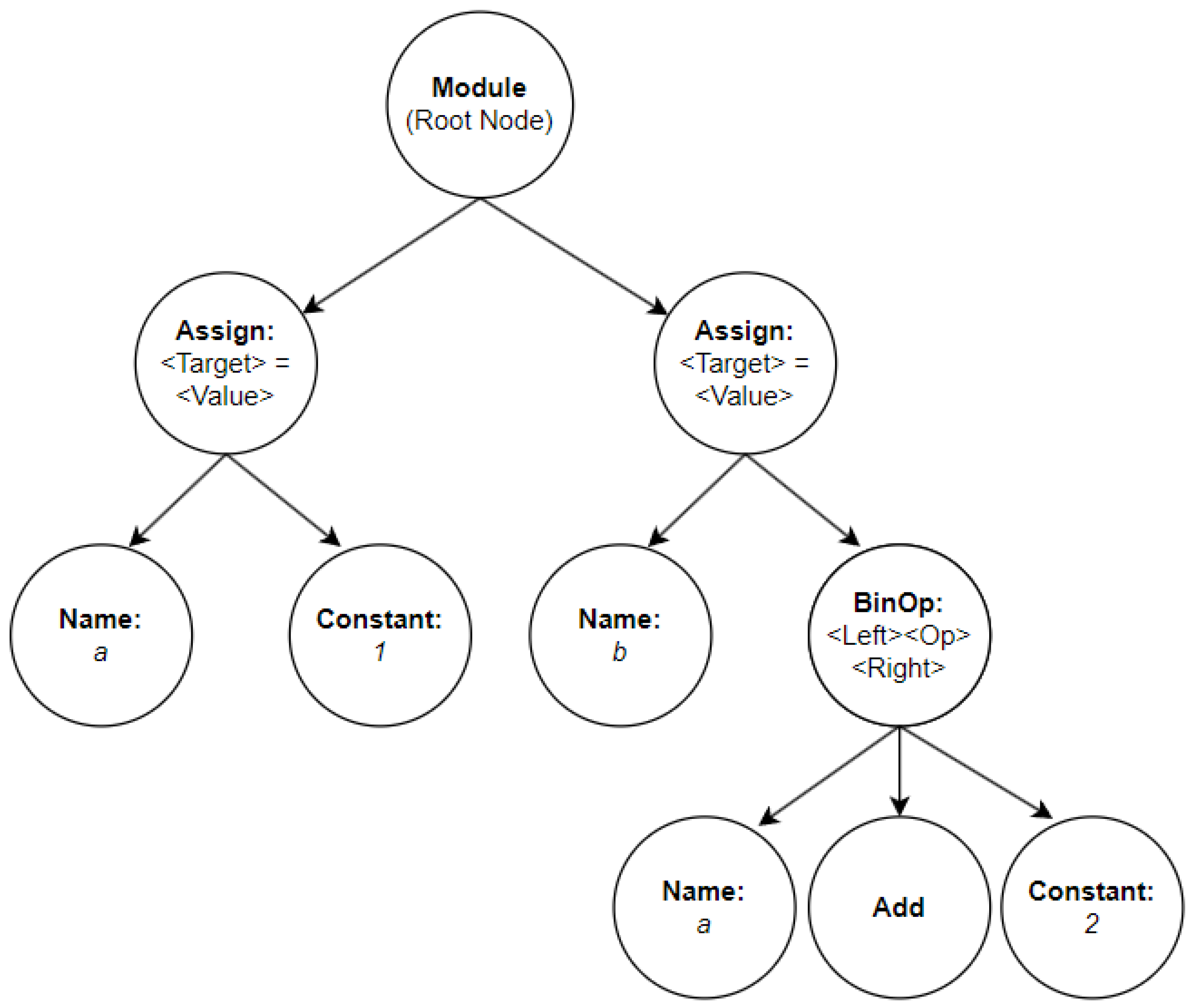

3.2. Grammar-Mapping of LLM-Generated Code

| Algorithm 1: Grammar Mapping Algorithm. |

Data: Program p from arbitrary source, Grammar G Result: Grammar-mapped program q Build Abstract Syntax Tree T from p Get Root of the T Node_Iterator(R, G) return q |

| Algorithm 2: Node_Iterator. |

|

3.3. Many-Objective G3P with Seeding

4. Experiment Setup

4.1. Research Questions

- RQ1: How effective is ChatGPT at program synthesis?

- RQ2: Could we fit ChatGPT-generated code to predefined grammars?

- RQ3: Could we improve the performance of ChatGPT at synthesising programs that fit predefined grammars using MaOG3P?

4.2. Benchmark Dataset

4.3. Generating Programs Using ChatGPT

4.4. Parameter Settings

5. Results

5.1. Effectiveness of ChatGPT at Program Synthesis (RQ1)

) notes the problem was solved violating the predefined grammar, while a cross symbol (✘) means it failed to generate a correct program.

) notes the problem was solved violating the predefined grammar, while a cross symbol (✘) means it failed to generate a correct program.5.2. Fitting ChatGPT-Generated Code to Predefined Grammars (RQ2)

) when most information is successfully mapped in a code that fits the predefined grammar. Otherwise, we note the mapping status as “poorly mapped” (denoted as ✘), which indicates the grammar-mapped code contains minimal information from the source code. For 13 problems, the LLM-generated code was successfully mapped to programs that adhere to the BNF grammar without any loss of information (i.e., completely mapped). The LLM-generated code was mapped to nearly solved programs in four cases, demonstrating close alignment with the desired solutions (i.e., partially mapped). Unfortunately, for 10 problems, large grammar conflicts prevented successful mapping. These cases are categorised as poorly mapped.

) when most information is successfully mapped in a code that fits the predefined grammar. Otherwise, we note the mapping status as “poorly mapped” (denoted as ✘), which indicates the grammar-mapped code contains minimal information from the source code. For 13 problems, the LLM-generated code was successfully mapped to programs that adhere to the BNF grammar without any loss of information (i.e., completely mapped). The LLM-generated code was mapped to nearly solved programs in four cases, demonstrating close alignment with the desired solutions (i.e., partially mapped). Unfortunately, for 10 problems, large grammar conflicts prevented successful mapping. These cases are categorised as poorly mapped.5.2.1. Partially Mapped Code Analysis

- Replace Space with Newline: This task involves counting the number of newline characters in a given string. LLM-generated code counts the number of newlines by using an advanced Python grammar “List Comprehension”. However, this particular grammar rule is not included in the predefined BNF grammar. Consequently, the grammar-mapping algorithm replaced it with a dummy expression.

- Even Squares: In this task, ChatGPT used a range function with three parameters to iterate through the loop. However, in our grammar, the range function contains only two parameters. Therefore, when mapping the range function, the algorithm ignored the extra parameter (i.e., the step parameter). Another grammar conflict occurred when mapping the is_integer function because the predefined grammar does not support such a function. This type of grammar conflict is replaced with a dummy expression for further evolutionary improvements.

- Last Index of Zero: The same parameter conflict for mapping the range function occurred in this task. In addition to this similar conflict, ChatGPT used the break statement to terminate the loop while the break statement is not defined in the BNF grammar.

- Digits: ChatGPT solved this task using multiple return statements with different outputs, while the MaOG3P grammar only allows the program to use the return statement once to return the output variable. The mapping algorithm handled this conflict by replacing the extra return statements with dummy expressions.

5.2.2. Poorly Mapped Programs Analysis

- “String Lengths Backwards”, "Sum of Squares”, Vectors Summed”, and “Negative To Zero”: ChatGPT solved these problems with one line “list comprehension” functions while such expressions are not defined in our grammar.

- “Checksum”, Vector Average”: When mapping the program for these tasks, significant grammatical conflicts adversely affected the mapping performance.

- “X-Word Lines”, Pig Latin”, Word Stats”: ChatGPT used the split function to solve these string manipulation tasks. However, our BNF grammar defined a split function integrated with a loop that cannot be used separately. This raised a grammar conflict that the mapping algorithm could not address. ChatGPT also builds the output string for the task by using the join function, which is not defined in our grammar.

- “Super Anagrams”, Scrabble Score”: The choice of data structure is limited in our BNF grammar. ChatGPT used a dict to solve these problems while the dict data structure is not defined in the grammar.

5.3. Performance of Our Proposed Approach (RQ3)

6. Conclusions and Future Work

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- Hara, A.; Kushida, J.I.; Tanabe, S.; Takahama, T. Parallel Ant Programming using genetic operators. In Proceedings of the IEEE IWCIA, Hiroshima, Japan, 13 July 2013; pp. 75–80. [Google Scholar]

- Masood, N.; Seyed, A.M.; Emadaldin, M.; Amir, H.G. Introduction of ABCEP as an automatic programming method. Inf. Sci. 2021, 545, 575–594. [Google Scholar]

- Hosseini Amini, S.M.H.; Abdollahi, M.; Amir Haeri, M. Rule-centred genetic programming (RCGP): An imperialist competitive approach. Appl. Intell. 2020, 50, 2589–2609. [Google Scholar] [CrossRef]

- Kim, H.T.; Kang, H.K.; Ahn, C.W. A conditional dependency based probabilistic model building grammatical evolution. IEICE Trans. Inf. Syst. 2016, 99, 1937–1940. [Google Scholar] [CrossRef]

- Mahanipour, A.; Nezamabadi-Pour, H. GSP: An automatic programming technique with gravitational search algorithm. Appl. Intell. 2019, 49, 1502–1516. [Google Scholar] [CrossRef]

- Lopes, R.L.; Costa, E. GEARNet: Grammatical Evolution with Artificial Regulatory Networks. In Proceedings of the GECCO, Amsterdam, The Netherlands, 6–10 July 2013; pp. 973–980. [Google Scholar]

- Bowers, M.; Olausson, T.X.; Wong, L.; Grand, G.; Tenenbaum, J.B.; Ellis, K.; Solar-Lezama, A. Top-Down Synthesis for Library Learning. Proc. ACM Program. Lang. 2023, 7, 41. [Google Scholar] [CrossRef]

- Lee, W.; Heo, K.; Alur, R.; Naik, M. Accelerating Search-Based Program Synthesis Using Learned Probabilistic Models. In Proceedings of the PLDI, Philadelphia, PA, USA, 18–22 June 2018; Association for Computing Machinery: New York, NY, USA, 2018; pp. 436–449. [Google Scholar]

- Ameen, S.; Lelis, L.H. Program synthesis with best-first bottom-up search. J. Artif. Intell. Res. 2023, 77, 1275–1310. [Google Scholar] [CrossRef]

- Guria, S.N.; Foster, J.S.; Van Horn, D. Absynthe: Abstract Interpretation-Guided Synthesis. Proc. ACM Program. Lang. 2023, 7, 171. [Google Scholar] [CrossRef]

- Yuan, Y.; Banzhaf, W. Iterative genetic improvement: Scaling stochastic program synthesis. Artif. Intell. 2023, 322, 103962. [Google Scholar] [CrossRef]

- Miltner, A.; Fisher, K.; Pierce, B.C.; Walker, D.; Zdancewic, S. Synthesizing Bijective Lenses. Proc. ACM Program. Lang. 2017, 2, 1. [Google Scholar] [CrossRef]

- Valizadeh, M.; Berger, M. Search-Based Regular Expression Inference on a GPU. Proc. ACM Program. Lang. 2023, 7, 160. [Google Scholar] [CrossRef]

- Helmuth, T.; Frazier, J.G.; Shi, Y.; Abdelrehim, A.F. Human-Driven Genetic Programming for Program Synthesis: A Prototype. In Proceedings of the GECCO, Lisbon, Portugal, 15–19 July 2023; pp. 1981–1989. [Google Scholar]

- Cropper, A.; Dumancic, S. Learning large logic programs by going beyond entailment. In Proceedings of the Twenty-Ninth International Joint Conference on Artificial Intelligence (IJCAI-20), Yokohama, Japan, 7–15 January 2021; pp. 2073–2079. [Google Scholar]

- Arcuri, A.; Yao, X. Co-evolutionary automatic programming for software development. Inf. Sci. 2014, 259, 412–432. [Google Scholar] [CrossRef]

- Botelho Guerra, H.; Ferreira, J.A.F.; Costa Seco, J.A. Hoogle: Constants and λ-abstractions in Petri-net-based Synthesis using Symbolic Execution. In Proceedings of the ECOOP, Seattle, WA, USA, 17–21 July 2023; Volume 263, pp. 4:1–4:28. [Google Scholar]

- Tao, N.; Ventresque, A.; Saber, T. Program synthesis with generative pre-trained transformers and grammar-guided genetic programming grammar. In Proceedings of the LA-CCI, Recife-Pe, Brazil, 29 October–1 November 2023; pp. 1–6. [Google Scholar]

- Tao, N.; Ventresque, A.; Saber, T. Assessing similarity-based grammar-guided genetic programming approaches for program synthesis. In Proceedings of the OLA, Sicilia, Italy, 18–20 July 2022; Springer: Cham, Switzerland, 2022. [Google Scholar]

- Tao, N.; Ventresque, A.; Saber, T. Many-objective Grammar-guided Genetic Programming with Code Similarity Measurement for Program Synthesis. In Proceedings of the IEEE LA-CCI, Recife-Pe, Brazil, 29 October–1 November 2023. [Google Scholar]

- Tao, N.; Ventresque, A.; Saber, T. Multi-objective grammar-guided genetic programming with code similarity measurement for program synthesis. In Proceedings of the IEEE CEC, Padua, Italy, 18–23 July 2022. [Google Scholar]

- Saha, R.K.; Ura, A.; Mahajan, S.; Zhu, C.; Li, L.; Hu, Y.; Yoshida, H.; Khurshid, S.; Prasad, M.R. SapientML: Synthesizing Machine Learning Pipelines by Learning from Human-Writen Solutions. In Proceedings of the 44th International Conference on Software Engineering, Pittsburgh, PA, USA, 21–29 May 2022; pp. 1932–1944. [Google Scholar]

- Poliansky, R.; Sipper, M.; Elyasaf, A. From Requirements to Source Code: Evolution of Behavioral Programs. Appl. Sci. 2022, 12, 1587. [Google Scholar] [CrossRef]

- Beltramelli, T. pix2code: Generating code from a graphical user interface screenshot. In Proceedings of the ACM SIGCHI, Paris, France, 19–22 June 2018. [Google Scholar]

- Li, Y.; Choi, D.; Chung, J.; Kushman, N.; Schrittwieser, J.; Leblond, R.; Eccles, T.; Keeling, J.; Gimeno, F.; Dal Lago, A.; et al. Competition-Level Code Generation with AlphaCode, 2022. Available online: https://doi.org/10.1126/science.abq1158 (accessed on 27 March 2024).

- Sobania, D.; Briesch, M.; Rothlauf, F. Choose your programming copilot: A comparison of the program synthesis performance of github copilot and genetic programming. In Proceedings of the GECCO, Boston, MA, USA, 9–13 July 2022. [Google Scholar]

- Koza, J.R. Genetic Programming II: Automatic Discovery of Reusable Programs; MIT Press: Cambridge, MA, USA, 1994. [Google Scholar]

- Forstenlechner, S.; Fagan, D.; Nicolau, M.; O’Neill, M. A grammar design pattern for arbitrary program synthesis problems in genetic programming. In Proceedings of the Genetic Programming: 20th European Conference, EuroGP 2017, Amsterdam, The Netherlands, 19–21 April 2017; Proceedings 20. Springer: Berlin/Heidelberg, Germany, 2017; pp. 262–277. [Google Scholar]

- Li, T.O.; Zong, W.; Wang, Y.; Tian, H.; Wang, Y.; Cheung, S.C.; Kramer, J. Nuances are the Key: Unlocking ChatGPT to Find Failure-Inducing Tests with Differential Prompting. In Proceedings of the IEEE/ACM ASE, Luxembourg, 11–15 September 2023; pp. 14–26. [Google Scholar]

- Ma, W.; Liu, S.; Wenhan, W.; Hu, Q.; Liu, Y.; Zhang, C.; Nie, L.; Liu, Y. The Scope of ChatGPT in Software Engineering: A Thorough Investigation. arXiv 2023, arXiv:2305.12138. [Google Scholar]

- Surameery, N.; Shakor, M. Use Chat GPT to Solve Programming Bugs. Int. J. Inf. Technol. Comput. Eng. 2023, 3, 17–22. [Google Scholar] [CrossRef]

- Xie, Z.; Chen, Y.; Zhi, C.; Deng, S.; Yin, J. ChatUniTest: A ChatGPT-based automated unit test generation tool. arXiv 2023, arXiv:2305.04764. [Google Scholar]

- Minaee, S.; Mikolov, T.; Nikzad, N.; Chenaghlu, M.; Socher, R.; Amatriain, X.; Gao, J. Large Language Models: A Survey. arXiv 2024, arXiv:2401.14423. [Google Scholar]

- Jesse, K.; Ahmed, T.; Devanbu, P.T.; Morgan, E. Large language models and simple, stupid bugs. In Proceedings of the IEEE/ACM MSR, Melbourne, Australia, 15–16 May 2023; pp. 563–575. [Google Scholar]

- Asare, O.; Nagappan, M.; Asokan, N. Is github’s copilot as bad as humans at introducing vulnerabilities in code? Empir. Softw. Eng. 2023, 28, 129. [Google Scholar] [CrossRef]

- Schuster, R.; Song, C.; Tromer, E.; Shmatikov, V. You autocomplete me: Poisoning vulnerabilities in neural code completion. In Proceedings of the USENIX Security 21, Virtual, 11–13 August 2021; pp. 1559–1575. [Google Scholar]

- Stechly, K.; Marquez, M.; Kambhampati, S. GPT-4 Doesn’t Know It’s Wrong: An Analysis of Iterative Prompting for Reasoning Problems. arXiv 2023. Available online: https://openreview.net/forum?id=PMtZjDYB68 (accessed on 27 March 2024).

- Krishna, S.; Agarwal, C.; Lakkaraju, H. Understanding the Effects of Iterative Prompting on Truthfulness. arXiv 2024, arXiv:2402.06625v1. [Google Scholar]

- Fraser, G.; Arcuri, A. The seed is strong: Seeding strategies in search-based software testing. In Proceedings of the 2012 IEEE Fifth International Conference on Software Testing, Verification and Validation, Montreal, QC, Canada, 17–21 April 2012; pp. 121–130. [Google Scholar]

- Saber, T.; Brevet, D.; Botterweck, G.; Ventresque, A. Is seeding a good strategy in multi-objective feature selection when feature models evolve? Inf. Softw. Technol. 2018, 95, 266–280. [Google Scholar] [CrossRef]

- Wick, J.; Hemberg, E.; O’Reilly, U.M. Getting a head start on program synthesis with genetic programming. In Proceedings of the Genetic Programming: 24th European Conference, EuroGP 2021, Held as Part of EvoStar 2021, Virtual Event, 7–9 April 2021; Proceedings 24. Springer: Berlin/Heidelberg, Germany, 2021; pp. 263–279. [Google Scholar]

- Helmuth, T.; Spector, L. General program synthesis benchmark suite. In Proceedings of the GECCO, Madrid, Spain, 11–15 July 2015. [Google Scholar]

- Miller, J.F.; Harding, S.L. Cartesian genetic programming. In Proceedings of the GECCO, Atlanta, GA, USA, 12–16 July 2008. [Google Scholar]

- Brameier, M.; Banzhaf, W.; Banzhaf, W. Linear Genetic Programming; Springer: Berlin/Heidelberg, Germany, 2007. [Google Scholar]

- O’Neill, M.; Ryan, C. Volume 4 of Genetic programming. In Grammatical Evolution: Evolutionary Automatic Programming in a Arbitrary Language; Kluwer Academic Publishers: Norwell, MA, USA, 2003. [Google Scholar]

- Whigham, P.A. Grammatical Bias for Evolutionary Learning. Ph.D. Thesis, University College, Australian Defence Force Academy, University of New South Wales, Canberra, Campbell, ACT, Australia, 1997. [Google Scholar]

- O’Neill, M.; Nicolau, M.; Agapitos, A. Experiments in program synthesis with grammatical evolution: A focus on integer sorting. In Proceedings of the IEEE CEC, Beijing, China, 6–11 July 2014. [Google Scholar]

- Saber, T.; Wang, S. Evolving better rerouting surrogate travel costs with grammar-guided genetic programming. In Proceedings of the IEEE CEC, Glasgow, UK, 19–24 July 2020. [Google Scholar]

- Lynch, D.; Saber, T.; Kucera, S.; Claussen, H.; O’Neill, M. Evolutionary learning of link allocation algorithms for 5G heterogeneous wireless communications networks. In Proceedings of the GECCO, Prague, Czech Republic, 13–17 July 2019. [Google Scholar]

- Saber, T.; Fagan, D.; Lynch, D.; Kucera, S.; Claussen, H.; O’Neill, M. Multi-level Grammar Genetic Programming for Scheduling in Heterogeneous Networks. In Proceedings of the EuroGP, Parma, Italy, 4–6 April 2018. [Google Scholar]

- Saber, T.; Fagan, D.; Lynch, D.; Kucera, S.; Claussen, H.; O’Neill, M. A multi-level grammar approach to grammar-guided genetic programming: The case of scheduling in heterogeneous networks. Genet. Program. Evol. Mach. 2019, 20, 245–283. [Google Scholar] [CrossRef]

- Saber, T.; Fagan, D.; Lynch, D.; Kucera, S.; Claussen, H.; O’Neill, M. Hierarchical Grammar-Guided Genetic Programming Techniques for Scheduling in Heterogeneous Networks. In Proceedings of the IEEE CEC, Glasgow, UK, 19–24 July 2020. [Google Scholar]

- Saber, T.; Fagan, D.; Lynch, D.; Kucera, S.; Claussen, H.; O’Neill, M. A Hierarchical Approach to Grammar-Guided Genetic Programming The case of Scheduling in Heterogeneous Networks. In Proceedings of the TPNC, Dublin, Ireland, 12–14 December 2018. [Google Scholar]

- Forstenlechner, S.; Fagan, D.; Nicolau, M.; O’Neill, M. Extending program synthesis grammars for grammar-guided genetic programming. In Proceedings of the PPSN, Coimbra, Portugal, 8–12 September 2018; Springer: Berlin/Heidelberg, Germany, 2018. [Google Scholar]

- Manning, C.D. Human language understanding & reasoning. Daedalus 2022, 151, 127–138. [Google Scholar] [CrossRef]

- OpenAI. GPT-4 Technical Report; OpenAI: San Francisco, CA, USA, 2023. [Google Scholar]

- Manyika, J.; Hsiao, S. An overview of Bard: An early experiment with generative AI. AI Google Static Doc. 2023. Available online: https://ai.google/static/documents/google-about-bard.pdf (accessed on 27 March 2024).

- Wang, B.; Wang, Z.; Wang, X.; Cao, Y.; A Saurous, R.; Kim, Y. Grammar prompting for domain-specific language generation with large language models. Adv. Neural Inf. Process. Syst. 2024. Available online: https://dl.acm.org/doi/10.5555/3666122.3668959 (accessed on 27 March 2024).

- Hartmann, B.; MacDougall, D.; Brandt, J.; Klemmer, S.R. What would other programmers do: Suggesting solutions to error messages. In Proceedings of the SIGCHI, Atlanta, GA, USA, 5–10 April 2010. [Google Scholar]

- Ragkhitwetsagul, C.; Krinke, J.; Clark, D. A comparison of code similarity analysers. Empir. Softw. Eng. 2018, 23, 2464–2519. [Google Scholar] [CrossRef]

- Cohen, A. FuzzyWuzzy: Fuzzy String Matching in Python, 2011. Available online: https://chairnerd.seatgeek.com/fuzzywuzzy-fuzzy-string-matching-in-python/ (accessed on 27 March 2024).

- Kamiya, T.; Kusumoto, S.; Inoue, K. CCFinder: A multilinguistic token-based code clone detection system for large scale source code. IEEE Trans. Softw. Eng. 2002, 28, 654–670. [Google Scholar] [CrossRef]

- Gitchell, D.; Tran, N. Sim: A utility for detecting similarity in computer programs. ACM Sigcse Bull. 1999, 31, 266–270. [Google Scholar] [CrossRef]

- Gao, T.; Fisch, A.; Chen, D. Making pre-trained language models better few-shot learners. arXiv 2020, arXiv:2012.15723. [Google Scholar]

- White, J.; Fu, Q.; Hays, S.; Sandborn, M.; Olea, C.; Gilbert, H.; Elnashar, A.; Spencer-Smith, J.; Schmidt, D.C. A prompt pattern catalog to enhance prompt engineering with chatgpt. arXiv 2023, arXiv:2302.11382. [Google Scholar]

- Baxter, I.D.; Yahin, A.; Moura, L.; Sant’Anna, M.; Bier, L. Clone detection using abstract syntax trees. In Proceedings of the ICSME, Bethesda, MD, USA, 20 November 1998. [Google Scholar]

- Helmuth, T.; Spector, L. Detailed Problem Descriptions for General Program Synthesis Benchmark Suite; University of Massachusetts Amherst: Amherst, MA, USA, 2015. [Google Scholar]

| Problem Name | Description |

|---|---|

| Even Squares | Given an integer n, print all of the positive even perfect squares less than n on separate lines. |

| Last Index of Zero | Given a vector of integers, at least one of which is 0, return the index of the last occurrence of 0 in the vector. |

| Scrabble Score | Given a string of visible ASCII characters, return the Scrabble score for that string. Each letter has a corresponding value according to normal Scrabble rules, and non-letter characters are worth zero. |

| Wallis Pi | John Wallis gave the following infinite product that converges to /4: (2/3) * (4/3) * (4/5) * (6/5) * (6/7) * (8/7) * (8/9) * (10/9) * … Given an integer input n, compute an approximation of this product out to n terms. Results are rounded to 5 decimal places. |

| Name | Train | Test | Name | Train | Test |

|---|---|---|---|---|---|

| Number IO | 25 | 1000 | Count Odds | 200 | 2000 |

| Small Or Large | 100 | 1000 | Mirror Image | 100 | 1000 |

| For Loop Index | 100 | 1000 | Super Anagrams | 200 | 2000 |

| Compare String Lengths | 100 | 1000 | Sum of Squares | 50 | 50 |

| Double Letters | 100 | 1000 | Vectors Summed | 150 | 1500 |

| Collatz Numbers | 200 | 2000 | X-Word Lines | 150 | 2000 |

| Replace Space with Newline | 100 | 1000 | Pig Latin | 200 | 1000 |

| Even Squares | 100 | 1000 | Negative To Zero | 200 | 2000 |

| Wallis Pi | 150 | 50 | Scrabble Score | 200 | 1000 |

| String Lengths Backwards | 100 | 1000 | Word Stats File | 100 | 1000 |

| Last Index of Zero | 150 | 1000 | Checksum | 100 | 1000 |

| Vector Average | 100 | 1000 | Digits | 100 | 1000 |

| Grade | 200 | 2000 | Median | 100 | 1000 |

| Smallest | 100 | 1000 | Syllables | 100 | 1000 |

| Parameter | Setting |

|---|---|

| Runs | 30 |

| Generation | 300 a |

| Population size | 1000 |

| Tournament size | 7 |

| Crossover probability | 0.9 |

| Mutation probability | 0.05 |

| Node limit | 250 |

| Variable per type | 3 |

| Max execution time (s) | 1 |

| Benchmark Problem | G3P | ChatGPT |

|---|---|---|

| Number IO | ✔ | ✔ |

| Small Or Large | ✔ |  |

| For Loop Index | ✘ |  |

| Compare String Lengths | ✘ |  |

| Double Letters | ✘ |  |

| Collatz Numbers | ✘ |  |

| Replace Space with Newline | ✔ |  |

| Even Squares | ✘ |  |

| Wallis Pi | ✘ | ✘ |

| String Lengths Backwards | ✔ |  |

| Last Index of Zero | ✔ |  |

| Vector Average | ✘ |  |

| Count Odds | ✔ | ✔ |

| Mirror Image | ✔ |  |

| Super Anagrams | ✘ |  |

| Sum of Squares | ✘ |  |

| Vectors Summed | ✘ |  |

| X-Word Lines | ✘ |  |

| Pig Latin | ✘ |  |

| Negative To Zero | ✔ |  |

| Scrabble Score | ✘ |  |

| Word Stats | ✘ |  |

| Checksum | ✘ |  |

| Digits | ✘ | ✘ |

| Grade | ✔ |  |

| Median | ✔ |  |

| Smallest | ✔ |  |

| Syllables | ✔ |  |

| Number of Problems Solved | 12 | 2 a |

| Problem | Grammar Mapping Status | Problem | Grammar Mapping Status |

|---|---|---|---|

| NumberIO | ✔ | Last Index of Zero |  |

| Small Or Large | ✔ | Vector Average | ✘ |

| For Loop Index | ✔ | Count Odds | ✔ |

| Compare String Lengths | ✔ | Mirror Image | ✔ |

| Double Letters | ✔ | Super Anagrams | ✘ |

| Collatz Numbers | ✔ | Sum of Squares | ✘ |

| Replace Space with Newline |  | Vectors Summed | ✘ |

| Even Squares |  | X-Word Lines | ✘ |

| Wallis Pi | ✔ | Pig Latin | ✘ |

| String Lengths Backwards | ✘ | Negative To Zero | ✘ |

| Digits |  | Scrabble Score | ✘ |

| Grade | ✔ | Word Stats | ✘ |

| Median | ✔ | Checksum | ✘ |

| Smallest | ✔ | Syllables | ✔ |

). When It generated an incorrect solution, we noted it as failed (✘).

). When It generated an incorrect solution, we noted it as failed (✘).

). When It generated an incorrect solution, we noted it as failed (✘).

). When It generated an incorrect solution, we noted it as failed (✘).| Benchmark Problem | ChatGPT | G3P | G3P ChatGPT Seeding | MaOG3P No Seeding | MaOG3P ChatGPT Seeding |

|---|---|---|---|---|---|

| NumberIO | ✔ | 17 | 30 | 20 | 30 |

| Small Or Large |  | 0 | 30 | 0 | 30 |

| For Loop Index |  | 0 | 30 | 0 | 30 |

| Compare String Lengths |  | 0 | 30 | 0 | 30 |

| Double Letters |  | 0 | 30 | 0 | 30 |

| Collatz Numbers |  | 0 | 30 | 0 | 30 |

| Replace Space with Newline |  | 1 | 10 | 1 | 12 |

| Even Squares |  | 0 | 0 | 0 | 0 |

| Wallis Pi | ✘ | 0 | 0 | 0 | 0 |

| String Lengths Backwards |  | 3 | 1 | 2 | 4 |

| Last Index of Zero |  | 6 | 30 | 5 | 30 |

| Vector Average |  | 0 | 0 | 0 | 0 |

| Count Odds | ✔ | 0 | 30 | 0 | 30 |

| Mirror Image |  | 20 | 30 | 21 | 30 |

| Super Anagrams |  | 0 | 0 | 0 | 0 |

| Sum of Squares |  | 0 | 0 | 0 | 0 |

| Vectors Summed |  | 0 | 0 | 0 | 0 |

| X-Word Lines |  | 0 | 0 | 0 | 0 |

| Pig Latin |  | 0 | 0 | 0 | 0 |

| Negative To Zero |  | 1 | 2 | 0 | 3 |

| Scrabble Score |  | 0 | 0 | 0 | 0 |

| Word Stats |  | 0 | 0 | 0 | 0 |

| Checksum |  | 0 | 0 | 0 | 0 |

| Digits | ✘ | 0 | 0 | 0 | 0 |

| Grade |  | 0 | 30 | 0 | 30 |

| Median |  | 12 | 30 | 14 | 30 |

| Smallest |  | 28 | 30 | 30 | 30 |

| Syllables |  | 0 | 30 | 0 | 30 |

| Number of Problems Solved | 2 | 8 | 16 | 7 | 16 |

| Problem | p-Value | Problem | p-Value |

|---|---|---|---|

| NumberIO | 0.034843 | Last Index of Zero | 0.000751 |

| Small Or Large | 0.000062 | Vector Average | 0.143140 |

| For Loop Index | 0.000064 | Count Odds | 0.000064 |

| Compare String Lengths | 0.000033 | Mirror Image | 0.077872 |

| Double Letters | 0.000055 | Super Anagrams | 0.377587 |

| Collatz Numbers | 0.000063 | Sum of Squares | 0.739364 |

| Replace Space with Newline | 0.001609 | Vectors Summed | 0.393048 |

| Even Squares | 0.040793 | X-Word Lines | 0.795936 |

| Wallis Pi | 0.357296 | Pig Latin | 0.325468 |

| String Lengths Backwards | 0.037062 | Negative To Zero | 0.265681 |

| Digits | 0.000064 | Scrabble Score | 0.421242 |

| Grade | 0.000064 | Word Stats | 0.279861 |

| Median | 0.005943 | Checksum | 0.545199 |

| Smallest | 0.168078 | Syllables | 0.000064 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content. |

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Tao, N.; Ventresque, A.; Nallur, V.; Saber, T. Enhancing Program Synthesis with Large Language Models Using Many-Objective Grammar-Guided Genetic Programming. Algorithms 2024, 17, 287. https://doi.org/10.3390/a17070287

Tao N, Ventresque A, Nallur V, Saber T. Enhancing Program Synthesis with Large Language Models Using Many-Objective Grammar-Guided Genetic Programming. Algorithms. 2024; 17(7):287. https://doi.org/10.3390/a17070287

Chicago/Turabian StyleTao, Ning, Anthony Ventresque, Vivek Nallur, and Takfarinas Saber. 2024. "Enhancing Program Synthesis with Large Language Models Using Many-Objective Grammar-Guided Genetic Programming" Algorithms 17, no. 7: 287. https://doi.org/10.3390/a17070287