Abstract

Cloud service providers deliver computing services on demand using the Infrastructure as a Service (IaaS) model. In a cloud data center, several virtual machines (VMs) can be hosted on a single physical machine (PM) with the help of virtualization. Virtual machine placement (VMP) assigns VMs to physical machines and is a crucial process affecting energy draw and resource usage in the cloud data center. Nonetheless, finding an effective placement is challenging owing to factors like hardware heterogeneity and the scale of cloud data centers. This paper proposes an efficient algorithm named VMP-ER aimed at optimizing power consumption and reducing resource wastage. Our algorithm achieves this by decreasing the number of running physical machines while giving priority to energy-efficient servers. Additionally, it improves resource utilization across physical machines, thus minimizing wastage and ensuring balanced resource allocation.

1. Introduction

The demand for cloud services keeps growing, which means more energy is used and higher emissions of CO2 are generated. Amazon estimates that up to 42% of a data center’s operating expenses are due to the energy it uses [1]. This increased energy usage is a big problem for cloud providers because it makes owning and running data centers more expensive.

Server virtualization stands as a pivotal technology within cloud computing systems, permitting the deployment and operation of numerous virtual machines on a single physical server. Periodically, incoming requests are evaluated and transformed into virtual machines, thereafter receiving allocation of cloud infrastructure resources. Within the cloud environment, a diverse pool of PM resources with varying capacities exists.

The task of assigning various virtual machines to a particular group of PMs is known as the virtual machine placement (VMP) problem, which is a critical factor in optimizing resource utilization and managing energy consumption within the cloud framework [1]. Nonetheless, due to factors such as request diversity, disparities in PM capabilities, resource multidimensionality, and scale, devising an efficient solution is inherently complex. This mapping endeavor necessitates a design that meets the core data center requirements including the reduction of energy consumption and costs while increasing profit.

The issue concerning the placement of virtual machines (VMs) and the optimal selection of destinations for migrations can be framed as a multi-objective bin-packing challenge. Here, the objective is to assign items (VMs) to bins (servers) while keeping the number of bins to a minimum. Each VM is characterized by its size, ensuring it fits within the designated container size without exceeding it.

Many existing VMP heuristics have focused on the trade-off between energy consumption and system performance. However, reducing energy usage may come at the expense of performance degradation and increased service level agreement (SLA) violations due to frequent live migrations. Another challenge is the effect of incessant VM consolidation on system reliability [2], which can increase the probability of system failure by overburdening certain servers. Therefore, an effective VM consolidation method must consider more than energy consumption alone, including resource optimization, SLA compliance, and quality of service requirements.

In this paper, we propose an efficient algorithm (namely VMP-ER) designed to address the challenge of minimizing the (1) energy consumption, (2) resource wastage, and (3) SLA violation within cloud data centers, which involves resource heterogeneity in the context of a multi-objective bin-packing problem. Unlike other algorithms that focus on the data center’s overall power and resource consumption when placing VMs, we consider resource usage in addition to power efficiency factors at the host level. The main idea is to balance resources across PMs, leading to overall improvements in the use of data center resources.

Our proposed algorithm focuses on reducing power usage by minimizing the number of PMs and leveraging energy-efficient options. Furthermore, it aims to minimize resource wastage by enhancing resource utilization and achieving a balance among various resources on each PM. To achieve these objectives, we leverage the concept of the resource usage factor [1,3] to guide VM placement and prioritize the selection of PMs for hosting VMs, ensuring that energy-efficient PMs are utilized first.

This paper is organized as follows: Section 2 provides an overview of related works. Section 3 gives an introduction to the methods used and presents a detailed explanation of the proposed algorithm. Section 4 is devoted to presenting an evaluation and discussion. Finally, Section 5 provides a conclusion of the paper and outlines future work.

2. Literature Review

The VMP in cloud data centers has attracted significant attention due to its critical role in enhancing energy efficiency, resource utilization, and overall system performance.

Azizi et al. [1] presented an energy-efficient heuristic algorithm named minPR for optimizing VM placement in cloud data centers. Their approach focuses on minimizing energy consumption while ensuring optimal resource utilization and performance. By introducing the resource wastage factor model, the authors manage VM placement on PMs using reward and penalty mechanisms. The proposed algorithm outperformed other approaches like MBFD and RVMP [4] considering the total number of PMs and total resource waste. However, simulation results showed that the algorithm performance varies depending on the VM specification and the type of workloads.

A dynamic VM consolidation approach was introduced by Sayadnavard et al. [2] using a Discrete Time Markov Chain (DTMC) model and the e-MOABC algorithm, aiming to balance energy consumption, resource wastage, and system reliability in cloud data centers. The algorithm favors VMs with a higher impact on the CPU utilization of the server in order to minimize VM migrations. Devising a resource usage factor technique to utilize PM resources efficiently, Gupta et al. [5] proposed a new VM placement algorithm to minimize the power consumption of the data center by decreasing the total number of active PMs. While these approaches showed substantial improvements in energy efficiency, SLA violations still need to be considered in their evaluations.

Ghetas [6] proposed the MBO-VM method to reduce energy consumption and minimize resource wastage by maximizing the packaging efficiency. Utilizing a multi-objective Monarch Butterfly Algorithm, the approach considers the CPU and memory dimensions for VM placement optimization. Khan [7] proposed a normalization-based VM consolidation (NVMC) strategy that aims to place VMs while minimizing energy usage and SLA violations by reducing the number of VM live migrations. Despite considering multiple resources of PMs, reliance on CPU-centric calculations and VM sorting poses limitations in heterogeneous environments with varying workloads.

The approach proposed by Beloglazov et al. [8] splits the VM consolidation problem into host overload/underload detection, the selection of VMs to be migrated, and VM allocation. Their algorithm sorts the VMs in decreasing order of CPU utilization and allocates each VM to the host that results in the least increase in power consumption due to the allocation. However, the approach focused on improving energy efficiency without optimizing resource wastage. A Load-Balanced Multi-Dimensional Bin-Packing heuristic (LBMBP) to optimize resource allocation in cloud data centers was introduced by Nehra et al. [9]. Their approach balances active host loads while detecting overloaded and underloaded hosts for VM migration, resulting in improved resource utilization. Nevertheless, the exclusion of resource availability beyond CPU in the power model limits its reliability.

Mahmoodabadi et al. [10] focused on bin packing with linear usage cost (BPLUC). They examined the VM placement problem with three dimensions, CPU, RAM, and bandwidth, aiming at reducing the power consumption. They compared their results with PABFD [8], GRVMP [11], and AFED-EF [12], and the approach demonstrated efficiency compared to these methods. Following bin-packing heuristics, Sunil et al. [13] proposed energy-efficient VM placement algorithms, EEVMP and MEEVMP, considering server energy efficiency. The researchers aim to optimize energy consumption, QoS, and resource utilization while minimizing SLA violations. However, evaluations on varied workloads and infrastructures while considering additional resources are necessary to ascertain the adaptability of these algorithms.

An enhanced levy-based particle swarm optimization algorithm with variable sized bin packing (PSOLBP) is proposed by Fatima et al. [14] for solving the VM placement problem combining levy flight and PSO algorithms. An EVMC method for energy-aware virtual machines consolidation was proposed by Zolfaghari et al. [15], integrating machine learning and meta-heuristic techniques to optimize the energy consumption. While considering all server resources, including CPU, RAM, storage, and bandwidth, the method’s scalability remains untested on larger cloud infrastructures.

Tarafdar et al. [16] proposed an energy-efficient and QoS-aware approach for VM consolidation using Markov chain-based prediction and linear weighted sum. The simulation results showed a substantial reduction in the energy consumption, number of VM migrations and SLA violations compared with other VM consolidation approaches. A two-phase energy-aware load-balancing algorithm (EALBPSO) using PSO for VM migration in DVFS-enabled cloud data centers was presented by Masoudi et al. [17]. While demonstrating improvements in power consumption and migration, scalability testing in larger data center settings is essential to validate its effectiveness.

Many researchers have focused on cloud service selection as well as task assignment and their effect on resource utilization and energy consumption by effectively scheduling user tasks. Nagarajan et al. [18] presented a comprehensive survey discussing the advantages and limitations of research investigating the cloud service brokerage concept. They also outlined various open research challenges and provided recommendations. Finally, they introduced an intelligent cloud broker for the effective selection and delivery of cloud services aiming at utilizing cloud resources.

An energy-optimized embedded load-balancing approach was proposed by Javadpour et al. [19]. It prioritizes tasks by their execution deadline, categorizes the physical machines by their configuration status, and utilizes DVFS to reduce energy consumption. A multi-resource alignment algorithm for VM placement and resource management in cloud environments was analyzed by Gabhane et al. [20]. Several algorithms were compared based on CPU and memory utilization as well as the probability of task failure. Multi-resource alignment demonstrated better performance in CPU and memory utilization compared to other algorithms. However, scalability concerns and the need for evaluation in larger data center environments persist.

Lima et al. [21] introduced a VMP algorithm for optimizing the service allocation process. In their evaluation, they considered two scenarios based on task arrival. The first scenario assumes no prior knowledge of task execution time, and the second scenario assumes all tasks arrive simultaneously. The results indicated a significant improvement in the average task waiting time. A two-phase multi-objective VM placement and consolidation approach employing the DVFS technique was proposed by Nikzad et al. [22]. Using a multi-objective ant colony algorithm, the approach aims to improve energy consumption and SLA violations.

An effective and efficient VM consolidation approach EQ-VMC was introduced by Li et al. [23], which has the goal of optimizing energy efficiency and service quality integrating discrete differential evolution and heuristic algorithms. By considering host overload detection and VM selection, EQ-VMC aims to reduce energy consumption and enhance QoS. Simulation results demonstrated improvements in energy consumption and host overloading risk as well as improved QoS. A Best Fit Decreasing algorithm for VMP formulated as a bin-packing problem was proposed by Tlili et al. [24]. The simulation results demonstrated higher packing efficiency compared to other algorithms. Nevertheless, they did not take SLA violation into account.

While numerous energy-aware algorithms focus primarily on reducing energy consumption within data centers, none have simultaneously considered PMs’ heterogeneity and multidimensional resources, minimizing the energy consumption, balancing the resource wastage, and improving SLA altogether. Our proposed algorithm adopts a more comprehensive approach. In addition to enhancing energy usage, we consider power efficiency, SLA violation, and resource wastage (both CPU and memory) on host servers collectively during VM allocation and placement. Table 1 gives a summary of the resources and evaluation metrics used in the literature.

Table 1.

Resources and evaluation metrics used by authors in the literature.

3. Methodology

In large-scale cloud environments with numerous high-performance computing devices, energy inefficiency becomes a significant concern due to the underutilization of resources. Virtualization addresses this issue by allowing multiple instances of VMs to be hosted on a single physical server, thereby reducing operational costs, power consumption, and resource wastage. To further improve resource utilization, underutilized servers can be transitioned to a low-power state.

VMP aims to minimize the number of physical servers required to host VMs while generating migration maps for live migration. However, the excessive migration of VMs during consolidation may degrade application performance and response times. Additionally, efforts to minimize energy consumption through VM consolidation may inadvertently violate the SLA between service providers and customers.

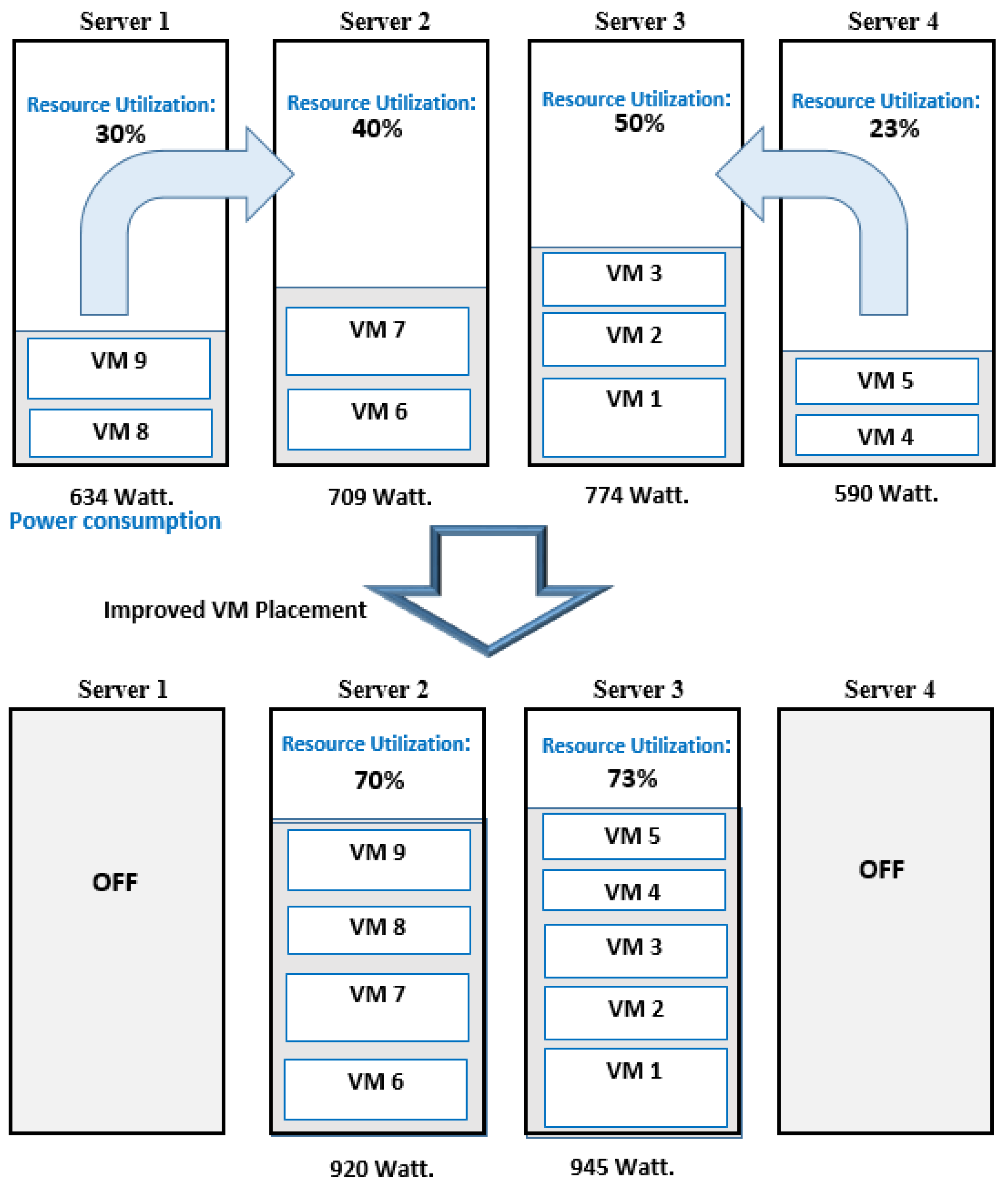

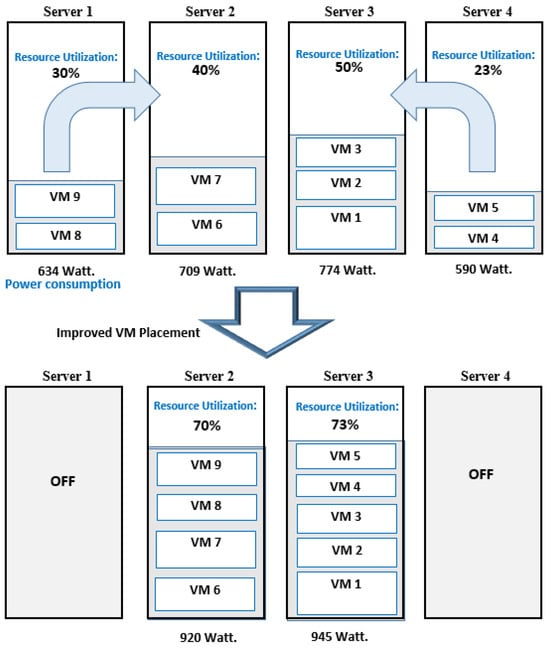

Figure 1 gives an example of improved VM placement aiming at reducing the power consumption and resource wastage. In this figure, 9 VMs are placed on 4 different PMs, each utilizing its resources only to some extent. It can be seen that the PMs’ resources are not utilized properly. One way to improve resource utilization is by migrating VMs from server 1 to server 2 and from server 4 to server 3 so that server 2 and server 3 become more utilized and well balanced in terms of resource usage, while servers 1 and 4 are switched off to save energy. All servers in the figure are assumed to be Lenovo ThinkSystem SD535 V3, whose power consumption at different utilization levels is stated in Table 2.

Figure 1.

An example of improved VM placement.

Table 2.

Power usage in watts of different servers at different utilization levels.

Incorporating the resource wastage factor into the energy-efficiency calculation aids in alleviating potential VM migrations caused by resource shortages on hosting servers. Additionally, it facilitates the efficient utilization of a PM’s resources and fosters a balance among remaining resources across multiple dimensions.

When two VMs operate on the same server, the server’s CPU and memory utilization are approximated by adding up the individual CPU and memory utilizations of the VMs. For instance, if one VM requests 30% CPU and 40% memory, and another VM requests 20% CPU and 20% memory, the combined utilization of the server hosting both VMs would be estimated at 50% CPU and 60% memory, respectively, reflecting the summation of the utilization vectors. To prevent the server’s CPU and memory usage from reaching full capacity (100%), it becomes necessary to set an upper limit on the resource utilization per server, which is denoted by a threshold value. This precautionary measure is rooted in the understanding that operating at full capacity can lead to significant performance deterioration and also because VM live migration consumes some CPU processing resources on the migrating node.
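The summation and threshold check described above can be sketched as follows. This is a minimal illustration, not the authors' implementation: the function names and the 90% threshold are assumptions, since the text does not state the threshold value.

```python
def combined_utilization(vm_demands):
    """Estimate host utilization as the element-wise sum of VM demands.

    vm_demands: list of (cpu_percent, ram_percent) tuples.
    """
    cpu = sum(demand[0] for demand in vm_demands)
    ram = sum(demand[1] for demand in vm_demands)
    return cpu, ram


def within_threshold(vm_demands, threshold_percent=90):
    """Return True if both dimensions stay at or below the upper limit.

    threshold_percent is a hypothetical value chosen for illustration.
    """
    cpu, ram = combined_utilization(vm_demands)
    return cpu <= threshold_percent and ram <= threshold_percent


# The example from the text: (30% CPU, 40% RAM) plus (20% CPU, 20% RAM).
print(combined_utilization([(30, 40), (20, 20)]))  # (50, 60)
```

Integer percentages are used here only to keep the arithmetic exact.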

3.1. System Model

We model the power consumption, SLA and resource wastage of a data center in the following subsections. Following the earlier works of [1,3], we exploit the resource wastage factor to devise the resource usage at the PM level. Table 3 includes the list of notations used in this paper for better readability.

Table 3.

List of notations used in this paper.

3.1.1. Power Consumption Modeling

The power consumption of a PM is modeled as a linear function of its CPU utilization:

$$P_h = P_h^{idle} + \left(P_h^{max} - P_h^{idle}\right) U_h^{cpu} \qquad (1)$$

where $P_h^{idle}$ and $P_h^{max}$ are the power usage of host h in the idle state and at 100% utilization, respectively, and $U_h^{cpu}$ denotes the normalized CPU utilization. The overall power draw of the data center is the sum of the power draw of all its PMs.
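A minimal sketch of this linear power model is given below. The function names are illustrative, and the 400 W idle figure in the usage lines is a hypothetical placeholder rather than a value from Table 2; only the 1321 W maximum for the SD535 V3 comes from the text.

```python
def host_power(p_idle, p_max, cpu_util):
    """Linear power model: idle draw plus a share of the dynamic range.

    cpu_util is the normalized CPU utilization in [0, 1].
    """
    if not 0.0 <= cpu_util <= 1.0:
        raise ValueError("cpu_util must be normalized to [0, 1]")
    return p_idle + (p_max - p_idle) * cpu_util


def datacenter_power(hosts):
    """Sum the power draw over (p_idle, p_max, cpu_util) tuples."""
    return sum(host_power(p_idle, p_max, util) for p_idle, p_max, util in hosts)


# Hypothetical idle power of 400 W; 1321 W is the SD535 V3 maximum.
half_loaded = host_power(400.0, 1321.0, 0.5)
total = datacenter_power([(400.0, 1321.0, 0.5), (400.0, 1321.0, 0.0)])
```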

3.1.2. Power Efficiency Modeling

If we consider a physical machine h with a CPU capacity of $C_h$ (expressed in Million Instructions Per Second, or MIPS) and a maximum power consumption of $P_h^{max}$ (measured in watts) reflecting the overall power draw from all the PM dimensions (CPU, RAM, network, and disk storage), we can define the power efficiency of h as follows:

$$PE_h = \frac{C_h}{P_h^{max}} \qquad (2)$$

This metric denotes the computational power provided by a specific physical machine per watt of power consumed. For instance, the machine in our running example from Table 2, the Lenovo ThinkSystem SD535 V3, provides a computational power of 288,000 MIPS at a maximum power consumption of 1321 watts, yielding a power efficiency score of 218.02. The Lenovo ThinkSystem SR850 V3 offers a computational power of 456,000 MIPS at a maximum power consumption of 1916 watts, resulting in a power efficiency score of 237.99. Consequently, the SR850 V3 is more power-efficient than the SD535 V3.
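The two scores quoted above follow directly from dividing capacity by maximum power, as this short check shows. The function name is illustrative.

```python
def power_efficiency(cpu_mips, p_max_watts):
    """MIPS delivered per watt at maximum power draw."""
    return cpu_mips / p_max_watts


sd535 = power_efficiency(288_000, 1321)  # about 218.02
sr850 = power_efficiency(456_000, 1916)  # just under 238, quoted as 237.99
```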

3.1.3. Resource Wastage Modeling

The available remaining resources on individual servers can significantly differ across various VM placement strategies. To effectively harness multidimensional resources, the subsequent equation is employed to quantify the potential expense incurred from the unused resources of a single server:

$$RW_h = \frac{\left|R_h^{cpu} - R_h^{ram}\right| + \varepsilon}{U_h^{cpu} + U_h^{ram}} \qquad (3)$$

where $U_h^{cpu}$ and $U_h^{ram}$ are the normalized used CPU and RAM, while $R_h^{cpu}$ and $R_h^{ram}$ indicate the normalized remaining CPU and RAM. The idea of having $\varepsilon$ is that resource wastage cannot be entirely eliminated even when hosts are fully utilized, as there is always some degree of resource waste. Additionally, it helps to avoid zero values when both CPU and RAM have identical normalized utilization rates. $\varepsilon$ can be set to any small positive number, such as 0.0001.

The above equation helps identify cases where one can improve resource utilization across various dimensions and ensure a balanced distribution of remaining resources on each server. This is achieved by prioritizing PMs that give the lowest average resource waste in all considered dimensions when hosting certain VMs. We exploit the $RW_h$ factor to determine the normalized resource usage for each PM, where the one with more balanced resources across all dimensions has a higher priority to host VMs.
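As a sketch of how this factor behaves, the function below computes the wastage from normalized CPU and RAM usage, assuming the form described above: the absolute difference of the remaining shares plus a small constant, divided by the sum of the used shares. The function name and the guard for an idle host are illustrative additions.

```python
def resource_wastage(used_cpu, used_ram, eps=1e-4):
    """Resource wastage factor for one host.

    used_cpu and used_ram are normalized utilizations in [0, 1].
    """
    if used_cpu + used_ram == 0:
        raise ValueError("wastage is undefined for a completely idle host")
    remaining_cpu = 1.0 - used_cpu
    remaining_ram = 1.0 - used_ram
    return (abs(remaining_cpu - remaining_ram) + eps) / (used_cpu + used_ram)


balanced = resource_wastage(0.8, 0.8)    # close to zero
imbalanced = resource_wastage(0.9, 0.3)  # close to 0.5
```

A host whose CPU and RAM are consumed in equal proportion scores near zero, while a host that is nearly out of CPU but has most of its RAM free scores much higher, which is why the balanced host is preferred.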

3.1.4. SLA Modeling

SLA violation indicates situations in which a host cannot fulfill a specific VM’s request for a certain amount of resources at a given time. We express this with the following equation:

$$SLAV_h = \frac{1}{T_h^{a}} \sum_{t=1}^{T} x_h^{t} \qquad (4)$$

where T denotes the total time required to execute the input workload, and $x_h^{t}$ represents the binary value for host h, which is 0 if no violation occurs at time t and 1 otherwise. $T_h^{a}$ represents the overall time in which host h was active. A violation occurs when the host experiences 100% CPU utilization.
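A minimal sketch of this metric, assuming it is the fraction of a host's active time steps spent at 100% CPU utilization, is shown below. The trace format (one utilization sample per time step, with `None` marking steps where the host is switched off) is a hypothetical convention chosen for illustration.

```python
def sla_violation(cpu_trace, full=1.0):
    """Fraction of active time steps in which the host is at full CPU.

    cpu_trace: per-step normalized CPU utilization, None when inactive.
    """
    active = [u for u in cpu_trace if u is not None]
    if not active:
        return 0.0  # a host that was never active cannot violate the SLA
    violations = sum(1 for u in active if u >= full)
    return violations / len(active)


# Four active steps, two of them saturated.
ratio = sla_violation([0.5, 1.0, None, 1.0, 0.7])
```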

3.2. Proposed Algorithm

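Let $EE_h$ represent our target function that encompasses the energy consumption, resource wastage, and SLA violations of host h within a data center.

As a first step toward our algorithm, we define $\Delta P_h^v$. This denotes the potential change in the power draw of host h if it also hosts VM v:

$$\Delta P_h^v = P_h^{after} - P_h^{before} \qquad (5)$$

where $P_h^{before}$ and $P_h^{after}$ are the power draw of host h before and after placing v, computed with the power model of Section 3.1.1.

Similarly, we define $\Delta SLA_h^v$ as the potential SLA violation change of a host when it starts hosting a new VM v:

$$\Delta SLA_h^v = SLAV_h^{after} - SLAV_h^{before} \qquad (6)$$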

We next define the overall improvement of the host as follows:

We are using only non-zero improvement components, so we can use this in the denominator of our overall target function:
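One way to write the overall improvement and the target function is sketched below. The symbols $I_h^v$ and $EE_h$ and the product over the non-zero components are assumptions made for illustration, with $PE_h$ the power efficiency and $RW_h$ the resource wastage of host h. The product form is chosen because, when the SLA term is zero, it reduces to $PE_h (1 - RW_h) / \Delta P_h^v$, which reproduces the value reported for the SD535 server in Section 4 (218.02 × 0.942 / 27.2 ≈ 7.55).

```latex
% Overall improvement: product of the non-zero change components (assumed form).
I_h^v = \prod_{\substack{x \in \{\Delta P_h^v,\ \Delta SLA_h^v\} \\ x \neq 0}} x

% Target function: power efficiency times normalized resource usage,
% divided by the overall improvement (assumed form).
EE_h = \frac{PE_h \,\bigl(1 - RW_h\bigr)}{I_h^v}
```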

Now, we are ready to discuss Algorithm 1, which builds on the previously discussed equations. Like any typical VMP algorithm, it takes a list of PMs and a list of VMs as input and produces a set of VM–PM pairs as output, in which each VM is assigned to a host as guided by our target function.

First, the VMs are sorted in decreasing order according to CPU demands. For each VM in the VM list, the algorithm checks the set of active servers that have enough resources to accommodate the VM request and calculates the energy efficiency for each one based on Equation (8). To calculate $\Delta P_h^v$ for each host, the algorithm determines the variation of power consumption before and after hosting VM v using Equation (5). Thus, the host with the lowest increase in power consumption has a better chance of hosting the VM. The $PE_h$ factor helps with choosing the server with the highest power efficiency. This means that the host with a higher $PE_h$ is preferred, since it can host more VMs with a lower increase in power consumption compared to other PMs. This leads to minimizing the overall power consumption of the data center by utilizing as few hosts as possible. The potential SLA violation impact before and after VM placement is calculated using Equation (6).

The resource usage factor of the nominated servers is calculated by considering two dimensions, CPU and RAM, based on Equation (3). This factor is important for selecting an appropriate PM in terms of resource utilization across multiple dimensions. Additionally, it aids in balancing the resource wastage of PMs by giving priority to those with higher resource utilization.

Algorithm 1.

VMP-ER placement algorithm.

The energy-efficiency metric for each server is obtained from the power efficiency, resource usage, and potential occurrence of SLA violations, which all contribute to the selection of PMs. The most suitable server is chosen to host the VM, and the VM–PM pairing is updated (line 4). If there is no active server to accommodate the VM, the algorithm selects the host with the best energy-efficiency metric from the set of inactive servers (line 6). The chosen host is then associated with the VM (line 7). Finally, when all the VMs are allocated to their hosts, the algorithm returns the final set of VM–PM pairings.
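The placement loop described above can be sketched in Python as follows. This is an illustrative reading, not the authors' Java implementation: the `Host` class, the `score` function, and the scoring form (power efficiency times normalized resource usage, divided by the power increase) are assumptions. The SLA term and the utilization thresholds are omitted for brevity, and an inactive host is assumed to add its idle power when switched on.

```python
from dataclasses import dataclass, field


@dataclass
class Host:
    name: str
    cpu: float     # CPU capacity in MIPS
    ram: float     # RAM capacity in MB
    p_idle: float  # watts at 0% CPU
    p_max: float   # watts at 100% CPU
    used_cpu: float = 0.0
    used_ram: float = 0.0
    vms: list = field(default_factory=list)

    @property
    def active(self):
        return bool(self.vms)

    def fits(self, vm):
        return (self.used_cpu + vm[0] <= self.cpu
                and self.used_ram + vm[1] <= self.ram)


def score(host, vm, eps=1e-4):
    """Assumed target: PE * (1 - RW) / delta_P, evaluated after placing vm."""
    u_cpu = (host.used_cpu + vm[0]) / host.cpu
    u_ram = (host.used_ram + vm[1]) / host.ram
    # |remaining_cpu - remaining_ram| equals |u_cpu - u_ram|.
    rw = (abs(u_cpu - u_ram) + eps) / (u_cpu + u_ram)
    pe = host.cpu / host.p_max
    delta_p = (host.p_max - host.p_idle) * vm[0] / host.cpu
    if not host.active:
        delta_p += host.p_idle  # assumption: switching on adds idle power
    return pe * (1.0 - rw) / delta_p


def place(vms, hosts):
    """vms: dict of id -> (cpu_mips, ram_mb). Returns dict of id -> host name."""
    placement = {}
    # Sort VMs by CPU demand, largest first.
    ordered = sorted(vms.items(), key=lambda kv: kv[1][0], reverse=True)
    for vm_id, vm in ordered:
        active = [h for h in hosts if h.active and h.fits(vm)]
        inactive = [h for h in hosts if not h.active and h.fits(vm)]
        candidates = active or inactive  # inactive hosts only as a fallback
        if not candidates:
            raise RuntimeError(f"no host can accommodate {vm_id}")
        best = max(candidates, key=lambda h: score(h, vm))
        best.used_cpu += vm[0]
        best.used_ram += vm[1]
        best.vms.append(vm_id)
        placement[vm_id] = best.name
    return placement
```

Trying active hosts first and falling back to inactive ones is what keeps the number of powered-on servers low; the score only decides among the candidates within each group.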

Let there be n VMs in set $V$ and m PMs in set $H$. The algorithm takes $O(n \log n)$ to sort the VMs in descending order based on resource demands. For each VM, the algorithm checks all active PMs to find a suitable host and then checks all inactive ones in case there is no active host available. All calculations for $EE_h$ take a constant amount of time ($O(1)$) and can be ignored. Thus, the overall time to sort the VMs and to check all hosts is $O(n \log n + n \cdot m)$. As the number of PMs is smaller than the number of VMs in the cloud system, we can express the complexity of the algorithm as $O(n \log n + n^2)$. As n increases, the overall complexity can be simplified to $O(n^2)$.

The fundamental concept of including the resource wastage factor $RW_h$ in the choice of PM is to enhance the utilization of a physical machine’s resources across various dimensions while ensuring a balanced distribution of remaining resources. It also helps mitigate unnecessary potential migrations due to resource contention, thus improving energy optimization for the whole data center.

Since $RW_h$ represents the normalized resource wastage of the host, ($1 - RW_h$) reflects the normalized resource usage, which we include in our calculation of the energy-efficiency factor ($EE_h$). This means that a host with an acceptable amount of resource usage is prioritized for hosting the VM, which leads to more utilized hosts while minimizing the number of powered-on servers overall. Decreasing the number of active hosts in turn reduces the total power consumption of the data center.

To reduce the data center’s power consumption, overloaded and underloaded machines are not considered for hosting. A physical machine is excluded when its utilization falls outside the permitted range, either exceeding an upper threshold or falling below a lower one. Consequently, VMs on underloaded PMs will be migrated to other hosts, enabling the shutdown of those machines to save energy.

4. Experimental Evaluation

A modern data center is characterized by its heterogeneity, comprising various generations of PMs with differing configurations, particularly in processor speed and capacity. These servers are typically integrated into the data center incrementally, often replacing older legacy machines within the infrastructure [25]. Thus, we considered a heterogeneous data center that contains two types of servers from different generations, as shown in Table 4.

Table 4.

Configuration of PMs.

Five types of VMs are used, as shown in Table 5. We set all VMs to have a single CPU core, and we assumed task independence, meaning that no communication is required among the VMs during task execution. To assess the performance of VMP-ER, we utilized various metrics including the number of active PMs, total power draw, and resource waste. In our experiment, we used real data taken from the PlanetLab workload trace [26]. The experiments were implemented in Java.

Table 5.

Configuration of VMs.

Let us first consider a scenario in which two types of Lenovo servers, SD535 and SR850, are available within a data center for hosting VMs. For simplicity, let us assume that the following resources are currently available in these servers: one active SD535 server has 2604 MIPS and 2800 MB available, while one active SR850 server has 3724 MIPS and 1600 MB available. Now, let $v_1$, $v_2$, and $v_3$ represent VMs awaiting placement within the data center. The resource requirements for each VM are as follows: $v_1$ (1800 MIPS, 1100 MB), $v_2$ (1600 MIPS, 1000 MB), and $v_3$ (1100 MIPS, 520 MB).

Through the analysis of various algorithms, it has been observed that some algorithms prefer SR850 servers, while others favor SD535 servers. For instance, the LBMBP [9] and EEVMP [13] algorithms would opt to place $v_1$ on an SR850 server due to its higher power efficiency factor compared to SD535. Subsequently, $v_2$ would be placed on an SD535 server, since the available resources on the SR850 server are insufficient. For $v_3$, EEVMP would activate a new physical machine to accommodate it, as neither SD535 nor SR850 has adequate resources.

In contrast, our algorithm takes resource usage into account while identifying the most energy-efficient PM among the active PMs. This involves calculating the ($1 - RW_h$) term for both PMs using Equation (3), resulting in values of 0.942 for SD535 and 0.861 for SR850. Subsequently, the power efficiency factor ($PE_h$) is computed using Equation (2), yielding values of 218.02 for SD535 and 237.99 for SR850. The difference in power consumption before and after placing $v_1$ is then determined, resulting in a difference of 27.2 watts for SD535 and 28.8 watts for SR850. Assuming no SLA violations occur, the E-efficiency factor ($EE_h$) for both servers is calculated to be 7.55 for SD535 and 7.14 for SR850, leading to the selection of SD535 to host $v_1$.
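The comparison above can be checked with a few lines of arithmetic, assuming that with no SLA violation the metric reduces to power efficiency times normalized resource usage, divided by the power increase. That form and the function name are assumptions; the inputs are the rounded values quoted in the text.

```python
def energy_efficiency(pe, usage, delta_p):
    """Assumed form of the metric when no SLA violation occurs."""
    return pe * usage / delta_p


sd535 = energy_efficiency(218.02, 0.942, 27.2)
sr850 = energy_efficiency(237.99, 0.861, 28.8)
```

With these rounded inputs the SD535 value matches the reported 7.55. The SR850 value comes out near 7.11 rather than the reported 7.14, which may reflect rounding of the quoted inputs; the ranking, and therefore the choice of SD535, is unchanged.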

For $v_2$ and $v_3$, since only the SR850 server possesses sufficient CPU and RAM resources, both VMs are hosted on the SR850 server without the need to activate another physical machine. Consequently, our proposed algorithm utilizes fewer PMs, leading to increased energy savings for the data center. Additionally, resource wastage is minimized as PM resources are utilized properly. While the LBMBP algorithm moves VMs to different PMs during the migration phase, it tends to result in higher energy consumption compared to the proposed algorithm. This is primarily because more energy is consumed during migrations. In data centers with diverse PMs and VM instances operating at scale, our algorithm is expected to deliver better performance.
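The capacity bookkeeping behind this scenario can be replayed with a short script. The helper below does not compute either algorithm's scores; it takes each algorithm's host preference for every VM as an input (an encoding of the narrative above) and only tracks the remaining CPU and RAM. All names are illustrative.

```python
def place_in_order(free, vms, preference):
    """Place each VM on its first preferred host with enough CPU and RAM.

    free: host -> (free_mips, free_mb); vms: vm -> (mips, mb);
    preference: vm -> list of hosts in the order the algorithm tries them.
    """
    free = {host: list(cap) for host, cap in free.items()}
    result = {}
    for vm, (cpu, ram) in vms.items():
        for host in preference[vm]:
            if free[host][0] >= cpu and free[host][1] >= ram:
                free[host][0] -= cpu
                free[host][1] -= ram
                result[vm] = host
                break
        else:
            result[vm] = "new PM"  # no active host has room
    return result


free = {"SD535": (2604, 2800), "SR850": (3724, 1600)}
vms = {"v1": (1800, 1100), "v2": (1600, 1000), "v3": (1100, 520)}

# Power-efficiency-first baselines try SR850 before SD535 for every VM.
baseline = place_in_order(free, vms, {v: ["SR850", "SD535"] for v in vms})
# VMP-ER selects SD535 for v1; the remaining VMs try SD535, then SR850.
vmp_er = place_in_order(free, vms, {v: ["SD535", "SR850"] for v in vms})
```

Under the baseline order, the SR850 is left with 500 MB and the SD535 with 1004 MIPS after the first two placements, so the third VM fits on neither. Placing the first VM on the SD535 instead leaves the SR850 with room for both remaining VMs.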

In addition, our proposed algorithm enhances the quality of service of running PMs and minimizes the threat of PM overload by considering both the current and future resource usage status. This approach ensures effective destination PM selection. We consider both the load and dependability of PMs. Thus, the selection of safer and balanced PMs for hosting results in properly utilized PMs and hence reduces the likelihood of potential migrations. This reduction significantly lowers the performance degradation due to migration. By enhancing the servers’ resource usage, the algorithm ensures that fewer PMs are able to host more VMs. This improvement becomes more apparent as the number of VMs increases.

For large instances of VMs like Amazon EC2 M5.4xlarge (24,800 MIPS, 64,000 MB) and M5.8xlarge (49,600 MIPS, 128,000 MB), the LBMBP algorithm initially places them on SR850 servers but later migrates them to SD535 servers to minimize resource wastage. This approach performs better with certain types of workloads where VMs are not fully utilized most of the time (workloads do not request 100% of their demands). However, the likelihood of SLA violations will increase when these VMs operate at full utilization. Considering the energy dissipation caused by VM migration, our proposed algorithm obtains better results than the LBMBP by achieving better resource balancing and energy optimization on average. This is accomplished by avoiding frequent VM migrations and incorporating the SLA metric into the PM selection process, thereby improving QoS.

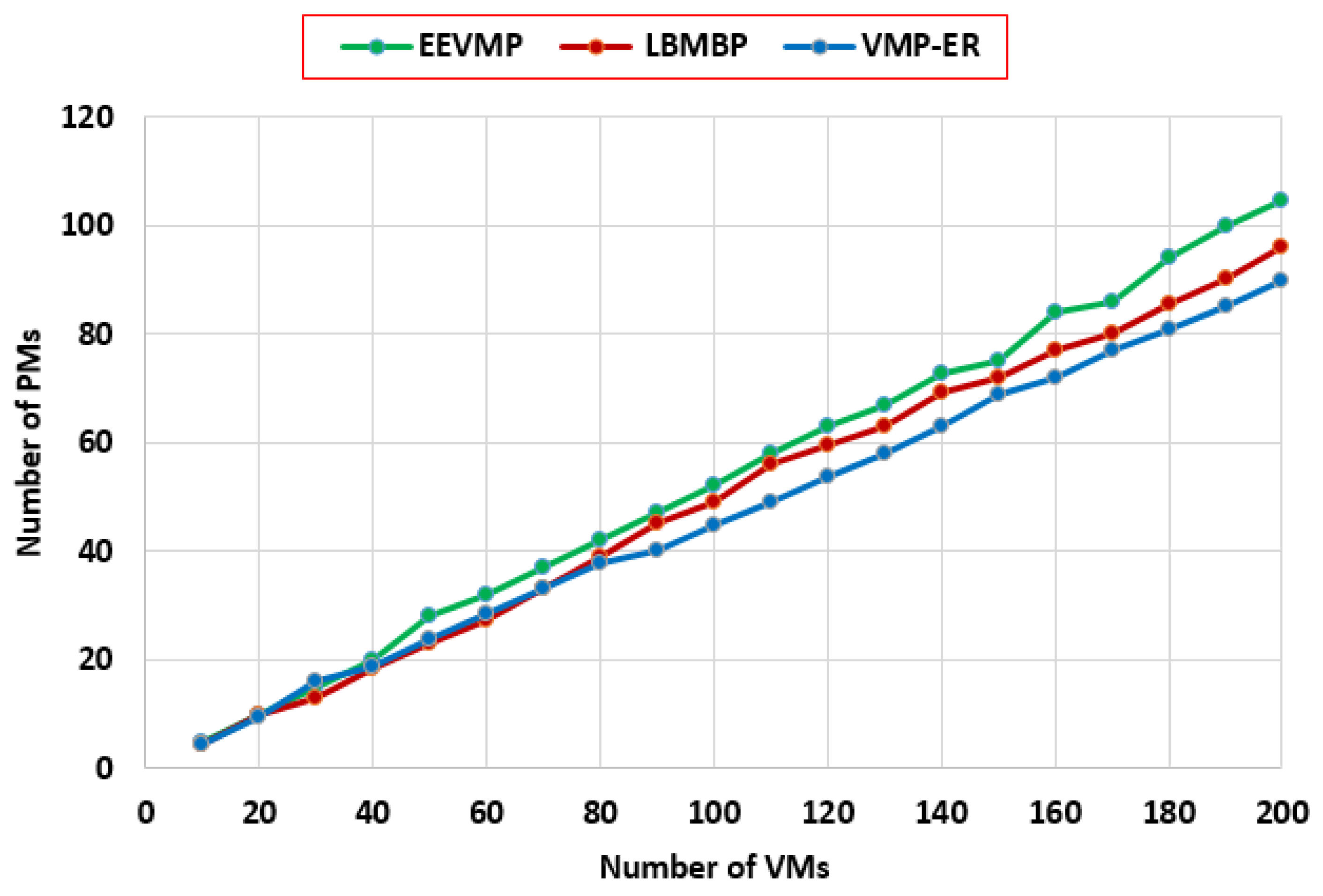

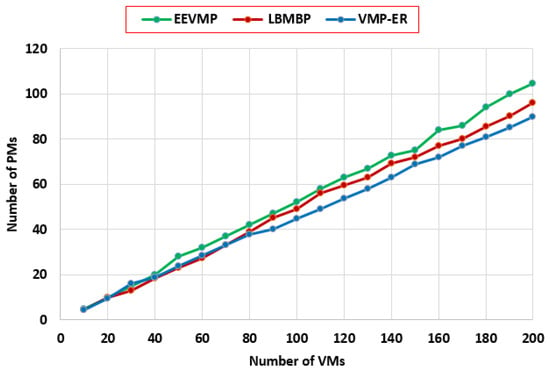

Figure 2 indicates the number of PMs required to host varying numbers of VMs. VMP-ER outperforms EEVMP and LBMBP in terms of the total number of active PMs due to its packing efficiency aiming at allocating more VMs to a single PM while taking load balancing into account. In contrast, EEVMP and LBMBP tend to place most VMs on SR850 servers first, as their decisions are based mostly on power efficiency. As the number of VM requests increases, both algorithms start to activate more PMs to accommodate these requests, as previously discussed in this section.

Figure 2.

The number of PMs required to host the given number of VMs.

EEVMP requires a higher number of PMs, as shown in the figure. This is primarily because EEVMP focuses on the lowest increase in power consumption when hosting particular VMs without considering resource wastage, often leading to the selection of an idle PM for initial VM placement. It is noteworthy that LBMBP involves two phases in VM placement: an initial placement phase and a replacement phase, where VMs are migrated to different PMs. In our comparison, we only consider the initial placement phase in the evaluation.

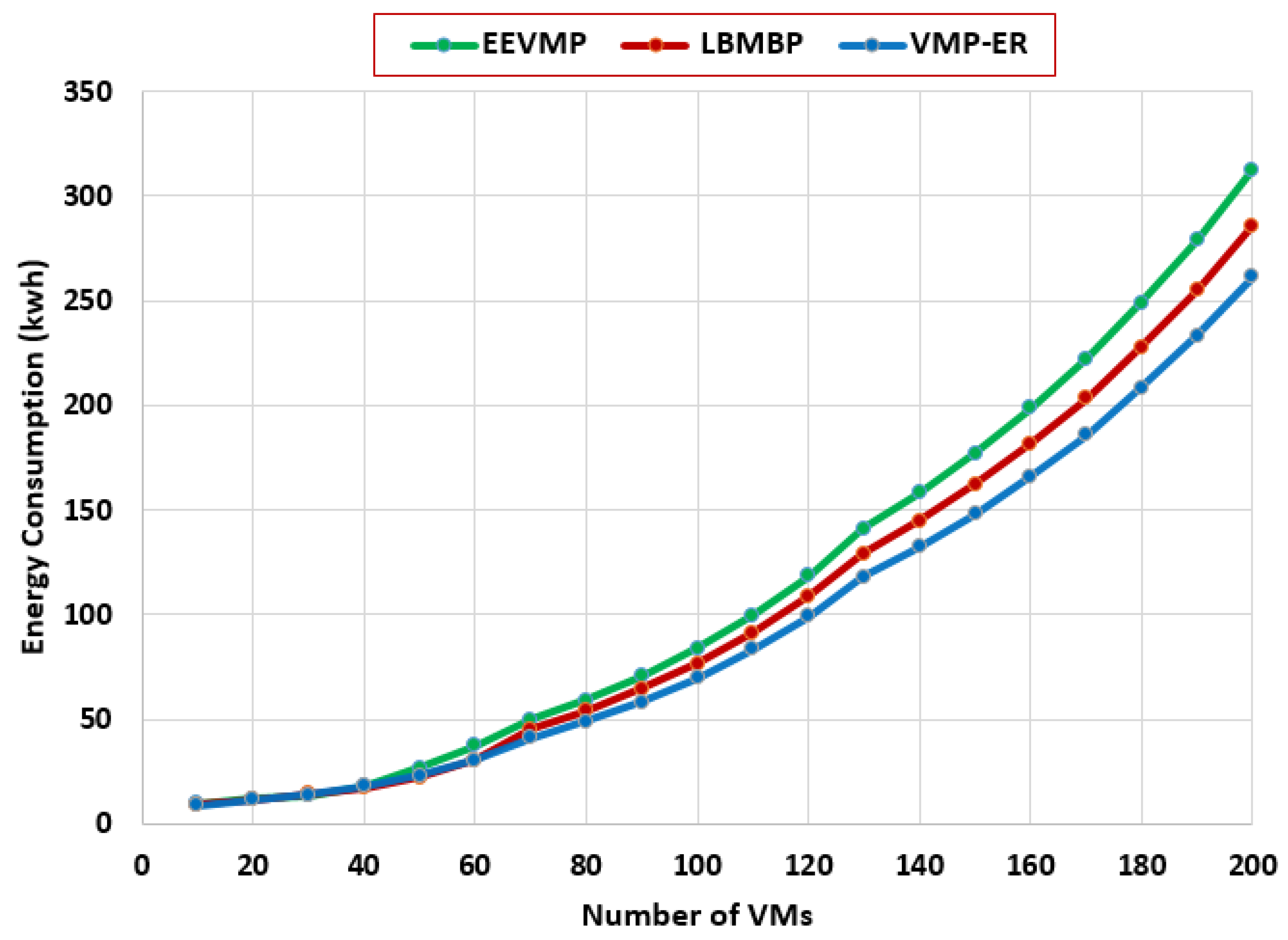

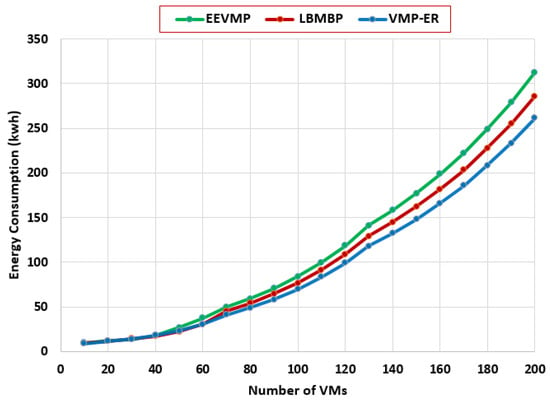

Figure 3 compares the energy consumption for different numbers of VMs, ranging from 10 to 200, considering different types of VMs, as shown in Table 5. From the figure, we observe similar performance for a small number of VMs. However, our algorithm begins to outperform EEVMP and LBMBP as the number of VMs increases.

Figure 3.

Energy consumption for given number of VMs.

Since VMP-ER requires fewer active PMs to accommodate VM requests, it results in lower energy consumption. Additionally, balancing the resource placement of VMs leads to more efficient utilization and avoids unnecessary potential migrations, positively impacting the overall data center energy consumption. In contrast, LBMBP tends to migrate VMs in its second phase to achieve better placement, which consequently results in additional energy dissipation due to migration. EEVMP shows higher energy consumption as the number of VMs increases because the algorithm primarily focuses on CPU resources when allocating VMs, neglecting other resources like RAM. This results in an increased number of migrations for workloads where VMs request a higher amount of RAM, thereby affecting energy consumption.

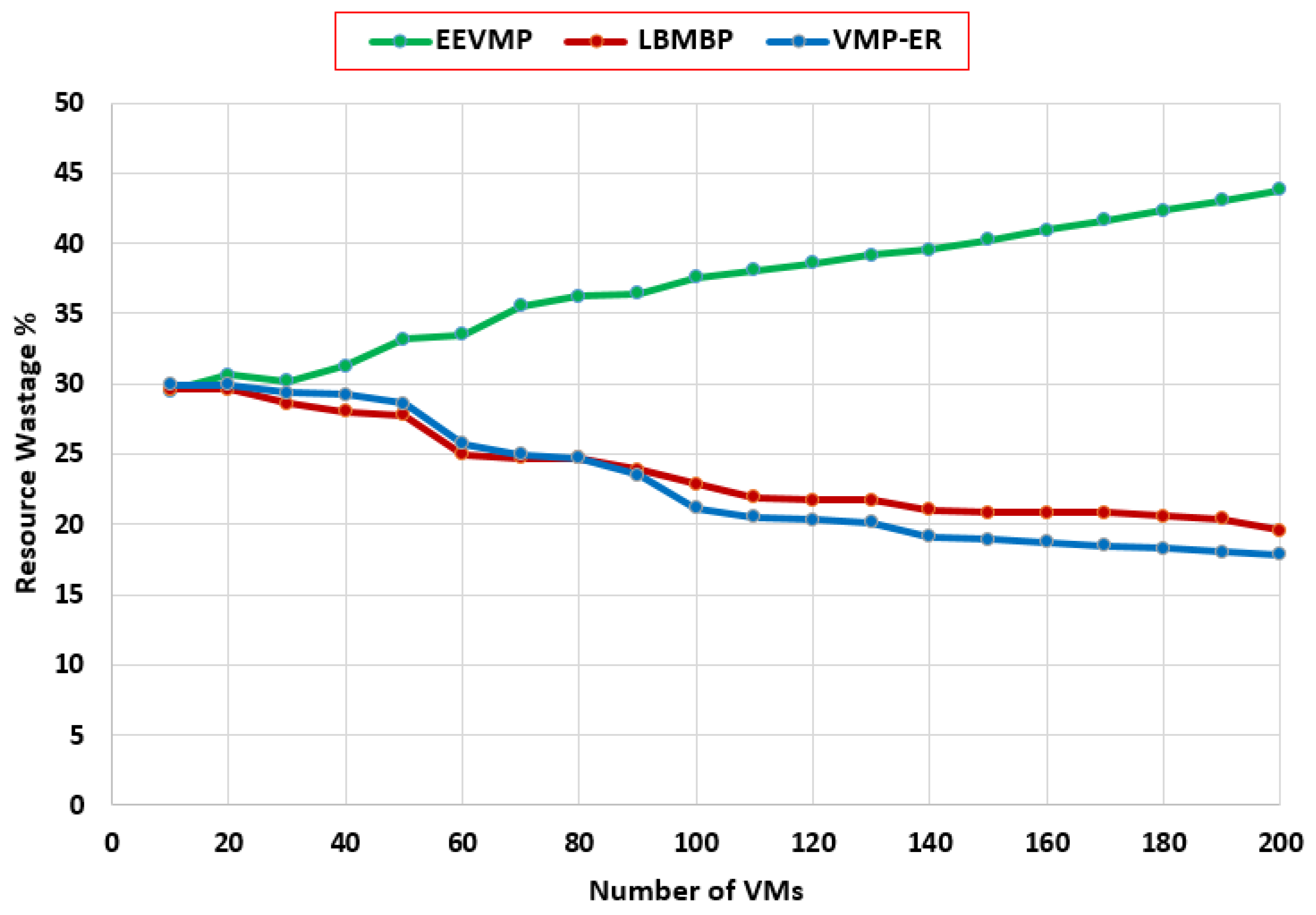

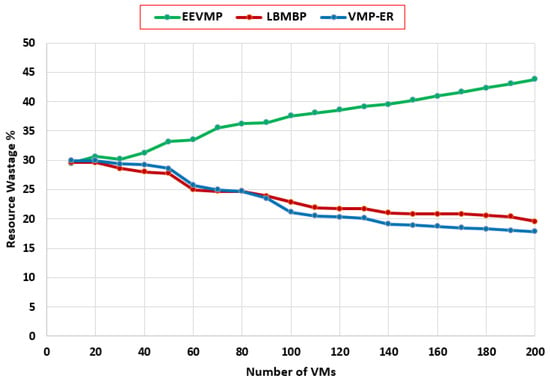

Figure 4 shows the percentage of average resource wastage (CPU and RAM) when hosting different numbers of VMs. A reduction in the number of active hosts indicates an improvement in the average utilization of resources. For a small number of VMs, LBMBP performs better than both EEVMP and VMP-ER, although VMP-ER and LBMBP both utilize resources effectively with minimal waste. However, as the number of VMs increases, our algorithm starts to yield better results. This is because VMP-ER maintains higher resource utilization by incorporating the resource usage factor at the host level, leading to more efficient host selection and overall energy saving in the data center.

Figure 4. Average resource wastage for the given number of VMs.
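To show how a per-PM wastage figure of this kind can be computed, the sketch below uses a formulation that is common in the VM placement literature: the gap between the normalized remaining CPU and RAM, plus a small constant, divided by the sum of the normalized CPU and RAM utilization. This is an assumption for illustration; the wastage model defined earlier in this paper takes precedence, and the function names and the value of `EPSILON` are hypothetical.

```python
EPSILON = 0.0001  # small positive constant; assumed value


def pm_wastage(u_cpu, u_ram, eps=EPSILON):
    """Wastage of one active PM from normalized CPU and RAM utilization."""
    if u_cpu + u_ram <= 0:
        raise ValueError("wastage is only defined for PMs hosting at least one VM")
    rem_cpu, rem_ram = 1.0 - u_cpu, 1.0 - u_ram
    # Unbalanced leftovers raise the numerator; low utilization shrinks the
    # denominator. Both increase the wastage value.
    return (abs(rem_cpu - rem_ram) + eps) / (u_cpu + u_ram)


def average_wastage(pms):
    """Mean wastage over active PMs, given (u_cpu, u_ram) pairs."""
    active = [(c, r) for c, r in pms if c + r > 0]
    if not active:
        return 0.0
    return sum(pm_wastage(c, r) for c, r in active) / len(active)


balanced = pm_wastage(0.8, 0.8)   # CPU and RAM used evenly
skewed = pm_wastage(0.9, 0.3)     # CPU nearly full, RAM mostly stranded
```

In this formulation, a PM whose CPU and RAM are consumed evenly scores close to zero, whereas a PM that exhausts one resource while leaving the other largely unused scores much higher, which is why balanced placement at the host level lowers the average.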

5. Conclusions

Given the diverse characteristics of PMs and VMs, along with the multidimensional resources and large-scale infrastructure of the cloud environment, the placement of virtual machines has emerged as a significant area of research. Our study addresses this challenge by introducing an effective algorithm aimed at minimizing power draw and resource waste.

The proposed algorithm reduces power consumption by decreasing the number of running PMs, minimizing VM migrations, and giving priority to energy-efficient PMs. Additionally, it minimizes resource wastage by optimizing resource allocation across physical machines and improving their utilization while ensuring a balanced distribution of resources at the PM level.

In future work, we will address the VM selection problem that arises when certain PMs become overloaded. In addition, we want to extend the algorithm to manage additional resource types, such as network and disk storage. We also plan to evaluate our proposed approach in a real cloud environment.

Author Contributions

Conceptualization, H.D.R. and G.K.; methodology, H.D.R.; validation, H.D.R.; formal analysis, H.D.R.; writing—original draft preparation, H.D.R.; writing—review and editing, H.D.R. and G.K.; visualization, H.D.R.; supervision, G.K. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Data Availability Statement

The data used in this research are publicly available and cited where relevant. Other supporting material is available upon request.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Azizi, S.; Zandsalimi, M.; Li, D. An energy-efficient algorithm for virtual machine placement optimization in cloud data centers. Clust. Comput. 2020, 23, 3421–3434.

- Sayadnavard, M.; Haghighat, A.; Rahmani, A. A multi-objective approach for energy-efficient and reliable dynamic vm consolidation in cloud data centers. Eng. Sci. Technol. Int. J. 2022, 26, 100995.

- Gao, Y.; Guan, H.; Qi, Z.; Hou, Y.; Liu, L. A multi-objective ant colony system algorithm for virtual machine placement in cloud computing. J. Comput. Syst. Sci. 2013, 79, 1230–1242.

- Panda, S.; Jana, P. An efficient request-based virtual machine placement algorithm for cloud computing. In Distributed Computing and Internet Technology, Proceedings of the 13th International Conference, ICDCIT 2017, Bhubaneswar, India, 13–16 January 2017; Proceedings 13; Springer: Cham, Switzerland, 2017; pp. 129–143.

- Gupta, M.; Amgoth, T. Resource-aware virtual machine placement algorithm for iaas cloud. J. Supercomput. 2018, 74, 122–140.

- Ghetas, M. A multi-objective monarch butterfly algorithm for virtual machine placement in cloud computing. Neural Comput. Appl. 2021, 33, 11011–11025.

- Khan, M. An efficient energy-aware approach for dynamic vm consolidation on cloud platforms. Clust. Comput. 2021, 24, 3293–3310.

- Beloglazov, A.; Buyya, R. Optimal online deterministic algorithms and adaptive heuristics for energy and performance efficient dynamic consolidation of virtual machines in cloud data centers. Concurr. Comput. Pract. Exp. 2012, 24, 1397–1420.

- Nehra, P.; Kesswani, N. Efficient resource allocation and management by using load balanced multi-dimensional bin packing heuristic in cloud data centers. J. Supercomput. 2023, 79, 1398–1425.

- Mahmoodabadi, Z.; Nouri-Baygi, M. An approximation algorithm for virtual machine placement in cloud data centers. J. Supercomput. 2024, 80, 915–941.

- Azizi, S.; Shojafar, M.; Abawajy, J.; Buyya, R. GRVMP: A Greedy Randomized Algorithm for Virtual Machine Placement in Cloud Data Centers. IEEE Syst. J. 2021, 15, 2571–2582.

- Zhou, Z.; Shojafar, M.; Alazab, M.; Abawajy, J.; Li, F. AFED-EF: An Energy-Efficient VM Allocation Algorithm for IoT Applications in a Cloud Data Center. IEEE Trans. Green Commun. Netw. 2021, 5, 658–669.

- Sunil, S.; Patel, S. Energy-efficient virtual machine placement algorithm based on power usage. Computing 2023, 105, 1597–1621.

- Fatima, A.; Javaid, N.; Sultana, T.; Aalsalem, M.Y.; Shabbir, S. An efficient virtual machine placement via bin packing in cloud data centers. In Advanced Information Networking and Applications: Proceedings of the 33rd International Conference on Advanced Information Networking and Applications (AINA-2019) 33, Matsue, Japan, 27–29 March 2019; Springer: Cham, Switzerland, 2020; pp. 977–987.

- Zolfaghari, R.; Sahafi, A.; Rahmani, A.M.; Rezaei, R. An energy-aware virtual machines consolidation method for cloud computing: Simulation and verification. Softw. Pract. Exp. 2022, 52, 194–235.

- Tarafdar, A.; Debnath, M.; Khatua, S.; Das, R.K. Energy and quality of service-aware virtual machine consolidation in a cloud data center. J. Supercomput. 2020, 76, 9095–9126.

- Masoudi, J.; Barzegar, B.; Motameni, H. Energy-aware virtual machine allocation in dvfs-enabled cloud data centers. IEEE Access 2021, 10, 3617–3630.

- Nagarajan, R.; Thirunavukarasu, R. A review on intelligent cloud broker for effective service provisioning in cloud. In Proceedings of the 2018 Second International Conference on Intelligent Computing and Control Systems (ICICCS), Madurai, India, 14–15 June 2018; pp. 519–524.

- Javadpour, A.; Sangaiah, A.K.; Pinto, P.; Ja’fari, F.; Zhang, W.; Abadi, A.M.H.; Ahmadi, H. An energy-optimized embedded load balancing using dvfs computing in cloud data centers. Comput. Commun. 2023, 197, 255–266.

- Gabhane, J.P.; Pathak, S.; Thakare, N. An improved multi-objective eagle algorithm for virtual machine placement in cloud environment. Microsyst. Technol. 2023, 30, 489–501.

- Lima, D.; Aquino, A.; Curado, M. A Virtual Machine Placement Algorithm for Resource Allocation in Cloud-Based Environments. In Workshop De Gerência E Operação De Redes E Serviços (WGRS); Sociedade Brasileira de Computação: Porto Alegre, Brazil, 2023; pp. 113–124.

- Nikzad, B.; Barzegar, B.; Motameni, H. Sla-aware and energy-efficient virtual machine placement and consolidation in heterogeneous dvfs enabled cloud datacenter. IEEE Access 2022, 10, 81787–81804.

- Li, Z.; Yu, X.; Yu, L.; Guo, S.; Chang, V. Energy-efficient and quality-aware vm consolidation method. Future Gener. Comput. Syst. 2020, 102, 789–809.

- Tlili, T.; Krichen, S. Best Fit Decreasing Algorithm for Virtual Machine Placement Modeled as a Bin Packing Problem. In Proceedings of the 2023 9th International Conference on Control, Decision and Information Technologies (CoDIT), Rome, Italy, 3–6 July 2023; pp. 1261–1266.

- Bai, W.; Xi, J.; Zhu, J.; Huang, S. Performance analysis of heterogeneous data centers in cloud computing using a complex queuing model. Math. Probl. Eng. 2015, 2015, 980945.

- Peterson, L.; Bavier, A.; Fiuczynski, M.; Muir, S. Experiences building planetlab. In Proceedings of the 7th Symposium on Operating Systems Design and Implementation, Seattle, WA, USA, 6–8 November 2006; pp. 351–366.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).