Frequency-Domain and Spatial-Domain MLMVN-Based Convolutional Neural Networks

Abstract

1. Introduction

2. MVN and MLMVN Fundamentals

2.1. MVN

2.2. MLMVN

3. CNNMVN Learning Algorithm and Error Backpropagation

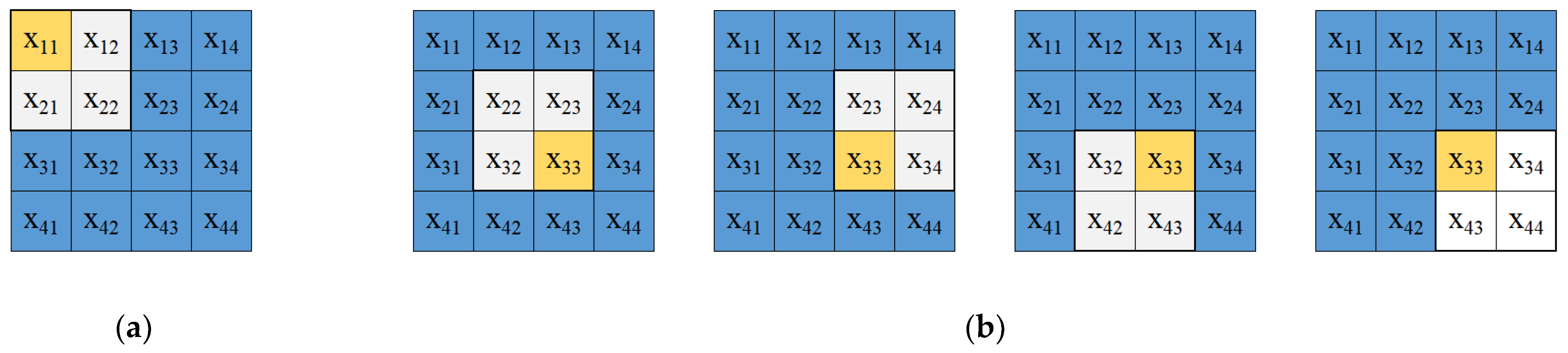

3.1. CNNMVN Feedforward Process

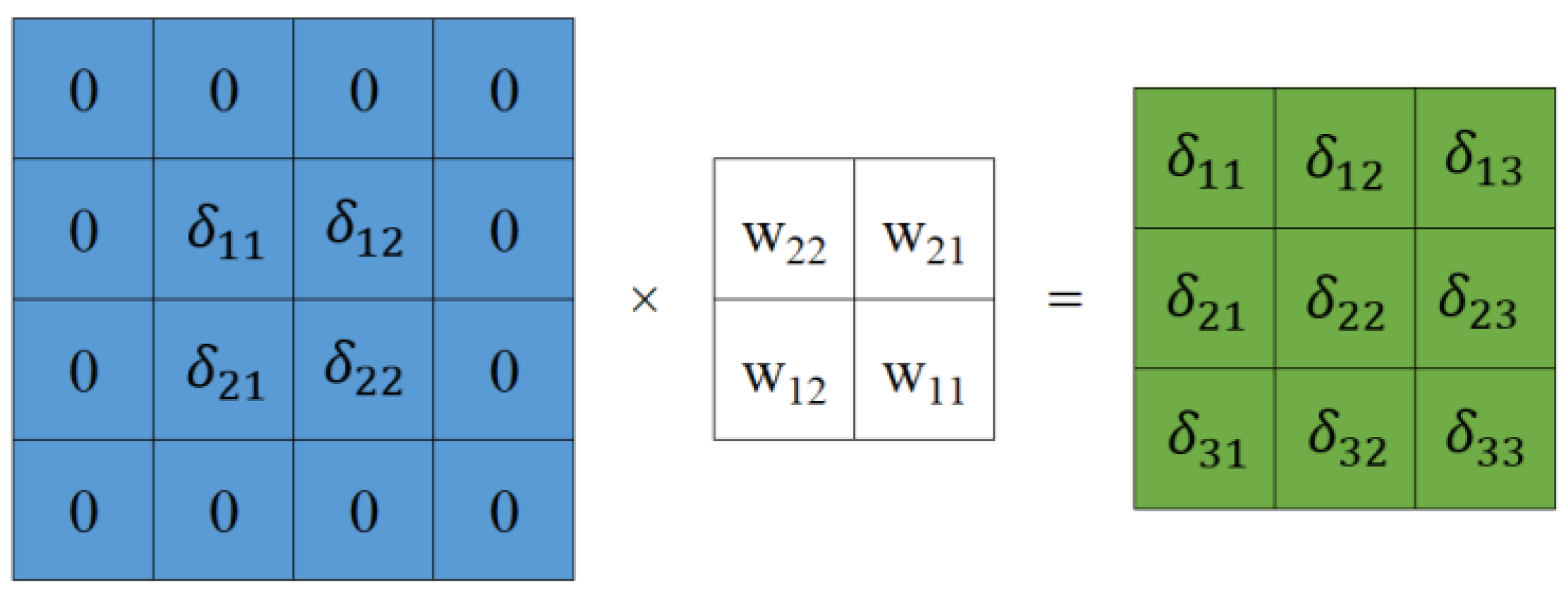

3.2. CNNMVN Error Backpropagation

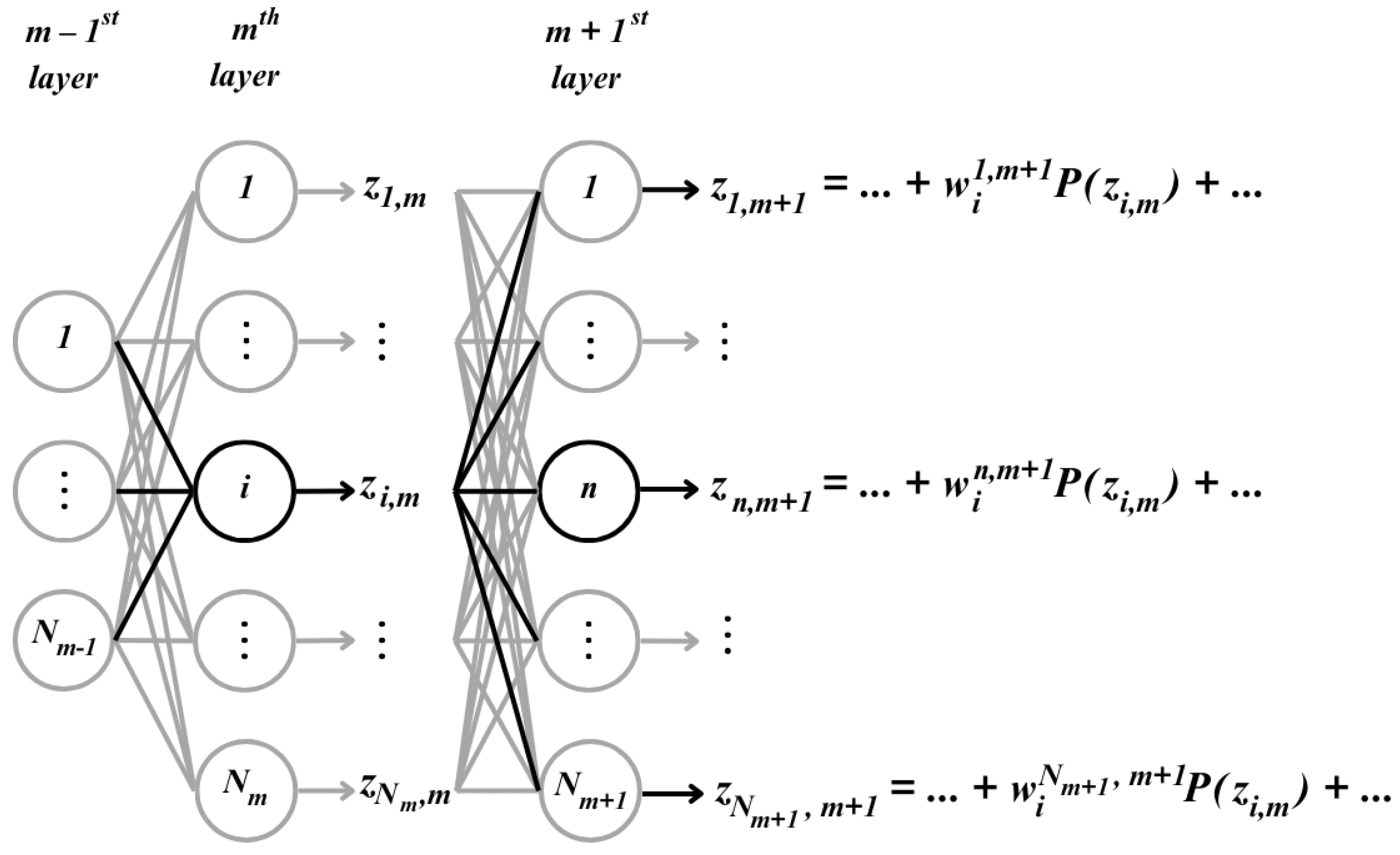

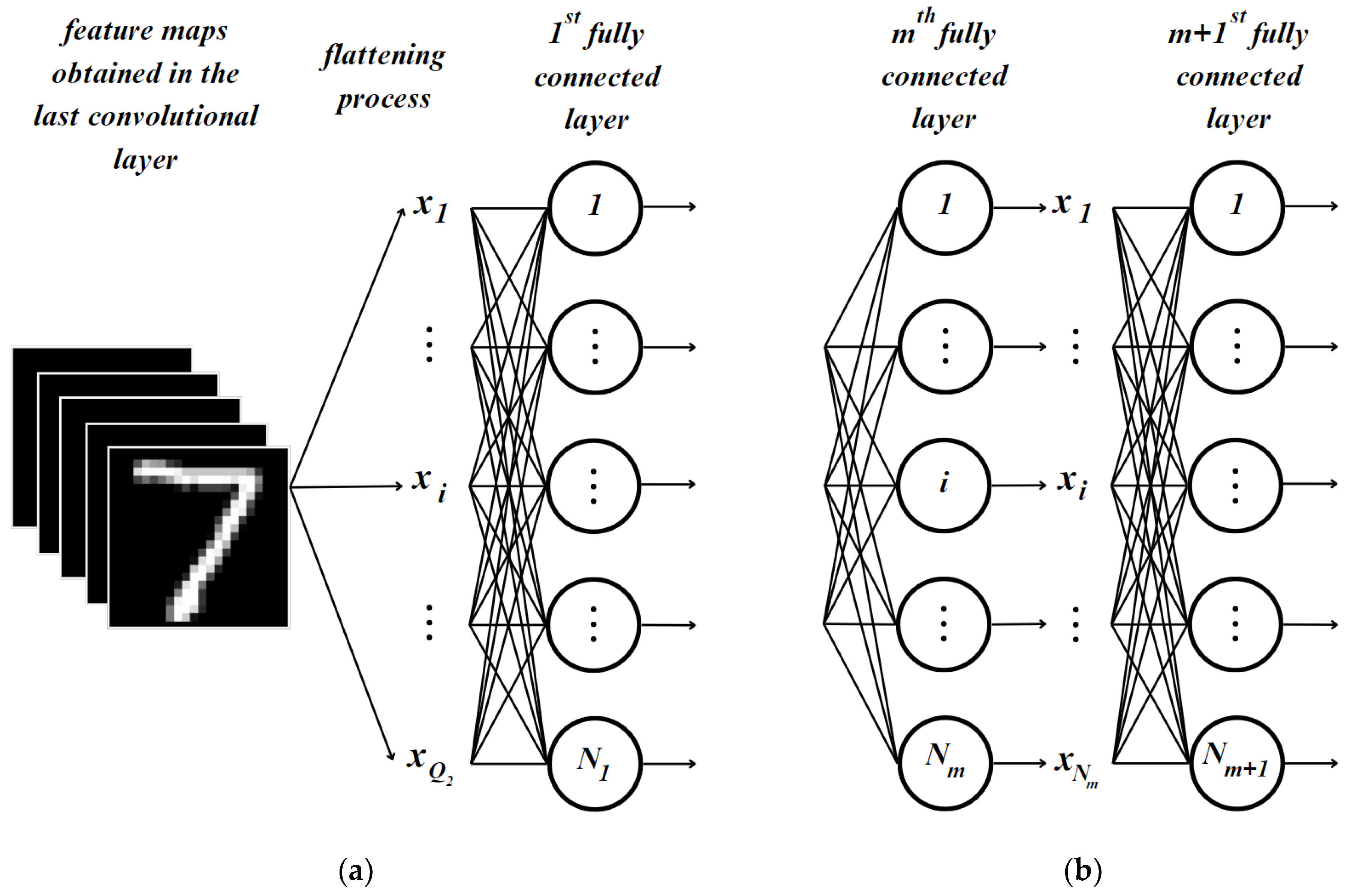

3.2.1. Error Backpropagation in the Fully Connected Part

3.2.2. Simple CNNMVN with Two Convolutional Layers

3.2.3. CNNMVN: The General Case

3.3. Adjustment of the Weights in CNNMVN

3.4. Pooling Layers for CNNMVN

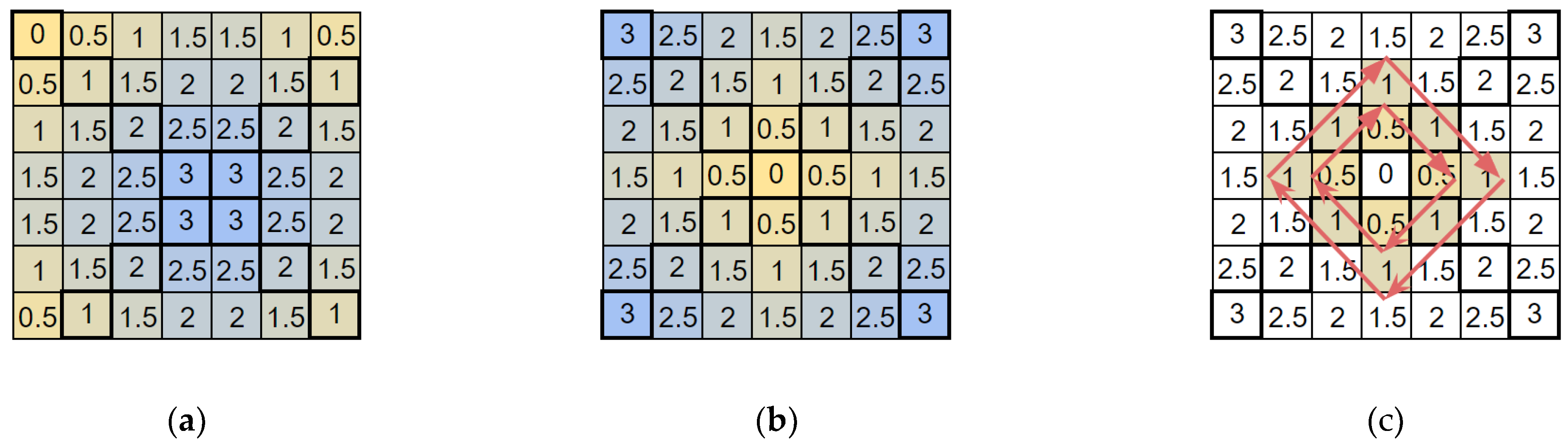

4. MLMVN as a Frequency-Domain CNN and the Frequency-Domain Pooling

4.1. MLMVN as a Frequency-Domain CNN

4.2. Frequency Domain Pooling

5. Simulation Results and Discussion

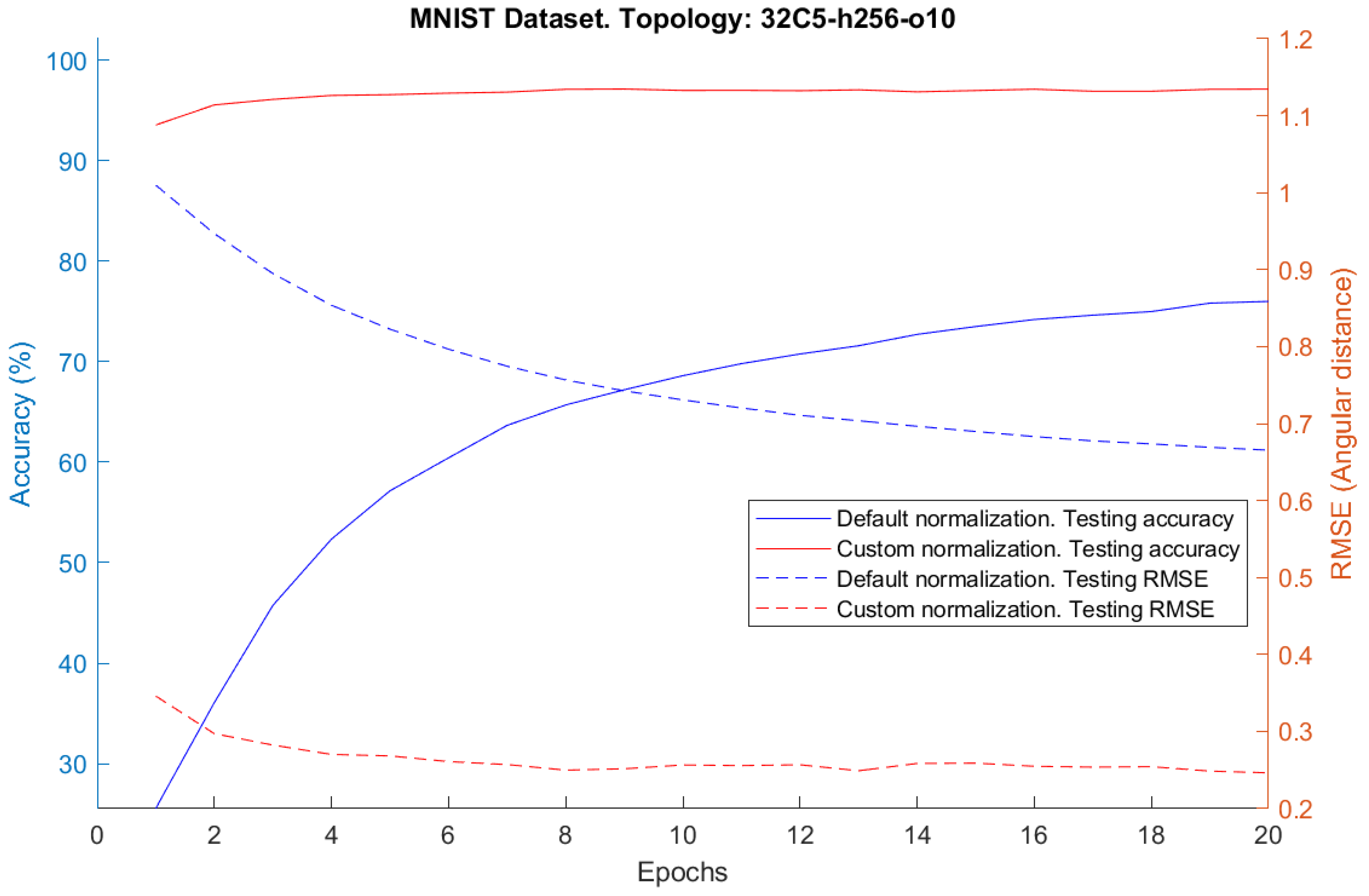

5.1. Custom Normalization for CNNMVN and Experiments

5.2. MLMVN as a CNN in the Frequency Domain

5.3. Comparative Analysis of Convolved Images Produced by CNNMVN and MLMVN as a CNN in the Frequency Domain

5.4. Comparison of the Capabilities of CNNMVN and MLMVN as a Frequency-Domain CNN with Those of Other Networks

6. Conclusions and Future Work

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Topology | Pooling Type | Type of Error Normalization | Epoch When Maximum Accuracy Reached | Accuracy (%) |
|---|---|---|---|---|
| 16C5-h128-o10 | None | Custom | 18 | 97.2 |
| 16C5-h256-o10 | None | Custom | 19 | 97.18 |
| 32C5-h128-o10 | None | Custom | 19 | 97.42 |
| 32C5-h256-o10 | None | Custom | 19 | 97.46 |
| 32C5-P2-h256-o10 | Max pooling | Custom | 10 | 96.36 |
| 32C5-P2-h256-o10 | Average pooling | Custom | 19 | 94.63 |
| 32C5-h256-o10 | None | Default | 20 | 75.99 |

| Topology | Pooling Type | Type of Error Normalization | Epoch When Maximum Accuracy Reached | Accuracy (%) |
|---|---|---|---|---|
| 16C5-h128-o10 | None | Custom | 8 | 86.91 |
| 16C5-h256-o10 | None | Custom | 16 | 86.99 |
| 32C5-h128-o10 | None | Custom | 13 | 87.7 |
| 32C5-h256-o10 | None | Custom | 16 | 88.03 |
| 32C5-P2-h256-o10 | Max pooling | Custom | 13 | 86.99 |
| 32C5-P2-h256-o10 | Average pooling | Custom | 8 | 84.78 |
| 32C5-h256-o10 | None | Default | 20 | 69.2 |

| Topology | Number of Frequencies Used | Epoch When Maximum Accuracy Reached | Accuracy (%) |
|---|---|---|---|
| h1024-o10 | Images transformed by (1) | 199 | 93.18 |
| h2048-o10 | Images transformed by (1) | 190 | 94.13 |
| h1024-o10 | 5 | 145 | 97.86 |
| h2048-o10 | 5 | 193 | 98.11 |
| h1024-o10 | 6 | 183 | 97.91 |
| h2048-o10 | 6 | 85 | 98.09 |
| h1024-o10 | 7 | 200 | 97.78 |
| h2048-o10 | 7 | 157 | 97.86 |
| h1024-h2048-o10 | 5 | 139 | 97.18 |
| h2048-h1024-o10 | 5 | 137 | 96.85 |
| h2048-h2048-o10 | 5 | 45 | 97.3 |
| h2048-h3072-o10 | 5 | 187 | 97.58 |
| h1024-h2048-o10 | 6 | 40 | 97.08 |
| h2048-h1024-o10 | 6 | 124 | 96.9 |
| h2048-h2048-o10 | 6 | 113 | 97.41 |
| h2048-h3072-o10 | 6 | 95 | 97.44 |
| h1024-h2048-o10 | 7 | 25 | 96.68 |
| h2048-h1024-o10 | 7 | 38 | 96.66 |
| h2048-h2048-o10 | 7 | 64 | 96.71 |
| h2048-h3072-o10 | 7 | 65 | 97.15 |

| Topology | Number of Frequencies Used | Epoch When Maximum Accuracy Reached | Accuracy (%) |
|---|---|---|---|
| h2048-o10 | Images transformed by (1) | 91 | 87.48 |
| h3072-o10 | Images transformed by (1) | 51 | 87.94 |
| h2048-o10 | 7 | 178 | 89.81 |
| h3072-o10 | 7 | 118 | 90 |
| h2048-o10 | 8 | 180 | 89.85 |
| h3072-o10 | 8 | 165 | 89.89 |
| h2048-o10 | 9 | 43 | 89.99 |
| h3072-o10 | 9 | 109 | 90 |
| h2048-h3072-o10 | 7 | 24 | 88.87 |
| h3072-h2048-o10 | 7 | 23 | 88.63 |
| h2048-h3072-o10 | 8 | 81 | 88.51 |
| h3072-h2048-o10 | 8 | 147 | 88.19 |
| h2048-h3072-o10 | 9 | 30 | 88.5 |
| h3072-h2048-o10 | 9 | 60 | 88.18 |

| Dataset | Topology | Number of Frequencies Used | Iteration * When Maximum Accuracy Reached | No. of Iterations | Accuracy (%) |
|---|---|---|---|---|---|
| MNIST | h2048-o10 | 5 | 3002 | 3238 | 98.2 |
| MNIST | h3072-o10 | 5 | 2274 | 2928 | 97.93 |
| Fashion MNIST | h2048-o10 | 7 | 4484 | 5351 | 89.54 |
| Fashion MNIST | h3072-o10 | 7 | 3719 | 4435 | 89.11 |


© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Aizenberg, I.; Vasko, A. Frequency-Domain and Spatial-Domain MLMVN-Based Convolutional Neural Networks. Algorithms 2024, 17, 361. https://doi.org/10.3390/a17080361
