Multimodal Video Summarization Using Machine Learning: A Comprehensive Benchmark of Feature Selection and Classifier Performance

Abstract

1. Introduction

- (a) keyframes/images [8], which represent extracted moments displayed sequentially and are often referred to as “static” summaries;

- (b) sets of video segments [9], frequently termed “dynamic” summaries and serving as an extension of the first type by retaining audio and visual motion elements;

- (c) graphical symbols [10], which complement other features with a form of graphical syntax to enhance user interpretation of summaries; and

- (d) automatically generated textual descriptions [11], designed to provide efficient content summaries for video materials.

- Stage 1 involves multimodal feature extraction and classification across video, audio, and fused modalities, providing a benchmark for algorithms designed to identify informative segments at the segment level; while

- Stage 2 focuses on the development and evaluation of a web service that leverages this classification capability. The service generates descriptive subtitles exclusively for the informative segments detected in Stage 1, using beam search decoding to keep the captions concise, coherent, and contextually consistent.

2. Related Work

2.1. Audio-Visual Integration in Video Summarization

2.2. Video Summarization Datasets Overview

2.3. Problem Definition and Motivation

- Feature Selection: Determining which features across each modality truly indicate summary-worthy content is nontrivial. Inadequate feature selection may propagate irrelevant or redundant information, reducing summary conciseness and informativeness.

- Cross-Modal Fusion and Alignment: Effective fusion strategies, especially those leveraging Transformers and attention mechanisms, are crucial to reconcile asynchronous or weakly correlated modalities. Yet, aligning diverse signals while maintaining computational tractability remains an open area of research.

- Utilization of External Knowledge: The integration of semantic, user, or task-specific knowledge has the potential to significantly enhance summary quality, but methods for knowledge injection and utilization are still in their infancy, often sacrificing efficiency or adaptability.

- Dataset Limitations: Many benchmark datasets (e.g., SumMe, TVSum) are relatively small-scale and may not fully reflect the diversity or complexity of real-world video data, hampering robust evaluation and generalization studies.

- Classifier Performance: The ultimate success of any machine-learning-driven summarization system depends not only on input representations and fusion strategies but also on the choice and training of classifiers to distinguish important segments—an area where comprehensive benchmarking is critically lacking [24].

- Comprehensive Evaluation of Multimodal Features: This study benchmarks and compares a diverse set of audio, visual, and combined (hybrid) segment-level feature representations for video summarization, quantifying their individual and joint contributions to summary informativeness and reliability.

- Systematic Classifier Benchmarking: A range of machine learning classifiers, including Naïve Bayes, K-nearest neighbours (KNN), logistic regression, decision trees, random forests, and XGBoost, are systematically evaluated on the task of multimodal segment classification, highlighting how classifier selection impacts summary accuracy, recall, and macro F1 performance metrics.

- Feature Selection and Ranking: The research applies recursive feature elimination (RFE) on the full feature set to identify and rank the most informative audio and video attributes, providing insights into which modalities and features most influence classifier performance and summary potential.

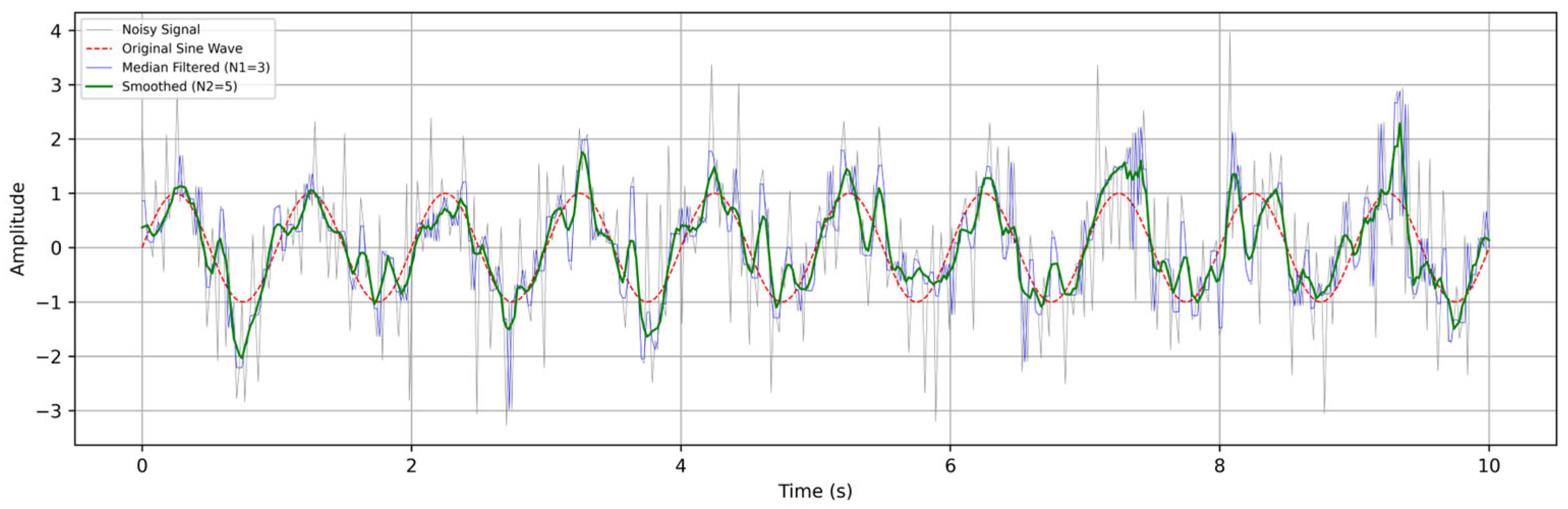

- Temporal Smoothing for Segment Continuity: Median filtering is implemented post-classification to improve the temporal smoothness of summary outputs by mitigating irregular transitions or overly fragmented segmentations that detract from the viewing experience.

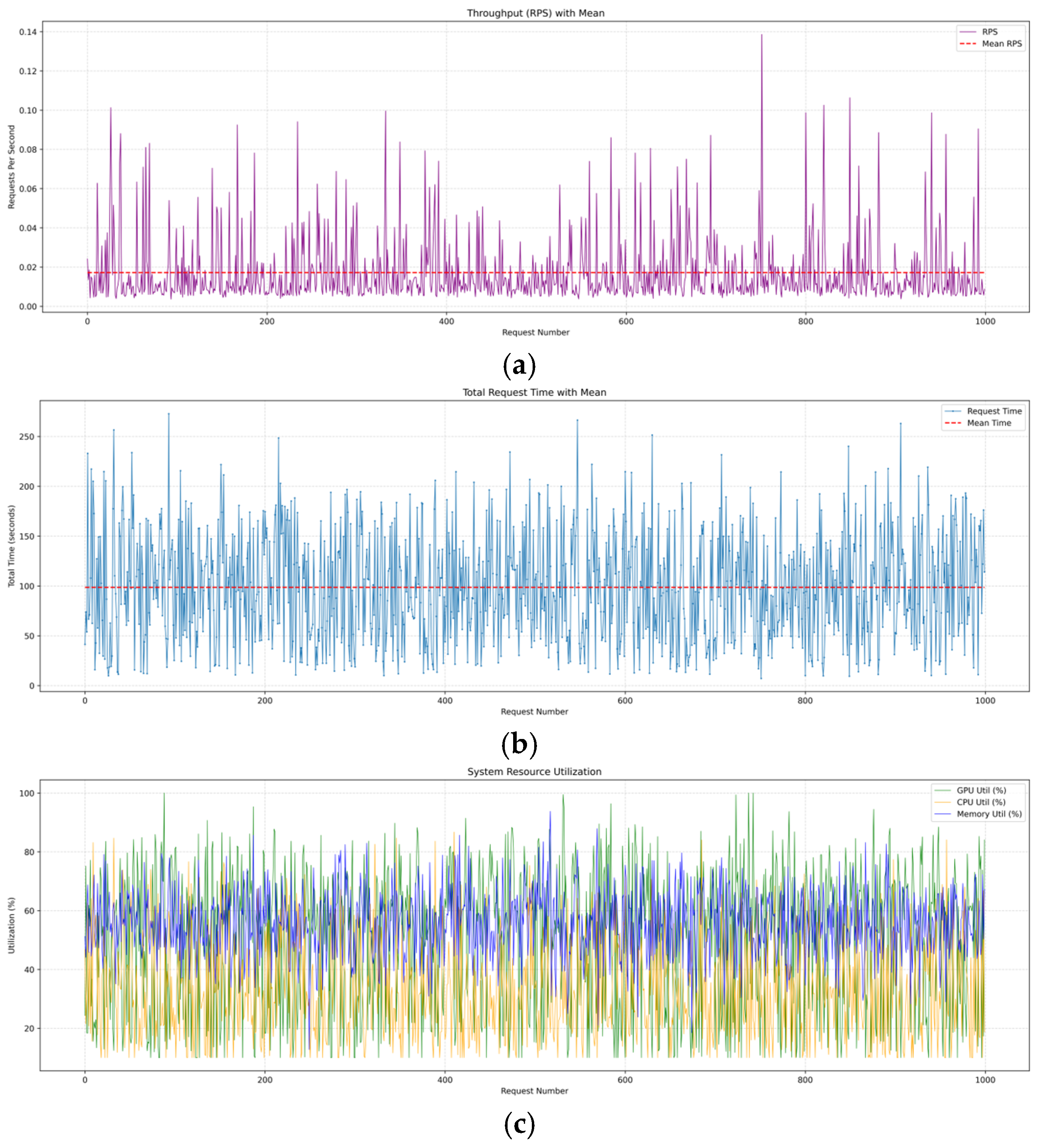

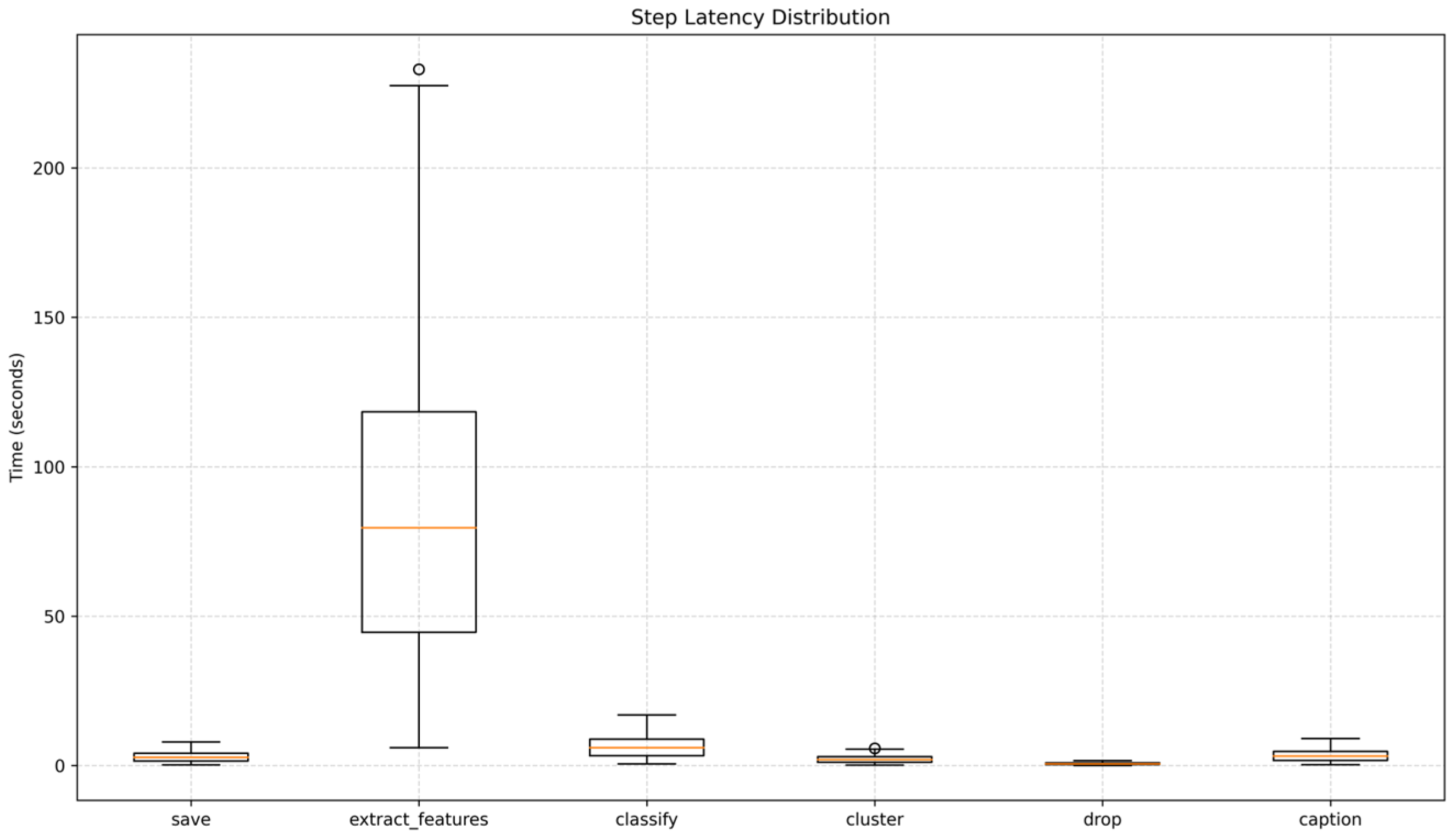

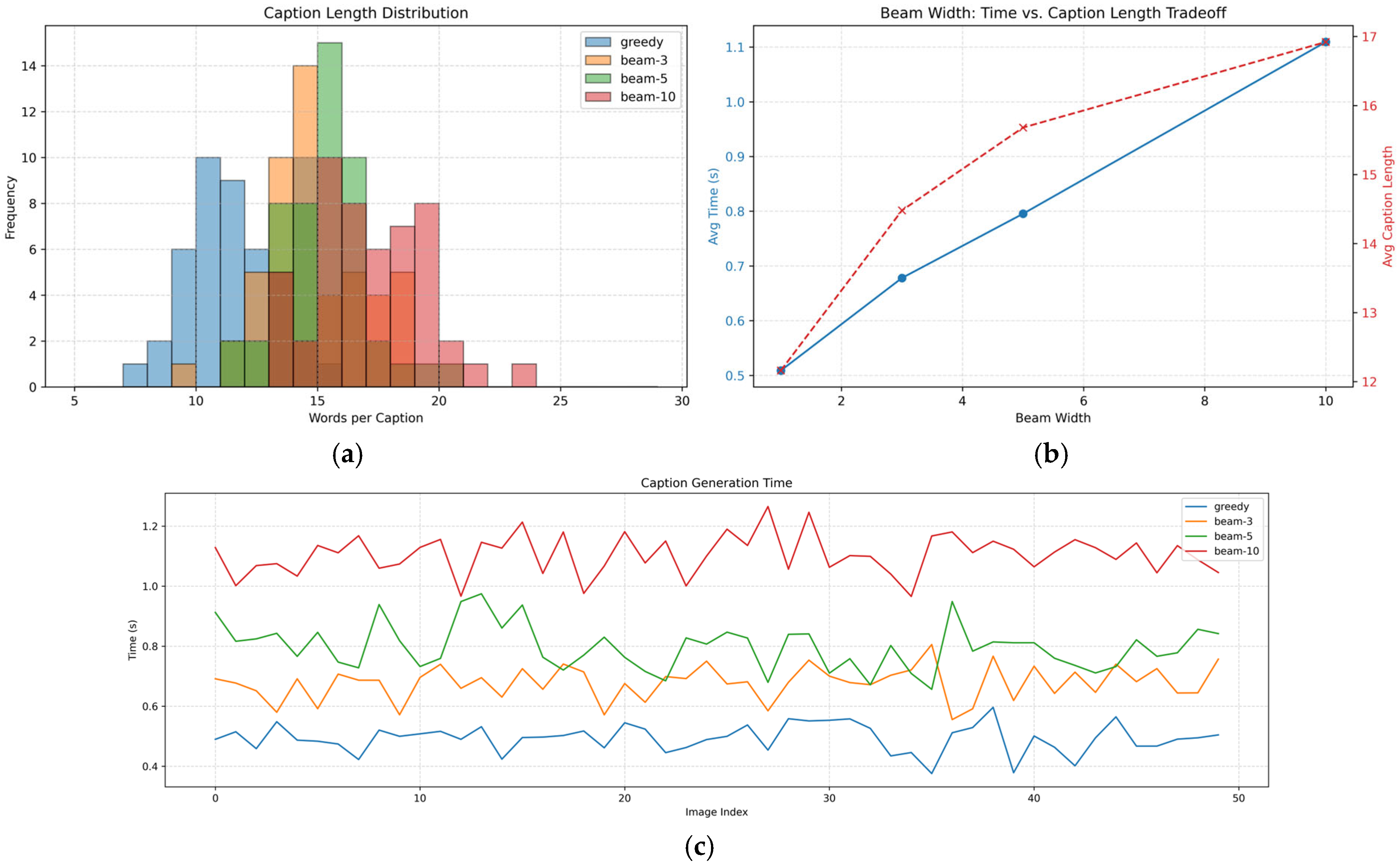

- Operational Metrics Benchmarking: The study introduces fine-grained tracking and evaluation of key operational metrics including step-wise latency (the execution time of each processing stage such as saving, feature extraction, clustering, dropping segments, and caption generation), total request time (overall end-to-end processing duration for each captioning request), simulated GPU and CPU utilization (reflecting computational load during processing), memory utilization to estimate runtime memory pressure, requests per second as a throughput measure, caption generation time comparisons between greedy and beam search decoding, caption length statistics as proxies for verbosity, and an analysis of beam width trade-offs that balances decoding complexity and caption richness.

- Unified Benchmarking Framework: The research integrates classification performance with operational system metrics, equipping practitioners with a holistic understanding of both model effectiveness and practical deployment considerations, ranging from computational efficiency to system scalability and real-world responsiveness.

- Generalizability and Guidance for Deployment: The results and framework established here are positioned to inform practical decisions for real-world video summarization deployment, enabling adaptive, resource-efficient, and user-oriented video content services in complex and high-demand multimedia environments.
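The recursive feature elimination (RFE) procedure listed among these contributions can be illustrated with a small NumPy sketch. It assumes a least-squares linear model on standardised features as the ranking estimator, chosen purely for illustration; the estimator, step size, and number of retained features used in the study are not specified here, and `rfe_rank` is a hypothetical helper name.

```python
import numpy as np


def rfe_rank(X: np.ndarray, y: np.ndarray, n_keep: int, step: int = 1):
    """Recursive feature elimination with a least-squares linear scorer.

    Repeatedly fits a linear model on the remaining standardised features and
    drops the `step` features with the smallest absolute coefficients until
    `n_keep` features remain. Returns (kept_indices, elimination_order).
    """
    X = (X - X.mean(axis=0)) / (X.std(axis=0) + 1e-12)
    remaining = list(range(X.shape[1]))
    eliminated = []
    while len(remaining) > n_keep:
        # Design matrix: remaining features plus an intercept column.
        A = np.column_stack([X[:, remaining], np.ones(len(X))])
        coef, *_ = np.linalg.lstsq(A, y, rcond=None)
        importance = np.abs(coef[:-1])  # ignore the intercept
        n_drop = min(step, len(remaining) - n_keep)
        weakest = np.argsort(importance)[:n_drop]
        # Pop from the highest position first so earlier positions stay valid.
        for pos in sorted(weakest.tolist(), reverse=True):
            eliminated.append(remaining.pop(pos))
    return remaining, eliminated


rng = np.random.default_rng(0)
X_demo = rng.normal(size=(200, 5))
y_demo = (X_demo[:, 2] + 0.1 * X_demo[:, 4] > 0).astype(float)  # feature 2 drives the label
kept, order = rfe_rank(X_demo, y_demo, n_keep=1)
print("kept:", kept, "eliminated (first to last):", order)
```

Each pass refits the model on the surviving features, so a feature's rank reflects its importance in the context of the features that remain, which is what distinguishes RFE from a single one-shot ranking.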

3. Materials and Methods

3.1. Dataset and Annotations

- Frame-level training data, in which the columns “id” and “labels” identify the video recordings and their categories, respectively, while “segment_start_times”, “segment_end_times”, “segment_labels”, and “segment_scores” define the annotated segments: their start and end times, their category labels, and scores indicating whether each segment belongs to the given category; and

- Video-level training data, characterized by the columns “mean_rgb” and “mean_audio”, which hold the averaged video and audio features, respectively, as floating-point arrays.

- Videos with fewer than five annotations (but at least three, the minimum threshold applied in this study);

- Videos with medium annotation counts (ranging between five and seven annotations); and

- Videos with higher annotation counts (exceeding seven annotations).
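The three annotation-count groups can be expressed as a simple bucketing rule. This is a minimal sketch: the helper `annotation_group` and the labels `"low"`, `"medium"`, and `"high"` are hypothetical, and treating videos with fewer than three annotations as excluded (returned as `None`) is an assumption based on the stated minimum threshold.

```python
from typing import Optional


def annotation_group(n_annotations: int) -> Optional[str]:
    """Assign a video to an annotation-count group (hypothetical labels).

    Thresholds follow the text: at least three annotations are required,
    fewer than five is "low", five to seven is "medium", more than seven is "high".
    """
    if n_annotations < 3:
        return None  # below the minimum threshold, assumed to be excluded
    if n_annotations < 5:
        return "low"
    if n_annotations <= 7:
        return "medium"
    return "high"


print({n: annotation_group(n) for n in (2, 4, 6, 9)})
# {2: None, 4: 'low', 6: 'medium', 9: 'high'}
```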

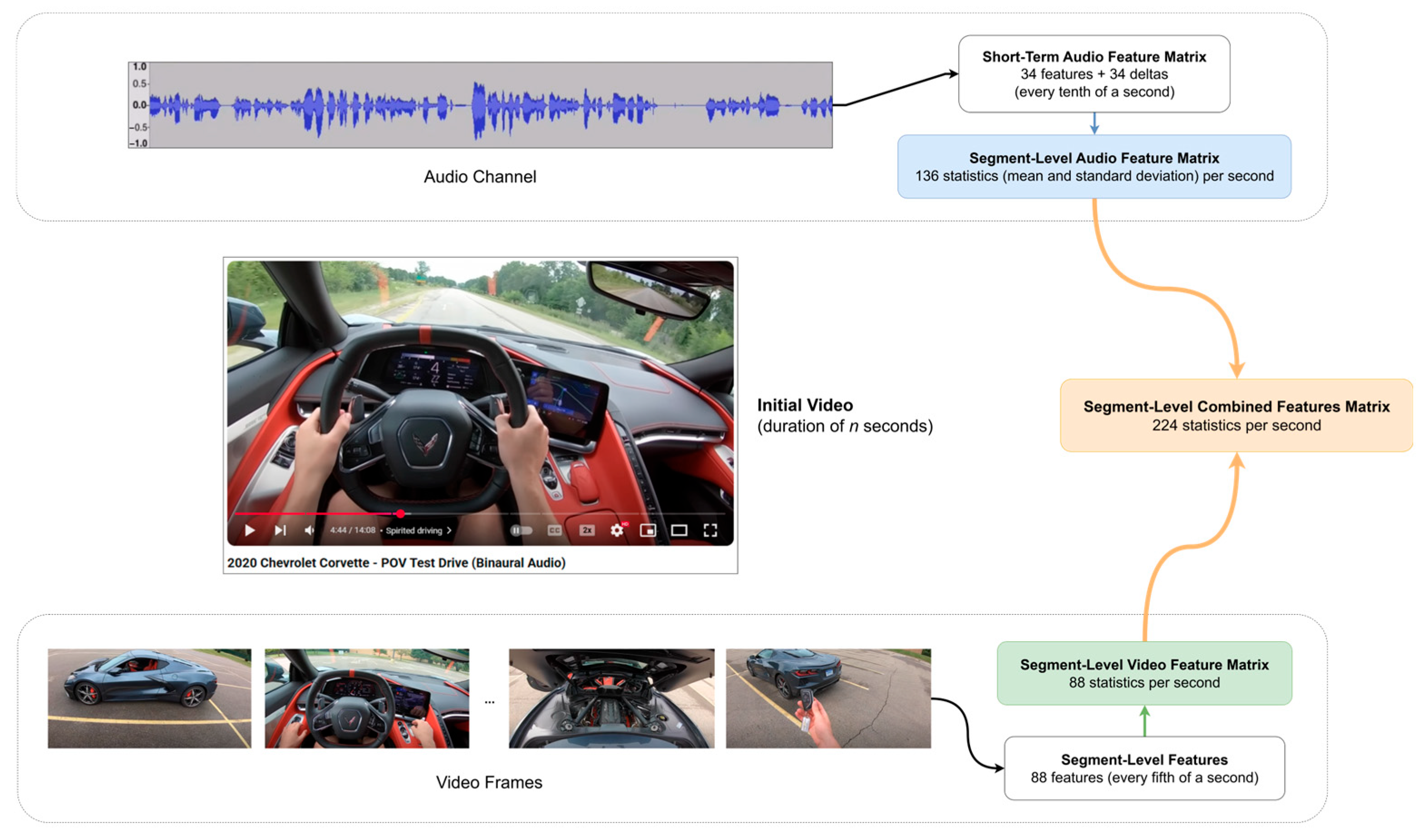

3.2. Feature Engineering

- 45 colour-related features: eight-bin histograms of the red, green, and blue values (one per channel), an eight-bin histogram of grayscale intensity values, a five-bin histogram of the per-pixel ratio between the maximum and mean of the RGB values, and an eight-bin histogram of saturation values

- Average absolute difference between two consecutive frames in grayscale (one feature)

- Two features for face detection using the OpenCV-based implementation of the Viola-Jones algorithm: the number of detected faces and the average ratio of the face bounding-box area to the total frame area

- Three features for optical flow estimation, calculated using the Lucas-Kanade method: the mean magnitude of the flow vectors, the standard deviation of the flow vector angles, and the ratio of these two features, which indicates the likelihood of camera movement such as tilting

- One feature for frame duration, predefined within the library, which returns the number of frames that make up one second

- 36 object-related features derived using the Single Shot MultiBox Detector: the total number of detected objects, the average detection confidence, and the mean ratio of object area to frame size, computed across twelve object categories (“person”, “vehicle”, “nature”, “animal”, “tool/equipment”, “sport”, “kitchen”, “food”, “furniture”, “electronics”, “devices/appliances”, and “enclosed space”).
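As an illustration of how two of these descriptors might be computed, the NumPy sketch below derives per-channel colour histograms and the average absolute grayscale difference between consecutive frames. It does not reproduce the feature-extraction library used in the study: the function names, the normalisation of histograms to unit sum, and the BT.601 luma weights used for the grayscale conversion are assumptions.

```python
import numpy as np

# ITU-R BT.601 luma weights (an assumption for the grayscale conversion).
LUMA = np.array([0.299, 0.587, 0.114])


def colour_histograms(frame_rgb: np.ndarray, bins: int = 8) -> np.ndarray:
    """Normalised per-channel histograms (eight bins by default) of a uint8 RGB frame."""
    feats = []
    for c in range(3):
        hist, _ = np.histogram(frame_rgb[..., c], bins=bins, range=(0, 256))
        feats.append(hist / hist.sum())
    return np.concatenate(feats)  # length 3 * bins


def to_gray(frame_rgb: np.ndarray) -> np.ndarray:
    """Convert an (H, W, 3) RGB frame to a float grayscale image."""
    return frame_rgb.astype(np.float64) @ LUMA


def mean_abs_frame_diff(prev_rgb: np.ndarray, curr_rgb: np.ndarray) -> float:
    """Average absolute grayscale difference between two consecutive frames."""
    return float(np.mean(np.abs(to_gray(curr_rgb) - to_gray(prev_rgb))))


black = np.zeros((4, 4, 3), dtype=np.uint8)
white = np.full((4, 4, 3), 255, dtype=np.uint8)
print(colour_histograms(white).shape)  # (24,)
print(round(mean_abs_frame_diff(black, white), 6))  # 255.0
```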

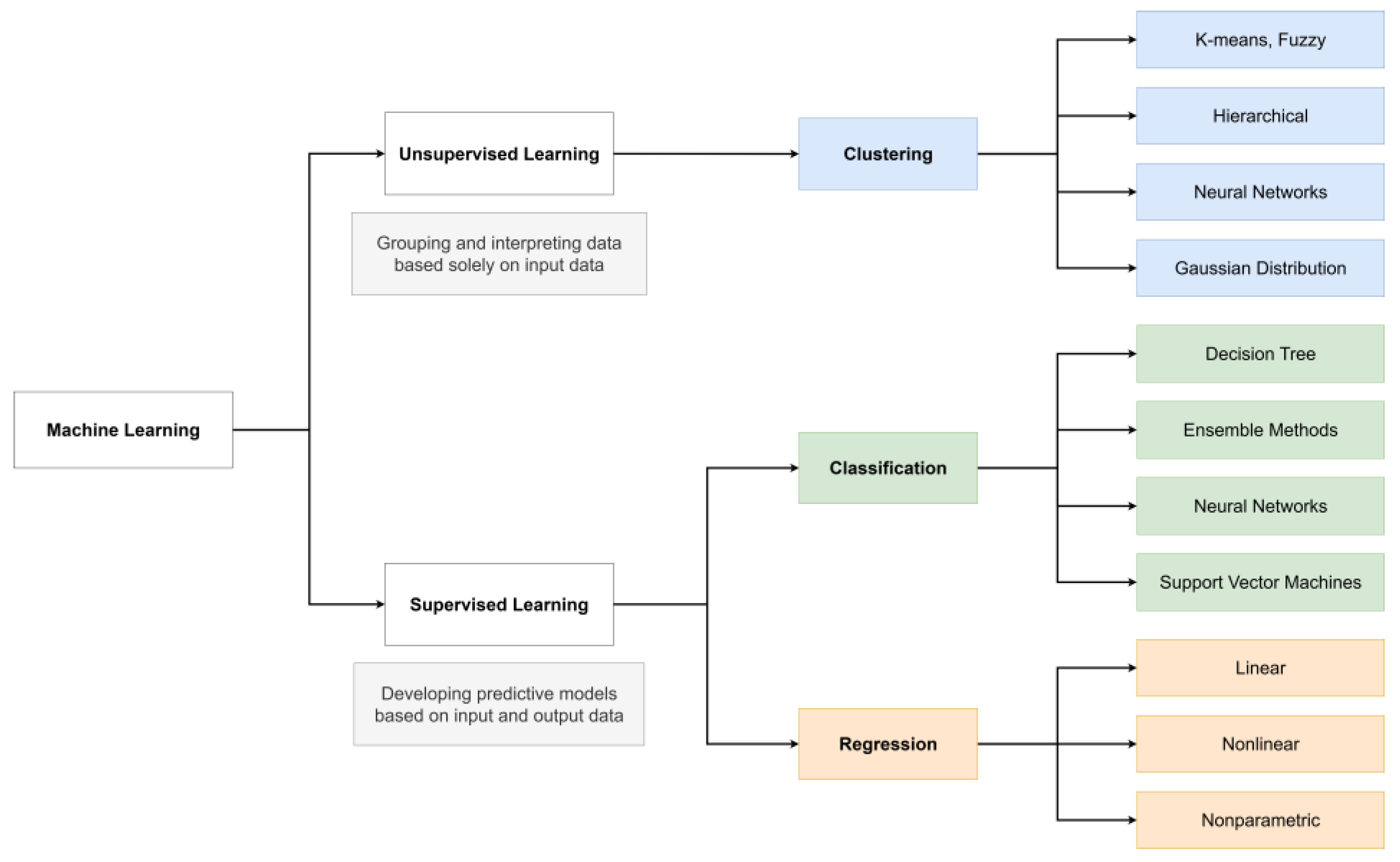

3.3. Machine Learning Models

- Naive Bayes Classifier

- K-Nearest Neighbours (KNN) algorithm

- Logistic Regression

- Decision Tree Algorithm

- Random Forest Algorithm

- XGBoost (eXtreme Gradient Boosting) Classifier.

4. Experimental Setup and Evaluation

4.1. Feature and Classifier Selection

- Audio features: 136-dimensional sound feature vector

- Video features: 88-dimensional video feature vector

- Audio-visual features: combined representation comprising 224 dimensions (based on early fusion methodology)
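Early fusion, as used for the combined representation, amounts to concatenating the audio and video vectors of each segment. The sketch below assumes both modalities are already stored as per-segment NumPy matrices aligned row by row; `early_fusion` is a hypothetical helper, and any per-feature scaling applied in the study is not shown.

```python
import numpy as np

AUDIO_DIM, VIDEO_DIM = 136, 88  # per-segment dimensions stated in the text


def early_fusion(audio_feats: np.ndarray, video_feats: np.ndarray) -> np.ndarray:
    """Concatenate per-segment audio and video vectors into one feature matrix.

    Both inputs are (n_segments, dim) arrays whose rows describe the same
    one-second segments in the same order.
    """
    if audio_feats.shape[0] != video_feats.shape[0]:
        raise ValueError("audio and video matrices must have the same number of segments")
    if audio_feats.shape[1] != AUDIO_DIM or video_feats.shape[1] != VIDEO_DIM:
        raise ValueError("unexpected feature dimensionality")
    return np.hstack([audio_feats, video_feats])


# Example: four segments of random features give a (4, 224) fused matrix.
rng = np.random.default_rng(42)
audio_demo = rng.normal(size=(4, AUDIO_DIM))
video_demo = rng.normal(size=(4, VIDEO_DIM))
fused = early_fusion(audio_demo, video_demo)
print(fused.shape)  # (4, 224)
```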

- Determination of audio, video, and combined features for each segment of the video

- Classification of each segment using one of the specified classifiers

- Post-processing of the sequence of classifier predictions (median filtering) to remove isolated errors and abrupt disruptions.
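The median-filtering post-processing can be sketched in a few lines of NumPy. This is an illustrative implementation, not the study's code: `median_smooth` is a hypothetical name, and handling the sequence edges by repeating the boundary labels is an assumption.

```python
import numpy as np


def median_smooth(labels, kernel_size: int = 3) -> np.ndarray:
    """Median-filter a 0/1 prediction sequence with an odd-length window.

    The sequence is padded by repeating its first and last labels, so the
    output has the same length as the input.
    """
    if kernel_size < 1 or kernel_size % 2 == 0:
        raise ValueError("kernel_size must be a positive odd integer")
    labels = np.asarray(labels)
    half = kernel_size // 2
    padded = np.pad(labels, half, mode="edge")
    smoothed = [np.median(padded[i:i + kernel_size]) for i in range(len(labels))]
    return np.asarray(smoothed).astype(labels.dtype)


preds = [0, 1, 0, 0, 1, 1, 0, 1, 1, 1]
print(median_smooth(preds, 3).tolist())  # [0, 0, 0, 0, 1, 1, 1, 1, 1, 1]
```

With a window of three, the isolated positive at index 1 is removed and the isolated negative at index 6 is filled in, so the informative run becomes contiguous. For binary labels and an odd window, the median is simply a majority vote over the window.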

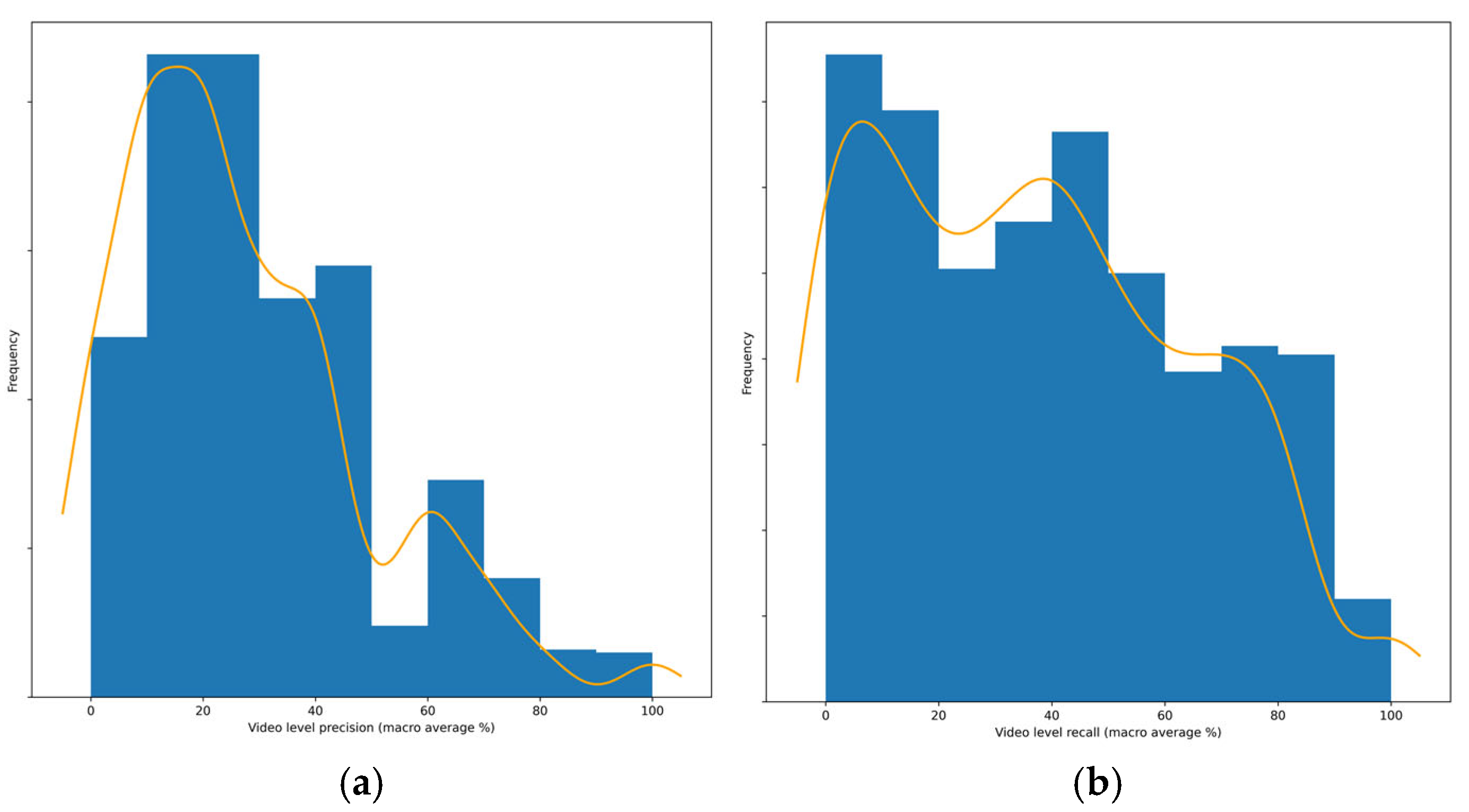

4.2. Evaluation Metrics

- Precision for the positive class: measures the proportion of segments classified as “informative” that correspond to actual informative segments according to ground truth labels

- Recall for the positive class: quantifies the proportion of informative segments identified as such by the classifier relative to all truly informative segments in the dataset

- F1 score: represents the macro average of individual F1 scores for each class, computed as the harmonic mean of precision and recall values per class. This metric serves as a normalized measure of overall classification performance

- Overall precision (i.e., accuracy): the percentage of all segments, both negative and positive, that the system classifies correctly

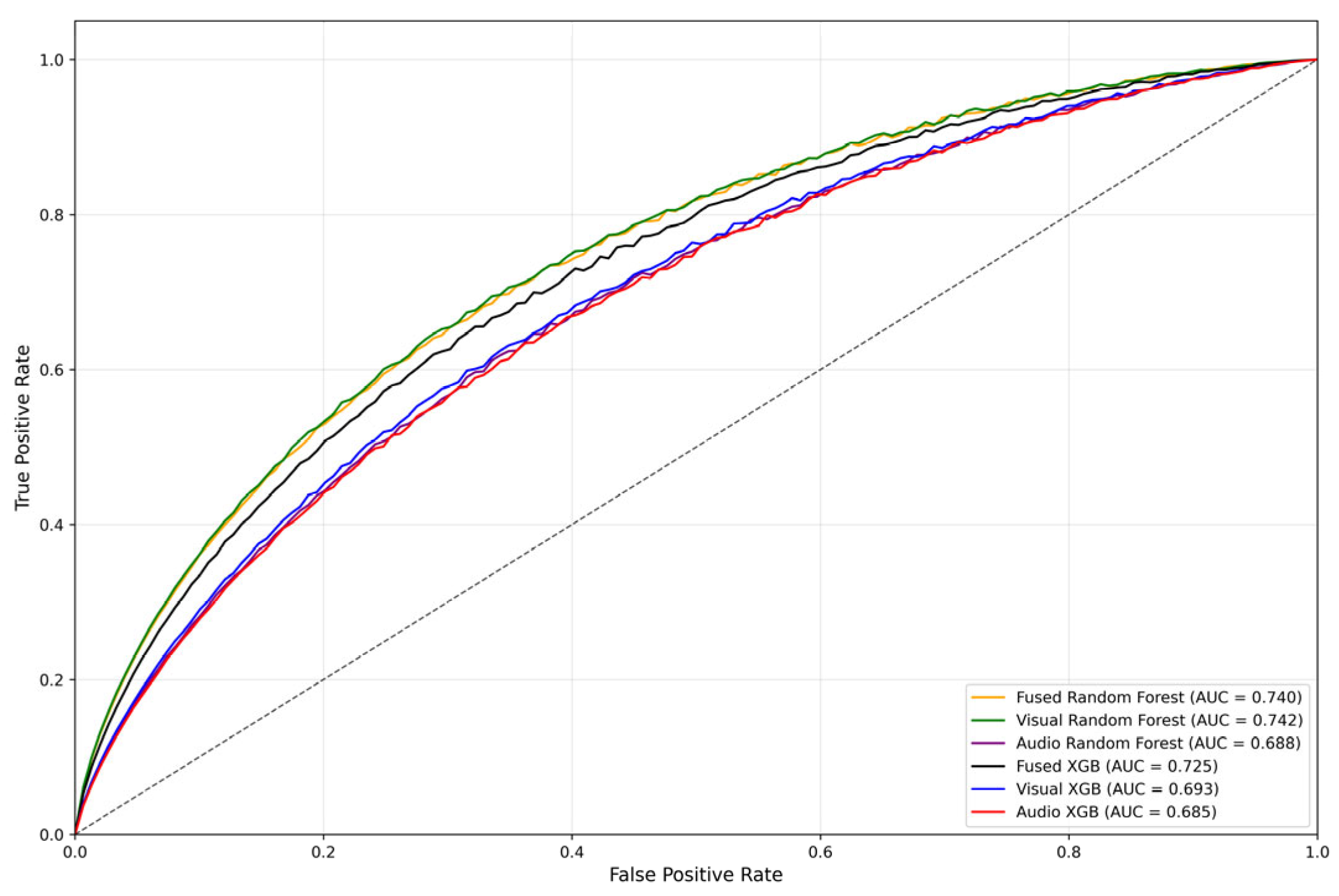

- Area under the receiver operating characteristic curve (AUC): functions as a general indicator of classifier performance, illustrating its ability to distinguish between classes across varying decision thresholds for the positive class output
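The metrics above can be computed from binary labels and classifier scores with the NumPy sketch below; in practice a library such as scikit-learn would typically be used instead. The helper names are hypothetical, “overall precision” is computed as accuracy, and AUC is computed through its pairwise-ranking (Mann-Whitney) interpretation, which needs memory quadratic in the number of segments and is therefore suited only to small illustrations.

```python
import numpy as np


def binary_metrics(y_true, y_pred) -> dict:
    """Positive-class precision/recall, macro F1 and accuracy for 0/1 labels."""
    y_true = np.asarray(y_true)
    y_pred = np.asarray(y_pred)

    def prf(cls):
        tp = int(np.sum((y_pred == cls) & (y_true == cls)))
        fp = int(np.sum((y_pred == cls) & (y_true != cls)))
        fn = int(np.sum((y_pred != cls) & (y_true == cls)))
        p = tp / (tp + fp) if tp + fp else 0.0
        r = tp / (tp + fn) if tp + fn else 0.0
        f1 = 2 * p * r / (p + r) if p + r else 0.0
        return p, r, f1

    p_pos, r_pos, f1_pos = prf(1)
    _, _, f1_neg = prf(0)
    return {
        "precision_pos": p_pos,
        "recall_pos": r_pos,
        "f1_macro": (f1_pos + f1_neg) / 2,  # unweighted mean over both classes
        "accuracy": float(np.mean(y_true == y_pred)),  # "overall precision" in the text
    }


def roc_auc(y_true, scores) -> float:
    """AUC as the probability that a random positive outranks a random negative."""
    y_true = np.asarray(y_true)
    scores = np.asarray(scores, dtype=float)
    pos, neg = scores[y_true == 1], scores[y_true == 0]
    if len(pos) == 0 or len(neg) == 0:
        raise ValueError("AUC needs at least one positive and one negative example")
    # Pairwise comparison; ties count as half a win.
    wins = (pos[:, None] > neg[None, :]).sum() + 0.5 * (pos[:, None] == neg[None, :]).sum()
    return float(wins / (len(pos) * len(neg)))


print(binary_metrics([1, 0, 1, 1, 0, 0], [1, 0, 0, 1, 1, 0]))
print(roc_auc([0, 0, 1, 1], [0.1, 0.4, 0.35, 0.8]))  # 0.75
```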

4.3. Integration into an End-to-End Summarisation Pipeline

- Segment granularity: the use of fixed one-second intervals does not always coincide with semantic event boundaries, occasionally resulting in over-segmentation or the omission of short but meaningful actions.

- Computational overhead: while multimodal feature extraction substantially enhances classification accuracy, it also increases processing demands, which may constrain scalability in real-time or resource-limited environments.

- Sensitivity to variability: rapid scene transitions, background noise, or abrupt changes in visual dynamics can still introduce inconsistencies, making additional refinement or adaptive mechanisms necessary.

5. System Implementation

- Training and Testing Scripts/Jupyter Notebooks implemented in Python 3.7.9: These scripts are designed to train and evaluate models, ultimately saving the most recent model state after successful training for subsequent reuse or integration into server-side operations

- Server-Side Components (Backend): Implemented using Flask, this backend handles file uploads through a POST endpoint. After storing the video, it segments the input into fixed intervals, extracts frames, and computes the full set of multimodal features. These features are passed to a pre-trained Random Forest classifier for segment classification, followed by median filtering to refine predictions. Representative keyframes are then selected from informative segments and forwarded to the captioning model, which generates textual descriptions using greedy or beam search decoding. Both the classifier and captioning model are loaded from pre-trained checkpoints to ensure efficiency and consistency across deployments, and

- Client-Side Interface (Frontend): Built with ReactJS, this frontend consists of a single page where users can upload videos to the server and view the generated subtitles

5.1. Script for Model Training and Evaluation

- Library Integration: The script begins by importing essential libraries, including TensorFlow, matplotlib, scikit-learn, and other relevant dependencies required for data manipulation, visualization, and model development

- Data Acquisition and Preparation: The COCO dataset, which contains images and corresponding annotations/descriptions, is downloaded and preprocessed. The ‘tf.keras.utils.get_file’ function is utilized to retrieve the dataset files, which are subsequently stored in the “train2014” directory. Annotations are organized separately within the “annotations” folder

- Data Loading and Preprocessing: The script reads image annotations and file paths from the downloaded datasets. Start and end tokens are added to each description, and the data is shuffled randomly. A maximum number of examples (“num_examples”) is set, and a subset of the data is selected for training

- Image Processing: A function named “load_image” is defined to read and process images using TensorFlow utilities. The InceptionV3 model is employed to extract image features, which are then saved as NumPy arrays for subsequent use in the model pipeline

- Description Tokenization: The ‘Tokenizer’ class from the ‘tf.keras.preprocessing.text’ library is employed to tokenize the textual descriptions and generate a vocabulary dictionary. The size of the vocabulary is restricted to the top $k$ most frequent words, while sequences are padded to ensure uniform length using the ‘pad_sequences’ function

- Dataset Splitting: The dataset is partitioned into training and test subsets using the ‘train_test_split’ function from the scikit-learn library. This step facilitates model evaluation by isolating a distinct portion of data for validation purposes

- TensorFlow Dataset Creation: A TensorFlow dataset is constructed from the training data, with the ‘map’ function applied to each image-path and annotation pair to execute the ‘load_image’ function. The resulting dataset undergoes shuffling, batching, and prefetching to optimize computational efficiency during model training

- Model Definition: Two architectures are defined: ‘CNN_Encoder’ and ‘RNN_Decoder’. The ‘CNN_Encoder’ employs a dense layer for encoding image features extracted from the InceptionV3 model, whereas the ‘RNN_Decoder’ incorporates an embedding layer and a gated recurrent unit (GRU) to generate descriptive text. Additionally, a custom class named ‘BahdanauAttention’ is implemented to handle attention mechanisms within the decoding process

- Loss Function and Optimization Definition: The sparse categorical cross-entropy loss function is selected for model training, complemented by the Adam optimizer to adjust parameters during the learning process

- Model Training: A training step function is defined using the ‘@tf.function’ decorator. This function iterates over data batches, performs forward and backward passes through the models, computes the loss, and updates weights accordingly. The training loop executes for a predefined number of epochs, with periodic checkpoints to save the model’s state at critical intervals. The evolution of the average training loss over time is illustrated in Figure 6.

- Evaluation: Two evaluation functions are implemented: ‘evaluate2’ generates descriptions using greedy search, while ‘evaluate’ employs beam search to produce multiple candidate descriptions and selects the most probable one. These functions leverage trained models to generate textual descriptions for input images

- Attention Visualization: The attention weights of the model are visualized for a given image and description through the ‘plot_attention’ function. This aids in interpreting how the model focuses on specific regions of the input during prediction, with a visual example provided in Figure 7.

- Testing and Final Evaluation: A training loop is executed to print loss values for each epoch, followed by a demonstration of description generation using sample images. This step validates the model’s performance and confirms its ability to produce coherent outputs consistent with the input data
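The difference between the greedy (‘evaluate2’) and beam search (‘evaluate’) decoding strategies can be illustrated independently of TensorFlow. In this sketch, a hypothetical lookup table `toy_step` stands in for the trained RNN decoder by returning next-token probabilities for a partial sequence; the function names, the `<s>`/`<e>` tokens, and the absence of length normalisation are assumptions, not details of the study's implementation.

```python
import math
from typing import Callable, Dict, List, Tuple

# A step function maps a partial token sequence to {next_token: probability}.
StepFn = Callable[[List[str]], Dict[str, float]]


def toy_step(seq: List[str]) -> Dict[str, float]:
    """Hypothetical stand-in for a trained decoder's next-token distribution."""
    table = {
        ("<s>",): {"a": 0.6, "b": 0.4},
        ("<s>", "a"): {"c": 0.5, "d": 0.5},
        ("<s>", "b"): {"e": 0.9, "f": 0.1},
    }
    return table.get(tuple(seq), {"<e>": 1.0})


def greedy_decode(step: StepFn, start: str, end: str, max_len: int) -> List[str]:
    """Pick the single most probable next token at every step."""
    seq = [start]
    for _ in range(max_len):
        probs = step(seq)
        token = max(probs, key=probs.get)
        seq.append(token)
        if token == end:
            break
    return seq


def beam_search_decode(step: StepFn, start: str, end: str,
                       max_len: int, beam_width: int) -> List[str]:
    """Keep the `beam_width` partial sequences with the highest log-probability."""
    beams: List[Tuple[float, List[str]]] = [(0.0, [start])]
    for _ in range(max_len):
        candidates = []
        for score, seq in beams:
            if seq[-1] == end:  # finished hypotheses are carried over unchanged
                candidates.append((score, seq))
                continue
            for token, p in step(seq).items():
                candidates.append((score + math.log(p), seq + [token]))
        beams = sorted(candidates, key=lambda c: c[0], reverse=True)[:beam_width]
        if all(seq[-1] == end for _, seq in beams):
            break
    return max(beams, key=lambda c: c[0])[1]


print(greedy_decode(toy_step, "<s>", "<e>", 5))          # ['<s>', 'a', 'c', '<e>']
print(beam_search_decode(toy_step, "<s>", "<e>", 5, 2))  # ['<s>', 'b', 'e', '<e>']
```

On this toy table, greedy decoding commits to “a” (probability 0.6) and ends with a total sequence probability of 0.3, whereas a beam of width 2 keeps “b” alive and finds “b e” with probability 0.36. With a width of 1, beam search reduces to greedy decoding, which is why larger widths trade extra computation for a broader search.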

5.2. Server-Side Application

- “beam_search_X” (where X denotes the beam index, i.e., the number of results or word indices retained as predictions) or “greedy”: an object whose keys are static resource URLs for accessing frames and whose values are the captions describing those frames; and

- “beam_search_caption” or “greedy_caption”: a string that consolidates all values of the respective “beam_search_X” or “greedy” object into a single textual summary.

- “file”: the video requiring processing; and

- “beam_index”: the number of results and word indices selected for prediction.
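The response structure described above can be assembled as a plain dictionary before being serialised to JSON. This is a hypothetical helper, not the study's Flask code: the example frame URLs are invented, and joining the per-frame captions with a single space to form the consolidated caption is an assumption.

```python
from typing import Dict, Optional


def build_response(frame_captions: Dict[str, str],
                   beam_index: Optional[int] = None) -> dict:
    """Assemble a /process-style response payload (hypothetical helper).

    frame_captions maps a static URL for each keyframe to its caption.
    With beam_index set, keys follow the "beam_search_X" pattern; otherwise
    the greedy keys are used.
    """
    if beam_index is None:
        frames_key, caption_key = "greedy", "greedy_caption"
    else:
        frames_key, caption_key = f"beam_search_{beam_index}", "beam_search_caption"
    return {
        frames_key: dict(frame_captions),
        # Consolidate all per-frame captions, in insertion order, into one summary.
        caption_key: " ".join(frame_captions.values()),
    }


captions = {"/static/frame_001.jpg": "a man walks", "/static/frame_002.jpg": "a dog runs"}
print(build_response(captions, beam_index=3))
```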

5.3. Client-Side Application

- A function named “handleBeamChange” that updates the index value associated with the beam search parameters; and

- An event handler “onChangeFile” responsible for retrieving the selected file and corresponding index, constructing a FormData object, initiating a POST request to the “/process” endpoint, and dynamically updating component content based on the server’s response containing the final description and frame-specific subtitles.

6. Results

6.1. Classification Performance Evaluation and Feature Importance Analysis

6.2. System Analysis During Load Testing

- Step-wise Latency: Execution time was measured independently for each processing stage: saving the uploaded video (save), extracting multimodal features for segment classification (extract_features), performing segment classification (classify), clustering similar frames from the informative segments (cluster), selecting representative keyframes (drop), and generating captions for these keyframes (caption). This breakdown enables identification of dominant computational bottlenecks across the entire two-stage pipeline.

- Total Request Time: The cumulative time from receiving a request to completing its classification and captioning response was recorded for each video. This end-to-end latency metric serves as the primary indicator of user-perceived responsiveness and system throughput under production-like conditions.

- GPU Utilization: The percentage of GPU usage was logged for each request throughout the process. This metric captures the intensity of model inference and feature extraction workloads offloaded to the GPU, and provides insight into accelerator saturation and resource efficiency.

- CPU Utilization: The corresponding CPU load was measured concurrently, reflecting the computational effort expended on orchestration, preprocessing, clustering, and token-level decoding steps that remain CPU-bound in the current implementation.

- Memory Utilization: The percentage of system memory usage was tracked per request to assess runtime memory consumption, particularly during the handling of high-resolution video frames and intermediate feature representations.

- Requests Per Second (RPS): For each individual request, system throughput was calculated as the reciprocal of the total request time. This metric characterizes the processing rate of the deployed service and is critical for estimating real-time scalability under concurrent access.

- Caption Generation Time: The duration of the captioning phase was measured separately for different decoding configurations, including greedy decoding and beam search with varying widths. This enables quantitative comparison of decoding strategies in terms of speed-performance trade-offs.

- Caption Length: The number of words in the generated caption was recorded for each request and decoding strategy. This metric serves as an indirect proxy for linguistic diversity and descriptiveness, and facilitates analysis of how decoding strategies influence output verbosity.

- Beam Width Trade-off: For each beam width setting, the average caption generation time and average caption length were aggregated. This joint metric illustrates the trade-off between computational cost and potential gains in caption quality or expressiveness when increasing decoding depth.
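Step-wise latency, total request time, per-request throughput (computed as the reciprocal of the total request time), and the beam width trade-off can be collected with standard-library tools, as in the sketch below. The helper names and the record layout are hypothetical; GPU, CPU, and memory utilisation are omitted because they depend on platform-specific monitoring tools.

```python
import time
from collections import defaultdict
from statistics import mean


def timed_stages(stages):
    """Run (name, callable) pairs in order and record each stage's latency.

    Returns (latencies_in_seconds, total_seconds, requests_per_second).
    """
    latencies = {}
    start_total = time.perf_counter()
    for name, fn in stages:
        t0 = time.perf_counter()
        fn()
        latencies[name] = time.perf_counter() - t0
    total = time.perf_counter() - start_total
    rps = 1.0 / total if total > 0 else float("inf")  # reciprocal of request time
    return latencies, total, rps


def beam_width_tradeoff(records):
    """Average caption time (s) and caption length (words) per beam width.

    records: iterable of (beam_width, caption_time_s, caption_text).
    """
    grouped = defaultdict(list)
    for width, seconds, caption in records:
        grouped[width].append((seconds, len(caption.split())))
    return {
        w: (mean(s for s, _ in vals), mean(n for _, n in vals))
        for w, vals in sorted(grouped.items())
    }


stage_names = ["save", "extract_features", "classify", "cluster", "drop", "caption"]
lat, total, rps = timed_stages([(name, lambda: time.sleep(0.001)) for name in stage_names])
print({k: round(v, 4) for k, v in lat.items()}, round(total, 4), round(rps, 1))
```

Aggregating per beam width, rather than per request, is what allows the cost of a wider search to be read directly against any change in caption verbosity.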

7. Concluding Remarks and Future Work

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

1. The Social Shepherd. 23 Essential YouTube Statistics You Need to Know in 2023. Available online: https://thesocialshepherd.com/blog/youtube-statistics (accessed on 23 June 2023).

2. Furini, M.; Ghini, V. An audio-video summarization scheme based on audio and video analysis. In Proceedings of the 3rd IEEE Consumer Communications and Networking Conference, Las Vegas, NV, USA, 8–10 January 2006.

3. Money, A.G.; Agius, H. Video summarisation: A conceptual framework and survey of the state of the art. J. Vis. Commun. Image Represent. 2008, 19, 121–143.

4. Xiong, Z.; Radhakrishnan, R.; Divakaran, A.; Rui, Y.; Huang, T.S. A Unified Framework for Video Summarization, Browsing, and Retrieval. In A Unified Framework for Video Summarization, Browsing and Retrieval; Academic Press: Cambridge, MA, USA, 2006; pp. 221–235.

5. Lai, P.K.; Decombas, M.; Moutet, K.; Laganiere, R. Video summarization of surveillance cameras. In Proceedings of the 2016 13th IEEE International Conference on Advanced Video and Signal Based Surveillance (AVSS), Colorado Springs, CO, USA, 23–26 August 2016.

6. Priya, G.G.L.; Domnic, S. Medical video summarization using central tendency-based shot boundary detection. Int. J. Comput. Vis. Image Process. 2013, 3, 55–65.

7. Trinh, H.; Li, J.; Miyazawa, S.; Moreno, J.; Pankanti, S. Efficient UAV video event summarization. In Proceedings of the 21st International Conference on Pattern Recognition (ICPR 2012), IEEE, Tsukuba, Japan, 11–15 November 2012.

8. Spyrou, E.; Tolias, G.; Mylonas, P.; Avrithis, Y. Concept detection and keyframe extraction using a visual thesaurus. Multimed. Tools Appl. 2009, 41, 337–373.

9. Li, Y.; Merialdo, B.; Rouvier, M.; Linares, G. Static and dynamic video summaries. In Proceedings of the 19th ACM International Conference on Multimedia (MM ’11), ACM Press, Scottsdale, AZ, USA, 28 November–1 December 2011.

10. Lienhart, R.; Pfeiffer, S.; Effelsberg, W. The MoCA workbench: Support for creativity in movie content analysis. In Proceedings of the 3rd IEEE International Conference on Multimedia Computing and Systems, IEEE Computer Society Press, Hiroshima, Japan, 17–23 June 1996.

11. Chen, B.-C.; Chen, Y.-Y.; Chen, F. Video to text summary: Joint video summarization and captioning with recurrent neural networks. In Proceedings of the British Machine Vision Conference 2017, British Machine Vision Association, London, UK, 4–7 September 2017.

12. Zhou, K.; Qiao, Y.; Xiang, T. Deep reinforcement learning for unsupervised video summarization with diversity-representativeness reward. In Proceedings of the AAAI Conference on Artificial Intelligence, New Orleans, LA, USA, 2–7 February 2018.

13. Zhang, K.; Chao, W.-L.; Sha, F.; Grauman, K. Video summarization with long short-term memory. In Computer Vision–ECCV 2016, Proceedings of the 14th European Conference, Amsterdam, The Netherlands, 11–14 October 2016; Springer International Publishing: Cham, Switzerland, 2016.

14. Evangelopoulos, G.; Zlatintsi, A.; Potamianos, A.; Maragos, P.; Rapantzikos, K.; Skoumas, G.; Avrithis, Y. Multimodal saliency and fusion for movie summarization based on aural, visual, and textual attention. IEEE Trans. Multimed. 2013, 15, 1553–1568.

15. Wei, H.; Ni, B.; Yan, Y.; Yu, H.; Yang, X.; Yao, C. Video summarization via semantic attended networks. In Proceedings of the 32nd AAAI Conference on Artificial Intelligence, New Orleans, LA, USA, 2–7 February 2018.

16. Pantazis, G.; Dimas, G.; Iakovidis, D.K. Salsum: Saliency-based video summarization using generative adversarial networks. arXiv 2020, arXiv:2011.10432.

17. Potapov, D.; Douze, M.; Harchaoui, Z.; Schmid, C. Category-Specific Video Summarization. In Computer Vision–ECCV 2014; Springer: Cham, Switzerland, 2014; Volume 8694, pp. 540–555.

18. Song, Y.; Vallmitjana, J.; Stent, A.; Jaimes, A. TVSum: Summarizing web videos using titles. In Proceedings of the 2015 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), IEEE, Boston, MA, USA, 7–12 June 2015.

19. Gygli, M.; Grabner, H.; Riemenschneider, H.; Van Gool, L. Creating Summaries from User Videos. In Computer Vision–ECCV 2014; Springer: Cham, Switzerland, 2014; Volume 8695, pp. 505–520.

20. Lee, Y.J.; Ghosh, J.; Grauman, K. Discovering important people and objects for egocentric video summarization. In Proceedings of the 2012 IEEE Conference on Computer Vision and Pattern Recognition, IEEE, Providence, RI, USA, 16–21 June 2012.

21. de Avila, S.E.F.; Lopes, A.P.B.; da Luz, A., Jr.; de Albuquerque Araujo, A. VSUMM: A mechanism designed to produce static video summaries and a novel evaluation method. Pattern Recogn. Lett. 2011, 32, 56–68.

22. Wang, S.; Zhang, J. MF2Summ: Multimodal Fusion for Video Summarization with Temporal Alignment. arXiv 2025, arXiv:2506.10430.

23. He, B.; Wang, J.; Qiu, J.; Bui, T.; Shrivastava, A.; Wang, Z. Align and Attend: Multimodal Summarization with Dual Contrastive Losses. arXiv 2023, arXiv:2303.07284.

24. Xu, Z.; Meng, X.; Wang, Y.; Su, Q.; Qiu, Z.; Jiang, X.; Liu, Q. Learning Summary-Worthy Visual Representation for Abstractive Summarization in Video. arXiv 2023, arXiv:2305.04824.

25. Xie, J.; Chen, X.; Zhao, S.; Lu, S.-P. Video summarization via knowledge-aware multimodal deep networks. Knowl. Based Syst. 2024, 293, 111670.

26. Kashid, S.; Awasthi, L.K.; Berwal, K.; Saini, P. Spatiotemporal Feature Fusion for Video Summarization. IEEE MultiMedia 2024, 31, 88–97.

27. Yang, Z.; He, J.; Toda, T. Multi-Modal Video Summarization Based on Two-Stage Fusion of Audio, Visual, and Recognized Text Information. In Proceedings of the 2024 Asia Pacific Signal and Information Processing Association Annual Summit and Conference (APSIPA ASC), IEEE, Macau, China, 3–6 December 2024; pp. 1–6.

28. Yu, L.; Zhao, X.; Xie, L.; Liang, H.; Liang, R. Hierarchical Multi-Modal Video Summarization with Dynamic Sampling. IET Image Process. 2024, 18, 4577–4588.

29. Alaa, T.; Mongy, A.; Bakr, A.; Diab, M.; Gomaa, W. Video Summarization Techniques: A Comprehensive Review. arXiv 2024, arXiv:2410.04449.

30. Chen, B.; Zhao, X.; Zhu, Y. Personalized Video Summarization by Multimodal Video Understanding. In Proceedings of the 33rd ACM International Conference on Information and Knowledge Management (CIKM ’24), ACM, Boise, ID, USA, 21–25 October 2024; pp. 4382–4389.

31. Lee, M.J.; Gong, D.; Cho, M. Video Summarization with Large Language Models. arXiv 2025, arXiv:2504.11199.

32. Pang, Z.; Otani, M.; Nakashima, Y. Measure Twice, Cut Once: Grasping Video Structures and Event Semantics with LLMs for Video Temporal Localization. arXiv 2025, arXiv:2503.09027.

33. Guo, Y.; Xing, J.; Hou, X.; Xin, S.; Jiang, J.; Terzopoulos, D.; Jiang, C.; Liu, Y. CFSum: A Transformer-Based Multi-Modal Video Summarization Framework with Coarse-Fine Fusion. arXiv 2025, arXiv:2503.00364.

34. Psallidas, T.; Spyrou, E. Video Summarization Based on Feature Fusion and Data Augmentation. Computers 2023, 12, 186.

35. Kaggle. YouTube-8M Video Understanding Challenge 2019. Available online: https://www.kaggle.com/c/youtube8m-2019 (accessed on 27 July 2025).

36. gsssrao. youtube-8m-videos-frames: YouTube-8M Videos, Frames, and IDs Generator. Available online: https://github.com/gsssrao/youtube-8m-videos-frames (accessed on 27 July 2025).

37. Shalev-Shwartz, S.; Ben-David, S. Understanding Machine Learning: From Theory to Algorithms; Cambridge University Press: Cambridge, UK, 2014; pp. 73–86.

38. Obradović, S.; Milošević, B.; Štrbac, P.S. MATLAB i mašinsko učenje. In Proceedings of the XIII Međunarodni Naučno-Stručni Simpozijum INFOTEH-JAHORINA, Istočno Sarajevo, Bosnia and Herzegovina, 19–21 March 2014.

39. Pedregosa, F.; Varoquaux, G.; Gramfort, A.; Michel, V.; Thirion, B.; Grisel, O.; Blondel, M.; Prettenhofer, P.; Weiss, R.; Dubourg, V.; et al. Scikit-learn: Machine learning in Python. J. Mach. Learn. Res. 2011, 12, 2825–2830.

40. Lemaître, G.; Nogueira, F.; Aridas, C.K. Imbalanced-learn: A python toolbox to tackle the curse of imbalanced datasets in machine learning. J. Mach. Learn. Res. 2017, 18, 559–563.

41. Chen, T.; Guestrin, C. XGBoost: A scalable tree boosting system. In Proceedings of the 22nd ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, San Francisco, CA, USA, 13–17 August 2016; pp. 785–794.

| No. | Feature Name | Description |
|---|---|---|
| 1 | Zero-crossing rate | Rate of sign changes in the signal values during the frame |
| 2 | Signal energy | Sum of squared signal values, normalized by the frame length |
| 3 | Energy entropy | Entropy of the normalized energies of the subframes; can be interpreted as a measure of abrupt changes |
| 4 | Spectral centroid | Centre of mass of the frequency distribution |
| 5 | Spectral spread | Second-order central moment of the spectrum |
| 6 | Spectral entropy | Entropy of the normalized spectral energies for a given set of subframes |
| 7 | Spectral flux | Squared difference between the normalized magnitude spectra of two consecutive frames |
| 8 | Spectral roll-off | Frequency below which a specified percentage (typically 90%) of the magnitude spectrum is concentrated |
| 9–21 | Mel-frequency cepstral coefficients (MFCCs) | Cepstral representation of sound using the Mel frequency scale |
| 22–33 | Chromagram (Pitch Class Profile, PCP) | Twelve-element representation of spectral energy, with one bin per equal-tempered pitch class |
| 34 | PCP deviation | Standard deviation of the twelve chromagram coefficients |
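Several of the short-term audio descriptors in the table follow directly from their definitions. The NumPy sketch below computes the zero-crossing rate, signal energy, energy entropy, and spectral centroid (in Hz) for a single frame; it is not the audio library used in the study, and the function names, the default of ten subframes, and the small constant guarding against division by zero are assumptions. One plausible reading, not stated explicitly in the text, is that the 136-dimensional audio vector combines the mean and standard deviation of these 34 features and of their deltas (34 × 2 × 2 = 136), which would be consistent with feature names such as `delta mfcc_5_std`.

```python
import numpy as np

EPS = 1e-12  # guards against division by zero and log(0)


def zero_crossing_rate(frame: np.ndarray) -> float:
    """Fraction of consecutive sample pairs whose sign differs."""
    neg = np.signbit(frame)
    return float(np.mean(neg[1:] != neg[:-1]))


def short_term_energy(frame: np.ndarray) -> float:
    """Sum of squared samples, normalised by the frame length."""
    return float(np.sum(frame.astype(np.float64) ** 2) / len(frame))


def energy_entropy(frame: np.ndarray, n_sub: int = 10) -> float:
    """Entropy (bits) of the normalised energies of equal-length subframes."""
    sub_len = len(frame) // n_sub
    frame = frame[: sub_len * n_sub].astype(np.float64)
    sub_energies = np.sum(frame.reshape(n_sub, sub_len) ** 2, axis=1)
    p = sub_energies / (np.sum(sub_energies) + EPS)
    return float(-np.sum(p * np.log2(p + EPS)))


def spectral_centroid(frame: np.ndarray, fs: float) -> float:
    """Magnitude-weighted mean frequency of the frame's spectrum, in Hz."""
    mag = np.abs(np.fft.rfft(frame))
    freqs = np.fft.rfftfreq(len(frame), d=1.0 / fs)
    return float(np.sum(freqs * mag) / (np.sum(mag) + EPS))


fs = 16000
t = np.arange(1600) / fs
tone = np.sin(2 * np.pi * 1000 * t)  # 1 kHz tone, exactly 100 periods
print(round(spectral_centroid(tone, fs), 1))  # 1000.0
```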

| Subset | Video Count | Segment Count | Average Video Duration (mm:ss) |
|---|---|---|---|
| Training Set | 360 | 164,232 | 07:35 |
| Test Set | 90 | 51,768 | 09:37 |

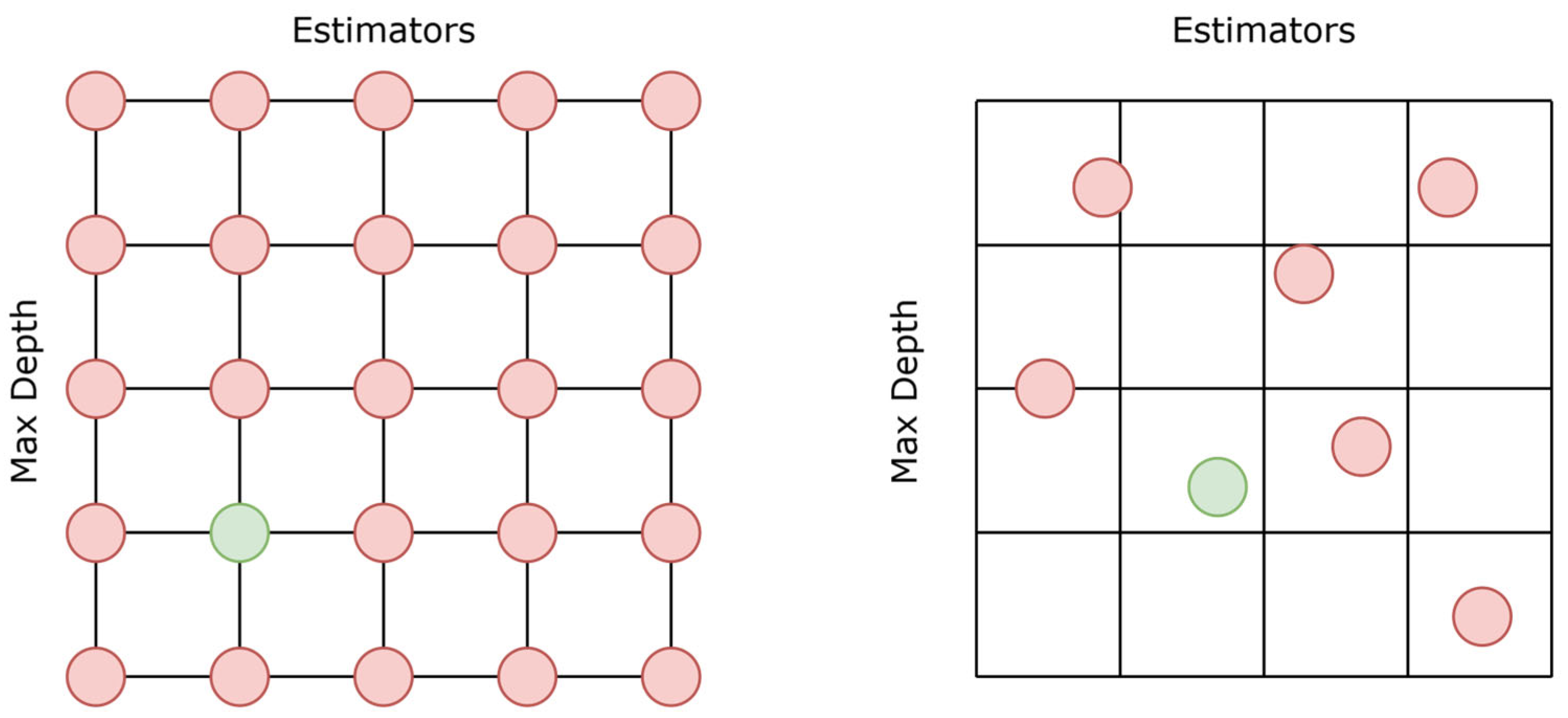

| Classifier | Hyperparameter | Best Value |
|---|---|---|
| Logistic Regression | C | 0.1 |
| KNN | k | 5 |
| Decision Tree | criterion | entropy |
| | max_depth | 6 |
| Random Forest | criterion | gini |

| Classifier | AUC (Audio) | AUC (Video) | AUC (Combined) | F1 Macro Average (Audio) | F1 Macro Average (Video) | F1 Macro Average (Combined) |
|---|---|---|---|---|---|---|
| Random Selection | 52.3% (all modalities) | | | 50.2% (all modalities) | | |
| Naïve Bayes | 62.2% | 65.3% | 64.7% | 53.2% | 50.8% | 54.1% |
| KNN | 61.0% | 64.7% | 65.3% | 57.7% | 59.9% | 60.4% |
| Logistic Regression | 66.0% | 71.8% | 70.4% | 43.9% | 47.6% | 52.5% |
| Decision Tree | 63.2% | 70.6% | 69.8% | 44.1% | 48.1% | 48.6% |
| Random Forest | 69.0% | 74.4% | 74.2% | 60.5% | 63.7% | 64.2% |
| XGBoost | 68.7% | 69.5% | 72.7% | 63.0% | 63.1% | 66.1% |

| Parameter Combinations (N1–N2) | Precision for Positive Class | Recall | F1 Macro Average | Overall Precision |
|---|---|---|---|---|
| Without Parameters | 44.6% | 74.5% | 64.2% | 64.3% |
| 3–3 | 46.3% | 73.4% | 65.2% | 66.2% |
| 3–5 | 47.6% | 70.8% | 67.5% | 69.1% |
| 5–3 | 45.2% | 73.3% | 66.1% | 66.3% |
| 5–5 | 45.9% | 73.8% | 66.5% | 66.5% |

| Feature Name | Description | Modality |
|---|---|---|
| spectral_flux_mean | Mean value of spectral flux | Audio |
| delta spectral_spread_std | Delta standard deviation of spectral spread | Audio |
| delta mfcc_5_std | Delta standard deviation of the 5th Mel-Frequency Cepstral Coefficient (MFCC 5) | Audio |
| hist_v0 | First class of grey value histogram | Video |
| hist_v3 | Fourth class of grey value histogram | Video |
| hist_s1 | Second class of saturation value histogram | Video |
| hist_s5 | Sixth class of saturation value histogram | Video |
| frame_value_diff | Difference in frame values | Video |
| mag_std | Standard deviation of the optical flow magnitude | Video |
| shot_durations | Duration of current frame | Video |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Marevac, E.; Kadušić, E.; Živić, N.; Buzađija, N.; Tabak, E.; Velić, S. Multimodal Video Summarization Using Machine Learning: A Comprehensive Benchmark of Feature Selection and Classifier Performance. Algorithms 2025, 18, 572. https://doi.org/10.3390/a18090572

Marevac E, Kadušić E, Živić N, Buzađija N, Tabak E, Velić S. Multimodal Video Summarization Using Machine Learning: A Comprehensive Benchmark of Feature Selection and Classifier Performance. Algorithms. 2025; 18(9):572. https://doi.org/10.3390/a18090572

Chicago/Turabian Style: Marevac, Elmin, Esad Kadušić, Nataša Živić, Nevzudin Buzađija, Edin Tabak, and Safet Velić. 2025. "Multimodal Video Summarization Using Machine Learning: A Comprehensive Benchmark of Feature Selection and Classifier Performance" Algorithms 18, no. 9: 572. https://doi.org/10.3390/a18090572

APA Style: Marevac, E., Kadušić, E., Živić, N., Buzađija, N., Tabak, E., & Velić, S. (2025). Multimodal Video Summarization Using Machine Learning: A Comprehensive Benchmark of Feature Selection and Classifier Performance. Algorithms, 18(9), 572. https://doi.org/10.3390/a18090572