Identification of Damaged Canopies in Farmland Artificial Shelterbelts Based on Fusion of Unmanned Aerial Vehicle LiDAR and Multispectral Features

Abstract

1. Introduction

- To construct several machine learning and deep learning models and determine which of them most accurately identifies the distribution of damaged and healthy canopies in farmland shelterbelts.

- To analyze how fusing imagery of different spectral gradients with LiDAR elevation features affects classification accuracy, using several feature-fused images.

2. Materials and Methods

2.1. Study Area

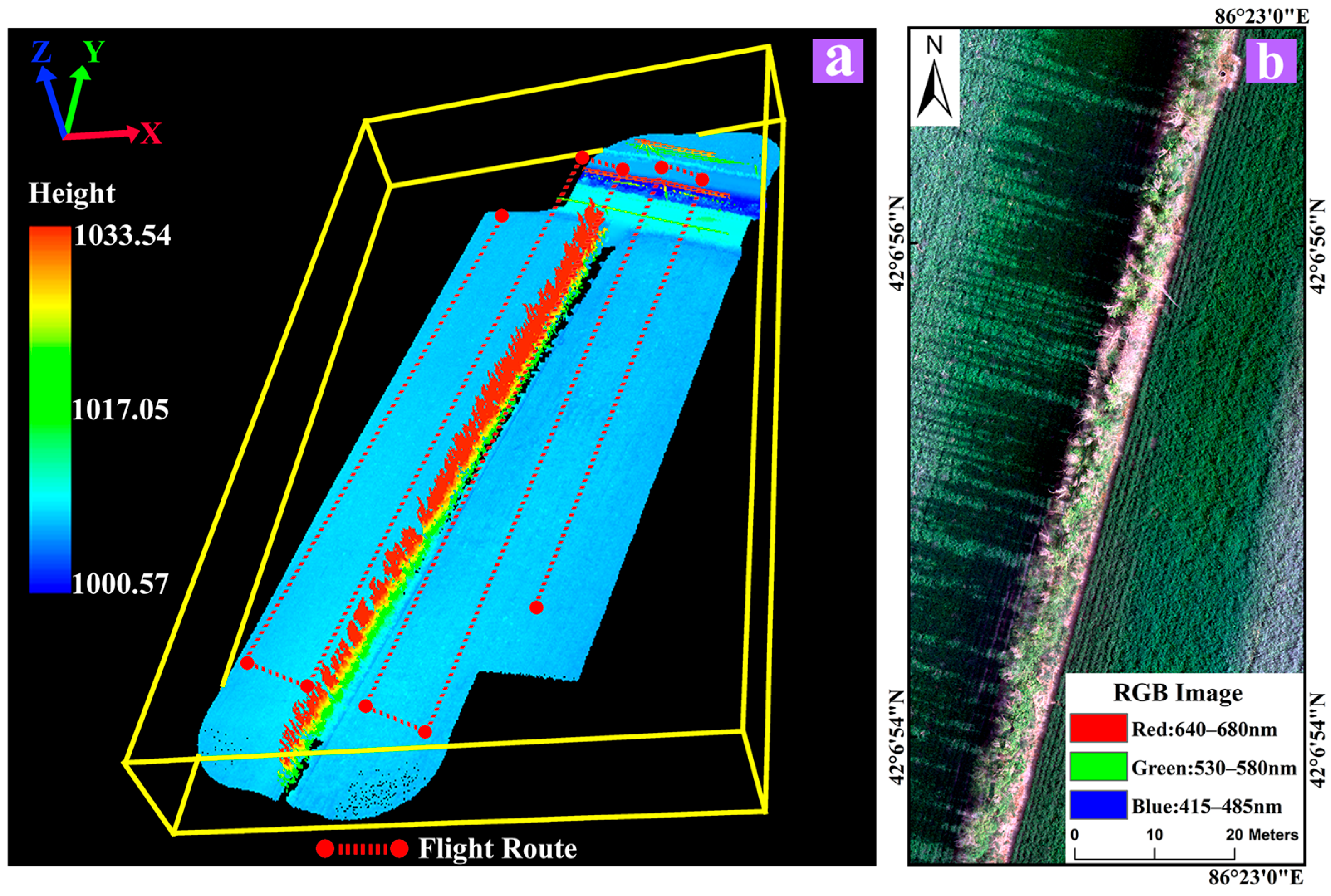

2.2. Data Acquisition and Preprocessing

2.2.1. UAV Data Acquisition

2.2.2. Ground Survey Data

2.2.3. Data Preprocessing

2.3. Research Methodology

2.3.1. Composition of Feature Fusion Images
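
As a rough illustration of what a feature-fused image can look like in practice, the sketch below appends a LiDAR-derived DSM to a stack of spectral bands as one extra channel. This is a minimal sketch under stated assumptions, not the procedure used in the study: the function name `fuse_bands`, the min-max scaling of the DSM, and the random test arrays are all hypothetical, and the two rasters are assumed to be already co-registered on the same pixel grid.

```python
import numpy as np

def fuse_bands(spectral, dsm):
    """Stack a DSM onto spectral bands as an additional channel.

    spectral: array of shape (bands, rows, cols)
    dsm:      array of shape (rows, cols) on the same grid (assumed co-registered)
    """
    spectral = np.asarray(spectral, dtype=np.float32)
    dsm = np.asarray(dsm, dtype=np.float32)
    if spectral.shape[1:] != dsm.shape:
        raise ValueError("spectral bands and DSM must share the same grid")
    # Assumption: rescale elevation to [0, 1] so it is comparable to reflectance.
    span = dsm.max() - dsm.min()
    dsm_scaled = (dsm - dsm.min()) / span if span > 0 else np.zeros_like(dsm)
    return np.concatenate([spectral, dsm_scaled[np.newaxis]], axis=0)

# Hypothetical data: six spectral bands and one elevation layer on a 4 x 5 grid.
rng = np.random.default_rng(0)
msi = rng.random((6, 4, 5))
dsm = rng.random((4, 5)) * 30.0
fused = fuse_bands(msi, dsm)
print(fused.shape)
```

With six spectral bands, the fused array has seven channels; the same function applied to a three-band RGB array would give four.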

2.3.2. Samples and Classifiers

2.3.3. Accuracy Evaluation Methods

3. Results

3.1. Spectral Reflectance Analysis

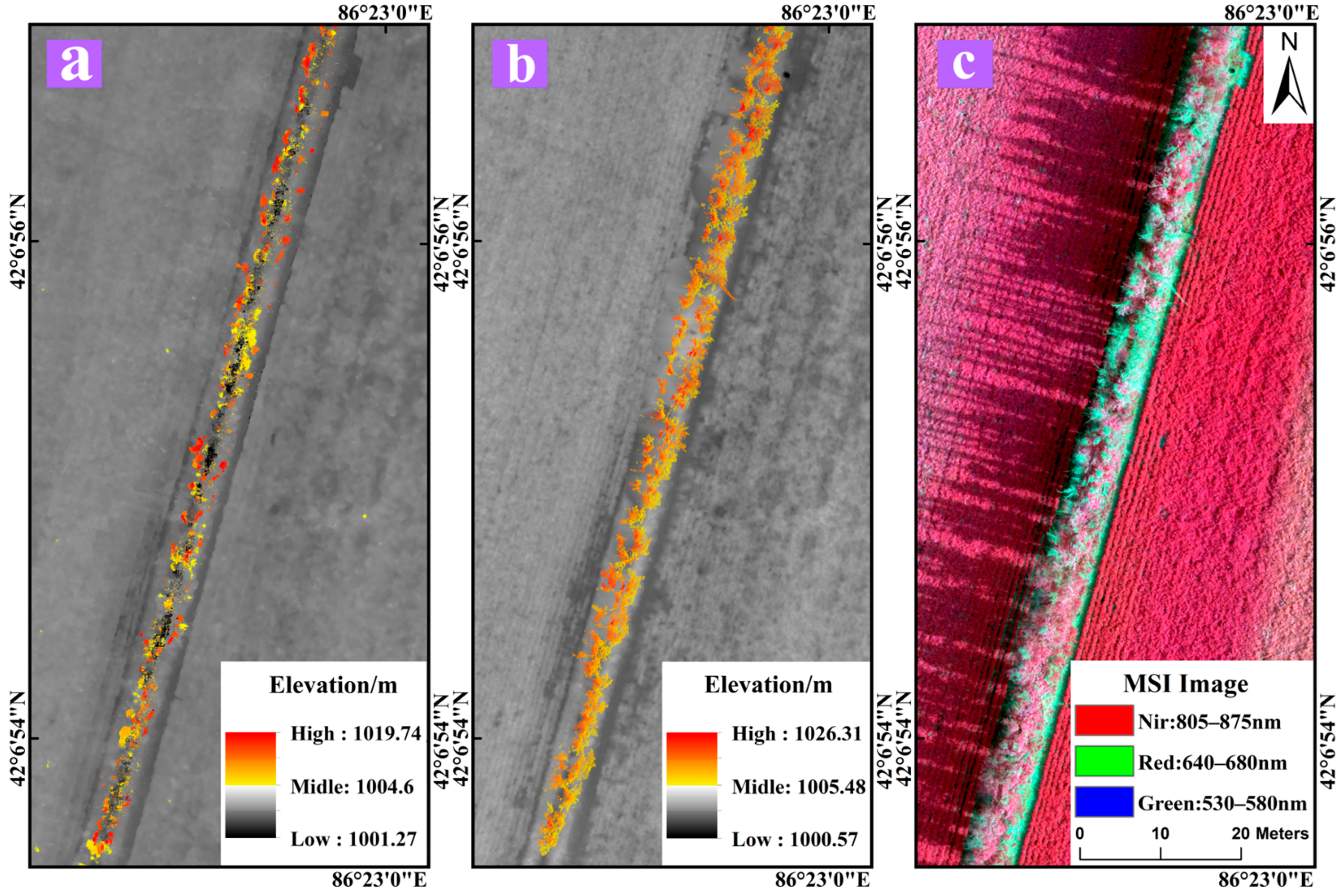

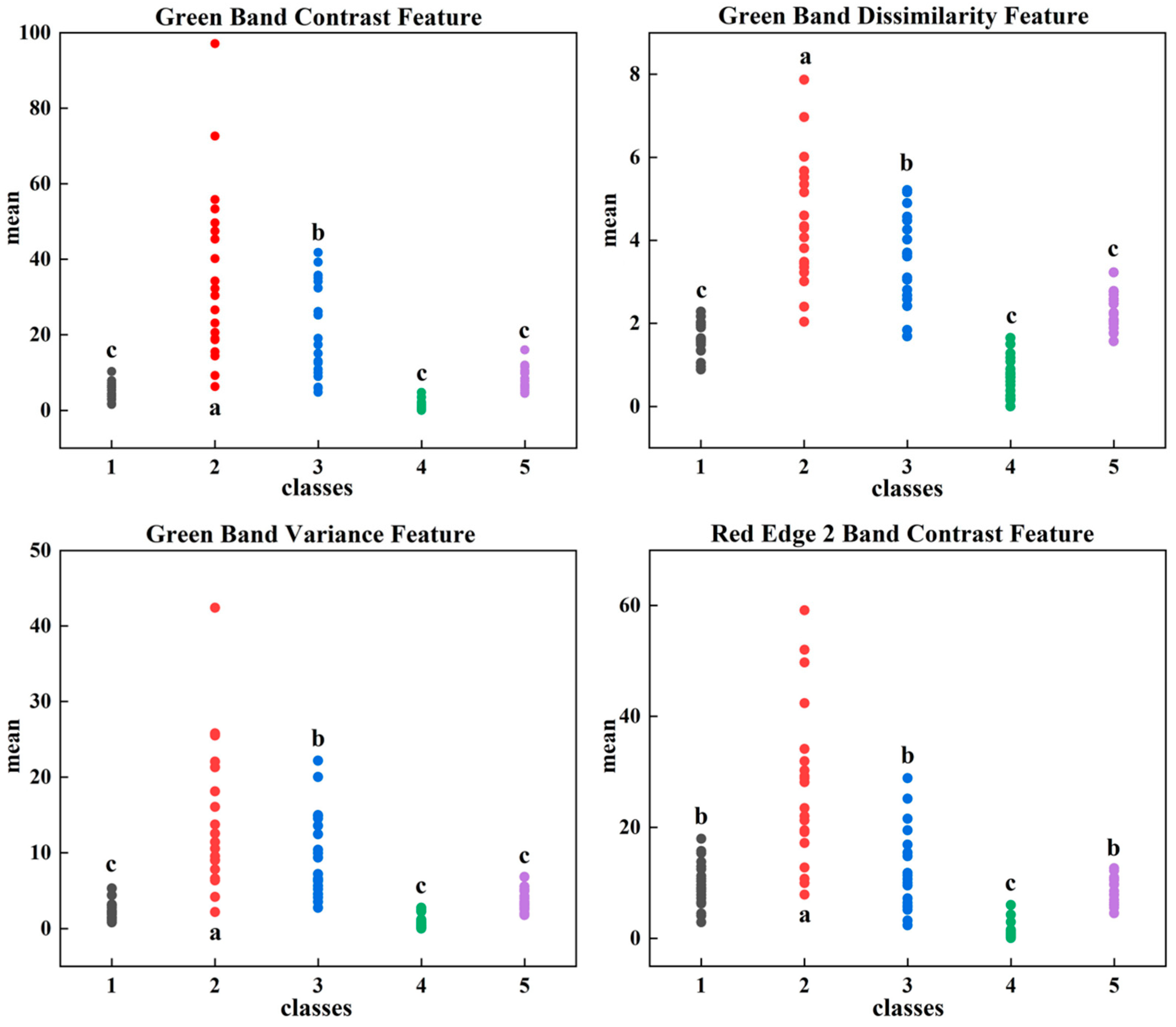

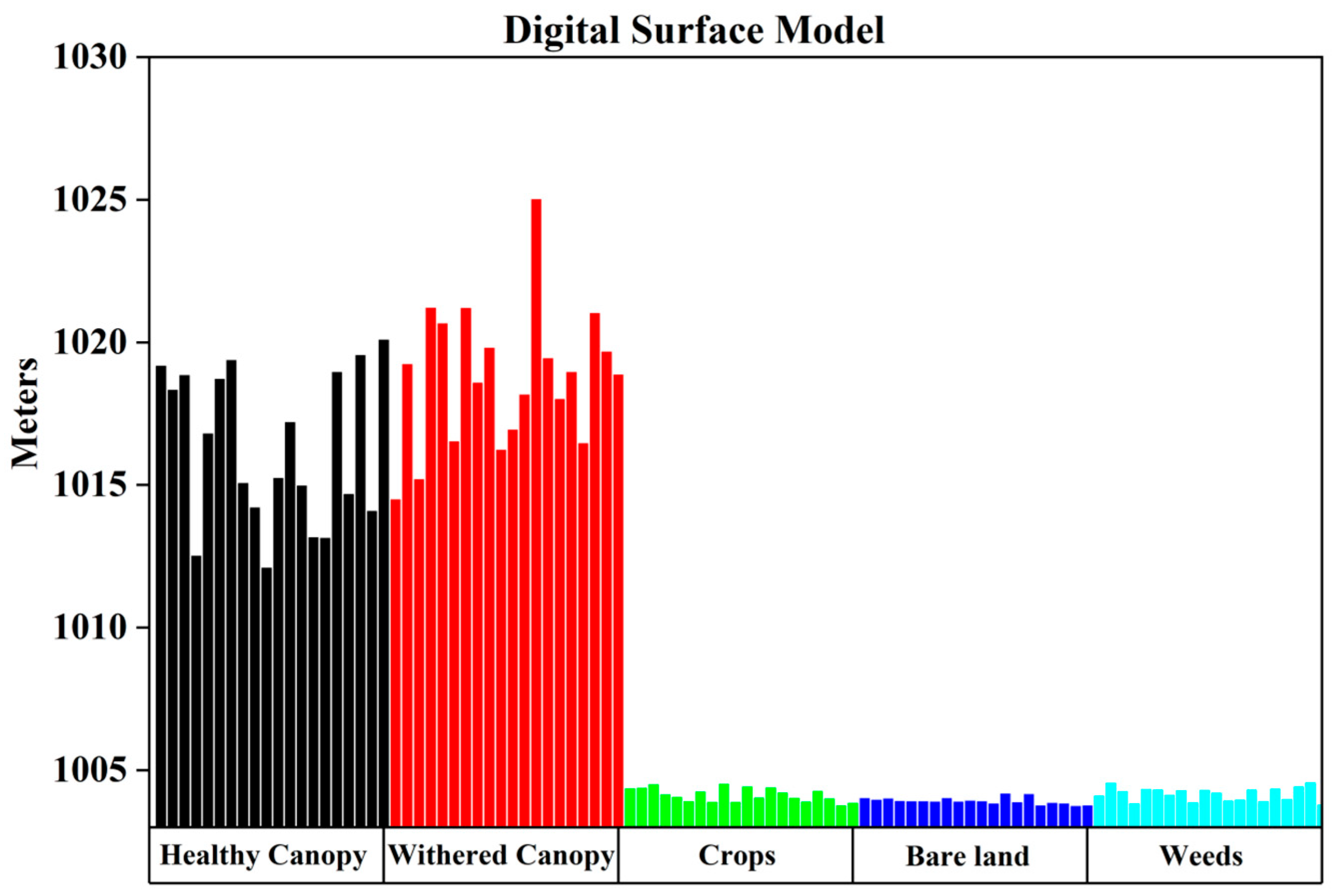

3.2. Texture Features and Elevation Analysis

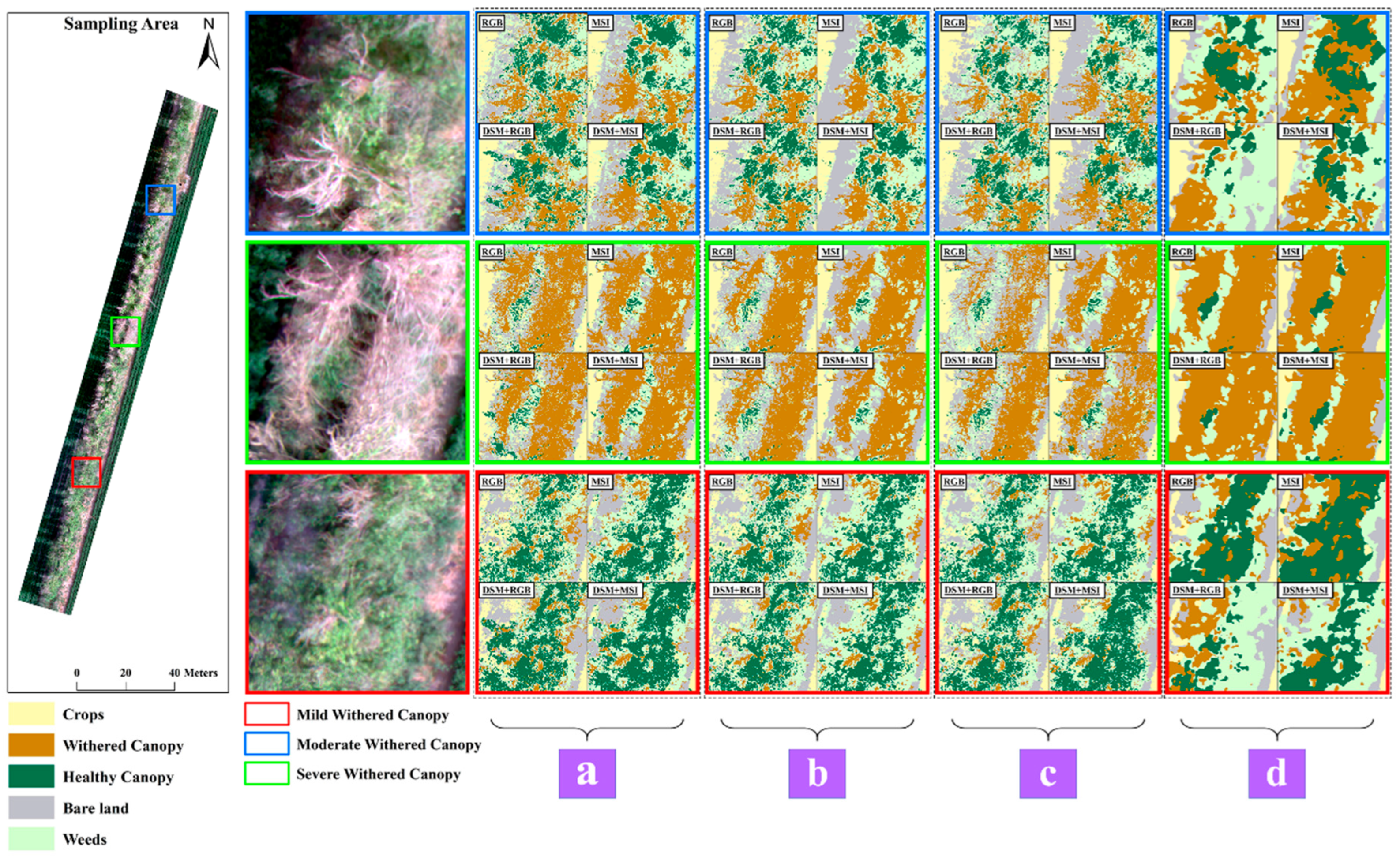

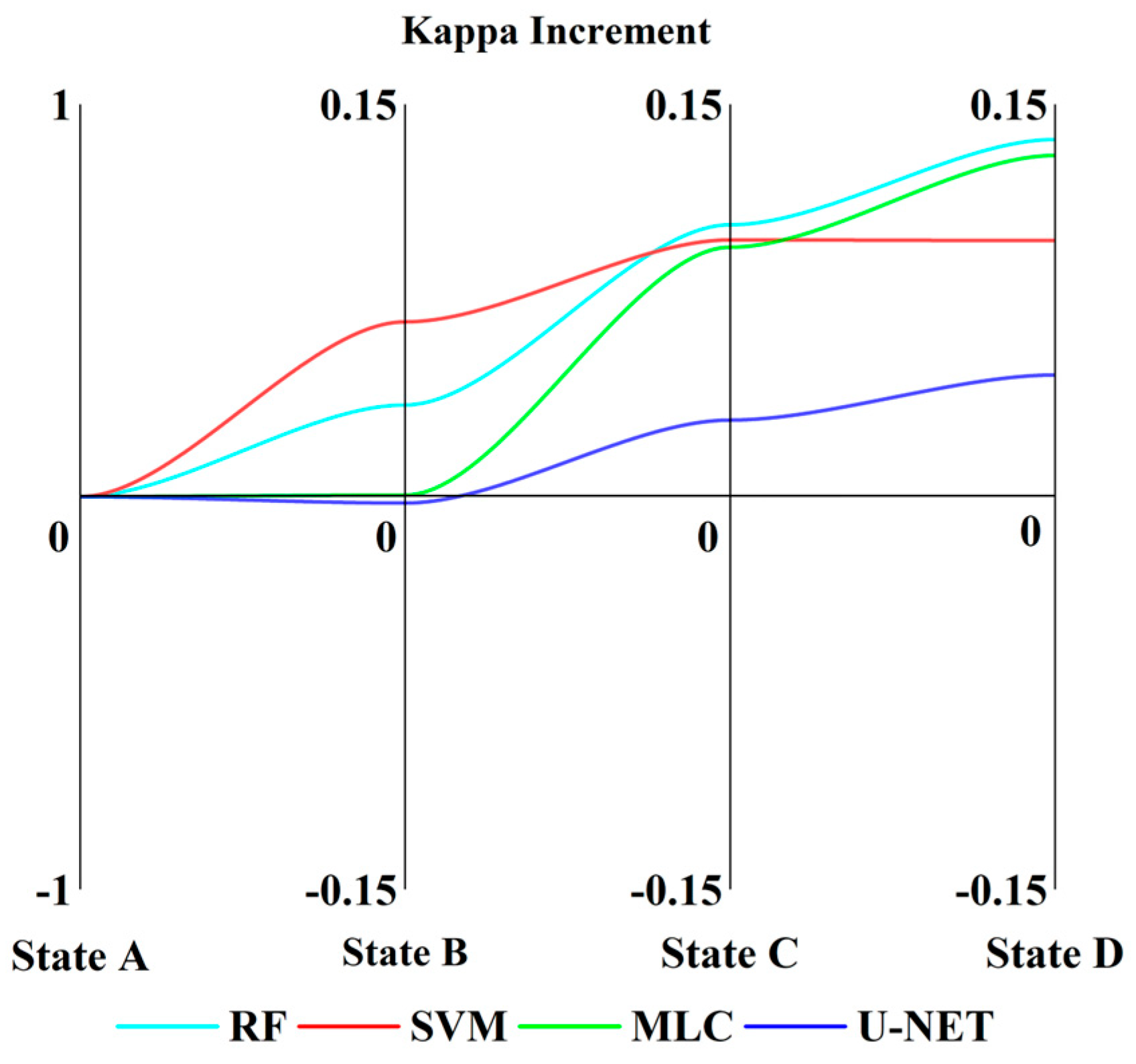

3.3. Classification Result Analysis

3.4. Comparison of Accuracy of Different Classification Models

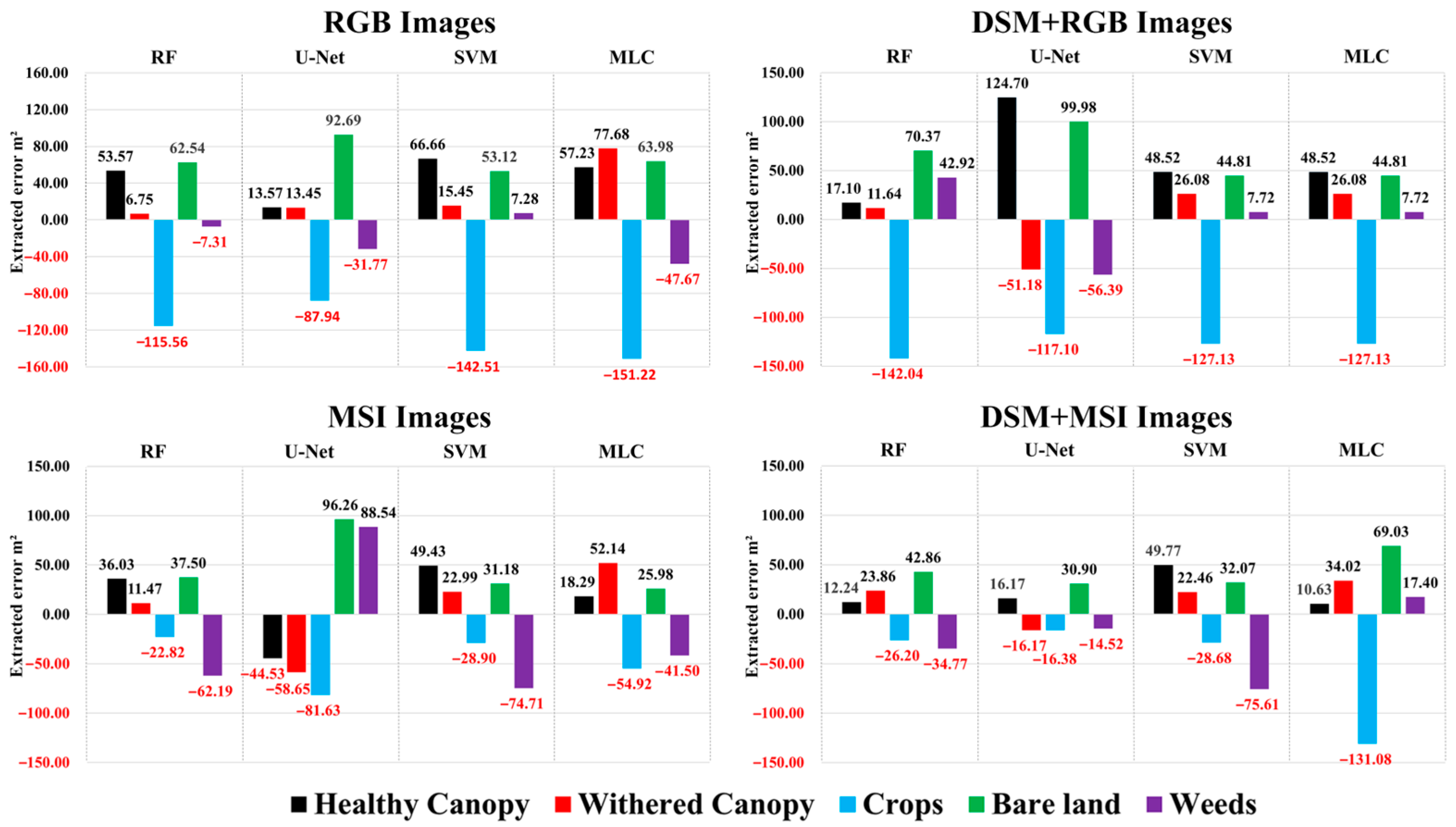

3.5. Canopy Classification Accuracy Analysis

4. Discussion

- Although trees in farmland shelterbelts are planted at a certain spacing, the canopies of mature Populus bolleana often merge, for example through low branches or overlapping crowns of adjacent trees. In identification based on the UAV's vertical projection, this may cause some trees to be missed or left unrecognized.

- Adult Populus bolleana typically grows to heights of 20–30 m, with canopies located more than 2 m above the ground. Due to their physiological characteristics, when the trees are affected by pests, diseases, growth decline, or dieback, the upper portions wilt first, while many healthy branches and leaves remain nearer the ground. UAV multispectral data therefore capture only limited spectral information about the vertical cross-section of the trees. Future work may explore increasing the tilt angle of the UAV or projecting vertical cross-sections horizontally, combined with radar information, to further improve the identification of healthy canopy in farmland shelterbelts.

5. Conclusions

Author Contributions

Funding

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Channel | Properties | Central Wavelength/nm |
|---|---|---|
| Band 1 | Blue | 450@35 |
| Band 2 | Green | 555@25 |
| Band 3 | Near infrared | 840@35 |
| Band 4 | Red | 660@20 |
| Band 5 | Red-edge 1 | 720@10 |
| Band 6 | Red-edge 2 | 750@15 |

| Confusion Matrix | | True Value: Positive | True Value: Negative |
|---|---|---|---|
| Predicted value | Positive | TP | FP |
| Predicted value | Negative | FN | TN |
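
The overall accuracy (OA) and Kappa values reported for the classifiers can be derived from a confusion matrix like the one above. The sketch below uses the standard textbook definitions of both measures, which are assumed here to match the ones used in the study; the function names and the counts are hypothetical and serve only to show the arithmetic.

```python
import numpy as np

def overall_accuracy(cm):
    """Share of samples on the diagonal of the confusion matrix."""
    cm = np.asarray(cm, dtype=float)
    return np.trace(cm) / cm.sum()

def cohen_kappa(cm):
    """Cohen's kappa: agreement corrected for chance agreement."""
    cm = np.asarray(cm, dtype=float)
    n = cm.sum()
    p_observed = np.trace(cm) / n
    # Chance agreement from the row (predicted) and column (true) totals.
    p_expected = (cm.sum(axis=1) * cm.sum(axis=0)).sum() / n**2
    return (p_observed - p_expected) / (1.0 - p_expected)

# Hypothetical counts, laid out as in the table: rows = predicted, columns = true.
cm = [[40, 10],   # TP, FP
      [5, 45]]    # FN, TN

oa = overall_accuracy(cm)
kappa = cohen_kappa(cm)
print(f"OA = {oa:.2%}, Kappa = {kappa:.4f}")
```

Both functions also accept larger square matrices, so the same calculation extends to a multi-class confusion matrix.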

| Bands | Mean | Variance | Homogeneity | Contrast | Dissimilarity | Entropy | Second Moment | Correlation |
|---|---|---|---|---|---|---|---|---|
| Blue Band | −0.288 ** | −0.373 ** | 0.413 ** | −0.390 ** | −0.432 ** | −0.391 ** | 0.315 ** | 0.466 ** |
| Green Band | −0.481 ** | −0.615 ** | 0.487 ** | −0.620 ** | −0.639 ** | −0.357 ** | 0.292 ** | 0.322 ** |
| Red Band | −0.319 ** | −0.414 ** | 0.388 ** | −0.433 ** | −0.444 ** | −0.353 ** | 0.294 ** | 0.471 ** |
| NIR Band | −0.282 ** | −0.065 | 0.073 | −0.028 | −0.031 | −0.189 | 0.188 | 0.228 * |
| RE1 Band | −0.442 ** | −0.591 ** | 0.422 ** | −0.603 ** | −0.588 ** | −0.348 ** | 0.304 ** | 0.291 ** |
| RE2 Band | −0.328 ** | −0.290 ** | 0.263 ** | −0.285 ** | −0.275 ** | −0.310 ** | 0.294 ** | 0.273 ** |

| Images | Class | Ground Measurement Area | RF Extracted Area | U-Net Extracted Area | SVM Extracted Area | MLC Extracted Area |
|---|---|---|---|---|---|---|
| RGB | Healthy Canopy | 244.96 | 191.39 | 231.39 | 178.30 | 187.73 |
| RGB | Withered Canopy | 181.53 | 174.78 | 168.09 | 166.08 | 103.86 |
| RGB | Crops | 594.09 | 709.65 | 682.03 | 736.60 | 745.31 |
| RGB | Bare land | 289.05 | 226.51 | 196.37 | 235.93 | 225.07 |
| RGB | Weeds | 272.54 | 279.85 | 304.31 | 265.26 | 320.22 |
| DSM + RGB | Healthy Canopy | 244.96 | 227.86 | 120.26 | 196.44 | 196.44 |
| DSM + RGB | Withered Canopy | 181.53 | 169.89 | 232.71 | 155.46 | 155.46 |
| DSM + RGB | Crops | 594.09 | 736.13 | 711.19 | 721.22 | 721.22 |
| DSM + RGB | Bare land | 289.05 | 218.68 | 189.08 | 244.24 | 244.24 |
| DSM + RGB | Weeds | 272.54 | 229.62 | 328.93 | 264.82 | 264.82 |
| MSI | Healthy Canopy | 244.96 | 208.93 | 289.49 | 195.53 | 226.67 |
| MSI | Withered Canopy | 181.53 | 170.06 | 240.18 | 158.54 | 129.39 |
| MSI | Crops | 594.09 | 616.91 | 675.73 | 622.99 | 649.01 |
| MSI | Bare land | 289.05 | 251.55 | 192.79 | 257.88 | 263.07 |
| MSI | Weeds | 272.54 | 334.73 | 184.00 | 347.25 | 314.04 |
| DSM + MSI | Healthy Canopy | 244.96 | 232.72 | 228.80 | 195.20 | 234.33 |
| DSM + MSI | Withered Canopy | 181.53 | 157.67 | 197.70 | 159.07 | 147.51 |
| DSM + MSI | Crops | 594.09 | 620.29 | 610.47 | 622.77 | 725.17 |
| DSM + MSI | Bare land | 289.05 | 246.19 | 258.15 | 256.99 | 220.02 |
| DSM + MSI | Weeds | 272.54 | 307.31 | 287.06 | 348.15 | 255.14 |
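
One way to read the area table is to express each extracted area as a signed percentage deviation from the ground measurement. The sketch below does this for the healthy-canopy row of the DSM + MSI image, using the values from the table. The relative-error measure and the names `relative_error_percent`, `errors`, and `closest` are illustrative assumptions and are not taken from the study; the area unit is whatever the table uses and cancels out in the ratio.

```python
# Healthy canopy, DSM + MSI image (values copied from the area table).
ground_area = 244.96
extracted_area = {"RF": 232.72, "U-Net": 228.80, "SVM": 195.20, "MLC": 234.33}

def relative_error_percent(extracted, reference):
    """Signed deviation of an extracted area from the reference, in percent."""
    return (extracted - reference) / reference * 100.0

errors = {
    model: relative_error_percent(area, ground_area)
    for model, area in extracted_area.items()
}

# Model whose extracted area is closest to the ground measurement for this row.
closest = min(errors, key=lambda model: abs(errors[model]))

for model, err in errors.items():
    print(f"{model}: {err:+.2f}%")
print("closest:", closest)
```

A negative value means the classifier extracted less area than was measured on the ground. A small area error for one class does not by itself imply a correct pixel-level classification, so it complements rather than replaces OA and Kappa.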

| Images | RF Kappa | RF OA | SVM Kappa | SVM OA | MLC Kappa | MLC OA | U-Net Kappa | U-Net OA |
|---|---|---|---|---|---|---|---|---|
| RGB images | 0.7291 | 82.47% | 0.7126 | 81.35% | 0.7049 | 80.92% | 0.8147 | 87.71% |
| MSI images | 0.833 | 89.37% | 0.8107 | 87.91% | 0.8002 | 87.31% | 0.8440 | 89.63% |
| Fusion images of DSM + RGB | 0.7642 | 85.02% | 0.7794 | 85.88% | 0.7055 | 80.96% | 0.8122 | 87.71% |
| Fusion images of DSM + MSI | 0.8656 | 91.55% | 0.8105 | 87.90% | 0.8353 | 89.61% | 0.8612 | 91.59% |


© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Xiang, Z.; Li, T.; Lv, Y.; Wang, R.; Sun, T.; Gao, Y.; Wu, H. Identification of Damaged Canopies in Farmland Artificial Shelterbelts Based on Fusion of Unmanned Aerial Vehicle LiDAR and Multispectral Features. Forests 2024, 15, 891. https://doi.org/10.3390/f15050891
