A Novel Method for Extracting DBH and Crown Base Height in Forests Using Small Motion Clips

Abstract

1. Introduction

2. Materials and Methods

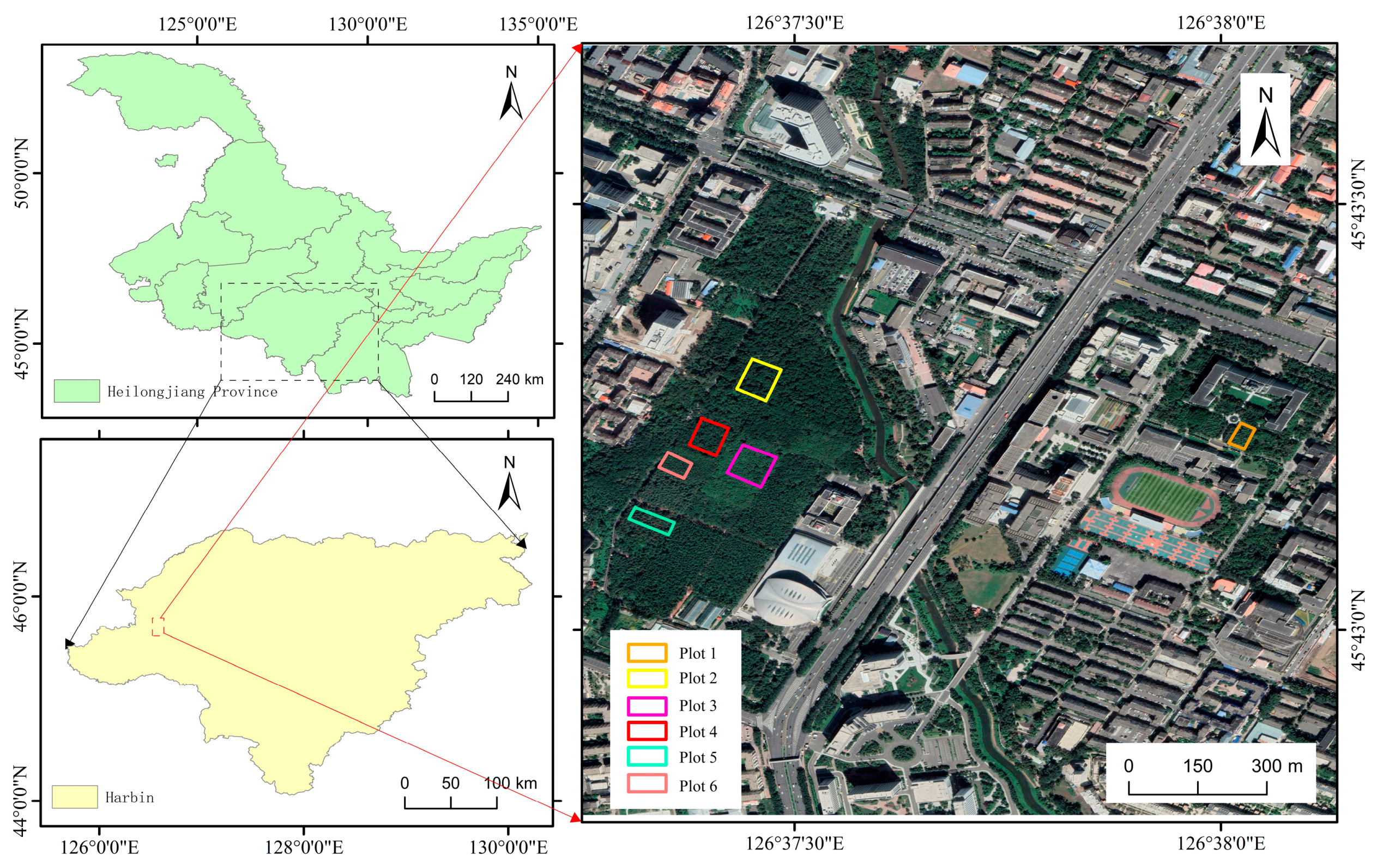

2.1. Study Area

2.2. Collection Data

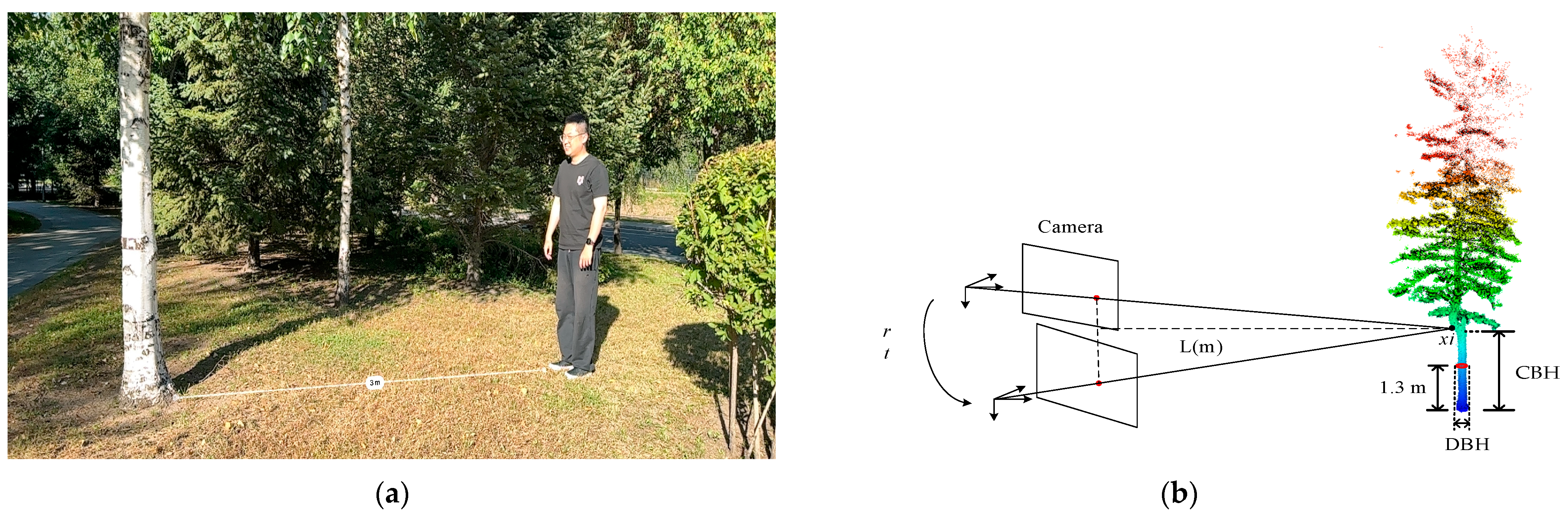

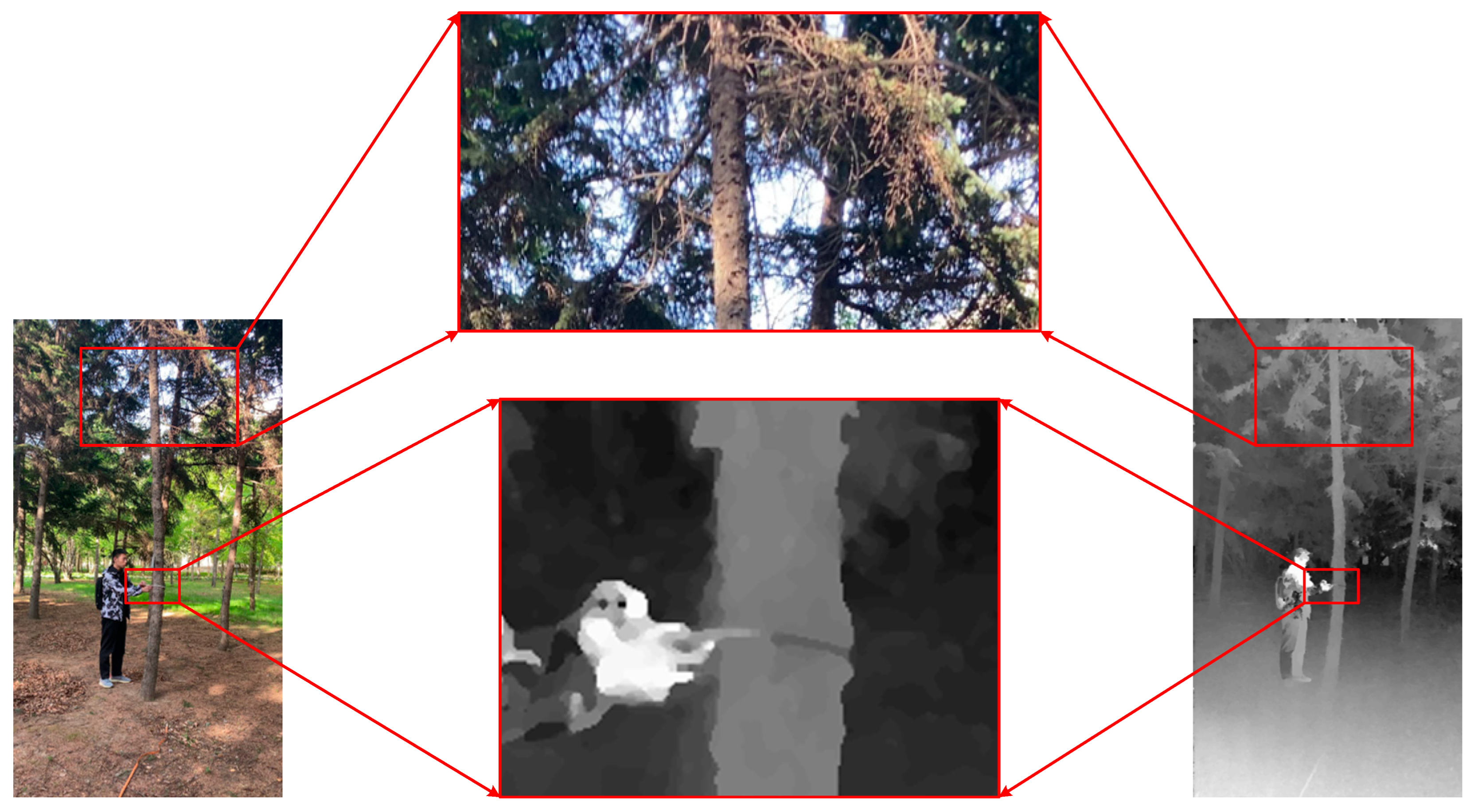

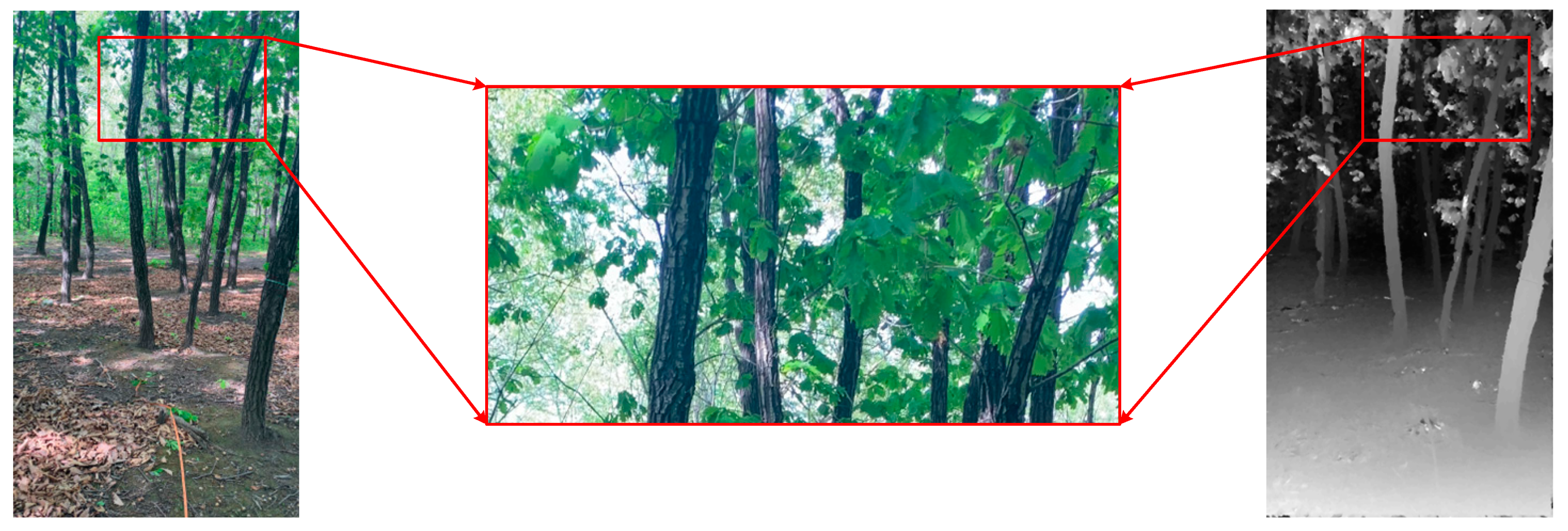

2.3. Small Motion Clip Data

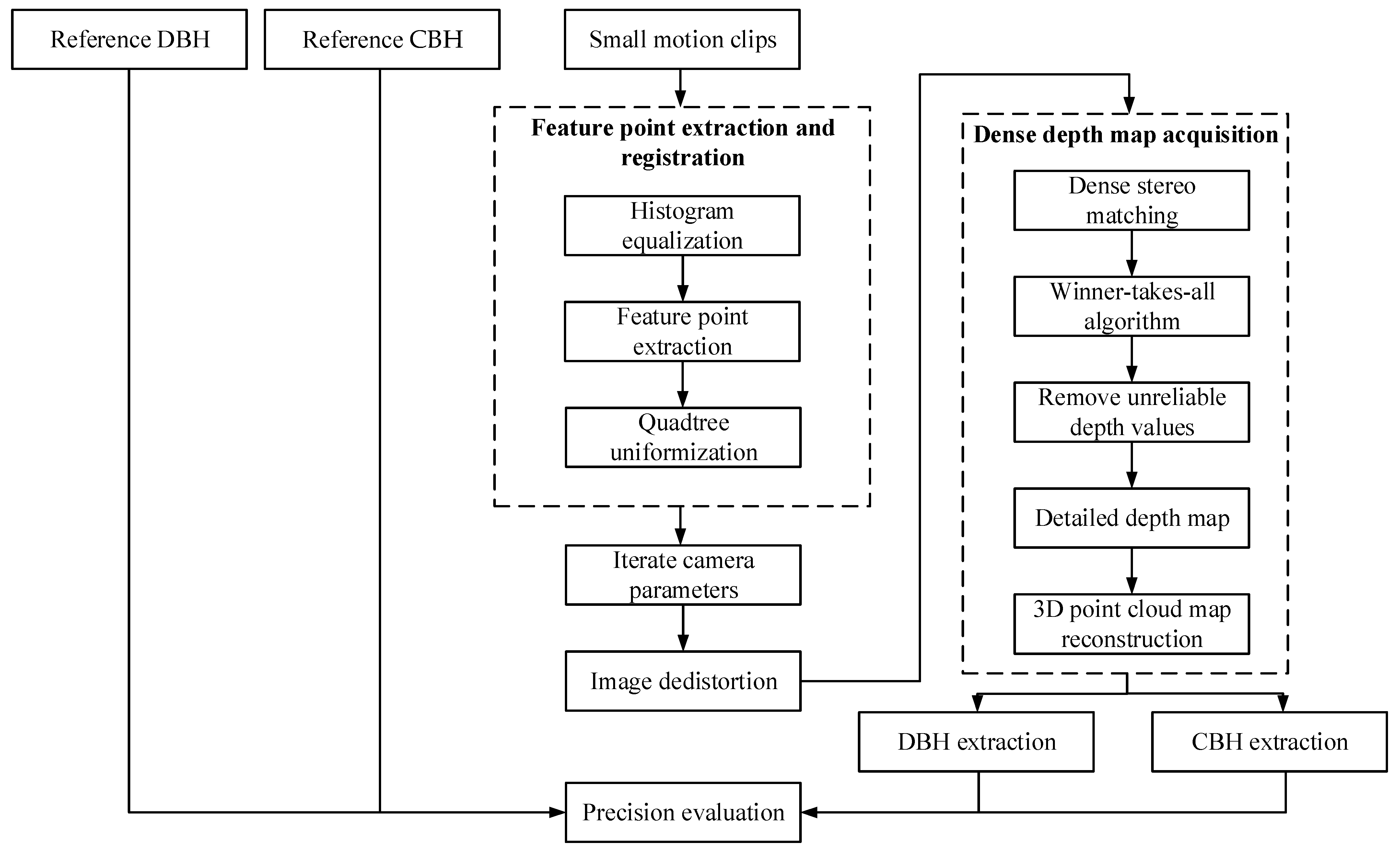

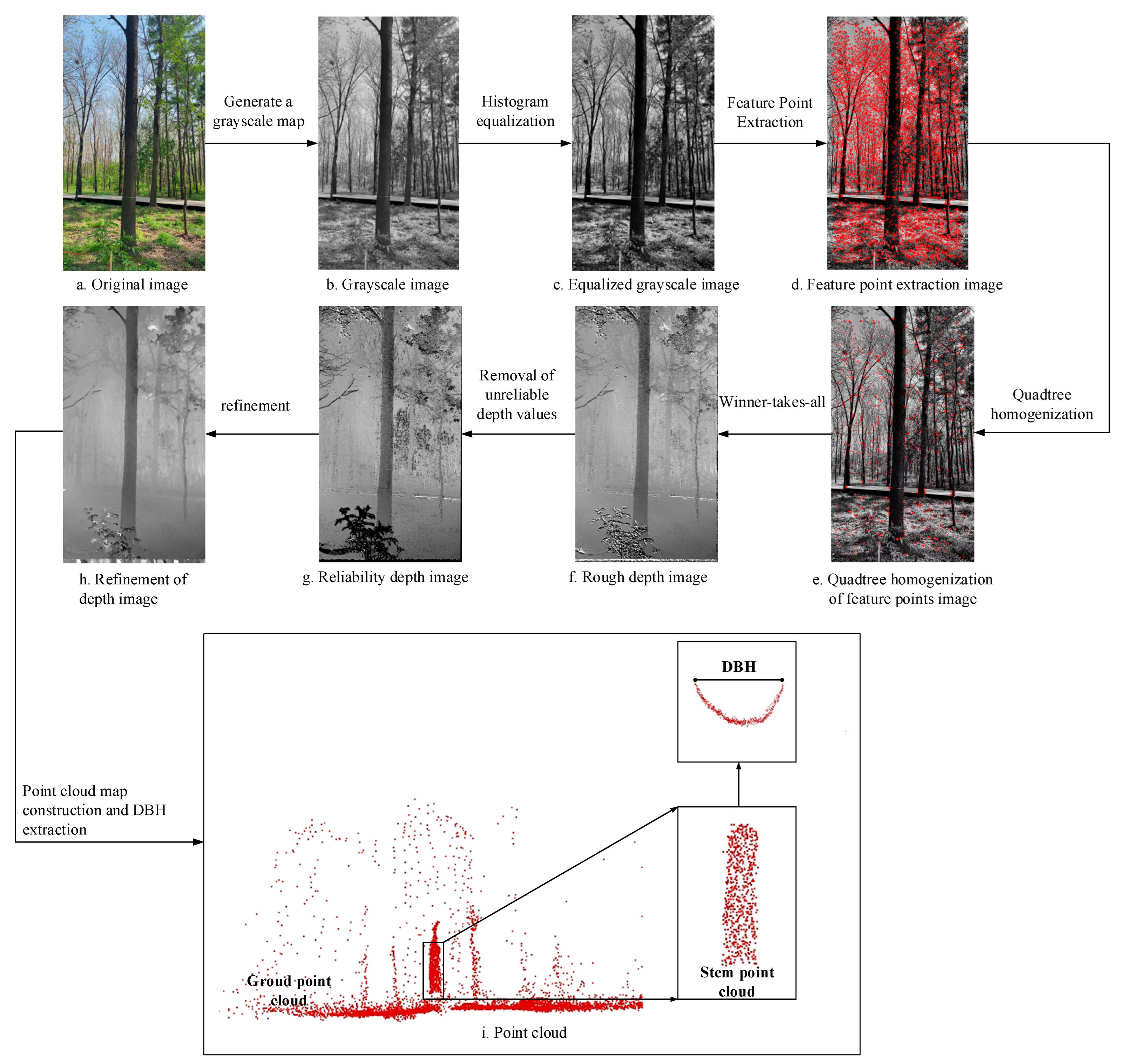

2.4. DBH and CBH Extraction Method Based on Small Motion Clip

2.4.1. Feature Point Extraction and Registration

2.4.2. Depth Estimation of Small Motion Clip

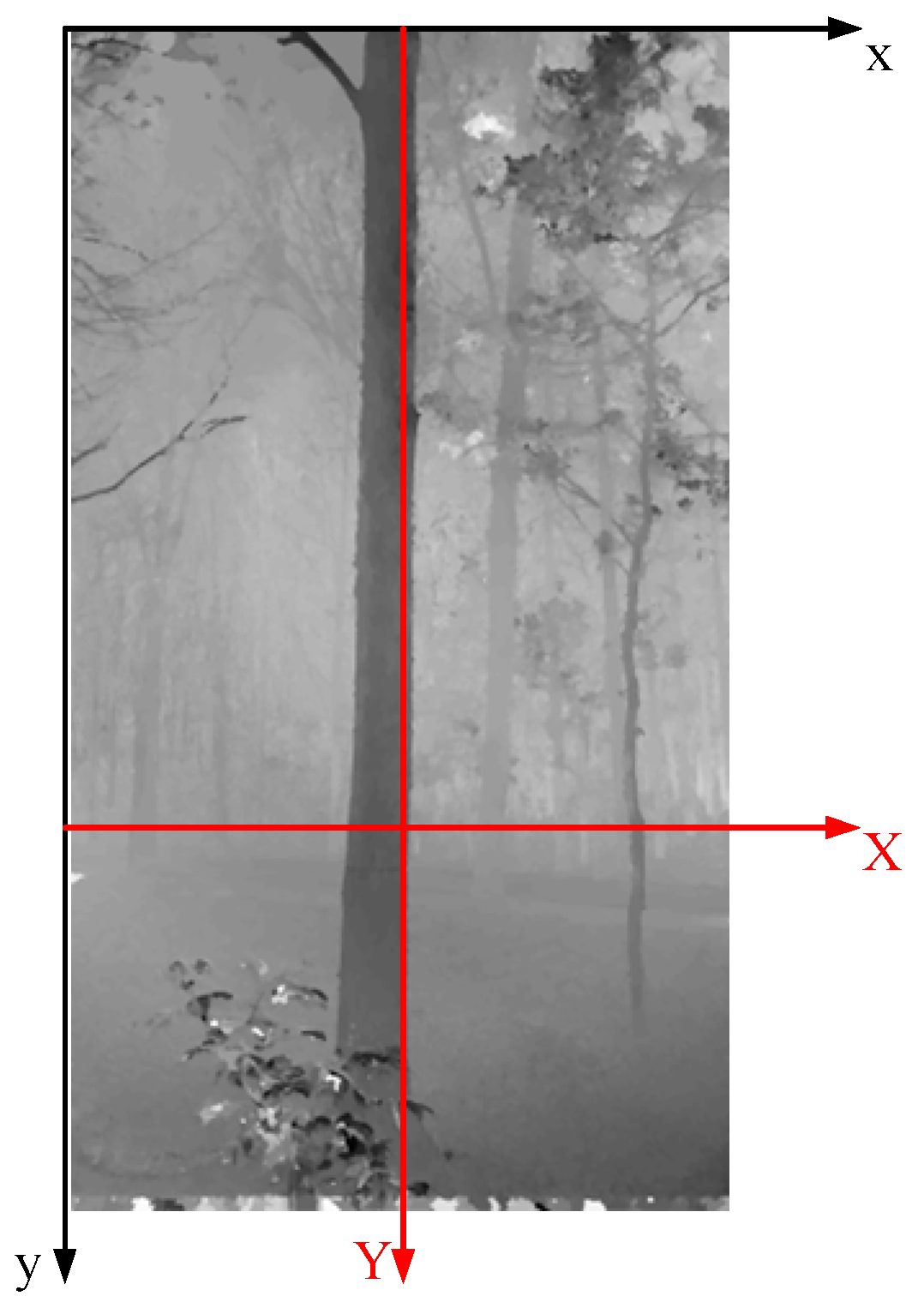

2.4.3. Point Cloud Map Reconstruction and Parameter Extraction

2.5. Evaluation Indicators

3. Results

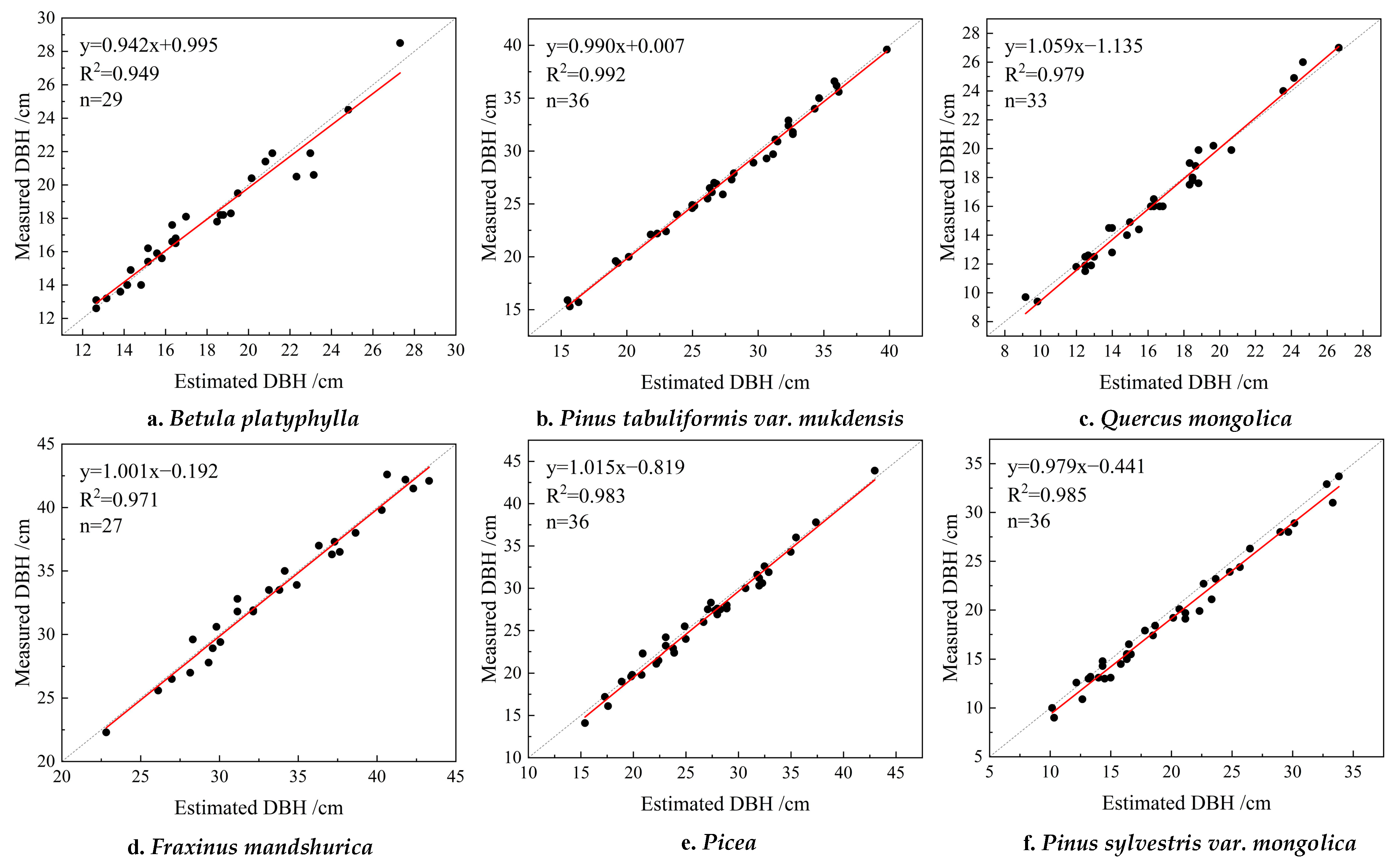

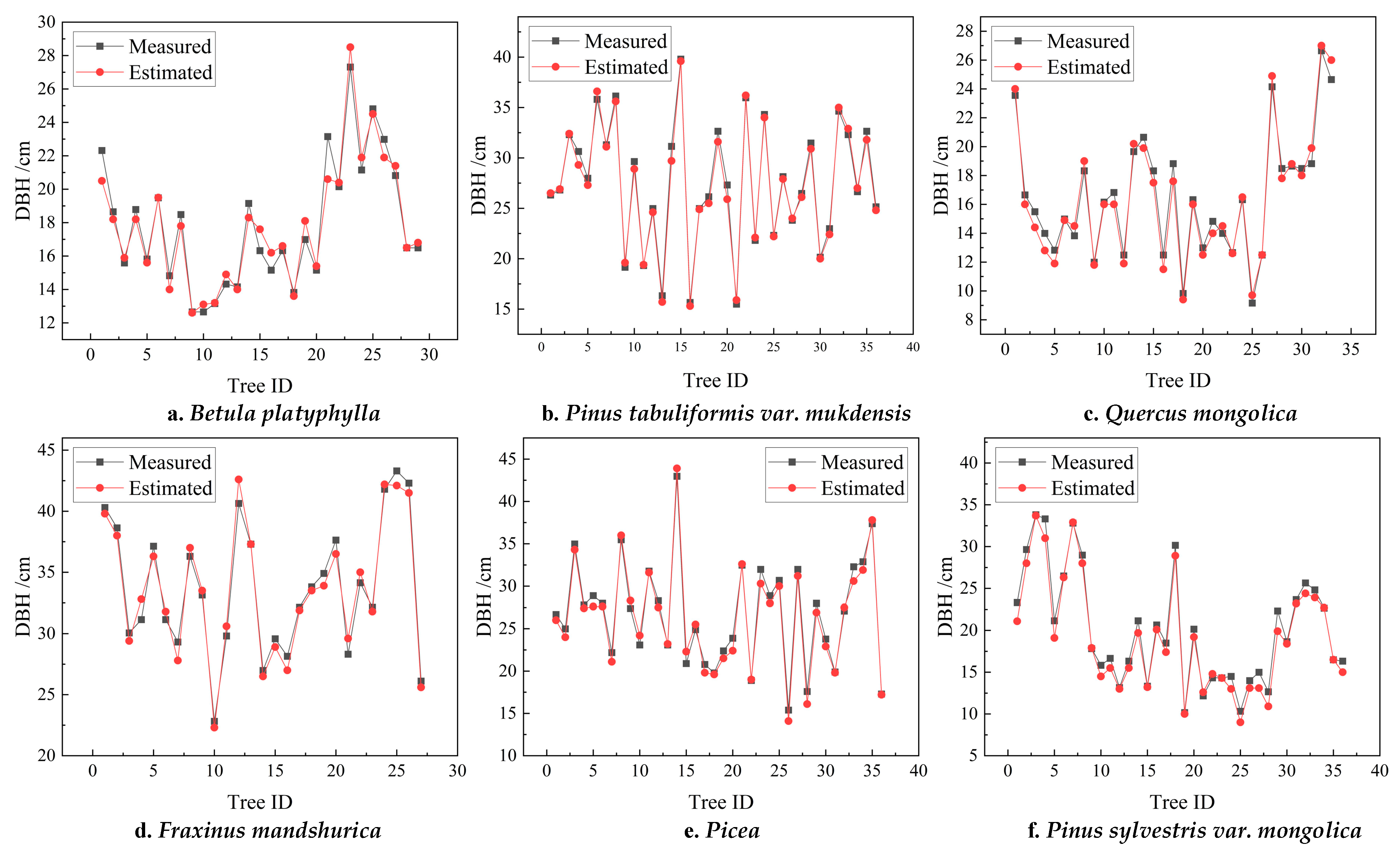

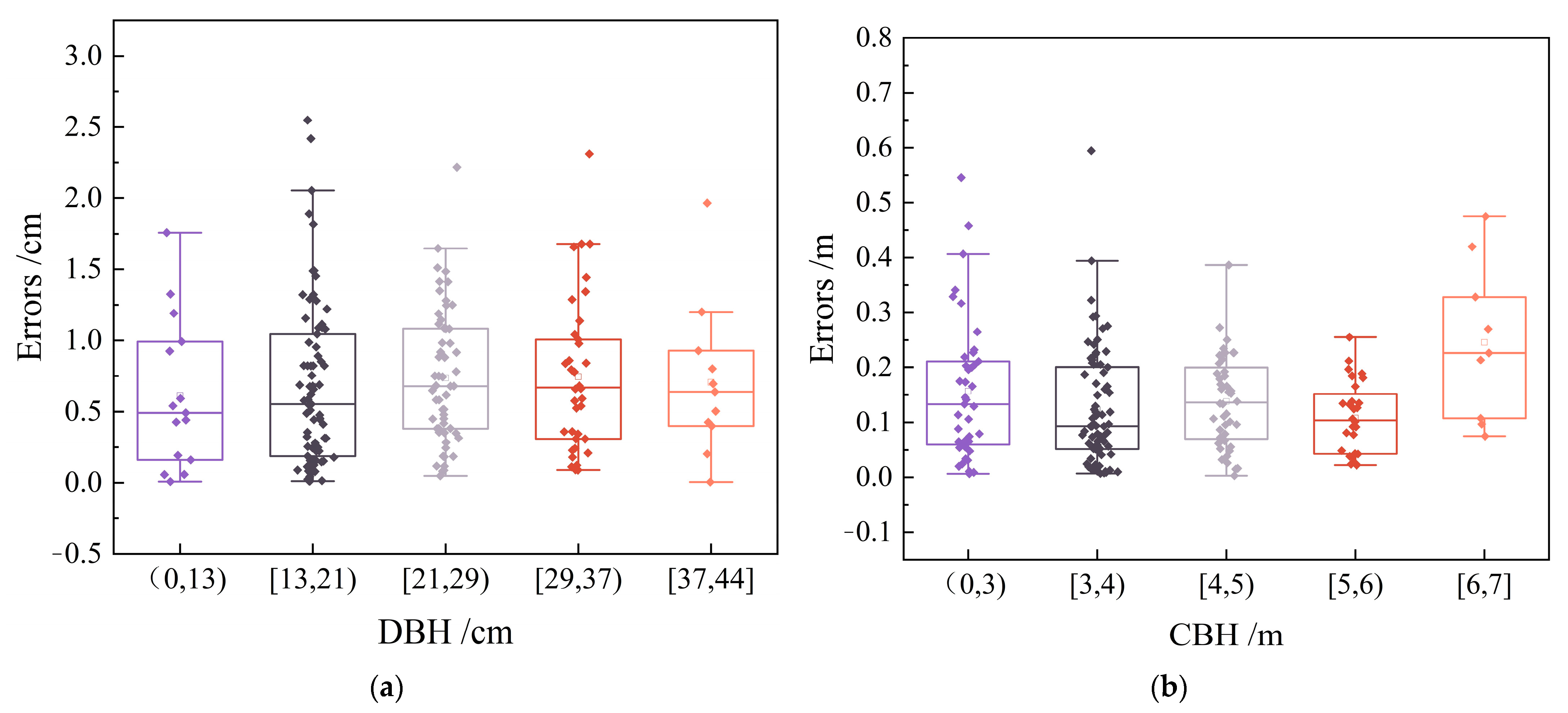

3.1. Accuracy Assessment and Error Analysis of DBH Estimation

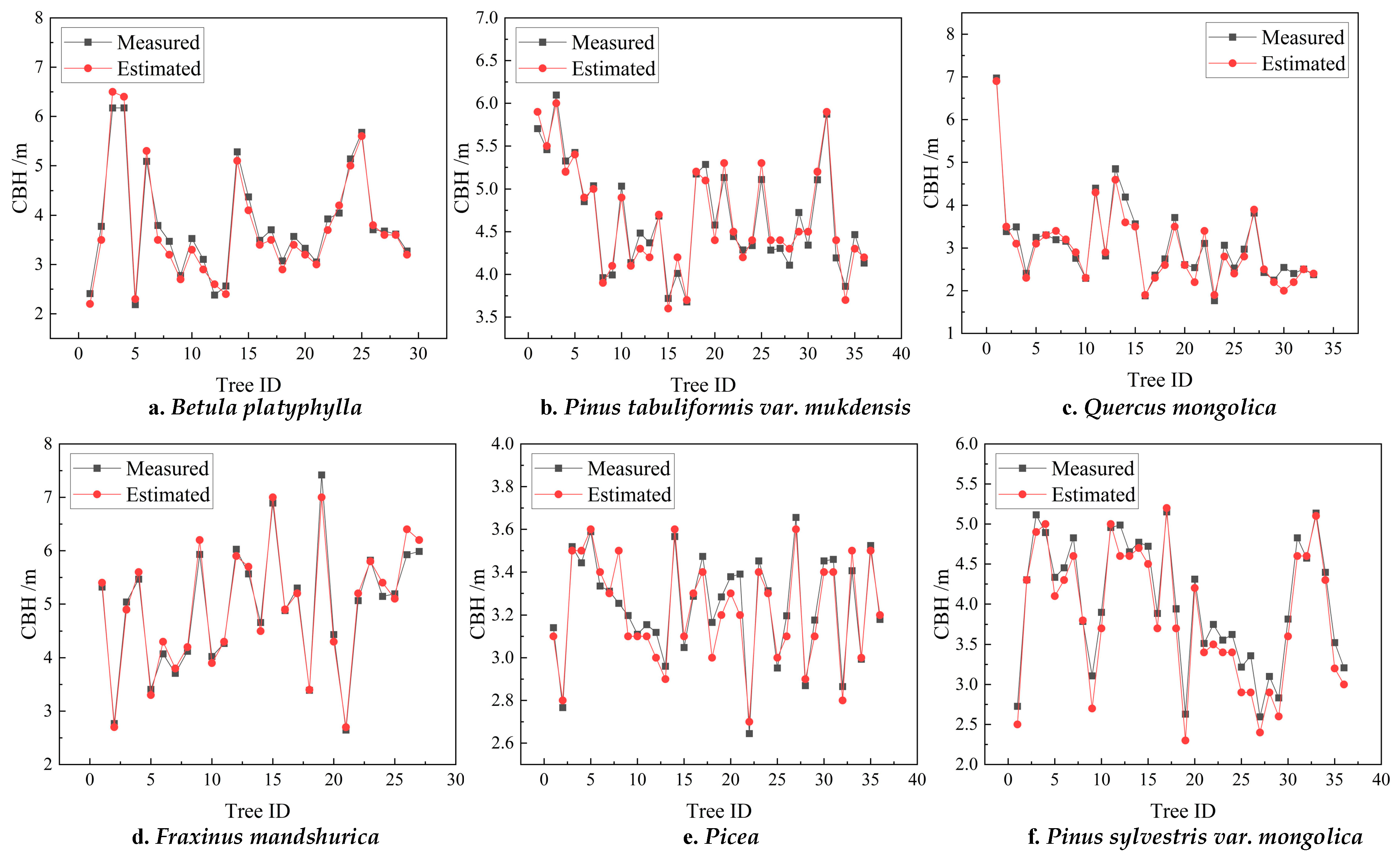

3.2. Accuracy Assessment and Error Analysis of CBH Estimation

4. Discussion

4.1. Impact of Tree Species Characteristics on the Accuracy of DBH and CBH Estimation and Error Analysis

4.2. Error Distribution and Key Influencing Factors in DBH and CBH Estimation

4.3. Comparison of DBH Extraction Algorithm Accuracy and Efficiency with Existing Methods

4.4. Comparison with LiDAR-Based Methods

5. Conclusions

- (1) Traditional close-range photogrammetry (CRP) suffers from large stereo matching errors, long acquisition times, and heavy computational demands when generating 3D point cloud maps. To address these issues, small motion clips are used to estimate image depth, and the feature point extraction algorithm is optimized to reduce the mismatch rate of feature points. As a result, the mean relative error is 3.35% for DBH extraction and 3.86% for CBH extraction, an accuracy comparable to that of similar algorithms.

- (2) Tree species can influence the error in DBH measurement. The DBH relative errors across the sample plots ranged from 1.81% to 5.42%. Plot 6 exhibited the lowest accuracy because the trunk of camphor pine (Pinus sylvestris var. mongolica) has deep, scaly fissures, which lead to a jagged depth map of the reconstructed trunk. The overall error trend indicates a correlation between tree species and extraction accuracy.

- (3) The estimation error of CBH is closely related to branch height variability. When branches are too low, they merge with understory shrubs and are difficult to distinguish. When branches are too high, they overlap with nearby tree canopies, which complicates segmentation. Both cases increase estimation errors, especially in plots with dense vegetation.
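
The headline figures in the conclusions can be checked against the per-plot values reported in the DBH and CBH accuracy tables. The sketch below assumes that the overall η is the unweighted mean of the six per-plot η values; the helper name `summarize` and the dictionary layout are illustrative only and are not taken from the paper.

```python
# Per-plot mean relative errors (eta, in %) copied from the DBH and CBH accuracy tables.
dbh_eta = {1: 3.39, 2: 1.81, 3: 3.89, 4: 2.38, 5: 3.19, 6: 5.42}
cbh_eta = {1: 4.87, 2: 2.48, 3: 5.50, 4: 2.74, 5: 1.97, 6: 5.58}


def summarize(eta_by_plot):
    """Return (mean, best_plot, worst_plot) for a plot -> eta mapping.

    Assumption: the overall value is an unweighted mean over plots.
    """
    mean = sum(eta_by_plot.values()) / len(eta_by_plot)
    best = min(eta_by_plot, key=eta_by_plot.get)    # smallest relative error
    worst = max(eta_by_plot, key=eta_by_plot.get)   # largest relative error
    return mean, best, worst


dbh_mean, dbh_best, dbh_worst = summarize(dbh_eta)
cbh_mean, cbh_best, cbh_worst = summarize(cbh_eta)

print(f"DBH: mean {dbh_mean:.2f}%, best plot {dbh_best}, worst plot {dbh_worst}")
print(f"CBH: mean {cbh_mean:.2f}%, best plot {cbh_best}, worst plot {cbh_worst}")
```

Under this assumption the means round to 3.35% for DBH and 3.86% for CBH, and Plot 6 has the largest η in both tables.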

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Ge, J.; Zhang, Z.; Lin, B. Towards Carbon Neutrality: How Much Do Forest Carbon Sinks Cost in China? Environ. Impact Assess. Rev. 2023, 98, 106949.

- Kurz, W.A.; Dymond, C.C.; Stinson, G.; Rampley, G.J.; Neilson, E.T.; Carroll, A.L.; Ebata, T.; Safranyik, L. Mountain Pine Beetle and Forest Carbon Feedback to Climate Change. Nature 2008, 452, 987–990.

- Clawson, M. Forests in the Long Sweep of American History. Science 1979, 204, 1168–1174.

- Montoya, O.; Icasio-Hernández, O.; Salas, J. TreeTool: A Tool for Detecting Trees and Estimating Their DBH Using Forest Point Clouds. SoftwareX 2021, 16, 100889.

- Liu, G.; Wang, J.; Dong, P.; Chen, Y.; Liu, Z. Estimating Individual Tree Height and Diameter at Breast Height (DBH) from Terrestrial Laser Scanning (TLS) Data at Plot Level. Forests 2018, 9, 398.

- Koreň, M.; Scheer, L.; Sedmák, R.; Fabrika, M. Evaluation of Tree Stump Measurement Methods for Estimating Diameter at Breast Height and Tree Height. Int. J. Appl. Earth Obs. Geoinf. 2024, 129, 103828.

- Wang, B.; Xu, G.; Li, Z.; Cheng, Y.; Gu, F.; Xu, M.; Zhang, Y. Carbon Pools in Forest Systems and New Estimation Based on an Investigation of Carbon Sequestration. J. Environ. Manag. 2024, 360, 121124.

- Guenther, M.; Heenkenda, M.K.; Morris, D.; Leblon, B. Tree Diameter at Breast Height (DBH) Estimation Using an iPad Pro LiDAR Scanner: A Case Study in Boreal Forests, Ontario, Canada. Forests 2024, 15, 214.

- Shi, Y.; Wang, S.; Zhou, S.; Kamruzzaman, M.M. Study on Modeling Method of Forest Tree Image Recognition Based on CCD and Theodolite. IEEE Access 2020, 8, 159067–159076.

- Cova, G.R.; Prichard, S.J.; Rowell, E.; Drye, B.; Eagle, P.; Kennedy, M.C.; Nemens, D.G. Evaluating Close-Range Photogrammetry for 3D Understory Fuel Characterization and Biomass Prediction in Pine Forests. Remote Sens. 2023, 15, 4837.

- Yan, X.; Chai, G.; Han, X.; Lei, L.; Wang, G.; Jia, X.; Zhang, X. SA-Pmnet: Utilizing Close-Range Photogrammetry Combined with Image Enhancement and Self-Attention Mechanisms for 3D Reconstruction of Forests. Remote Sens. 2024, 16, 416.

- Kuželka, K.; Surový, P. Mathematically Optimized Trajectory for Terrestrial Close-Range Photogrammetric 3D Reconstruction of Forest Stands. ISPRS J. Photogramm. Remote Sens. 2021, 178, 259–281.

- Bayati, H.; Najafi, A.; Vahidi, J.; Jalali, S.G. 3D Reconstruction of Uneven-Aged Forest in Single Tree Scale Using Digital Camera and SfM-MVS Technique. Scand. J. For. Res. 2021, 36, 210–220.

- Xu, Z.; Shen, X.; Cao, L. Extraction of Forest Structural Parameters by the Comparison of Structure from Motion (SfM) and Backpack Laser Scanning (BLS) Point Clouds. Remote Sens. 2023, 15, 2144.

- Hu, T.; Sun, Y.; Jia, W.; Li, D.; Zou, M.; Zhang, M. Study on the Estimation of Forest Volume Based on Multi-Source Data. Sensors 2021, 21, 7796.

- Popescu, S.C. Estimating Biomass of Individual Pine Trees Using Airborne Lidar. Biomass Bioenergy 2007, 31, 646–655.

- Bucksch, A.; Lindenbergh, R.; Abd Rahman, M.Z.; Menenti, M. Breast Height Diameter Estimation from High-Density Airborne LiDAR Data. Geosci. Remote Sens. Lett. 2014, 11, 1056–1060.

- Bu, G.; Wang, P. Adaptive Circle-Ellipse Fitting Method for Estimating Tree Diameter Based on Single Terrestrial Laser Scanning. J. Appl. Remote Sens. 2016, 10, 026040.

- Zhou, S.; Kang, F.; Li, W.; Kan, J.; Zheng, Y.; He, G. Extracting Diameter at Breast Height with a Handheld Mobile LiDAR System in an Outdoor Environment. Sensors 2019, 19, 3212.

- Corte, A.P.D.; Souza, D.V.; Rex, F.E.; Sanquetta, C.R.; Broadbent, E.N. Forest Inventory with High-Density UAV-Lidar: Machine Learning Approaches for Predicting Individual Tree Attributes. Comput. Electron. Agric. 2020, 179, 105815.

- Kaviriri, D.K.; Liu, H.; Zhao, X. Estimation of Genetic Parameters and Wood Yield Selection Index in a Clonal Trial of Korean Pine (Pinus koraiensis) in Northeastern China. Sustainability 2021, 13, 4167.

- Moreira, B.M.; Goyanes, G.; Pina, P.; Vassilev, O.; Heleno, S. Assessment of the Influence of Survey Design and Processing Choices on the Accuracy of Tree Diameter at Breast Height (DBH) Measurements Using UAV-Based Photogrammetry. Drones 2021, 5, 43.

- Mokroš, M.; Liang, X.; Surový, P.; Valent, P.; Čerňava, J.; Chudý, F.; Tunák, D.; Saloň, Š.; Merganič, J. Evaluation of Close-Range Photogrammetry Image Collection Methods for Estimating Tree Diameters. ISPRS Int. J. Geo-Inf. 2018, 7, 93.

- Popescu, S.C.; Zhao, K. A Voxel-Based Lidar Method for Estimating Crown Base Height for Deciduous and Pine Trees. Remote Sens. Environ. 2008, 112, 767–781.

- Vauhkonen, J. Estimating Crown Base Height for Scots Pine by Means of the 3D Geometry of Airborne Laser Scanning Data. Int. J. Remote Sens. 2010, 31, 1213–1226.

- Fu, L.; Sun, H.; Sharma, R.P.; Lei, Y.; Zhang, H.; Tang, S. Nonlinear Mixed-Effects Crown Width Models for Individual Trees of Chinese Fir (Cunninghamia lanceolata) in South-Central China. For. Ecol. Manag. 2013, 302, 210–220.

- Clark, N.A.; Wynne, R.H.; Schmoldt, D.L.; Winn, M. An Assessment of the Utility of a Non-Metric Digital Camera for Measuring Standing Trees. Comput. Electron. Agric. 2000, 28, 151–169.

- Su, J.; Fan, Y.; Mannan, A.; Wang, S.; Long, L.; Feng, Z. Real-Time Estimation of Tree Position, Tree Height, and Tree Diameter at Breast Height Point, Using Smartphones Based on Monocular SLAM. Forests 2024, 15, 939.

- Ferreira, R.T.; Viana, A.P.; Barroso, D.G.; Resende, M.D.V.D.; Amaral Júnior, A.T.D. Toona Ciliata Genotype Selection with the Use of Individual BLUP with Repeated Measures. Sci. Agric. 2012, 69, 210–216.

- Fan, Y.; Feng, Z.; Mannan, A.; Khan, T.U.; Shen, C.; Saeed, S. Estimating Tree Position, Diameter at Breast Height, and Tree Height in Real-Time Using a Mobile Phone with RGB-D SLAM. Remote Sens. 2018, 10, 1845.

- Wu, X.; Zhou, S.; Xu, A.; Chen, B. Passive Measurement Method of Tree Diameter at Breast Height Using a Smartphone. Comput. Electron. Agric. 2019, 163, 104875.

- Xinmei, W.; Aijun, X.; Tingting, Y. Passive Measurement Method of Tree Height and Crown Diameter Using a Smartphone. IEEE Access 2020, 8, 11669–11678.

- Wells, L.A.; Woodam, C. Evaluation of Ground Plane Detection for Estimating Breast Height in Stereo Images. For. Sci. 2020, 66, 612–622.

- Trairattanapa, V.; Ravankar, A.A.; Emaru, T. Estimation of Tree Diameter at Breast Height Using Stereo Camera by Drone Surveying and Mobile Scanning Methods. In Proceedings of the 2020 59th Annual Conference of the Society of Instrument and Control Engineers of Japan (SICE), Chiang Mai, Thailand, 23–26 September 2020; pp. 946–951.

- Song, C.; Yang, B.; Zhang, L.; Wu, D. A Handheld Device for Measuring the Diameter at Breast Height of Individual Trees Using Laser Ranging and Deep-Learning Based Image Recognition. Plant Methods 2021, 17, 67.

- Rublee, E.; Rabaud, V.; Konolige, K.; Bradski, G. ORB: An Efficient Alternative to SIFT or SURF. In Proceedings of the 2011 International Conference on Computer Vision, Barcelona, Spain, 6–13 November 2011; pp. 2564–2571.

- Galvez-López, D.; Tardos, J.D. Bags of Binary Words for Fast Place Recognition in Image Sequences. IEEE Trans. Robot. 2012, 28, 1188–1197.

- Ha, H.; Im, S.; Park, J.; Jeon, H.-G.; Kweon, I.S. High-Quality Depth from Uncalibrated Small Motion Clip. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 5413–5421.

- Nozick, V.; de Sorbier, F.; Saito, H. Plane-Sweep Algorithm: Various Tools for Computer Vision. Tech. Comm. Pattern Recognit. Media Underst. 2008, 107, 87–94.

- Sun, Y. Study on Single Tree Structure Parameters Extraction Based on Close-Range Photogrammetry. Master’s Thesis, Northeast Forestry University, Harbin, China, 2020.

- Wang, X.; Song, K.; Wang, Z.; Da, L.; Mokroš, M. Usage of Structure-from-Motion for Urban Forest Inventory. J. Southwest For. Univ. 2021, 41, 139–148.

- Yang, S.; Xing, Y.; Xing, T.; Deng, H.; Xi, Z. Multi-Sensors Fusion SLAM-Aided Forest Plot Mapping with Backpack Dual-LiDAR System. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2024, 1–21.

- Yang, S.; Xing, Y.; Wang, D.; Deng, H. A Novel Point Cloud Adaptive Filtering Algorithm for LiDAR SLAM in Forest Environments Based on Guidance Information. Remote Sens. 2024, 16, 2714.

- Su, Y.; Guo, Q.; Jin, S.; Guan, H.; Sun, X.; Ma, Q.; Hu, T.; Wang, R.; Li, Y. The Development and Evaluation of a Backpack LiDAR System for Accurate and Efficient Forest Inventory. IEEE Geosci. Remote Sens. Lett. 2021, 18, 1660–1664.

- Zhu, Y.; Sun, G.; Ding, G.; Zhou, J.; Wen, M.; Jin, S.; Zhao, Q.; Colmer, J.; Ding, Y.; Ober, E.S.; et al. Large-Scale Field Phenotyping Using Backpack LiDAR and CropQuant-3D to Measure Structural Variation in Wheat. Plant Physiol. 2021, 187, 716–738.

- GB/T 38590-2020; Technical Regulations for Continuous Forest Inventory. National Forest Resources Standardization Technical Committee: Beijing, China, 2020.

| Plot ID | Dominant Tree Species | Mean DBH/cm | Stem Density (Stems/ha) | Understory Conditions |
|---|---|---|---|---|
| 1 | Betula platyphylla | 17.79 | 725 | More miscellaneous trees, good brightness |
| 2 | Pinus tabuliformis var. mukdensis | 27.10 | 900 | No miscellaneous trees, normal brightness |
| 3 | Quercus mongolica | 16.36 | 825 | No miscellaneous trees, normal brightness |
| 4 | Fraxinus mandshurica | 33.53 | 675 | Fewer miscellaneous trees, good brightness |
| 5 | Picea | 26.38 | 900 | No miscellaneous trees, good brightness |
| 6 | Pinus sylvestris var. mongolica | 19.16 | 900 | No miscellaneous trees, good brightness |

| Parameters | Value |
|---|---|
| Resolution | 3840 × 2160 |
| Frame rate | 30 Hz |
| Maximum Measurement Distance | 20 m |
| Field of View | 120° |
| Exposure Time | 1/4000–30 s |
| Focal Length | x: 3005.0, y: 3002.3 |
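
The focal lengths in the camera parameter table are the values a pinhole camera model needs in order to turn a per-pixel depth estimate into a 3D point, which is the step that links depth estimation to point cloud reconstruction. The following is a minimal sketch of that back-projection, not the authors' implementation. It assumes the focal lengths are expressed in pixels, that the principal point lies at the centre of the 3840 × 2160 image, and that lens distortion is ignored; the function names are hypothetical.

```python
from typing import Tuple

# Focal lengths from the camera parameter table (assumed to be in pixels).
FX, FY = 3005.0, 3002.3
# Assumption: principal point at the centre of the 3840 x 2160 image.
CX, CY = 3840 / 2.0, 2160 / 2.0


def backproject(u: float, v: float, depth_m: float) -> Tuple[float, float, float]:
    """Map pixel (u, v) with depth along the optical axis to camera-frame XYZ in metres."""
    x = (u - CX) / FX * depth_m
    y = (v - CY) / FY * depth_m
    return x, y, depth_m


def pixel_footprint_m(depth_m: float) -> float:
    """Approximate width on the object covered by one pixel at the given depth."""
    return depth_m / FX


# A pixel at the image centre maps onto the optical axis.
print(backproject(1920, 1080, 5.0))
# Footprint of a single pixel at the 20 m maximum measurement distance.
print(f"{pixel_footprint_m(20.0) * 1000:.1f} mm per pixel at 20 m")
```

Under these assumptions a single pixel spans roughly 6.7 mm at 20 m, which gives a sense of how depth and pixel-level errors translate into diameter errors for distant trunks.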

| Plot ID | BIAS/cm | rBIAS/% | RMSE/cm | rRMSE/% | η/% |
|---|---|---|---|---|---|
| 1 | 0.05 | 0.27 | 0.85 | 4.76 | 3.39 |
| 2 | 0.25 | 0.94 | 0.60 | 2.22 | 1.81 |
| 3 | 0.17 | 1.03 | 0.70 | 4.30 | 3.89 |
| 4 | 0.14 | 0.43 | 0.90 | 2.70 | 2.38 |
| 5 | 0.41 | 1.56 | 0.91 | 3.46 | 3.19 |
| 6 | 0.87 | 4.55 | 1.18 | 6.16 | 5.42 |
| Mean | 0.32 | 1.46 | 0.86 | 3.93 | 3.35 |
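
The indicators in this table can be computed from paired estimated and field-measured values. The sketch below uses the conventional definitions of each indicator, which are assumed here rather than quoted from the paper: error is taken as estimated minus reference, the relative indicators rBIAS and rRMSE are normalized by the mean reference value, and η is the mean of the per-tree absolute relative errors. The function name and the sample values are hypothetical.

```python
import math
from typing import Dict, Sequence


def accuracy_metrics(estimated: Sequence[float], reference: Sequence[float]) -> Dict[str, float]:
    """Compute BIAS, rBIAS, RMSE, rRMSE and eta for paired measurements.

    Assumed conventions: error = estimated - reference; relative indicators
    are percentages of the mean reference value; eta is the mean absolute
    relative error per tree, in percent.
    """
    if len(estimated) != len(reference) or not reference:
        raise ValueError("inputs must be non-empty and of equal length")
    n = len(reference)
    errors = [e - r for e, r in zip(estimated, reference)]
    ref_mean = sum(reference) / n
    bias = sum(errors) / n
    rmse = math.sqrt(sum(d * d for d in errors) / n)
    eta = sum(abs(d) / r for d, r in zip(errors, reference)) / n * 100.0
    return {
        "BIAS": bias,
        "rBIAS": bias / ref_mean * 100.0,
        "RMSE": rmse,
        "rRMSE": rmse / ref_mean * 100.0,
        "eta": eta,
    }


# Hypothetical DBH values in cm, for illustration only (not data from the study).
sample = accuracy_metrics(estimated=[20.5, 30.0, 24.0], reference=[20.0, 30.0, 25.0])
for name, value in sample.items():
    print(f"{name}: {value:.3f}")
```

These definitions appear consistent with the reported values: for Plot 1, dividing the RMSE of 0.85 cm by the plot's mean DBH of 17.79 cm gives about 4.8%, close to the listed rRMSE of 4.76%.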

| Plot ID | BIAS/m | rBIAS/% | RMSE/m | rRMSE/% | η/% |
|---|---|---|---|---|---|
| 1 | 0.08 | 2.10 | 0.19 | 5.06 | 4.87 |
| 2 | −0.002 | 0.046 | 0.13 | 2.75 | 2.48 |
| 3 | 0.08 | 2.69 | 0.21 | 7.04 | 5.50 |
| 4 | −0.03 | −0.62 | 0.18 | 3.61 | 2.74 |
| 5 | 0.02 | 0.63 | 0.08 | 2.52 | 1.97 |
| 6 | 0.17 | 4.54 | 0.21 | 5.69 | 5.58 |
| Mean | 0.05 | 1.56 | 0.17 | 4.45 | 3.86 |

| Reference | Sample Plot Size/m² | Acquisition Devices | Methodologies | Data Type | Average Image Acquisition Time/s | Average Image Processing Time/s | η/% |
|---|---|---|---|---|---|---|---|
| This study | 20 × 20 | Monocular camera | Small motion clip point cloud recovery | Small motion clip | 1~2 | 16 | 3.35 |
| Sun [40] | 20 × 20 | Monocular camera | Space Intersection | ≥2 | 60 | - | 10.0 |
| Wang et al. [41] | 62 × π | Monocular camera | SfM | ≈5 | 45 | 322 | 5.4 |
| Su et al. [28] | 7.5 × π | Monocular camera | Visual Odometry | Ordered image sequences | - | - | 3.59 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Yang, S.; Xing, Y.; Yin, B.; Wang, D.; Chang, X.; Wang, J. A Novel Method for Extracting DBH and Crown Base Height in Forests Using Small Motion Clips. Forests 2024, 15, 1635. https://doi.org/10.3390/f15091635
