Exploration of Suitable Spectral Bands and Indices for Forest Fire Severity Evaluation Using ZY-1 Hyperspectral Data

Abstract

1. Introduction

2. Materials and Methods

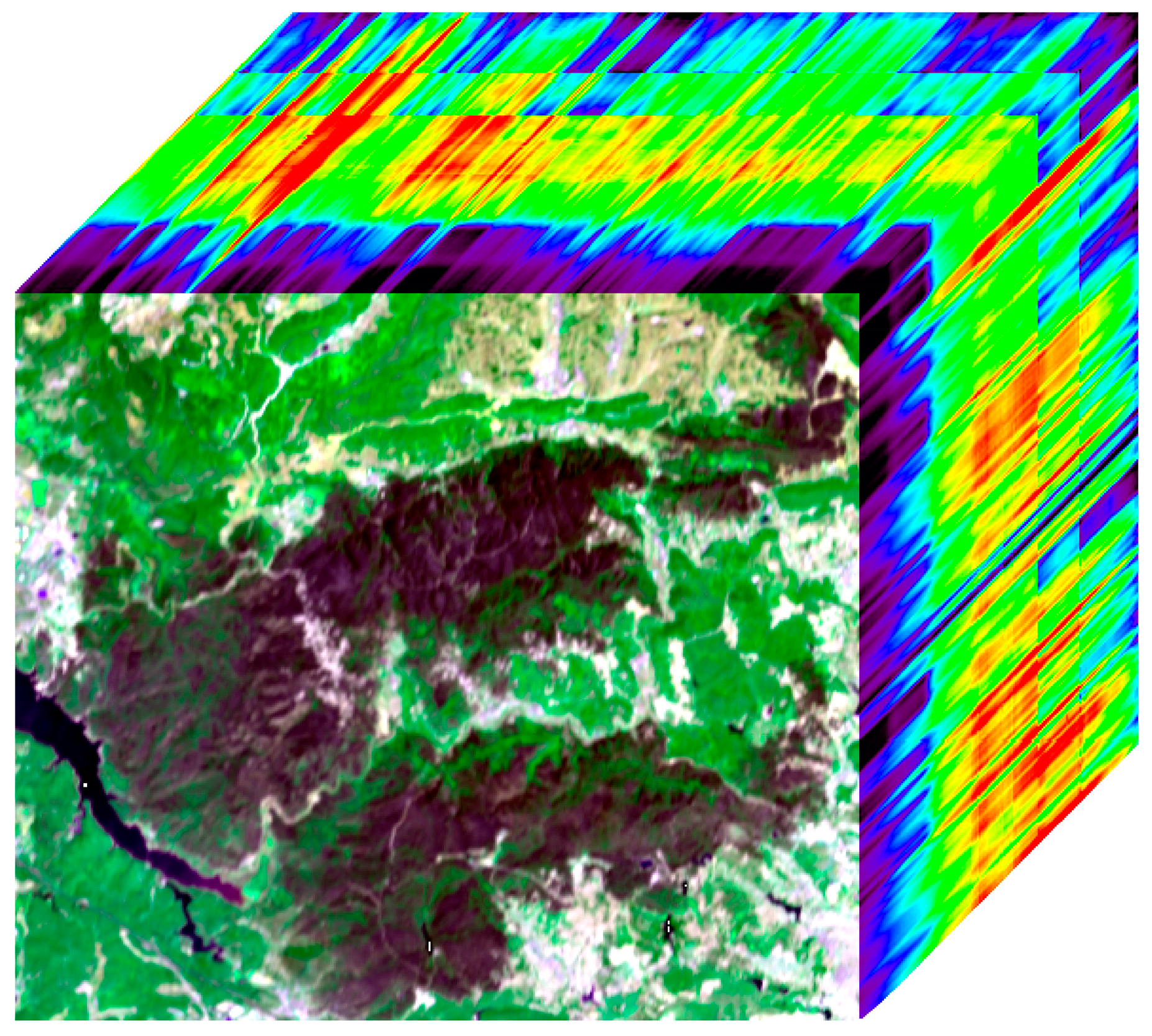

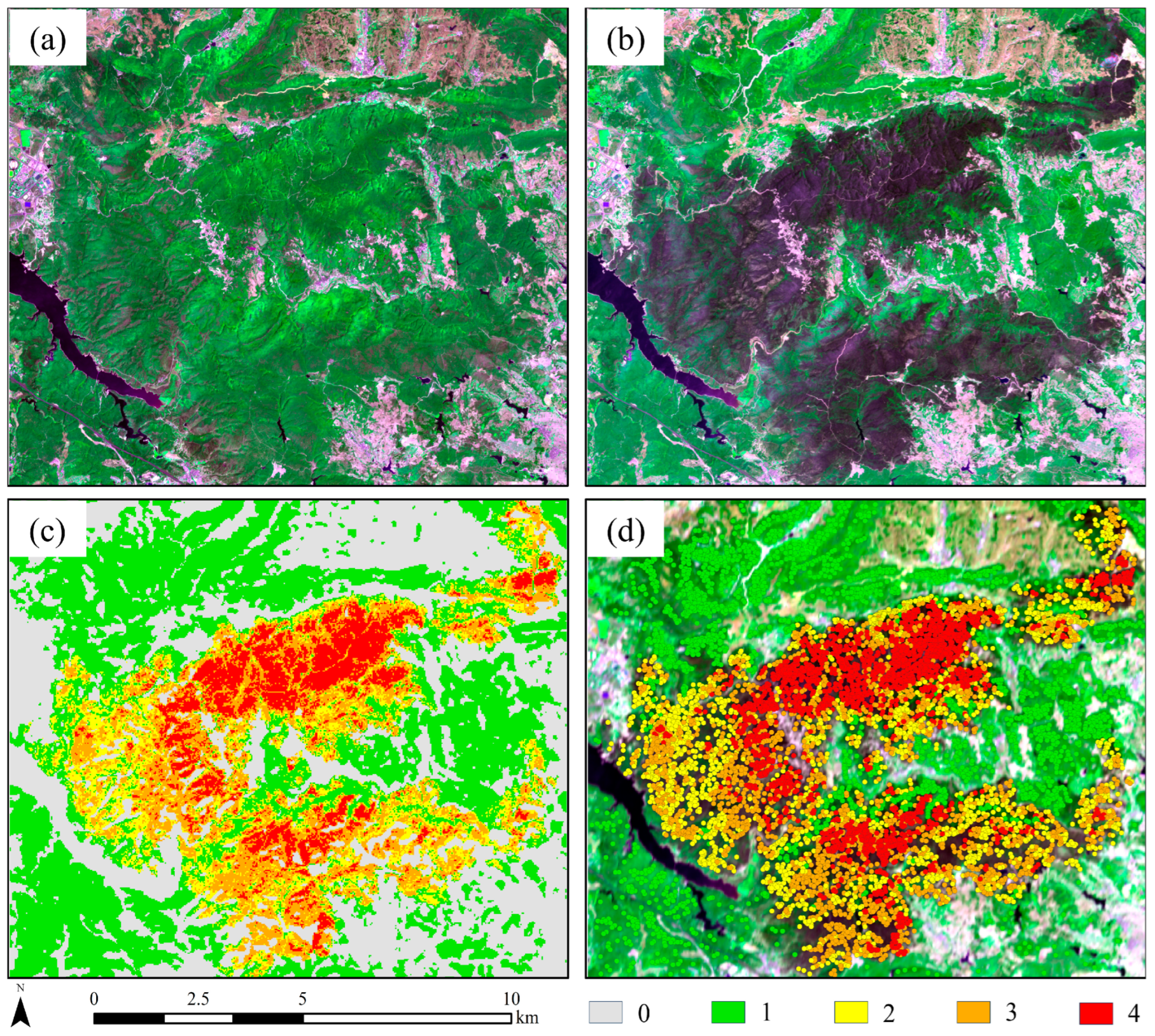

2.1. Study Area and Data Sources

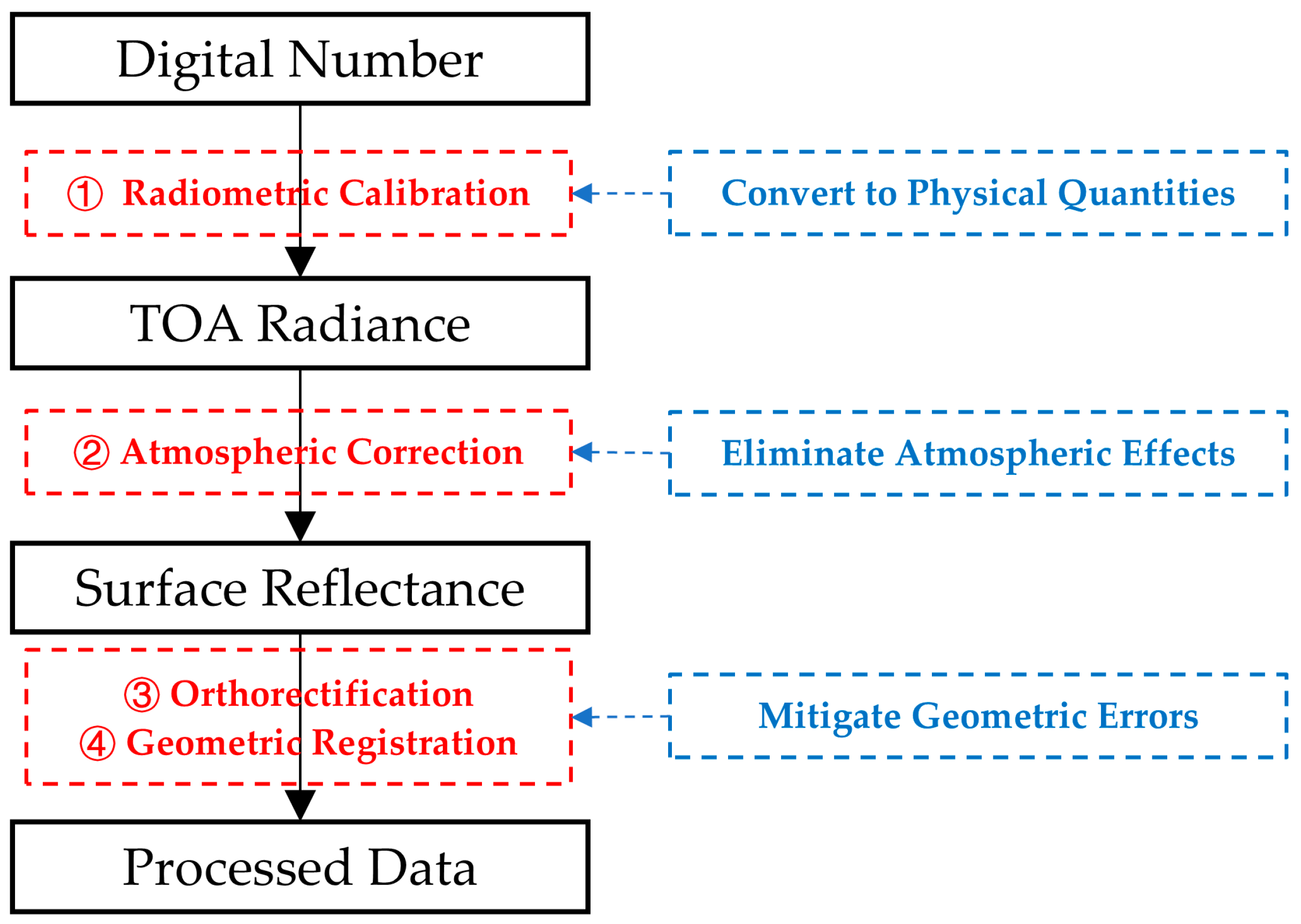

2.2. Data Processing and Sample Selection

2.3. Methods

2.3.1. Separability Calculation

2.3.2. Index Construction

2.3.3. Evaluation Methods

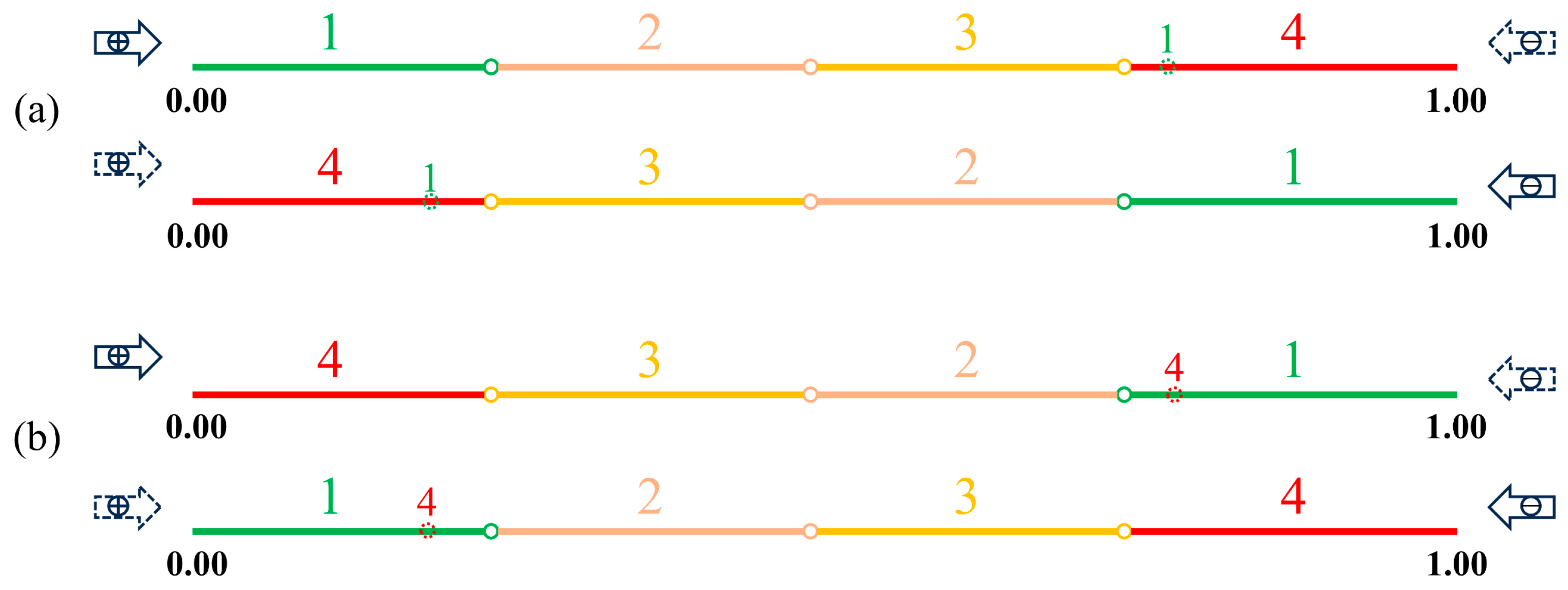

- Search method: Fixed-step search.

- Operational parameters: Step size (fixed at 0.01 before the search) and search direction (determined during the search as increasing (+) or decreasing (−)).

- Evaluation metric: F-score. During the search, the F1 score of the target class in the validation data (single F1 score, SF1) was used. After the search was complete, the weighted F1 score (WF1), which is the weighted average of the F1 scores of the fire severity categories in the validation data, was used as the evaluation standard.

- Process description: The severity levels were assessed in both ascending (1–4) and descending (4–1) order. The initial thresholds were set to increase (0.00–1.00) for the ascending order and to decrease (1.00–0.00) for the descending order. In the ascending order, a bidirectional search first identified the optimal segmentation threshold a for level 1, and the subsequent search direction (increasing or decreasing) was set accordingly. Starting from threshold a, the optimal segmentation threshold b for level 2 was determined, and the search continued in the same direction to identify threshold c for level 3. The image was then segmented into four categories (1, 2, 3, and 4) using thresholds a, b, and c. The same process was applied in the descending order. The final classification was the result with the better evaluation of the two processes.
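The search described above can be sketched as follows. This is a minimal, simplified illustration and not the authors' implementation: it assumes index values scaled to [0, 1] and severity increasing with index value, shows only the ascending (1–4) pass, and replaces the bidirectional direction choice with a single upward scan on the 0.01 grid. All function names (`f1_for_class`, `weighted_f1`, `classify`, `search_thresholds`) and the sample data are hypothetical.

```python
STEP = 0.01  # fixed step size, as stated in the text


def f1_for_class(y_true, y_pred, cls):
    """F1 score of a single class (the SF1 used during the search)."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == cls and p == cls)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t != cls and p == cls)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == cls and p != cls)
    denom = 2 * tp + fp + fn
    return 2 * tp / denom if denom else 0.0


def weighted_f1(y_true, y_pred, classes=(1, 2, 3, 4)):
    """Support-weighted mean of the per-class F1 scores (the WF1)."""
    n = len(y_true)
    return sum(
        f1_for_class(y_true, y_pred, c) * sum(1 for t in y_true if t == c) / n
        for c in classes
    )


def classify(values, thresholds):
    """Level = 1 + number of thresholds that the value reaches or exceeds."""
    return [1 + sum(1 for t in thresholds if v >= t) for v in values]


def search_thresholds(values, y_true, n_levels=4):
    """Greedy fixed-step scan: fix one threshold per level, lowest level first.

    Assumption: a single upward scan stands in for the bidirectional search.
    """
    n_steps = round(1.0 / STEP)
    thresholds = []
    start_k = 0
    for level in range(1, n_levels):
        best_k, best_f1 = start_k, -1.0
        for k in range(start_k, n_steps + 1):
            t = round(k * STEP, 10)  # avoid floating-point drift on the grid
            pred = classify(values, thresholds + [t])
            score = f1_for_class(y_true, pred, level)  # SF1 of the target level
            if score > best_f1:
                best_k, best_f1 = k, score
        thresholds.append(round(best_k * STEP, 10))
        start_k = min(best_k + 1, n_steps)  # next threshold lies above this one
    return thresholds


# Hypothetical validation samples: index values and field-assessed levels.
values = [0.05, 0.10, 0.15, 0.30, 0.35, 0.40, 0.55, 0.60, 0.65, 0.80, 0.85, 0.90]
labels = [1, 1, 1, 2, 2, 2, 3, 3, 3, 4, 4, 4]

thresholds = search_thresholds(values, labels)  # thresholds a, b, c
pred = classify(values, thresholds)
print(thresholds, weighted_f1(labels, pred))
```

In the full procedure, the same scan would also be run in the descending (4–1) order, and the pass with the higher WF1 would be kept.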

3. Results and Discussion

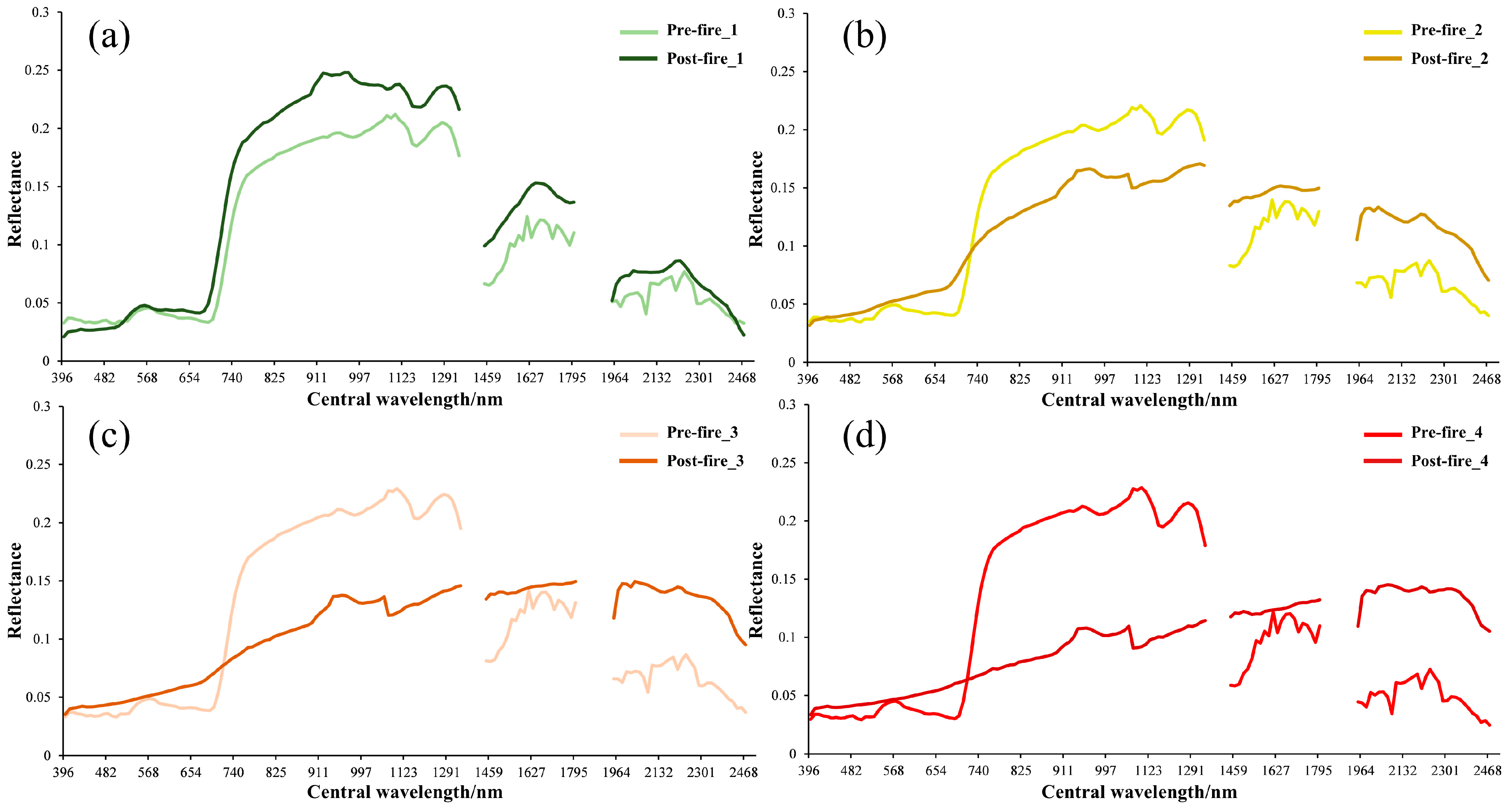

3.1. Comparison of Spectral Curves for Different Fire Severities

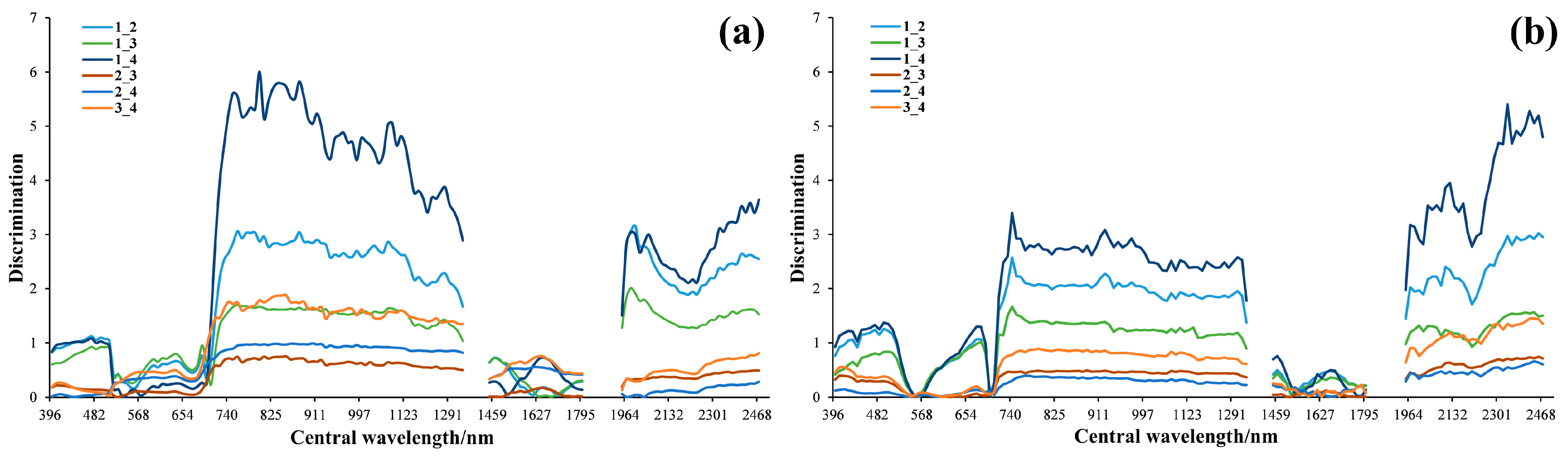

3.2. Comparison of Spectral Separability for Different Fire Severities

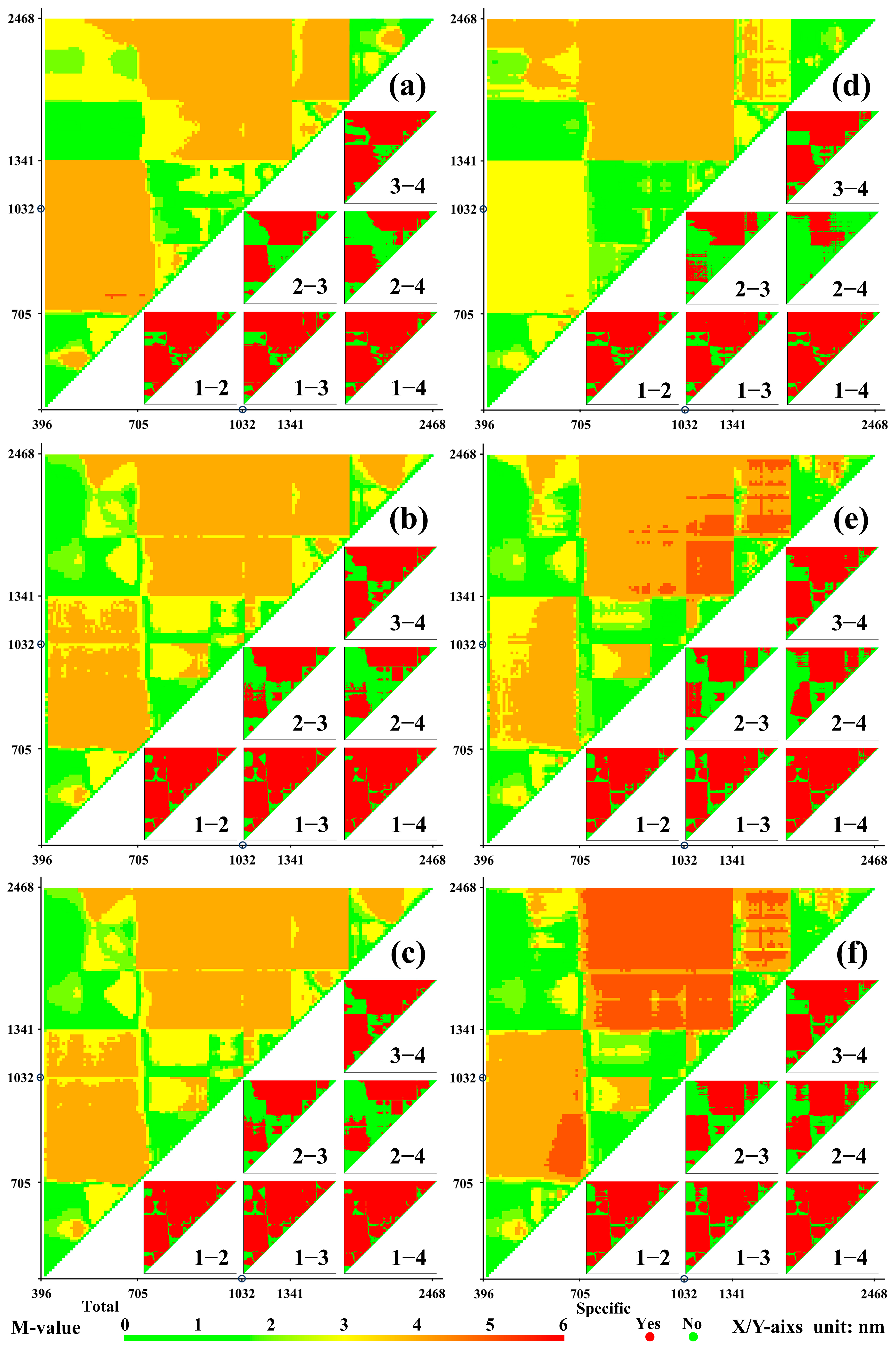

3.3. Comparison of Spectral Index Separability for Different Fire Severities

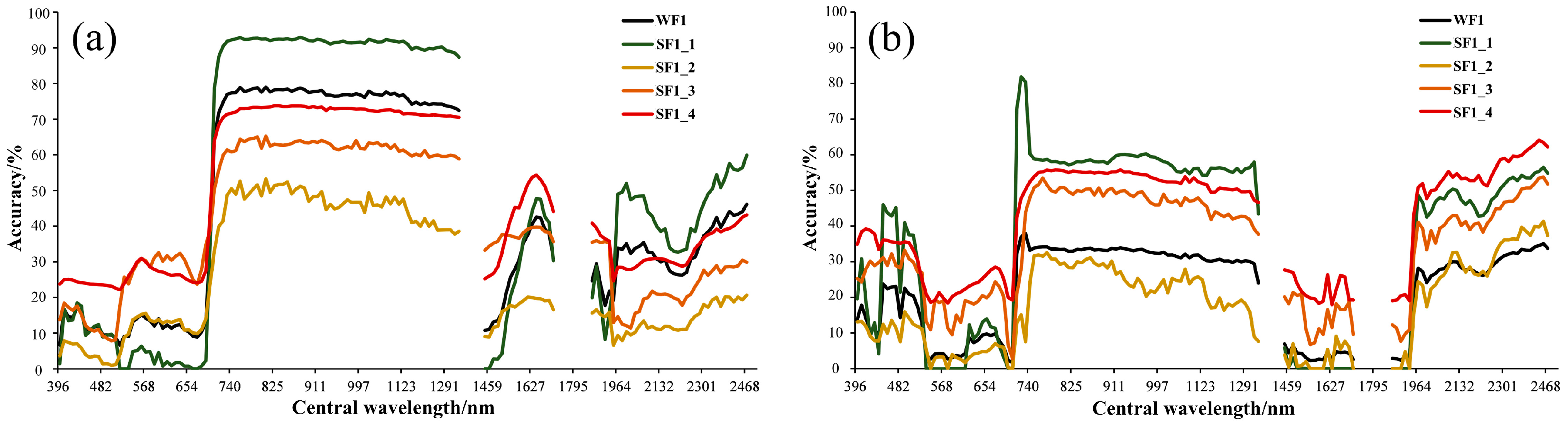

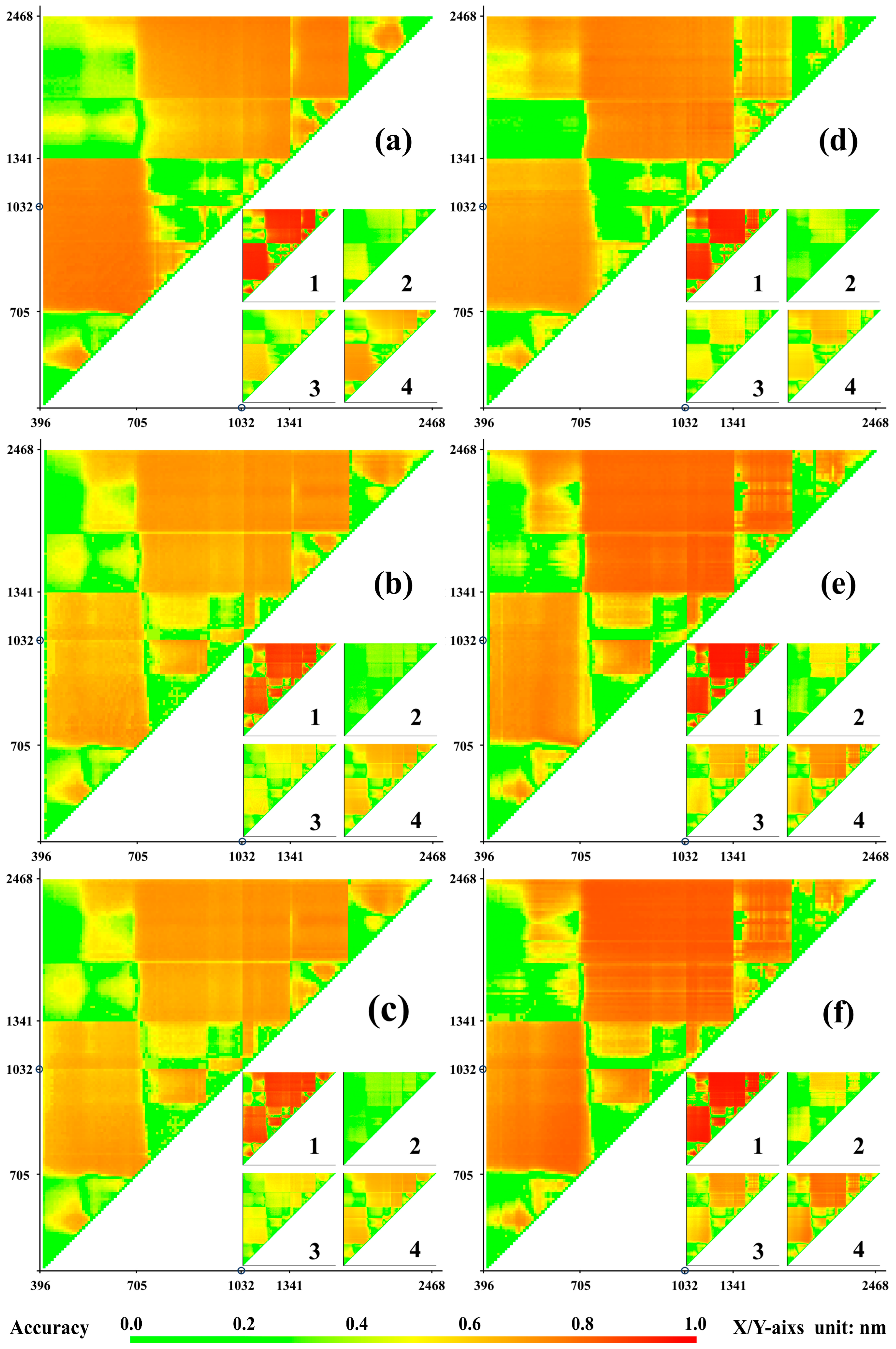

3.4. Analysis of Fire Severity Classification Accuracy for Different Spectral Bands

3.5. Analysis of Fire Severity Classification Accuracy for Different Indices

4. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Ranking | DI Combination/nm | DI WF1/% | RI Combination/nm | RI WF1/% | NDI Combination/nm | NDI WF1/% |
|---|---|---|---|---|---|---|
| 1 | 757–611 | 79.26 | 2216–1728 | 75.08 | 2216–1728 | 74.90 |
| 2 | 705–645 | 79.11 | 2216–1577 | 74.97 | 2216–1712 | 74.71 |
| 3 | 705–654 | 79.04 | 2216–1543 | 74.76 | 2216–1577 | 74.70 |
| 4 | 714–637 | 78.94 | 2216–1560 | 74.68 | 2216–1594 | 74.69 |
| 5 | 731–619 | 78.92 | 2216–1712 | 74.65 | 2216–1745 | 74.60 |
| 6 | 731–594 | 78.78 | 2216–1627 | 74.55 | 1998–1577 | 74.59 |
| 7 | 757–559 | 78.74 | 2031–1510 | 74.45 | 2216–1678 | 74.54 |
| 8 | 775–611 | 78.66 | 2031–1678 | 74.39 | 2216–1560 | 74.47 |
| 9 | 722–645 | 78.65 | 2199–1543 | 74.39 | 2031–1577 | 74.44 |
| 10 | 783–619 | 78.65 | 2199–1661 | 74.35 | 2199–1543 | 74.44 |

| Ranking | dDI Combination/nm | dDI WF1/% | dRI Combination/nm | dRI WF1/% | dNDI Combination/nm | dNDI WF1/% |
|---|---|---|---|---|---|---|
| 1 | 1627–1224 | 78.33 | 1543–1241 | 82.75 | 2048–1106 | 83.39 |
| 2 | 1627–1241 | 78.02 | 2031–1661 | 82.73 | 2031–1106 | 83.38 |
| 3 | 1627–1308 | 77.98 | 1543–1324 | 82.66 | 2199–1073 | 83.33 |
| 4 | 1627–1207 | 77.84 | 2048–1610 | 82.63 | 2417–740 | 83.29 |
| 5 | 1627–1190 | 77.73 | 2048–1257 | 82.55 | 2031–1056 | 83.22 |
| 6 | 1627–1257 | 77.65 | 1745–1241 | 82.54 | 2115–834 | 83.18 |
| 7 | 2199–748 | 77.48 | 1745–1324 | 82.54 | 2216–1106 | 83.16 |
| 8 | 1644–1190 | 77.40 | 1610–1241 | 82.53 | 2048–1056 | 83.16 |
| 9 | 1627–1056 | 77.36 | 1610–1224 | 82.49 | 2401–757 | 83.15 |
| 10 | 2199–731 | 77.35 | 2031–1610 | 82.48 | 2216–1089 | 83.15 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Hu, X.; Jiang, F.; Qin, X.; Huang, S.; Meng, F.; Yu, L. Exploration of Suitable Spectral Bands and Indices for Forest Fire Severity Evaluation Using ZY-1 Hyperspectral Data. Forests 2025, 16, 640. https://doi.org/10.3390/f16040640
