1. Introduction

Forestry is a significant industry in New Zealand: it is the third-largest export earner and contributes approximately $5 billion annually to New Zealand’s economy [1]. New Zealand’s plantation forests, which are dominated by radiata pine (Pinus radiata D. Don), covered approximately 1.70 million hectares as at 1 April 2016 [2]. The New Zealand National Exotic Forest Description (NEFD) [2] indicates that approximately 70% of the plantation area is owned by large-scale owners with more than 1000 ha of forest, who are mainly corporate forest companies. These forests undergo regular monitoring and assessment by professional foresters. The area descriptions of large-scale forest owners are captured by the NEFD annual survey, and the information from large-scale owners, especially those with more than 10,000 ha of forest, is considered the most reliable source of NEFD data [2].

On the other hand, the remaining 30% of the plantation area consists of small-scale forests owned by individual investors, farmers or local governments, who are less likely to carry out regular area assessments. The forest description of small-scale forests is less reliable because of inconsistent area definitions and management practices. The data provided by small-scale forest owners are likely to be more variable in reliability because (1) some of the reported areas may be gross areas rather than net stocked areas; (2) the data potentially contain higher non-sampling errors due to reporting inaccuracies and responses based on owners’ estimates; and (3) errors may be introduced when transferring non-electronic data into the database [2]. In addition, the NEFD directly surveys only 232,000 ha of the 520,000 ha of small-scale forests in New Zealand; most of the remaining area is imputed from annual nursery surveys of the number of seedlings sold [2]. This approach was considered a reasonable and efficient means of estimation, but also less accurate than alternative methods [3]. However, since 2006 new plantings have not been added to the NEFD because of the low numbers of new seedlings planted [2]. A further limitation of this process is that it neither measures plantation area directly nor describes where the plantations are located.

Overall, the unreliable forest description for small-scale forests has led to an insufficient understanding of the wood supply from these forests. These small-scale forests, most of which were planted in the 1990s, will play an important role in wood supply over the next few decades. By 2020, small-scale forests will have the capacity to provide around 15 million m³ of radiata pine logs per annum, which will be over 40% of the total radiata pine supply [4]. It is therefore critical to understand the location and area of these small-scale forests in order to plan effectively the marketing, harvesting, logistics and transport capacity required for the additional wood supply.

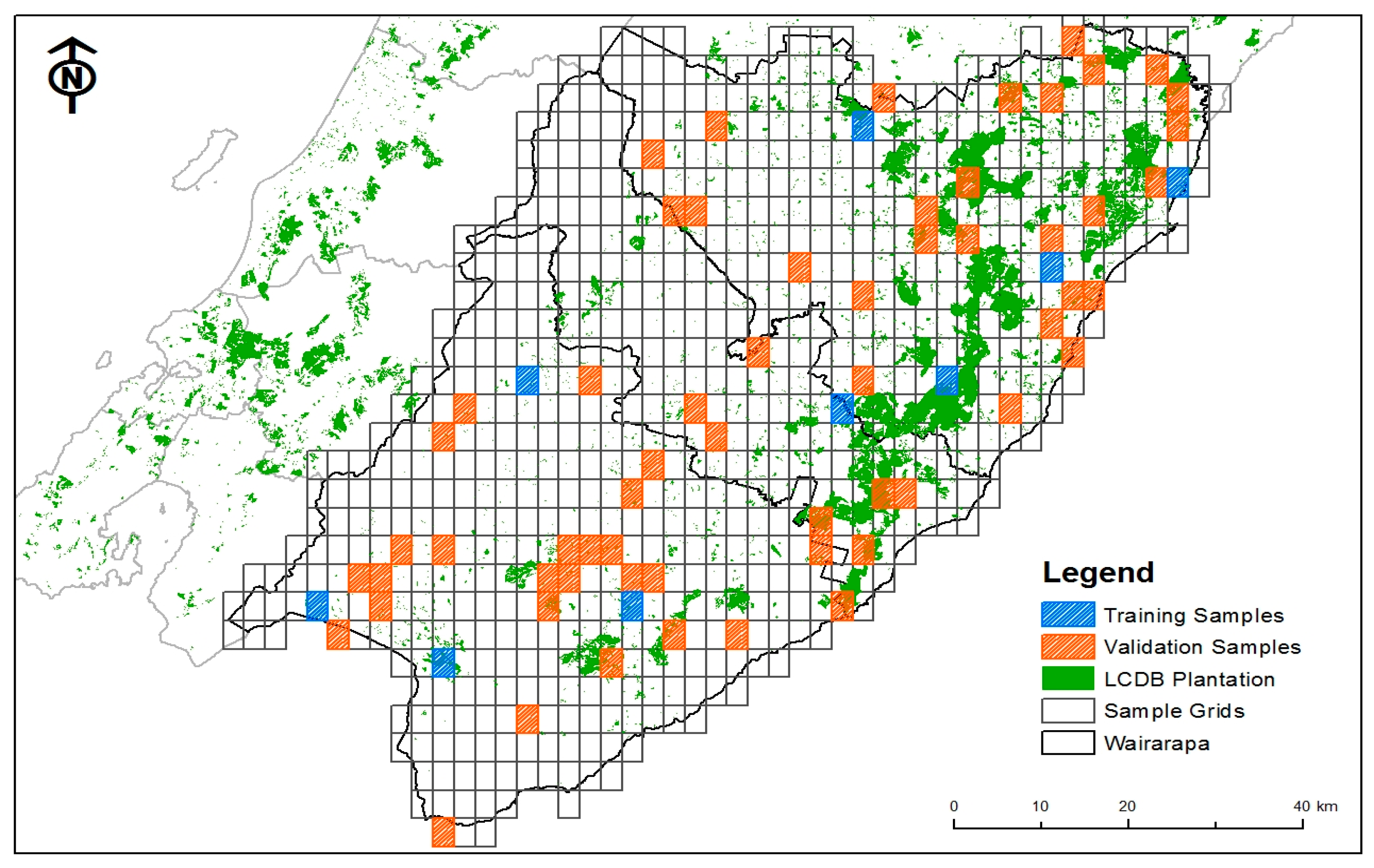

A remote sensing solution for small-scale forest description is necessary because conducting a comprehensive survey and field assessment of these patchy forests is impractical due to the substantial time and cost associated with it. The existing spatial description of plantation resources include the Land Cover Database (LCDB) and Land Use Carbon Analysis System (LUCAS) [

2]. However, the plantation areas for small-scale forests reported from these sources are gross area, including areas that are not intended for timber production [

2], and therefore are an overestimate of the net stocked area. The satellite imagery used to develop the LCDB were SPOT and Landsat [

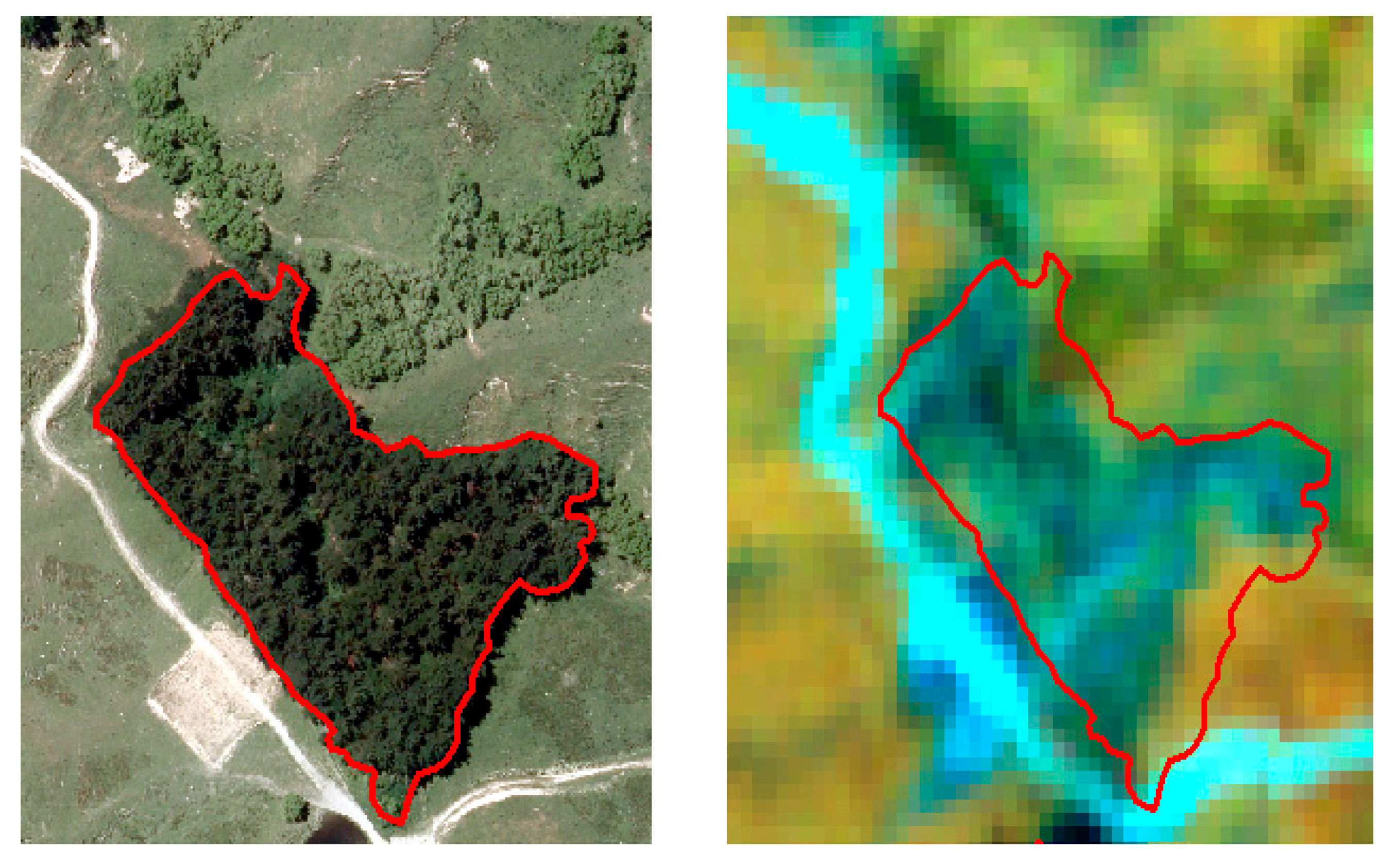

5], which are medium resolution (10–30 m) that potentially overlook small patches of forests and erroneously include gaps in forest area estimates.
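The effect of medium resolution on small patches and gaps can be illustrated with a toy example. The sketch below is not part of the study. It assumes a hypothetical 5 m binary forest mask (1 = forest) that is aggregated to 30 m pixels by strict majority vote, which is one simple resampling rule among several. The helper names (`aggregate_majority`, `area_ha`, `make_mask`) are illustrative only.

```python
# Toy illustration with hypothetical grids (not study data): majority-rule
# aggregation of a fine 5 m forest mask (1 = forest) to 30 m pixels.


def aggregate_majority(mask, factor):
    """Aggregate a binary mask (list of lists of 0/1) by strict majority vote.

    Each coarse pixel covers factor x factor fine pixels and is labelled
    forest only if more than half of those fine pixels are forest.
    """
    rows, cols = len(mask), len(mask[0])
    coarse = []
    for r0 in range(0, rows, factor):
        coarse_row = []
        for c0 in range(0, cols, factor):
            block = [mask[r][c]
                     for r in range(r0, min(r0 + factor, rows))
                     for c in range(c0, min(c0 + factor, cols))]
            coarse_row.append(1 if 2 * sum(block) > len(block) else 0)
        coarse.append(coarse_row)
    return coarse


def area_ha(mask, res_m):
    """Area of the forest (1) cells in hectares; 1 ha = 10,000 m^2."""
    return sum(map(sum, mask)) * res_m * res_m / 10_000


def make_mask(size, background, rect):
    """size x size grid of `background`, with rows r0:r1, cols c0:c1 flipped."""
    r0, r1, c0, c1 = rect
    return [[(1 - background) if (r0 <= r < r1 and c0 <= c < c1) else background
             for c in range(size)]
            for r in range(size)]


# A 30 m x 30 m forest patch that straddles four 30 m pixels.
patch = make_mask(12, 0, (3, 9, 3, 9))
# A 10 m x 10 m gap inside otherwise continuous forest.
gap = make_mask(12, 1, (2, 4, 2, 4))

for name, fine in (("patch", patch), ("gap", gap)):
    coarse = aggregate_majority(fine, 6)  # 6 x 5 m = 30 m
    print(f"{name}: {area_ha(fine, 5):.2f} ha at 5 m, "
          f"{area_ha(coarse, 30):.2f} ha at 30 m")
```

In this constructed case the 0.09 ha patch disappears entirely at 30 m (0.00 ha). The gap is absorbed into forest, so the forest area rises from 0.35 ha to 0.36 ha. The outcome depends on how the patch aligns with the coarse grid.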

Remote sensing has played an important role in forest classification and delineation. Manual interpretation of aerial photography has traditionally been the most common approach to determining forest area, despite being potentially subjective and time-consuming [6]. Optical satellite imagery can also be used for forest classification and delineation. Algorithms automatically assign forest cover types and estimate forest variables from the spectral, textural and auxiliary data in the images [7]. This produces a more objective classification and delineation while reducing time and associated costs [8,9]. As an active sensor, LiDAR adds structural information for forest classification and delineation through direct estimation of forest canopy area and height [10,11].
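A common way to obtain canopy height from LiDAR is to subtract a digital terrain model (DTM) from a digital surface model (DSM), giving a canopy height model (CHM). The sketch below shows that arithmetic. It assumes co-registered DSM and DTM grids in metres and uses a hypothetical 2 m height threshold for "canopy"; neither the grid values nor the threshold come from this study.

```python
# Minimal sketch, assuming co-registered DSM and DTM grids in metres.
# The 2 m height threshold is a hypothetical parameter, not the study's value.


def canopy_height_model(dsm, dtm):
    """CHM = DSM - DTM, clipped at 0 to suppress small negative noise."""
    return [[max(s - t, 0.0) for s, t in zip(dsm_row, dtm_row)]
            for dsm_row, dtm_row in zip(dsm, dtm)]


def canopy_cover_fraction(chm, min_height_m=2.0):
    """Fraction of cells whose canopy height is at least min_height_m."""
    heights = [h for row in chm for h in row]
    return sum(h >= min_height_m for h in heights) / len(heights)


# Hypothetical 2 x 3 surfaces: pasture in column 0, tall trees elsewhere.
dsm = [[112.0, 131.5, 130.8],
       [111.2, 129.9, 131.2]]
dtm = [[111.8, 110.9, 110.5],
       [111.4, 110.6, 110.7]]

chm = canopy_height_model(dsm, dtm)
print(f"canopy cover: {canopy_cover_fraction(chm):.2f}")
```

Clipping negative differences to zero is a simple way to handle cells where interpolation noise puts the surface slightly below the terrain.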

A number of studies have combined LiDAR with optical sensors and achieved more accurate forest classification and delineation than with optical sensors alone [7,12]. Nordkvist et al. [9] combined LiDAR-derived height metrics and SPOT 5-derived spectral information for vegetation classification. They achieved a 16.1% improvement in classification accuracy compared with using SPOT alone. Sasaki et al. [13] achieved a marginal 2.5% improvement in overall land cover classification accuracy by using high-resolution spectral images together with LiDAR-derived metrics, including height, ratio [14], pulse and intensity parameters. Furthermore, Bork and Su [8] found that the fusion of LiDAR and multispectral imagery increased classification accuracy by 15–20%. Xu et al. [12] reviewed a number of forest cover classification studies. They concluded that combining LiDAR and optical sensors produced up to a 20% improvement in classification results compared with using a single sensor.
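One simple form of combining sensors is feature-level fusion: per-object spectral values and LiDAR metrics are concatenated into one feature vector. The sketch below is an assumption-laden illustration, not the method of any cited study. It standardises each column to zero mean and unit variance, because reflectance (roughly 0–1) and height (metres) are on very different scales. Without this step, the height column would dominate a distance-based classifier such as NN; tree-based classifiers such as CART are unaffected by this kind of rescaling. The feature values are hypothetical.

```python
from statistics import mean, pstdev


def zscore_columns(rows):
    """Standardise each column to zero mean and unit (population) std dev."""
    columns = list(zip(*rows))
    stats = [(mean(col), pstdev(col)) for col in columns]
    return [[(v - m) / s if s > 0 else 0.0 for v, (m, s) in zip(row, stats)]
            for row in rows]


def fuse_features(spectral, lidar):
    """Concatenate per-object spectral and LiDAR features, then standardise."""
    if len(spectral) != len(lidar):
        raise ValueError("spectral and LiDAR feature lists must align per object")
    stacked = [list(s) + list(h) for s, h in zip(spectral, lidar)]
    return zscore_columns(stacked)


# Hypothetical objects: (red, NIR) reflectance and mean canopy height (m).
spectral = [(0.04, 0.32), (0.05, 0.30), (0.06, 0.35)]
lidar = [(22.0,), (0.5,), (3.0,)]

fused = fuse_features(spectral, lidar)
for row in fused:
    print([round(v, 2) for v in row])
```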

Object-based image analysis (OBIA) is an image analysis approach that groups similar adjacent pixels into image objects and classifies those objects rather than individual pixels [15]. Pixel-based image classification tends to be sensitive to spectral variation, which can lead to high levels of misclassification and reduced classification accuracy [16]. OBIA segmentation creates image objects that resemble real land cover features in size and shape [17]. The approach can use multiple image elements, parameters and scales, such as texture, shape and context, whereas pixel-based classification relies solely on pixel values. Overall, OBIA has been shown to produce more accurate classification results than pixel-based approaches using medium- to high-resolution imagery, with improvements in classification accuracy ranging from 9% to 23% [18,19,20].
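The idea of grouping similar adjacent pixels into image objects can be sketched with seeded region growing on a single band. This is a deliberately simple stand-in for the segmentation algorithms used in OBIA software, and it is not the segmentation used in this study. The `tolerance` parameter and the pixel values are hypothetical. Each object is then summarised by its mean value, the kind of object-level attribute that later classification steps use in place of individual pixel values.

```python
from collections import deque


def segment(image, tolerance):
    """Group 4-connected pixels into objects by seeded region growing.

    A neighbour joins the current object when its value is within
    `tolerance` of the object's seed pixel. Returns a grid of object labels.
    """
    rows, cols = len(image), len(image[0])
    labels = [[-1] * cols for _ in range(rows)]
    next_label = 0
    for r in range(rows):
        for c in range(cols):
            if labels[r][c] != -1:
                continue
            seed = image[r][c]
            labels[r][c] = next_label
            queue = deque([(r, c)])
            while queue:
                y, x = queue.popleft()
                for ny, nx in ((y - 1, x), (y + 1, x), (y, x - 1), (y, x + 1)):
                    if (0 <= ny < rows and 0 <= nx < cols
                            and labels[ny][nx] == -1
                            and abs(image[ny][nx] - seed) <= tolerance):
                        labels[ny][nx] = next_label
                        queue.append((ny, nx))
            next_label += 1
    return labels


def object_means(image, labels):
    """Mean pixel value of each object, keyed by object label."""
    sums, counts = {}, {}
    for value_row, label_row in zip(image, labels):
        for value, label in zip(value_row, label_row):
            sums[label] = sums.get(label, 0.0) + value
            counts[label] = counts.get(label, 0) + 1
    return {label: sums[label] / counts[label] for label in sums}


# Hypothetical single-band (NIR-like) reflectances.
image = [[0.30, 0.31, 0.12, 0.11],
         [0.29, 0.32, 0.13, 0.12],
         [0.30, 0.30, 0.12, 0.45]]
labels = segment(image, tolerance=0.05)
print(labels)
print({k: round(v, 3) for k, v in object_means(image, labels).items()})
```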

Two commonly used supervised OBIA classification approaches are Nearest Neighbour (NN) and Classification and Regression Tree (CART). NN is a non-parametric classifier: it does not assume that the input data follow a Gaussian distribution [21]. The algorithm computes the statistical distance in feature space between each image object to be classified and the training samples, and assigns the object to the class of the nearest sample [22]. NN has been applied to delineating forest polygons with OBIA [22], classifying forested land covers [23], delineating forested and non-forested areas [24] and describing vegetation species composition and structure [25].
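The nearest-neighbour rule described above can be sketched in a few lines. This is a minimal 1-NN example using Euclidean distance, one common choice of distance. The training samples and their feature values (mean red and NIR reflectance per object) are hypothetical and do not come from the study.

```python
import math


def nearest_neighbour_classify(features, training_samples):
    """Assign the class of the closest training sample (Euclidean distance)."""
    best_label, best_dist = None, math.inf
    for sample_features, label in training_samples:
        dist = math.dist(features, sample_features)
        if dist < best_dist:
            best_label, best_dist = label, dist
    return best_label


# Hypothetical training samples: (mean red, mean NIR) reflectance per object.
training_samples = [
    ((0.03, 0.30), "plantation"),
    ((0.06, 0.40), "pasture"),
    ((0.02, 0.01), "water"),
    ((0.15, 0.22), "bare ground"),
]

for obj in [(0.035, 0.29), (0.055, 0.41), (0.14, 0.20)]:
    print(obj, "->", nearest_neighbour_classify(obj, training_samples))
```

`math.dist` requires Python 3.8 or later. When features are on different scales, they would normally be standardised before distances are computed.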

CART is also a non-parametric statistical technique. It selects the most appropriate explanatory variables through tree-structured learning and can be used in data mining [26]. The algorithm splits representative training samples into classes in an optimal manner, with the aim of building a model that predicts the land cover of a target object from the attributes attached to the training samples. A tree is “learned” by splitting the source set into subsets based on an attribute value test. This test is repeated on each derived subset in a recursive manner, called recursive partitioning. The subsets are divided further until no further division is possible or the tree reaches a defined maximum depth [26,27]. CART has been used in a number of studies for land cover classification [28], delineating forest boundaries [22] and extracting forest variables [17].
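Recursive partitioning can be sketched as follows. The sketch assumes Gini impurity as the split criterion (the usual choice for CART classification trees) and midpoints between sorted feature values as candidate thresholds. Splitting stops when a node is pure, no split reduces impurity, or `max_depth` is reached. The training objects, with features NDVI and mean canopy height, are hypothetical. In this toy data, pasture and plantation are spectrally similar, so the second split uses height.

```python
from collections import Counter


def gini(labels):
    """Gini impurity of a list of class labels."""
    n = len(labels)
    return 1.0 - sum((k / n) ** 2 for k in Counter(labels).values())


def best_split(X, y):
    """(feature index, threshold) minimising weighted Gini, or None if no gain."""
    best, best_score, n = None, gini(y), len(y)
    for j in range(len(X[0])):
        values = sorted(set(row[j] for row in X))
        for lo, hi in zip(values, values[1:]):
            t = (lo + hi) / 2  # midpoint keeps both sides non-empty
            left = [lab for row, lab in zip(X, y) if row[j] <= t]
            right = [lab for row, lab in zip(X, y) if row[j] > t]
            score = (len(left) * gini(left) + len(right) * gini(right)) / n
            if score < best_score:
                best, best_score = (j, t), score
    return best


def build_tree(X, y, depth=0, max_depth=3):
    """Recursive partitioning; leaves hold the majority class."""
    majority = Counter(y).most_common(1)[0][0]
    if depth >= max_depth or len(set(y)) == 1:
        return majority
    split = best_split(X, y)
    if split is None:
        return majority
    j, t = split
    left = [i for i, row in enumerate(X) if row[j] <= t]
    right = [i for i, row in enumerate(X) if row[j] > t]
    return {"feature": j, "threshold": t,
            "left": build_tree([X[i] for i in left], [y[i] for i in left],
                               depth + 1, max_depth),
            "right": build_tree([X[i] for i in right], [y[i] for i in right],
                                depth + 1, max_depth)}


def predict(tree, x):
    """Follow attribute tests from the root to a leaf."""
    while isinstance(tree, dict):
        tree = tree["left"] if x[tree["feature"]] <= tree["threshold"] else tree["right"]
    return tree


# Hypothetical objects: (NDVI, mean canopy height in m).
X = [[0.80, 25.0], [0.78, 18.0], [0.82, 0.3], [0.79, 0.2], [0.10, 0.0], [0.15, 0.1]]
y = ["plantation", "plantation", "pasture", "pasture", "bare", "bare"]
tree = build_tree(X, y)
print(tree)
print(predict(tree, [0.81, 22.0]), predict(tree, [0.77, 0.4]))
```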

This research aims to evaluate the performance of forest classification and delineation using RapidEye multispectral imagery alone and combined with LiDAR data. RapidEye imagery is a 5-m multispectral sensor containing five spectral bands including blue, green, red, red edge and near-inferred [

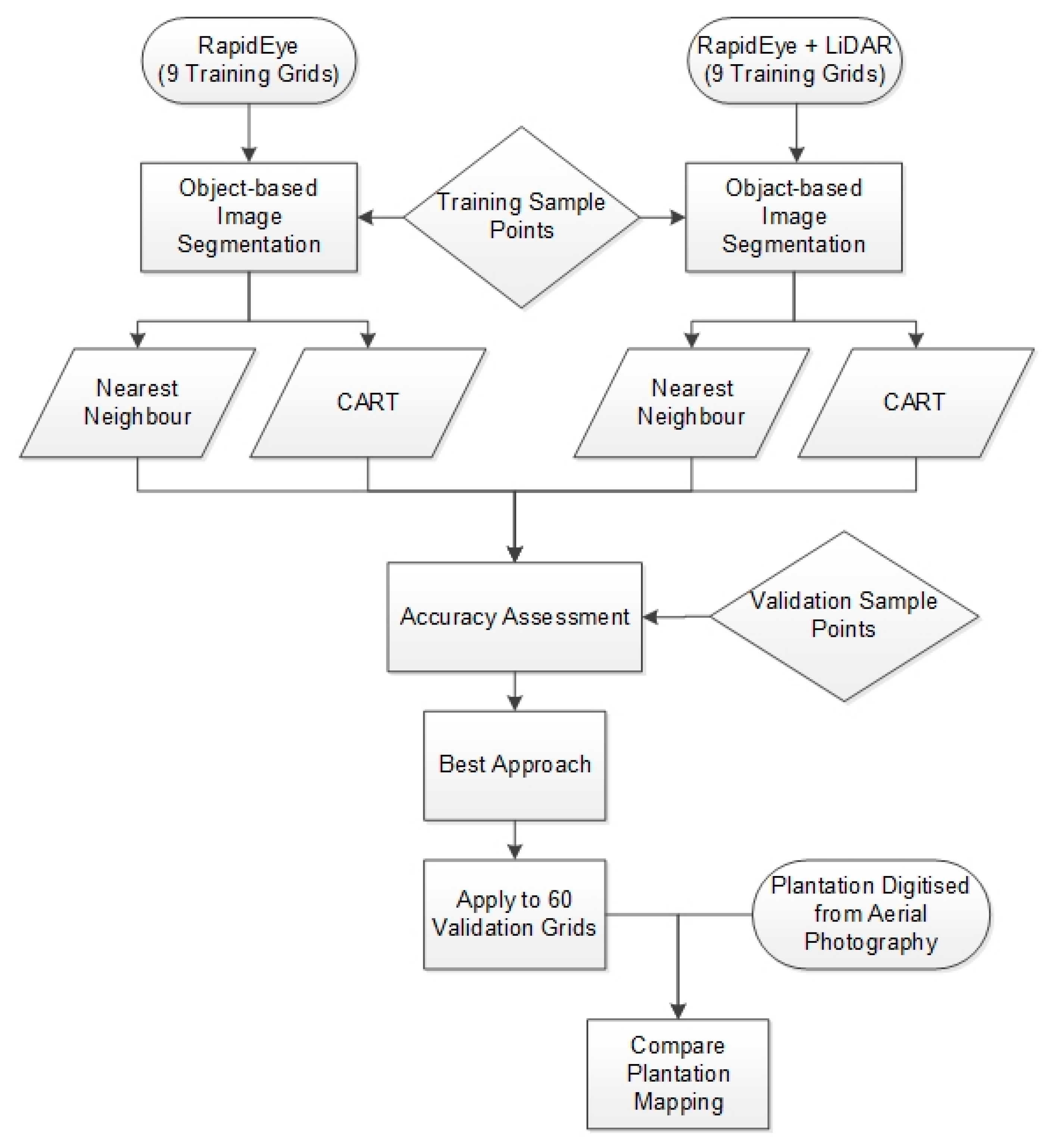

29]. The aim is to develop an automated approach to detect and provide accurate estimation of the stocked areas for small-scale forests using an OBIA approach. The key objective is to compare the net stocked plantation areas derived by different combinations of remote sensing datasets and mapping approaches with the plantation areas manually digitised from high-resolution aerial photography in order to determine which approach provides the best potential for accurate automated plantation mapping. Specifically, this study will address the following research questions: (1) Which combination of the remote sensing dataset and mapping approach produces the highest classification accuracy? (2) How different is the mapped plantation area compared with the manually digitised plantation area and (3) Is the performance of the classification approach affected by plantation patch size?
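As an illustration of how the five RapidEye bands can be turned into object features, the sketch below computes two widely used normalised-difference indices. The first is NDVI (from NIR and red) and the second is a red-edge variant often called NDRE (from NIR and red edge). This section does not state which spectral features the study used, so these indices are an assumption. The reflectance values are hypothetical.

```python
# RapidEye band order: 1 blue, 2 green, 3 red, 4 red edge, 5 near-infrared.
BLUE, GREEN, RED, RED_EDGE, NIR = range(5)  # zero-based tuple indices


def normalised_difference(a, b):
    """(a - b) / (a + b), returning 0.0 when the denominator is zero."""
    total = a + b
    return (a - b) / total if total != 0 else 0.0


def spectral_features(bands):
    """NDVI and red-edge NDVI (NDRE) from five mean band reflectances."""
    if len(bands) != 5:
        raise ValueError("expected five RapidEye band values")
    return {
        "ndvi": normalised_difference(bands[NIR], bands[RED]),
        "ndre": normalised_difference(bands[NIR], bands[RED_EDGE]),
    }


# Hypothetical mean reflectances for one vegetated image object.
features = spectral_features((0.03, 0.05, 0.03, 0.12, 0.30))
print({k: round(v, 3) for k, v in features.items()})
```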

4. Conclusions

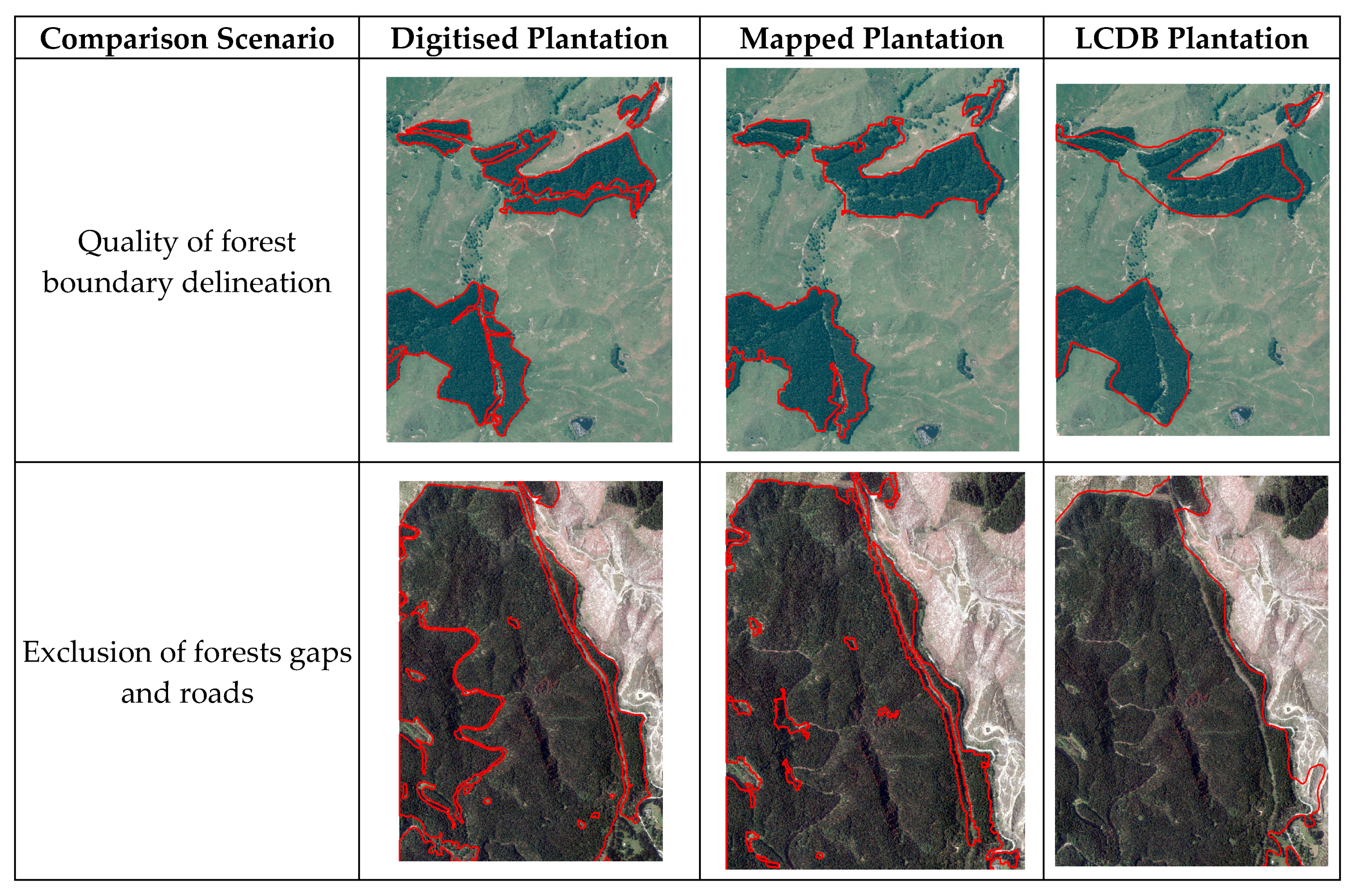

In this study, a factorial combination of two classification approaches and two remote sensing datasets was compared for the ability to accurately classify land cover, specifically plantation forest area. The four combinations were nearest neighbour with RapidEye only, nearest neighbour with RapidEye and LiDAR, CART with RapidEye only, and CART with RapidEye and LiDAR. In an initial classification of nine training grids, CART with RapidEye and LiDAR outperformed the other three approaches, producing the highest overall accuracy and plantation accuracy. With the CART approach, adding LiDAR data to RapidEye improved overall classification accuracy by 8% and the producer’s accuracy for planted forest by 7%, compared with using RapidEye imagery alone. The CART approach with both RapidEye and LiDAR was therefore chosen for land cover mapping in the remaining 60 validation grids.

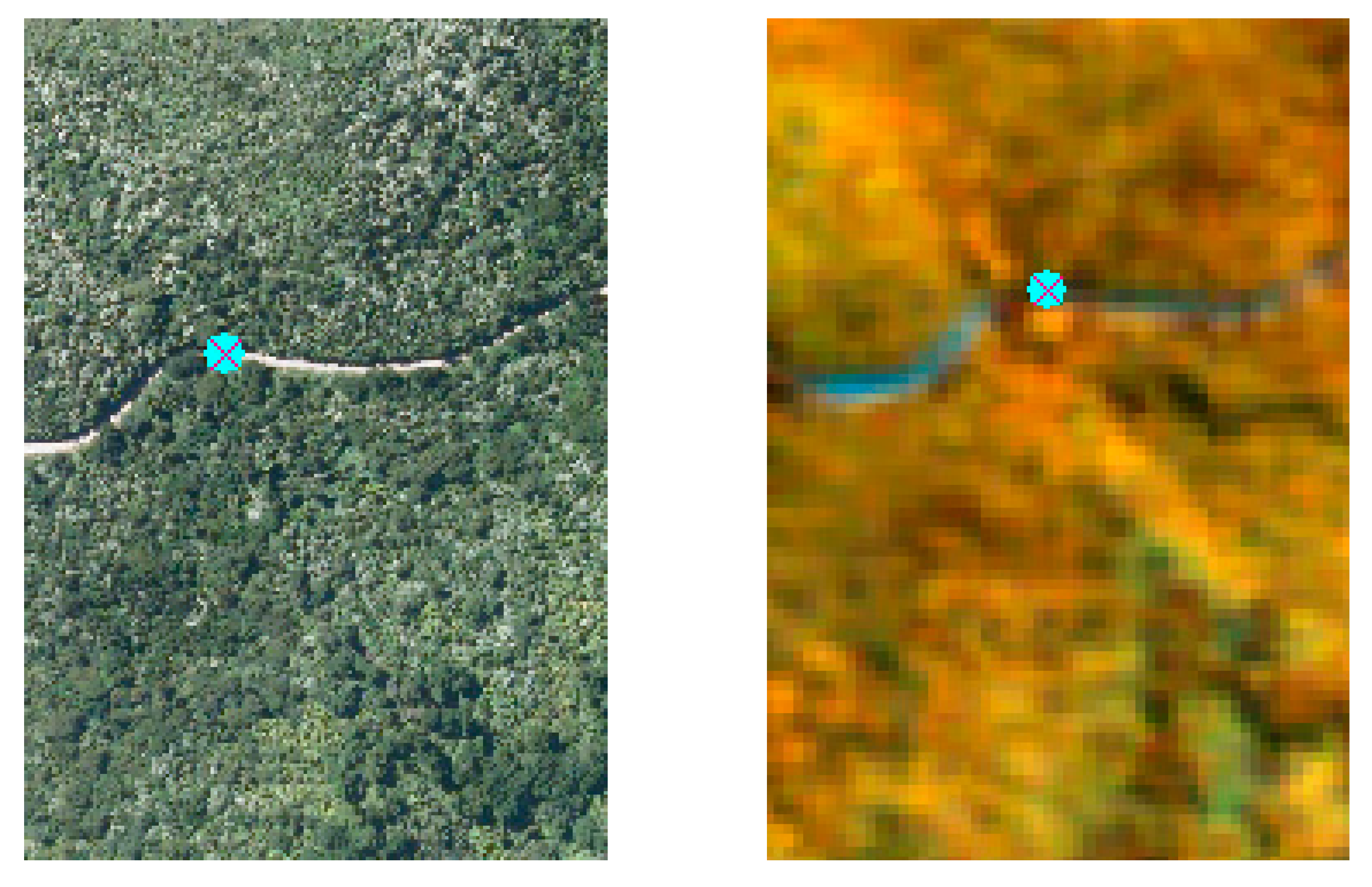

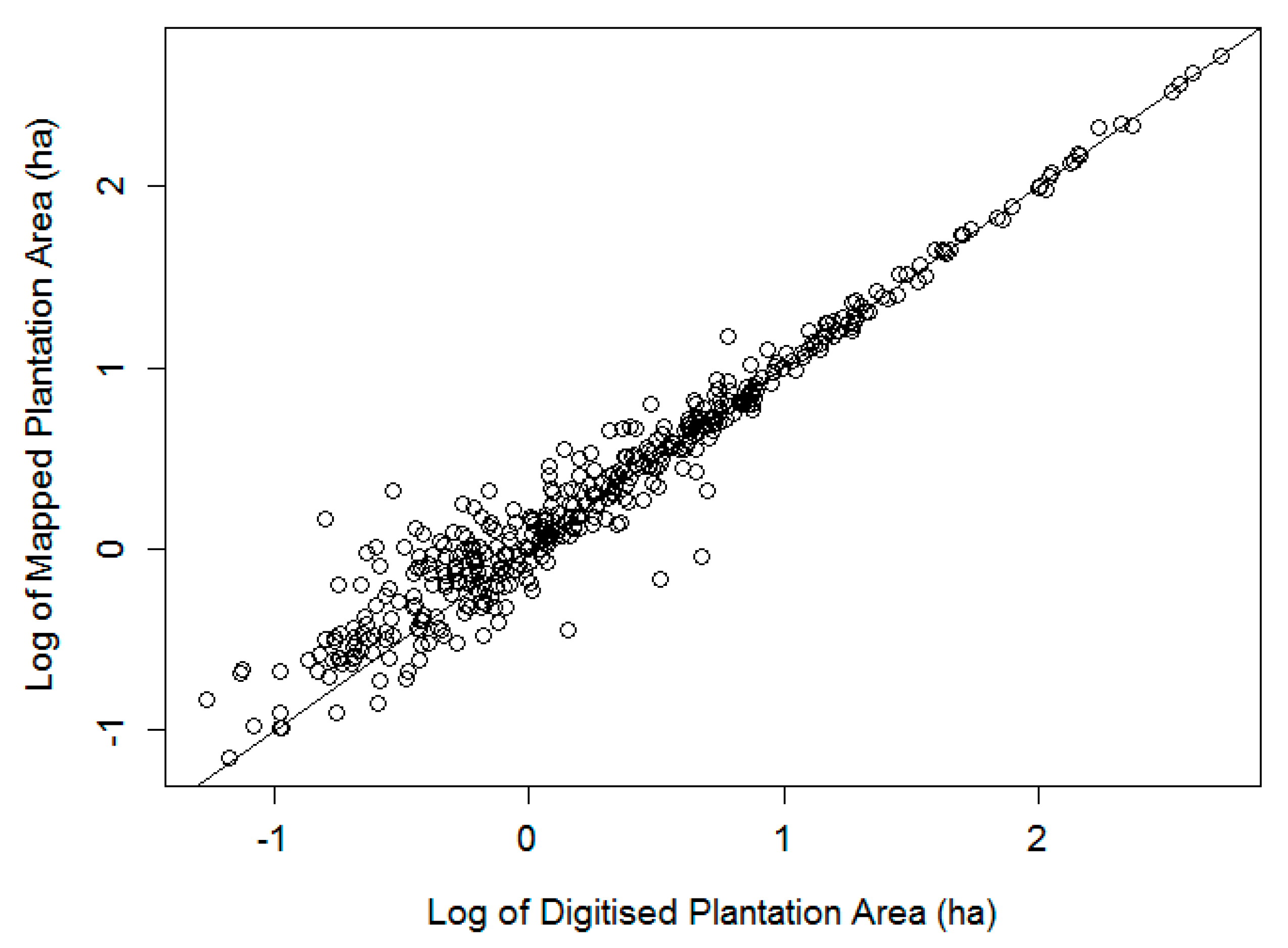

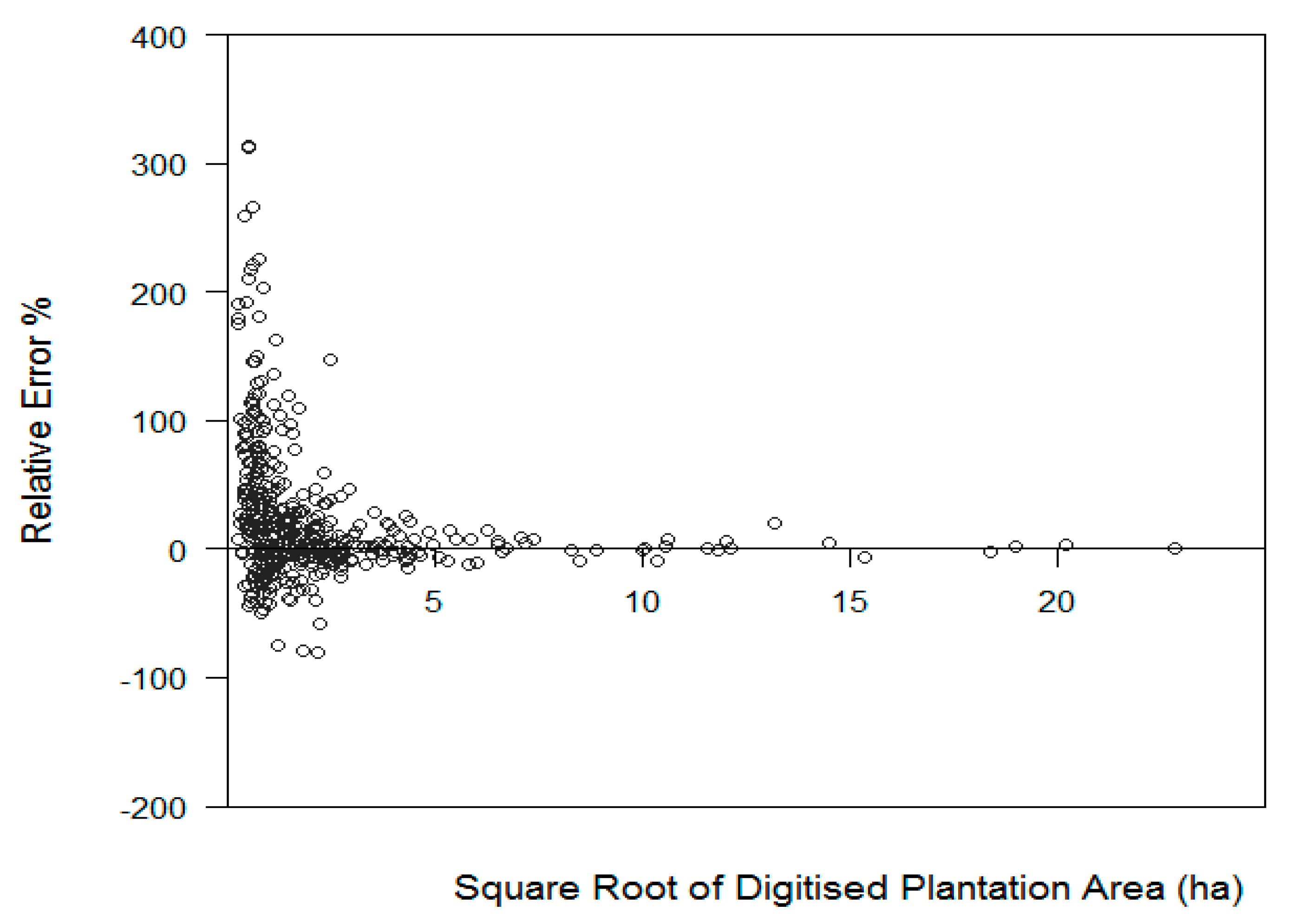

Overall, the selected mapping approach gave good classification results, with 89% overall accuracy; the producer’s accuracy for plantation was 79% and the user’s accuracy was 94%. Harvested areas and new plantings reflected temporal differences between the aerial photography and the satellite imagery. After these were excluded, the producer’s accuracy for plantation increased to 89%. These classification results are comparable to those of previous studies [9,13,23,28,49]. The accuracy of the method was further examined by comparing the mapped plantation area with the manually digitised plantation area. Across all sample grids, the mapping approach overestimated the total plantation area by 7.8%, and overestimated the plantation area excluding new plantings by 3%. Patch size affected mapping accuracy. Smaller patches (less than 10 ha) were mapped more variably and less accurately relative to the manually digitised “true” representation. Larger patches (over 10 ha) were generally mapped more accurately (less than 20% error).
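The accuracy measures and area comparison above follow standard definitions, sketched below:

- **Overall accuracy:** the diagonal of the confusion matrix divided by the total count.
- **Producer’s accuracy:** correct counts divided by the reference (row) total, that is, 1 minus omission error.
- **User’s accuracy:** correct counts divided by the mapped (column) total, that is, 1 minus commission error.
- **Relative area error:** the percentage difference between mapped and digitised area.

The sketch also groups patches at the 10 ha threshold used in the text. All counts and patch areas are hypothetical illustrations, not the study’s results.

```python
from statistics import mean


def accuracy_metrics(matrix, classes):
    """Overall, producer's and user's accuracy from a confusion matrix.

    matrix[i][j] counts reference class i mapped as class j, so row sums are
    reference totals and column sums are mapped totals.
    """
    total = sum(map(sum, matrix))
    overall = sum(matrix[i][i] for i in range(len(classes))) / total
    producers, users = {}, {}
    for i, name in enumerate(classes):
        reference_total = sum(matrix[i])
        mapped_total = sum(row[i] for row in matrix)
        # Producer's = 1 - omission error; user's = 1 - commission error.
        producers[name] = matrix[i][i] / reference_total if reference_total else float("nan")
        users[name] = matrix[i][i] / mapped_total if mapped_total else float("nan")
    return overall, producers, users


def relative_area_error(mapped_ha, reference_ha):
    """Percentage difference of mapped area from the digitised reference area."""
    return 100.0 * (mapped_ha - reference_ha) / reference_ha


def mean_abs_error_by_size(patches, threshold_ha=10.0):
    """Mean absolute % area error for patches below / at-or-above threshold_ha."""
    small = [abs(relative_area_error(m, r)) for m, r in patches if r < threshold_ha]
    large = [abs(relative_area_error(m, r)) for m, r in patches if r >= threshold_ha]
    return (mean(small) if small else float("nan"),
            mean(large) if large else float("nan"))


# Hypothetical counts and patch areas, for illustration only.
classes = ["plantation", "other"]
matrix = [[80, 20],   # reference plantation
          [5, 95]]    # reference other
overall, producers, users = accuracy_metrics(matrix, classes)
print(f"overall {overall:.3f}, producer's {producers['plantation']:.3f}, "
      f"user's {users['plantation']:.3f}")

patches = [(3.1, 2.0), (1.2, 2.5), (52.0, 48.0), (21.0, 22.0)]  # (mapped, reference) ha
print(mean_abs_error_by_size(patches))
```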

The combination of multispectral RapidEye features and LiDAR-derived surfaces of relatively low point density proved sufficient to detect land cover features, although mapping accuracy decreased for small plantation patches. Another limitation of the mapping approach was that it failed to detect young plantings because of the resolution of the remote sensing datasets. Together with the reduced accuracy for forest patches smaller than 10 ha, this limitation suggests a relationship between mapping accuracy and the spatial resolution of the input data.
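A simple way to see why resolution matters for small patches is to count how many pixels cover a patch of a given area. The arithmetic below uses the nominal pixel sizes of RapidEye (5 m), the 10 m bands of Sentinel-2 and the 30 m multispectral bands of Landsat. It assumes square pixels and ignores edge effects, so it indicates only the order of magnitude of information available per patch.

```python
# Nominal pixel sizes (m): RapidEye is given in the text; Sentinel-2 refers to
# its 10 m visible/NIR bands and Landsat to its 30 m multispectral bands.
PIXEL_SIZE_M = {"RapidEye": 5, "Sentinel-2": 10, "Landsat": 30}


def pixels_per_patch(area_ha, pixel_size_m):
    """Number of pixels covering a patch of area_ha (1 ha = 10,000 m^2)."""
    return area_ha * 10_000 / pixel_size_m ** 2


for area in (1, 10):
    counts = {s: round(pixels_per_patch(area, p), 1) for s, p in PIXEL_SIZE_M.items()}
    print(f"{area} ha patch: {counts}")
```

A 1 ha patch covers 400 RapidEye pixels but only about 11 Landsat pixels, so a much larger share of a small patch consists of mixed edge pixels at coarser resolutions.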

Although we did not test the effects of differing spatial resolutions in this study, other studies have generally shown improved forest mapping accuracy with higher spatial resolution datasets. For example, the average accuracy of urban forest species classification increased by 16–18% when 2-m WorldView-2 imagery was used instead of 4-m IKONOS imagery [55]. Another study showed that grids derived from LiDAR data of higher point density (5 points m⁻²) could produce 96% overall accuracy for delineating forested areas in a coniferous forest in Austria [56].

A future study testing the accuracy of different small-scale forest mapping approaches with input imagery of varying spatial resolutions would be useful. Such a study would improve the chances of successfully applying the automated mapping approach developed here over larger areas where RapidEye imagery and LiDAR data are not readily available. In such cases, freely available imagery (e.g., Landsat, Sentinel-2) has commonly been used for forest mapping. A spatial resolution sensitivity analysis of this kind would help to determine the utility of such imagery for small-scale forest mapping. In addition, applying small-scale forest mapping approaches to a time series of imagery could help to offset the spatial resolution constraint.

In conclusion, the mapping approach developed in this study provides a proof of concept for estimating plantation area using remotely sensed data in the Wairarapa region of New Zealand, and the enhanced area description of small-scale forests will allow more effective planning for the forest industry.