Vehicle-To-Grid (V2G) Charging and Discharging Strategies of an Integrated Supply–Demand Mechanism and User Behavior: A Recurrent Proximal Policy Optimization Approach

Abstract

1. Introduction

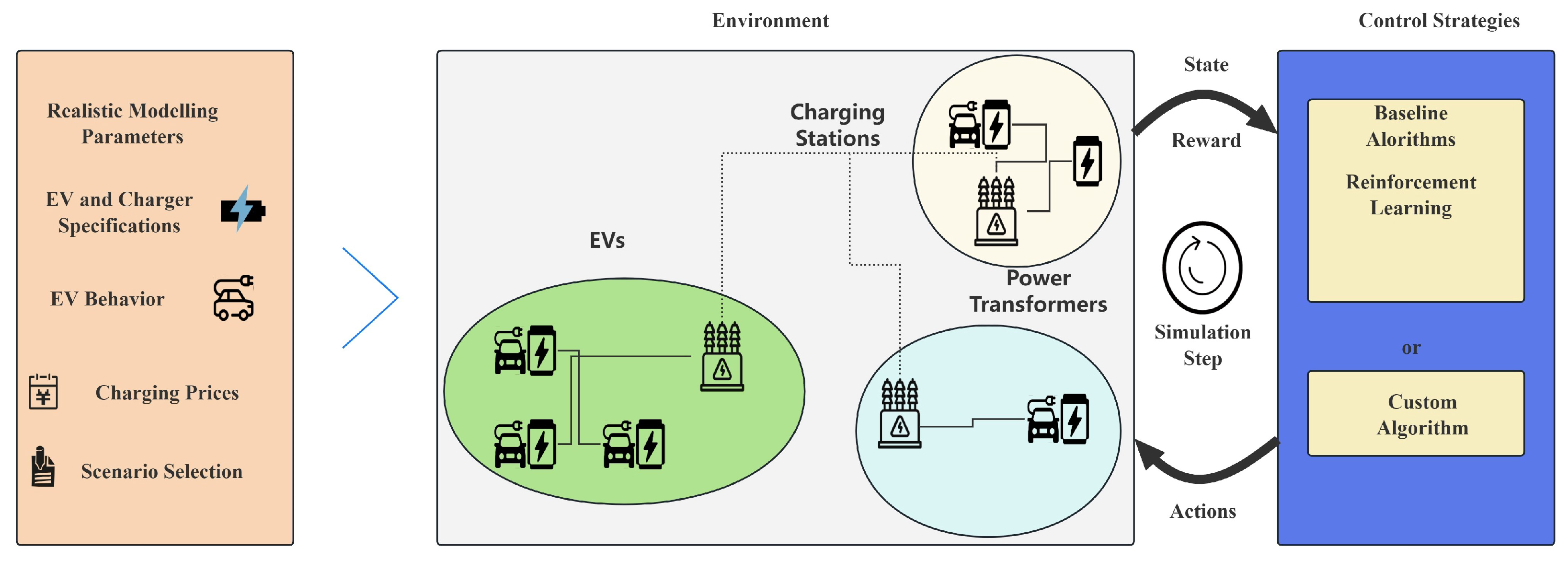

1.1. Integrated V2G Architecture

1.2. V2G Charging Strategies Considering Multiple Objectives

1.3. Intelligent Algorithms in V2G Charging Strategies

- Optimization Framework Considering Regional Dynamic Pricing: This study proposes a V2G optimization framework that facilitates energy exchange between EVs and the power grid, with a specific focus on optimizing charging behavior under different pricing strategies. By jointly considering grid stability, charging costs, and battery life, the framework dynamically adjusts charging and discharging schedules to use energy efficiently. It also improves grid reliability and efficiency while reducing grid load.

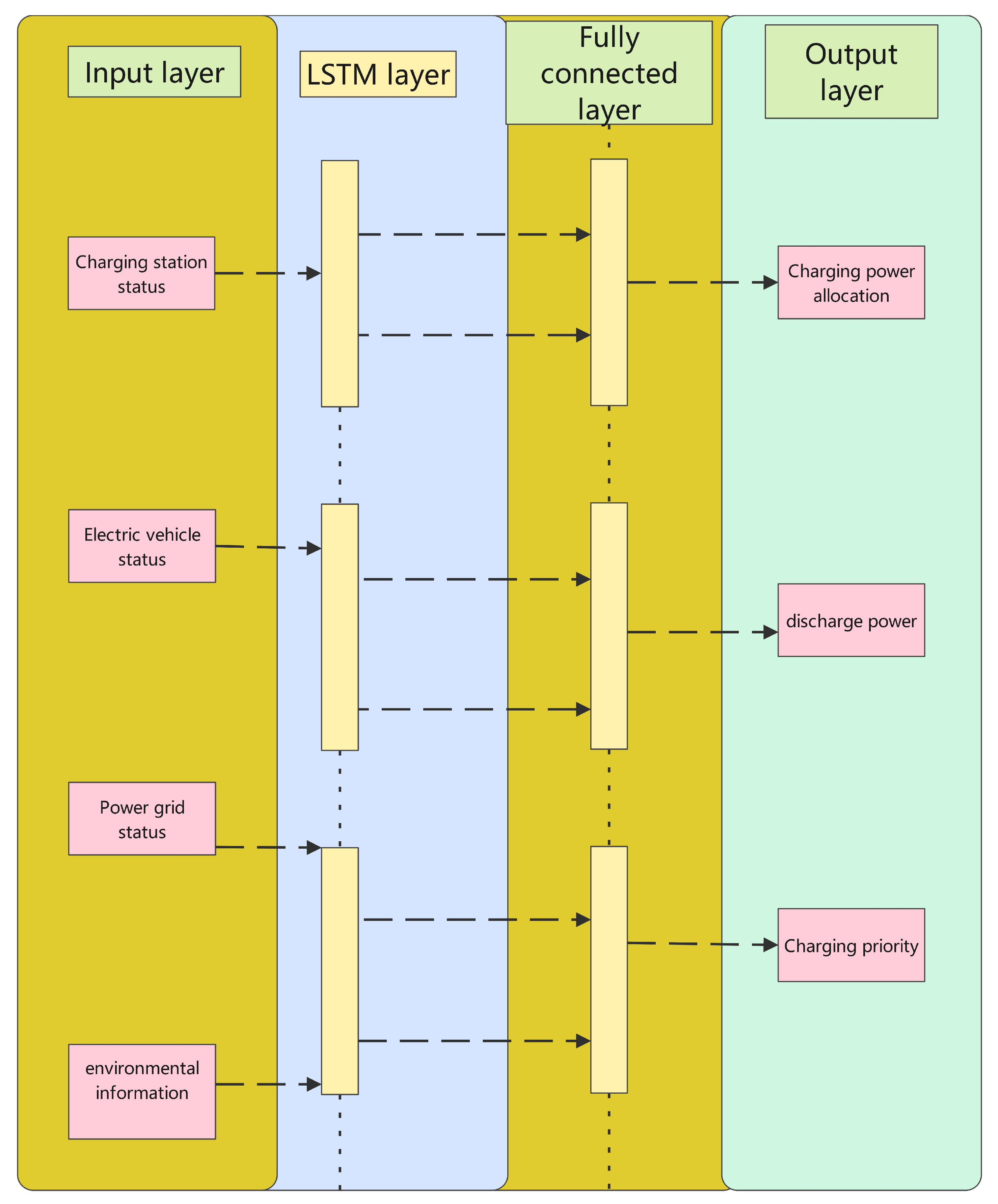

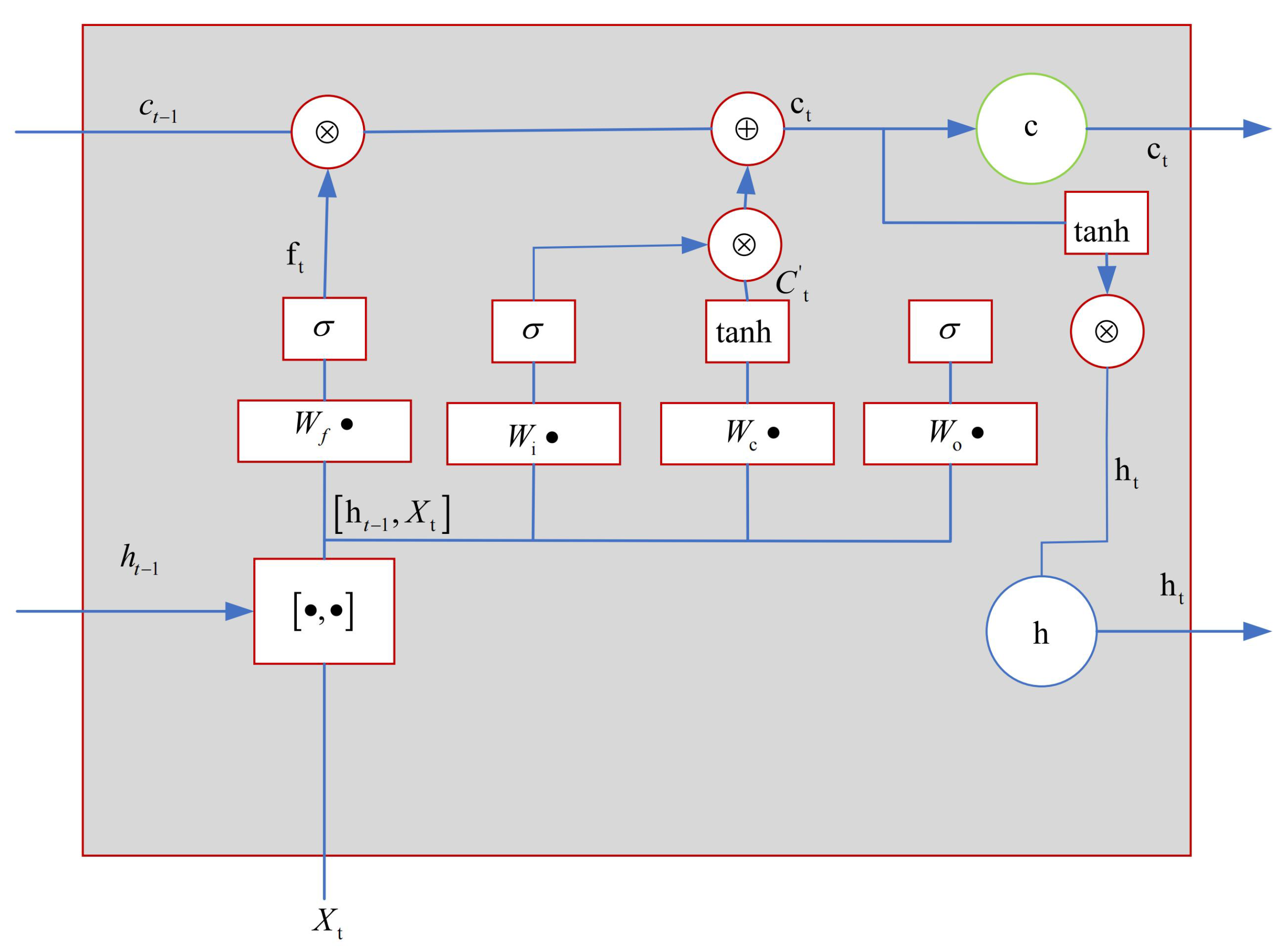

- An Integrated LSTM and RPPO Algorithm: To address the complexity and uncertainty of EV charging demand, this study proposes an intelligent charging strategy that combines LSTM with the RPPO algorithm. The LSTM captures the time-series characteristics of EV charging behavior to predict future charging demand, while RPPO optimizes EV charging and discharging strategies in complex, dynamically priced environments. Combining deep learning with reinforcement learning in this way enables rapid iterative optimization in dynamic environments and makes charging scheduling more adaptive.

- Robustness Enhancement: The proposed V2G optimization framework and its algorithm are designed to be robust. They operate effectively under various pricing strategies and also handle large fluctuations in EV charging demand and dynamic changes in grid conditions. Extensive simulation experiments show that the solution responds effectively to unpredictable changes, keeping the charging process stable and efficient and thereby improving the reliability and adaptability of the overall system.
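The LSTM component carries a hidden state from one time step to the next, which is what lets a recurrent policy such as RPPO condition its actions on past observations. The following is a minimal plain-Python sketch of a single LSTM cell, not the authors' network: the class name `LSTMCell`, the layer sizes, the random initialization, and the input features (a hypothetical vector of normalized price, hour of day, and SoC) are all illustrative assumptions.

```python
import math
import random
from typing import Callable, List, Tuple

Vector = List[float]


def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


def matvec(matrix: List[Vector], vec: Vector) -> Vector:
    return [sum(w * v for w, v in zip(row, vec)) for row in matrix]


class LSTMCell:
    """Minimal LSTM cell with randomly initialized weights (illustrative only)."""

    def __init__(self, input_size: int, hidden_size: int, seed: int = 0) -> None:
        rng = random.Random(seed)
        width = input_size + hidden_size
        # One weight matrix and bias vector per gate:
        # i = input gate, f = forget gate, g = candidate cell, o = output gate.
        self.weights = {
            gate: [[rng.uniform(-0.5, 0.5) for _ in range(width)] for _ in range(hidden_size)]
            for gate in "ifgo"
        }
        self.biases = {gate: [0.0] * hidden_size for gate in "ifgo"}
        self.hidden_size = hidden_size

    def _gate(self, gate: str, z: Vector, act: Callable[[float], float]) -> Vector:
        return [act(a + b) for a, b in zip(matvec(self.weights[gate], z), self.biases[gate])]

    def step(self, x: Vector, h: Vector, c: Vector) -> Tuple[Vector, Vector]:
        z = list(x) + list(h)  # concatenate current input with previous hidden state
        i = self._gate("i", z, sigmoid)
        f = self._gate("f", z, sigmoid)
        g = self._gate("g", z, math.tanh)
        o = self._gate("o", z, sigmoid)
        c_next = [fk * ck + ik * gk for fk, ck, ik, gk in zip(f, c, i, g)]
        h_next = [ok * math.tanh(ck) for ok, ck in zip(o, c_next)]
        return h_next, c_next

    def run(self, sequence: List[Vector]) -> Tuple[Vector, Vector]:
        """Feed a sequence of observations from zero state and return the final (h, c)."""
        h = [0.0] * self.hidden_size
        c = [0.0] * self.hidden_size
        for x in sequence:
            h, c = self.step(x, h, c)
        return h, c


cell = LSTMCell(input_size=3, hidden_size=4)
# Hypothetical 8-step observation sequence: [normalized price, hour of day / 24, SoC]
observations = [[0.3, t / 24, 0.2 + 0.05 * t] for t in range(8)]
h, c = cell.run(observations)
```

In a recurrent policy, `h` would be passed to the actor and critic heads, and the pair `(h, c)` would be kept between environment steps rather than reset each time.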

2. Network Architecture

3. Formulation of Multi-Objective Energy Management Problems

3.1. Description of Optimization Objectives

3.2. Energy Setting Tracking Issues

3.3. V2G Profit Maximization Problem

3.4. Algorithm Description

| Algorithm 1 RPPO Algorithm Optimization for the EV Charging Strategy |
|---|
| Input: N episodes, policy network, value network, learning rate, discount factor |
| Output: Optimized policy and value networks |

| Algorithm 2 Training Policy and Value Networks |
|---|
| Input: Initial parameters for the policy network and value network |
| Output: Trained policy and value networks |
| 1: Initialize the parameters of the policy network and value network |
| 2: Reset the environment and obtain the initial state |
| 3: for each time step t do |
| 4: Generate an action with the policy network from the current state and hidden state |
| 5: Execute the action in the environment; observe the next state and reward |
| 6: Store the collected experience in the replay buffer |
| 7: Periodically sample data from the replay buffer |
| 8: Calculate the loss function |
| 9: Update the model parameters (policy network and value network) |
| 10: end for |
| 11: Repeat until the predetermined number of training steps is reached |
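Step 8 of Algorithm 2 computes a loss, but the listing does not state its form. As a hedged illustration, the sketch below assumes the standard PPO clipped surrogate objective, generalized advantage estimation (GAE), and a squared-error value loss. The function names `compute_gae` and `ppo_loss` are hypothetical, and the defaults `gae_lambda = 0.95`, `clip_eps = 0.2`, and `vf_coef = 0.5` are common PPO settings, not values reported in this paper. In the recurrent variant, `new_log_probs` and `new_values` would come from the LSTM-based networks re-evaluated with the stored hidden states.

```python
import math
from typing import List, Tuple


def compute_gae(rewards: List[float], values: List[float], last_value: float,
                gamma: float = 0.99, gae_lambda: float = 0.95) -> Tuple[List[float], List[float]]:
    """Generalized advantage estimation for one rollout with no episode boundary inside it."""
    advantages = [0.0] * len(rewards)
    next_value = last_value
    running = 0.0
    for t in reversed(range(len(rewards))):
        delta = rewards[t] + gamma * next_value - values[t]  # one-step TD error
        running = delta + gamma * gae_lambda * running
        advantages[t] = running
        next_value = values[t]
    returns = [a + v for a, v in zip(advantages, values)]  # value-function targets
    return advantages, returns


def ppo_loss(new_log_probs: List[float], old_log_probs: List[float],
             advantages: List[float], new_values: List[float], returns: List[float],
             clip_eps: float = 0.2, vf_coef: float = 0.5) -> float:
    """Clipped surrogate policy loss plus weighted squared-error value loss (to be minimized)."""
    n = len(advantages)
    surrogate = 0.0
    for lp_new, lp_old, adv in zip(new_log_probs, old_log_probs, advantages):
        ratio = math.exp(lp_new - lp_old)  # pi_new(a|s) / pi_old(a|s)
        clipped_ratio = min(max(ratio, 1.0 - clip_eps), 1.0 + clip_eps)
        surrogate += min(ratio * adv, clipped_ratio * adv)  # pessimistic (clipped) bound
    policy_loss = -surrogate / n
    value_loss = sum((r - v) ** 2 for r, v in zip(returns, new_values)) / n
    return policy_loss + vf_coef * value_loss


# With gae_lambda = 1 the advantages reduce to discounted returns minus values.
adv, ret = compute_gae([1.0, 1.0], [0.0, 0.0], last_value=0.0, gamma=0.5, gae_lambda=1.0)
```

Because PPO is on-policy, a standard implementation fills the buffer in Algorithm 2 with the most recent rollout only and discards it after the update epochs; for a recurrent policy the buffer also keeps the LSTM hidden states needed to replay each stored sequence.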

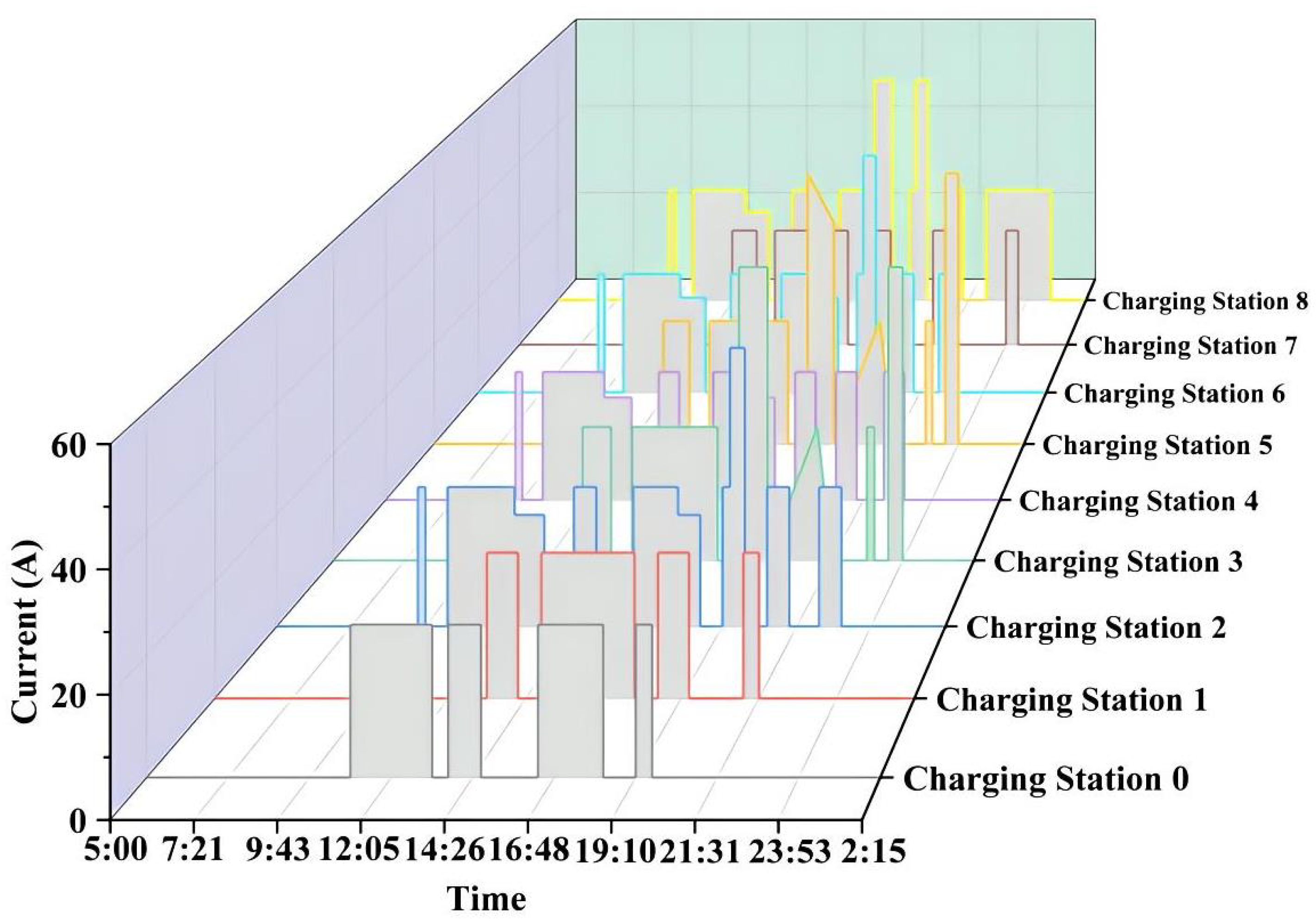

4. Results and Discussion

4.1. Parameter Settings

4.2. Environment Setup

- EV Charging Stations: Each charging station has several charging ports and can serve multiple EVs simultaneously. The location and number of charging stations are configured according to actual demand to simulate different cities and regions.

- Grid Model: A medium-voltage grid model, including transformers, loads, and distributed generation units (such as solar and wind power), is created with pandapower. The model dynamically simulates the balance between power supply and demand, reflecting the actual operating conditions of the grid.

- EVs: Various types of EVs are simulated, with different battery capacities, charging speeds, and arrival times. Their charging demands and departure times are randomly generated based on real-world conditions to make the simulation more realistic.

- Charging Efficiency: Measures the amount of energy obtained by an EV per unit of time. Improving charging efficiency is one of the main optimization goals.

- Charging Cost: Calculates the charging cost for each EV and evaluates the algorithm’s effectiveness in reducing charging expenses.

- Battery Life: Monitors the health status of the battery and assesses the impact of the algorithm on battery lifespan.

- Grid Stability: Analyzes the grid’s performance under different load conditions and evaluates the contribution of the algorithm to grid stability.
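The evaluation metrics listed above can be computed from a per-step simulation log. The sketch below is a hedged illustration, not the authors' evaluation code. It assumes a hypothetical log format (power per EV per step in kW, positive when charging, negative when discharging, `None` when not connected, plus a price per kWh per step), uses the 15-minute sample time and 400 kW transformer limit from the parameter table, treats the discharge price multiplier as a credit on discharged energy (an assumption), and counts transformer overloads as a simple proxy for grid stability. Battery life is omitted because it requires a degradation model that the section does not specify.

```python
from typing import Dict, List, Optional

SAMPLE_TIME_H = 15 / 60        # 15-minute sample time (parameter table)
TRANSFORMER_LIMIT_KW = 400.0   # transformer power limit (parameter table)

# Hypothetical log format: power[ev_id][t] in kW, > 0 = charging, < 0 = discharging,
# None = EV not connected at step t. price[t] is the energy price per kWh at step t.
PowerLog = Dict[str, List[Optional[float]]]


def ev_metrics(series: List[Optional[float]], price: List[float],
               discharge_multiplier: float = 1.0) -> Dict[str, float]:
    """Energy received, average charging rate while connected, and net cost for one EV."""
    connected = [(p, c) for p, c in zip(series, price) if p is not None]
    connected_h = len(connected) * SAMPLE_TIME_H
    energy_in = sum(p for p, _ in connected if p > 0) * SAMPLE_TIME_H  # kWh
    # Assumption: discharged energy is credited at discharge_multiplier x price,
    # so discharging steps contribute negative cost (revenue).
    net_cost = sum(
        (p * c if p > 0 else discharge_multiplier * p * c) * SAMPLE_TIME_H
        for p, c in connected
    )
    avg_rate = energy_in / connected_h if connected_h > 0 else 0.0  # kWh per connected hour
    return {"energy_kwh": energy_in, "avg_rate_kw": avg_rate, "net_cost": net_cost}


def overload_steps(power: PowerLog) -> int:
    """Count steps at which the aggregate EV load exceeds the transformer limit."""
    horizon = max((len(s) for s in power.values()), default=0)
    count = 0
    for t in range(horizon):
        load = sum(s[t] for s in power.values() if t < len(s) and s[t] is not None)
        if abs(load) > TRANSFORMER_LIMIT_KW:
            count += 1
    return count


log: PowerLog = {"ev1": [None, 22.0, 22.0, -11.0], "ev2": [11.0, 11.0, None, None]}
price = [0.20, 0.25, 0.30, 0.40]
ev1 = ev_metrics(log["ev1"], price, discharge_multiplier=1.2)
```

Here `ev1` received 11 kWh over 0.75 connected hours and paid a net 1.705 currency units after the discharge credit.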

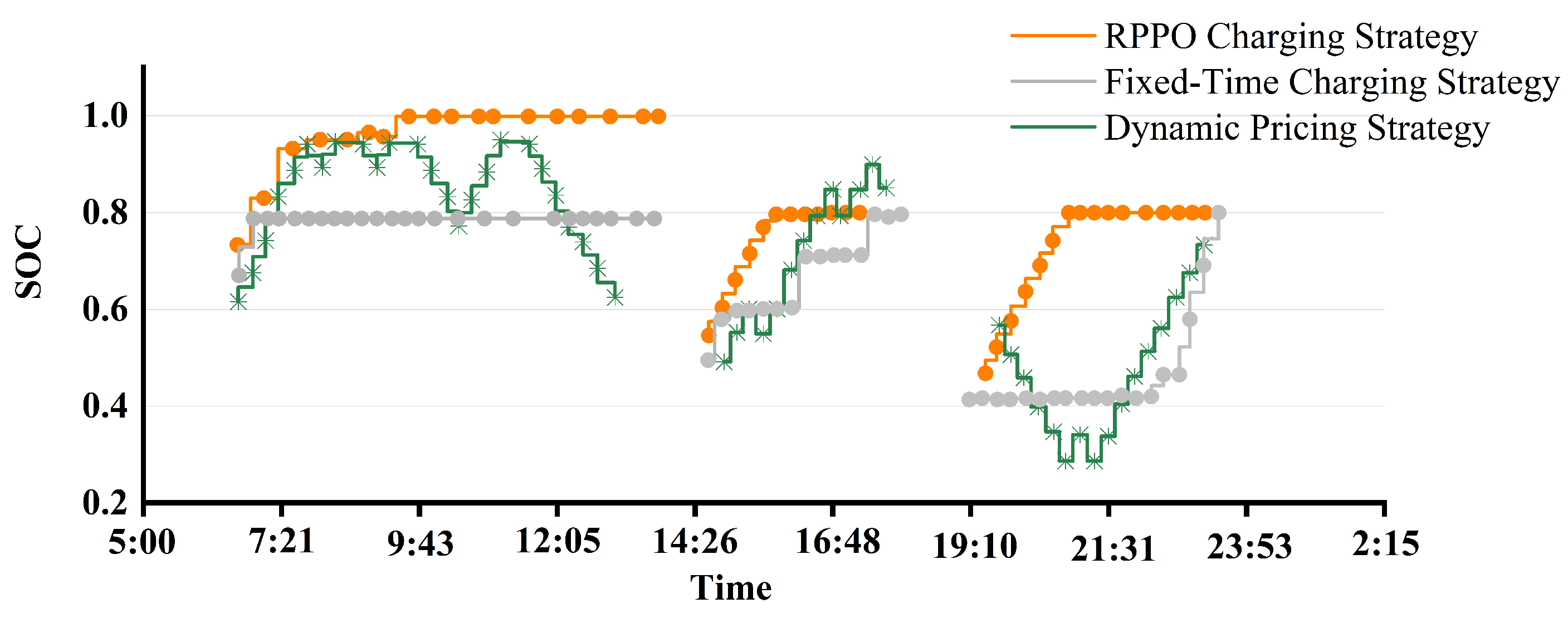

4.3. Results Analysis

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| EVs | Electric vehicles |
| V2G | Vehicle-to-grid |
| G2V | Grid-to-vehicle |
| RNN | Recurrent neural network |
| RPPO | Recurrent proximal policy optimization |
| LSTM | Long short-term memory |
| IoT | Internet of Things |
| AI | Artificial intelligence |
| CPO | Charging point operators |
| ANN | Artificial neural networks |


| 6976 | 28 | 730 | 11,160 |

| Description | Symbol | Value |
|---|---|---|
| Transformer power limit [kW] | | 400 |
| Maximum EVSE output power [kW] | | 22 |
| EVSE voltage [V] | V | 230 |
| EVSE phases | | 3 |
| EV battery capacity [kWh] | | 50 |
| Maximum EV power [kW] | | 22 |
| Minimum EV SoC when discharging | | 10% |
| Minimum EV SoC at departure | | 80% |
| Minimum EV time of connection [h] | | 3 |
| Charging efficiency | | 100% |
| Discharging efficiency | | 100% |
| Sample time [min] | | 15 |
| Operation time of the station [h] | T | 24 |
| Prediction horizon (2.5 h–10 h) | H | {2.5 × 4, 10 × 4} |
| Number of EVSEs | I | {5–50} |
| Number of EVs | J | {15–120} |
| Number of transformers | G | {1, 3} |
| Discharge price multiplier | m | {0.8–1.2} |
| EV scenario | | “Residential” |
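The parameters above imply a few derived quantities that are useful when reading the experiments. The worked sketch below computes them under simplifying assumptions: an EV can draw the full 22 kW for an entire 15-minute step, efficiency is 100% as listed, and the 400 kW limit applies per transformer. The constant names and the `next_soc` helper are illustrative, not taken from the authors' code.

```python
import math

# Values taken from the parameter table
BATTERY_KWH = 50.0
MAX_EV_POWER_KW = 22.0
TRANSFORMER_LIMIT_KW = 400.0
DT_H = 15 / 60                  # 15-minute sample time in hours
MIN_SOC_DISCHARGE = 0.10
MIN_SOC_DEPARTURE = 0.80
MIN_CONNECTION_H = 3.0

steps_per_hour = round(1 / DT_H)                                           # 4
steps_per_day = 24 * steps_per_hour                                        # T = 24 h
horizon_steps = (round(2.5 * steps_per_hour), round(10 * steps_per_hour))  # H in steps
energy_per_step_kwh = MAX_EV_POWER_KW * DT_H                               # kWh per step
soc_per_step = energy_per_step_kwh / BATTERY_KWH                           # SoC gain per step

# Worst case: an EV arrives at the discharge floor and must reach the departure target.
energy_needed_kwh = BATTERY_KWH * MIN_SOC_DEPARTURE - BATTERY_KWH * MIN_SOC_DISCHARGE
steps_to_departure = math.ceil(energy_needed_kwh / energy_per_step_kwh)
min_connection_steps = round(MIN_CONNECTION_H * steps_per_hour)

# EVSEs that can run at full power simultaneously before one transformer saturates
max_full_power_evse = math.floor(TRANSFORMER_LIMIT_KW / MAX_EV_POWER_KW)


def next_soc(soc: float, power_kw: float) -> float:
    """Advance SoC by one sample step (100% efficiency); > 0 charges, < 0 discharges."""
    soc_new = soc + power_kw * DT_H / BATTERY_KWH
    if power_kw < 0:
        # Discharging must not push SoC below the 10% floor
        # (or below the current SoC if the EV is already under the floor).
        soc_new = max(soc_new, min(soc, MIN_SOC_DISCHARGE))
    return min(soc_new, 1.0)  # SoC can never exceed 100%
```

Under these assumptions one step at full power adds 5.5 kWh (11% SoC), the horizon H spans 10 to 40 steps, and the worst-case 10% to 80% recharge needs 7 steps (1.75 h), which fits inside the 3 h (12-step) minimum connection time. The transformer limit allows only 18 EVSEs at full power at once, so scenarios near the upper end of the EVSE range must share capacity.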

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Published by MDPI on behalf of the World Electric Vehicle Association. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

He, C.; Peng, J.; Jiang, W.; Wang, J.; Du, L.; Zhang, J. Vehicle-To-Grid (V2G) Charging and Discharging Strategies of an Integrated Supply–Demand Mechanism and User Behavior: A Recurrent Proximal Policy Optimization Approach. World Electr. Veh. J. 2024, 15, 514. https://doi.org/10.3390/wevj15110514