Incremental Learning for LiDAR Attack Recognition Framework in Intelligent Driving Using Gaussian Processes

Abstract

1. Introduction

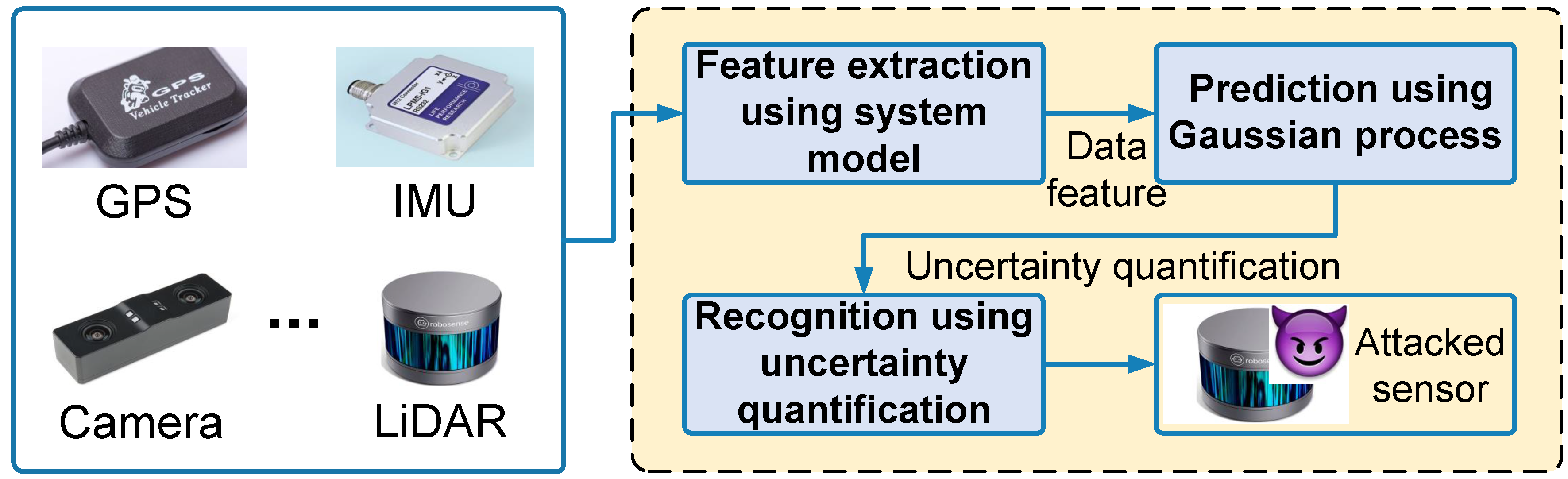

- We have developed a framework for recognizing LiDAR attacks on the perception system of intelligent vehicles, covering both the localization and detection systems. In this framework, the localization system is modeled with a vehicle dynamics model and the detection system with an object tracking algorithm, and data features are extracted from both models. Gaussian Processes then model these features probabilistically, and their predictive uncertainty estimates are used to recognize LiDAR attacks.

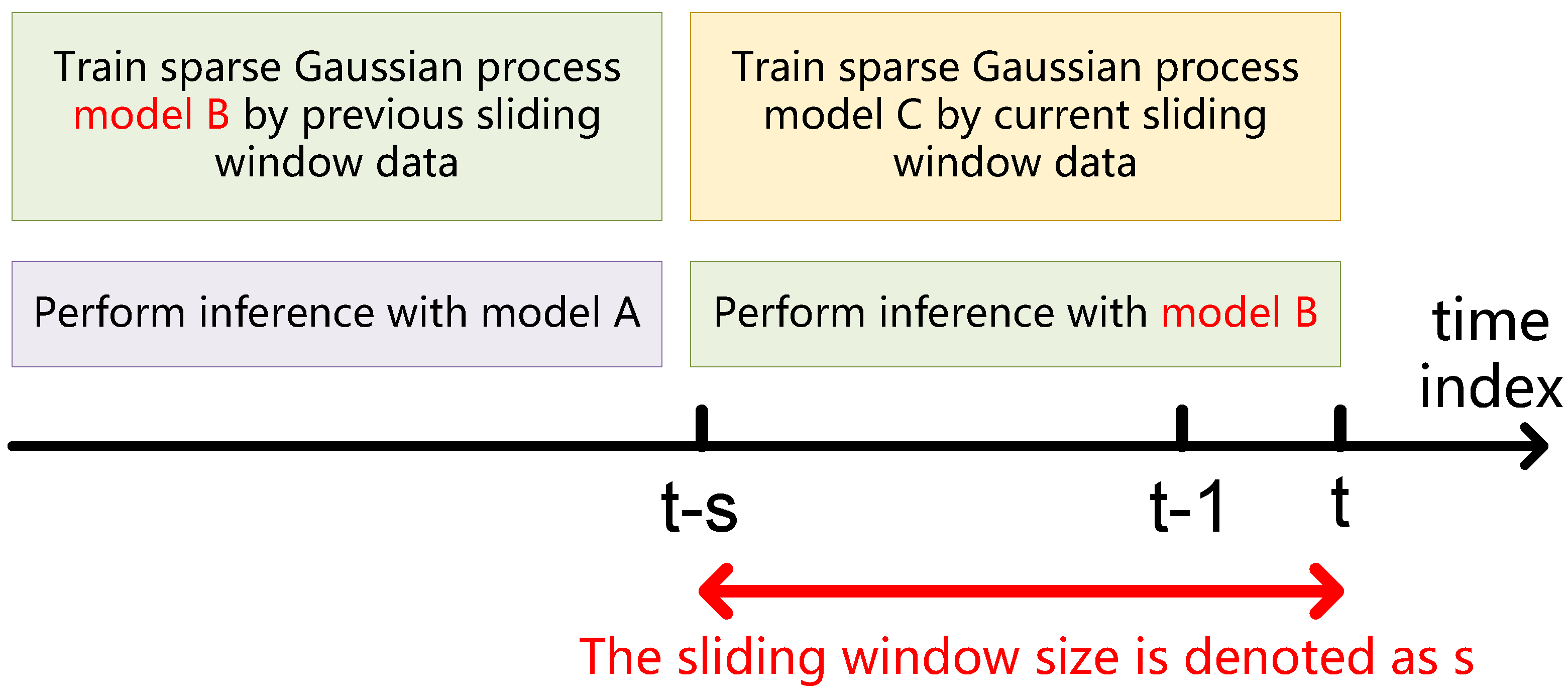

- We propose an incremental learning framework for the adaptive recognition of sensor attacks in intelligent driving that adapts to dynamically changing driving environments. The approach combines sliding-window techniques, sparsification, and Gaussian Processes: as the sliding window advances, the Gaussian Process model is updated continuously, which enables incremental learning. Compared with previous methods, our framework achieves 100% accuracy and a 0% false positive rate in the localization system and improves accuracy in the detection system by an average of 3.43% across various driving scenarios.
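The recognition idea in these two contributions (predict a feature with a Gaussian Process, flag observations that fall outside the predictive interval, and keep the model current with a sliding window) can be illustrated with a minimal sketch. It assumes a single scalar feature indexed by time, an RBF kernel with fixed, hand-picked hyperparameters, and an interval of `k` predictive standard deviations. The names `SlidingWindowGP` and `rbf_kernel` and all parameter values are hypothetical. The sketch uses an exact GP over a bounded window in place of the sparse approximation the framework describes, and it is not the authors' implementation.

```python
import numpy as np


def rbf_kernel(a, b, length_scale, variance):
    """Squared-exponential (RBF) kernel matrix between 1-D input arrays a and b."""
    diff = a[:, None] - b[None, :]
    return variance * np.exp(-0.5 * (diff / length_scale) ** 2)


class SlidingWindowGP:
    """Hypothetical recognizer: exact GP regression on the last `window` samples."""

    def __init__(self, window=20, length_scale=5.0, variance=1.0, noise=0.01, k=3.0):
        self.window = window
        self.length_scale = length_scale
        self.variance = variance  # signal variance of the RBF kernel
        self.noise = noise        # observation-noise variance
        self.k = k                # interval half-width in predictive standard deviations
        self.xs, self.ys = [], []

    def predict(self, x_star):
        """Predictive mean and standard deviation of a noisy observation at x_star."""
        X = np.asarray(self.xs, dtype=float)
        y = np.asarray(self.ys, dtype=float)
        y_mean = y.mean()  # center targets so a zero-mean prior is reasonable
        K = rbf_kernel(X, X, self.length_scale, self.variance) + self.noise * np.eye(len(X))
        k_star = rbf_kernel(X, np.array([x_star]), self.length_scale, self.variance)[:, 0]
        L = np.linalg.cholesky(K)
        alpha = np.linalg.solve(L.T, np.linalg.solve(L, y - y_mean))
        v = np.linalg.solve(L, k_star)
        mean = y_mean + k_star @ alpha
        var = self.variance + self.noise - v @ v
        return mean, np.sqrt(max(var, 0.0))

    def step(self, x, y):
        """Test one new sample against the GP interval, then slide the window.

        Returns True if the sample falls outside mean +/- k * std.
        """
        flagged = False
        if len(self.xs) >= 2:
            mean, std = self.predict(x)
            flagged = bool(abs(y - mean) > self.k * std)
        if not flagged:  # assumption: flagged samples are kept out of the training window
            self.xs.append(x)
            self.ys.append(y)
            if len(self.xs) > self.window:  # drop the oldest sample
                self.xs.pop(0)
                self.ys.pop(0)
        return flagged


# Usage: a smooth feature stream with one injected anomaly at t = 30.
t = np.arange(60)
feature = np.sin(0.2 * t)
feature[30] += 3.0
detector = SlidingWindowGP()
flags = [int(i) for i in t if detector.step(float(i), float(feature[i]))]
print(flags)
```

Bounding the window keeps each update at a fixed cost (one Cholesky factorization of a `window`×`window` matrix), which is the same concern that motivates sparsification. In practice the hyperparameters would be learned rather than fixed, for example with a GP library such as GPflow.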

2. Related Works

2.1. LiDAR Sensor Attack

- LiDAR replay attack: Attackers record LiDAR data in one environment and replay it under different circumstances. This can cause the LiDAR-based perception system to misjudge the current state of the environment, for example by treating a safe area as obstructed or, conversely, a hazardous area as safe. Such attacks pose a direct threat to the safe operation of intelligent driving systems [3].

- LiDAR spoofing attack: Attackers send forged signals to the LiDAR sensor, causing the perception system to produce incorrect environmental data. These attacks can lead to navigation errors and may prevent the intelligent driving system from correctly identifying other vehicles, pedestrians, or obstacles on the road, potentially causing severe traffic accidents.
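To make the two threat models concrete, the toy simulation below models only their effect on a stream of point-cloud frames. Each frame is assumed to be an N×3 NumPy array of (x, y, z) points. The function names, the frame format, and the Gaussian cluster used for the forged returns are illustrative assumptions. The sketch does not model the physical signal injection of a real attack.

```python
import numpy as np


def replay_attack(frames, recorded, start):
    """Toy replay attack (hypothetical): from frame `start` on, replace live
    scans with scans that were recorded earlier in a different setting."""
    attacked = [f.copy() for f in frames]
    for i, rec in enumerate(recorded):
        if start + i < len(attacked):  # ignore recorded scans past the stream end
            attacked[start + i] = rec.copy()
    return attacked


def spoofing_attack(frame, center, n_points=50, spread=0.2, seed=0):
    """Toy spoofing attack (hypothetical): append a cluster of forged returns
    around `center`, so that a detector may report a phantom obstacle."""
    rng = np.random.default_rng(seed)
    fake = np.asarray(center, dtype=float) + rng.normal(scale=spread, size=(n_points, 3))
    return np.vstack([frame, fake])


# Usage: five live frames; the attacker replays two recorded frames from index 3.
frames = [np.full((100, 3), float(i)) for i in range(5)]
recorded = [np.full((80, 3), -1.0) for _ in range(2)]
attacked = replay_attack(frames, recorded, start=3)
spoofed = spoofing_attack(frames[0], center=(10.0, 0.0, 0.0))
print(attacked[3].shape, spoofed.shape)
```

A replayed frame is internally consistent, which is why a per-frame check may miss it. Comparing frames against a model of how the scene should evolve over time is the kind of check the framework's system models target.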

2.2. Gaussian Process

3. Problem Statement

4. Proposed Framework

4.1. Feature Extraction Using System Model

4.2. Prediction Using Gaussian Process

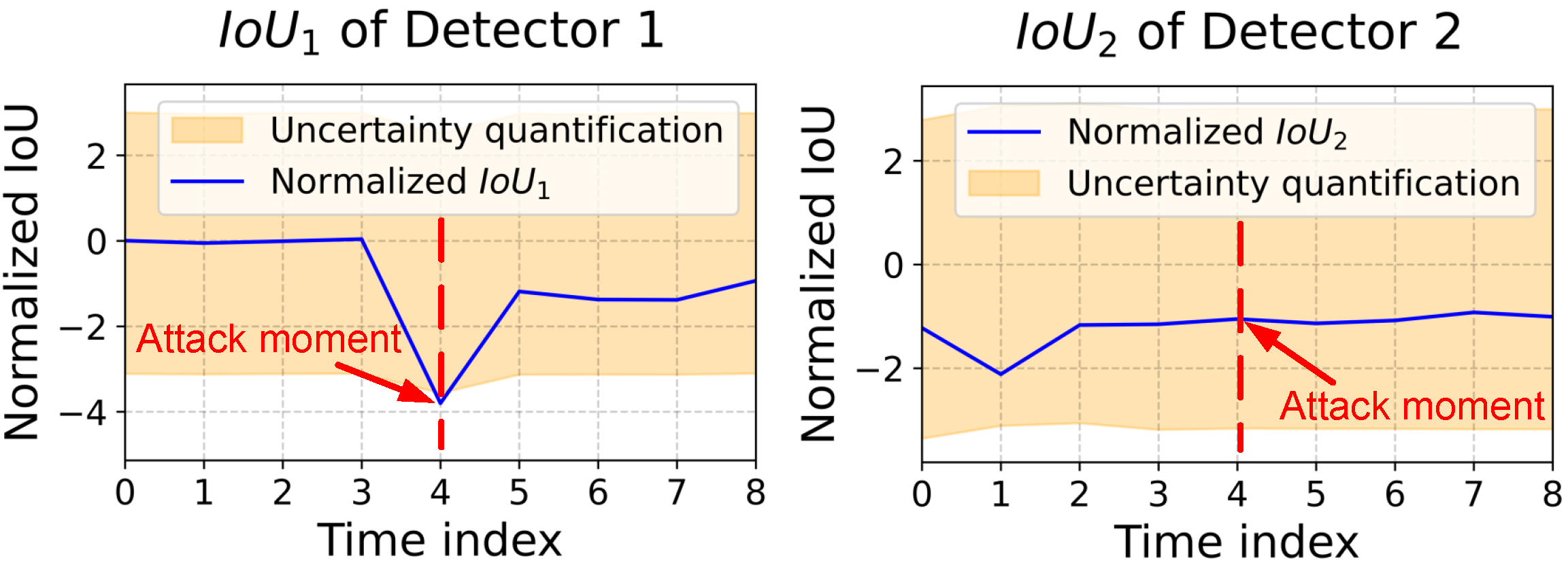

4.3. Recognition Using Uncertainty Quantification

5. Experimental Results

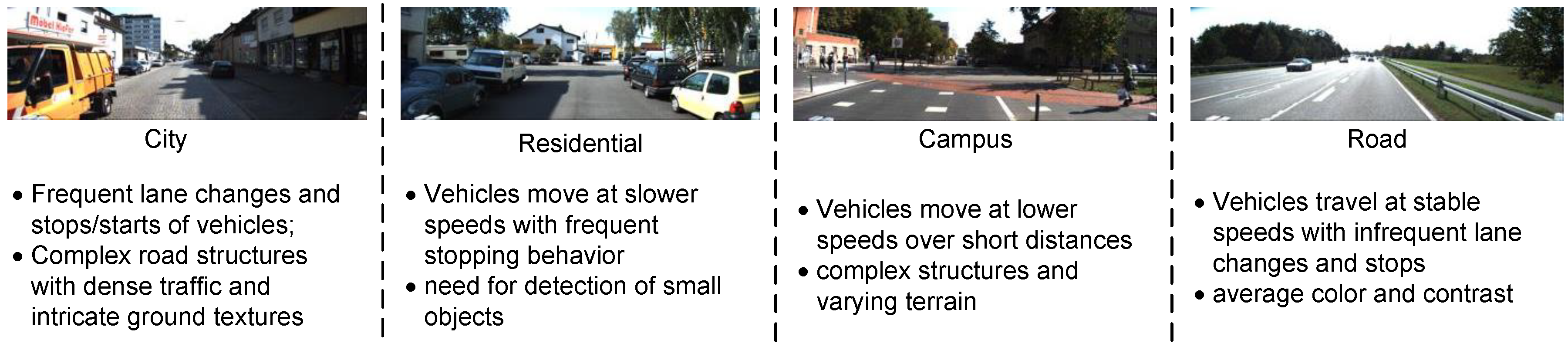

5.1. Experimental Setup

5.2. Discussion and Analysis

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| GP | Gaussian Process |
| LRA | LiDAR replay attack |
| LSA | LiDAR spoofing attack |
| OACS | Optimization-based attack against control systems |
| MDLAD | Multi-modal deep learning for vehicle sensor data abstraction and attack detection |
| CUSUM | Cumulative sum |

References

- Liu, J.; Park, J.M. “Seeing is not always believing”: Detecting perception error attacks against autonomous vehicles. IEEE Trans. Dependable Secur. Comput. 2021, 18, 2209–2223.

- Gualandi, G.; Maggio, M.; Papadopoulos, A.V. Optimization-based attack against control systems with CUSUM-based anomaly detection. In Proceedings of the 2022 30th Mediterranean Conference on Control and Automation (MED), Vouliagmeni, Greece, 28 June–1 July 2022; pp. 896–901.

- Bendiab, G.; Hameurlaine, A.; Germanos, G.; Kolokotronis, N.; Shiaeles, S. Autonomous vehicles security: Challenges and solutions using blockchain and artificial intelligence. IEEE Trans. Intell. Transp. Syst. 2023, 24, 3614–3637.

- Kim, K.; Kim, J.S.; Jeong, S.; Park, J.H.; Kim, H.K. Cybersecurity for autonomous vehicles: Review of attacks and defense. Comput. Secur. 2021, 103, 102150.

- Shin, J.; Baek, Y.; Eun, Y.; Son, S.H. Intelligent sensor attack detection and recognition for automotive cyber-physical systems. In Proceedings of the 2017 IEEE Symposium Series on Computational Intelligence (SSCI), Honolulu, HI, USA, 27 November–1 December 2017; pp. 1–8.

- Zhang, J.; Pan, L.; Han, Q.; Chen, C.; Wen, S.; Xiang, Y. Deep learning based attack detection for cyber-physical system cybersecurity: A survey. IEEE/CAA J. Autom. Sin. 2021, 9, 377–391.

- Kumar, G.; Kumar, K.; Sachdeva, M. The use of artificial intelligence based techniques for intrusion detection: A review. Artif. Intell. Rev. 2010, 34, 369–387.

- Seeger, M. Gaussian processes for machine learning. Int. J. Neural Syst. 2004, 14, 69–106.

- Keipour, A.; Mousaei, M.; Scherer, S. Automatic real-time anomaly detection for autonomous aerial vehicles. In Proceedings of the 2019 International Conference on Robotics and Automation (ICRA), Montreal, QC, Canada, 20–24 May 2019; IEEE: Piscataway, NJ, USA, 2019; pp. 5679–5685.

- Girdhar, M.; Hong, J.; Moore, J. Cybersecurity of autonomous vehicles: A systematic literature review of adversarial attacks and defense models. IEEE Open J. Veh. Technol. 2023, 4, 417–437.

- Cao, Y.; Xiao, C.; Cyr, B.; Zhou, Y.; Park, W.; Rampazzi, S.; Chen, Q.A.; Fu, K.; Mao, Z.M. Adversarial sensor attack on lidar-based perception in autonomous driving. In Proceedings of the 2019 ACM SIGSAC Conference on Computer and Communications Security, London, UK, 11–15 November 2019; pp. 2267–2281.

- Kumar, S.; Sharma, P.; Pal, N. Object tracking and counting in a zone using YOLOv4, DeepSORT and TensorFlow. In Proceedings of the 2021 International Conference on Artificial Intelligence and Smart Systems (ICAIS), Coimbatore, India, 25–27 March 2021; IEEE: Piscataway, NJ, USA, 2021; pp. 1017–1022.

- Cui, Y.; Osaki, S.; Matsubara, T. Reinforcement learning boat autopilot: A sample-efficient and model predictive control based approach. In Proceedings of the 2019 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Macau, China, 3–8 November 2019; pp. 2868–2875.

- Snelson, E.; Ghahramani, Z. Sparse Gaussian processes using pseudo-inputs. In Proceedings of the Advances in Neural Information Processing Systems, Vancouver, BC, Canada, 5–8 December 2005; Volume 18.

- Geiger, A.; Lenz, P.; Urtasun, R. Are we ready for autonomous driving? The KITTI vision benchmark suite. In Proceedings of the 2012 IEEE Conference on Computer Vision and Pattern Recognition, Providence, RI, USA, 16–21 June 2012; IEEE: Piscataway, NJ, USA, 2012; pp. 3354–3361.

- Hallyburton, R.S.; Liu, Y.; Cao, Y.; Mao, Z.M.; Pajic, M. Security analysis of Camera-LiDAR fusion against Black-Box attacks on autonomous vehicles. In Proceedings of the 31st USENIX Security Symposium (USENIX Security 22), Boston, MA, USA, 10–12 August 2022.

- Matthews, A.G.d.; Wilk, M.v.; Nickson, T.; Fujii, K.; Boukouvalas, A.; León-Villagrá, P.; Ghahramani, Z.; Hensman, J. GPflow: A Gaussian process library using TensorFlow. J. Mach. Learn. Res. 2017, 18, 1–6.

| Time Index | | | | | | | | |
|---|---|---|---|---|---|---|---|---|
| 200 | 0.27 | 0.63 | 0.34 | 0.30 | −0.04 | [0.32, 2.71] | [−0.21, 2.14] | [−0.36, 0.45] |
| 201 | 0.29 | 0.63 | 0.34 | 0.31 | −0.03 | [0.17, 2.56] | [−0.31, 2.02] | [−0.35, 0.46] |
| 202 | 0.33 | 0.70 | 0.34 | 0.36 | −0.03 | [0.37, 2.70] | [−0.18, 2.12] | [−0.35, 0.46] |
| 203 | 0.31 | 0.64 | −0.54 | 0.34 | −0.01 | [0.36, 2.70] | [−0.17, 2.10] | [−0.34, 0.47] |
| 204 | 0.32 | 0.63 | 0.37 | 0.39 | −0.01 | [0.20, 2.55] | [−0.28, 1.97] | [−0.34, 0.48] |

| Time Index | | | | | | Result |
|---|---|---|---|---|---|---|
| 4 | 39 | −3.80 | −0.95 | [−3.56, 2.50] | [−4.34, 4.25] | LiDAR attack |
| | 28 | 0.16 | −1.43 | [−3.26, 4.28] | [−4.36, 4.56] | |
| | 55 | 0.72 | 0.26 | [−4.49, 4.77] | [−4.22, 3.06] | |
| | 43 | −0.43 | 0.42 | [−2.94, 4.21] | [−4.24, 4.16] | |
| | 40 | 0.46 | 0.20 | [−4.48, 4.07] | [−4.63, 3.83] | |
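The decision shown in the table at time index 4 can be reproduced with a simple interval check. This sketch assumes that each row's first value is compared with the first interval and its second value with the second interval, and that the number in the second column identifies a tracked object. That reading is consistent with the rows shown: only the row labeled 39 has a value outside its interval (−3.80 < −3.56), and only that time index is labeled "LiDAR attack". The helper names and the "normal" label are hypothetical.

```python
# Values transcribed from the table at time index 4.
# Assumed layout per row: (value_1, value_2, interval_1, interval_2).
ROWS = {
    39: (-3.80, -0.95, (-3.56, 2.50), (-4.34, 4.25)),
    28: (0.16, -1.43, (-3.26, 4.28), (-4.36, 4.56)),
    55: (0.72, 0.26, (-4.49, 4.77), (-4.22, 3.06)),
    43: (-0.43, 0.42, (-2.94, 4.21), (-4.24, 4.16)),
    40: (0.46, 0.20, (-4.48, 4.07), (-4.63, 3.83)),
}


def outside(value, interval):
    """True if value lies outside the closed interval [lo, hi]."""
    lo, hi = interval
    return value < lo or value > hi


def flagged_objects(rows):
    """Ids of rows whose value falls outside the corresponding predicted interval."""
    return [oid for oid, (v1, v2, ci1, ci2) in rows.items()
            if outside(v1, ci1) or outside(v2, ci2)]


def time_step_result(rows):
    """Label the whole time step as attacked if any row is flagged."""
    return "LiDAR attack" if flagged_objects(rows) else "normal"


print(flagged_objects(ROWS))
print(time_step_result(ROWS))
```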


© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Miao, Z.; Shao, C.; Li, H.; Cui, Y. Incremental Learning for LiDAR Attack Recognition Framework in Intelligent Driving Using Gaussian Processes. World Electr. Veh. J. 2024, 15, 362. https://doi.org/10.3390/wevj15080362
