Robust 3D Object Detection in Complex Traffic via Unified Feature Alignment in Bird’s Eye View

Abstract

1. Introduction

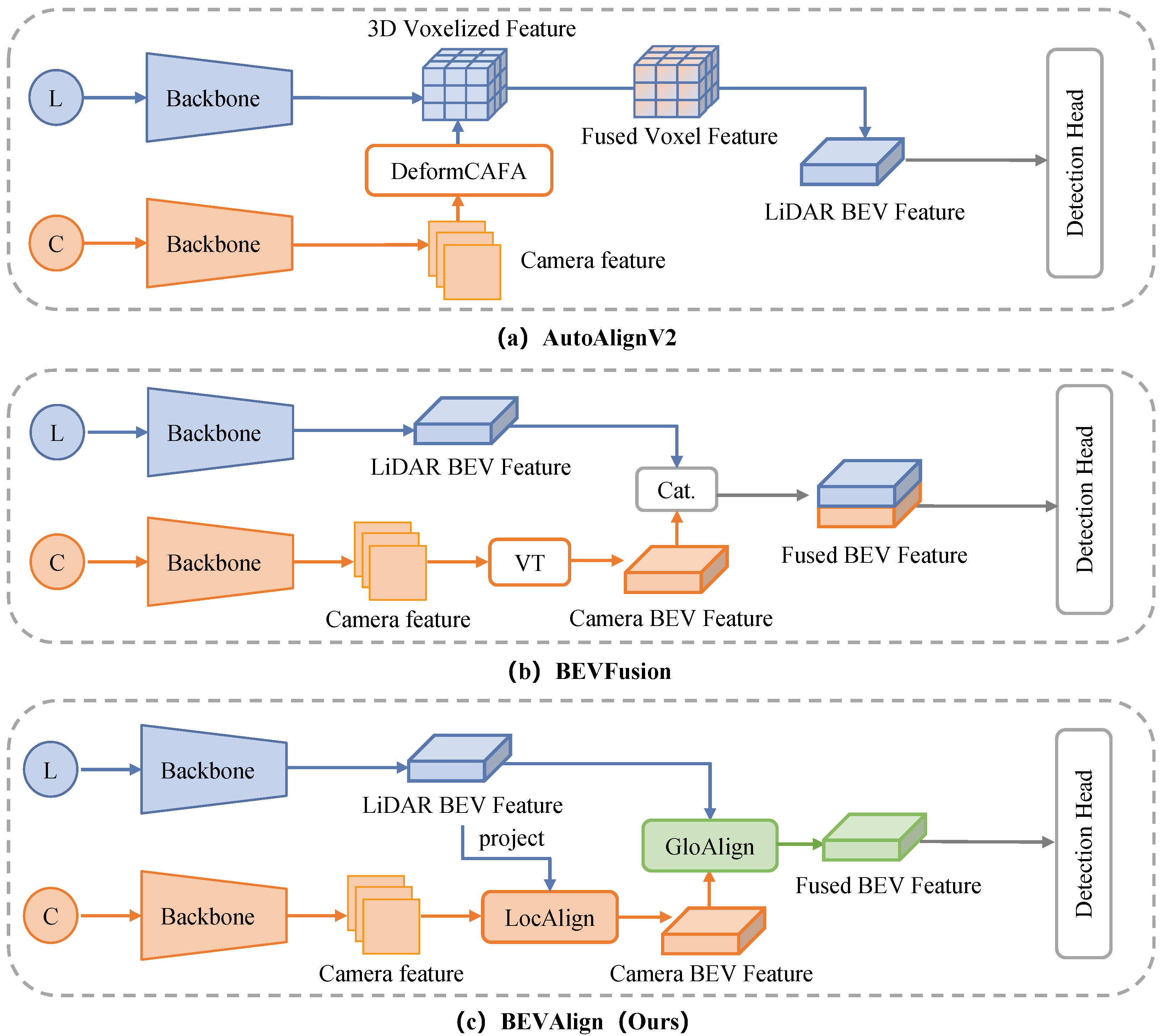

- We propose BEVAlign, a unified feature alignment framework that fuses LiDAR and camera data in BEV space to mitigate cross-modal misalignment.

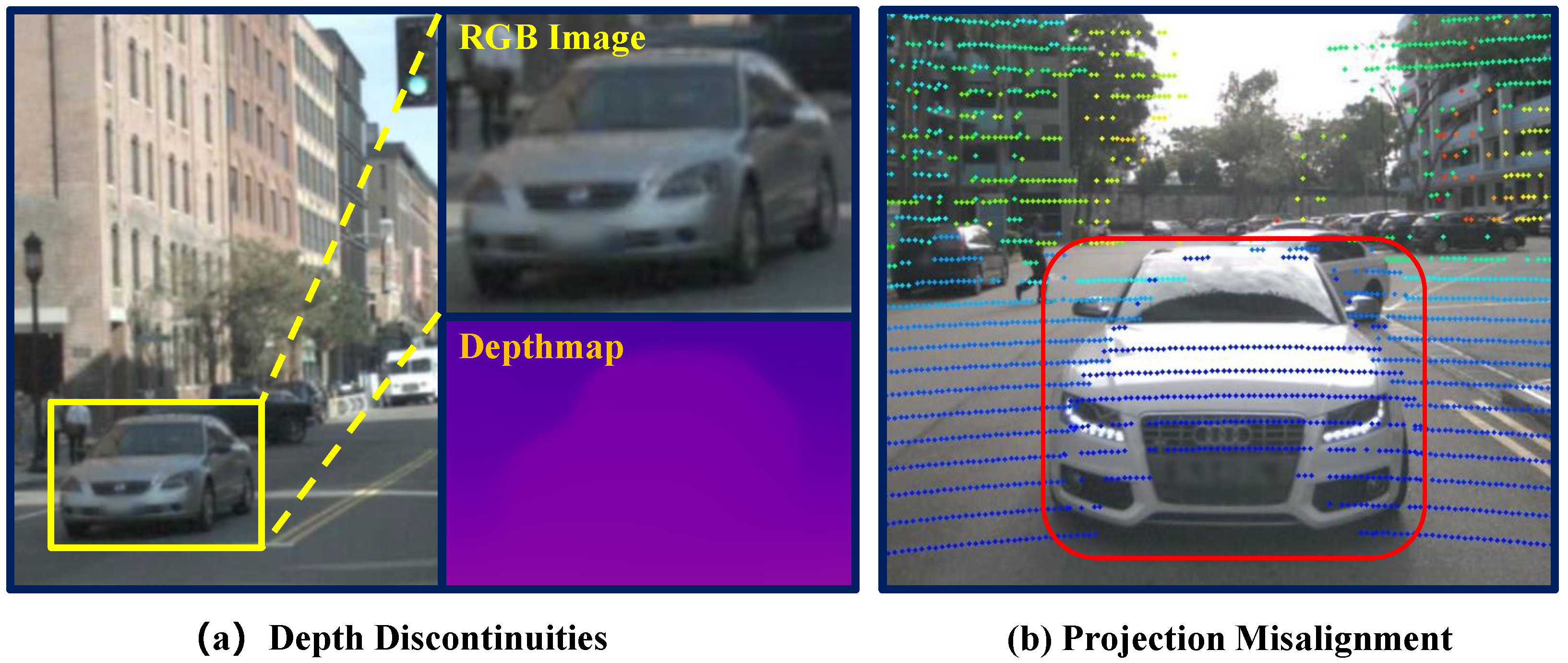

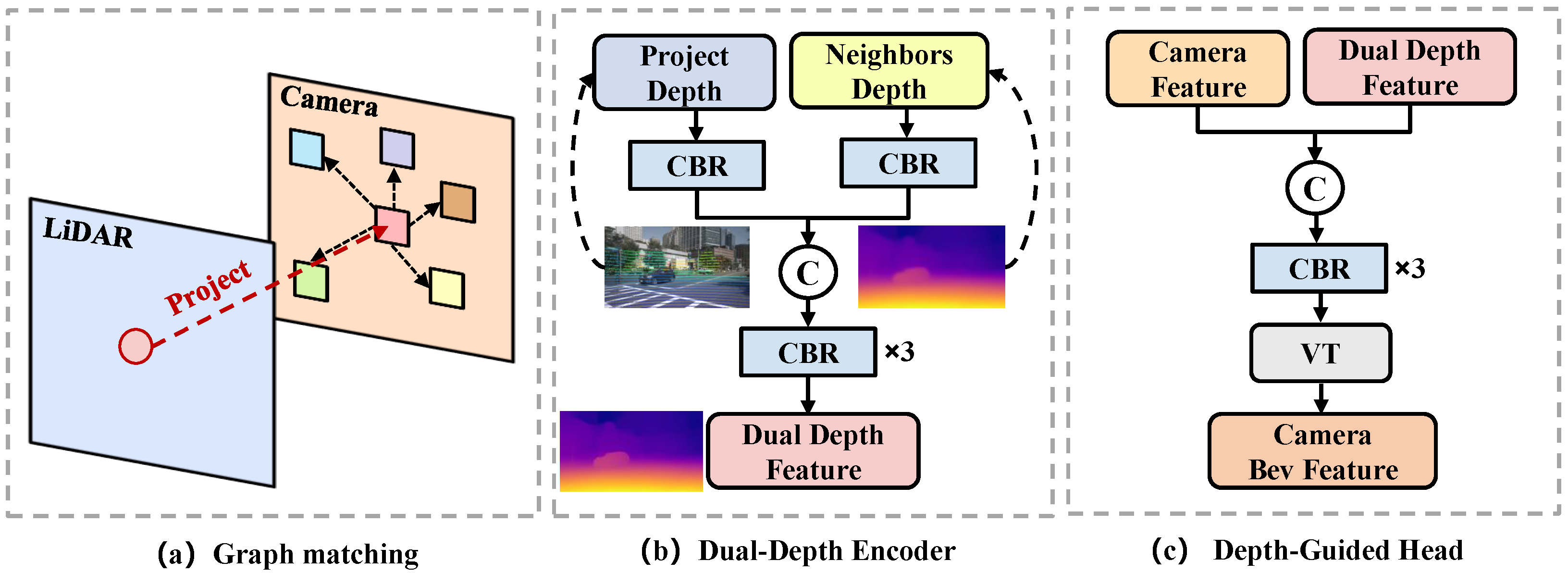

- We introduce a Local Alignment (LA) module that uses LiDAR-projected depth and graph-based neighbor depth to correct local errors in the camera-to-BEV transform.

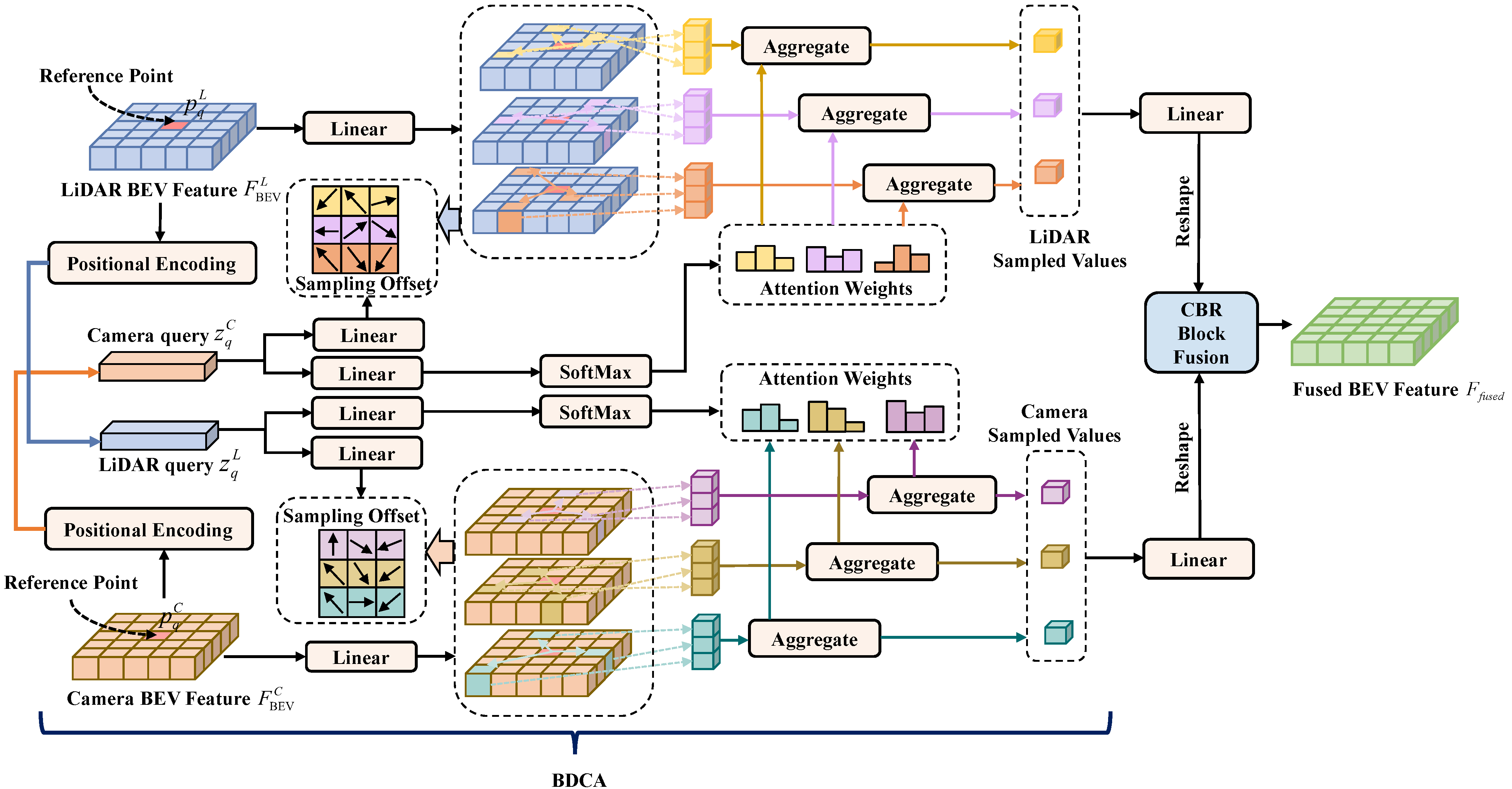

- We propose a Global Alignment (GA) module that employs bidirectional deformable cross-attention (BDCA) to align LiDAR and camera BEV features, improving their semantic and geometric consistency. The aligned features are then fused by CBR (Convolution–Batch Normalization–ReLU) blocks to produce a unified BEV representation.

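- Experiments on the nuScenes benchmark show that BEVAlign reaches 71.7 mAP and 75.3 NDS, the highest among the compared LiDAR-only and LiDAR–camera methods, with particularly strong results on small and partially occluded objects (e.g., 60.3 AP on bicycles versus 54.5 for the next-best method).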
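The LA contribution above depends on depth obtained by projecting LiDAR points into the camera image. The sketch below shows that step under a standard pinhole model, assuming h·[u, v, 1]ᵀ = K(R·p + t), where K is the intrinsic matrix, (R, t) are the extrinsic parameters, p is a 3D LiDAR point, and the scaling factor h equals the camera-frame depth. These match the projection symbols listed in the Abbreviations, but the exact form used by BEVAlign is not given here; the function names and the nearest-return rule for pixel collisions are illustrative assumptions.

```python
import math
from typing import List, Optional, Sequence, Tuple

Matrix3 = Sequence[Sequence[float]]
DepthMap = List[List[Optional[float]]]


def matvec(m: Matrix3, v: Sequence[float]) -> Tuple[float, ...]:
    """Multiply a 3x3 matrix by a 3-vector."""
    return tuple(sum(m[i][j] * v[j] for j in range(3)) for i in range(3))


def project_lidar_to_depth(points: Sequence[Sequence[float]], K: Matrix3,
                           R: Matrix3, t: Sequence[float],
                           width: int, height: int) -> DepthMap:
    """Rasterize LiDAR points into a sparse depth map (None = no LiDAR return).

    Hypothetical helper: assumes h * [u, v, 1]^T = K (R p + t), where h equals
    the camera-frame depth z when the last row of K is [0, 0, 1].
    """
    depth: DepthMap = [[None] * width for _ in range(height)]
    for p in points:
        # Extrinsic step: LiDAR frame -> camera frame.
        x, y, z = (a + b for a, b in zip(matvec(R, p), t))
        if z <= 1e-6:
            continue  # point lies behind (or on) the camera plane
        # Intrinsic step: homogeneous pixel coordinates scaled by h.
        uh, vh, h = matvec(K, (x, y, z))
        u, v = math.floor(uh / h), math.floor(vh / h)
        if 0 <= u < width and 0 <= v < height:
            # Keep the nearest return when several points fall in one pixel.
            if depth[v][u] is None or z < depth[v][u]:
                depth[v][u] = z
    return depth
```

For example, with K = [[100, 0, 2], [0, 100, 2], [0, 0, 1]], an identity R, and a zero t, the points (0, 0, 10) and (0, 0, 5) both land on pixel (2, 2), and the map keeps the nearer depth, 5.0.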

2. Related Work

2.1. LiDAR-Driven Approaches for 3D Object Detection

2.2. Image-Centric Methods for 3D Object Detection

2.3. Multi-Sensor Fusion for 3D Object Detection

3. Methods

3.1. Overview

3.2. Depth-Guided Local Alignment
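The Introduction states that LA combines LiDAR-projected depth with graph-based neighbor depth. One common way to build such a neighbor-depth estimate is a k-nearest-neighbor graph over the sparse LiDAR pixels, with the neighbors' depths combined by inverse-distance weighting. The sketch below assumes exactly that; the weighting scheme, the `eps` guard, and the function names are hypothetical choices for illustration, not BEVAlign's published formulation. The parameter `k` plays the role of the number of graph neighbors, which appears to correspond to the 0/5/8/12 sweep in the ablation results.

```python
import heapq
import math
from typing import List, Optional, Sequence, Tuple

Sample = Tuple[float, float, float]  # (u, v, depth) of one LiDAR pixel


def knn_neighbors(query: Tuple[float, float], samples: Sequence[Sample],
                  k: int) -> List[Sample]:
    """Return the k samples closest to `query` in pixel space (its graph edges)."""
    return heapq.nsmallest(
        k, samples, key=lambda s: math.hypot(s[0] - query[0], s[1] - query[1]))


def neighbor_depth(query: Tuple[float, float], samples: Sequence[Sample],
                   k: int, eps: float = 1e-6) -> Optional[float]:
    """Inverse-distance-weighted depth over the k nearest LiDAR pixels.

    Hypothetical helper; returns None when k == 0 or there are no samples,
    i.e. the neighbor term is switched off.
    """
    if k <= 0 or not samples:
        return None
    weight_sum, depth_sum = 0.0, 0.0
    for u, v, d in knn_neighbors(query, samples, k):
        # Closer neighbors get larger weights; eps avoids division by zero.
        w = 1.0 / (math.hypot(u - query[0], v - query[1]) + eps)
        weight_sum += w
        depth_sum += w * d
    return depth_sum / weight_sum
```

With samples (0, 0, 10), (2, 0, 20), and (10, 10, 100), a query at pixel (1, 0) with k = 2 averages the two equidistant neighbors to about 15.0, while k = 0 returns None. How such an estimate would be combined with the camera branch's predicted depth is not specified in this section.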

3.3. Global Alignment with BDCA
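The GA contribution in the Introduction aligns the LiDAR and camera BEV maps with bidirectional deformable cross-attention. The Abbreviations list the usual ingredients of deformable attention (a query feature, its reference point, M heads, K sampling locations per head, learned offsets, and attention weights), so the sketch below follows standard single-scale deformable attention and applies it in both directions. Assumptions and simplifications: the value and output projections are replaced by per-head channel slicing, there is no residual connection, the reference point is the query's own BEV cell, the parameter names (`W_off`, `W_att`) are hypothetical, and the subsequent CBR fusion is not shown. It illustrates the mechanism, not the authors' implementation.

```python
import math
from typing import List, Sequence, Tuple

Vec = List[float]
FeatureMap = List[List[Vec]]  # BEV grid indexed as F[y][x] -> C-dim vector
Params = Tuple[Sequence[Sequence[float]], Sequence[Sequence[float]]]  # (W_off, W_att)


def linear(W: Sequence[Sequence[float]], x: Sequence[float]) -> Vec:
    """Bias-free dense layer: returns W @ x."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]


def softmax(z: Sequence[float]) -> Vec:
    m = max(z)
    e = [math.exp(v - m) for v in z]
    s = sum(e)
    return [v / s for v in e]


def bilinear(F: FeatureMap, x: float, y: float) -> Vec:
    """Bilinearly sample F at continuous (x, y); taps outside the grid count as zero."""
    H, W, C = len(F), len(F[0]), len(F[0][0])
    x0, y0 = math.floor(x), math.floor(y)
    out = [0.0] * C
    for yy, wy in ((y0, 1.0 - (y - y0)), (y0 + 1, y - y0)):
        for xx, wx in ((x0, 1.0 - (x - x0)), (x0 + 1, x - x0)):
            if 0 <= yy < H and 0 <= xx < W and wx * wy > 0.0:
                for c in range(C):
                    out[c] += wx * wy * F[yy][xx][c]
    return out


def deform_attn(q: Vec, ref: Tuple[float, float], F: FeatureMap,
                params: Params, M: int, K: int) -> Vec:
    """Single-scale deformable attention for one query.

    Each of the M heads predicts K offsets and K attention weights from the
    query, samples F at ref + offset, and sums over its own C/M channel slice.
    """
    W_off, W_att = params
    d = len(F[0][0]) // M
    offsets = linear(W_off, q)  # M*K*2 values: (dx, dy) per head and point
    logits = linear(W_att, q)   # M*K values
    out: Vec = []
    for m in range(M):
        a = softmax(logits[m * K:(m + 1) * K])  # weights sum to 1 per head
        head = [0.0] * d
        for k in range(K):
            i = 2 * (m * K + k)
            s = bilinear(F, ref[0] + offsets[i], ref[1] + offsets[i + 1])
            for c in range(d):
                head[c] += a[k] * s[m * d + c]
        out.extend(head)
    return out


def bdca(F_lidar: FeatureMap, F_cam: FeatureMap,
         p_l2c: Params, p_c2l: Params, M: int, K: int) -> FeatureMap:
    """Bidirectional pass over a shared BEV grid.

    LiDAR queries attend to the camera map and camera queries attend to the
    LiDAR map, each direction with its own parameters. Returns the per-cell
    concatenation [LiDAR->camera | camera->LiDAR].
    """
    fused: FeatureMap = []
    for y in range(len(F_lidar)):
        row = []
        for x in range(len(F_lidar[0])):
            l2c = deform_attn(F_lidar[y][x], (x, y), F_cam, p_l2c, M, K)
            c2l = deform_attn(F_cam[y][x], (x, y), F_lidar, p_c2l, M, K)
            row.append(l2c + c2l)
        fused.append(row)
    return fused
```

Setting both parameter sets to zero makes every offset zero and every head's weights uniform, so each direction simply reads the other modality's feature at the same cell; in a trained model these matrices would be learned. The concatenated output plays the role of the fused feature that the GA module passes to its CBR blocks.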

4. Experiments

4.1. Dataset and Evaluation Metrics

4.2. Implementation Details

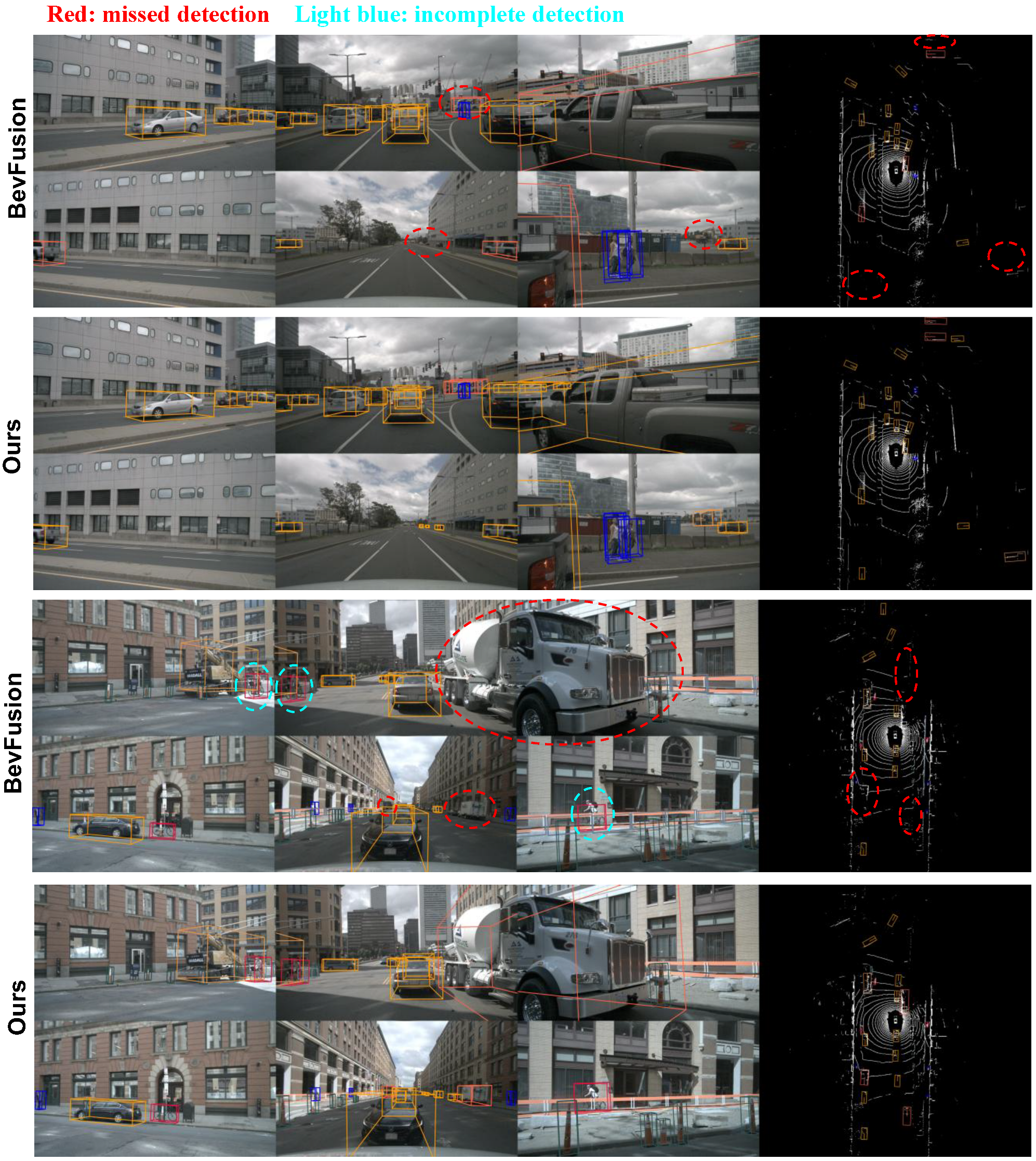

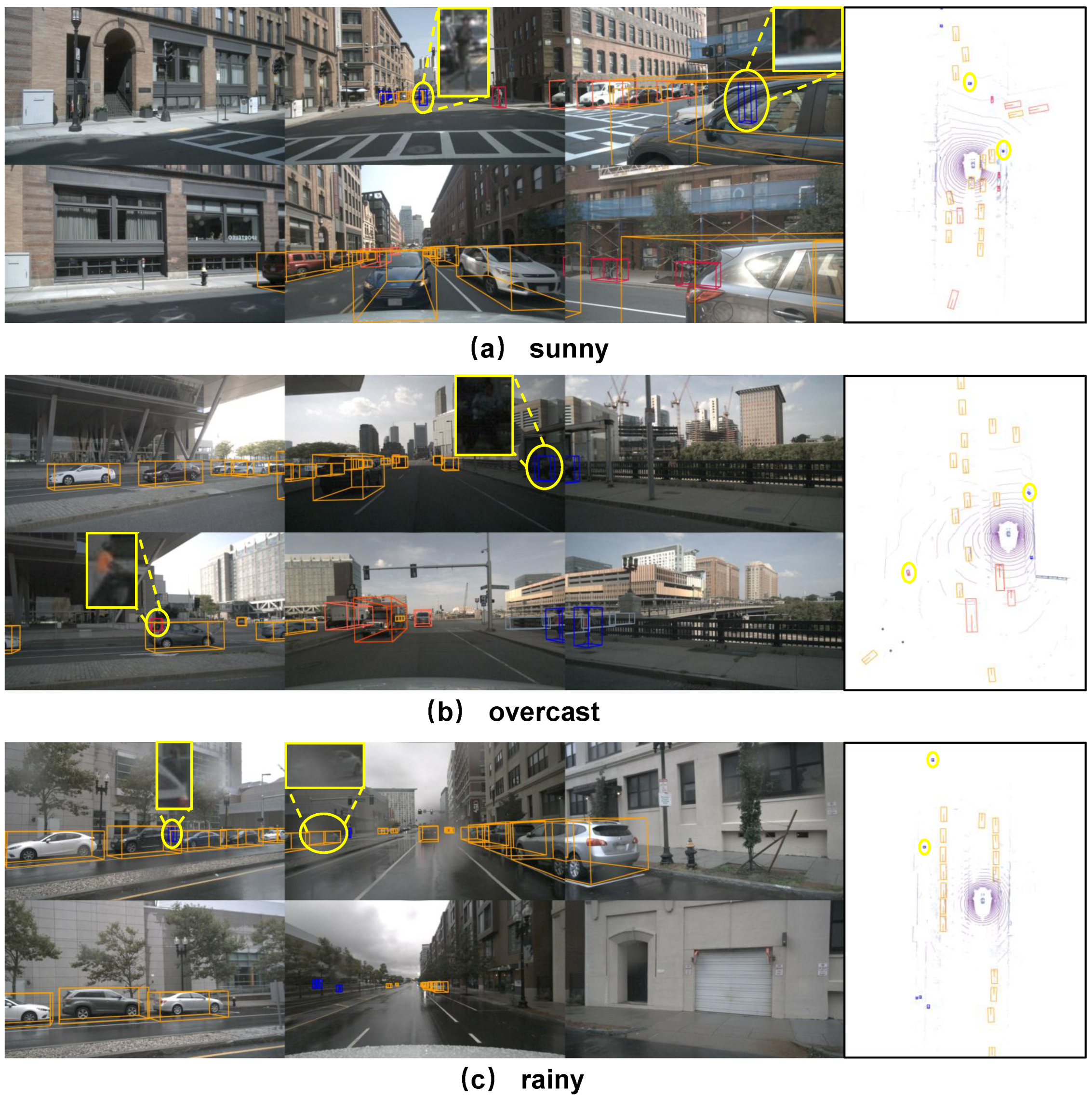

4.3. Comparison Results

4.4. Ablation Studies

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation/Symbol | Definition |
|---|---|
| 3D | Three-Dimensional |
| BEV | Bird’s Eye View |
| LA | Local Alignment |
| GA | Global Alignment |
| BDCA | Bidirectional Deformable Cross-Attention |
| CBR | Convolution–Batch Normalization–ReLU |
| DeformCAFA | Deformable Cross-Attention Feature Aggregation |
| VT | View Transformation |
| DDE | Dual-Depth Encoder |
| DGH | Depth-Guided Head |
| DA | Deformable Attention |
| PE | Positional Encoding |
| IoU | Intersection over Union |
| mAP | mean Average Precision |
| NDS | nuScenes Detection Score |
| FPS | Frames Per Second |
| | Intrinsic camera matrix |
| | Extrinsic camera parameters |
| | Image pixel coordinates |
| h | Scaling factor |
| | 3D LiDAR point coordinates |
| | Depth value in camera coordinates |
| | Reference point of the query |
| | Query feature vector |
| F | Input feature map for attention |
| M | Number of attention heads |
| K | Number of sampling locations per head |
| | Projection weights for head |
| | Attention weight assigned to each sampled location |
| | Learned offset applied to each sampled location |
| | Camera BEV feature |
| | LiDAR BEV feature |
| | Concatenated fused feature |
| | Refined fused feature after stacked CBR blocks |
| | Reference point of the LiDAR branch at a BEV location |
| | Reference point of the camera branch at a BEV location |
| | Query vector derived from the LiDAR BEV feature |
| | Query vector derived from the camera BEV feature |
| | Number of graph neighbors |

References

1. Song, Z.; Liu, L.; Jia, F.; Luo, Y.; Jia, C.; Zhang, G.; Yang, L.; Wang, L. Robustness-Aware 3D Object Detection in Autonomous Driving: A Review and Outlook. IEEE Trans. Intell. Transp. Syst. 2024, 25, 15407–15436.

2. Wang, L.; Zhang, X.; Song, Z.; Bi, J.; Zhang, G.; Wei, H.; Tang, L.; Yang, L.; Li, J.; Jia, C.; et al. Multi-Modal 3D Object Detection in Autonomous Driving: A Survey and Taxonomy. IEEE Trans. Intell. Veh. 2023, 8, 3781–3798.

3. Yan, J.; Liu, Y.; Sun, J.; Jia, F.; Li, S.; Wang, T.; Zhang, X. Cross Modal Transformer: Towards Fast and Robust 3D Object Detection. In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV), Paris, France, 2–6 October 2023; IEEE: New York, NY, USA, 2023; pp. 18268–18278.

4. Xie, Y.; Xu, C.; Rakotosaona, M.-J.; Rim, P.; Tombari, F.; Keutzer, K.; Tomizuka, M.; Zhan, W. SparseFusion: Fusing Multi-Modal Sparse Representations for Multi-Sensor 3D Object Detection. In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV), Paris, France, 2–6 October 2023; IEEE: New York, NY, USA, 2023; pp. 17591–17602.

5. Li, X.; Fan, B.; Tian, J.; Fan, H. GAFusion: Adaptive Fusing LiDAR and Camera with Multiple Guidance for 3D Object Detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 17–21 June 2024; IEEE: New York, NY, USA, 2024; pp. 21209–21218.

6. Wu, X.; Peng, L.; Yang, H.; Xie, L.; Huang, C.; Deng, C.; Liu, H.; Cai, D. Sparse Fuse Dense: Towards High Quality 3D Detection with Depth Completion. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), New Orleans, LA, USA, 19–24 June 2022; IEEE: New York, NY, USA, 2022; pp. 5418–5427.

7. Chen, Z.; Li, Z.; Zhang, S.; Fang, L.; Jiang, Q.; Zhao, F. Deformable Feature Aggregation for Dynamic Multi-Modal 3D Object Detection. In Proceedings of the European Conference on Computer Vision (ECCV), Tel Aviv, Israel, 23–27 October 2022; Springer: Cham, Switzerland, 2022; pp. 628–644.

8. Song, Y.; Wang, L. BiCo-Fusion: Bidirectional Complementary LiDAR–Camera Fusion for Semantic- and Spatial-Aware 3D Object Detection. IEEE Robot. Autom. Lett. 2025, 10, 1457–1464.

9. Liu, Z.; Tang, H.; Amini, A.; Yang, X.; Mao, H.; Rus, D.L.; Han, S. BEVFusion: Multi-Task Multi-Sensor Fusion with Unified Bird’s-Eye View Representation. In Proceedings of the IEEE International Conference on Robotics and Automation (ICRA), London, UK, 29 May–2 June 2023; IEEE: New York, NY, USA, 2023; pp. 2774–2781.

10. Philion, J.; Fidler, S. Lift, Splat, Shoot: Encoding Images from Arbitrary Camera Rigs by Implicitly Unprojecting to 3D. In Proceedings of the European Conference on Computer Vision (ECCV), Glasgow, UK, 23–28 August 2020; Springer: Cham, Switzerland, 2020; pp. 194–210.

11. Song, Z.; Jia, C.; Yang, L.; Wei, H.; Liu, L. GraphAlign++: An Accurate Feature Alignment by Graph Matching for Multi-Modal 3D Object Detection. IEEE Trans. Circuits Syst. Video Technol. 2023, 34, 2619–2632.

12. Cai, Q.; Pan, Y.; Yao, T.; Ngo, C.-W.; Mei, T. ObjectFusion: Multi-Modal 3D Object Detection with Object-Centric Fusion. In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV), Paris, France, 2–6 October 2023; IEEE: New York, NY, USA, 2023; pp. 18067–18076.

13. Caesar, H.; Bankiti, V.; Lang, A.H.; Vora, S.; Liong, V.E.; Xu, Q.; Krishnan, A.; Pan, Y.; Baldan, G.; Beijbom, O. nuScenes: A Multimodal Dataset for Autonomous Driving. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 14–19 June 2020; IEEE: New York, NY, USA, 2020; pp. 11621–11631.

14. Liu, Q.; Dong, Y.; Zhao, D.; Xiao, L.; Dai, B.; Min, C.; Zhang, J.; Nie, Y.; Lu, D. MT-SSD: Single-Stage 3D Object Detector Based on Magnification Transformation. IEEE Trans. Intell. Veh. 2024, 1–11.

15. Liu, H.; Ma, Y.; Wang, H.; Zhang, C.; Guo, Y. AnchorPoint: Query Design for Transformer-Based 3D Object Detection and Tracking. IEEE Trans. Intell. Transp. Syst. 2023, 24, 10988–11000.

16. He, X.; Wang, Z.; Lin, J.; Nai, K.; Yuan, J.; Li, Z. Do-SA&R: Distant Object Augmented Set Abstraction and Regression for Point-Based 3D Object Detection. IEEE Trans. Image Process. 2023, 32, 5852–5864.

17. Song, Z.; Wei, H.; Jia, C.; Xia, Y.; Li, X.; Zhang, C. VP-Net: Voxels as Points for 3-D Object Detection. IEEE Trans. Geosci. Remote Sens. 2023, 61, 1–12.

18. Mahmoud, A.; Hu, J.S.K.; Waslander, S.L. Dense Voxel Fusion for 3D Object Detection. In Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision (WACV), Waikoloa, HI, USA, 3–7 January 2023; IEEE: New York, NY, USA, 2023; pp. 663–672.

19. An, P.; Duan, Y.; Huang, Y.; Ma, J.; Chen, Y.; Wang, L.; Yang, Y.; Liu, Q. SP-Det: Leveraging Saliency Prediction for Voxel-Based 3D Object Detection in Sparse Point Cloud. IEEE Trans. Multimed. 2023, 26, 2795–2808.

20. Wu, H.; Wen, C.; Li, W.; Li, X.; Yang, R.; Wang, C. Transformation-Equivariant 3D Object Detection for Autonomous Driving. In Proceedings of the AAAI Conference on Artificial Intelligence (AAAI), Washington, DC, USA, 7–14 February 2023; AAAI Press: Palo Alto, CA, USA, 2023; pp. 2795–2802.

21. Tao, L.; Wang, H.; Chen, L.; Li, Y.; Cai, Y. Pillar3D-Former: A Pillar-Based 3-D Object Detection and Tracking Method for Autonomous Driving Scenes. IEEE Trans. Instrum. Meas. 2024, 73, 1–14.

22. Liu, A.; Yuan, L.; Chen, J. CSA-RCNN: Cascaded Self-Attention Networks for High-Quality 3-D Object Detection from LiDAR Point Clouds. IEEE Trans. Instrum. Meas. 2024, 73, 1–13.

23. Shi, S.; Jiang, L.; Deng, J.; Wang, Z.; Guo, C.; Shi, J.; Wang, X.; Li, H. PV-RCNN++: Point–Voxel Feature Set Abstraction with Local Vector Representation for 3D Object Detection. Int. J. Comput. Vis. 2023, 131, 531–551.

24. Yang, L.; Zhang, X.; Li, J.; Wang, L.; Zhu, M.; Zhang, C.; Liu, H. Mix-Teaching: A Simple, Unified and Effective Semi-Supervised Learning Framework for Monocular 3D Object Detection. IEEE Trans. Circuits Syst. Video Technol. 2023, 33, 6832–6844.

25. Yang, Z.; Yu, Z.; Choy, C.; Wang, R.; Anandkumar, A.; Alvarez, J.M. Improving Distant 3D Object Detection Using 2D Box Supervision. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 17–21 June 2024; IEEE: New York, NY, USA, 2024; pp. 14853–14863.

26. Tao, C.; Cao, J.; Wang, C.; Zhang, Z.; Gao, Z. Pseudo-mono for Monocular 3D Object Detection in Autonomous Driving. IEEE Trans. Circuits Syst. Video Technol. 2023, 33, 3962–3975.

27. Jiang, X.; Jin, S.; Lu, L.; Zhang, X.; Lu, S. Weakly Supervised Monocular 3D Detection with a Single-View Image. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 17–21 June 2024; IEEE: New York, NY, USA, 2024; pp. 10508–10518.

28. Chen, W.; Zhao, J.; Zhao, W.-L.; Wu, S.-Y. Shape-Aware Monocular 3D Object Detection. IEEE Trans. Intell. Transp. Syst. 2023, 24, 6416–6424.

29. Huang, C.; He, T.; Ren, H.; Wang, W.; Lin, B.; Cai, D. OBMO: One Bounding Box Multiple Objects for Monocular 3D Object Detection. IEEE Trans. Image Process. 2023, 32, 6570–6581.

30. Li, Y.; Bao, H.; Ge, Z.; Yang, J.; Sun, J.; Li, Z. BEVStereo: Enhancing Depth Estimation in Multi-View 3D Object Detection with Temporal Stereo. In Proceedings of the AAAI Conference on Artificial Intelligence (AAAI), Washington, DC, USA, 7–14 February 2023; AAAI Press: Palo Alto, CA, USA, 2023; pp. 1486–1494.

31. Wang, B.; Zheng, H.; Zhang, L.; Liu, N.; Anwer, R.M.; Cholakkal, H.; Zhao, Y.; Li, Z. BEVRefiner: Improving 3D Object Detection in Bird’s-Eye View via Dual Refinement. IEEE Trans. Intell. Transp. Syst. 2024, 25, 15094–15105.

32. Li, Z.; Lan, S.; Alvarez, J.M.; Wu, Z. BEVNeXt: Reviving Dense BEV Frameworks for 3D Object Detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 17–21 June 2024; IEEE: New York, NY, USA, 2024; pp. 20113–20123.

33. Wang, Y.; Chao, W.-L.; Garg, D.; Hariharan, B.; Campbell, M.; Weinberger, K.Q. Pseudo-LiDAR from Visual Depth Estimation: Bridging the Gap in 3D Object Detection for Autonomous Driving. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 15–20 June 2019; pp. 8445–8453.

34. Wang, Z.; Huang, Z.; Gao, Y.; Wang, N.; Liu, S. MV2DFusion: Leveraging Modality-Specific Object Semantics for Multi-Modal 3D Detection. IEEE Trans. Pattern Anal. Mach. Intell. 2025, 1–15.

35. Guo, K.; Ling, Q. PromptDet: A Lightweight 3D Object Detection Framework with LiDAR Prompts. In Proceedings of the AAAI Conference on Artificial Intelligence (AAAI), Philadelphia, PA, USA, 25 February–4 March 2025; AAAI Press: Palo Alto, CA, USA, 2025; pp. 3266–3274.

36. Liang, T.; Xie, H.; Yu, K.; Xia, Z.; Lin, Z.; Wang, Y.; Tang, T.; Wang, B.; Tang, Z. BEVFusion: A Simple and Robust LiDAR–Camera Fusion Framework. In Proceedings of the Advances in Neural Information Processing Systems (NeurIPS 2022), New Orleans, LA, USA, 28 November–9 December 2022; Curran Associates, Inc.: Red Hook, NY, USA, 2022; Volume 35, pp. 10421–10434.

37. Vora, S.; Lang, A.H.; Helou, B.; Beijbom, O. PointPainting: Sequential Fusion for 3D Object Detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 14–19 June 2020; IEEE: New York, NY, USA, 2020; pp. 4604–4612.

38. Chen, X.; Ma, H.; Wan, J.; Li, B.; Xia, T. Multi-View 3D Object Detection Network for Autonomous Driving. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; IEEE: New York, NY, USA, 2017; pp. 1907–1915.

39. Yan, Y.; Mao, Y.; Li, B. SECOND: Sparsely Embedded Convolutional Detection. Sensors 2018, 18, 3337.

40. Liu, Z.; Lin, Y.; Cao, Y.; Hu, H.; Wei, Y.; Zhang, Z.; Lin, S.; Guo, B. Swin Transformer: Hierarchical Vision Transformer Using Shifted Windows. In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV), Montreal, QC, Canada, 11–17 October 2021; IEEE: New York, NY, USA, 2021; pp. 10012–10022.

41. OD Team. OpenPCDet: An Open-Source Toolbox for 3D Object Detection from Point Clouds. Available online: https://github.com/open-mmlab/OpenPCDet (accessed on 28 September 2025).

42. Zhang, C.; Wang, H.; Cai, Y.; Chen, L.; Li, Y. TransFusion: Multi-Modal Robust Fusion for 3D Object Detection in Foggy Weather Based on Spatial Vision Transformer. IEEE Trans. Intell. Transp. Syst. 2024, 25, 10652–10666.

43. Yang, Z.; Chen, J.; Miao, Z.; Li, W.; Zhu, X.; Zhang, L. DeepInteraction: 3D Object Detection via Modality Interaction. In Proceedings of the Advances in Neural Information Processing Systems (NeurIPS 2022), New Orleans, LA, USA, 28 November–9 December 2022; Curran Associates, Inc.: Red Hook, NY, USA, 2022; Volume 35, pp. 1992–2005.

44. Chen, Y.; Li, Y.; Zhang, X.; Sun, J.; Jia, J. Focal Sparse Convolutional Networks for 3D Object Detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), New Orleans, LA, USA, 19–24 June 2022; IEEE: New York, NY, USA, 2022; pp. 5418–5427.

45. Chen, Y.; Liu, J.; Zhang, X.; Qi, X.; Jia, J. VoxelNeXt: Fully Sparse VoxelNet for 3D Object Detection and Tracking. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Vancouver, BC, Canada, 18–22 June 2023; IEEE: New York, NY, USA, 2023; pp. 21674–21683.

46. Yin, T.; Zhou, X.; Krähenbühl, P. Center-Based 3D Object Detection and Tracking. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Nashville, TN, USA, 19–25 June 2021; IEEE: New York, NY, USA, 2021; pp. 11784–11793.

47. Chen, Y.; Yu, Z.; Chen, Y.; Lan, S.; Anandkumar, A.; Jia, J.; Alvarez, J.M. FocalFormer3D: Focusing on Hard Instance for 3D Object Detection. In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV), Paris, France, 2–6 October 2023; IEEE: New York, NY, USA, 2023; pp. 8394–8405.

48. Yin, T.; Zhou, X.; Krähenbühl, P. Multimodal Virtual Point 3D Detection. In Proceedings of the Advances in Neural Information Processing Systems (NeurIPS 2021), Virtual Event, 6–14 December 2021; Curran Associates, Inc.: Red Hook, NY, USA, 2021; Volume 34, pp. 16494–16507.

49. Wang, C.; Ma, C.; Zhu, M.; Yang, X. PointAugmenting: Cross-Modal Augmentation for 3D Object Detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Nashville, TN, USA, 20–25 June 2021; IEEE: New York, NY, USA, 2021; pp. 11794–11803.

50. Wang, H.; Tang, H.; Shi, S.; Li, A.; Li, Z.; Schiele, B.; Wang, L. UniTR: A Unified and Efficient Multi-Modal Transformer for Bird’s-Eye-View Representation. In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV), Paris, France, 2–6 October 2023; IEEE: New York, NY, USA, 2023; pp. 6792–6802.

51. Song, Z.; Wei, H.; Bai, L.; Yang, L.; Jia, C. GraphAlign: Enhancing Accurate Feature Alignment by Graph Matching for Multi-Modal 3D Object Detection. In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV), Paris, France, 2–6 October 2023; IEEE: New York, NY, USA, 2023; pp. 3358–3369.

52. Li, Z.; Wang, W.; Li, H.; Xie, E.; Sima, C.; Lu, T.; Yu, Q.; Dai, J. BEVFormer: Learning Bird’s-Eye-View Representation From LiDAR–Camera via Spatiotemporal Transformers. IEEE Trans. Pattern Anal. Mach. Intell. 2025, 47, 2020–2036.

53. Wang, S.; Liu, Y.; Wang, T.; Li, Y.; Zhang, X. Exploring Object-Centric Temporal Modeling for Efficient Multi-View 3D Object Detection. In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV), Paris, France, 2–6 October 2023; IEEE: New York, NY, USA, 2023; pp. 3621–3631.

54. Park, J.; Xu, C.; Yang, S.; Keutzer, K.; Kitani, K.; Tomizuka, M.; Zhan, W. Time Will Tell: New Outlooks and a Baseline for Temporal Multi-View 3D Object Detection. arXiv 2022, arXiv:2210.02443.

55. Chen, X.; Zhang, T.; Wang, Y.; Wang, Y.; Zhao, H. FUTR3D: A Unified Sensor Fusion Framework for 3D Detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Vancouver, BC, Canada, 18–22 June 2023; pp. 172–181.

56. Li, Y.; Yu, A.W.; Meng, T.; Caine, B.; Ngiam, J.; Peng, D.; Shen, J.; Lu, Y.; Zhou, D.; Le, Q.V.; et al. DeepFusion: LiDAR–Camera Deep Fusion for Multi-Modal 3D Object Detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), New Orleans, LA, USA, 19–24 June 2022; pp. 17182–17191.

57. Li, J.; Lu, M.; Liu, J.; Guo, Y.; Du, Y.; Du, L.; Zhang, S. BEV-LGKD: A Unified LiDAR-Guided Knowledge Distillation Framework for Multi-View BEV 3D Object Detection. IEEE Trans. Intell. Veh. 2023, 9, 2489–2498.

58. Xu, S.; Li, F.; Huang, P.; Song, Z.; Yang, Z.-X. TiGDistill-BEV: Multi-View BEV 3D Object Detection via Target Inner-Geometry Learning Distillation. IEEE Trans. Circuits Syst. Video Technol. 2025.

59. Shao, Z.; Wang, H.; Cai, Y.; Chen, L.; Li, Y. UA-Fusion: Uncertainty-Aware Multimodal Data Fusion Framework for 3-D Object Detection of Autonomous Vehicles. IEEE Trans. Instrum. Meas. 2025, 74, 1–16.

60. Yue, J.; Lin, Z.; Lin, X.; Zhou, X.; Li, X.; Qi, L.; Wang, Y.; Yang, M.-H. RobuRCDet: Enhancing Robustness of Radar-Camera Fusion in Bird’s Eye View for 3D Object Detection. arXiv 2025, arXiv:2502.13071.

| Method | Modality | mAP | NDS | Car | Bus | C.V. | Truck | T.L. | Ped. | M.T. | Bike | B.R. | T.C. |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Focals Conv [44] | LiDAR | 63.8 | 70.0 | 86.7 | 67.7 | 23.8 | 56.3 | 59.5 | 87.5 | 64.5 | 36.3 | 74.1 | 81.4 |
| VoxelNeXt [45] | LiDAR | 64.5 | 70.0 | 84.6 | 64.7 | 28.7 | 53.0 | 55.8 | 85.8 | 73.2 | 45.7 | 74.6 | 79.0 |
| TransFusion-L [42] | LiDAR | 65.5 | 70.2 | 86.2 | 66.3 | 28.2 | 56.7 | 58.8 | 86.1 | 68.3 | 44.2 | 78.2 | 82.0 |
| CenterPoint [46] | LiDAR | 60.3 | 67.3 | 85.2 | 63.6 | 20.0 | 53.5 | 56.0 | 84.6 | 59.5 | 30.7 | 71.1 | 78.4 |
| FocalFormer3D [47] | LiDAR | 68.7 | 72.6 | 87.2 | 69.6 | 34.4 | 57.1 | 64.9 | 88.2 | 76.2 | 49.6 | 77.8 | 82.3 |
| MVP [48] | L + C | 66.4 | 70.5 | 86.8 | 67.4 | 26.1 | 58.5 | 57.3 | 89.1 | 70.0 | 49.3 | 74.8 | 85.0 |
| PointAugmenting [49] | L + C | 66.8 | 71.0 | 87.5 | 65.2 | 28.0 | 57.3 | 60.7 | 87.9 | 74.3 | 50.9 | 72.6 | 83.6 |
| BEVFusion-PKU [36] | L + C | 69.2 | 71.8 | 88.1 | 69.3 | 34.4 | 60.9 | 62.1 | 89.2 | 72.2 | 52.2 | 78.2 | 85.2 |
| TransFusion [42] | L + C | 68.9 | 71.7 | 87.1 | 68.3 | 33.1 | 60.0 | 60.8 | 88.4 | 73.6 | 52.9 | 78.1 | 86.7 |
| AutoAlignV2 [7] | L + C | 68.4 | 72.4 | 87.0 | 69.3 | 33.1 | 59.0 | 59.3 | 87.6 | 72.9 | 52.1 | - | - |
| UniTR [50] | L + C | 70.9 | 74.5 | 87.9 | 72.2 | 39.2 | 60.2 | 65.1 | 89.4 | 75.8 | 52.2 | 76.8 | 89.7 |
| DeepInteraction [43] | L + C | 70.8 | 73.4 | 87.9 | 70.8 | 37.5 | 60.2 | 63.8 | 90.3 | 75.4 | 54.5 | 80.4 | 87.0 |
| GraphAlign [51] | L + C | 66.5 | 70.6 | 87.6 | 66.2 | 26.1 | 57.7 | 57.8 | 87.2 | 72.5 | 49.0 | 74.1 | 86.3 |
| ObjectFusion [12] | L + C | 71.0 | 73.3 | 89.4 | 71.8 | 40.5 | 59.0 | 63.1 | 90.7 | 78.1 | 53.2 | 76.6 | 87.7 |
| BEVFusion [9] | L + C | 70.2 | 72.9 | 88.6 | 69.8 | 39.3 | 60.1 | 63.8 | 89.2 | 74.1 | 51.0 | 80.0 | 86.5 |
| BEVAlign (Ours) | L + C | 71.7 | 75.3 | 89.6 | 73.0 | 38.5 | 60.6 | 65.2 | 90.9 | 79.6 | 60.3 | 80.3 | 87.3 |

L + C: LiDAR + camera; C.V.: construction vehicle; T.L.: trailer; Ped.: pedestrian; M.T.: motorcycle; B.R.: barrier; T.C.: traffic cone. Per-class columns report AP.

| Method | Modality | Backbone | mAP | NDS | FPS |
|---|---|---|---|---|---|
| BEVFormer [52] | C | ResNet-101 | 41.6 | 51.7 | 3.0 |
| StreamPETR [53] | C | ResNet-50 | 43.2 | 54.0 | 6.4 |
| SOLOFusion [54] | C | ResNet-50 | 42.7 | 53.4 | 1.5 |
| BEVNeXt [32] | C | ResNet-50 | 53.5 | 62.2 | 4.4 |
| AutoAlignV2 [7] | L + C | ResNet-50 | 67.1 | 71.2 | - |
| TransFusion-LC [42] | L + C | ResNet-50 | 67.5 | 71.3 | 3.2 |
| FUTR3D [55] | L + C | ResNet-101 | 64.2 | 68.0 | 2.3 |
| CMT [3] | L + C | VoV-99 | 70.3 | 72.9 | 3.8 |
| DeepInteraction [43] | L + C | Swin-T | 69.9 | 72.6 | 2.6 |
| SparseFusion [4] | L + C | Swin-T | 71.0 | 73.1 | 5.3 |
| BEVFusion [9] | L + C | Swin-T | 68.5 | 71.4 | 4.2 |
| BEVAlign (Ours) | L + C | Swin-T | 71.2 | 73.5 | 3.8 |

C: camera only; L + C: LiDAR + camera.

| Number of graph neighbors | mAP | NDS | FPS |
|---|---|---|---|
| 0 | 68.5 | 71.4 | 4.2 |
| 5 | 69.3 | 72.1 | 4.0 |
| 8 | 71.2 | 73.5 | 3.8 |
| 12 | 70.4 | 73.0 | 3.5 |

| Method | Query | Key | Value | Attention | mAP | NDS |
|---|---|---|---|---|---|---|
| LearnableAlign | LiDAR | Image | Image | CA | 65.7 | 69.2 |
| DeformCAFA | LiDAR × Image | Image | Image | DA | 68.5 | 71.4 |
| BDCA | LiDAR & Image | Image & LiDAR | Image & LiDAR | DA × 2 | 71.2 | 73.5 |

| Method | LA | BDCA | CBR | mAP | NDS | RT |
|---|---|---|---|---|---|---|
| Baseline [9] | | | | 68.5 | 71.4 | 238 ms |
| (a) | ✓ | | | 69.7 ↑ 1.2 | 72.4 ↑ 1.0 | 247 ms |
| (b) | ✓ | ✓ | | 70.5 | 72.8 | 254 ms |
| (c) | ✓ | ✓ | | 71.0 | 73.2 | 260 ms |
| (d) | ✓ | ✓ | ✓ | 71.2 ↑ 2.7 | 73.5 ↑ 2.1 | 263 ms |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Published by MDPI on behalf of the World Electric Vehicle Association. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Liu, A.; Zhang, Y.; Shi, H.; Chen, J. Robust 3D Object Detection in Complex Traffic via Unified Feature Alignment in Bird’s Eye View. World Electr. Veh. J. 2025, 16, 567. https://doi.org/10.3390/wevj16100567

Liu A, Zhang Y, Shi H, Chen J. Robust 3D Object Detection in Complex Traffic via Unified Feature Alignment in Bird’s Eye View. World Electric Vehicle Journal. 2025; 16(10):567. https://doi.org/10.3390/wevj16100567

Chicago/Turabian Style: Liu, Ajian, Yandi Zhang, Huichao Shi, and Juan Chen. 2025. "Robust 3D Object Detection in Complex Traffic via Unified Feature Alignment in Bird’s Eye View" World Electric Vehicle Journal 16, no. 10: 567. https://doi.org/10.3390/wevj16100567

APA Style: Liu, A., Zhang, Y., Shi, H., & Chen, J. (2025). Robust 3D Object Detection in Complex Traffic via Unified Feature Alignment in Bird’s Eye View. World Electric Vehicle Journal, 16(10), 567. https://doi.org/10.3390/wevj16100567