Digital Reconstruction Method for Low-Illumination Road Traffic Accident Scenes Using UAV and Auxiliary Equipment

Abstract

1. Introduction

- 1.

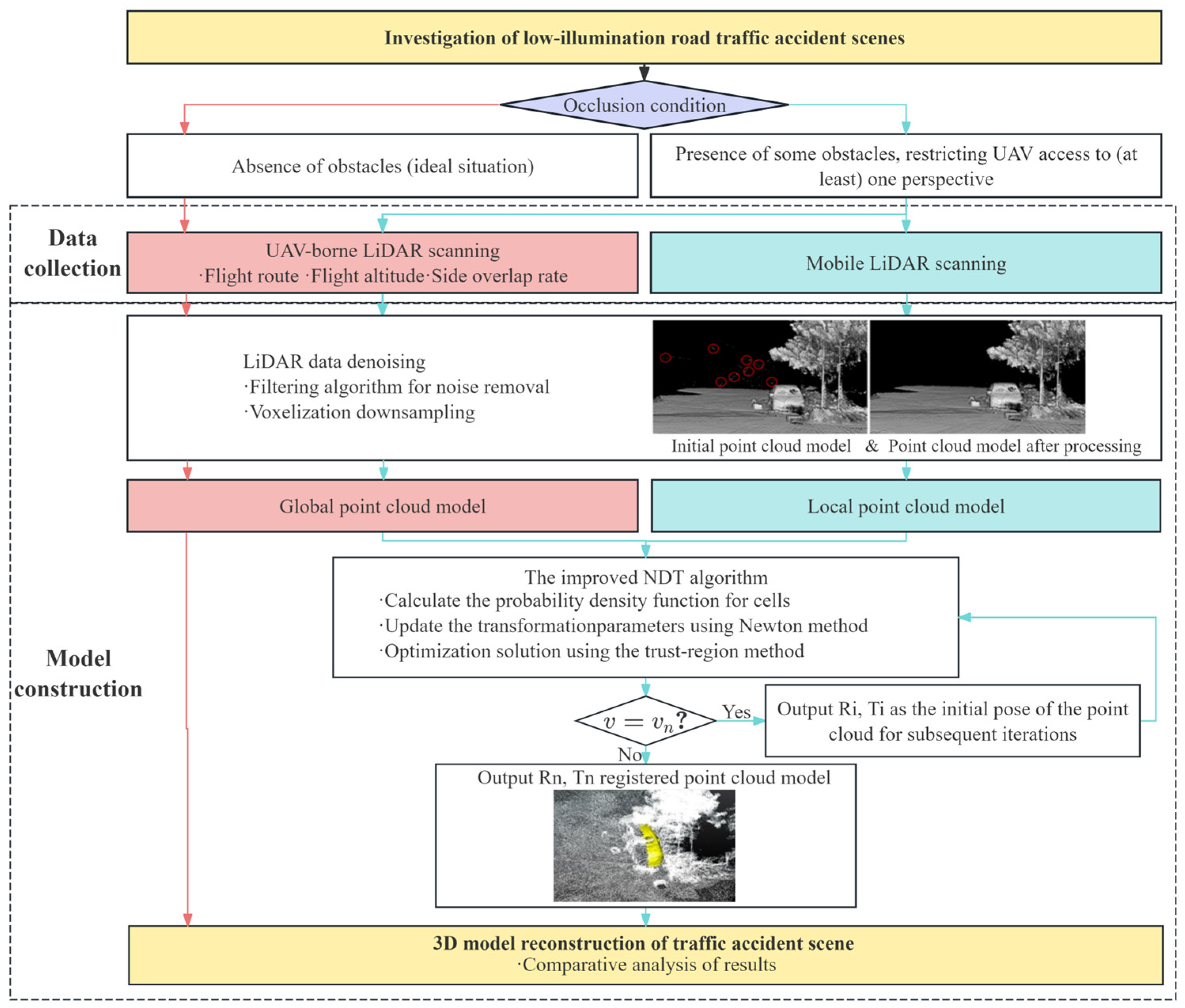

- A combined approach utilizing UAV-mounted LiDAR and mobile laser scanning is proposed and validated for reconstructing traffic accident scenes under low illumination, and the impact of occlusion on modeling accuracy is analyzed.

- 2.

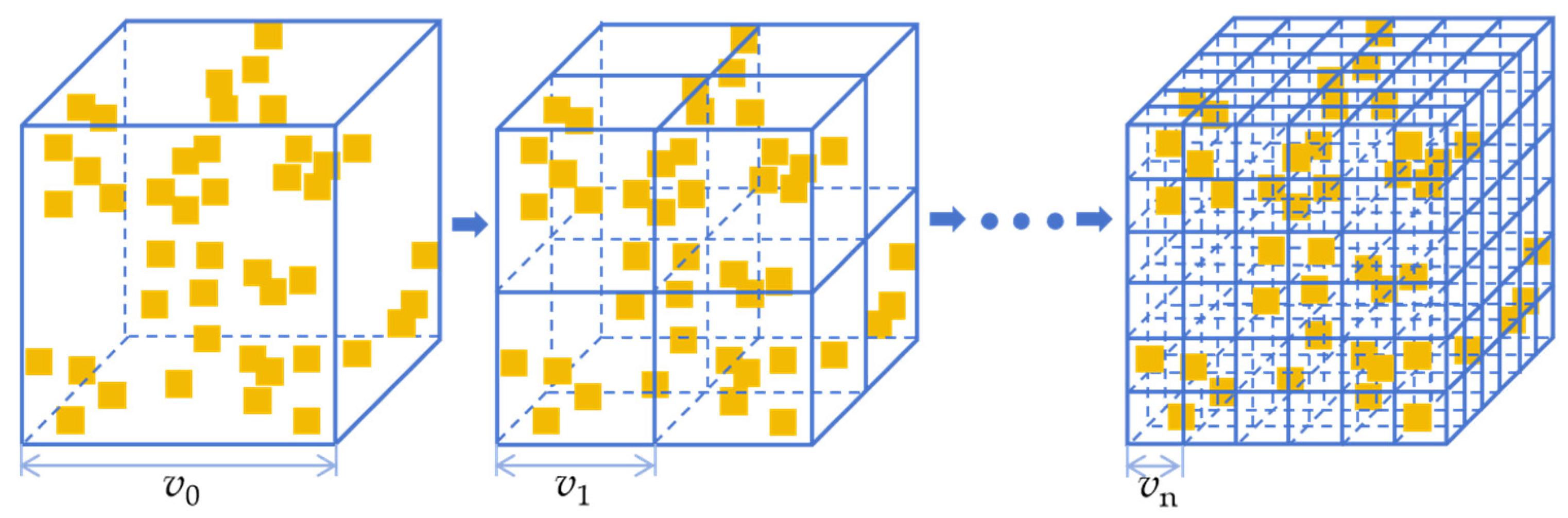

- A multiscale voxel iteration method is implemented for point cloud downsampling. An optimized NDT algorithm is integrated with various filtering algorithms to register point clouds from both sources, and the effectiveness of different registration approaches is evaluated.
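The multiscale voxel downsampling named in the second contribution can be illustrated with a minimal voxel-grid sketch in Python/NumPy. It assumes the point cloud is an (N, 3) array and that every occupied voxel is replaced by the centroid of its points; the coarse-to-fine voxel sizes and the helper names `voxel_downsample` and `multiscale_downsample` are hypothetical, and the sketch is not the authors' implementation.

```python
import numpy as np


def voxel_downsample(points: np.ndarray, voxel_size: float) -> np.ndarray:
    """Replace all points that fall in the same cubic voxel by their centroid."""
    if voxel_size <= 0:
        raise ValueError("voxel_size must be positive")
    # Integer voxel index of each point; floor keeps negative coordinates correct.
    keys = np.floor(points / voxel_size).astype(np.int64)
    # `inverse` maps every point to the row of its voxel in the unique-key array.
    _, inverse, counts = np.unique(keys, axis=0, return_inverse=True, return_counts=True)
    inverse = inverse.reshape(-1)  # some NumPy versions return a 2-D inverse here
    sums = np.zeros((counts.size, points.shape[1]))
    np.add.at(sums, inverse, points)  # accumulate coordinates per voxel
    return sums / counts[:, None]


def multiscale_downsample(points: np.ndarray, voxel_sizes=(1.0, 0.8, 0.6, 0.4, 0.2)):
    """Hypothetical helper: coarse-to-fine pyramid of downsampled clouds keyed by voxel size."""
    return {size: voxel_downsample(points, size) for size in sorted(voxel_sizes, reverse=True)}


rng = np.random.default_rng(0)
cloud = rng.uniform(0.0, 10.0, size=(5000, 3))
for size, pts in multiscale_downsample(cloud).items():
    print(f"voxel {size}: {len(pts)} points")
```

Each level of the returned dictionary could feed one stage of a coarse-to-fine registration; smaller voxels keep more points and detail at a higher processing cost, which is the speed and accuracy trade-off examined in the voxel-size experiments.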

2. Methodological Framework for UAV-Assisted Accident Survey

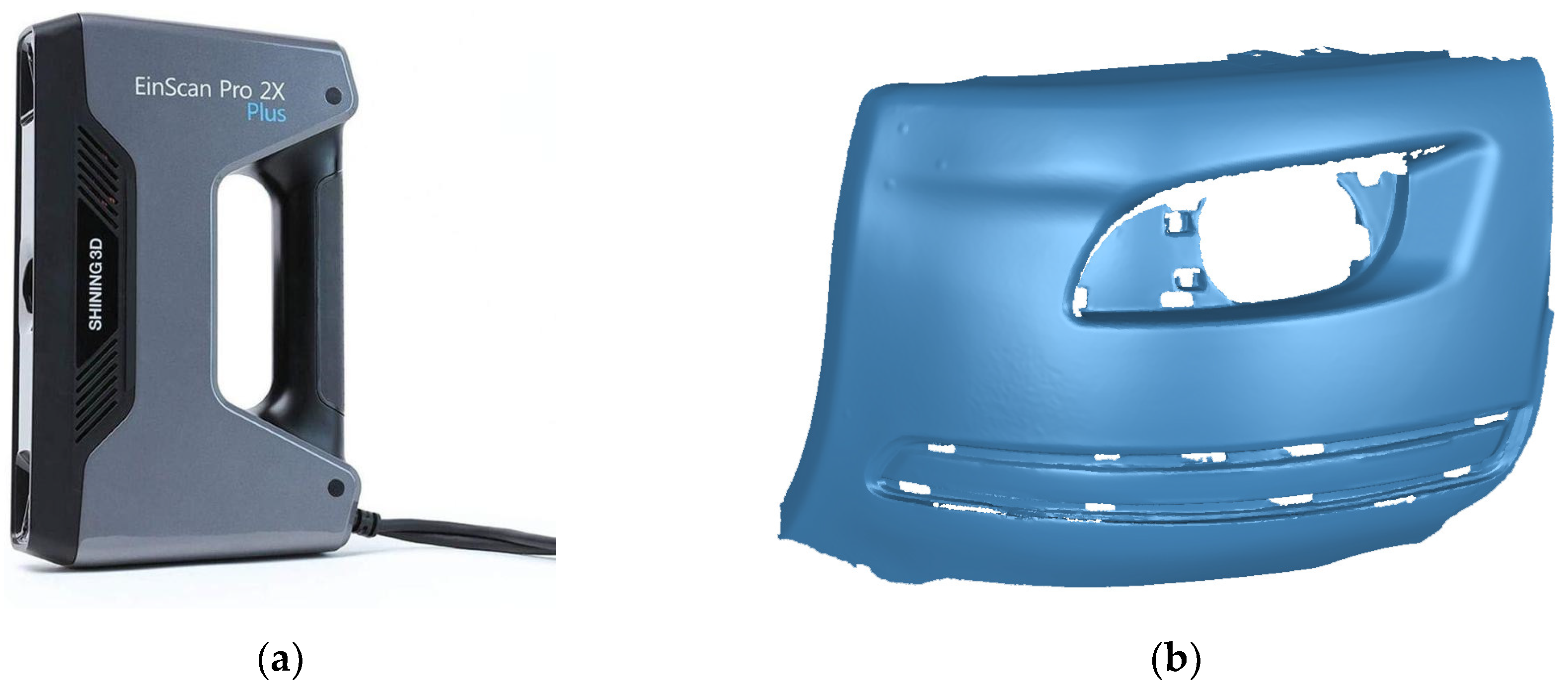

3. Selection of Surveying Equipment

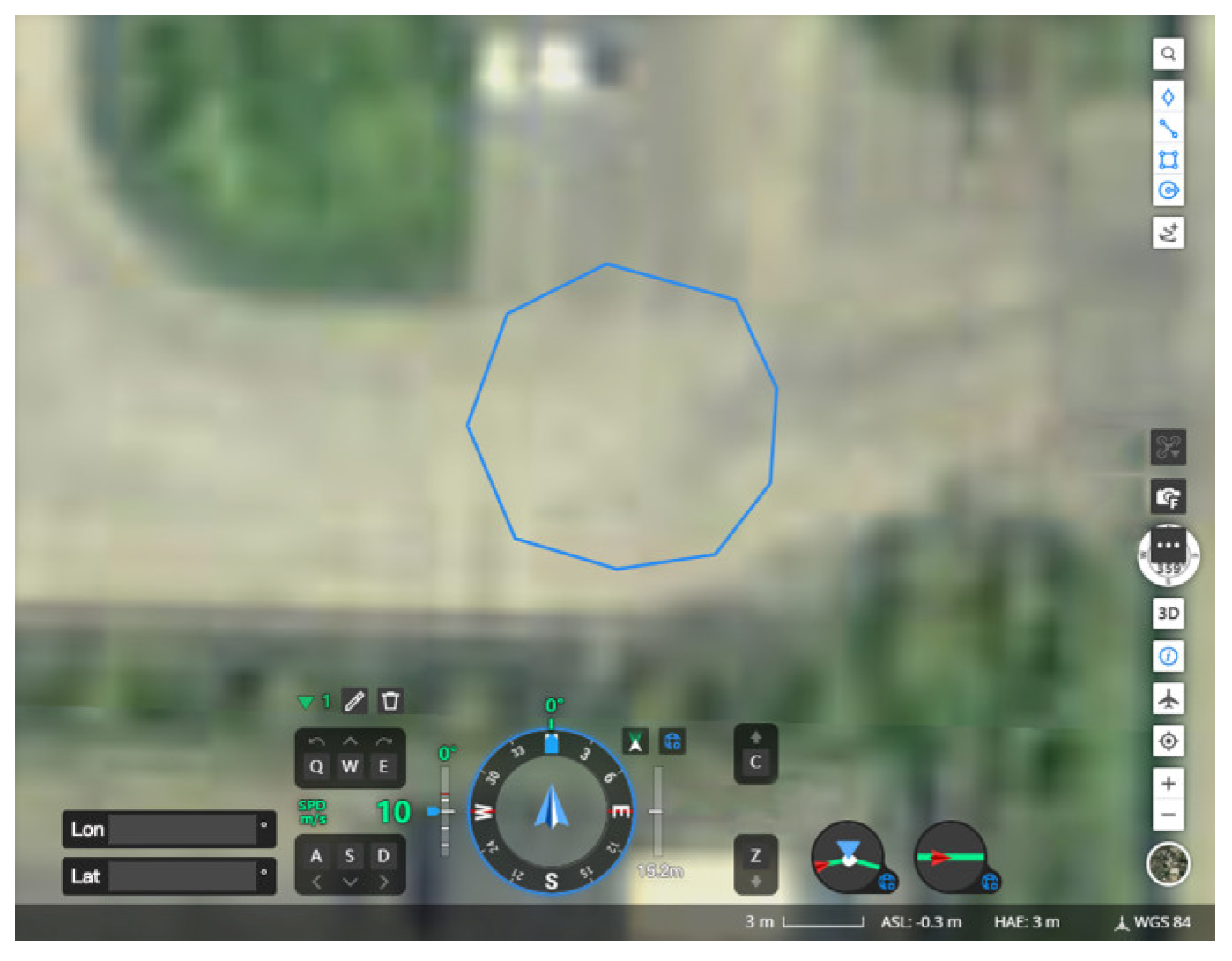

3.1. UAVs and Airborne LiDAR

1. Flight Path: Common flight patterns include back-and-forth and circular modes, and the pattern is selected according to the scale of the accident [32]. The back-and-forth mode suits large accident sites extending over 50 m (e.g., multi-vehicle collisions on highways) because it covers a large area rapidly. The circular mode is ideal for smaller accident sites (e.g., single-vehicle collisions) and fall-type accidents (e.g., vehicles falling off a cliff): the UAV flies a circular trajectory centered on the target area, with a radius extending to the farthest point of the accident scene, ensuring comprehensive data collection from multiple viewpoints. Because the simulated traffic accident scene is small and the aerial survey aims to acquire the relative positions and geometric information of the objects involved, the circular flight path was selected.

2. Flight Altitude: In non-restricted areas of typical urban environments, the maximum allowable UAV flight altitude is 120 m. To minimize interference from irrelevant surrounding data and maintain scanning accuracy, the UAV should fly as close to the accident site as possible. However, real-world road environments often include obstacles such as streetlights, traffic signs, and trees, typically around 12 m high, so the flight altitude must be adjusted to the specific obstacles at the accident site. Zulkifli et al. [20] recommended a flight height of 15 m for aerial surveys, which ensures flight safety while clearing environmental obstacles such as trees and traffic infrastructure. This study therefore adopts a flight height of 15 m.

3. Side Overlap Rate: Airborne LiDAR systems collect high-density point cloud data from multiple perspectives, precisely capturing the geometric features and three-dimensional information of accident sites. A side overlap rate above 50% is typical to ensure adequate data coverage. The point clouds from different perspectives are then merged using the overlapping regions and the UAV pose data, ultimately producing high-precision 3D models or point cloud datasets.
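The three flight rules above can be combined into a small planning helper. This Python sketch is illustrative only: the 50 m site extent, 120 m ceiling, and 15 m flight height come from the text, whereas the 3 m obstacle clearance, the 60% default side overlap, the 70° field of view, the flat-ground swath formula, and the use of the objects' centroid as the circle center are all assumptions (the field of view is a placeholder, not a Zenmuse L1 specification). All function names are hypothetical.

```python
import math
from dataclasses import dataclass, field

MAX_ALTITUDE_M = 120.0      # ceiling in non-restricted urban airspace (from the text)
LARGE_SITE_EXTENT_M = 50.0  # larger sites suit back-and-forth flight (from the text)
RECOMMENDED_ALT_M = 15.0    # flight height adopted in the text
CLEARANCE_M = 3.0           # hypothetical margin above the tallest obstacle


@dataclass
class FlightPlan:
    mode: str
    altitude_m: float
    waypoints: list = field(default_factory=list)  # (x, y, z) in site coordinates, metres


def choose_altitude(obstacle_heights):
    """Fly as low as possible while clearing the tallest obstacle."""
    altitude = max(RECOMMENDED_ALT_M, max(obstacle_heights, default=0.0) + CLEARANCE_M)
    if altitude > MAX_ALTITUDE_M:
        raise ValueError("required altitude exceeds the 120 m limit")
    return altitude


def strip_spacing(altitude_m, side_overlap=0.6, fov_deg=70.0):
    """Distance between adjacent strips for a given side overlap (flat ground, placeholder FOV)."""
    if not 0.0 <= side_overlap < 1.0:
        raise ValueError("side_overlap must be in [0, 1)")
    swath = 2.0 * altitude_m * math.tan(math.radians(fov_deg) / 2.0)
    return swath * (1.0 - side_overlap)


def circular_waypoints(objects_xy, altitude_m, n=12):
    """Circle centered on the objects' centroid, with radius reaching the farthest object."""
    cx = sum(x for x, _ in objects_xy) / len(objects_xy)
    cy = sum(y for _, y in objects_xy) / len(objects_xy)
    r = max(math.hypot(x - cx, y - cy) for x, y in objects_xy)
    return [(cx + r * math.cos(2 * math.pi * k / n), cy + r * math.sin(2 * math.pi * k / n), altitude_m)
            for k in range(n)]


def lawnmower_waypoints(xmin, xmax, ymin, ymax, altitude_m, spacing_m):
    """Back-and-forth strips parallel to the x axis, alternating direction."""
    waypoints, y, forward = [], ymin, True
    while y <= ymax + 1e-9:
        x0, x1 = (xmin, xmax) if forward else (xmax, xmin)
        waypoints += [(x0, y, altitude_m), (x1, y, altitude_m)]
        y, forward = y + spacing_m, not forward
    return waypoints


def plan_flight(objects_xy, obstacle_heights, side_overlap=0.6):
    xs, ys = [x for x, _ in objects_xy], [y for _, y in objects_xy]
    extent = max(max(xs) - min(xs), max(ys) - min(ys))
    altitude = choose_altitude(obstacle_heights)
    if extent > LARGE_SITE_EXTENT_M:
        spacing = strip_spacing(altitude, side_overlap)
        return FlightPlan("back-and-forth", altitude,
                          lawnmower_waypoints(min(xs), max(xs), min(ys), max(ys), altitude, spacing))
    return FlightPlan("circular", altitude, circular_waypoints(objects_xy, altitude))


plan = plan_flight([(0.0, 0.0), (6.0, 2.0), (3.0, -4.0)], obstacle_heights=[12.0, 8.0])
print(plan.mode, plan.altitude_m, len(plan.waypoints))  # circular 15.0 12
```

With a 12 m streetlight and the assumed 3 m clearance, the helper returns the 15 m altitude adopted in this study; a taller obstacle pushes the altitude up until the 120 m ceiling raises an error.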

3.2. Mobile LiDAR

4. Point Cloud Processing

4.1. Denoising and Downsampling of Point Clouds

4.2. Improved NDT Algorithm for Point Cloud Registration

5. Preliminary Experiment: Verification of the Registration Method

5.1. ASL Dataset

5.2. Point Cloud Registration Analysis

6. Field Experiment and Its Analysis

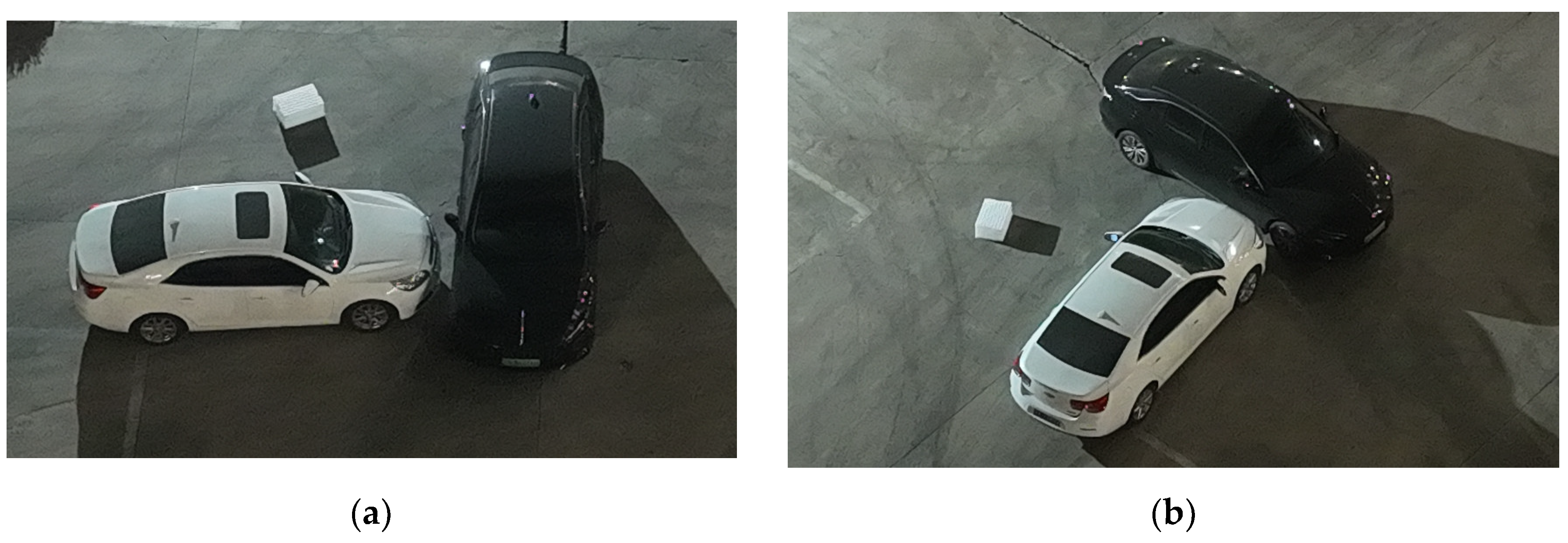

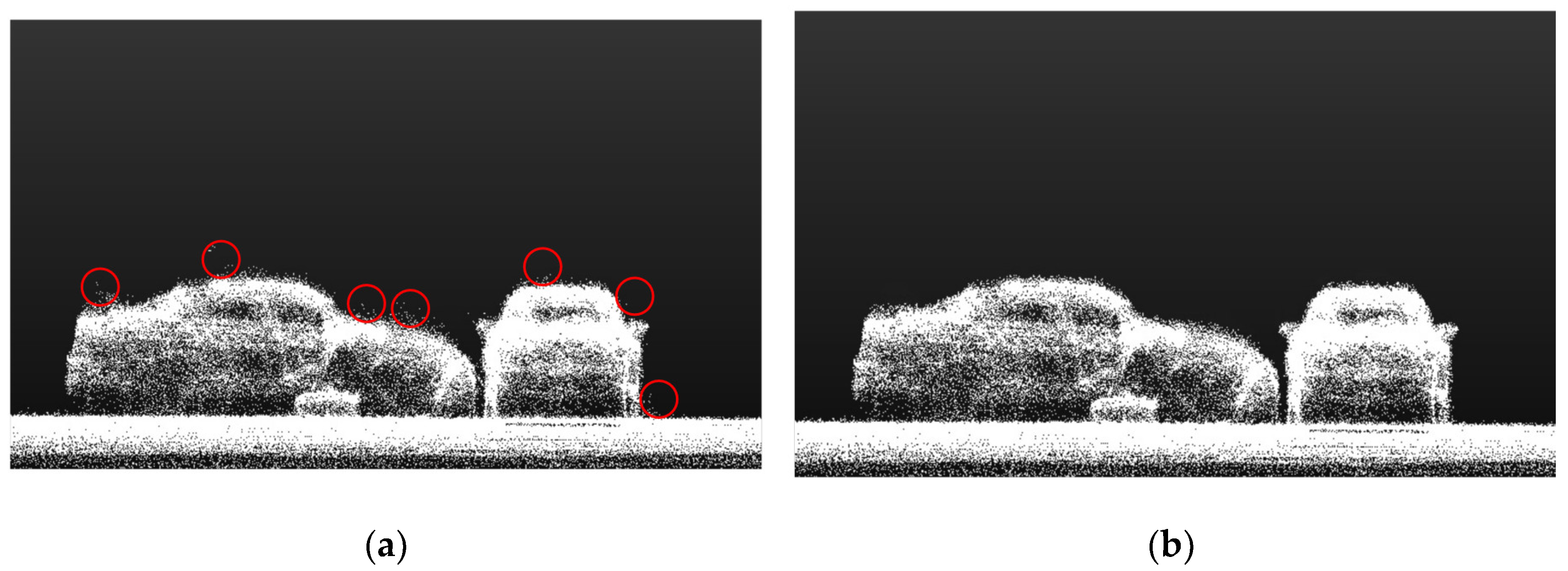

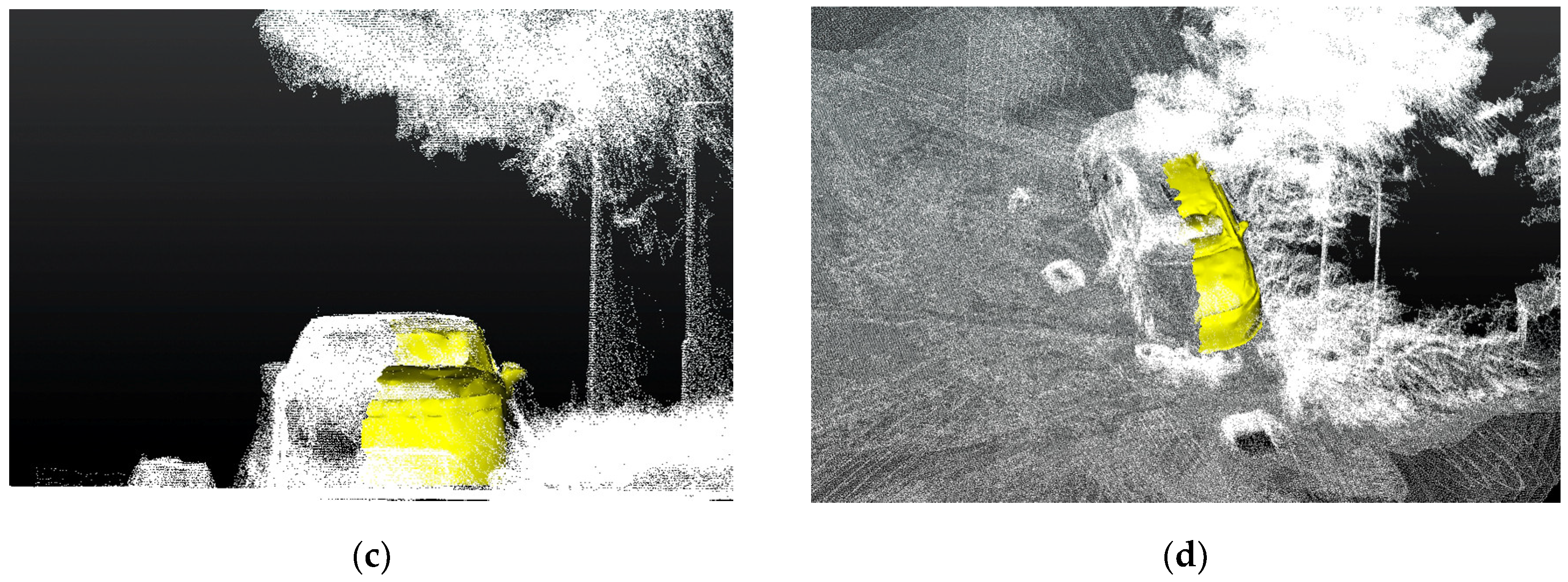

6.1. Scene 1: Traffic Accident in Low-Illumination Scene

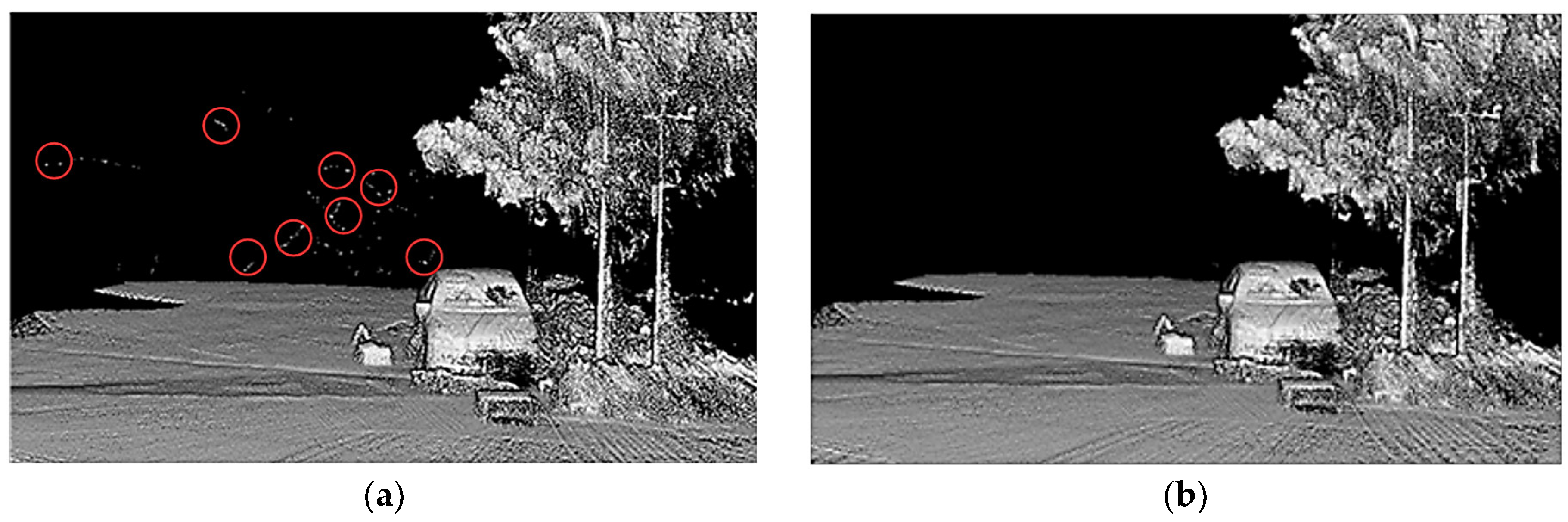

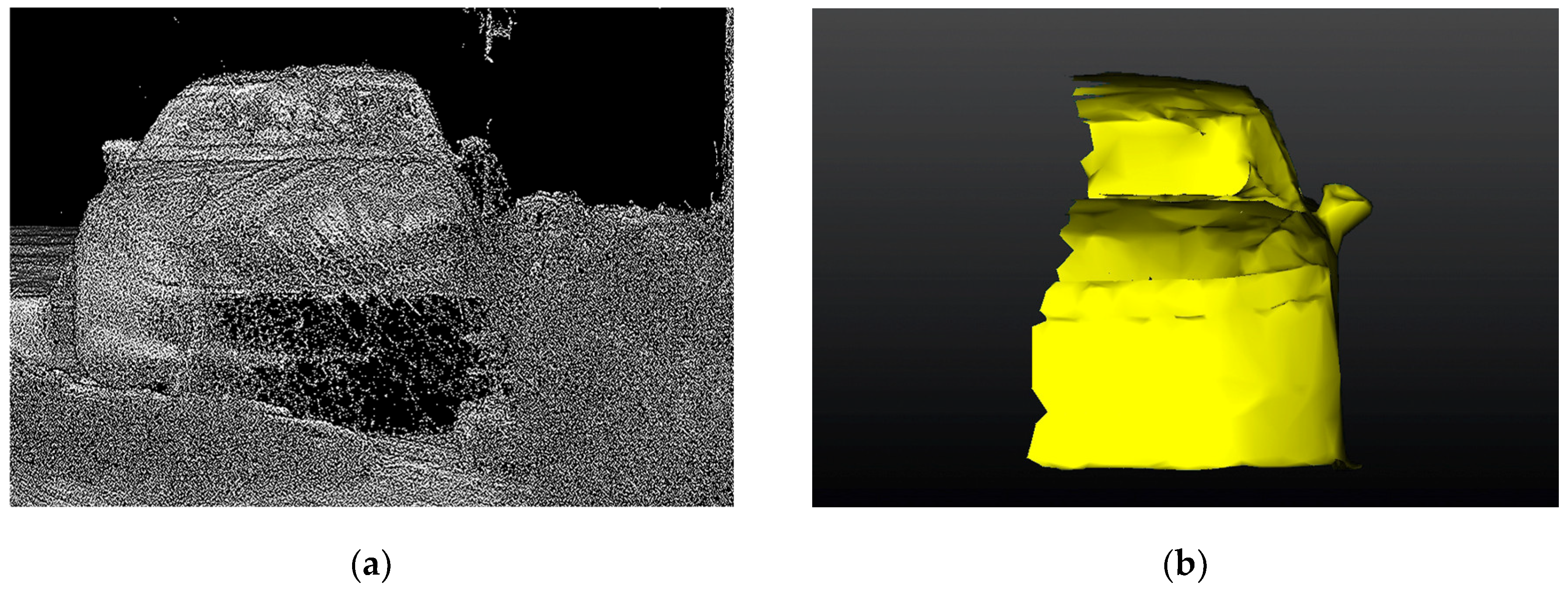

6.2. Scene 2: Traffic Accidents with Occlusion at Night

7. Conclusions and Discussions

- The integration of airborne LiDAR and mobile laser scanning is feasible and effective for traffic accident investigations. Airborne LiDAR rapidly generates a global point cloud model of the accident scene regardless of lighting conditions, while the mobile scanner fills in occluded regions. This combination addresses the information loss caused by environmental factors and preserves the integrity of the accident scene data. It also allows accident investigations to be completed quickly, reducing traffic congestion and helping prevent secondary accidents.

- Setting the voxel size to 0.2 and applying multiscale iterative downsampling to the point cloud model gave a balanced trade-off between processing speed and accuracy. Compared with mean and median filtering, Gaussian filtering combined with the optimized NDT algorithm achieved higher accuracy in 3D model reconstruction.

- Field experiments demonstrate that the proposed method outperforms the alternatives in traffic accident investigations under low illumination, generating high-precision, centimeter-level 3D models.

1. Flight regulation and privacy protection. When UAVs are used for data collection, investigators must comply with the relevant laws, regulations, and privacy policies to ensure the legality and safety of the investigation. Operations in populated areas or restricted airspace must strictly follow airspace management regulations to avoid privacy infringements and public alarm. UAV regulations also differ across countries and regions, with restrictions on flight altitude, permitted areas, and operating times that may limit the efficiency and effectiveness of UAV-based investigations. Safeguarding personal privacy must remain a top priority: strict management of data storage and processing is essential for data security and will increase stakeholder acceptance of this technology.

2. Environmental factors and weather. Extreme weather conditions, such as strong winds, heavy rain, lightning, or dense fog, disrupt UAV operations and reduce the effective range of LiDAR sensing. Wet road surfaces and reflective objects may produce erroneous laser returns that severely degrade data quality. In complex urban environments with dense buildings or structures, UAV flight and positioning may be constrained, limiting the effectiveness of investigations. Electromagnetic interference at the accident site may also disrupt UAV remote control and navigation systems, increasing operational complexity and risk. These challenges can be mitigated through a combination of hardware and algorithmic advances. Waterproof UAVs would enable operation in rain and snow, and UAVs with enhanced flight control would remain more stable in strong winds. Integrating multi-wavelength LiDAR sensors, refining signal processing algorithms, and employing AI-based noise filtering can improve data reliability under adverse weather. Furthermore, fusing LiDAR data with inertial measurement unit (IMU) and GNSS information can improve positioning accuracy where satellite signals are degraded, ensuring stable, high-quality data acquisition.

3. Multi-source data fusion. The proposed method requires processing point clouds from multiple sources. Traffic accident scenes are often complex and dynamic, and high-density point clouds can cause processing delays, so more efficient data processing methods should be explored. Deep learning techniques hold great promise for improving registration accuracy and automation, but they still face major challenges in the absence of large-scale labeled datasets covering diverse accident scenarios. Future research could focus on domain adaptation strategies, self-supervised learning, and robust feature extraction to improve the adaptability and efficiency of deep-learning-based registration. Addressing these issues would enable faster, more accurate, and more reliable 3D reconstruction of accident scenes under various environmental and operational constraints.

4. Cost–efficiency balance. This approach provides substantial societal benefits by enabling rapid traffic accident investigations and reducing traffic congestion, and it accurately preserves the accident scene as a 3D model for subsequent examination. However, considering the costs of equipment acquisition, labor, and maintenance, each investigation costs approximately 400–500 RMB, so the current method remains relatively expensive. Further reductions in UAV hardware and software costs are crucial to improving its practical applicability.

5. Enhanced texture preservation. Photogrammetry represents textures better, which is important for analyzing traffic accidents. However, in low-illumination environments, the insufficient light reaching the camera degrades image quality, resulting in blurred surface textures and low measurement accuracy. To address this issue, aerial RGB images can be used to color the LiDAR point cloud model; this hybrid approach preserves both geometric fidelity and visual texture.
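The colorization approach in item 5 can be sketched as a pinhole projection of each LiDAR point into an aerial RGB image. The NumPy sketch below assumes a calibrated camera with intrinsic matrix `K` and a world-to-camera pose `R`, `t` already known from the UAV pose data; it ignores lens distortion and occlusion (a point hidden behind another surface still receives the pixel color) and uses nearest-pixel sampling. `colorize_points` is a hypothetical helper, not part of any library.

```python
import numpy as np


def colorize_points(points_w, image, K, R, t):
    """Color 3D points by projecting them into one RGB image (pinhole model).

    points_w: (N, 3) world coordinates; image: (H, W, 3) uint8 RGB.
    K: (3, 3) intrinsics; R: (3, 3) and t: (3,) map world -> camera coordinates.
    Returns (colors, mask); mask is True for points that received a color.
    """
    pts_c = points_w @ R.T + t                  # world -> camera frame
    in_front = pts_c[:, 2] > 1e-6               # keep points in front of the camera
    uvw = pts_c @ K.T                           # homogeneous pixel coordinates
    z = np.where(in_front, uvw[:, 2], 1.0)      # dummy depth avoids dividing by <= 0
    u = np.round(uvw[:, 0] / z).astype(int)     # nearest-pixel column
    v = np.round(uvw[:, 1] / z).astype(int)     # nearest-pixel row
    h, w = image.shape[:2]
    mask = in_front & (u >= 0) & (u < w) & (v >= 0) & (v < h)
    colors = np.zeros((len(points_w), 3), dtype=np.uint8)
    colors[mask] = image[v[mask], u[mask]]
    return colors, mask


K = np.array([[100.0, 0.0, 50.0], [0.0, 100.0, 50.0], [0.0, 0.0, 1.0]])
image = np.zeros((100, 100, 3), dtype=np.uint8)
image[50, 50] = (255, 0, 0)
points = np.array([[0.0, 0.0, 5.0], [0.0, 0.0, -5.0], [100.0, 0.0, 5.0]])
colors, mask = colorize_points(points, image, K, np.eye(3), np.zeros(3))
print(mask)  # [ True False False]
```

In the example, the first point projects onto the red pixel at the image center, the second lies behind the camera, and the third falls outside the image, so only the first is colored.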

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- Tang, J.; Huang, Y.; Liu, D.; Xiong, L.; Bu, R. Research on Traffic Accident Severity Level Prediction Model Based on Improved Machine Learning. Systems 2025, 13, 31.

- Tucci, J.; Dougherty, J.; Lavin, P.; Staes, L.; Safety, K.J. Effective Practices in Bus Transit Accident Investigations; United States, Department of Transportation, Federal Transit Administration: Washington, DC, USA, 2021; pp. 13–14.

- Kamnik, R.; Perc, M.N.; Topolšek, D. Using the scanners and drone for comparison of point cloud accuracy at traffic accident analysis. Accid. Anal. Prev. 2020, 135, 105391.

- Garnaik, M.M.; Giri, J.P.; Panda, A. Impact of highway design on traffic safety: How geometric elements affect accident risk. Ecocycles 2023, 9, 83–92.

- Pagounis, V.; Tsakiri, M.; Palaskas, S.; Biza, B.; Zaloumi, E. 3D laser scanning for road safety and accident reconstruction. In Proceedings of the XXIIIth international FIG congress, Munich, Germany, 8–13 October 2006.

- Buck, U.; Buße, K.; Campana, L.; Gummel, F.; Schyma, C.; Jackowski, C. What Happened Before the Run Over? Morphometric 3D Reconstruction. Forensic Sci. Int. 2020, 306, 110059.

- Calders, K.; Adams, J.; Armston, J.; Bartholomeus, H.; Bauwens, S.; Bentley, L.P.; Chave, J.; Danson, F.M.; Demol, M.; Disney, M.; et al. Terrestrial laser scanning in forest ecology: Expanding the horizon. Remote Sens. Environ. 2020, 251, 112102.

- Khodjaev, S.; Kuhn, L.; Bobojonov, I.; Glauben, T. Combining multiple UAV-Based indicators for wheat yield estimation, a case study from Germany. Eur. J. Remote Sens. 2024, 57, 2294121.

- Pensado, E.A.; López, F.V.; Jorge, H.G.; Pinto, A.M. UAV Shore-to-Ship Parcel Delivery: Gust-Aware Trajectory Planning. IEEE Trans. Aerosp. Electron. Syst. 2024, 60, 6213–6233.

- Wang, W.; Xue, C.; Zhao, J.; Yuan, C.; Tang, J. Machine learning-based field geological mapping: A new exploration of geological survey data acquisition strategy. Ore Geol. Rev. 2024, 166, 105959.

- Kong, X.; Ni, C.; Duan, G.; Shen, G.; Yang, Y.; Das, S.K. Energy Consumption Optimization of UAV-Assisted Traffic Monitoring Scheme with Tiny Reinforcement Learning. IEEE Internet Things J. 2024, 11, 21135–21145.

- Silva-Fragoso, A.; Norini, G.; Nappi, R.; Groppelli, G.; Michetti, A.M. Improving the Accuracy of Digital Terrain Models Using Drone-Based LiDAR for the Morpho-Structural Analysis of Active Calderas: The Case of Ischia Island, Italy. Remote Sens. 2024, 16, 1899.

- Neuville, R.; Bates, J.S.; Jonard, F. Estimating Forest Structure from UAV-Mounted LiDAR Point Cloud Using Machine Learning. Remote Sens. 2021, 13, 352.

- Zhang, Y.; Dou, X.; Zhao, H.; Xue, Y.; Liang, J. Safety Risk Assessment of Low-Volume Road Segments on the Tibetan Plateau Using UAV LiDAR Data. Sustainability 2023, 15, 11443.

- Cherif, B.; Ghazzai, H.; Alsharoa, A. LiDAR from the Sky: UAV Integration and Fusion Techniques for Advanced Traffic Monitoring. IEEE Syst. J. 2024, 18, 1639–1650.

- Wang, F.-H.; Li, L.-Y.; Liu, Y.-T.; Tian, S.; Wei, L. Road Traffic Accident Scene Detection and Mapping System Based on Aerial Photography. Int. J. Crashworthiness 2020, 26, 537–548.

- Chen, Q.; Li, D.; Huang, B. SUAV image mosaic based on rectification for use in traffic accident scene diagramming. In Proceedings of the 2020 IEEE 3rd International Conference of Safe Production and Informatization (IICSPI), Chongqing, China, 28–30 November 2020.

- Chen, Y.; Zhang, Q.; Yu, F. Transforming traffic accident investigations: A virtual-real-fusion framework for intelligent 3D traffic accident reconstruction. Complex Intell. Syst. 2025, 11, 76.

- Norahim, M.N.I.; Tahar, K.N.; Maharjan, G.R.; Matos, J.C. Reconstructing 3D model of accident scene using drone image processing. Int. J. Electr. Comput. Eng. 2023, 13, 4087–4099.

- Zulkifli, M.H.; Tahar, K.N. The Influence of UAV Altitudes and Flight Techniques in 3D Reconstruction Mapping. Drones 2023, 7, 227.

- Amin, M.; Abdullah, S.; Abdul Mukti, S.N.; Mohd Zaidi, M.H.A.; Tahar, K.N. Reconstruction of 3D accident scene from multirotor UAV platform. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2020, 43, 451–458.

- Pádua, L.; Sousa, J.; Vanko, J.; Hruška, J.; Adão, T.; Peres, E.; Sousa, J.J. Digital reconstitution of road traffic accidents: A flexible methodology relying on UAV surveying and complementary strategies to support multiple scenarios. Int. J. Environ. Res. Public Health 2020, 17, 1868.

- Pérez, J.A.; Gonçalves, G.R.; Barragan, J.R.; Ortega, P.F.; Palomo, A.A. Low-cost tools for virtual reconstruction of traffic accident scenarios. Heliyon 2024, 10, 1–26.

- Nteziyaremye, P.; Sinclair, M. Investigating the effect of ambient light conditions on road traffic crashes: The case of Cape Town, South Africa. Light. Res. Technol. 2024, 56, 443–468.

- Liang, S.; Chen, P.; Wu, S.; Cao, H. Complementary Fusion of Camera and LiDAR for Cooperative Object Detection and Localization in Low-Contrast Environments at Night Outdoors. IEEE Trans. Consum. Electron. 2024, 70, 6392–6403.

- Li, Y.; Yu, A.W.; Meng, T.; Caine, B.; Ngiam, J.; Peng, D.; Shen, J.; Lu, Y.; Zhou, D.; Le, Q.V.; et al. Deep fusion: LiDAR-Camera Deep Fusion for Multi-Modal 3D Object Detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022.

- Hussain, A.; Mehdi, S.R. A Comprehensive Review: 3D Object Detection Based on Visible Light Camera, Infrared Camera, and Lidar in Dark Scene. Infrared Camera Lidar Dark Scene 2024, 2, 27.

- Pomerleau, F.; Liu, M.; Colas, F.; Siegwart, R. Challenging data sets for point cloud registration algorithms. Int. J. Robot. Res. 2012, 31, 1705–1711.

- Vida, G.; Melegh, G.; Süveges, Á.; Wenszky, N.; Török, Á. Analysis of UAV Flight Patterns for Road Accident Site Investigation. Vehicles 2023, 5, 1707–1726.

- The Johns Hopkins University. Operational Evaluation of Unmanned Aircraft Systems for Crash Scene Reconstruction, 1st ed.; Applied Physics Laboratory: Laurel, MD, USA, 2018; pp. 27–29.

- Liu, S.; Wei, Y.; Wen, Z.; Guo, X.; Tu, Z.; Li, Y. Towards robust image matching in low-luminance environments: Self-supervised keypoint detection and descriptor-free cross-fusion matching. Pattern Recognit. 2024, 153, 110572.

- Zheng, J.; Yang, Q.; Liu, J.; Li, L.; Chai, Y.; Xu, P. Research on Road Traffic Accident Scene Investigation Technology Based on 3D Real Scene Reconstruction. In Proceedings of the 2023 3rd Asia Conference on Information Engineering (ACIE), Chongqing, China, 22 August 2023.

- Besl, P.J.; McKay, N.D. Method for registration of 3-D shapes. In Sensor Fusion IV: Control Paradigms and Data Structures; Society of Photo-Optical Instrumentation Engineers: Boston, MA, USA, 1992.

- Peng, Y.; Yang, X.; Li, D.; Ma, Z.; Liu, Z.; Bai, X.; Mao, Z. Predicting Flow Status of a Flexible Rectifier Using Cognitive Computing. Expert Syst. Appl. 2025, 264, 125878.

- Mao, Z.; Kobayashi, R.; Nabae, H.; Suzumori, K. Multimodal Strain Sensing System for Shape Recognition of Tensegrity Structures by Combining Traditional Regression and Deep Learning Approaches. IEEE Robot. Autom. Lett. 2024, 9, 10050–10056.

- Huang, X.; Mei, G.; Zhang, J.; Abbas, R. A Comprehensive Survey on Point Cloud Registration. arXiv 2021, arXiv:2103.02690.

- Shen, Z.; Feydy, J.; Liu, P.; Curiale, A.H.; San Jose Estepar, R.; Niethammer, M. Accurate Point Cloud Registration with Robust Optimal Transport. Adv. Neural Inf. Process. Syst. 2021, 34, 5373–5389.

- Biber, P.; Straßer, W. The normal distributions transform: A new approach to laser scan matching. In Proceedings of the 2003 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS 2003), Las Vegas, NV, USA, 27–31 October 2003.

- Shen, Y.; Zhang, B.; Wang, J.; Zhang, Y.; Wu, Y.; Chen, Y.; Chen, D. MI-NDT: Multiscale Iterative Normal Distribution Transform for Registering Large-scale Outdoor Scans. IEEE Trans. Geosci. Remote Sens. 2024, 62, 5705513.

- Mohammadi, A.; Custódio, A.L. A trust-region approach for computing Pareto fronts in multiobjective optimization. Comput. Optim. Appl. 2024, 87, 149–179.

| Equipment Parameters | Zenmuse L1 | EinScan Pro 2X |
|---|---|---|
| Scanning rate | 240,000 points/s | 1,500,000 points/s |
| Scanning accuracy | 30 mm (within 100 m) | 0.1 mm (within 0.5 m) |
| Weight | 0.93 kg | 1.25 kg |
| Stitching mode | Overlap-based stitching | Feature-based stitching |
| Data output | 3D model output after post-processing | 3D model output directly |
| Operating temperature | −20 to 50 °C | 0 to 40 °C |
| Supported data formats | PNTS/LAS/PLY/PCD/S3MB | OBJ/STL/ASC/PLY/P3/3MF |

| Model (Time/s / RPE) | Voxel Size 1 | Voxel Size 0.8 | Voxel Size 0.6 | Voxel Size 0.4 | Voxel Size 0.2 | Voxel Size 0.1 |
|---|---|---|---|---|---|---|
| Classic NDT | 6.15 / 0.67 | 6.71 / 0.58 | 7.44 / 0.61 | 9.13 / 0.55 | 12.87 / 0.45 | 15.86 / 0.43 |
| Improved NDT | 6.82 / 0.62 | 7.69 / 0.59 | 8.35 / 0.55 | 9.75 / 0.50 | 14.15 / 0.39 | 18.26 / 0.36 |
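The table compares the classic and improved NDT. For readers unfamiliar with NDT, the sketch below shows the core of the classic formulation introduced by Biber and Straßer for 2D scans and commonly extended to 3D: the target cloud is divided into cells, each cell is modeled by a Gaussian, and a candidate pose is scored by how well the transformed source points fit those Gaussians. It is a NumPy sketch, not the authors' improved variant; it only evaluates the score of a given pose and omits the Newton-type optimization an NDT implementation uses to search for the best pose. The cell size, `min_points`, the regularization `eps`, and the function names are assumptions.

```python
from collections import defaultdict

import numpy as np


def build_ndt_cells(target, cell_size, min_points=5, eps=1e-3):
    """Fit one Gaussian (mean, inverse covariance) to the target points in each cubic cell."""
    groups = defaultdict(list)
    for p, key in zip(target, map(tuple, np.floor(target / cell_size).astype(np.int64))):
        groups[key].append(p)
    cells = {}
    for key, pts in groups.items():
        if len(pts) < min_points:
            continue  # too few points for a stable covariance estimate
        pts = np.asarray(pts)
        cov = np.cov(pts, rowvar=False) + eps * np.eye(3)  # regularize flat or linear cells
        cells[key] = (pts.mean(axis=0), np.linalg.inv(cov))
    return cells


def ndt_score(source, cells, cell_size, R, t):
    """Negative classic NDT score of `source` moved by x -> R @ x + t (lower = better fit)."""
    moved = source @ R.T + t
    keys = map(tuple, np.floor(moved / cell_size).astype(np.int64))
    score = 0.0
    for x, key in zip(moved, keys):
        cell = cells.get(key)
        if cell is None:
            continue  # points landing in empty cells contribute nothing
        mean, cov_inv = cell
        d = x - mean
        score -= float(np.exp(-0.5 * d @ cov_inv @ d))
    return score


rng = np.random.default_rng(1)
target = rng.normal(size=(2000, 3)) * np.array([2.0, 1.0, 0.3])
true_t = np.array([0.3, -0.2, 0.0])
source = target - true_t  # the pose (I, true_t) realigns source with target
cells = build_ndt_cells(target, cell_size=1.0)
print(ndt_score(source, cells, 1.0, np.eye(3), true_t))       # aligned pose
print(ndt_score(source, cells, 1.0, np.eye(3), np.zeros(3)))  # misaligned pose
```

The aligned pose yields the lower (more negative) score; an NDT registration searches the pose space for that minimum, and the cell size plays a role similar to the voxel size in the table.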

| Downsampling Parameter (Voxel Size) | Registration Algorithm | Processing Time/s | RPE |
|---|---|---|---|
| 0.1 | Gaussian filtering + improved NDT | 20.38 | 0.32 |
| 0.1 | mean filtering + improved NDT | 20.62 | 0.36 |
| 0.1 | median filtering + improved NDT | 21.12 | 0.35 |
| 0.2 | Gaussian filtering + improved NDT | 15.54 | 0.34 |
| 0.2 | mean filtering + improved NDT | 15.83 | 0.37 |
| 0.2 | median filtering + improved NDT | 16.11 | 0.38 |
| 0.3 | Gaussian filtering + improved NDT | 10.21 | 0.45 |
| 0.3 | mean filtering + improved NDT | 10.74 | 0.48 |
| 0.3 | median filtering + improved NDT | 11.38 | 0.51 |
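The table compares Gaussian, mean, and median filtering ahead of the improved NDT. The text does not specify how these filters operate on point clouds; one common interpretation, assumed here, replaces each point by the Gaussian-weighted mean, plain mean, or coordinate-wise median of its k nearest neighbors. The NumPy sketch below uses brute-force neighbor search for brevity; `k`, `sigma`, and the function names are hypothetical, and a KD-tree would be used for real scans.

```python
import numpy as np


def knn(points, k):
    """Brute-force k nearest neighbors (O(N^2) memory; use a KD-tree for real scans)."""
    d2 = ((points[:, None, :] - points[None, :, :]) ** 2).sum(axis=-1)
    idx = np.argsort(d2, axis=1)[:, :k]  # each point is its own nearest neighbor
    return np.sqrt(np.take_along_axis(d2, idx, axis=1)), idx


def smooth_point_cloud(points, k=8, method="gaussian", sigma=0.05):
    """Replace every point by a mean, median, or Gaussian-weighted mean of its k neighbors."""
    dists, idx = knn(points, k)
    neigh = points[idx]  # (N, k, 3) neighbor coordinates
    if method == "mean":
        return neigh.mean(axis=1)
    if method == "median":
        return np.median(neigh, axis=1)  # coordinate-wise median
    if method == "gaussian":
        w = np.exp(-dists**2 / (2.0 * sigma**2))  # closer neighbors weigh more
        w /= w.sum(axis=1, keepdims=True)         # the point itself has weight 1, so the sum is never 0
        return np.einsum("nk,nkd->nd", w, neigh)
    raise ValueError(f"unknown method: {method}")


rng = np.random.default_rng(0)
xy = rng.uniform(0.0, 1.0, size=(500, 2))
noisy = np.column_stack([xy, np.zeros(500)]) + rng.normal(scale=0.01, size=(500, 3))
for method in ("gaussian", "mean", "median"):
    out = smooth_point_cloud(noisy, method=method)
    print(method, float(np.abs(out[:, 2]).mean()))  # residual noise on the z = 0 plane
```

On this synthetic noisy plane all three filters reduce the residual noise; which one performs best on real accident scans is what the registration experiment in the table measures.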

| Attribute | Aerial Photography Modeling | UAV-Borne LiDAR Modeling |
|---|---|---|
| Data acquisition | RGB images | Point cloud |
| Lighting requirement | Sufficient light | None |
| Imaging principle | Pinhole imaging | Laser reflection |
| Output | 3D model | Point cloud model |
| Color and texture | Present | Absent |
| Resolution | High | Low |
| Noise | Absent | Present |
| Cost | Low | High |

| Measurement Number | Measurement Objects | Tape Measurement Length/m | Photography Modeling Length/m | UAV-Borne LiDAR Method Length/m | Photography Modeling RE/% | UAV-Borne LiDAR Method RE/% |
|---|---|---|---|---|---|---|
| 1 | Length of the white vehicle | 4.88 | 4.83 | 4.92 | −1.02 | 0.82 |
| 2 | Width of the white vehicle | 1.78 | 1.72 | 1.83 | −3.37 | 2.81 |
| 3 | Length of the black vehicle | 4.93 | 4.77 | 4.99 | −3.25 | 1.22 |
| 4 | Width of the black vehicle | 1.84 | 1.78 | 1.80 | −3.26 | −2.17 |
| 5 | Right rear-view mirror of the white vehicle | 0.21 | 0.20 | 0.23 | −4.76 | 9.52 |
| 6 | Right door handle of the white vehicle | 0.26 | 0.25 | 0.27 | −3.85 | 3.85 |
| 7 | Right rear-view mirror of the black vehicle | 0.22 | 0.25 | 0.24 | 13.64 | 9.09 |
| 8 | Right door handle of the black vehicle | 0.28 | 0.26 | 0.30 | −7.14 | 7.14 |
| 9 | Wheel diameter | 0.65 | 0.62 | 0.66 | −4.62 | 1.54 |
| 10 | Length of box | 0.75 | 0.80 | 0.79 | 6.67 | 5.33 |
| 11 | Width of box | 0.55 | 0.52 | 0.59 | −5.45 | 7.27 |
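The relative errors (RE) in the table are consistent with RE = (L_model - L_tape) / L_tape × 100%, with the tape measurement taken as ground truth, so a negative value means the model underestimates the length. The short Python check below recomputes four rows from the lengths in the table (values copied verbatim) and rounds to two decimals as the table does; `relative_error` is a hypothetical helper name.

```python
def relative_error(model_m: float, tape_m: float) -> float:
    """Signed relative error in percent, taking the tape measurement as ground truth."""
    return (model_m - tape_m) / tape_m * 100.0


# (object, tape length, photography-model length, UAV-borne LiDAR length) in metres,
# copied from four rows of the table above
rows = [
    ("Length of the white vehicle", 4.88, 4.83, 4.92),
    ("Width of the white vehicle", 1.78, 1.72, 1.83),
    ("Right rear-view mirror of the black vehicle", 0.22, 0.25, 0.24),
    ("Width of box", 0.55, 0.52, 0.59),
]
for name, tape, photo, lidar in rows:
    print(f"{name}: photography {relative_error(photo, tape):.2f}%, LiDAR {relative_error(lidar, tape):.2f}%")
```

Because RE is normalized by the true length, a 2 cm error on a 0.22 m mirror (9.09%) weighs far more than a 4 cm error on a 4.88 m vehicle (0.82%), which explains why the small components show the largest percentages.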

| Measurement Number | Measurement Objects | Tape Measurement Length/m | Photography Modeling Length/m | UAV-Borne LiDAR Method Length/m | Photography Modeling RE/% | UAV-Borne LiDAR Method RE/% |
|---|---|---|---|---|---|---|
| 1 | Wheel diameter | 0.65 | 0.61 | 0.67 | −6.15 | 3.08 |
| 2 | Length of the vehicle | 4.93 | 4.81 | 4.99 | −2.43 | 1.22 |
| 3 | Width of the vehicle | 1.84 | 1.77 | 1.79 | −3.80 | −2.72 |
| 4 | Right rear-view mirror | 0.22 | n/a | 0.22 | n/a | 0.00 |
| 5 | Right door handle | 0.28 | n/a | 0.28 | n/a | 0.00 |
| 6 | Length of box | 0.75 | 0.81 | 0.80 | 8.00 | 6.67 |
| 7 | Width of box | 0.55 | 0.52 | 0.58 | −5.45 | 5.45 |
| 8 | Bicycle length | 1.58 | 1.63 | 1.62 | 3.16 | 2.53 |
| 9 | Bicycle wheel | 0.56 | 0.58 | 0.60 | 3.57 | 7.14 |
| 10 | Length of carton | 0.42 | 0.46 | 0.45 | 9.52 | 7.14 |
| 11 | Width of carton | 0.42 | 0.45 | 0.44 | 7.14 | 4.76 |
| 12 | Tripod length | 0.44 | 0.46 | 0.48 | 4.55 | 9.09 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Published by MDPI on behalf of the World Electric Vehicle Association. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Zhang, X.; Guan, Z.; Liu, X.; Zhang, Z. Digital Reconstruction Method for Low-Illumination Road Traffic Accident Scenes Using UAV and Auxiliary Equipment. World Electr. Veh. J. 2025, 16, 171. https://doi.org/10.3390/wevj16030171
