1. Introduction

The educational sector has recently shifted from a traditional educational paradigm toward digital education, driven by recent extraordinary events. The COVID-19 pandemic suddenly disrupted face-to-face teaching and learning activities in schools worldwide, forcing the rapid adoption of distance teaching and learning strategies [1,2]. At the same time, the new industrial era (Industry 4.0) is underway and demands professionals with skills in its pillar technologies: autonomous robots, simulation, the industrial internet of things, additive manufacturing, augmented reality, cybersecurity, cloud computing, and big data and analytics [3].

The speed at which institutions reduce the gap with the new industry and its requirements depends on the design of their curricula, which are generally renewed or updated under educational and organizational policies that can slow or accelerate such adaptation. The current context brings an opportunity to develop a novel framework that allows higher education institutions to keep pace with the speed at which technology evolves.

Historically, education has changed based on industry needs [4,5]. Naturally, institutions and colleges need to update their curricula to integrate Industry 4.0 elements such as educational technologies, methodologies, and topics that support the expertise and skills required by the industry’s changing expectations [3,6]. Moreover, integrating the educational components with Industry 4.0 has the potential to evolve into a flexible education system. Technology-based learning and pedagogical practices promote active learning and the development of critical and cognitive thinking skills by integrating technologies such as artificial intelligence (AI), data management, robots, and cloud computing, among others [7].

Therefore, proper technology implementation in education can improve teaching and learning strategies by maximizing students’ academic and practical experiences. Robotics is one of the essential activities in the industry; today, smart factories depend on automation and robotics, and human-robot collaboration is now a reality. In addition, as technology becomes affordable, it is expected that even small companies will involve robots in their processes [8], resulting in a critical need for professionals specialized in robotics.

In the trend toward a technological future, skills such as computational thinking become essential for elementary education, and robots are valuable tools in this respect. Robots enable learning by interacting with the environment and enhance the student’s behavioral, social, scientific, cognitive, and intellectual skills. This also requires teachers to specialize in the area, thus creating a feasible ecosystem to introduce robotics in high school and higher education [9]. Educational institutions have promoted educational robotics, which refers to using a robotic element as a high-value learning tool for developing essential skills such as computational thinking and coding [10].

Furthermore, technology integration in education can be enhanced by Seymour Papert’s constructionism, which holds that learning occurs when individuals actively construct their knowledge from their experiences and interactions with the environment [11]. This postulate aligns with educational robotics, where a robot in educational settings can facilitate the development of critical thinking skills and problem-solving abilities, as students are challenged to discover how to design, build, and program the devices to perform specific tasks. Moreover, studies reveal the benefits of using robots for learning in the classroom, among them: the understanding of abstract concepts related to science, technology, engineering, and mathematics (STEM), practical experience, and the acquisition of transversal skills such as team collaboration and communication [12,13]. In addition, robots are flexible devices that are reprogrammable, multi-use, and reconfigurable, allowing the creation of scenarios according to different educational contexts [14].

Navigation and localization are the main challenges in robotics, and studying such topics is fundamental for achieving autonomous robots capable of performing tasks efficiently in real-world applications. In this context, computer vision (CV) provides the graphical component of our proposed system. CV is a technology that allows the analysis and interpretation of visual information from images; it has numerous applications, such as object detection, object tracking, image recognition, and the detection of health issues, among others [15].

In this context, several works implement CV to complement educational robotics applications, e.g., a technological solution focused on learning robot navigation called the MonitoRE system [16]. The authors developed educational robotics scenarios with different levels of complexity. The application can analyze and follow the robot’s movements and superimpose figures according to the tasks; the authors conclude that this solution promotes interest through real-time activities that demonstrate the robot’s behavior. However, no specific methodology for the construction of knowledge is presented; it is left to the instructor’s consideration, which offers some flexibility.

In contrast, other works propose an education platform for teaching robotics subjects, implementing the Project-Based Learning methodology [17]. The platform uses MATLAB, Simulink, and a LEGO robot to learn about navigation in real time using CV. Students can test different algorithms or program a new one depending on the level. The authors conclude that the platform helps to create real-time applications that can interact with the students, which motivates students and lecturers in the learning and teaching task.

Moreover, a systematic review of the implementation of CV in educational robotics indicates that incorporating computer vision tasks as an educational tool in educational robots is valuable for students [18]. CV helps them to build knowledge, enhance their academic success and/or professional skills, increase their interest, enhance their communication skills, and improve their social abilities.

To capitalize on the benefits of technology in an educational approach, employing an instructional design is essential. An instructional design aims to develop instructional specifications and (problem-centered) tasks to establish effective methods to construct the desired changes in the learner’s knowledge and skills [19,20,21].

Thus, we propose an Educational Mechatronics (EM) training system based on computer vision that serves as a positioning system for mobile robots in the 2D plane. The CV algorithm retrieves the position of the LEGO EV3 mobile robot during the pedagogical experiments and provides its position measurements. This information is then used as input for the levels of the overall educational framework and its instructional design, focusing on teaching and learning robot navigation. Compared to the reported scientific literature, the novelty of this work lies in developing an educational scenario that extends the Educational Mechatronics Conceptual Framework (EMCF).

2. Extended Educational Mechatronics Conceptual Framework

In order to transform educational practice, promoting learning spaces mediated by digital tools to generate educational experiences that place the learner as an active agent in their learning process is critical. Therefore, this work presents an extension of the EMCF, previously presented in [

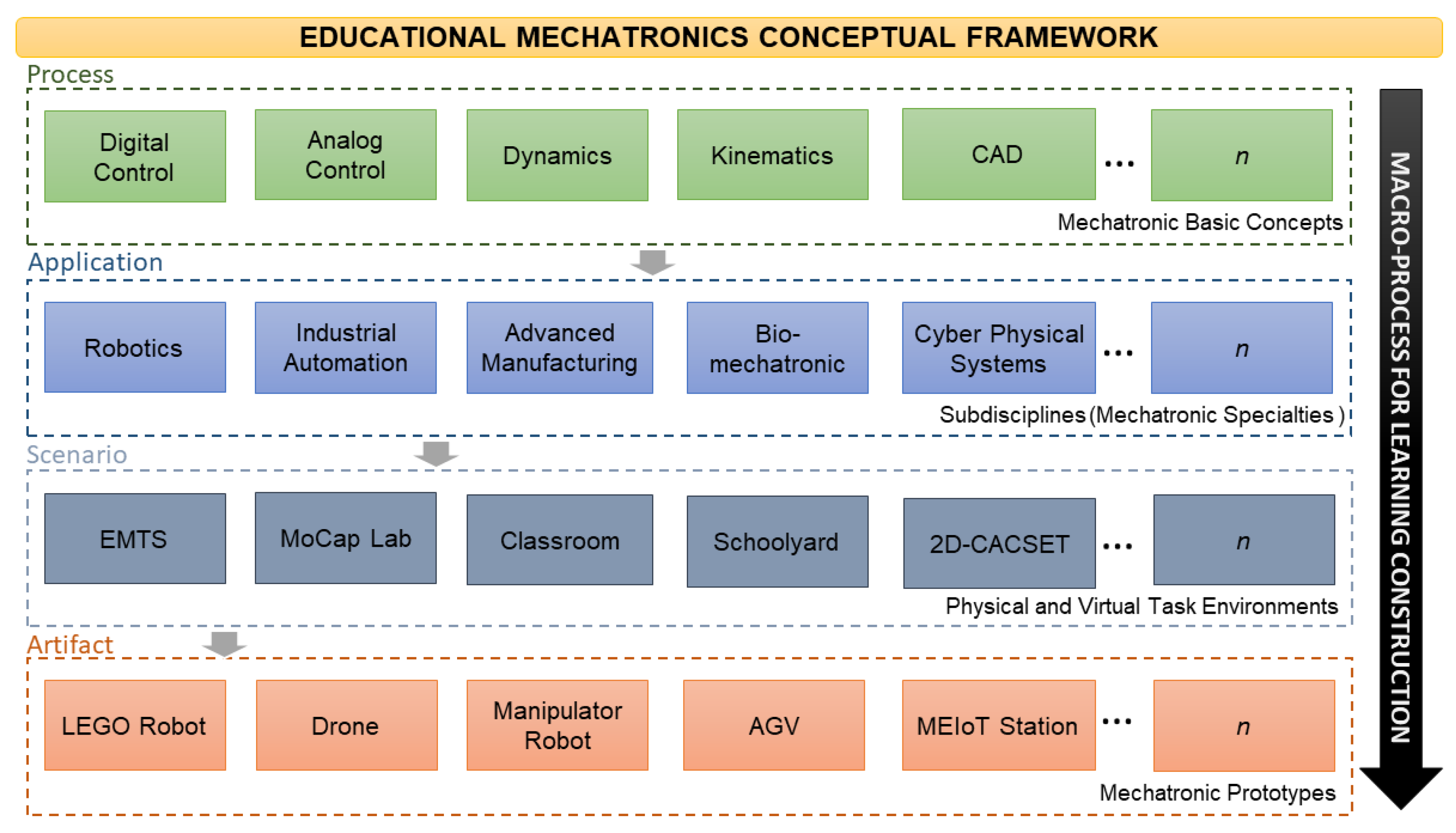

22], by extending the subprocess called EMCF Learning Construction Methodology (EMCF-LCM) to consider the learning scenario. This extended EMCF leads the student to understand abstract concepts from which mechatronics engineering tools are built via four reference perspectives; process, application, scenario, and artifact.

The process perspective is oriented to mechatronics’ basic concepts as a process. The application perspective includes all the applications derived from the basic concepts of mechatronics. The scenario perspective contextualizes the application in terms of the physical environment, such as a laboratory and existing academic spaces, or a virtual environment, such as a remote laboratory or a digital twin lab. Finally, as a result of the integration of these perspectives, mechatronics artifacts in the specific context are obtained; this is called the artifact perspective, which also has the potential for reuse within semantic mechatronics components. The extended EMCF perspectives are shown in Figure 1.

The EMCF-LCM conducts students through three learning construction levels: concrete, graphic, and abstract. The concrete level refers to manipulating and experimenting with objects (mechatronics artifacts). At the graphic level, students relate the elements of reality (the concrete level) with symbolic representations. Finally, at the abstract level, the student associates the concrete and graphic levels with abstract concepts; this learning is detached from physical reality and involves a higher level of abstraction [22].

It is essential to mention that we have previously used and developed several artifacts or mechatronic prototypes aligned within the EMCF, for example:

a robotic arm focused on the construction of the mechatronic concept of the workspace of a robot manipulator [23];

a weather station focused on the construction of the mechatronic concepts of linear regression and quadratic and cubic approximations [24];

a drone focused on the construction of the mechatronic concept of drone navigation by waypoints [25];

an electronic Tag focused on the construction of the two-dimensional space concept [26,27].

Besides, these artifacts can be used in different robotic scenarios, in the sense of [16], such as the MoCap laboratory, a remote lab, a classroom, and the schoolyard. We have also previously developed and used a learning scenario aligned with the EMCF: a two-dimensional Cartesian coordinate system educational toolkit (2D-CACSET) [26,27]. Since one of our research aims is to promote sustainable educational practices, we have decided to redesign the 2D-CACSET from a sustainable perspective: economically, environmentally, and socially [28].

3. Implementation of the Educational Mechatronics Training System

The 2D-CACSET, presented in [26], is the basis of the new robotic scenario, the Educational Mechatronics Training System (EMTS). The 2D-CACSET requires a 2D board, a laptop, and five electronic devices called Tags, each composed of one Ultra-Wide Band (UWB) sensor, a lithium-ion battery, and a 3D-printed plastic enclosure. Its approximate production cost is $800 USD. To make the EMTS economically viable, a webcam with CV technology is used instead of the UWB sensor to locate an object in the 2D plane; this reduces the production cost. Moreover, to make the EMTS environmentally friendly, we decided to avoid using lithium-ion batteries. Following these two design guidelines, we can generate a more significant social impact by proposing a robotic scenario that is more affordable for all, while raising awareness of people’s needs. The EMTS can be used anywhere electrical power is available; therefore, it does not require a special facility-based training environment that must keep up with technological changes.

The EM training system is focused on promoting active learning in the navigation of mobile robots by means of an observable scenario supported by a camera. The EM training system comprises hardware (laptop, camera, structure, and whiteboard) and software (the EM training system graphical user interface). A LEGO EV3 mobile robot is included to demonstrate the potential of the EM training system (see Figure 2).

3.1. The EM Training System Hardware

The EM training system enables a localization method implemented through CV algorithms and techniques, providing real-time data, tracking the trajectory of a mobile robot, estimating angles, and finally employing this information in instructional activities. The minimum hardware requirements for the laptop running these algorithms are 512 MB of RAM and a 1 GHz CPU. The camera is the FIT0729 model, an 8000 K pixel driver-free USB camera with up to

resolution, and a

mm lens. Thanks to a structure with tripods, it is positioned at a height of 169 cm to obtain a complete view of the workspace area contained in a whiteboard (see Figure 3). The LEGO EV3 mobile robot is placed on the whiteboard workspace to conduct the instructional activities.

3.2. EM Training System Software

The EM Training System graphical user interface is developed using PyQt5 (see Figure 4); combined with the OpenCV features, it complements the proposed overall educational framework. PyQt5 is one of the most popular libraries for developing modern desktop and mobile applications; it offers a high level of design and an extensive list of useful widgets to implement in a graphical interface.

This interface application enables users to capture the robot’s movements as data (concrete level), visualize the trajectory and coordinates on the screen (graphic level), and finally export the data for further analysis and calculations (abstract level). Various graphical elements and data are displayed on the image depending on the selected option. The image processing techniques and methods used in this work are described below.

3.2.1. Tracking and Localization Using Computer Vision

The proposed architecture corresponds to an indoor localization solution based on a static 2D camera. This configuration provides a viable solution for scenarios demanding high-accuracy positioning in small spaces using traditional image analysis [29].

Figure 5 shows the complete image processing method to localize an object (the LEGO EV3 mobile robot) using the OpenCV library in the Python programming language. OpenCV is a powerful tool that includes most of the popular real-time CV algorithms oriented to efficient image processing. Moreover, the NumPy library handles the array and matrix operations in the image processing procedure.

3.2.2. Camera Calibration

Camera calibration is necessary to estimate the parameters of the camera lens, since lenses introduce two main optical distortions: radial and tangential. Radial distortion originates from the shape of the lens and causes straight lines to appear curved. In contrast, tangential distortion arises when the lens is not mounted perfectly parallel to the image sensor during manufacturing, which deforms the captured image so that some objects appear closer than expected. Therefore, calibrating the camera allows us to minimize the distortions and measure the distance and the size of an object in the image [30]. The implemented camera calibration technique requires capturing a set of calibration templates (chessboard pattern) with known square sizes from different orientations, detecting the square corners in the chessboard, and finally estimating the distortion coefficients and the intrinsic and extrinsic parameters of the camera [31,32]. These data enable us to undistort images and compute the real-world coordinates of objects.
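The two distortion types described above can be illustrated with a minimal sketch of the radial and tangential (Brown-Conrady) model in the coefficient ordering $(k_1, k_2, p_1, p_2, k_3)$ used by OpenCV. This is not the authors’ calibration code: the function names and the coefficient values are hypothetical, chosen only to show how a normalized image point is distorted and then recovered by fixed-point iteration.

```python
def distort(x, y, k1, k2, p1, p2, k3=0.0):
    """Apply radial and tangential distortion to normalized coordinates (x, y)."""
    r2 = x * x + y * y
    radial = 1.0 + k1 * r2 + k2 * r2 ** 2 + k3 * r2 ** 3
    x_d = x * radial + 2.0 * p1 * x * y + p2 * (r2 + 2.0 * x * x)
    y_d = y * radial + p1 * (r2 + 2.0 * y * y) + 2.0 * p2 * x * y
    return x_d, y_d


def undistort(x_d, y_d, k1, k2, p1, p2, k3=0.0, iterations=20):
    """Invert the model by fixed-point iteration (adequate for mild distortion)."""
    x, y = x_d, y_d
    for _ in range(iterations):
        r2 = x * x + y * y
        radial = 1.0 + k1 * r2 + k2 * r2 ** 2 + k3 * r2 ** 3
        dx = 2.0 * p1 * x * y + p2 * (r2 + 2.0 * x * x)
        dy = p1 * (r2 + 2.0 * y * y) + 2.0 * p2 * x * y
        x = (x_d - dx) / radial
        y = (y_d - dy) / radial
    return x, y


# Hypothetical coefficients, NOT the values obtained in this work.
COEFFS = dict(k1=-0.1, k2=0.01, p1=0.001, p2=0.001, k3=0.0)

distorted = distort(0.3, 0.2, **COEFFS)
recovered = undistort(*distorted, **COEFFS)
```

In practice, the coefficients would come from the chessboard calibration, and the undistortion would be delegated to the OpenCV library rather than implemented by hand.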

A chessboard pattern containing 9 × 6 squares with

cm in size, printed on A4 paper, was employed to calibrate the camera. Then, forty images at different orientations were captured from the fixed-position camera. This procedure is depicted in Figure 6.

The obtained values of the internal and external parameters of the camera are:

where A is the intrinsic parameters matrix, with $f_x$ and $f_y$ the focal lengths expressed in pixel units and $(c_x, c_y)$ the coordinates of the principal point, usually at the image center. The obtained distortion coefficients vector is:

These parameters are used in the subsequent processing phases.

3.2.3. Object Detection

The elements to be detected are the blue object placed at the front of the robot and the green one at the back (see Figure 7). The object detection process begins by capturing the images (Figure 8a). Then, taking advantage of the physical characteristics of the Tags, we employ a color segmentation process, which extracts the pixels matching a specific color in a given color model. Common color models include RGB (Red, Green, and Blue), HSL (Hue, Saturation, and Lightness), and HSV (Hue, Saturation, and Value). The HSV color model was employed to detect the Tags because segmentation in this model allows easier processing [33]. The HSV parameters are set to segment the blue and green colors in two different channels, as shown in Figure 8b.
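As a minimal sketch of HSV color segmentation, the following example classifies RGB pixels as blue or green using only the Python standard library. The hue windows and the saturation and value thresholds are assumptions chosen for illustration, not the parameters used in this work, and `classify_pixel` and `segment` are hypothetical helper names.

```python
import colorsys

# Hypothetical hue windows in degrees (0-360); real values depend on the
# markers and the lighting and must be tuned experimentally.
HUE_RANGES = {"green": (90.0, 150.0), "blue": (200.0, 260.0)}


def classify_pixel(r, g, b, min_saturation=0.4, min_value=0.3):
    """Return 'blue', 'green', or None for an 8-bit RGB pixel."""
    h, s, v = colorsys.rgb_to_hsv(r / 255.0, g / 255.0, b / 255.0)
    if s < min_saturation or v < min_value:
        return None  # too gray or too dark to be a colored marker
    hue_deg = h * 360.0
    for name, (low, high) in HUE_RANGES.items():
        if low <= hue_deg <= high:
            return name
    return None


def segment(image, color):
    """Build a binary mask (list of rows) marking pixels of the given color."""
    return [[1 if classify_pixel(*px) == color else 0 for px in row]
            for row in image]


# Tiny 2 x 2 test image: blue, green / white, red.
image = [[(0, 0, 255), (0, 255, 0)],
         [(255, 255, 255), (255, 0, 0)]]
blue_mask = segment(image, "blue")
green_mask = segment(image, "green")
```

Note that OpenCV stores the hue of 8-bit images in the range 0 to 179, so thresholds expressed in degrees would need to be halved when working with that library.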

The next part of the process employs Canny detection, an algorithm widely used in image processing for object edge detection [34]. The Canny operator selects edge points using a threshold approach; adjusting these thresholds changes which object edges are detected. Before applying Canny detection, the image is converted to grayscale, so that the algorithm operates on a gray segmented image containing a single figure (the Tags). Then, the thresholds highlight the edges of the Tags (Figure 8c), resulting in the detection of the object and, finally, the assignment of a Region of Interest (RoI), as depicted in Figure 8d.
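The last step, assigning a RoI to the detected object, can be sketched as the bounding box of the nonzero pixels in a binary mask. The sketch below does not implement Canny detection; it assumes that a mask of the detected Tag is already available, and `bounding_roi` and `roi_center` are hypothetical helper names.

```python
def bounding_roi(mask):
    """Return (x, y, width, height) enclosing all nonzero pixels, or None."""
    rows = [y for y, row in enumerate(mask) if any(row)]
    cols = [x for row in mask for x, value in enumerate(row) if value]
    if not rows:
        return None  # nothing detected in this frame
    x0, x1 = min(cols), max(cols)
    y0, y1 = min(rows), max(rows)
    return x0, y0, x1 - x0 + 1, y1 - y0 + 1


def roi_center(roi):
    """Return the pixel coordinates (u, v) of the center of the RoI."""
    x, y, width, height = roi
    return x + (width - 1) / 2.0, y + (height - 1) / 2.0


# Hypothetical 5 x 6 mask with a detected blob spanning rows 1-2, columns 2-4.
mask = [
    [0, 0, 0, 0, 0, 0],
    [0, 0, 1, 1, 1, 0],
    [0, 0, 1, 1, 0, 0],
    [0, 0, 0, 0, 0, 0],
    [0, 0, 0, 0, 0, 0],
]
roi = bounding_roi(mask)
center = roi_center(roi)
```

The center of the RoI gives one pixel coordinate pair per Tag, which is the kind of input a pixel-to-world conversion needs.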

3.3. Object Real-World Coordinates

To estimate the coordinates in the real world, it is necessary to relate them to the coordinates of their corresponding 2D image projections. That is, given the pixel coordinates $(u, v)$, obtain their three-dimensional equivalent $(X, Y, Z)$. Figure 9 represents the pinhole camera model.

Equation (2) is used to calculate the real-world coordinates of an object:

$$\begin{bmatrix} X \\ Y \\ Z \end{bmatrix} = R^{-1}\left( z\, A^{-1} \begin{bmatrix} u \\ v \\ 1 \end{bmatrix} - T \right) \qquad (2)$$

where R is the rotation matrix of the camera, T is the translation vector of the camera, A is the matrix of intrinsic parameters defined in Equation (1), z is the coordinate along the optical axis of the camera, and $(u, v)$ are the coordinates of the projection points (pixels) in the image.

OpenCV library functions allow the user to get the camera’s extrinsic parameters (R and T) when the LEGO EV3 mobile robot is placed at the center of the camera’s optical axis . In this case, the z values equal the object’s height. In this application, the height of the LEGO EV3 mobile robot is 17 cm; however, when , a scaling factor is applied to compute the perspective.
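A minimal sketch of this pixel-to-world conversion under the pinhole model follows. It assumes a zero-skew intrinsic matrix described by $(f_x, f_y, c_x, c_y)$, treats $z$ as the depth of the point along the optical axis in the camera frame, and inverts the relation $z\,[u, v, 1]^T = A\,(R\,[X, Y, Z]^T + T)$. The function name and all numeric values are hypothetical and are not the calibration results of this work.

```python
def pixel_to_world(u, v, z, intrinsics, R, T):
    """Back-project pixel (u, v) at depth z to world coordinates (X, Y, Z)."""
    fx, fy, cx, cy = intrinsics
    # A^{-1} [u, v, 1]^T for a zero-skew intrinsic matrix.
    ray = ((u - cx) / fx, (v - cy) / fy, 1.0)
    # Point in the camera frame minus the translation.
    cam = [z * r - t for r, t in zip(ray, T)]
    # For a rotation matrix, R^{-1} equals its transpose.
    return tuple(sum(R[row][col] * cam[row] for row in range(3))
                 for col in range(3))


# Hypothetical camera: 1000 px focal length, principal point (640, 360).
INTRINSICS = (1000.0, 1000.0, 640.0, 360.0)
IDENTITY = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
ROT_Z_90 = [[0.0, -1.0, 0.0], [1.0, 0.0, 0.0], [0.0, 0.0, 1.0]]
NO_SHIFT = (0.0, 0.0, 0.0)

# A pixel 100 px to the right of the principal point, seen at 1.5 m depth.
aligned = pixel_to_world(740.0, 360.0, 1.5, INTRINSICS, IDENTITY, NO_SHIFT)
rotated = pixel_to_world(740.0, 360.0, 1.5, INTRINSICS, ROT_Z_90, NO_SHIFT)
```

With an identity rotation and zero translation, the result is simply the ray through the pixel scaled by the depth; the rotated case shows how the extrinsic parameters re-express the same point in the world frame.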

3.4. Object Tracking

To avoid having many points displayed on the screen, only the coordinates where edges and corners are formed in the LEGO EV3 mobile robot’s path are of interest in this application. The implemented tracking method is the classic Shi-Tomasi method [35] (see Figure 10).

The process to track the object starts by storing the data of the robot path (Figure 10a); this feature is activated from the user interface (explained in the next section). The captured data are drawn in a new image that contains only the path data (Figure 10b). Later, the Shi-Tomasi method is applied to the new image, returning only the points where corners and edges are formed (Figure 10c). Finally, those points are correlated to the coordinates in the real world and displayed on the screen (Figure 10c).
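The Shi-Tomasi criterion scores each pixel by the smaller eigenvalue of the local gradient structure tensor, which is large only where the intensity changes in two directions. The sketch below is a simplified pure-Python illustration of that score on a small synthetic image; it is not the implementation used in this work, where a library routine such as OpenCV’s `goodFeaturesToTrack` would normally be used. The window size, threshold, and helper names are assumptions.

```python
import math


def min_eigenvalue_score(image, y, x, half_window=1):
    """Smaller eigenvalue of the structure tensor summed over a square window."""
    a = b = c = 0.0
    for j in range(y - half_window, y + half_window + 1):
        for i in range(x - half_window, x + half_window + 1):
            ix = (image[j][i + 1] - image[j][i - 1]) / 2.0  # horizontal gradient
            iy = (image[j + 1][i] - image[j - 1][i]) / 2.0  # vertical gradient
            a += ix * ix
            b += ix * iy
            c += iy * iy
    return (a + c) / 2.0 - math.sqrt(((a - c) / 2.0) ** 2 + b * b)


def corner_points(image, threshold=0.5, half_window=1):
    """Return (x, y) pixels whose score exceeds the (hypothetical) threshold."""
    margin = half_window + 1
    height, width = len(image), len(image[0])
    return [(x, y)
            for y in range(margin, height - margin)
            for x in range(margin, width - margin)
            if min_eigenvalue_score(image, y, x, half_window) > threshold]


# Synthetic 16 x 16 image with a filled square spanning rows and columns 4-11.
image = [[1.0 if 4 <= y <= 11 and 4 <= x <= 11 else 0.0 for x in range(16)]
         for y in range(16)]
corners = corner_points(image)
corner_score = min_eigenvalue_score(image, 4, 4)
edge_score = min_eigenvalue_score(image, 4, 8)
```

Along a straight edge the gradient has a single direction, so the smaller eigenvalue vanishes and the point is discarded; at a corner both eigenvalues are positive and the point is kept.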

3.5. LEGO EV3 Mobile Robot

Educational robots benefit education, and one of the most popular mobile robots is the LEGO robot. Many educational projects employ these robots to explore diverse engineering subjects due to their high configuration flexibility. In this work, LEGO MINDSTORMS® EV3 is used with a variant of the building instructions for the Robot Educator model, including two colored elements for detection purposes, as shown in Figure 7.

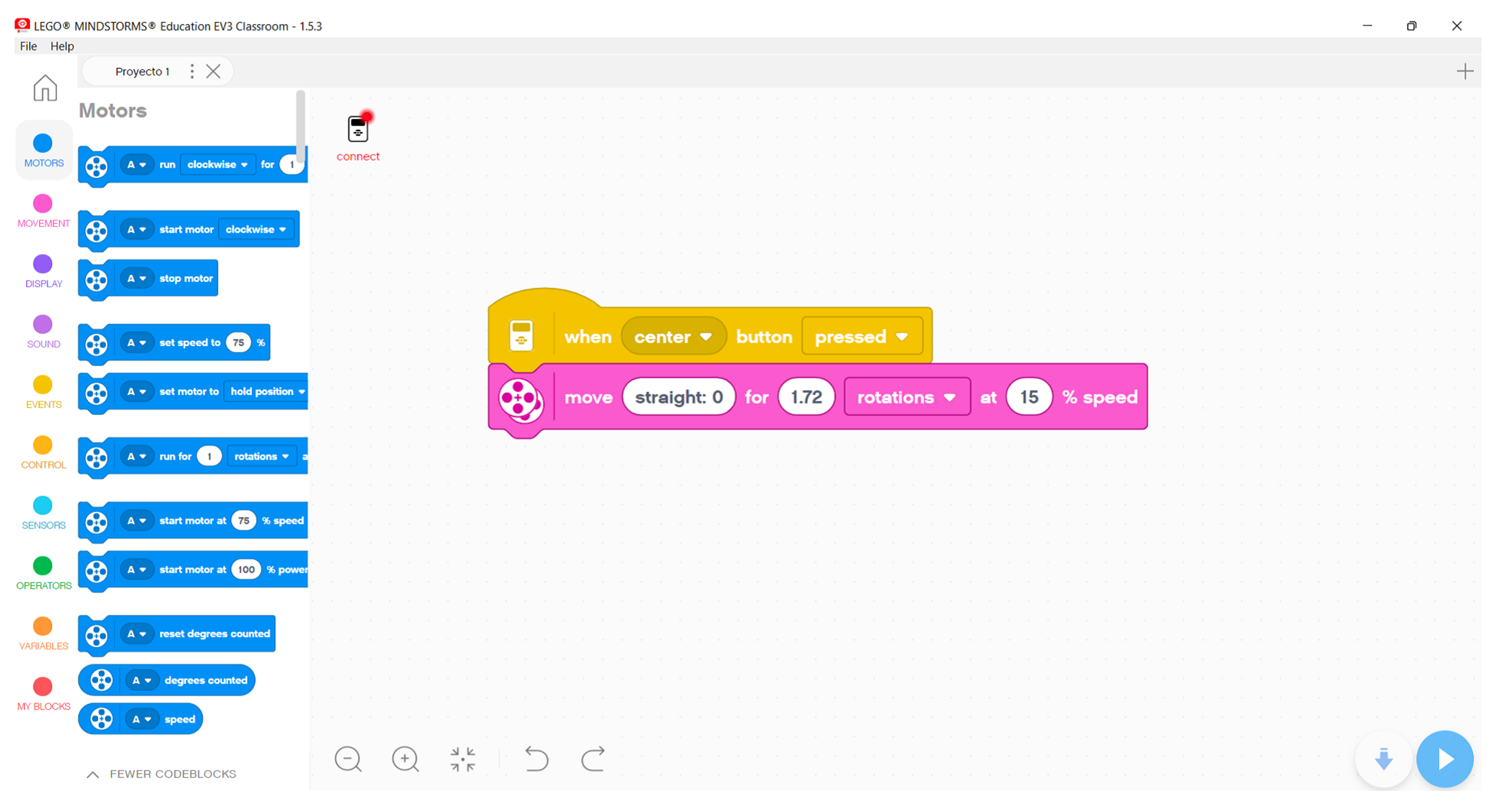

The LEGO EV3 mobile robot is programmed using EV3 Classroom LEGO® Education, a standard LEGO icon-based software that creates executable code for the EV3 running the original LEGO firmware. It is worth highlighting that, although EV3 Classroom LEGO® Education is employed for programming, any other programming tool can be used.

The practices and their corresponding instructional design are described in the following section.

4. Research Design Including the EM Training System

The proposed research design is based on the methodology presented in [36], which requires knowing the population and defining a sample to collect data. Due to the nature of the EMTS, the population could include all the engineering students from any university, whereas the sample could include first-year mechatronics engineering students. Once the sample is defined, two groups are formed: experimental and control.

For the control group, the conventional teaching methodology could be applied; this includes using the LEGO EV3 mobile robot, a whiteboard, and a projector as didactic resources. For the experimental group, the proposed extended EMCF could be applied; this includes using the LEGO EV3 mobile robot and the EMTS, together with the practice developed in the instructional design (see Section 4.1). The whole activity requires a class session of 60 min. Lastly, the students are asked to answer questions associated with one analytical thinking element, in a process similar to [36]. The complete methodology depicted in Figure 11 is presented only for replicability purposes, since this work is focused on the development of the EMTS.

4.1. Instructional Design Including the EM Training System

This section describes the instructional design focused on constructing a mechatronic concept: the line segment involved in mobile robotics. The instructional design is aligned with the extended EMCF, which involves four perspective entities: Dynamics (process) + Robotics (application) + EM Training System (scenario) + LEGO EV3 mobile robot (artifact). It consists of hands-on learning practices for the three EMCF levels with the selected perspectives, developed in the subsequent subsections.

To conduct the practices, the student must initialize the EM training system user interface and place the LEGO EV3 mobile robot in the workspace. The system will localize the robot and add an arrow indicating the robot’s orientation; then, in the practices menu, select the practice to be conducted, as explained below.
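One simple way to obtain the orientation arrow is to take the direction from the rear (green) marker to the front (blue) marker. The sketch below assumes that both marker positions are already expressed in real-world coordinates with the y axis pointing up; the function name and the sample coordinates are hypothetical.

```python
import math


def robot_pose(front, back):
    """Return the robot center and heading (degrees) from two marker positions."""
    center = ((front[0] + back[0]) / 2.0, (front[1] + back[1]) / 2.0)
    heading = math.degrees(math.atan2(front[1] - back[1], front[0] - back[0]))
    return center, heading


# Hypothetical marker positions in centimeters.
center_a, heading_a = robot_pose(front=(10.0, 0.0), back=(0.0, 0.0))
center_b, heading_b = robot_pose(front=(0.0, 10.0), back=(0.0, 0.0))
```

If the positions were given in image coordinates instead, where the vertical axis points down, the sign of the vertical difference would have to be flipped.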

Practice: Line Segment

This practice will help the student understand how a LEGO EV3 mobile robot executes movements in a line segment from point P to point Q. To achieve this, the EMCF-LCM is employed as follows:

The result of these instructions is shown in Figure 13. Moreover, if necessary, the instructor and/or students can download the CSV file and open it using external tools (such as Matplotlib for Python or MATLAB). The position values x and y collected from the Tag can be plotted in MATLAB, as depicted in Figure 14.

Figure 13.

Set of instructions for graphic level.


At the abstract level, with the assistance of the instructor, the student relates the symbols assigned at the graphic level to the line segment concept as follows:

Line segment: Let P and Q be two points in two-dimensional space with coordinates $(x_1, y_1)$ and $(x_2, y_2)$. The portion $\overline{PQ}$ is called the line segment, and its length is the distance between P and Q, which can be calculated according to Equation (3):

$$d(P, Q) = \sqrt{(x_2 - x_1)^2 + (y_2 - y_1)^2} \qquad (3)$$

Substituting the coordinates of P and Q captured at the graphic level into Equation (3) yields a line segment of 30 cm, which means that the LEGO EV3 mobile robot traveled that distance when conducting the concrete level.
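The length of a line segment is the Euclidean distance between its endpoints, which can be checked with a short sketch. The coordinates of P and Q below are hypothetical values chosen to give a 30 cm segment; they are not the data captured in the practice.

```python
import math


def segment_length(p, q):
    """Euclidean distance between two points given as (x, y) tuples."""
    return math.hypot(q[0] - p[0], q[1] - p[1])


# Hypothetical endpoints in centimeters.
P = (-15.0, 0.0)
Q = (15.0, 0.0)
length_cm = segment_length(P, Q)

# The same formula applies to segments that are not axis-aligned.
diagonal_cm = segment_length((0.0, 0.0), (18.0, 24.0))
```

Students could apply the same function to the first and last rows of the exported CSV file to compare the computed distance with the one measured on the board.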

The final part of the practice is to replicate the initial movement from point P to Q by programming; therefore, the student has to calculate the revolutions that the LEGO EV3 mobile robot must execute to travel the previously estimated line segment. The wheel diameter is 56 mm; therefore, the circumference is $\pi \times 5.6 \approx 17.59$ cm, as depicted in Figure 15.

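Since the LEGO EV3 mobile robot travels one wheel circumference per revolution, the number of revolutions needed to cover the line segment is obtained by dividing the distance by the circumference, as in Equation (5):

$$\text{revolutions} = \frac{30\ \text{cm}}{\pi \times 5.6\ \text{cm}} \approx 1.71 \qquad (5)$$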
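The arithmetic of this step can be reproduced with a short sketch, assuming that 56 mm is the wheel diameter and that the wheels roll without slipping. The function names are hypothetical.

```python
import math

WHEEL_DIAMETER_CM = 5.6  # 56 mm wheel, taken here as the diameter


def wheel_circumference(diameter_cm=WHEEL_DIAMETER_CM):
    """Distance traveled per wheel revolution, in centimeters."""
    return math.pi * diameter_cm


def revolutions_for(distance_cm, diameter_cm=WHEEL_DIAMETER_CM):
    """Wheel revolutions needed to travel distance_cm without slipping."""
    return distance_cm / wheel_circumference(diameter_cm)


circumference_cm = wheel_circumference()
revolutions = revolutions_for(30.0)
motor_degrees = revolutions * 360.0  # useful when the motor block takes degrees
```

For the 30 cm segment this gives a circumference of about 17.59 cm and about 1.71 revolutions, which the student can enter in the motor block of the program.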

The developed program for the LEGO EV3 mobile robot is depicted in Figure 16.

To validate the program, the student positions the LEGO EV3 mobile robot over point P, pointing in the direction of Q, and triggers the “Capture Data” button. Then, the student executes the program by pressing the center button; the robot performs the programmed revolutions and should reach point Q according to the calculations. This sequence is available at https://tinyurl.com/2az9s9j9 (accessed on 29 October 2022).

5. Results

Three static characteristics were considered to evaluate the performance of the EMTS: precision, accuracy, and resolution. As explained before, for given pixel coordinates u and v, it is possible to estimate the real-world coordinates by employing Equation (2). These data make it possible to determine the static characteristics mentioned above.

In this context, measurements were conducted employing the graduated table depicted in Figure 17; the pitch between circles is fifteen centimeters, and the center of the image is taken as the $(0, 0)$ coordinate point. The measurements consist of positioning the LEGO EV3 mobile robot at defined X and Y coordinate points (each blue circle) and capturing one hundred readings for each position.

The results of the captured data can be seen in Figure 18 and Figure 19 for the EMTS accuracy assessment and the precision assessment, respectively.

5.1. EM Training System Accuracy Assessment

For the accuracy assessment, the difference between the captured measurements and the actual coordinates was calculated at each point, using the statistical mean of the readings taken at that point. Figure 18 shows in more detail how the errors differ depending on the coordinates.

The results show that the furthest value recorded for the

X and

Y axis are

cm and

cm, respectively, according to the robot’s current position. Thus, the accuracy is equivalent to having a maximum error of

cm in determining the position. The peaks in Figure 18 are caused by measurement uncertainty arising from the camera’s fixed position and the height of the LEGO EV3 mobile robot at different places on the board (camera perspective), which produces a larger error in estimating the Tag position.
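The accuracy computation described in this subsection, the mean of the readings at a point minus the ground-truth coordinates, can be sketched as follows. The readings and the ground-truth point are hypothetical illustrations, not measured data, and `mean_error` is a hypothetical helper name.

```python
from statistics import mean


def mean_error(readings, truth):
    """Mean reading minus ground truth, per axis, for one board position."""
    xs = [x for x, _ in readings]
    ys = [y for _, y in readings]
    return mean(xs) - truth[0], mean(ys) - truth[1]


# Hypothetical readings (cm) for a marker placed at (15, 30) on the board.
readings = [(15.2, 29.9), (15.4, 30.1), (15.3, 30.0)]
error_x, error_y = mean_error(readings, truth=(15.0, 30.0))
```

Repeating this for every circle of the graduated table and taking the largest absolute error per axis would give the kind of maximum error reported for the accuracy.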

5.2. EM Training System Precision Assessment

For precision assessment, the standard deviation is applied to the one hundred measurements to determine the variability of each measurement vs. the ground truth values.

Moreover, the variability of the dataset is depicted in detail in Figure 19.

The results for the precision assessment show a maximum variance value for the

X and

Y axis of

cm and

cm, respectively. Thus, the precision of the EMTS is equivalent to

cm. Several factors could explain the higher variance values recorded in Figure 19, such as the perspective of the Tag object at specific places on the board and the ambient light; these issues affect the determination of the RoI and thus increase the variance of the position estimate.
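The precision computation, the spread of repeated readings at a fixed position, can be sketched with the sample standard deviation from the Python standard library. The readings are hypothetical and `axis_spread` is a hypothetical helper name; whether the sample or the population formula is preferred is a design choice not stated in the text.

```python
from statistics import stdev


def axis_spread(readings):
    """Sample standard deviation of repeated readings, per axis."""
    xs = [x for x, _ in readings]
    ys = [y for _, y in readings]
    return stdev(xs), stdev(ys)


# Hypothetical repeated readings (cm) at one board position.
readings = [(1.0, 30.0), (2.0, 30.0), (3.0, 30.0), (4.0, 30.0), (5.0, 30.0)]
spread_x, spread_y = axis_spread(readings)
```

A constant axis yields zero spread, while the scattered axis yields a positive value; the largest spread over all board positions would summarize the precision.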

5.3. EM Training System Resolution Assessment

The sensor’s resolution is the smallest change in the physical variable that the system can detect. The relationship between the field of view (FOV) and the image projection is depicted in Figure 20.

Then, the used image resolution in the camera is defined as

, while the focal length

is obtained in the intrinsic camera matrix calculated in Equation (

1), then, the horizontal and vertical angular field of view is calculated as follows:

Now, it is possible to solve for the field of view with a defined distance of 169 cm as follows:

Finally, the pixels per meter (PPM) concept is used to determine the EM training system resolution. The PPM value defines the number of units of pixels for each meter of the projection image that the camera is capturing.

Therefore, the results for the resolution assessment show px/m as horizontal and vertical resolution values, respectively. This means a resolution of 0.0011 m (0.11 cm), which is acceptable for the activities of the EM training system.
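The chain from focal length to resolution can be sketched as follows: the angular FOV follows from the image size and the focal length in pixels, the linear FOV from the camera distance, and the PPM from dividing the image size by the linear FOV. Only the 169 cm distance comes from the text; the image width and the focal length below are hypothetical, and the function names are assumptions.

```python
import math


def angular_fov(size_px, focal_px):
    """Angular field of view (radians) along one image axis."""
    return 2.0 * math.atan(size_px / (2.0 * focal_px))


def linear_fov(angle_rad, distance_m):
    """Width of the observed plane (meters) at the given distance."""
    return 2.0 * distance_m * math.tan(angle_rad / 2.0)


def pixels_per_meter(size_px, fov_m):
    """Number of pixels covering one meter of the observed plane."""
    return size_px / fov_m


CAMERA_HEIGHT_M = 1.69   # camera distance stated in the text
WIDTH_PX = 1280          # hypothetical image width
FOCAL_PX = 1500.0        # hypothetical focal length in pixels

fov_angle = angular_fov(WIDTH_PX, FOCAL_PX)
fov_width_m = linear_fov(fov_angle, CAMERA_HEIGHT_M)
ppm = pixels_per_meter(WIDTH_PX, fov_width_m)
resolution_cm = 100.0 / ppm  # size of one pixel on the board, in centimeters
```

Under this model the PPM reduces to the focal length in pixels divided by the camera distance, so the resolution improves as the camera is lowered or as a longer focal length is used.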

6. Discussion

Industry 4.0 is a reality nowadays; industries are looking to hire well-trained applicants with the skills and abilities to face the challenges involved in adapting to the significant changes that Industry 4.0 brings. In response to these new demands, and because of the confinement imposed worldwide by COVID-19, education has had to evolve quickly in the last three years, changing the teaching-learning process from a face-to-face school system to digital education [1,2].

Most state-of-the-art developments focus on transforming a physical laboratory into a remote, online, mobile, or virtual laboratory, among others [6,8,10]. Such work relies on adding an element, like an artifact or an Internet of Things device, to the in-place or offline laboratory to make that change. Many other works develop educational kits, new kinds of robots, or innovations on existing ones such as drones, manipulators, or mobile robots [16,17,18]. In previous works where the EMCF methodology was applied, the mechatronic concept under construction was different in each case, e.g., navigation [25], linear regression and quadratic and cubic approximations [24], and the Cartesian coordinate system [26,27]. All these works were designed to generate educational mechatronic toolkits that enable the construction of different mechatronics concepts by interacting with different artifacts, even from home. They also support expansion and reuse. Some of them were adapted to be used as part of a remote laboratory [37], and they are currently in use in several institutions.

This work goes a step further, adding not only the artifacts and a new scenario but also a methodology, the extended EMCF, focused on the teaching-learning process, which can lead the student to appropriate complex robotics concepts through three levels of construction of mechatronic concepts. Without proper guidance and structured practices, students merely have another toy to play with and do not take advantage of what technology offers through these developed scenarios, artifacts, designed practices, and methodology. Moreover, it is crucial to design an effective curriculum that motivates all students to learn and continue to learn [38]. The authors of [38] presented a research model that explains students’ behavioral intention to learn AI and its introduction into the K-12 curriculum to improve the AI knowledge, skills, and attitudes demanded by Industry 4.0. Their findings indicated that teachers or curriculum designers should choose available applications such as Google Translate and Teachable Machine to foster students’ intention to learn about AI. Hence the importance of creating new applications and platforms that promote the appropriation of the technological advances driven by Industry 4.0.

Therefore, the EMTS based on computer vision for mobile robots aims to integrate Industry 4.0 elements into the curriculum of different bachelor’s programs such as Mechatronics, bringing with it the development of the abilities and skills demanded by the industry these days, as mentioned in [3,7]. Students need proper education and hands-on practices that simulate the real world in order to apply what they are learning in the classroom. Besides, the EMTS can be used to evaluate how well the LEGO mobile robot performs the task; moreover, the programming skills of the participant can be measured quantitatively and not only qualitatively, as is usually done today.

Finally, it is essential to highlight that the presented work follows the universal principles for empowering learners: attainment-based instruction, task-centered instruction, personalized instruction, changed roles and curriculum [39].