2. Background

Manufacturers are competing to satisfy ever-changing market demands. This requires production lines to be adaptive, intelligent, and flexible enough to meet updated requests. Business leaders and manufacturing managers have concluded that they must integrate business with industrial production. Such integration requires considerable advancement in industrial processes and strategies, and it is achievable only by connecting the various facets of a company, including suppliers, production lines, and customers. This multi-faceted integration is enabled by the Internet of Things (IoT), which is the main asset of Industry 4.0.

Originating from a German government strategy project [3], the Fourth Industrial Revolution was a strategic initiative to transform manufacturing agents from purely physical systems into cyber-physical systems (CPS). The foundation of Industry 4.0 is therefore CPS communicating with each other through the IoT. Real-time information exchange between CPS generates a large amount of data that requires an efficient and secure storage method, and cloud storage is the most common solution. Extensive analysis and processing are also required to extract useful information from huge raw data lakes. The Industrial Internet, which combines the analyzed data with the IoT, was the next concept to emerge, bridging the digital and physical worlds.

The main drivers of Industry 4.0 can be listed as follows:

The Internet and IoT being available almost everywhere;

Business and manufacturing integration;

Digital twins of real-world applications;

Efficient production lines and smart products.

The new concepts introduced by Industry 4.0 include CPS, IoT, the smart factory, big data, cloud storage, and cybersecurity. In terms of efficiency and costs, Industry 4.0 has decreased [4]:

Production costs by 10–30%;

Logistics costs by 10–30%;

Quality management costs by 10–20%.
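To make the percentages above concrete, the short Python sketch below converts the reported reduction ranges into absolute savings. The baseline cost figures are hypothetical placeholders chosen only for illustration; only the percentage ranges come from [4].

```python
# Hypothetical baseline annual costs in arbitrary currency units (illustration only).
baseline = {"production": 1_000_000, "logistics": 200_000, "quality": 100_000}

# Reduction ranges attributed to Industry 4.0 in [4], as fractions of the baseline.
reduction_ranges = {
    "production": (0.10, 0.30),
    "logistics": (0.10, 0.30),
    "quality": (0.10, 0.20),
}

def savings_range(costs: dict, ranges: dict) -> dict:
    """Return the (low, high) absolute saving for each cost category."""
    return {
        name: (costs[name] * low, costs[name] * high)
        for name, (low, high) in ranges.items()
    }

for name, (low, high) in savings_range(baseline, reduction_ranges).items():
    print(f"{name}: saves {low:,.0f} to {high:,.0f}")
```

Because every category scales linearly with its own baseline, the same ranges can be applied to any company's cost structure by swapping in its figures.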

Industry 4.0 applies IoT in manufacturing workspaces and then analyzes the big data collected in cloud storage to efficiently increase autonomy and cybersecurity levels.

3. What is Industry 5.0 and Why is It Required?

Industry 4.0 is about automating processes and introducing edge computing in a distributed and intelligent manner. Its sole focus is improving process efficiency, and it thereby inadvertently ignores the human cost of process optimization. This will become the biggest problem in a few years, once the full effect of Industry 4.0 comes into play. Industry 4.0 will then face resistance from labor unions and politicians, and some of its benefits will be neutralized as pressure to raise employment increases. However, there is no need to be on the back foot when introducing process efficiency through advanced technologies. We propose that Industry 5.0 is the solution needed to achieve this once the pushback begins.

Furthermore, the world has seen a massive increase in environmental pollution since the Second Industrial Revolution. Unlike in past decades, however, the manufacturing industry is now more focused on controlling different aspects of waste generation and management and on reducing the adverse environmental impacts of its operations. Environmental awareness is often considered a competitive edge because of the strong support it receives from governments; international organizations such as the UN and WHO; and an ever-growing niche of customers who favor environmentally friendly companies. Unfortunately, Industry 4.0 does not have a strong focus on environmental protection, nor has it produced technologies focused on improving the environmental sustainability of the Earth, even though many AI algorithms have been applied to sustainability problems in the last decade [5,6,7,8]. While existing studies linking AI algorithms with environmental management have paved the way, the lack of strong focus and action calls for a better technological solution to protect the environment and increase sustainability. We envisage this solution emerging from Industry 5.0.

Bringing human workers back to the factory floor, the Fifth Industrial Revolution will pair humans and machines to further utilize human brainpower and creativity, increasing process efficiency by combining workflows with intelligent systems. While the main concern of Industry 4.0 is automation, Industry 5.0 will be a synergy between humans and autonomous machines. The autonomous workforce will be perceptive of and informed about human intention and desire. Humans will work alongside robots not only without fear but also with peace of mind, knowing that their robotic co-workers adequately understand them and can collaborate with them effectively. The result will be an exceptionally efficient and value-added production process, flourishing trusted autonomy, and reduced waste and associated costs. Industry 5.0 will also change the definition of the word “robot”. Robots will no longer be merely programmable machines that perform repetitive tasks; in some scenarios, they will become ideal human companions. Bringing the human touch to robotic production, the next industrial revolution will introduce a new generation of robots, commonly termed cobots, that will already know, or quickly learn, what to do. These collaborative robots will be aware of human presence and will therefore take safety and risk criteria into account. They will be able to notice, understand, and feel not only the human being but also the goals and expectations of a human operator. Just like an apprentice, a cobot will watch and learn how an individual performs a task. Once it has learned, it will execute the desired task as its human operator does. As a result, human workers will experience a different kind of satisfaction while working alongside cobots.

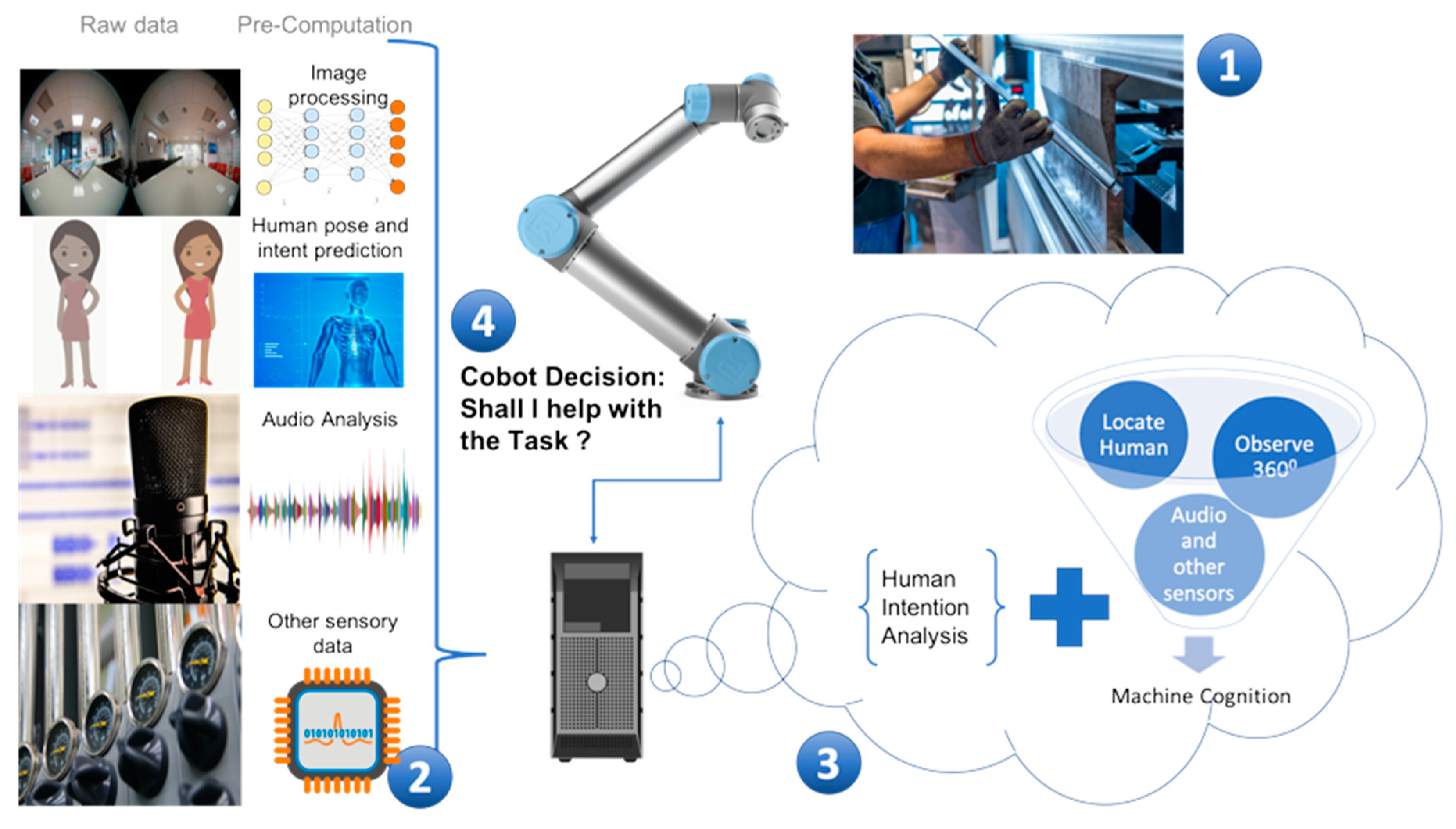

The concept of Industry 5.0 can be visualized using a production line example (shown in Figure 2). A human worker is assembling an electro-mechanical machine. The worker starts a task, and a robot observes the process using a camera on a gimbal, which serves as the robot's eye. The robot is also connected to a processing computer that takes the images, performs image processing, and learns patterns using machine learning. It also observes the human, monitors the environment, and infers what the operator will do next using human intention analysis powered by deep learning. A crucial sensor for understanding human intention is functional near-infrared spectroscopy (fNIRS), which retrieves signals from the human brain over a wireless communication channel. fNIRS is well suited to this task because it comes in the form of a headset and does not require time-consuming setup and calibration. Once the robot is confident about its prediction, it will attempt to help the human worker, much like a second person standing beside the worker, which will increase overall process efficiency. In this example, the robot predicts that the operator will use a certain part in the next step of the task, fetches the part ahead of time, and delivers it when needed. The process occurs seamlessly, so the operator does not have to make any adjustments to his/her work process.
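The confidence-gated behaviour described above (the robot acts only once it is confident about its prediction) can be sketched in Python as follows. The intention model itself is not shown: `probabilities` stands in for the output of a hypothetical deep-learning intention classifier, and the 0.8 threshold, part names, and function names are illustrative assumptions rather than part of the described system.

```python
from __future__ import annotations
from dataclasses import dataclass

@dataclass
class Prediction:
    part: str          # part the operator is predicted to need next
    confidence: float  # model probability for that part

def predict_next_part(probabilities: dict[str, float]) -> Prediction:
    """Pick the most likely next part from a (hypothetical) intention model's output."""
    part = max(probabilities, key=probabilities.get)
    return Prediction(part, probabilities[part])

def decide_action(probabilities: dict[str, float], threshold: float = 0.8) -> str:
    """Fetch a part only when the prediction is confident enough; otherwise keep observing."""
    prediction = predict_next_part(probabilities)
    if prediction.confidence >= threshold:
        return f"fetch:{prediction.part}"
    return "observe"

if __name__ == "__main__":
    # Hypothetical per-frame outputs as the operator's next step becomes clearer.
    frames = [
        {"bolt": 0.40, "gear": 0.35, "bracket": 0.25},
        {"bolt": 0.65, "gear": 0.20, "bracket": 0.15},
        {"bolt": 0.88, "gear": 0.07, "bracket": 0.05},
    ]
    for probs in frames:
        print(decide_action(probs))
```

Here the robot keeps observing for the first two frames and only fetches the bolt on the third. A fixed threshold is the simplest choice; raising it trades earlier help for fewer wrong fetches.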

We anticipate that Industry 5.0 will create a new manufacturing role: the Chief Robotics Officer (CRO). A CRO is an individual with expertise in understanding robots and their interactions with humans. The CRO will be responsible for deciding which machines or robots to add to or remove from the factory floor to achieve optimal performance and efficiency. CROs will have backgrounds in robotics, artificial intelligence, human factors modelling, and human–machine interaction. Well versed in collaborative robotic technologies and able to harness advances in computation, CROs will also be well placed to make a positive impact on environmental management. This will eventually increase the sustainability of human civilization by reducing pollution and waste generation and preserving the Earth.

4. Methodology for the Solution—What is Required for Industry 5.0?

As mentioned in the previous section, Industry 5.0 will solve the problems associated with the removal of human workers from different processes. However, it will need even more advanced technologies to achieve this, which are discussed below.

Networked Sensor Data Interoperability

From smart homes to autonomous manufacturing, cobots, and other distributed intelligent systems, ubiquitous sensing and big data collection are an unavoidable asset of the next industrial revolution, and they are only possible through networked sensors. Such networks also enable faster analysis and customization processes. A network of sensors with some low-level intelligence and processing power could reduce the need for a high-bandwidth data transfer mechanism by allowing some data to be preprocessed locally. This would, in turn, reduce network latency and overload while creating a level of “distributed intelligence” in the network. To benefit fully from a sensor network, a common framework for information transfer, rather than a simple data transfer mechanism, will be needed. Once implemented, these networked sensors will open the possibility of unprecedented customization in manufacturing processes.
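The local preprocessing idea can be sketched as an edge node that buffers raw readings and forwards only a compact per-window summary. The window size, the summary fields (count, mean, min, max), and the function names are assumptions chosen for illustration; a real deployment would also need the common information-transfer framework mentioned above.

```python
from statistics import mean

def summarize_window(samples: list) -> dict:
    """Reduce a window of raw readings to one compact summary message."""
    return {"n": len(samples), "mean": mean(samples), "min": min(samples), "max": max(samples)}

def edge_node(stream, window: int = 10):
    """Yield one summary per full window instead of forwarding every raw sample."""
    buffer = []
    for value in stream:
        buffer.append(value)
        if len(buffer) == window:
            yield summarize_window(buffer)
            buffer = []  # start the next window; a partial trailing window is never emitted

if __name__ == "__main__":
    readings = [20.0 + 0.1 * i for i in range(100)]  # synthetic sensor readings
    messages = list(edge_node(readings, window=10))
    print(f"{len(readings)} raw samples -> {len(messages)} summary messages")
```

With a window of 10, 100 raw readings become 10 summary messages, a tenfold reduction in traffic; the cost is that fine-grained detail inside each window is no longer available upstream.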

Multiscale Dynamic Modelling and Simulation: Digital Twins

The intelligence of autonomous systems brings complexity to the evaluation and monitoring of manufacturing setups. Visualization and modelling of the production line [9] is a very useful tool for making policies and for managing and personalizing future products and product lines. A digital twin [10] is “A virtual model of a process, product or service” [11]. Bridging the virtual and physical worlds, digital twins give manufacturing units the ability to analyze data, monitor the production process, manage risks before they occur, reduce downtime, and support further development through simulation. With recent advances in big data processing and artificial intelligence, it is now possible to create more realistic digital twins that properly model the different operating situations and characteristics of a process. When uncertainty in the process is accounted for, digital twins present an immense opportunity to reduce waste in the process flow and system design. Coupled with state-of-the-art visualization and modelling techniques, technologies like digital twins are set to increase productivity across industrial sectors.
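The monitor, simulate, and manage-risk loop described above can be illustrated with a deliberately tiny twin. The sketch mirrors one physical quantity, a machine temperature, synchronizes it from sensor readings, and rolls a model forward to predict when a limit would be exceeded. The first-order thermal model, the class name, and all parameter values are assumptions for illustration; a real digital twin would rely on a validated and usually much richer process model.

```python
from __future__ import annotations
from dataclasses import dataclass

@dataclass
class MachineTwin:
    """Toy digital twin of a machine temperature (assumed first-order thermal model)."""
    temperature: float       # last synchronized state, degrees C
    ambient: float = 25.0    # assumed ambient temperature, degrees C
    heat_rate: float = 2.0   # assumed heating per step under load, degrees C
    cooling: float = 0.05    # assumed fraction of the gap to ambient lost per step

    def sync(self, measured: float) -> None:
        """Update the virtual state from the physical sensor."""
        self.temperature = measured

    def simulate(self, steps: int, load: bool = True) -> list[float]:
        """Roll the model forward without touching the physical machine."""
        t = self.temperature
        trajectory = []
        for _ in range(steps):
            t += (self.heat_rate if load else 0.0) - self.cooling * (t - self.ambient)
            trajectory.append(t)
        return trajectory

    def steps_until(self, limit: float, horizon: int = 100) -> int | None:
        """Predicted number of steps until `limit` is exceeded, or None within the horizon."""
        for i, t in enumerate(self.simulate(horizon), start=1):
            if t > limit:
                return i
        return None

if __name__ == "__main__":
    twin = MachineTwin(temperature=40.0)
    twin.sync(52.0)  # new reading from the physical machine
    print(twin.steps_until(60.0), twin.steps_until(80.0))
```

With the default parameters, the simulated temperature under load settles towards 65 °C (where heating balances cooling), so a limit of 80 °C is never predicted to be crossed, while a lower limit yields an early warning that can be acted on before the event occurs.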

Shopfloor Trackers

Shopfloor trackers improve real-time production tracking. They allow customers' sales orders to be associated with production orders and supplementary materials, which leads to optimal and efficient resource management, a critical objective for manufacturers. Shopfloor trackers also allow real-time tracking of assets and process flow, paving the way for online optimization of the production process. These trackers can be implemented as networked sensors or by utilizing the benefits offered by networked sensors. When coupled with technologies like IoT and machine learning, they could also reduce material wastage, prevent theft, and prevent mismanagement of assets.
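The association between sales orders, production orders, and materials can be sketched as a small in-memory data structure. The class and field names below are hypothetical, and the status updates stand in for events that networked sensors might report; this is not the API of any particular manufacturing execution system.

```python
from __future__ import annotations
from collections import defaultdict
from dataclasses import dataclass

@dataclass
class ProductionOrder:
    order_id: str
    sales_order: str            # customer sales order this production order fulfils
    materials: dict[str, int]   # required quantity per material
    status: str = "queued"

class ShopfloorTracker:
    """Hypothetical tracker linking sales orders, production orders, and materials."""

    def __init__(self, inventory: dict[str, int]):
        self.inventory = dict(inventory)
        self.orders: dict[str, ProductionOrder] = {}
        self.by_sales_order: dict[str, list[str]] = defaultdict(list)

    def add(self, order: ProductionOrder) -> None:
        self.orders[order.order_id] = order
        self.by_sales_order[order.sales_order].append(order.order_id)

    def update(self, order_id: str, status: str) -> None:
        """Record a real-time status event, e.g. one reported by a shopfloor sensor."""
        self.orders[order_id].status = status

    def progress(self, sales_order: str) -> dict[str, str]:
        """Status of every production order behind one customer order."""
        return {oid: self.orders[oid].status for oid in self.by_sales_order.get(sales_order, [])}

    def shortfall(self) -> dict[str, int]:
        """Materials still needed by queued orders beyond current inventory."""
        demand: dict[str, int] = defaultdict(int)
        for order in self.orders.values():
            if order.status == "queued":
                for material, qty in order.materials.items():
                    demand[material] += qty
        return {m: q - self.inventory.get(m, 0)
                for m, q in demand.items() if q > self.inventory.get(m, 0)}
```

Keeping an index from each sales order to its production orders makes customer-facing progress queries cheap, while the shortfall query shows how the same records support resource planning.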

Virtual Training

Virtual training, which started in 1997, is a kind of training in which the trainee learns a specific task or skill in a virtual or simulated environment. In some cases, the trainer and the trainee are based in different locations. This type of training significantly reduces costs and time for both parties, and it is flexible enough to be updated and reconfigured for new training courses. As an example, the haptically enabled Universal Motion Simulator (UMS) in Figure 3 (Patent #9174344, filed in 2007) [12,13,14] provides a safe and accurate yet cost-effective environment for training drivers, pilots, firefighters, medical professionals, etc., far from the danger and risks they might face in real venues and without imposing risk on others.

Virtual training is also very important in creating a skilled workforce without risking the productivity of a running process or endangering human workers. It is especially important for jobs and tasks that pose some form of risk due to repeated actions or postures. For example, if coupled with human posture analysis, virtual training can greatly benefit a broad spectrum of the workforce by providing proper, cost-effective training without exposing workers to potentially dangerous scenarios.

Virtual training can be facilitated through a combination of virtual and augmented reality techniques. Combined with recent advances in graphics processing units (GPUs) and, potentially, big data and artificial intelligence, virtual training becomes far more realistic and beneficial than it was in the past. Furthermore, haptic technologies and devices can be very advantageous in virtual training, as they can mimic the real touch and feel of the actual scenarios and activities involved.

Intelligent Autonomous Systems

Autonomously controlling production lines requires a great deal of artificial intelligence in the software agents operating in the factory. Autonomy in Industry 5.0 is considerably different from what was referred to as automation in Industry 3.0. Exercising autonomy that performs useful functions is very difficult, if not impossible, without artificial intelligence (AI). AI techniques allow machines to learn and therefore to execute a desired task autonomously. State-of-the-art classification [15,16,17], regression [18,19,20], and clustering methodologies [21,22] empowered by deep learning strategies result in intelligent systems and solutions that can make decisions under unforeseen circumstances [23,24,25]. Moreover, transfer learning is a critical aspect of implementation and personalization in Industry 5.0 environments, where most systems suffer from uncertainties. Transferring the knowledge and skills gained by a digital/virtual system to its physical twin, securely and robustly, plays a very important role in the Fifth Industrial Revolution.
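Transferring knowledge from a virtual system to its physical twin can be illustrated with a toy sim-to-real example: a linear model is pretrained on plentiful data from a simulated process and then fine-tuned on a handful of samples from a "physical" process whose behaviour differs slightly. Both data-generating rules and all hyperparameters are assumptions; the sketch shows transfer by warm-starting only and does not address the security or robustness aspects mentioned above.

```python
def fit(data, w=0.0, b=0.0, lr=0.05, epochs=200):
    """Plain gradient descent on mean squared error for the model y ≈ w*x + b."""
    n = len(data)
    for _ in range(epochs):
        grad_w = 2 / n * sum((w * x + b - y) * x for x, y in data)
        grad_b = 2 / n * sum((w * x + b - y) for x, y in data)
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b

def mse(data, w, b):
    return sum((w * x + b - y) ** 2 for x, y in data) / len(data)

# Abundant samples from the virtual process (assumed rule: y = 2x + 1).
sim_data = [(i / 20, 2.0 * (i / 20) + 1.0) for i in range(21)]
# Scarce samples from the physical process, which differs slightly (assumed: y = 2.2x + 1.3).
real_data = [(x, 2.2 * x + 1.3) for x in (0.0, 0.25, 0.5, 0.75, 1.0)]

w_sim, b_sim = fit(sim_data, epochs=3000)                 # pretrain on the virtual twin
w_ft, b_ft = fit(real_data, w=w_sim, b=b_sim, epochs=20)  # brief fine-tuning on real data
w_sc, b_sc = fit(real_data, epochs=20)                    # same budget, no transfer

print(f"fine-tuned MSE:   {mse(real_data, w_ft, b_ft):.4f}")
print(f"from-scratch MSE: {mse(real_data, w_sc, b_sc):.4f}")
```

With the same small fine-tuning budget, the warm-started model ends with a lower error on the physical data than the model trained from scratch, because pretraining places it close to the physical solution before any real samples are used.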

Advances in Sensing Technologies and Machine Cognition

Intelligent autonomous systems will greatly depend on replicating the senses that we humans use to cooperate with others and learn adaptively [26,27]. Computer vision [28], combined with deep learning [29], reinforcement learning, and GPU-based computation [30], has shown great promise in replicating primitive vision and sensory capabilities. However, for Industry 5.0 cobots, these capabilities must be improved significantly. For example, a human worker will stop working when he/she suspects something unnatural in his/her workspace, even when nothing is visibly wrong, relying on emotional intelligence. This sort of anticipatory behavior is very important in preventing workplace accidents, and current vision and cognition technologies cannot achieve it. In addition to vision and sensory technologies, machine cognition needs to improve in order to make the best judgements in ever-changing workplace situations. A highly adaptive system could achieve this capability, but building one is not trivial because, with current technologies, no model-based, data-driven, or rule-based system can accomplish this on its own. Furthermore, other sensory technologies and their analyses must be improved in order to replicate what a human operator would normally do in a given scenario.

Figure 4 describes the recommended operating principle of cobots for a simple assistive task in the workplace.

Even a simple assistive task, as described in Figure 4, is complex for a cobot, because a human operator normally makes many decisions, both consciously and subconsciously, before performing such a task. They estimate the need for assistance, judge the risk in offering assistance, watch for safety factors, and then safely approach to offer help. Since cobots will cooperate with a human in the presence of other humans and machines, they need similar decision-making mechanisms built into their systems, which requires advanced perception, localization, vision, and cognition abilities, along with more computation power on embedded platforms. The current pace and trend in deep learning, machine learning, and embedded systems suggest that further advances in these fields will greatly assist in achieving the capabilities a cobot requires.
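The four decisions listed above (estimate the need, judge the risk, watch for safety factors, then approach) can be expressed as a sequence of gates. In this sketch the perception outputs are assumed to be supplied by upstream models, and the thresholds, field names, and action labels are hypothetical; it illustrates only the ordering of the checks, not the operating principle shown in Figure 4 itself.

```python
from __future__ import annotations
from dataclasses import dataclass

@dataclass
class Observation:
    """Hypothetical perception outputs available to a cobot before offering help."""
    assistance_need: float       # estimated probability (0..1) that the operator needs help
    task_risk: float             # estimated risk (0..1) that intervening disrupts the task
    bystander_distance_m: float  # distance to the nearest other person near the approach path
    path_clear: bool             # True if no obstacle blocks the planned approach

def assist_decision(obs: Observation, need_threshold: float = 0.7,
                    risk_limit: float = 0.3, min_bystander_m: float = 0.5) -> str:
    # 1. Estimate the need for assistance.
    if obs.assistance_need < need_threshold:
        return "wait"
    # 2. Judge the risk of offering assistance.
    if obs.task_risk > risk_limit:
        return "wait"
    # 3. Watch for safety factors before moving.
    if not obs.path_clear or obs.bystander_distance_m < min_bystander_m:
        return "hold_position"
    # 4. Safely approach to offer help.
    return "approach_slowly"
```

Ordering the gates this way means the cobot never evaluates motion safety for help that is not needed, and a failed safety check leaves it ready to act as soon as the path clears.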

Deep learning methods have recently shown promising performance in robotics and, specifically, in computer vision. These methods have given robots and intelligent machines reliable cognition and visual perception capabilities, which are necessary in autonomous applications, including cobots. Deep learning strategies are fundamentally based on artificial neural networks with a comparatively large number of layers.

Figure 5 depicts a typical schematic of a multilayer neural network, generally called a deep neural network (DNN). The main asset of deep learning algorithms is that they perform much better than conventional learning methods as the amount of training data increases. The performance of deep learning techniques keeps improving with more training data, whereas the performance of traditional learning methods saturates once the training data exceed a certain level (see Figure 6).
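A minimal pure-Python forward pass through a multilayer network of the kind sketched in Figure 5 is shown below. The layer sizes, the ReLU activation, and the He-style random initialization are assumptions chosen for illustration; the network is untrained, and practical systems would use a dedicated deep learning framework and GPU computation.

```python
import random

def relu(v):
    return [max(0.0, x) for x in v]

def dense(inputs, weights, biases):
    """Fully connected layer: each output is a weighted sum of all inputs plus a bias."""
    return [sum(w * x for w, x in zip(row, inputs)) + b for row, b in zip(weights, biases)]

def init_network(layer_sizes, seed=0):
    """Random weights (He-style scaling, assumed) and zero biases for each layer."""
    rng = random.Random(seed)
    layers = []
    for n_in, n_out in zip(layer_sizes[:-1], layer_sizes[1:]):
        weights = [[rng.gauss(0.0, (2.0 / n_in) ** 0.5) for _ in range(n_in)]
                   for _ in range(n_out)]
        layers.append((weights, [0.0] * n_out))
    return layers

def forward(network, x):
    """Propagate an input through ReLU hidden layers to a linear output layer."""
    for i, (weights, biases) in enumerate(network):
        x = dense(x, weights, biases)
        if i < len(network) - 1:
            x = relu(x)
    return x

net = init_network([4, 16, 16, 16, 3])  # input layer, three hidden layers, output layer
print(forward(net, [0.5, -1.0, 0.25, 2.0]))
```

Adding entries to `layer_sizes` deepens the network; training (adjusting the weights from data) is what lets such a network keep improving as the amount of training data grows.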

Another example of smart sensing involves using the human brain as the source of signals. This can be achieved by electroencephalography (EEG), functional magnetic resonance imaging (fMRI), or functional near-infrared spectroscopy (fNIRS). Among these devices, fNIRS is portable and easier to use, due to lower setup time and built-in wireless connectivity for data transfer in most available headsets. These fNIRS headsets effectively capture brain activations and can be used for a wide range of tasks, including signal analysis, intention prediction, and contextual awareness. For example, such fNIRS devices can be used in a medical setup where an operator can control a robotic arm, equipped with a diagnostic or surgical instrument, to perform a certain task. These tasks can be as simple as handing over an instrument to the operator or may be as complex as performing an operation on a human body.

Figure 7 depicts such a futuristic setup where a universal robot is equipped with an ultrasound device and a human operator is controlling it in order to perform a scanning procedure.

5. How Industry 5.0 Will Affect Manufacturing Systems

Previous industrial revolutions demonstrate that manufacturing systems and strategies have continuously evolved towards greater productivity and efficiency. Although many conferences and symposia are being held with a focus on Industry 5.0, several manufacturers and industry leaders still believe that it is too soon for a new industrial revolution [31]. Moreover, embracing the next industrial revolution requires the adoption, standardization, and implementation of new technologies, each of which needs its own infrastructure and development.

Industry 5.0 will bring unprecedented challenges in the field of human–machine interaction (HMI), as it will bring machines very close to the everyday life of every human. Even though we are fascinated by machines such as programmable assistive devices and programmable cars, we do not consider them a version of cobots (even though, from a certain perspective, the differences are not that great), mostly because of their shape. Cobots will be very different, as they will be designed with human-like functionalities such as gripping, pinching, and interaction based on intention and environmental factors. We also anticipate that Industry 5.0 will create many jobs in the fields of HMI and computational human factors (HCF) analysis.

Industry 5.0 will revolutionize manufacturing systems across the globe by taking dull, dirty, and repetitive tasks away from human workers wherever possible. Intelligent robots and systems will penetrate manufacturing supply chains and production shopfloors to an unprecedented level. This will be made possible by cheaper and highly capable robots made of advanced lightweight but strong materials such as carbon fiber, powered by highly optimized battery packs, hardened against cyberattacks, equipped with stronger data handling processes (i.e., big data and artificial intelligence), and connected by a network of intelligent sensors. Industry 5.0 will increase productivity and operational efficiency, be environmentally friendly, reduce work injuries, and shorten production cycle times. Contrary to immediate intuition, however, Industry 5.0 will create more jobs than it takes away. A large number of jobs will be created in intelligent systems, AI and robotics programming, maintenance, training, scheduling, repurposing, and the invention of a new breed of manufacturing robots. In addition, since repetitive tasks need not be performed by human workers, creativity in the work process will be boosted as everyone is encouraged to use different forms of robots innovatively in the workplace.

Furthermore, as a direct impact of Industry 5.0, a large number of start-up companies around the world will build a new ecosystem providing custom robotic solutions, in terms of both hardware and software. This will further boost the global economy and increase cash flow.