Why Students Have Conflicts in Peer Assessment? An Empirical Study of an Online Peer Assessment Community

Abstract

1. Introduction

2. Literature Review and Theoretical Framework

2.1. Literature

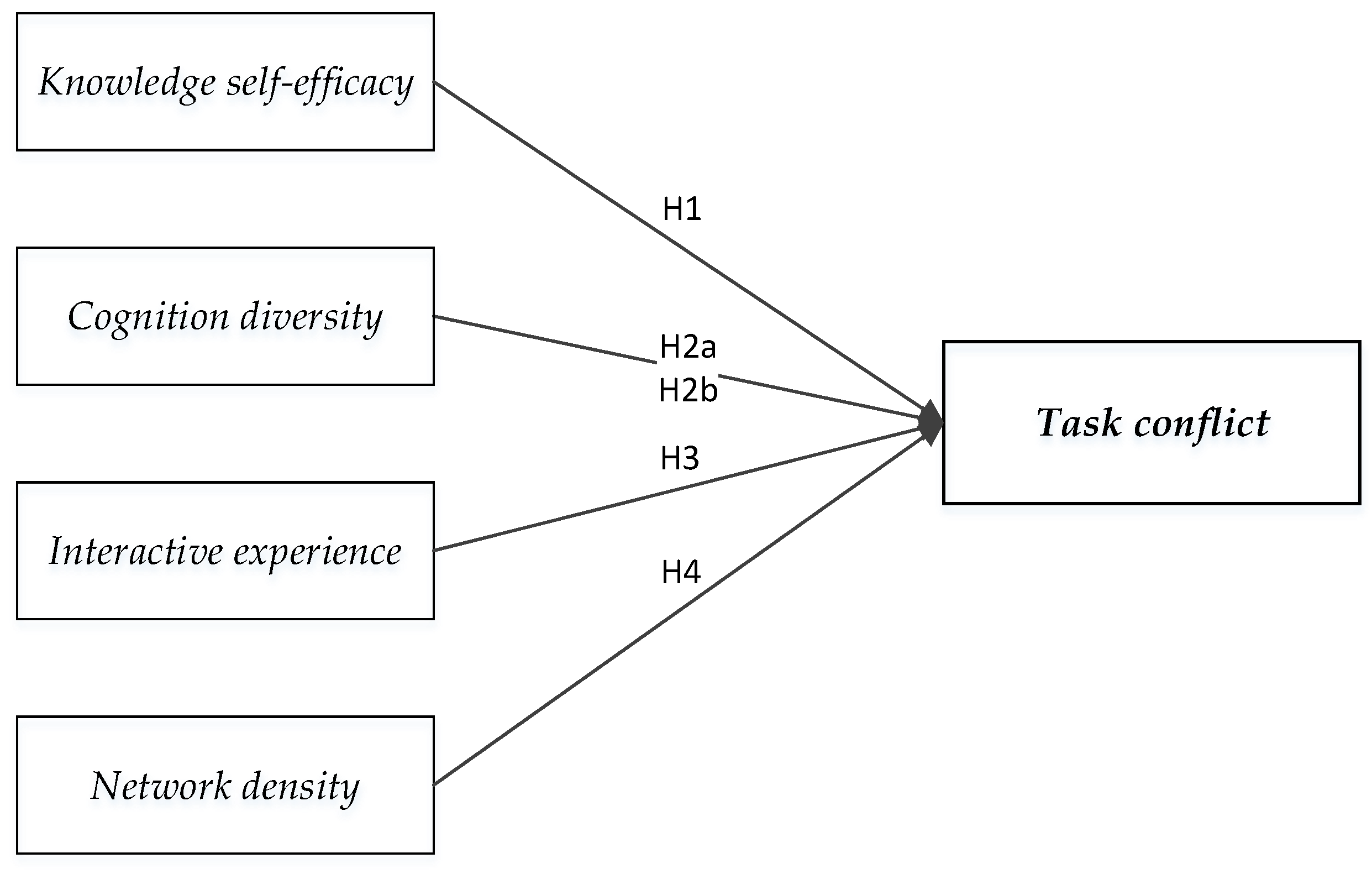

2.2. Hypotheses and Framework

2.2.1. Knowledge Self-Efficacy

2.2.2. Cognitive Diversity

2.2.3. Interactive Experience

2.2.4. Network Density

3. Measurement

3.1. Research Instrument

3.2. Data Collection and Procedure

3.3. Variables and Measurement

3.3.1. Dependent Variable

3.3.2. Independent Variables

3.4. Regression Analysis

4. Results

5. Discussion and Conclusions

5.1. Finding

5.2. Implications

5.3. Limitations and Future Work

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

| Variable Name | Measure Item | Description |
|---|---|---|
| Dependent variable | | |
| Task conflict | Objection | The number of objections to review suggestions. |
| Independent variables | | |
| Knowledge self-efficacy | Score | The participant's test score. |
| Cognitive diversity | Suggestion | The number of general or specific knowledge suggestions for the participant. |
| Interactive experience | Praise | The number of praise comments for the participant. |
| Network density | Communication | The number of communications between members. |

| Variable | Mean | S.D. | Min | Max |
|---|---|---|---|---|
| Specific knowledge provided | 27.476 | 30.208 | 0 | 194 |
| General knowledge provided | 26.393 | 20.239 | 0 | 86 |
| Praise provided | 34.940 | 22.156 | 0 | 95 |
| Task conflict | 5.583 | 5.698 | 0 | 28 |
| Communication | 8.131 | 15.017 | 0 | 90 |
| Score | 14.524 | 5.649 | 4 | 27 |
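The descriptive statistics above allow a quick dispersion check on the dependent variable. The sketch below assumes that task conflict is treated as a count outcome (an assumption; the table itself does not name the model) and compares the variance implied by the reported S.D. with the mean. The function name `dispersion_ratio` is illustrative only.

```python
def dispersion_ratio(mean: float, sd: float) -> float:
    """Return variance / mean.

    For a Poisson distribution the variance equals the mean, so a ratio
    well above 1 suggests overdispersion relative to Poisson.
    """
    if mean <= 0:
        raise ValueError("mean must be positive")
    return sd ** 2 / mean


# Mean and S.D. of task conflict, copied from the descriptive statistics table.
task_conflict_ratio = dispersion_ratio(mean=5.583, sd=5.698)
print(round(task_conflict_ratio, 2))
```

A ratio far above 1 means the variance is several times the mean, which is the situation in which a plain Poisson model tends to understate standard errors.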

| Variables | (1) | (2) | (3) | (4) | (5) |
|---|---|---|---|---|---|
| (1) Specific knowledge provided | 1.000 | | | | |
| (2) General knowledge provided | 0.581 | 1.000 | | | |
| (3) Praise provided | 0.154 | 0.131 | 1.000 | | |
| (4) Communication | 0.361 | 0.199 | 0.114 | 1.000 | |
| (5) Score | 0.517 | 0.311 | 0.233 | 0.289 | 1.000 |

| Task Conflict | Coef. | S.E. | 95% CI lower | 95% CI upper | Sig |
|---|---|---|---|---|---|
| Specific knowledge provided | −0.001 | 0.003 | −0.007 | 0.004 | ns |
| General knowledge provided | 0.018 | 0.006 | 0.007 | 0.029 | *** |
| Praise provided | −0.004 | 0.004 | −0.011 | 0.003 | ns |
| Communication | 0.013 | 0.003 | 0.006 | 0.019 | *** |
| Score | 0.034 | 0.014 | 0.006 | 0.061 | ** |
| Mean dependent var | 5.583 | SD dependent var | 5.698 | | |
| Akaike crit. (AIC) | 496.118 | Number of obs | 84 | | |
| Bayesian crit. (BIC) | 510.743 | | | | |

| Task Conflict | Coef. | S.E. | 95% CI lower | 95% CI upper | Sig |
|---|---|---|---|---|---|
| Specific knowledge provided | 0.004 | 0.005 | −0.006 | 0.013 | ns |
| General knowledge provided | 0.018 | 0.005 | 0.008 | 0.028 | *** |
| Praise provided | −0.005 | 0.004 | −0.013 | 0.002 | ns |
| Communication | 0.014 | 0.005 | 0.005 | 0.023 | *** |
| Score | 0.037 | 0.016 | 0.006 | 0.067 | ** |
| Mean dependent var | 5.583 | SD dependent var | 5.698 | | |
| Akaike crit. (AIC) | 438.115 | Number of obs | 84 | | |
| Bayesian crit. (BIC) | 455.131 | | | | |
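The intervals in the two regression tables can be roughly reproduced from the reported coefficients and standard errors. The sketch below assumes a normal-approximation (Wald) interval, coef ± 1.96 × S.E. Because the tables report values rounded to three decimals, the reconstructed bounds can only match to within rounding. It also computes the difference between the two reported AIC values, a comparison that is meaningful only if both models were fitted to the same 84 observations and the same dependent variable, as the tables suggest. All function and variable names are illustrative.

```python
Z_95 = 1.96  # two-sided 95% critical value of the standard normal


def wald_interval(coef: float, se: float, z: float = Z_95) -> tuple[float, float]:
    """Return the (lower, upper) bounds of a Wald confidence interval."""
    return (coef - z * se, coef + z * se)


# (coefficient, standard error) pairs copied from the second regression table.
second_table = {
    "General knowledge provided": (0.018, 0.005),
    "Communication": (0.014, 0.005),
    "Score": (0.037, 0.016),
}

for name, (coef, se) in second_table.items():
    low, high = wald_interval(coef, se)
    print(f"{name}: [{low:.3f}, {high:.3f}]")

# AIC values copied from the first and second regression tables.
aic_first, aic_second = 496.118, 438.115
aic_difference = aic_first - aic_second
print(f"AIC difference (first - second): {aic_difference:.3f}")
```

Under the stated comparability assumption, a lower AIC indicates the preferred model, so a positive difference here favors the second table's specification.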

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Wang, Y.; Zong, Z. Why Students Have Conflicts in Peer Assessment? An Empirical Study of an Online Peer Assessment Community. Sustainability 2019, 11, 6807. https://doi.org/10.3390/su11236807
