1. Introduction

Given the demand for information about universities and the aim of comparing them, university ranking systems were developed in many countries (e.g., Australia, Canada, the UK, the US) [1]. They were soon followed by Global University Rankings (GURs), which have become a key factor in judging a university’s performance, productivity and quality according to its position in these rankings. GURs emerged after 2003 to measure university performance. They evaluate universities’ management by using indicators associated with intangible assets, like organizational reputation or institutional prestige, and they employ different methodologies to assess their Intellectual Capital (IC) [2]. The main schemes currently available for the performance-based ranking of universities are the Academic Ranking of World Universities (ARWU), best known as the Shanghai Ranking (since 2003), the Quacquarelli Symonds (QS) World University Rankings (since 2004), and the Times Higher Education (THE) World University Rankings (since 2010). These GURs use a variety of criteria, including productivity, citations, awards, reputation, etc., while others are the Ranking Web of Universities or Webometrics (since 2004), and Leiden and Scimago, which employ only bibliometric indicators [3].

Generally speaking, the parameters or indicators that these GURs apply are related to university research activity (citations, number of publications, industry income, number of highly cited researchers on staff, etc.) and training activity (number of alumni receiving Nobel Prizes (NP) or Fields Medals (FM), academic reputation, faculty/student ratio, etc.). Proposals have been put forward to modify GURs according to their websites [4]. However, each GUR uses a different set of parameters to determine each university’s position in its ranking [5]. For instance, in the ARWU system, three indicators predict the ranking of universities: faculty members who have won NP and FM, papers published in Nature and Science, and papers published in Science Citation Index and Social Science Citation Index journals. For the QS and THE systems, the more powerful contributors to the ranking of universities are expert-based reputation indicators [6]. The distinct selection of indicators, their institutional coverage, rating methods, and the normalization of these GURs all influence not only the ranking positions of given institutions [2,7,8] but also all the universities included in each GUR [9].

Moreover, corporate social responsibility (CSR) or sustainability [10] in the business world is understood from three areas of action or dimensions: the economic area, the social area, and the environmental area. A study [11] argues that practitioners require scholars to reduce the ambiguity between the IC and its expected results. This would open the door to a potentially productive way of understanding IC and the complexity of economic, social, and environmental values. Some proposals prepared by private companies to measure the CSR or corporate sustainability of companies and countries currently exist, and other open-access rankings have been developed, but these CSR rankings only include companies and not universities.

Universities as knowledge acquisition centers are key and necessary to implement sustainability policies [12]. They must also play a decisive role in developing and promoting sustainability in environmental, economic, and social terms by including these three CSR dimensions because the performance of those institutions that integrate sustainability into their policies, curricula, and academic activities has a positive impact [13,14,15,16,17]. Universities are drivers behind the achievement of the sustainability culture in society because they come over as models of sustainable development [18]. The role of universities in sustainability must be extended so that its overall social impact covers a wide scope [19]. To this end, innovation is a relevant and influential factor that promotes sustainable development, particularly in such a rapidly growing field as environmental sustainability at universities [20]. Sustainability reporting by universities has been considered a useful tool for both accountability and improving socio-environmental performance. However, the literature shows that sustainability reporting by universities is still in its early stages, and relatively few institutions currently publish sustainability reports [21]. The use of Information and Communication Technologies (ICT) is important to improve education quality through good teaching practices while generating environmental awareness and creating sustainable spaces [22].

Universities have to contribute to overcoming the main twenty-first-century challenges, such as more environmental and socio-economic crises, unequal pay in countries, and political instability. To do so, they must integrate the sustainable development concept into future organizations, research, and education by training professionals in knowledge, competences, and skills to solve ecological, social, and economic problems in societies as a whole [23]. However, structuring environmental sustainability efforts in accordance with the environmental management performance concept reveals a major weakness in the environmental sustainability management of higher education institutions [24].

For all these reasons, universities play a key role in adopting policies for sustainable development-focused education, which must be set up in different dimensions by a holistic and integrative approach applied to all the fields that sustainability covers [25]. It is important to see universities’ core activities as a provider of research and education in sustainability, and their activities as an organization. Systematic engagement activities have been suggested to benefit both the university’s ability to manage internal university processes (by learning from its peers) and its ability to produce the right graduates and knowledge [26]. One study demonstrates that most analyzed universities do not yet seem to fully capitalize on the relation between economic effectiveness and socio-environmental efficiency. This situation could be a factor that slows down the diffusion of sustainability principles in university governance [27].

Implementing green policies on campuses tends to be the first step that universities take toward caring for the natural environment. They are concerned mainly with energy efficiency indicators, but importance must also be attached to other aspects like waste management, preserving and saving water, transport, and research to achieve all-round long-term sustainability [14]. The green spaces on campuses can provide important benefits for their users, but not enough attention has been paid to them [28]. Universities have significant impacts on greenhouse gas emissions because, to a great extent, they contribute to transport on campuses, use water and energy, generate waste, etc. Furthermore, the United Nations Development Programme’s 17 Sustainable Development Goals (UNDP 17 SDGs) expect universities to meet the SDG targets by 2030 [29].

Consequently, just as GURs were developed to evaluate the academic reputation of universities by considering their research and training activity, other indices or rankings have been developed to quantify their environmental impact and their contribution to cushioning adverse effects. Some such examples include the Green League 2007, the Environmental and Social Responsibility Index 2009, and Universitas Indonesia (UI) GreenMetric [30]. Universitas Indonesia developed this ranking in 2010 as an online world university ranking that offers a portrait of current conditions and policies related to green campuses and the sustainability of universities worldwide [29]. The UI GreenMetric adopts the environmental sustainability concept, which contains three elements: environmental (natural resources use, environmental management and pollution prevention), economic (cost-saving), and social (education and social involvement) [31].

The main objective of the present research was to study whether the universities better positioned in GURs are also more involved in creating environmental policies in their own institutions, as measured by the UI GreenMetric ranking. The second objective was to study whether any geographical differences appeared in the interrelations between GURs and campus sustainability, and whether universities’ age, their public or private status, and their being technology or non-technology universities could affect these interrelations.

In order to fulfill these objectives, we first carried out a descriptive analysis, followed by logistic regression analysis. The main highlighted conclusions are that European and North American universities predominate the Top 500 of GURs, while Asian ones predominate the Top 500 of the UI GreenMetric ranking, followed by European ones. Older, public and non-technology universities also predominate the Top 500 of GURs, whereas younger universities predominate the UI GreenMetric ranking. Although Latin American universities are barely present in GURs, the probability of them appearing in the Top 500 of the UI GreenMetric ranking is 5-fold higher than for other continents and is 1.5-fold more for European universities.

This study represents a novel contribution to the literature by comparing two university rankings: on the one hand, those evaluating academic/research activity and, on the other hand, those assessing their environmental activity on university campuses.

This article is arranged as follows: Section 2 offers a background for understanding the selected sample and the objective set out in this research. Section 3 describes the methodology, the sample, and the data employed herein. Section 4 offers results and discussion. Finally, Section 5 concludes the results and provides a few final remarks.

3. Materials and Methods

3.1. Sources of Information

For our study, we used the information that appears on the websites of the four GURs under study [32,33,34,35] and on the UI GreenMetric ranking website [53].

For all five rankings, the universities in the 2018 Top 500 of each one were selected. A database was created with 2500 observations. For each observation, the following information was obtained from the rankings: the university’s name, its ranking position, and its geographical location. Each university’s website was then consulted to obtain the year it was founded, its typology (public or private), and whether it was a technology or non-technology university. The geographical coordinates (longitude and latitude) were obtained for each university [60].

3.2. Methods

First of all, a descriptive analysis was done of the positions that universities take in the Top 500 of each ranking to obtain their distribution by quartiles. To do so, the following were also considered: distribution by regions (North America, Europe, Asia, Oceania, Latin America, and Africa); age, by distinguishing between universities older and younger than 100 years; and universities being public or private and technology or non-technology.
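The quartile distribution described above can be sketched as follows. This is only a minimal illustration, assuming that the quartiles split the Top 500 into four equal blocks of 125 positions (Q1 = positions 1 to 125, and so on) and that ties are not handled; the function name `quartile` and the sample records are hypothetical and are not data from the study.

```python
from collections import Counter


def quartile(position, top_n=500):
    """Return the quartile label (Q1..Q4) for a 1-based ranking position.

    Assumption: quartiles are equal-sized blocks of top_n / 4 positions.
    """
    if not 1 <= position <= top_n:
        raise ValueError("position is outside the Top N")
    size = top_n / 4  # 125 positions per quartile for a Top 500
    return "Q" + str(int((position - 1) // size) + 1)


# Hypothetical records (university, region, position); not data from the study.
records = [
    ("Univ A", "North America", 12),
    ("Univ B", "Europe", 125),
    ("Univ C", "Europe", 126),
    ("Univ D", "Asia", 377),
    ("Univ E", "Asia", 500),
]

# Count universities per (region, quartile) pair.
counts = Counter((region, quartile(pos)) for _, region, pos in records)
for (region, q), n in sorted(counts.items()):
    print(region, q, n)
```

The same counting step can be repeated with age group, public/private status, or technology/non-technology status in place of the region.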

The geographical distribution of universities by quartiles was done visually by locating universities on maps, performed with the GIS software QGIS, version 2.18.15 [61].

For each GUR, a crosstab was obtained to classify universities according to them being included, or not, in the UI GreenMetric ranking, along with the region where they were located. Crosstabs were also obtained for universities’ age, for being public or private, and for being technology or non-technology.
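A crosstab of this kind could be built as in the sketch below. It assumes that universities are matched between rankings by an identical name string, which is an illustrative simplification; the function name `crosstab`, the dictionary keys, and the sample entries are hypothetical.

```python
from collections import defaultdict


def crosstab(gur_top500, greenmetric_top500):
    """Count, per region, the GUR universities inside/outside the GreenMetric Top 500.

    gur_top500: dict mapping university name -> region.
    greenmetric_top500: set of university names in the UI GreenMetric Top 500.
    """
    table = defaultdict(lambda: {"in_gm": 0, "not_in_gm": 0})
    for name, region in gur_top500.items():
        key = "in_gm" if name in greenmetric_top500 else "not_in_gm"
        table[region][key] += 1
    return dict(table)


# Hypothetical sample; not data from the study.
gur = {
    "Univ A": "Europe",
    "Univ B": "Europe",
    "Univ C": "North America",
    "Univ D": "Latin America",
}
green = {"Univ A", "Univ D", "Univ Z"}

table = crosstab(gur, green)
for region in sorted(table):
    print(region, table[region])
```

Replacing the region with an age group or typology label yields the other crosstabs mentioned in the text.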

By a statistical analysis of the odds ratio (OR) [9], the influence that the geographical location of a university found in the Top 500 of a GUR could have on it being in the Top 500 of the UI GreenMetric ranking was calculated. The OR measures the association between belonging to the Top 500 of the UI GreenMetric ranking and a university’s geographical location. An OR value = 1 indicates no association between being in the Top 500 of the UI GreenMetric ranking and location. The further away this value is from 1, the stronger the association between both factors. Values greater than 1 indicate a positive association, and values less than 1 indicate a negative association, between a given geographical location and being in the Top 500 of the UI GreenMetric ranking.

In the same way, OR values were calculated for the age of universities (more than 100 years old versus less than 100 years old), for being public or private, and for being non-technology or technology.
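As an illustration of how an OR is obtained from a 2x2 crosstab, the sketch below uses the usual cross-product formula. The cell labels `a`, `b`, `c`, `d`, the function name `odds_ratio`, and the counts are hypothetical and do not reproduce the study's tables.

```python
def odds_ratio(a, b, c, d):
    """Odds ratio of a 2x2 table (cross-product ratio).

    a: in the group (e.g., a given region) and in the GreenMetric Top 500
    b: in the group and not in the GreenMetric Top 500
    c: outside the group and in the GreenMetric Top 500
    d: outside the group and not in the GreenMetric Top 500
    """
    if b == 0 or c == 0:
        raise ZeroDivisionError("OR is undefined when b or c is zero")
    return (a * d) / (b * c)


# Hypothetical counts for one GUR; not data from the study.
or_positive = odds_ratio(10, 20, 50, 420)  # odds 10/20 versus 50/420
or_neutral = odds_ratio(10, 40, 20, 80)    # same odds in both groups

print(or_positive, or_neutral)
```

In the first case the odds of being in the UI GreenMetric Top 500 are higher inside the group than outside it (OR above 1); in the second case the odds coincide, so the OR equals 1 and there is no association.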

The OR values were compared with those obtained from a binary logistic regression model. In general, this model quantifies the influence of the explanatory variable (independent variable, regressor, or covariate), which is considered predictive, on the likelihood of being in the Top 500 of the UI GreenMetric ranking (dependent variable or explained variable). In the present work, only one explanatory variable was used in each binomial logistic regression, whose mathematical expression is as follows:

$$\ln\left(\frac{P(GM = 1)}{1 - P(GM = 1)}\right) = \alpha + \beta X + \varepsilon$$

where:

GM: takes a value of 1 if a university in the Top 500 of a GUR is also in the Top 500 of the UI GreenMetric ranking, and 0 otherwise.

α: Constant term.

β: Coefficient of the explanatory dummy variable X, which takes a value of 1 if a university has the characteristic under study (being located in a given region: North America, Europe, Asia, Oceania, or Latin America; being more than 100 years old; being public; or being non-technology), and 0 otherwise.

ε: Random disturbance term.

The OR of the independent variable X is $e^{\beta}$. The coefficients were estimated by maximum likelihood, and Wald (χ2) tests and the associated p-values were obtained for each one. The significance levels considered were 1%, 5%, and 10%. The overall model was tested with the log-likelihood ratio.
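To make the link between the logistic model and the OR concrete, the sketch below fits a logistic regression with a single dummy regressor. It relies on a standard property: with one binary explanatory variable the maximum likelihood estimates have a closed form in the four cells of the 2x2 table, so the exponential of the coefficient of X equals the crosstab OR. This is an illustrative sketch in Python, not the SPSS procedure used in the study; the function name `fit_single_dummy_logit`, the returned keys, and the counts are hypothetical.

```python
import math


def fit_single_dummy_logit(a, b, c, d):
    """Closed-form ML fit of logit P(GM=1) = alpha + beta * X for a binary X.

    a: X=1, GM=1   b: X=1, GM=0   c: X=0, GM=1   d: X=0, GM=0 (all > 0).
    """
    alpha = math.log(c / d)                  # log-odds of GM=1 when X=0
    beta = math.log((a * d) / (b * c))       # log of the odds ratio
    se_beta = math.sqrt(1 / a + 1 / b + 1 / c + 1 / d)
    wald = (beta / se_beta) ** 2             # Wald chi-square, 1 degree of freedom
    p_wald = math.erfc(math.sqrt(wald / 2))  # upper tail of chi-square with 1 df

    def loglik(successes, failures):
        # Binomial log-likelihood evaluated at the observed proportion.
        n = successes + failures
        return successes * math.log(successes / n) + failures * math.log(failures / n)

    ll_full = loglik(a, b) + loglik(c, d)    # model with X
    ll_null = loglik(a + c, b + d)           # intercept-only model
    lr = 2 * (ll_full - ll_null)             # likelihood ratio statistic
    return {
        "alpha": alpha,
        "beta": beta,
        "odds_ratio": math.exp(beta),
        "wald": wald,
        "p_wald": p_wald,
        "lr": lr,
    }


# Hypothetical counts; not data from the study.
fit = fit_single_dummy_logit(10, 20, 50, 420)
print(fit)
```

A p-value below 0.01, 0.05, or 0.10 would correspond to significance at the 1%, 5%, or 10% level, respectively. For models with several regressors no closed form exists and an iterative routine from a statistical package is needed.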

The statistical analyses were performed using the SPSS statistical package.

4. Results

4.1. Descriptive Analysis

The geographical distribution of the universities in the Top 500 of each ranking (Table 1) showed that, for all the GURs, more universities were located in Europe than in any other region: 254 (THE), 212 (QS), 192 (ARWU) and 192 (Webometrics), followed by North America: 183 (Webometrics), 156 (ARWU), 142 (THE) and 112 (QS). Nevertheless, Asia outperformed North America in QS with 117 universities. The picture differed considerably for the universities in the Top 500 of the UI GreenMetric ranking, where more universities were located in Asia (200), followed by Europe (154) and Latin America (71), all ahead of North America (67).

According to rankings, the main differences among regions were found for the THE ranking, in which 50.6% of universities were located in Europe, followed by North America with 28.6%, Asia had 12.2% and Oceania had 7.2%. Conversely, the smallest geographical differences appeared in the ARWU ranking, with 38.4% of universities in Europe, followed by North America with 31.2%, Asia with 21.6%, and Oceania had 5.8%.

For regions, more North American universities were present in the Top 500 of the Webometrics ranking (36.6%). However, North America was less represented in the Top 500 of the UI GreenMetric ranking (13.4%). More universities from Europe were present in the Top 500 of the THE ranking (50.6%), but fewer were present in the Top 500 of the UI GreenMetric ranking (30.8%). Asia had 40.0% of the universities in the Top 500 of the UI GreenMetric ranking, but only 12.2% in the Top 500 of the THE ranking. The presence of universities from Oceania in rankings ranged from 7.2% in the THE ranking, to 1% in the UI GreenMetric ranking, while the presence of Latin American universities ranged from 14.2% in the UI GreenMetric ranking to 0.4% in the THE ranking.

Nonetheless, this geographic distribution changed when only the composition of the first quartile (Q1) in the Top 500 of the five rankings was studied. In GURs, more universities came from North America, according to Webometrics (72), ARWU (64), and THE (53). For Europe, more universities appeared only in the QS ranking (47). The same occurred with the Top 500 of the UI GreenMetric ranking, where more universities were located in Europe (56), followed by Asia (37), North America (20), and Latin America (10).

When studying how the number of universities evolved with quartiles, this number clearly tended to lower from Q1 to Q4 for North America in the Top 500 of the five rankings and for Europe in the Top 500 of the UI GreenMetric ranking. The opposite was observed for Asia because the number of universities tended to rise from Q1 to Q4, and did so more obviously in the UI GreenMetric ranking. This means that although Europe predominated the Top 500 of GURs, the first posts went to universities from North America. The same applied to Asia because, despite having more sustainable universities, the first posts went to European universities.

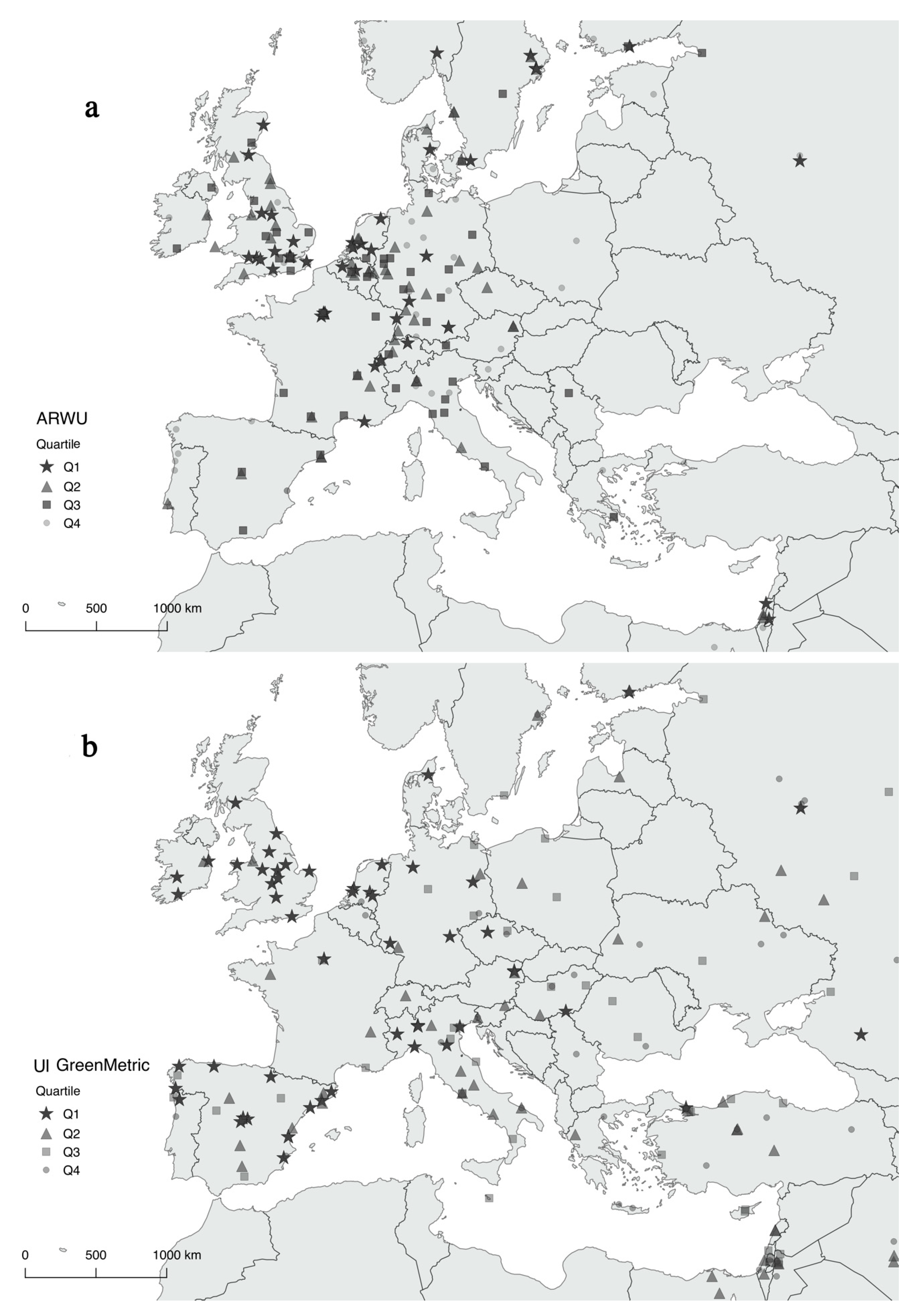

On the maps of universities per quartiles according to both the ARWU ranking (Figure 1a) and the UI GreenMetric ranking (Figure 1b), we confirm how the universities in ARWU formed four regional clusters in the core of the world economy: the center of Europe, North America, eastern Asia, and Australia. Conversely, in the Top 500 of the UI GreenMetric ranking, this geographic concentration was less marked and universities were more scattered worldwide, which meant that more universities appeared all over Asia and Latin America.

Although Europe is the region with the greatest equilibrium between both ranking types, Figure 2 details the distribution of universities per country in the Top 500 of the ARWU ranking (Figure 2a) and of the UI GreenMetric ranking (Figure 2b). The best positions occupied by universities in the ARWU ranking are concentrated basically in the UK, Germany, and the Netherlands. When analyzing these positions in the UI GreenMetric ranking, their composition changes in the UK, and virtually disappears in Germany and the Netherlands. Conversely, universities in Spain and northern Italy are practically nonexistent in the ARWU ranking but noticeably increase in the UI GreenMetric ranking while occupying the first quartile at the same time.

As for the distribution of universities per typologies (Table 2), in the Top 500 of the four GURs, about 80% of universities were public (78.6–85.2%). Moreover, most were non-technology (81.8–87.8%). Most of the universities in the Top 500 of the four GURs were more than 100 years old, and more universities of this age were also found in ARWU (65.4%) than in THE (60.2%) and QS (59.0%).

In the Top 500 of the UI GreenMetric ranking, most universities were non-technology (83.8%) and public (76.4%). In contrast to the GURs, only 29.6% of the universities were more than 100 years old.

The number of universities also included in the Top 500 of the UI GreenMetric ranking was similar across the Top 500 of the four GURs: between 64 (12.8%) in Webometrics and 57 (11.6%) in ARWU (Table 3). These percentages were somewhat higher for European universities, ranging between 16.7% in Webometrics and 12.3% in QS, which could be favored by their greater presence in the Top 500 of GURs. Asia also had a higher percentage than the mean, but only in QS with 13.7%. Latin America stands out the most: its percentage of GUR universities that were also in the Top 500 of the UI GreenMetric ranking ranged between 25.0% in QS and 50.0% in THE.

According to typologies, the presence of universities in the Top 500 of the four GURs and in the Top 500 of the UI GreenMetric ranking was slightly higher in universities younger than 100 years (13.1% in THE–16.0% in Webometrics) than for those aged more than 100 years (10.2% in ARWU–11.1% in Webometrics), and in public universities (12.7% in THE–13.8% in Webometrics) versus private ones (5.4% in THE–7.7% in QS). The same can be stated of non-technology universities (11.5%–13.1%) versus technology ones (6.7%–13.1%).

4.2. Statistic Analysis

The statistical analysis allowed us to know whether these differences in appearing in the Top 500 of the UI GreenMetric ranking, per region and typology of universities in all four GURs, were statistically significant (Table 4). We observed how the Latin American universities in the Top 500 of any GUR were more likely to be in the Top 500 of the UI GreenMetric ranking than those from other geographical locations, and this difference was statistically significant at the 99% or 90% level in all cases, except for THE. Indeed, the Latin American universities in the Top 500 of both ARWU and Webometrics were 5-fold more likely to be in the Top 500 of the UI GreenMetric ranking than those from other regions, with an error of 1%. They were followed far behind by European universities, which were 1.7-fold more likely to belong to the Top 500 of the UI GreenMetric ranking than those from the remaining regions in the ARWU, Webometrics, and THE rankings, with an error between 1% and 5%. Conversely, we found a negative association between being a North American university and belonging to the Top 500 of the UI GreenMetric ranking because their OR was always below 1, but this was only significant at 5% in the Webometrics ranking. For the remaining regions, Asia and Oceania, no significant association appeared between being in the Top 500 of any GUR and in the Top 500 of the UI GreenMetric ranking.

Although the ORs of universities were below 1 according to their age, which indicated that those universities older than 100 years were less likely to appear in the Top 500 of the UI GreenMetric ranking than younger universities, these differences were not significant. Conversely, the public universities in the Top 500 of GURs were about twice as likely to be in the Top 500 of the UI GreenMetric ranking as private universities, which was significant at 90% in both ARWU and THE. Finally, no significant differences appeared between technology and non-technology universities, save in QS, where non-technology universities were twice as likely to be in the Top 500 of the UI GreenMetric ranking as technology ones, with an error of 10%.

5. Discussion

Measuring the quality and scientific performance of universities is a very difficult task, which means that creating excellent and completely objective rankings is practically impossible [

47]. This might be because science is abstract and much more difficult to measure than any physical product, which has led to studies that have compared the positions that universities occupy in rankings. Some such studies have found a high correlation among the positions occupied by universities in ARWU, QS, and THE [

2,

3,

7,

8], and a low correlation of three GURs with Webometrics [

3]. However, this work analyzed the geographical distribution of the universities in the Top 500 of the four studied GURs and evidences a different occupancy in them according to regions either for each quartile or the total of universities. This is because, although the four GURs aim to measure universities’ academic performance, they measure it differently, as we have seen in the Background section. This leads to confusion for society about what GURs actually measure. It is also hard to measure universities’ quality only using the few numbers that GURs are based on. Former studies have questioned their reliability and confirm that GURs’ indicators are based mainly on research, and leave teaching, community service, and their environmental commitments to one side, which has brought about inconsistencies in classification systems. To all this, we must add that today universities are too concerned about improving the positions they occupy in GURs, and many universities are pressured to increase their number of publications to improve their occupancy in GURs [

7,

46,

49] and might neglect other important objectives, like serving society and being committed to sustainability. A conflict arises given the need to simultaneously deal with national interests and international publications [

62]. This can have the reverse effect, with universities serving rankings and neglecting their other missions. Those in charge of university policies and academicians look more outwardly to meet external demands rather than maintain local values through education [

63]. Rankings are not per se a purpose [

49] and it is important to reflect on the profound impact that GURs may have as a form of governance among academicians [

62].

Yet despite the geographical differences found among the four GURs, these geographical outcomes corroborated those indicated in other works [

7,

8,

9,

52], in which more universities were found in Europe and North America, and in this order [

2,

7]. The rankings in which European universities predominate more than North American ones are THE and QS. This may be due to the ARWU ranking being of Chinese nationality, which might make it less biased to favor the Anglo-American culture, whereas the rankings THE and QS are English. Initially, THE and QS came about as a single ranking as a response from Europe to the ranking ARWU. In 2009, the two independent THE and QS rankings were formed, which continue today [

49]. This means that the rankings THE and QS are similar to one another because they use expert surveys as well as databases [

6], unlike the ARWU ranking that employs only databases. Like ARWU, Webometrics strikes a better balance between North America and Europe, possibly because of the open-access databases it uses, and due to North American universities caring more about their presence on public websites than universities in other regions.

In any case, hegemony appears: the world regions with good economic development generally house the universities with large research budgets where teachers know the English language better, specifically regions of central Europe, North America, eastern Asia, and Australia. This finding coincides with another work [

56], while South America and Africa do not appear. Prestigious universities with history tend to be more highly classified. Thus, English and western universities occupy better positions than Asian and African universities, which reflects the possibility of those surveyed tending to think that using English is always better than using other languages [

46].

Nonetheless, it would be interesting to analyze the evolution of this geographical distribution over time, which a previous work did [

36]. Its authors found that, in 2018 compared to 2010, the number of universities located in the USA, UK, and France and positioned in the ARWU, THE and QS ranking lowered, but universities from Singapore and China rose.

Although regional differences were verified among the four GURs, the map completely changes when we analyzed the geographical distribution of the universities in the Top 500 of the UI GreenMetric ranking, which is a novelty of the present work. Basically, the most sustainable universities are scattered around the world and appear in new less economically developed places like Asia and Latin America. This present academic Anglo-American hegemony in GURs can be considered threatened by a potential change toward eastern Asia and a proliferation of universities worldwide if sustainability is taken into account. Only about 60 universities of the Top 500 that appear in the four GURs are found in the Top 500 of the UI Green Metric ranking. In other words, the number of sustainable universities from North America and Oceania drastically drops, as does the number of sustainable European universities, but to a much lesser extent. All this favors Latin American and Asian universities, whose presence substantially increases in the UI GreenMetric ranking. This means that of all the world regions, Europe strikes a better balance between two classification types: GURs rankings and the UI GreenMetric ranking. That is, between academic performance and sustainability. Nonetheless, the detail provided for countries on the map of Europe enables us to see that the predominant universities in the Top 500 of ARWU are from the UK, Germany, and the Netherlands as opposed to more southern countries, which completely changes in the UI GreenMetric ranking. Once again differences appear between north and south Europe owing to the economic power, history, and culture of its nations. This evidences the GURs’ limitations to measure university performance as they completely neglect the role of developing environmental sustainability. These indices should be adapted to the world’s current requirements.

Besides geographical location, we also observed how universities more than 100 years old predominated in GURs, especially in ARWU and Webometrics, but slightly less in THE and QS. This is in keeping with other previous works [

46,

52] as these last two rankings had more emerging universities because the four GURs employ different methodologies. However, in the UI GreenMetric ranking, younger universities set up less than 100 years ago predominate. This could be related to younger universities being located in Asia as these universities are also the majority in the UI GreenMetric ranking, according to Table 1. This might be due to universities’ age being closely related to Anglo-American academic hegemony. Perhaps older universities have more rigid structures that make it hard for their campuses to adapt to sustainability. Conversely, emerging universities have been more committed to the natural environment and have adapted to society’s new requirements. This was corroborated when we analyzed age among the universities in each GUR that are also found in the UI GreenMetric ranking, as proportionally more younger universities appear than those more than 100 years old. This could be due to the fact that among the universities that performed better academically, younger ones were more sustainable because they had adapted their premises to environmental requirements.

For public versus private universities, we observed a certain effect on sustainability. Among the universities present in the four GURs, public ones were almost twice as likely as private ones to also be found in the UI GreenMetric ranking. This finding was significant in the ARWU and THE rankings. The public universities that performed better academically could have more means to face climate change than private ones, or are perhaps not as concerned about their institution’s economic profitability as some private universities possibly are. This is very important because it indicates the relevant role that a country’s government may play in climate change via its universities.

Regarding specialties, as non-technology universities predominated in the four GURs, the specialties of universities could bias the composition of these GURs [9]. However, this was also the case of the UI GreenMetric ranking. When GURs interacted with the UI GreenMetric ranking, more sustainable non-technology universities appeared in QS, which could be due to the different spatial structure of these two university typologies. Thus, using “clusters of different university typologies” could be a recommendation for preparing environmental sustainability rankings [14].

Notwithstanding, we stress that the present work analyzed environmental sustainability in universities via the UI GreenMetric ranking, which considers six quantitative indicators. It would be relevant to also assess the university’s relationship with the rest of society in sustainability terms and integrating sustainability into all university community members and strata [

64].

6. Conclusions

GURs intend to measure the performance of universities worldwide. As with other previous works, we confirm a different composition for the universities in the Top 500 of GURs, which derives from them employing distinct indicators and methodologies, just as their different geographic distribution demonstrates. Although universities from North America and Europe are more often found in the four GURs, the former predominate ARWU and QS and the latter predominate Webometrics and THE.

Previous studies have doubted the reliability of GURs and confirmed that their indicators are based fundamentally on research, while they leave teaching, community service and commitment to the natural environment to one side, which has led to inconsistencies in classification systems. A university wrongly classified in GURs may be excellent in education or in other qualities that contribute to society compared with other universities included in GURs. Nowadays, climate change is a world threat, and universities must be committed to the climate, promote actions that help to protect the natural environment and, thus, include this objective in their campus actions.

This study is the first to relate GURs to the UI GreenMetric ranking to examine sustainability on the campuses of those universities that perform better academically. The obtained results reveal that the universities included in GURs are not always the best ones in sustainability matters. When sustainability is evaluated on their campuses, those located in Asia appear more often in the Top 500 of the UI GreenMetric ranking, followed by European ones. This indicates that a low association exists between universities’ academic performance and their commitment to the natural environment at the heart of their institutions.

Only about 12% of the universities in the Top 500 of the four GURs are also in the Top 500 of the UI GreenMetric ranking. Europe is the only region with the most universities that both perform best academically and have more sustainable campuses, with a 1.5-fold higher probability than the other continents. Although very few Latin American universities are among the best-performing ones in academic terms, more of them have sustainable campuses than those of other regions, with a 5-fold higher probability of being in the Top 500 of the UI GreenMetric ranking.

By typology, differences in performance also appear in each GUR in relation to its interaction with the UI GreenMetric ranking. Although younger universities are in the Top 500 of the UI GreenMetric ranking, universities’ age in GURs does not emerge as a statistically significant factor for being more or less sustainable. Conversely, being a public rather than a private university is positively and significantly associated with being in the Top 500 of the UI GreenMetric ranking, but only in two GURs: ARWU and THE. Only in the QS ranking is the possibility of being in the Top 500 of the UI GreenMetric ranking for the non-technology universities in its Top 500 double that of the technology ones.

For all these reasons, GURs should be studied and updated in light of the new challenges that society faces. To this end, we propose including other indicators in GURs, apart from those already established, or even creating other integral measurement rankings. These would be related to environmental sustainability and social inclusion, in the same way as companies measure CSR: economic area, social area, and environmental area. Thus, universities would bear in mind that their objectives should include not only research and education, but also socio-environmental improvement. This would contribute to advances not only in research and education, but also in social equality and an improved environment, while governments in different countries would be able to play a key role in this purpose through their universities.

Thus, those universities that perform well academically and increase their commitment to mitigating climate change can be an example for other universities to do the same. One way would be to improve educational quality through good teaching practices while generating environmental awareness and creating sustainable spaces. In this way, universities would drive a sustainability culture in society and become models for sustainable development by implementing green policies on their campuses and prioritizing indicators of energy efficiency, waste management, water conservation, transport, and research to achieve integral long-term sustainability.