Social Media Data Analytics to Enhance Sustainable Communications between Public Users and Providers in Weather Forecast Service Industry

Abstract

1. Introduction

2. Material and Method

2.1. Data Collection

2.2. Analysis Method

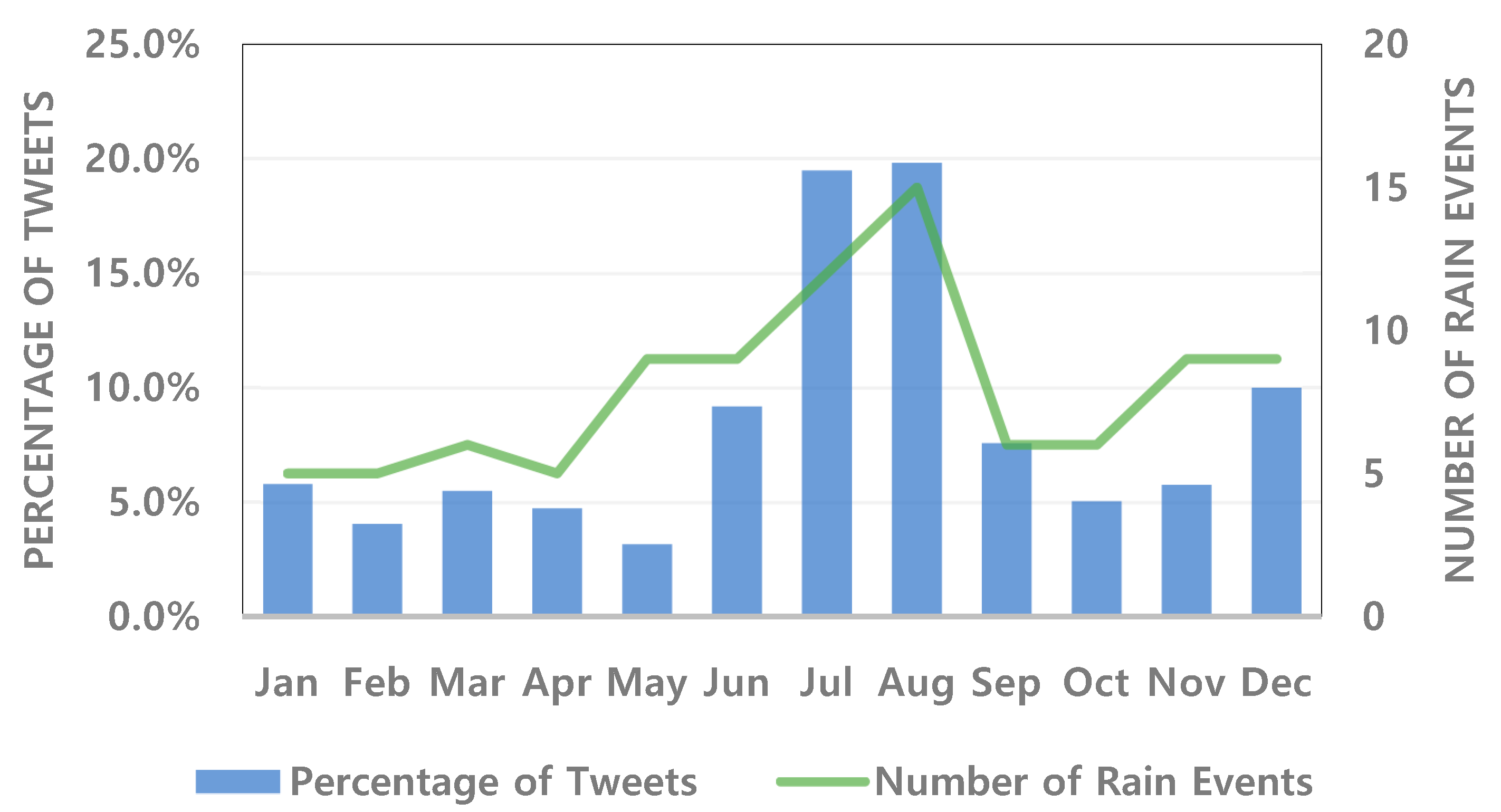

3. Results

3.1. Textual Analysis of Negative Opinions about the Weather Forecast Errors

3.2. Interpretation of Association Rules

- Hey, KMA... When is the shower coming? It’s hot enough already.

- The forecast said it would rain heavily, but the only thing pouring like rain is my sweat. I was tricked by the Korea Meteorological Administration again.

- The weather service has given me arm muscles. What was my umbrella for? It is nothing but sunny.

- I even brought an umbrella and wore boots, believing it would rain. Instead of rain, the sun is sizzling... Weather agency, are you kidding?

- It’s a lot of rain for the fall. The KMA said 0.1 mm per hour, but it doesn’t look like it.

- Hey, KMA guys. You said the weather would be mild and nice today, didn’t you? I got soaked without an umbrella. If I have a lousy head, I’ll sue you.

- It’s raining. I didn’t bring an umbrella because the forecast said it would rain around 9 p.m., so I got caught in the rain on my way home from work. The weather agency’s supercomputer is worth 10 billion won? Its employees’ salaries come from our taxes.

- The rain is so heavy that I can barely see, as if in a typhoon, and you said there would be just a shower or two?

- The Korea Meteorological Administration said this winter would be warm, but it has been cold since early December! The KMA’s weather forecast is wrong again!

4. Discussion

5. Conclusions

- It is crucial to consider the behaviors of Korean people and to improve the perceived accuracy of precipitation forecasts. In particular, even minor corrections to the precipitation forecasts for each season are needed to reduce the frequency of “False alarm” errors in spring and summer and to prevent “Miss” errors in fall (see Rules A to D).

- In winter, the temperature forecast is more important than the precipitation forecast. The long-term forecast of winter cold, which is announced in late fall (when people prepare for winter), needs technical improvement because it has a greater impact on public impressions than 24-h forecasts do (see Rule G).

Author Contributions

Funding

Conflicts of Interest


| Sentiment | Occurrences | Percentage |
|---|---|---|
| Negative | 2177 | 74.5% |
| Neutral | 637 | 21.8% |
| Positive | 107 | 3.7% |
| Total | 2921 | 100.0% |
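Each Percentage value is the class count divided by the 2921 classified tweets, rounded to one decimal place. A minimal sketch that reproduces the column from the counts in the table (the variable names are illustrative only):

```python
# Tweet counts per sentiment class, copied from the sentiment table.
counts = {"Negative": 2177, "Neutral": 637, "Positive": 107}

# Total number of classified tweets.
total = sum(counts.values())

# Share of each class in percent, rounded to one decimal place as in the table.
shares = {label: round(100 * n / total, 1) for label, n in counts.items()}

print(total)   # 2921
print(shares)  # {'Negative': 74.5, 'Neutral': 21.8, 'Positive': 3.7}
```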

| Case | Date | Tweets: Total | Tweets: Negative | Phenomenon: Rain | Phenomenon: Heat | Phenomenon: Downpour | Phenomenon: Typhoon | Error: FA | Error: Miss |
|---|---|---|---|---|---|---|---|---|---|
| A | 25 Jul | 90 | 86 | 54 | 22 | - | - | 49 | 1 |
| B | 3 Aug | 61 | 53 | 27 | 3 | - | 16 | 24 | 3 |
| C | 18 Jul | 59 | 51 | 27 | 7 | 8 | - | 19 | 12 |
| D | 12 Sep | 48 | 43 | 31 | - | 8 | - | - | 36 |
| Total | | 258 | 233 | 139 | 32 | 16 | 16 | 100 | 74 |

| Rule | Association Rule | Support | Confidence | Lift | Case A (Table 3) | Case B (Table 3) | Case C (Table 3) | Case D (Table 3) |
|---|---|---|---|---|---|---|---|---|
| A | {FA, Heat, Rain, Summer}→{Censure} | 0.013 | 0.674 | 1.958 | ○ | ○ | - | - |
| B | {Miss, Rain, Autumn}→{Censure} | 0.025 | 0.625 | 1.814 | - | - | - | ○ |
| C | {FA, Rain, Spring}→{Censure} | 0.011 | 0.605 | 1.757 | - | - | - | - |
| D | {FA, Paraphernalia, Rain, Summer}→{Censure} | 0.013 | 0.583 | 1.693 | - | - | ○ | - |
| E | {Miss, Paraphernalia, Rain}→{Censure} | 0.015 | 0.569 | 1.652 | - | - | ○ | - |
| F | {Miss, Rain, Time}→{Censure} | 0.010 | 0.512 | 1.485 | - | - | ○ | - |
| G | {Miss, Cold, Extended-Forecast, Winter}→{Censure} | 0.017 | 0.507 | 1.472 | - | - | - | - |
| H | {Miss, Downpour}→{Censure} | 0.010 | 0.500 | 1.451 | - | - | - | ○ |
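The Support, Confidence, and Lift columns can be read with the standard association-rule definitions, assumed here: for a rule X→Y, support is the share of transactions containing X ∪ Y, confidence is support(X ∪ Y) / support(X), and lift is confidence / support(Y). The sketch below computes these on a small set of hypothetical labelled tweets (not the study's data) and then, using only the Confidence and Lift values from the table, back-computes the implied support of {Censure}. The function names and the toy transactions are illustrative assumptions, not the authors' implementation.

```python
"""Standard support/confidence/lift for association rules, plus a
consistency check on the table's Confidence and Lift columns."""

from typing import FrozenSet, Iterable, List

Itemset = FrozenSet[str]


def support(itemset: Iterable[str], transactions: List[Itemset]) -> float:
    """Share of transactions that contain every item in `itemset`."""
    items = frozenset(itemset)
    hits = sum(1 for t in transactions if items <= t)
    return hits / len(transactions)


def confidence(lhs: Iterable[str], rhs: Iterable[str],
               transactions: List[Itemset]) -> float:
    """Estimated P(rhs | lhs): support of lhs ∪ rhs over support of lhs."""
    both = frozenset(lhs) | frozenset(rhs)
    return support(both, transactions) / support(lhs, transactions)


def lift(lhs: Iterable[str], rhs: Iterable[str],
         transactions: List[Itemset]) -> float:
    """Confidence divided by the baseline support of the consequent."""
    return confidence(lhs, rhs, transactions) / support(rhs, transactions)


# Hypothetical labelled tweets (NOT the study's data): one item set per tweet.
TRANSACTIONS: List[Itemset] = [
    frozenset({"FA", "Rain", "Summer", "Censure"}),
    frozenset({"FA", "Rain", "Summer", "Censure"}),
    frozenset({"FA", "Rain", "Summer"}),
    frozenset({"Miss", "Rain", "Autumn", "Censure"}),
    frozenset({"Miss", "Rain", "Autumn"}),
    frozenset({"Rain", "Summer"}),
    frozenset({"Heat", "Summer"}),
    frozenset({"Cold", "Winter"}),
]

lhs, rhs = {"FA", "Rain", "Summer"}, {"Censure"}
print(f"support    = {support(lhs | rhs, TRANSACTIONS):.3f}")     # 0.250
print(f"confidence = {confidence(lhs, rhs, TRANSACTIONS):.3f}")   # 0.667
print(f"lift       = {lift(lhs, rhs, TRANSACTIONS):.3f}")         # 1.778

# (Confidence, Lift) pairs copied from the association-rule table.
# Under the standard definitions, confidence / lift = support({Censure}).
TABLE = {
    "A": (0.674, 1.958), "B": (0.625, 1.814),
    "C": (0.605, 1.757), "D": (0.583, 1.693),
    "E": (0.569, 1.652), "F": (0.512, 1.485),
    "G": (0.507, 1.472), "H": (0.500, 1.451),
}
implied = {rule: conf / lft for rule, (conf, lft) in TABLE.items()}
for rule, value in implied.items():
    print(rule, f"{value:.4f}")
```

In the toy data the lift of about 1.78 exceeds 1, meaning the antecedent raises the chance of Censure above its 3/8 baseline. For the table, every confidence/lift ratio falls near 0.344, which suggests (if the standard definitions were used) that roughly a third of the analysed transactions contained the Censure item, so all eight rules share a consistent baseline.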

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Lee, K.-K.; Kim, I.-G. Social Media Data Analytics to Enhance Sustainable Communications between Public Users and Providers in Weather Forecast Service Industry. Sustainability 2020, 12, 8528. https://doi.org/10.3390/su12208528