Abstract

Recent civilizational transformations have prompted the search for, and introduction of, new didactic solutions. One of these is e-learning, which responds to the needs of the education system and its individual stakeholders. The e-learning systems currently available offer similar solutions; significant differences in their functionality become apparent only during direct interaction with a given tool. Evaluation studies make it possible to identify the indicators that determine this functionality. The paper presents e-learning in the context of the academic training of future generations of teachers. The reflections revolve around digital literacy and experience in using modern information and communication technologies in education. The goal of the research was to evaluate three areas: the functionality of the SELI platform, individual experiences with e-learning, and digital literacy. The technique used was an e-learning survey appended to the end of two e-learning courses offered on the platform. The survey was addressed to teachers and students of the largest pedagogical university in Poland. Most respondents rated their general impression of the content presented on the platform as very good or good. The platform itself was also evaluated positively. Based on the analyses conducted, two groups of platform users were identified. One third of the users have diverse experiences with e-learning, which corresponds with their digital literacy. The remaining two thirds of the respondents need more training in the areas evaluated. The authors believe that this type of study should accompany all activities that introduce e-learning at every stage of education. Only then will it be possible to discover where the digital divide lies among teaching staff and learners.

Keywords:

e-learning; new platform; evaluation; SELI; experiences; students; teachers; digital literacy; Poland

1. Introduction

The development of modern means of communication has influenced contemporary education. Traditional forms of teaching and those based on e-learning have been enriched by new forms that overcome the barriers of time and space. Web-based training (WBT) and computer-based learning (CBL) not only expand the opportunities for teachers and students and make learning more attractive, but also help to eliminate factors that previously limited both groups. Modern societies function mainly on the basis of information and knowledge, so their members need to develop their competencies both constantly and rapidly [1,2]. Individualization and easy access have become the categories that determine the attractiveness of educational offers. E-learning requires only access to dedicated tools, namely e-learning platforms, which are usually intuitive and can be accessed from different devices.

To meet current needs, universities offer classes on different LMS (learning management system) platforms. One important issue is selecting a product that meets the expectations both of the academic teachers who develop the courses and of the students who take them. To ensure the highest quality of learning and to address the needs of different study groups (including groups at risk of social marginalization), increasingly advanced tools are being designed. One of these is the SELI (Smart Ecosystem for Learning and Inclusion) platform, created as part of an international project. In line with the project requirements, the platform was subjected to a comprehensive evaluation covering its different components. Evaluations of VLEs (virtual learning environments) are common, but most are limited to an assessment of the VLE’s functionality. The evaluation presented here was extended with the additional element of digital literacy (understood as knowledge, skills, and previous experience with technical operation and information management). This subjective factor can sometimes distort the general evaluation of a product, and the authors wanted to avoid this bias. Taking this variable into account supports a more objective evaluation of the VLE and helps to separate opinions about purely technical aspects from opinions resulting from unfamiliarity or subjective fears.

The lack of relevant digital literacy is one of the main factors that determine the digital divide. That is why all initiatives to develop digital literacy are so important. Education platforms provide an environment where this development takes place in a natural and practical way. The education market offers increasingly advanced and user-friendly solutions. Monitoring the opinions of people from at-risk groups is a key element when developing innovative solutions to reduce educational failure among these individuals. Evaluation reports should be disseminated internationally, because only the exchange of ideas and collaboration at this level will lead to the optimization of new e-learning environments.

Theoretical Framework

E-learning is defined as a formal approach to learning where the instructor and students interact remotely with one another using Internet technologies (e-infrastructure) [3]. There are different concepts and interpretations of e-learning. Each of these concepts is historically rooted and finds its expression in modern education institutions that are open to global culture, society, and individuals [4].

Pham et al. (2018) [5] list four main characteristics of e-learning. They include (1) instructor and students who work together remotely, (2) universities that support the learning and teaching process using online platforms together with adapted student evaluation strategies, (3) Internet technologies used for interactions between the instructor and the students, and (4) effective communication between all stakeholders.

Information and communication technologies and tools are developing rapidly, and this is one of the reasons why e-learning is important from the perspective of mass implementation and development [6]. The rapid development of modern technologies prompts researchers to undertake analyses that systematize the changes associated with technological determinism. The transformations resulting from the implementation of ICT (Information and Communication Technologies) in professional, private, and educational life are of interest not only to sociologists and computer scientists, but also to educators. Education specialists increasingly analyze the extent to which new technologies contribute to the improvement of digitally mediated didactics. In addition, researchers working at the intersection of media sociology, pedagogy, and computer science are trying to identify factors that enable the effective implementation of new media in the educational process. These researchers are aware of the organizational, didactic, economic, motivational, and competence-related diversity involved. This diversity of determinants has given rise to several theories that are useful for evaluating new digital teaching tools. The most popular theories used in research on digitally mediated didactics are listed in Table 1.

Table 1.

Review of the theories related to the inclusion of new technologies in educational processes.

When analyzing issues related to the inclusion of new solutions in e-learning, it is worth referring to research results that are consistent with the objectives of this study. Rabiman et al. [25] compared the results of traditional education and e-learning, emphasizing that contemporary students prefer digital content. This favors online education in situations in which didactic materials can be processed (created, stored, and shared) via the Internet. However, successful education requires a high level of interaction and collaboration [26]. Collaboration is a “form of relations” between students and a “way of learning”; it focuses on the relationships implied by the tasks and on the process of completing these tasks [27]. Collaboration during problem-solving is one of the skills best promoted by modern e-learning, and problem-solving skills are considered an integral part of understanding specific concepts during learning. However, the literature often reports high rates of failure and dropout from activities on platforms and mobile applications. At the same time, both students and teachers appreciate the opportunities provided by e-learning and rate highly the way it supports students’ cognitive processes and engagement [28]. In the context of e-learning, the philosophical position of social constructivism is also invoked; it holds that group work, language, and discourse are crucial for effective learning [29].

Thanks to online learning and teaching platforms, e-learning has greatly influenced higher education [30]. Pham et al. [31] cite the results of a diagnostic survey conducted since 1997. The survey for the academic year 1997–1998 showed that more and more academic courses were being delivered as e-learning, supporting the education of over 1,400,000 students; in the academic year 2005–2006, more than 318,000 people in the U.S. received their bachelor’s degree in business and more than 146,000 received their master’s degree in business using e-learning. These authors argue that this tendency is still growing, as shown by the many advanced Internet applications being introduced at different universities. Information technology used for academic e-learning must be constantly improved and adapted to the needs of these universities. Tawafak et al. [32] conducted a study to develop a structure based on the university communication model (UCOM). Following Al-Qirim [33], they assume that online learning has become one of the most frequently used forms of learning and a necessary university practice that includes the integration of e-learning and scientific mediation strategies, support for active learning, and real collaboration between students through online technologies. At the same time, they emphasize that e-learning faces some problems, and that institutions therefore need to plan remote education and introduce more best practices for evaluating e-learning in order to improve student outcomes. Drawing on the experiences of different countries, universities are organizing distance learning at an ever higher level. This is particularly important in pre-service teacher training: by improving their metacognitive competencies, these future teachers can prepare their own students for lifelong learning.
As early as the first decade of the 21st century, the emphasis was on the development of cloud computing, which was expected to be a major step towards closing the gaps between the use of ICT in teacher training and in teachers’ future professional work [34]. This research indicated that teachers must thoroughly plan their didactic role in order to create communication situations adapted to different needs in a virtual environment based on asynchronous learning. Other studies explore how effectively online education platforms motivate teachers to improve their didactic skills and students to learn, as well as the strategic grounds for choosing technologies according to learning goals and content [35]. For at least the last several years, universities have successfully used the Moodle platform, mainly in hybrid education. Researchers analyze blended (mixed-model) learning based on three main theories: behaviorism, cognitivism, and constructivism. However, in their attempts to obtain the most effective outcomes, they face many limitations [36,37].

At present, the emphasis is on further developing e-learning platforms and adding specific, professional content prepared by experts in the relevant fields. There are strong indications in favor of forming a community of practitioners, that is, teachers from different universities who would cooperate with the teaching staff of pedagogical universities to solve existing content-related, didactic, and technical problems [38]. At the international level, higher education institutions recognize the need to implement and integrate ICT in order to make use of current opportunities and face the challenges that accompany innovations in teaching and learning processes [39]. As a result, experts from various fields have combined their efforts to implement virtual learning environments called “learning management systems,” with functionalities that support flexible and active learning within the constructivist approach. Previous studies show, however, that such platforms are used mainly in an instrumental and functional way, as repositories of resources and information, while their didactic use remains limited [39]. This problem needs to be discussed and reflected upon from a systemic perspective, especially in universities that train pre-service teachers.

This paper is part of an analysis related to the modernization of education. The creation of a new, international e-learning platform is a rare undertaking in pedagogical practice. It is also difficult to implement because of the interdisciplinary, multi-stage, and complex nature of the work, which requires knowledge of computer science, pedagogy, higher education didactics, and pedeutology. The data presented in the following sections cover part of the activities within SELI, the Smart Ecosystem for Learning and Inclusion project, which developed a new virtual learning environment that has been implemented in selected countries of Latin America, the Caribbean, and Europe. The implementation of the platform was combined with its evaluation, the improvement of the SELI environment, and the measurement of key parameters for the platform’s implementation. The comparison of the platform’s evaluation with the self-evaluation of the digital competences of future users and their experiences with e-learning is unique. The paper characterizes two areas that are strategic for implementing new solutions based on e-learning or blended learning.

The presented text is also a response to the needs of the school and academic community, which is looking for new virtual programs and websites to enhance the effectiveness of learning and teaching. The text is unique because it combines an international perspective and research aimed at changing educational practices, and shows the coexistence of key variables for implementing new e-learning solutions.

2. Materials and Methods

2.1. Research Objective and Problems

The main purpose of the study was to evaluate the new e-learning platform SELI and to compare this evaluation with previous e-learning experiences and an evaluation of individual digital literacy. The following research questions were identified:

- How do students and teachers evaluate the new e-learning platform?

- What is the difference in the evaluation of elements of the new e-learning platform by teachers and students?

- What is the relationship between the evaluation in terms of general course quality, professionally prepared materials, content usefulness, visual design, and the innovative character of the platform?

- To what extent is the evaluation connected with previous experiences of e-learning and the self-evaluation of digital literacy?

- Based on the data collected, which groups of users of the new platform can be identified?

2.2. Research Procedure

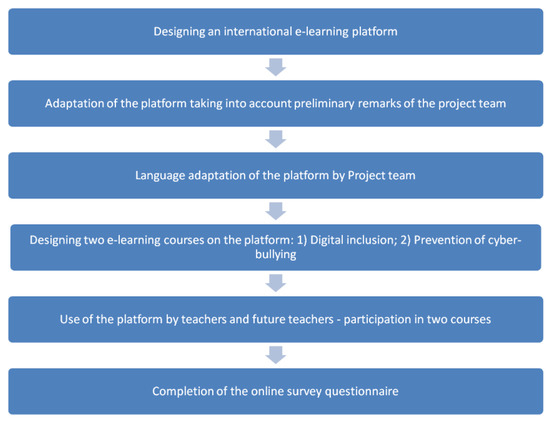

The study was conducted at the largest Polish university focused mainly on the education of pre-service teachers. The research was carried out in several stages, which are shown in detail in the diagram below (Figure 1).

Figure 1.

Implementation and testing procedure.

The SELI platform was developed within an international implementation and research project involving several countries from Latin America, Europe, and the Caribbean. Its goal is to create a new, visually attractive, and intuitive website to support learning and teaching at the academic level [40,41], as well as in-service training for active teachers [42]. Designed from scratch, the platform addresses different levels of the digital divide [43] by creating a friendly learning and teaching environment within formal, non-formal, and informal education. Its interface enables the website to be used by people with visual and hearing impairments [44,45,46]. The tool was created in cooperation with a team of IT specialists, teachers, pedagogues, and special education teachers [47]. The platform uses relatively new solutions based on digital storytelling, blockchain, and problem-based learning [48]. It is available in several languages: English, Spanish, Portuguese, Turkish, and Polish. This study involved a test of the platform by Polish students using the Polish interface. To ensure stable functioning of the learning environment, the server used in the tests was located in Europe (at the University of Eastern Finland, Joensuu, Finland). In the first part, students and teachers were invited to explore one of the two e-learning courses available on the SELI (Smart Ecosystem for Learning and Inclusion) platform [49].

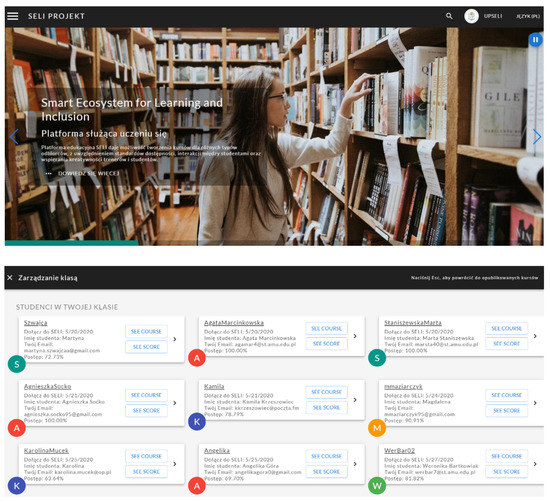

The students and teachers participating in the study could choose one of two courses: (1) Cyberbullying Prevention [50,51] or (2) Digital Inclusion [52]. The goal of the first course was to present information about the mechanisms and scale of cyberbullying among children and adolescents, as well as the methods available to address this problem in teaching. The course was addressed to both active and pre-service teachers. The second course was designed to present the phenomenon of the digital divide and the methods by which digital inclusion can be achieved, including comprehensive methods for improving the digital literacy of older people. Both courses were prepared by professionals with extensive experience in research on cyberbullying and digital inclusion, and both were subject to internal and external reviews. Screenshots of the platform interface are presented below (Figure 2).

Figure 2.

SELI platform interface.

Participation in testing and in the final survey was voluntary. The study was conducted in Poland between May and July 2020, with students of pedagogical faculties and teachers participating in university vocational training.

2.3. Sample Characteristics

There were 227 respondents. A total of 35.2% (N = 80) chose the Digital Inclusion course, whereas 64.8% (N = 147) took part in the Cyberbullying Prevention course. Women made up 98.2% of the sample (N = 223) and men 1.8% (N = 4). This disproportion reflects the fact that the teaching profession and the student population of education faculties in Poland are predominantly female. Students accounted for 83.3% (N = 189) of the respondents and active teachers for 16.7% (N = 38). A total of 46.3% (N = 105) of the respondents lived in rural areas, 26.4% (N = 60) in towns of up to 100,000 residents, and 27.3% (N = 62) in cities with more than 100,000 residents. The mean age of the respondents was 24.6 years (SD = 7.47). The sample does not allow generalization to the broader population of pedagogy students or teachers.
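As a quick arithmetic check, the reported percentages can be recomputed from the counts and N = 227. The short Python sketch below uses only the figures given in this section; the labels and the helper name `share` are ours, chosen for illustration. It also confirms that each breakdown (course, gender, status, place of residence) covers the whole sample exactly once.

```python
# Recompute the sample shares in Section 2.3 from the reported counts.
N = 227  # total number of respondents

counts = {
    "Digital Inclusion": 80,
    "Cyberbullying Prevention": 147,
    "women": 223,
    "men": 4,
    "students": 189,
    "active teachers": 38,
    "rural area": 105,
    "town up to 100,000": 60,
    "city over 100,000": 62,
}

# Each breakdown should account for every respondent exactly once.
breakdowns = [
    ("Digital Inclusion", "Cyberbullying Prevention"),
    ("women", "men"),
    ("students", "active teachers"),
    ("rural area", "town up to 100,000", "city over 100,000"),
]
for parts in breakdowns:
    assert sum(counts[p] for p in parts) == N, parts


def share(count: int, total: int = N) -> float:
    """Percentage of the sample, rounded to one decimal place."""
    return round(100 * count / total, 1)


for label, count in counts.items():
    print(f"{label}: {share(count)}%")
```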

The teachers and future teachers examined were selected purposively, in line with the assumptions of the project: the SELI platform is aimed at current and future teaching staff. Participation in the research was voluntary. Teachers cooperating with the Pedagogical University, e.g., supervisors of student teaching practice and teachers improving their professional qualifications, were invited to participate. Students participating in academic courses related to new media were also invited; they voluntarily chose the course in which they participated and then completed the questionnaire. Instructions on using the platform and completing the questionnaire were provided by staff of the Pedagogical University of Krakow. The collected results do not allow the observed regularities to be transferred to all Polish teachers or pedagogy students. Nevertheless, the data allowed for the first test of the SELI platform among people with a close interest in digital inclusion and cyberbullying prevention.

2.4. Research Tools

Once they had tested the platform, the students and teachers were asked to complete a survey consisting of several scales measuring:

- General evaluation of how the e-learning platform functioned, focusing on the following elements: course quality, professionalism of the resources, usefulness of the content, graphic design, and modern character of the platform. Feedback was collected on a 5-point Likert scale, where 1 is very poor and 5 is very good. The scale is an original tool with the following psychometric properties:

Scale Reliability Statistics

| | McDonald’s ω | Cronbach’s α | Guttman’s λ6 |
|---|---|---|---|
| Scale | 0.880 | 0.876 | 0.879 |

- Experiences with e-learning during the last year, including participation in online training that was mandatory in the official study curriculum or required for career development, searching the Internet for appropriate resources in order to complete online training, participation in free e-learning courses (e.g., foreign languages, ICT), participation in paid online courses, and participation in online study groups. Respondents answered on a 5-point scale, where 1 is never and 5 is very often. The scale had the following psychometric properties:

Scale Reliability Statistics

| | McDonald’s ω | Cronbach’s α | Guttman’s λ6 |
|---|---|---|---|
| Scale | 0.680 | 0.666 | 0.651 |

- Self-evaluation of digital literacy, covering skills and knowledge related to using a text editor (e.g., Word), a spreadsheet program (e.g., Excel, Calc), presentation software (e.g., PowerPoint), and graphics software (e.g., Picasa, Gimp), as well as awareness of digital threats (e.g., cyberbullying, Internet addiction, sexting). The respondents rated their knowledge and skills on a 5-point Likert scale, where 1 is very low and 5 is very high. The scale had the following psychometric properties:

Scale Reliability Statistics

| | McDonald’s ω | Cronbach’s α | Guttman’s λ6 |
|---|---|---|---|
| Scale | 0.788 | 0.768 | 0.776 |

The instrument as a whole shows a satisfactory level of internal consistency (Cronbach’s α = 0.731).
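Cronbach’s α, reported for each scale above, compares the sum of the item variances with the variance of the total score: α = k/(k − 1) · (1 − Σ item variances / total-score variance), where k is the number of items. The Python sketch below is a minimal illustration of that formula, not the software the authors used. The function name `cronbach_alpha` and the response matrix are hypothetical. McDonald’s ω and Guttman’s λ6 require a factor model or item regressions and are not reproduced here.

```python
from statistics import variance


def cronbach_alpha(responses: list[list[float]]) -> float:
    """Cronbach's alpha for a respondents-by-items matrix of Likert scores.

    alpha = k / (k - 1) * (1 - sum(item variances) / variance(total score))
    Sample variances (n - 1 denominator) are used throughout. The ratio is
    the same with population variances, as long as one convention is used.
    """
    k = len(responses[0])  # number of items in the scale
    if k < 2:
        raise ValueError("alpha needs at least two items")
    items = list(zip(*responses))  # one tuple of scores per item
    item_var_sum = sum(variance(item) for item in items)
    total_var = variance([sum(row) for row in responses])
    return k / (k - 1) * (1 - item_var_sum / total_var)


# Hypothetical answers of five respondents to a five-item scale (1-5).
example = [
    [4, 5, 4, 5, 4],
    [3, 3, 4, 3, 3],
    [5, 5, 5, 4, 5],
    [2, 3, 2, 3, 2],
    [4, 4, 3, 4, 4],
]
print(f"alpha = {cronbach_alpha(example):.3f}")
```

Two limiting cases help with interpretation. When all items are identical, α equals 1. When the items are uncorrelated, the total-score variance equals the sum of the item variances, so α drops to 0.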

2.5. Limitations and Directions of Research

The study was conducted according to the accepted standards of the social sciences. However, given the characteristics of the sample, the data collected cannot be generalized to the whole population. Because the sampling was non-random and the sample non-representative, the results cannot be transferred to all students and teachers in Poland who are improving their professional qualifications, or to all students of the Pedagogical University of Krakow. The platform should therefore be tested on more representative samples and in different types and forms of professional training for pre-service and active teachers. In addition, the authors are aware that self-assessment is not an ideal way to measure digital literacy. Later editions of the study are expected to use objective tests to measure digital literacy, for example, the European Certificate of Digital Literacy (ECDL) test sets.

3. Results

3.1. Evaluation of the New E-Learning Platform

Before addressing the research questions, the proposed research model was verified. The Kaiser–Meyer–Olkin (KMO) measures of sampling adequacy for all indicators in the proposed model ranged from moderate to high (see Table 2), which means that the data collected are suitable for factor analysis. Exploratory factor analysis (EFA) was used. The data are characterized by the following statistics: Bartlett’s test of sphericity, χ² = 1235.524, df = 105, p < 0.001; model-fit chi-squared test, χ² = 222.472, df = 63, p < 0.001. A confirmatory factor analysis (CFA) of this model was also carried out and is reported in Appendix A.

Table 2.

Kaiser–Meyer–Olkin test.
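The reported degrees of freedom (df = 105 for Bartlett’s test and df = 63 for the chi-squared test) can be checked against the model structure. This check assumes, as Section 2.4 suggests, p = 15 observed indicators (five items in each of the three scales) and m = 3 extracted factors. Under those assumptions, the standard formulas reproduce both values. Here n is the sample size, R is the p × p correlation matrix of the indicators, r_ij are its off-diagonal elements, and a_ij are the corresponding partial (anti-image) correlations. The match is our consistency check, not a computation reported by the authors.

$$
\chi^2_{\mathrm{Bartlett}} = -\left(n - 1 - \frac{2p + 5}{6}\right)\ln\lvert R\rvert,
\qquad
df_{\mathrm{Bartlett}} = \frac{p(p - 1)}{2} = \frac{15 \cdot 14}{2} = 105
$$

$$
df_{\mathrm{fit}} = \frac{(p - m)^2 - (p + m)}{2} = \frac{(15 - 3)^2 - (15 + 3)}{2} = \frac{144 - 18}{2} = 63
$$

$$
\mathrm{KMO} = \frac{\sum_{i \neq j} r_{ij}^2}{\sum_{i \neq j} r_{ij}^2 + \sum_{i \neq j} a_{ij}^2},
\qquad
\mathrm{MSA}_i = \frac{\sum_{j \neq i} r_{ij}^2}{\sum_{j \neq i} r_{ij}^2 + \sum_{j \neq i} a_{ij}^2}
$$

The per-indicator value MSA_i is presumably what a table of KMO values for individual indicators reports. Values close to 1 indicate that partial correlations are small relative to the zero-order correlations, which favors factor analysis.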

Promax rotation yielded three factors. All the indicators load coherently on the variables adopted in the model: evaluation of course quality (factor 1), self-evaluation of digital literacy (factor 2), and e-learning experiences in the calendar year (factor 3). The factor loadings of the individual indicators are presented in detail in Table 3.

Table 3.

Factor loadings.

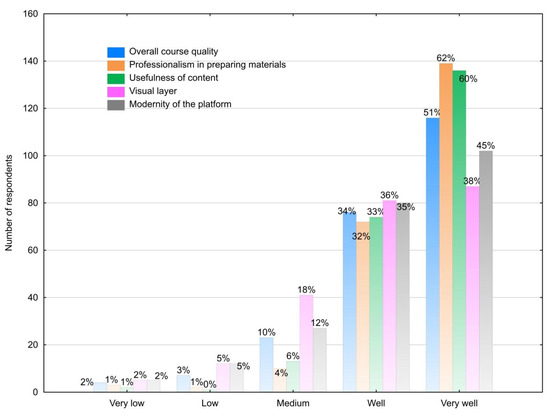

Most students and teachers rated the new SELI platform as very good or good. The most highly rated aspects were the quality of the content on the platform and the usefulness of the resources presented. A total of 95% of the respondents rated the professionalism of the materials as high or very high. Almost one in five respondents (18%) rated the visual side of the platform as average, and the same share (18%) was not satisfied with the graphical layout of the new platform. It should be noted that during their studies, pre-service and active teachers have the opportunity to explore the Moodle platform, the official e-learning environment of the population studied, which may serve as a reference point when they assess SELI’s design. The detailed distribution of answers is presented in Figure 3.

Figure 3.

Evaluation of SELI platform in five aspects.

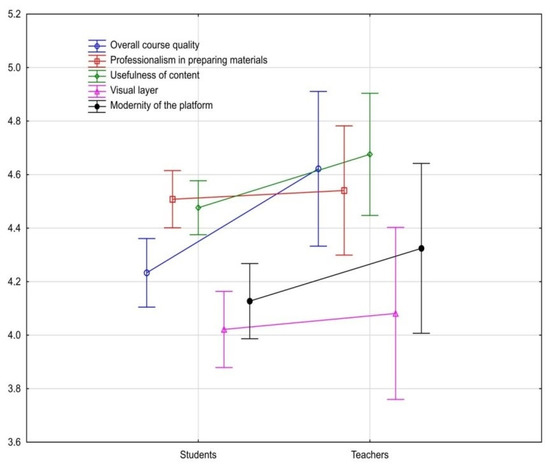

3.2. Evaluation of the New E-Learning Platform (Students vs. Teachers)

Students and teachers differed significantly in only one of the areas evaluated: the teachers rated the general aspects of the course more highly, F(1, 224) = 5.8716, p = 0.01618. The other elements, namely content usefulness, innovative character, professionalism of the materials, and the visual aspects of the platform, were rated similarly by both groups. The differences are presented in Figure 4.

Figure 4.

Differences in the evaluation of particular components of SELI in the two groups.
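With two groups, a one-way ANOVA compares the between-group and within-group mean squares, F = MS_between / MS_within, with df₁ = k − 1 = 1 and df₂ = N − 2. In this case it is equivalent to a pooled-variance t-test (F = t²). The Python sketch below computes the F statistic only. The ratings are hypothetical, and the function name `one_way_anova_f` is ours. A p-value additionally requires the F distribution, for example via `scipy.stats.f_oneway`, which returns both the statistic and the p-value.

```python
from statistics import mean


def one_way_anova_f(*groups: list[float]) -> tuple[float, int, int]:
    """Return (F, df_between, df_within) for a one-way ANOVA."""
    all_values = [x for g in groups for x in g]
    grand_mean = mean(all_values)
    group_means = [mean(g) for g in groups]
    k, n = len(groups), len(all_values)

    # Variation of the group means around the grand mean, weighted by group size.
    ss_between = sum(len(g) * (m - grand_mean) ** 2 for g, m in zip(groups, group_means))
    # Variation of individual scores around their own group mean.
    ss_within = sum((x - m) ** 2 for g, m in zip(groups, group_means) for x in g)

    df_between, df_within = k - 1, n - k
    f_stat = (ss_between / df_between) / (ss_within / df_within)
    return f_stat, df_between, df_within


# Hypothetical 1-5 ratings of general course quality.
students = [4, 4, 5, 3, 4, 4, 5, 3]
teachers = [5, 5, 4, 5, 5]
f_stat, df1, df2 = one_way_anova_f(students, teachers)
print(f"F({df1}, {df2}) = {f_stat:.3f}")
```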

3.3. Correlation between the Assessment of Individual Elements of the Platform

Spearman’s rank correlation coefficient was used because the data were not normally distributed. It showed moderate correlations between most of the platform elements evaluated. Thus, satisfaction with one area is associated with a positive evaluation of the other elements. However, correlation does not imply causation, so it cannot be determined which variable is dependent and which is independent. The correlations are also not particularly strong, which means that respondents did differentiate between the areas evaluated. Interestingly, age (in years) is weakly and negatively correlated with the evaluation of the visual appearance and the innovative character of the platform: older respondents (active teachers) were less positive about these two areas. The strength of the correlations is presented in detail in Table 4.

Table 4.

Correlation between the evaluation of the indicators.
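Spearman’s ρ is Pearson’s correlation computed on ranks. Likert answers contain many ties, so tied values receive the average of the ranks they occupy. A minimal, dependency-free Python sketch follows. The function names and the example data are hypothetical. In practice, `scipy.stats.spearmanr` computes the same coefficient together with a p-value.

```python
from math import sqrt


def average_ranks(values: list[float]) -> list[float]:
    """1-based ranks; tied values share the mean of the positions they occupy."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    start = 0
    while start < len(order):
        end = start
        # Extend the run while the next sorted value ties with the current one.
        while end + 1 < len(order) and values[order[end + 1]] == values[order[start]]:
            end += 1
        mean_rank = (start + end) / 2 + 1  # average of 1-based positions start..end
        for idx in order[start:end + 1]:
            ranks[idx] = mean_rank
        start = end + 1
    return ranks


def pearson(x: list[float], y: list[float]) -> float:
    """Pearson correlation; raises ZeroDivisionError if either input is constant."""
    mx, my = sum(x) / len(x), sum(y) / len(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / sqrt(sxx * syy)


def spearman_rho(x: list[float], y: list[float]) -> float:
    """Spearman's rank correlation: Pearson's r computed on average ranks."""
    return pearson(average_ranks(x), average_ranks(y))


# Hypothetical data: age in years and 1-5 rating of the platform's visual design.
age = [21, 22, 23, 25, 30, 38, 45, 52]
visual = [5, 4, 5, 4, 4, 3, 4, 3]
print(f"rho = {spearman_rho(age, visual):.3f}")
```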

3.4. Predictive Evaluation of the Platform

To extend the analysis to other key factors, multiple linear regression analyses were carried out with each of the following as the dependent variable: (1) general course quality, (2) professionally prepared materials, (3) content usefulness, (4) visual design, and (5) innovative character of the platform. Digital literacy and previous e-learning experiences were entered as predictors. For most dependent variables, these models explained only a small proportion of the variance in the evaluations; the exception is the innovative character of the platform, where R² = 58.6%. Only in two cases was a predictor significant: more frequent participation in free e-learning courses was associated with a more positive evaluation of the professionalism and of the usefulness of the platform’s content. Other factors had no significant impact on the dependent variables. The complete results of the multiple linear regression analysis are presented in Table 5.

Table 5.

Multiple linear regression analysis.
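For a model with an intercept, R² = 1 − SS_res / SS_tot is the proportion of variance in the dependent variable explained by the predictors. It is not a proportion of respondents. The Python sketch below fits ordinary least squares by solving the normal equations (XᵀX)β = Xᵀy and reports R². It is a minimal illustration with hypothetical data and function names, and it omits the standard errors and significance tests of a full regression table. Libraries such as statsmodels provide the full output: `statsmodels.api.OLS` with a constant column added via `statsmodels.api.add_constant`, whose fitted result exposes `rsquared`.

```python
def solve_linear_system(a: list[list[float]], b: list[float]) -> list[float]:
    """Solve a @ x = b by Gauss-Jordan elimination with partial pivoting."""
    n = len(a)
    m = [list(row) + [b[i]] for i, row in enumerate(a)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        if abs(m[pivot][col]) < 1e-12:
            raise ValueError("singular system (e.g. collinear predictors)")
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(n):
            if r != col:
                factor = m[r][col] / m[col][col]
                m[r] = [v - factor * w for v, w in zip(m[r], m[col])]
    return [m[i][n] / m[i][i] for i in range(n)]


def ols_fit(predictors: list[list[float]], y: list[float]) -> tuple[list[float], float]:
    """Ordinary least squares with an intercept; returns (coefficients, R^2)."""
    rows = [[1.0] + list(r) for r in predictors]  # prepend the intercept column
    p = len(rows[0])
    # Normal equations: (X^T X) beta = X^T y
    xtx = [[sum(r[i] * r[j] for r in rows) for j in range(p)] for i in range(p)]
    xty = [sum(r[i] * yi for r, yi in zip(rows, y)) for i in range(p)]
    beta = solve_linear_system(xtx, xty)

    fitted = [sum(b * v for b, v in zip(beta, r)) for r in rows]
    y_mean = sum(y) / len(y)
    ss_res = sum((yi - fi) ** 2 for yi, fi in zip(y, fitted))
    ss_tot = sum((yi - y_mean) ** 2 for yi in y)
    return beta, 1 - ss_res / ss_tot


# Hypothetical respondents: [digital literacy score, free-course participation 1-5]
X = [[3.2, 1], [4.0, 3], [2.8, 2], [4.4, 4], [3.6, 2], [3.0, 1]]
y = [4, 5, 4, 5, 4, 3]  # hypothetical ratings of content usefulness
coefs, r2 = ols_fit(X, y)
print("coefficients:", [round(c, 3) for c in coefs])
print(f"R^2 = {r2:.3f}")
```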

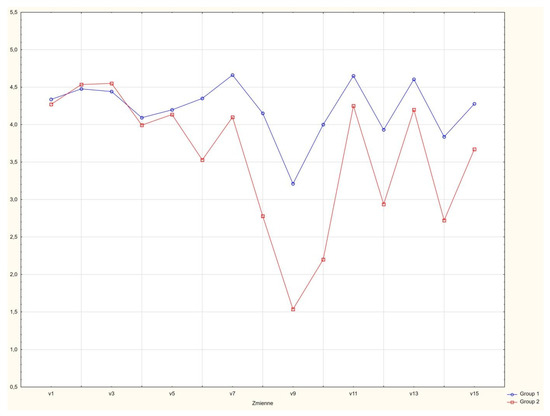

3.5. Platform Users—An Attempt to Group the Respondents

Cluster analysis identified two groups of respondents. The first group consisted of 86 persons (37.88%). It evaluated the new platform similarly to the second group; the groups differed mainly in the respondents’ e-learning experiences and in the first group’s slightly higher self-evaluation of digital literacy. This group shows considerable potential in terms of implementing teaching innovations and awareness of online threats. The second group (the red cluster) consisted of 140 individuals (62.12%) with less experience of e-learning, who also rated their digital literacy lower. This group, almost two thirds of the sample, consists of current and future teachers who require special training support during their pedagogical studies. The statistical differences between the groups are presented in Table 6 and Figure 5.

Table 6.

Differences between the groups.

Figure 5.

K-means cluster analysis.
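K-means (Lloyd’s algorithm) alternates between two steps until the assignments stop changing. In the assignment step, each respondent is assigned to the nearest centroid. In the update step, each centroid moves to the mean of its members. The paper does not list the clustering variables or their scaling. The two features in the sketch below (a mean e-learning experience score and a mean digital literacy score per respondent) and all values are therefore hypothetical. The result depends on the starting centroids, so library implementations such as `sklearn.cluster.KMeans` run several initializations and keep the best solution.

```python
from math import dist


def k_means(points, k, init=None, max_iter=100):
    """Lloyd's k-means on a list of equal-length numeric tuples.

    Returns (labels, centroids). `init` optionally supplies the starting
    centroids; otherwise the first k points are used.
    """
    centroids = [list(c) for c in (init if init is not None else points[:k])]
    labels = None
    for _ in range(max_iter):
        # Assignment step: nearest centroid by Euclidean distance.
        new_labels = [min(range(k), key=lambda c: dist(p, centroids[c])) for p in points]
        if new_labels == labels:
            break  # assignments unchanged, so the algorithm has converged
        labels = new_labels
        # Update step: move each centroid to the mean of its members.
        for c in range(k):
            members = [p for p, lab in zip(points, labels) if lab == c]
            if members:  # leave an empty cluster's centroid where it was
                centroids[c] = [sum(col) / len(members) for col in zip(*members)]
    return labels, centroids


# Hypothetical respondents: (mean e-learning experience, mean digital literacy), 1-5.
respondents = [(1.8, 2.9), (2.0, 3.1), (1.6, 2.7), (3.6, 3.9), (3.9, 4.2), (3.4, 3.8)]
labels, centroids = k_means(respondents, k=2, init=[respondents[0], respondents[3]])
print(labels)
print([[round(v, 2) for v in c] for c in centroids])
```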

4. Discussion

The popularity of e-learning platforms has been growing rapidly in recent years, and they are used in almost every sector of education. In the academic sector, this form of education has already been implemented on a mass scale. E-learning platforms have proven extremely useful during the COVID-19 pandemic [53]. For this and other reasons, using e-learning platforms in university and professional training has been the subject of many scientific investigations. The wide spectrum of e-learning applications has been studied, from the evaluation of the quality of e-learning systems and the self-evaluation of their users’ digital literacy, to the development of a successful e-learning model. There are numerous scientific publications addressing these issues, among them the research reports by Al-Fraihat et al. [54], Taufiq et al. [55], Khlifi [56], Yilmaz [57], Rodrigues et al. [58], the studies by Ventayen et al. [59], Fryer and Bovee [60], Yang et al. [61], and some older research into the use of the Moodle platform in higher education by Hölbl et al. [62].

One of the most popular e-learning platforms used by universities in recent years is Moodle. Hölbl et al. [62] studied experiences with Moodle as a learning management system. The authors presented the results of their research into students’ experiences with the Moodle platform, along with an evaluation of its functionality and data privacy issues. The respondents were satisfied with being able to access study and practice materials anywhere and at any time, and with being able to access all materials through one platform. They also pointed to positive aspects of Moodle-based e-learning, such as email notifications about activities and changes, and considered e-learning a very interesting form of learning. More than 90% declared that they had no problems completing evaluation surveys at the end of their e-learning course; the researchers suggested that this may have resulted from most students’ positive experience with the Moodle platform. Many students readily offered suggestions for changes to how the platform operated and for improving the study materials. In their concluding remarks, the authors emphasized that students are generally well prepared to participate in developing courses on the Moodle platform and are aware of the benefits that e-learning strategies bring to modern studying [62].

The substantial growth in research into the use of e-learning platforms has shaped how we understand e-learning success factors, such as the quality of the platform, the quality of the information published on it, the quality of the services provided, user satisfaction, and the usefulness of the course. Consequently, a main challenge researchers currently face is to develop a model of e-learning success. Al-Fraihat et al. took up this challenge: their study explores the factors that determine the success of e-learning and proposes a model that includes the determinants and aspects of e-learning success. They also examined users’ level of interest in e-learning platforms. A further goal of the research was to share experiences from measuring the success of e-learning in highly developed countries, using the example of Great Britain. The researchers identified seven types of quality factors that determined satisfaction with e-learning training: the quality of the technical system, the quality of the information carried by the platform, the quality of the services provided, the quality of the education system, the quality of support, the quality of the students, and the quality of the instructors. The research also showed relationships between the quality of the students, the quality of the instructor, the students’ educational background, and the quality and usefulness of the e-learning system [54].

The most recent studies have revealed that school and university students are now much more aware of the usefulness of e-learning platforms and of the benefits of this form of learning. Further promotion of e-learning platforms could take the form of workshops and training for school and university students. It is also important that universities provide students with clear and concise information about the functions and workings of the e-learning platforms available to them. Such activities would increase the perceived usefulness of the platforms and overall satisfaction with them, which in turn would bring further benefits from wider use of the e-learning system. Importantly, an e-learning platform should be not only intuitive and easy to navigate but also reliable, personalized, user-friendly, and visually attractive [54].

Studies also show that self-evaluation (e-evaluation) performed after completing an e-learning course may be an appropriate method of evaluation. Its advantages include a fast and clear assessment of users’ knowledge and learning opportunities, as well as of students’ abilities. Regular evaluation by platform users after they complete their e-learning courses increasingly improves their digital literacy and their ability to use e-learning for independent study. Another advantage of e-evaluation over traditional evaluation is that students receive instantaneous feedback. The flexibility, reliability, and greater objectivity of this form of assessment are also important. In addition, e-evaluations can be archived on a server [56].

Research indicates that willingness to learn through e-learning is an important factor in determining student satisfaction with this form of education. Readiness for, and a mature attitude towards, e-learning also motivate school and university students to engage in independent learning in this modern form. Yilmaz [57] showed that e-learning readiness has a significant impact on both student satisfaction and student motivation to use e-learning and to pursue individual development. The more motivated students are to engage in e-learning and to improve their ability to study independently, the more satisfied they will be with classes designed according to the flipped classroom (FC) teaching model. Drawing on other, similar research, the author concluded that successful e-learning also depends on growing motivation to participate in e-learning and to improve self-learning skills. Another important determinant of satisfaction and motivation is self-efficacy in using the Internet. The better a student’s self-learning skills, the greater their satisfaction and motivation to study using modern e-learning platforms. Students who struggle with independent learning during online courses may not know what to do at each stage of the learning process and may feel confused and helpless. To increase such students’ readiness for e-learning, they should be offered training and courses that expand their knowledge and improve their IT competencies. Without appropriate training, students who are forced to learn online before they are prepared for it are likely to adopt a negative attitude towards e-learning in general.
According to Yilmaz [57], readiness to engage in e-learning, self-efficacy in using the Internet, IT competencies, self-efficacy in online communication, the ability to learn independently, and the level of control exercised by teachers together determine student satisfaction with e-learning and students’ motivation to engage in this form of learning.

In many countries around the world, various e-learning platforms are used in higher education alongside traditional teaching, and during the COVID-19 pandemic many of them proved to be an excellent alternative to previously used methods. Studies conducted in Indonesia revealed that a range of technologies supporting distance learning were available in university education and that these may be successfully applied in university management in the future. The various e-learning systems need to be reinforced, but the priority is to strengthen the status of e-learning itself [55].

5. Summary

The evaluation presented herein focused on three areas: the quality of the courses, e-learning experiences during the last calendar year, and the self-evaluation of digital literacy. The respondents rated the SELI platform positively, which suggests that the team that developed the tool succeeded in its aims [63].

Cluster analysis identified two groups of respondents. One third had used e-learning before and rated their digital literacy more highly. The remaining two thirds reported lower digital literacy, which appears to go together with less frequent use of e-learning in the past.
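The section does not state which clustering algorithm produced the two groups. As a hedged illustration only, the sketch below applies k-means (Lloyd’s algorithm) with k = 2 to hypothetical respondent profiles, each assumed to consist of two scores: a mean e-learning experience score and a mean digital literacy self-rating. The function name `kmeans`, the data, and the starting centroids are assumptions for illustration, not the authors’ procedure.

```python
from math import dist


def kmeans(points, centroids, max_iter=100):
    """Lloyd's algorithm: alternate an assignment step and an update step.

    points    -- list of equal-length tuples (hypothetical respondent scores)
    centroids -- starting centroids; their number sets the number of clusters
    """
    centroids = [tuple(c) for c in centroids]
    labels = []
    for _ in range(max_iter):
        # Assignment step: each respondent joins the nearest centroid.
        labels = [min(range(len(centroids)), key=lambda k: dist(p, centroids[k]))
                  for p in points]
        # Update step: move each centroid to the mean of its members.
        updated = []
        for k, old in enumerate(centroids):
            members = [p for p, lab in zip(points, labels) if lab == k]
            if members:
                updated.append(tuple(sum(col) / len(members) for col in zip(*members)))
            else:
                updated.append(old)  # an empty cluster keeps its previous centroid
        if updated == centroids:  # converged: no centroid moved
            break
        centroids = updated
    return labels, centroids


# Hypothetical profiles: (e-learning experience, digital literacy) on a 1-5 scale.
scores = [(1.2, 2.0), (1.5, 2.2), (1.0, 1.8), (1.4, 2.5), (3.8, 4.1), (4.0, 4.5)]
labels, centres = kmeans(scores, centroids=[scores[0], scores[-1]])
share_high = labels.count(1) / len(labels)
print(labels, round(share_high, 3))
```

With these made-up scores, the procedure separates a smaller, higher-scoring group (two of six respondents) from the rest. k-means depends on its starting centroids, so in practice several starts, or a hierarchical method, would normally be compared.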

When respondent status (student or practicing teacher) was included in the analysis, teachers gave higher ratings on one of the factors. They were better able to judge whether the course content was useful in their work. They also preferred concise ways of communicating information and focused mainly on practical aspects.

Spearman’s rank correlation coefficients showed correlations between most of the platform elements evaluated. The quality of the content on the platform and the usefulness of the materials presented received the highest ratings. Most negative evaluations concerned the graphical layout of the content. This may have resulted from respondents’ limited experience of learning with this type of tool (their prior contact having been with Moodle only) and from poor knowledge of the methodology of developing e-learning courses.
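Spearman’s rho is the Pearson correlation computed on ranks, which suits ordinal ratings such as the platform evaluations. Because Likert-type answers contain many ties, tied values receive the average of the ranks they span. The sketch below is a plain-Python illustration with made-up ratings (the names `average_ranks` and `spearman_rho` are hypothetical); in practice, `scipy.stats.spearmanr` computes the same statistic with ties averaged in the same way.

```python
def average_ranks(values):
    """Rank values 1..n, giving tied values the mean of the positions they occupy."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j over a run of tied values.
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        mean_rank = (i + j) / 2 + 1  # sorted positions i..j hold ranks i+1..j+1
        for k in range(i, j + 1):
            ranks[order[k]] = mean_rank
        i = j + 1
    return ranks


def spearman_rho(x, y):
    """Spearman's rho as the Pearson correlation of the two rank vectors."""
    rx, ry = average_ranks(x), average_ranks(y)
    n = len(x)
    mx, my = sum(rx) / n, sum(ry) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(rx, ry))
    sx = sum((a - mx) ** 2 for a in rx) ** 0.5
    sy = sum((b - my) ** 2 for b in ry) ** 0.5
    return cov / (sx * sy)


# Hypothetical 1-5 ratings from six respondents for two platform elements.
quality = [5, 4, 4, 3, 5, 2]
usefulness = [5, 4, 3, 3, 4, 2]
rho = spearman_rho(quality, usefulness)
print(round(rho, 3))
```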

The authors are aware of the limitations of the results presented herein. Areas recommended for further study include evaluating the platform in a larger population of users, covering both students and academic teachers.

To summarize, first, this paper contributes to research on the conditions of academic e-learning. Second, the data collected and the statements addressed to universities point to a new area of online education research. Universities, in particular those training pre-service teachers, should decisively and boldly develop new educational solutions to improve forms of learning. However, these transformations should take into account the relevant micro-conditions, such as the level of digital literacy that future teachers acquired during previous stages of education.

Author Contributions

Conceptualization, Ł.T.; methodology, Ł.T.; validation, A.W. and J.W.-G.; formal analysis, Ł.T.; investigation, A.W., J.W.-G., and K.P.; resources, Ł.T.; data curation, Ł.T.; writing – original draft preparation, Ł.T., K.P., A.W., J.W.-G., and N.D.; writing – review and editing, Ł.T.; visualization, Ł.T.; supervision, K.P. and N.D.; project administration, Ł.T.; funding acquisition, Ł.T. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the National Centre for Research and Development (NCBiR), grant number ERANet17/ICT-0076 (Smart Ecosystem for Learning and Inclusion, SELI). The APC was funded by NCBiR (Poland).

Acknowledgments

The project was supported by the National Centre for Research and Development (NCBiR). We also thank the management of the Institute of Education Sciences, Pedagogical University of Krakow, for their organizational support in implementing the SELI project. We would like to express our special thanks to Christopher Walker, who carried out the linguistic correction of this study.

Conflicts of Interest

The authors declare no conflict of interest.

Appendix A. Confirmatory Factor Analysis

Appendix A.1. Model Fit

Chi-square test

| Model | χ² | df | p |
|---|---|---|---|
| Baseline model | 1274.046 | 105 | |
| Factor model | 268.003 | 87 | <0.001 |
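The degrees of freedom in this table can be checked by counting. With 15 indicators there are 15 × 16 / 2 = 120 distinct variances and covariances. The baseline (independence) model estimates only the 15 indicator variances, leaving 105 df; the three-factor model, with factor variances fixed to 1, estimates 15 loadings, 15 residual variances, and 3 factor covariances (33 parameters), leaving 87 df. The same two chi-square values also yield the comparative fit index (CFI) and the Tucker–Lewis index (TLI), which the appendix does not report. The sketch below computes them with the standard formulas purely as an illustration; the function names are hypothetical. The RMSEA cannot be derived here because the sample size is not stated in this section.

```python
def distinct_moments(p: int) -> int:
    """Distinct variances and covariances among p observed indicators."""
    return p * (p + 1) // 2


def cfi(chi2_m: float, df_m: int, chi2_b: float, df_b: int) -> float:
    """Comparative fit index: 1 - model noncentrality / baseline noncentrality."""
    d_m = max(chi2_m - df_m, 0.0)
    d_b = max(chi2_b - df_b, d_m)
    return 1.0 if d_b == 0 else 1.0 - d_m / d_b


def tli(chi2_m: float, df_m: int, chi2_b: float, df_b: int) -> float:
    """Tucker-Lewis index (non-normed fit index) from chi-square/df ratios."""
    ratio_b = chi2_b / df_b
    return (ratio_b - chi2_m / df_m) / (ratio_b - 1.0)


P = 15                 # indicators in the model
FREE = 15 + 15 + 3     # loadings + residual variances + factor covariances
df_baseline = distinct_moments(P) - P   # independence model: variances only
df_model = distinct_moments(P) - FREE

chi2_model, chi2_baseline = 268.003, 1274.046  # from the chi-square table
print(df_baseline, df_model)
print(round(cfi(chi2_model, df_model, chi2_baseline, df_baseline), 3),
      round(tli(chi2_model, df_model, chi2_baseline, df_baseline), 3))
```

The df check reproduces the 105 and 87 in the table, which supports the parameter count assumed above.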

R-squared

| Indicator | R² |
|---|---|
| General course quality | 0.734 |
| Professionally prepared materials | 0.540 |
| Content usefulness | 0.469 |
| Visual design | 0.648 |
| Innovative character of platform | 0.592 |
| I took part in online courses required in the official study curriculum or as part of my professional development. | 0.289 |
| I searched for relevant resources on the Internet to complete online classes. | 0.280 |
| I took part in free e-learning courses (online courses such as foreign languages, ICT). | 0.424 |
| I took part in paid online courses. | 0.264 |
| I took part in online joint study groups. | 0.244 |
| Using a text editor | 0.552 |
| Using a spreadsheet software | 0.424 |
| Using presentation software | 0.582 |
| Using graphic software | 0.329 |
| Awareness of digital threats | 0.270 |

Appendix A.2. Parameter Estimates

Factor loadings

| Factor | Indicator | Symbol | Estimate | Std. Error | z-Value | p | 95% CI Lower | 95% CI Upper |
|---|---|---|---|---|---|---|---|---|
| Factor 1 | General course quality | λ11 | 0.771 | 0.050 | 15.382 | <0.001 | 0.673 | 0.869 |
| | Professionally prepared materials | λ12 | 0.545 | 0.044 | 12.308 | <0.001 | 0.458 | 0.632 |
| | Content usefulness | λ13 | 0.483 | 0.043 | 11.201 | <0.001 | 0.399 | 0.568 |
| | Visual design | λ14 | 0.796 | 0.057 | 14.018 | <0.001 | 0.684 | 0.907 |
| | Innovative character of platform | λ15 | 0.752 | 0.057 | 13.128 | <0.001 | 0.640 | 0.865 |
| Factor 2 | I took part in online courses required in the official study curriculum or as part of my professional development. | λ21 | 0.628 | 0.088 | 7.128 | <0.001 | 0.456 | 0.801 |
| | I searched for relevant resources on the Internet to complete online classes. | λ22 | 0.454 | 0.065 | 7.000 | <0.001 | 0.327 | 0.581 |
| | I took part in free e-learning courses (online courses such as foreign languages, ICT). | λ23 | 0.846 | 0.097 | 8.689 | <0.001 | 0.655 | 1.036 |
| | I took part in paid online courses. | λ24 | 0.713 | 0.105 | 6.779 | <0.001 | 0.507 | 0.919 |
| | I took part in online joint study groups. | λ25 | 0.708 | 0.109 | 6.500 | <0.001 | 0.495 | 0.922 |
| Factor 3 | Using a text editor | λ31 | 0.500 | 0.043 | 11.674 | <0.001 | 0.416 | 0.584 |
| | Using a spreadsheet software | λ32 | 0.647 | 0.065 | 9.918 | <0.001 | 0.519 | 0.774 |
| | Using presentation software | λ33 | 0.560 | 0.046 | 12.058 | <0.001 | 0.469 | 0.651 |
| | Using graphic software | λ34 | 0.663 | 0.078 | 8.506 | <0.001 | 0.511 | 0.816 |
| | Awareness of digital threats | λ35 | 0.435 | 0.057 | 7.580 | <0.001 | 0.322 | 0.547 |

Factor variances

| Factor | Estimate | Std. Error | z-Value | 95% CI Lower | 95% CI Upper |
|---|---|---|---|---|---|
| Factor 1 | 1.000 | 0.000 | | 1.000 | 1.000 |
| Factor 2 | 1.000 | 0.000 | | 1.000 | 1.000 |
| Factor 3 | 1.000 | 0.000 | | 1.000 | 1.000 |

Factor covariances

| Factor pair | Estimate | Std. Error | z-Value | p | 95% CI Lower | 95% CI Upper |
|---|---|---|---|---|---|---|
| Factor 1 ↔ Factor 2 | 0.073 | 0.085 | 0.865 | 0.387 | −0.093 | 0.239 |
| Factor 1 ↔ Factor 3 | 0.037 | 0.078 | 0.466 | 0.641 | −0.117 | 0.190 |
| Factor 2 ↔ Factor 3 | 0.315 | 0.083 | 3.798 | <0.001 | 0.153 | 0.478 |
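Each row of these parameter tables follows the usual Wald logic: z is the estimate divided by its standard error, p is the two-sided normal tail probability, and the 95% interval is the estimate ± 1.96 × SE. Because the factor variances are fixed to 1, the factor covariances can also be read as factor correlations. The sketch below, which uses Python’s standard-library `statistics.NormalDist` and a hypothetical helper `wald_summary`, recomputes the covariance rows from the rounded estimates and standard errors. Small differences in the third decimal (for example, z = 0.859 rather than 0.865 for Factor 1 ↔ Factor 2) come from rounding the inputs.

```python
from statistics import NormalDist


def wald_summary(estimate: float, se: float, level: float = 0.95):
    """Wald z, two-sided p-value, and normal-theory confidence interval."""
    std_normal = NormalDist()  # mean 0, standard deviation 1
    z = estimate / se
    p = 2.0 * (1.0 - std_normal.cdf(abs(z)))
    crit = std_normal.inv_cdf(0.5 + level / 2.0)  # about 1.96 for a 95% interval
    return z, p, (estimate - crit * se, estimate + crit * se)


# Rounded estimates and standard errors from the factor covariance table.
rows = {
    "Factor 1 <-> Factor 2": (0.073, 0.085),
    "Factor 1 <-> Factor 3": (0.037, 0.078),
    "Factor 2 <-> Factor 3": (0.315, 0.083),
}
for pair, (est, se) in rows.items():
    z, p, (lo, hi) = wald_summary(est, se)
    print(f"{pair}: z = {z:.3f}, p = {p:.3f}, 95% CI [{lo:.3f}, {hi:.3f}]")
```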

Residual variances

| Indicator | Estimate | Std. Error | z-Value | p | 95% CI Lower | 95% CI Upper |
|---|---|---|---|---|---|---|
| General course quality | 0.216 | 0.031 | 6.867 | <0.001 | 0.154 | 0.277 |
| Professionally prepared materials | 0.254 | 0.028 | 9.048 | <0.001 | 0.199 | 0.309 |
| Content usefulness | 0.264 | 0.028 | 9.445 | <0.001 | 0.209 | 0.319 |
| Visual design | 0.344 | 0.042 | 8.105 | <0.001 | 0.261 | 0.427 |
| Innovative character of platform | 0.391 | 0.045 | 8.661 | <0.001 | 0.302 | 0.479 |
| I took part in online courses required in the official study curriculum or as part of my professional development. | 0.969 | 0.111 | 8.693 | <0.001 | 0.751 | 1.188 |
| I searched for relevant resources on the Internet to complete online classes. | 0.531 | 0.060 | 8.785 | <0.001 | 0.413 | 0.650 |
| I took part in free e-learning courses (online courses such as foreign languages, ICT). | 0.973 | 0.136 | 7.129 | <0.001 | 0.706 | 1.241 |
| I took part in paid online courses. | 1.422 | 0.159 | 8.934 | <0.001 | 1.110 | 1.734 |
| I took part in online joint study groups. | 1.556 | 0.171 | 9.107 | <0.001 | 1.221 | 1.891 |
| Using a text editor | 0.203 | 0.027 | 7.411 | <0.001 | 0.149 | 0.256 |
| Using a spreadsheet software | 0.567 | 0.065 | 8.742 | <0.001 | 0.440 | 0.695 |
| Using presentation software | 0.225 | 0.032 | 7.015 | <0.001 | 0.162 | 0.288 |
| Using graphic software | 0.897 | 0.096 | 9.388 | <0.001 | 0.710 | 1.084 |
| Awareness of digital threats | 0.510 | 0.053 | 9.698 | <0.001 | 0.407 | 0.613 |
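Because each indicator loads on a single factor whose variance is fixed to 1, the R² values in Appendix A.1 follow from the loadings and residual variances: R² = λ² / (λ² + θ), where θ is the indicator’s residual variance. The short check below (with a hypothetical helper `r_squared`) recomputes three rows from the rounded estimates; agreement is within about 0.001. The third row also shows how the tables line up: pairing λ24 = 0.713 with the residual variance of the paid-courses item (1.422) reproduces that item’s R² of 0.264.

```python
def r_squared(loading: float, residual_variance: float, factor_variance: float = 1.0) -> float:
    """Proportion of an indicator's variance explained by its single factor."""
    explained = loading ** 2 * factor_variance
    return explained / (explained + residual_variance)


# indicator: (loading, residual variance, reported R-squared), copied from the tables
rows = {
    "General course quality": (0.771, 0.216, 0.734),
    "Awareness of digital threats": (0.435, 0.510, 0.270),
    "I took part in paid online courses.": (0.713, 1.422, 0.264),
}
for name, (lam, theta, reported) in rows.items():
    print(f"{name}: computed {r_squared(lam, theta):.3f}, reported {reported:.3f}")
```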

References

- Kuzmanović, M.; Anđelković Labrović, J.; Nikodijević, A. Designing e-learning environment based on student preferences: Conjoint analysis approach. Int. J. Cogn. Res. Sci. Eng. Educ. 2019, 7, 37–47.

- Potyrała, K. iEdukacja: Synergia Nowych Mediów i Dydaktyki: Ewolucja, Antynomie, Konteksty; Wydawnictwo Naukowe Uniwersytetu Pedagogicznego: Kraków, Poland, 2017.

- Fazlollahtabar, H.; Muhammadzadeh, A. A knowledge-based user interface to optimize curriculum utility in an e-learning system. Int. J. Enterp. Inf. Syst. 2012, 8, 34–53.

- Galustyan, O.V.; Borovikova, Y.V.; Polivaeva, N.P.; Bakhtiyor, K.R.; Zhirkova, G.P. E-learning within the field of andragogy. Int. J. Emerg. Technol. Learn. 2019, 14, 148–156.

- Stošić, L.; Bogdanović, M. M-learning – A new form of learning and education. Int. J. Cogn. Res. Sci. Eng. Educ. 2019, 1, 114–118.

- Abakumova, I.; Bakaeva, I.; Grishina, A.; Dyakova, E. Active learning technologies in distance education of gifted students. Int. J. Cogn. Res. Sci. Eng. Educ. 2019, 7, 85–94.

- Tan, P.J.B. English e-learning in the virtual classroom and the factors that influence ESL (English as a Second Language): Taiwanese citizens’ acceptance and use of the Modular Object-Oriented Dynamic Learning Environment. Soc. Sci. Inf. 2015, 54, 211–228.

- Koehler, M.; Mishra, P. What is technological pedagogical content knowledge (TPACK)? Contemp. Issues Technol. Teach. Educ. 2009, 9, 60–70.

- Novković Cvetković, B.; Stošić, L.; Belousova, A. Media and Information Literacy-the Basis for Applying Digital Technologies in Teaching from the Discourse of Educational Needs of Teachers. Croat. J. Educ. 2018, 20, 1089–1114.

- Tan, P.J.B.; Hsu, M.H. Designing a System for English Evaluation and Teaching Devices: A PZB and TAM Model Analysis. EURASIA J. Math. Sci. Technol. Educ. 2018, 14, 2107–2119.

- Oyelere, S.S.; Suhonen, J.; Wajiga, G.M.; Sutinen, E. Design, development, and evaluation of a mobile learning application for computing education. Educ. Inf. Technol. 2018, 23, 467–495.

- Tan, P.J.B.; Hsu, M.H. Developing a system for English evaluation and teaching devices. In Proceedings of the 2017 International Conference on Applied System Innovation (ICASI), Sapporo, Japan, 13–17 May 2017; pp. 938–941.

- Sattari, A.; Abdekhoda, M.; Zarea Gavgani, V. Determinant Factors Affecting the Web-based Training Acceptance by Health Students, Applying UTAUT Model. Int. J. Emerg. Technol. Learn. 2017, 12, 112.

- Tan, P.J.B. Applying the UTAUT to Understand Factors Affecting the Use of English E-Learning Websites in Taiwan. SAGE Open 2013, 3.

- Lu, A.; Chen, Q.; Zhang, Y.; Chang, T. Investigating the Determinants of Mobile Learning Acceptance in Higher Education Based on UTAUT. In Proceedings of the 2016 International Computer Symposium, Chiayi, Taiwan, 15–17 December 2016.

- Demeshkant, N. Future Academic Teachers’ Digital Skills: Polish Case-Study. Univers. J. Educ. Res. 2020, 8, 3173–3178.

- Bomba, L.; Zacharová, J. Blended learning and lifelong learning of teachers in the post-communist society in Slovakia. Int. J. Contin. Eng. Educ. Life-Long Learn. 2014, 24, 329.

- Eger, L. E-learning a Jeho Aplikace. Bachelor’s Thesis, Zapadoceska Univerzita v Plzni, Plzen, Czech Republic, 2020.

- Pozo-Sánchez, S.; López-Belmonte, J.; Fernández, M.F.; López, J.A. Análisis correlacional de los factores incidentes en el nivel de competencia digital del profesorado. Rev. Electron. Interuniv. Form. Profr. 2020, 23, 143–151.

- Tomczyk, Ł. Skills in the area of digital safety as a key component of digital literacy among teachers. Educ. Inf. Technol. 2020, 25, 471–486.

- Pozo-Sánchez, S.; López-Belmonte, J.; Rodríguez-García, A.M.; López-Núñez, J.A. Teachers’ digital competence in using and analytically managing information in flipped learning. Cult. Educ. 2020, 32, 213–241.

- Ziemba, E. The Holistic and Systems Approach to the Sustainable Information Society. J. Comput. Inf. Syst. 2013, 54, 106–116.

- Walter, N. Zanurzeni w Mediach. Konteksty Edukacji Medialnej; Wydawnictwo Uniwersytetu im. Adama Mickiewicza w Poznaniu: Poznań, Poland, 2016.

- Ziemba, E. The Contribution of ICT Adoption to the Sustainable Information Society. J. Comput. Inf. Syst. 2017, 59, 116–126.

- Rabiman, R.; Nurtanto, M.; Kholifah, N. Design and Development E-Learning System by Learning Management System (LMS) in Vocational Education. Online Submiss. 2020, 9, 1059–1063.

- Ammenwerth, E.; Hackl, W.O.; Hoerbst, A.; Felderer, M. Indicators for Cooperative, Online-Based Learning and Their Role in Quality Management of Online Learning. In Student-Centered Virtual Learning Environments in Higher Education; IGI Global: Hershey, PA, USA, 2019; pp. 1–20.

- Beqiri, E. ICT and e-learning literacy as an important component for the new competency-based curriculum framework in Kosovo. J. Res. Educ. Sci. 2010, 1, 7–21.

- Malik, S.I.; Mathew, R.; Al-Nuaimi, R.; Al-Sideiri, A.; Coldwell-Neilson, J. Learning problem solving skills: Comparison of E-Learning and M-Learning in an introductory programming course. Educ. Inf. Technol. 2019, 24, 2779–2796.

- Tularam, G.A. Traditional vs. Non-traditional Teaching and Learning Strategies–the case of E-learning. Int. J. Math. Teach. Learn. 2018, 19, 129–158.

- Fedeli, L. Embodiment e Mondi Virtuali: Implicazioni Didattiche; F. Angeli: Milano, Italy, 2013.

- Pham, L.; Williamson, S.; Berry, R. Student perceptions of e-learning service quality, e-satisfaction, and e-loyalty. Int. J. Enterp. Inf. Syst. 2018, 14, 19–40.

- Tawafak, R.M.; Romli, A.B.; bin Abdullah Arshah, R.; Malik, S.I. Framework design of university communication model (UCOM) to enhance continuous intentions in teaching and e-learning process. Educ. Inf. Technol. 2020, 25, 817–843.

- Al-Qirim, N.; Tarhini, A.; Rouibah, K.; Mohamd, S.; Yammahi, A.R.; Yammahi, M.A. Learning orientations of IT higher education students in UAE University. Educ. Inf. Technol. 2018, 23, 129–142.

- Ze’ang, Z.; Jiawei, W. A study on cloud-computing-based education platform. Distance Educ. China 2010, 6, 66–68.

- Lai, C.; Shum, M.; Tian, Y. Enhancing learners’ self-directed use of technology for language learning: The effectiveness of an online training platform. Comput. Assist. Lang. Learn. 2016, 29, 40–60.

- Liu, F.; Wang, Y. Teachers’ training based on moodle platform. Open Educ. Res. 2008, 5, 91–94.

- Yuan, J.G.; Li, Z.G.; Zhang, W.T. Blended Training System Design based on Moodle Platform. Appl. Mech. Mater. 2014, 644, 5745–5748.

- Vlasenko, K.; Chumak, O.; Sitak, I.; Lovianova, I.; Kondratyeva, O. Training of mathematical disciplines teachers for higher educational institutions as a contemporary problem. Univers. J. Educ. Res. 2019, 7, 1892–1900.

- Chen, C.W.J.; Lo, K.M.J. From Teacher-Designer to Student-Researcher: A Study of Attitude Change Regarding Creativity in STEAM Education by Using Makey Makey as a Platform for Human-Centred Design Instrument. J. STEM Educ. Res. 2019, 2, 75–91.

- Tomczyk, Ł.; Oyelere, S.S. ICT for Learning and Inclusion in Latin America and Europe; Pedagogical University of Cracow: Cracow, Poland, 2019.

- Oyelere, S.S.; Tomczyk, Ł.; Bouali, N.; Agbo, F.J. Blockchain technology and gamification-conditions and opportunities for education. In Adult Education 2018-Transformation in the Era of Digitization and Artificial Intelligence; Česká andragogická společnost/Czech Andragogy Society: Praha, Czech Republic, 2019; pp. 85–96.

- Tomczyk, Ł.; Muñoz, D.; Perier, J.; Arteaga, M.; Barros, G.; Porta, M.; Puglia, E. ICT and preservice teachers. Short case study about conditions of teacher preparation in: Dominican Republic, Ecuador, Uruguay and Poland. Knowl. Int. J. 2019, 32, 15–24.

- Tomczyk, Ł.; Eliseo, M.A.; Costas, V.; Sanchez, G.; Silveira, I.F.; Barros, M.J.; Amado-Salvatierra, H.; Oyelere, S.S. Digital Divide in Latin America and Europe: Main Characteristics in Selected Countries. In Proceedings of the 2019 14th Iberian Conference on Information Systems and Technologies (CISTI), Coimbra, Portugal, 19–22 June 2019.

- Oyelere, S.S.; Silveira, I.F.; Martins, V.F.; Eliseo, M.A.; Akyar, Ö.Y.; Costas Jauregui, V.; Caussin, B.; Motz, R.; Suhonen, J.; Tomczyk, Ł. Digital Storytelling and Blockchain as Pedagogy and Technology to Support the Development of an Inclusive Smart Learning Ecosystem. In Advances in Intelligent Systems and Computing; Springer: Cham, Switzerland, 2020; pp. 397–408.

- Martins, V.F.; Amato, C.; Tomczyk, Ł.; Oyelere, S.S.; Eliseo, M.A.; Silveira, I.F. Accessibility Recommendations for Open Educational Resources for People with Learning Disabilities. In Advances in Intelligent Systems and Computing; Springer: Cham, Switzerland, 2020; pp. 387–396.

- Martins, V.; Oyelere, S.; Tomczyk, Ł.; Barros, G.; Akyar, O.; Eliseo, M.; Amato, C.; Silveira, I. A Blockchain Microsites-Based Ecosystem for Learning and Inclusion. Braz. Symp. Comput. Educ. 2019, 30, 229–238.

- Oyelere, S.S.; Bin Qushem, U.; Costas Jauregui, V.; Akyar, Ö.Y.; Tomczyk, Ł.; Sanchez, G.; Munoz, D.; Motz, R. Blockchain Technology to Support Smart Learning and Inclusion: Pre-service Teachers and Software Developers Viewpoints. In Advances in Intelligent Systems and Computing; Springer: Cham, Switzerland, 2020; pp. 357–366.

- Tomczyk, Ł.; Oyelere, S.S.; Puentes, A.; Sanchez-Castillo, G.; Muñoz, D.; Simsek, B.; Akyar, O.Y.; Demirhan, G. Flipped learning, digital storytelling as the new solutions in adult education and school pedagogy. In Adult Education 2018-Transformation in the Era of Digitization and Artificial Intelligence; Česká andragogická společnost/Czech Andragogy Society: Praha, Czech Republic, 2019.

- Martins, V.F.; Tomczyk, Ł.; Amato, C.; Eliseo, M.A.; Oyelere, S.S.; Akyar, Ö.Y.; Motz, R.; Barros, G.; Sarmiento, S.M.A.; Silveira, I.F. A Smart Ecosystem for Learning and Inclusion: An Architectural Overview. In Proceedings of the International Conference on Computational Science and Its Applications, Cagliari, Italy, 1–4 July 2020; Springer: Cham, Switzerland, 2020; pp. 601–616.

- Wnęk-Gozdek, J.; Tomczyk, Ł.; Mróz, A. Cyberbullying prevention in the opinion of teachers. Media Educ. 2019, 59, 594–607.

- Tomczyk, Ł.; Włoch, A. Cyberbullying in the light of challenges of school-based prevention. Int. J. Cogn. Res. Sci. Eng. Educ. 2019, 7, 13–26.

- Tomczyk, Ł.; Mróz, A.; Potyrała, K.; Wnęk-Gozdek, J. Digital inclusion from the perspective of teachers of older adults-expectations, experiences, challenges and supporting measures. Gerontol. Geriatr. Educ. 2020.

- Pyżalski, J. Edukacja w Czasach Pandemii Wirusa COVID-19. Z Dystansem o Tym, co robimy Obecnie Jako Nauczyciele [Education during the COVID-19 Pandemic. With the Distance about What We Are Currently Doing as Teachers]; EduAkcja: Warszawa, Poland, 2020.

- Al-Fraihat, D.; Joy, M.; Masa’deh, R.; Sinclair, J. Evaluating E-learning systems success: An empirical study. Comput. Hum. Behav. 2020, 102, 67–86.

- Taufiq, R.; Baharun, M.; Sunaryo, B.; Pudjoatmodjo, B.; Utomo, W.M. Indonesia: Covid-19 and E-learning in Student Attendance Method. SciTech Framew. 2020, 2, 12–22.

- Khlifi, J. An Advanced Authentication Scheme for E-evaluation Using Students Behaviors Over E-learning Platform. Int. J. Emerg. Technol. Learn. 2020, 15, 90–111.

- Yilmaz, R. Exploring the role of e-learning readiness on student satisfaction and motivation in flipped classroom. Comput. Hum. Behav. 2017, 70, 251–260.

- Rodrigues, M.W.; Isotani, S.; Zárate, L.E. Educational Data Mining: A review of evaluation process in the e-learning. Telemat. Inform. 2018, 35, 1701–1717.

- Ventayen, R.J.M.; Estira, K.L.A.; De Guzman, M.J.; Cabaluna, C.M.; Espinosa, N.N. Usability Evaluation of Google Classroom: Basis for the Adaptation of GSuite E-Learning Platform. Asia Pac. J. Educ. Arts Sci. 2018, 5, 47–51.

- Fryer, L.; Bovee, H.N. Supporting students’ motivation for e-learning: Teachers matter on and offline. Internet High. Educ. 2016, 30, 21–29.

- Yang, J.C.; Quadir, B.; Chen, N.S.; Miao, Q. Effects of online presence on learning performance in a blog-based online course. Internet High. Educ. 2016, 30, 11–20.

- Hölbl, M.; Welzer, T.; Nemec, L.; Sevčnikar, A. Student feedback experience and opinion using Moodle. In Proceedings of the 2011 22nd EAEEIE Annual Conference (EAEEIE), Maribor, Slovenia, 13–15 June 2011; pp. 1–4.

- Tomczyk, Ł.; Oyelere, S.; Amato, C.; Farinazzo Martins, V.; Motz, R.; Barros, G.; Yaşar Akyar, O.; Muñoz, D. Smart Ecosystem for Learning and Inclusion – Assumptions, actions and challenges in the implementation of an international educational project. In Adult Education 2019 in the Context of Professional Development and Social Capital, Proceedings of the 9th International Adult Education Conference, Prague, Czech Republic, 11–12 December 2019; Charles University: Prague, Czech Republic, 2020; pp. 365–379.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).