Dashboard for Evaluating the Quality of Open Learning Courses

Abstract

1. Introduction

2. Background

2.1. Open Learning, e-Learning, b-Learning and Other Related Concepts

2.2. Quality in Open Learning Systems

- Quality of the technology, from the technical point of view: availability, accessibility, security, etc.

- Quality of the learning resources included in the platform: content and learning activities.

- Quality of the instructional design of the learning experience: design of learning objectives, activities, timing, evaluation, etc.

- Quality of the teacher and student training in the e-Learning system.

- Quality of the services and support, help and technical and academic support offered to the users of the system.

2.3. Dashboards

- Monitor critical business processes and activities using metrics of business performance that trigger alerts when potential problems arise.

- Analyze the root cause of problems by exploring relevant and timely information from multiple perspectives and at various levels of detail.

- Manage people and processes to improve decisions, optimize performance, and steer the organization in the right direction.

- A dashboard is a visual presentation, a combination of text and graphics (diagrams, grids, indicators, maps...), but with an emphasis on graphics. An efficient and attractive graphical presentation can communicate more efficiently and meaningfully than just text.

- A dashboard shows the information needed to achieve specific objectives, so its design requires complex, unstructured and tacit information from various sources. Information is often a set of Key Performance Indicators (KPI), but other information may also be needed.

- A dashboard should fit on a single computer screen, so that everything can be seen at a glance. Scrolling or multiple screens should not be allowed.

- A dashboard presents up-to-date information, so some indicators may require real-time updating, but others may need to be updated with other frequencies.

- In a dashboard, data is abbreviated in the form of summaries or exceptions.

- A dashboard has simple, concise, clear, and intuitive visualization mechanisms with a minimum of unnecessary distractions.

- A dashboard must be customized, so that its information is adapted to different needs.

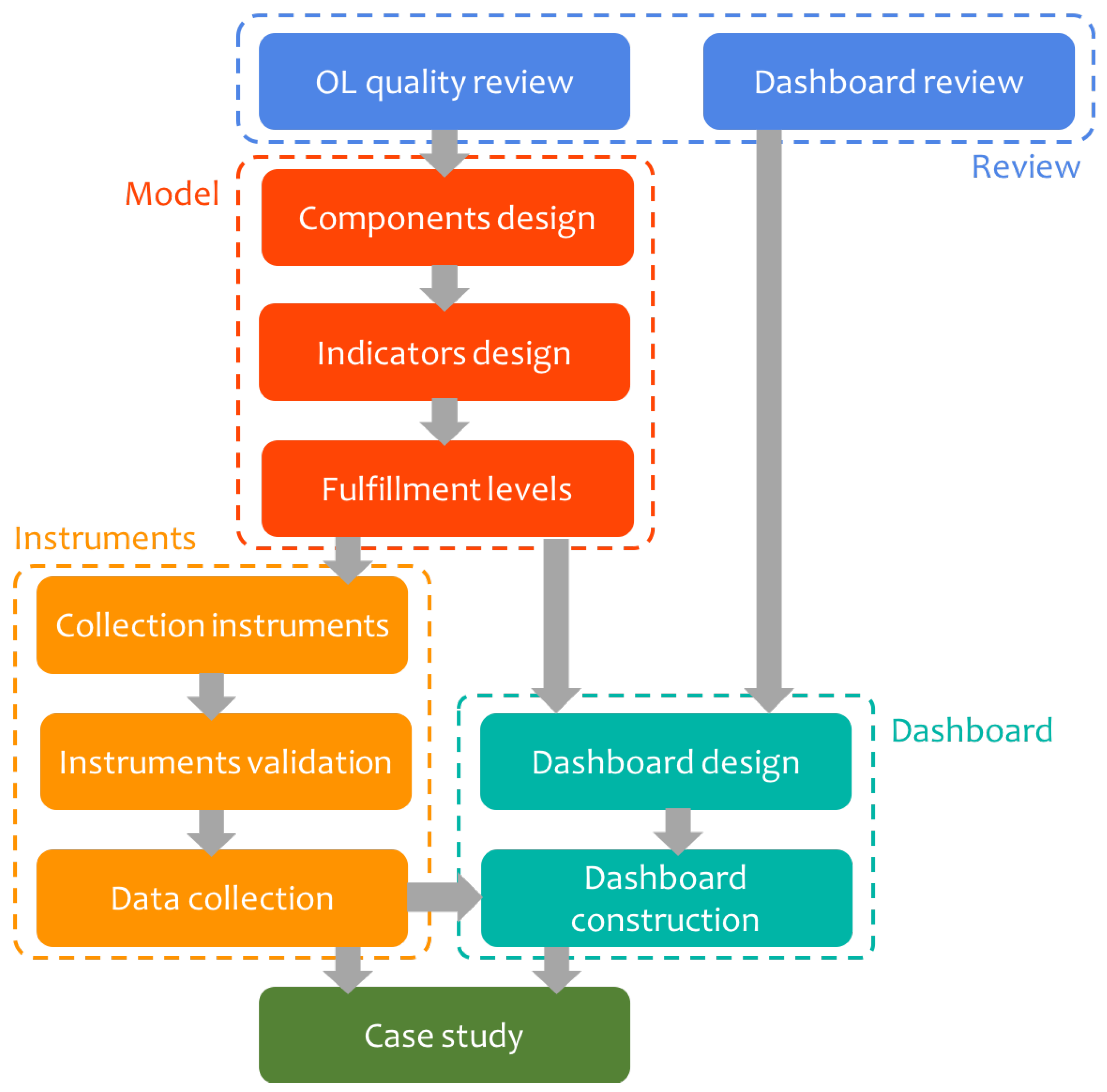

3. Methodology

- (1) Review: in this stage we have made a deep and systematic study of the literature, which has been presented in the previous section. This stage consists of two steps:

  - (1) Literature review on the quality of Open Learning courses (Section 2.2).

  - (2) Literature review on dashboard design (Section 2.3).

- (2) Model: The formulation of the model is key in our proposal. This formulation is presented in Section 4 and it is based on the literature review of the quality of the courses of the previous stage. The design of the model has been structured in three steps:

  - (3) Components design: The first step has been the structuring of the model in components, elements and attributes, inspired by the models found in the literature (Section 4.1).

  - (4) Indicators design: The design of the indicators is presented in Section 4.2, which establishes the specific indicators that we have considered in our proposal.

  - (5) Fulfillment levels: The last step of this stage is to establish the fulfillment levels we propose for the aggregation and comparison of the indicators (Section 4.3).

- (3) Instruments: Data collection is the objective of this stage (Section 5). It involves three steps:

  - (6) Collection instruments: in this step the data collection instruments have been designed, based on the indicator design of the previous stage (Section 5.1).

  - (7) Instruments validation: to ensure that the data collection instruments are useful and valid, a validation by experts has been carried out and is reported in Section 5.2.

  - (8) Data collection: In this step the data of the specific institution are collected thanks to the application of the data collection instruments (Section 5.3).

- (4) Dashboard: the design and construction of the dashboard takes up the fourth stage (Section 6), divided into two steps:

  - (9) Dashboard design: the dashboard is designed on the basis of the desirable characteristics established in the dashboard literature review, and on the basis of the model design, particularly the fulfillment levels (Section 6.1).

  - (10) Dashboard construction: finally, thanks to the data collected, it is possible to build the dashboard designed in the previous step (Section 6.2).

- (5) Case study: the last step is the analysis and interpretation of the results of the case study (Section 7).

4. Model

- It must be supported by previous studies, which is why it is based on a systematic review of the literature.

- It must be integral, trying to include all aspects.

- It must be open, which is why we use an iterative methodology that allows us to include new aspects in the future.

- It must be adaptable, being able to be applied in any e-Learning course with few adaptations.

- It must have a solid theoretical base, such as instructional design theories and process management.

- The literature describes five areas on which to study quality and which should appear in the model: learning resources, instructional design, user training and education, service and technology support, and learning management system (LMS).

- The ADDIE instructional design model [36] (Analysis, Design, Development, Implementation and Evaluation), applied to the use of technology, should be taken into account.

4.1. Learning as a PROCESS: Components and Elements

- Components

- Elements

- Attributes

- Indicators

4.2. Attributes and Indicators

- Name, clearly identifying the meaning of the indicator.

- Type, indicating whether its value is quantitative or qualitative.

- Definition, explaining completely the nature and aim of the indicator.

- Evidence, indicating how to obtain its value (instrument).

- Standard, which represents the desirable quality for the indicator and allows it to be compared with a standard measurement.
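As an illustration of the five descriptive fields listed above, an indicator could be held in a small record type. The following is only a minimal sketch: the class name `Indicator`, the field names and the example values for the evidence and standard fields are assumptions made for this illustration and are not taken from the instruments of the study.

```python
from dataclasses import dataclass
from typing import Literal


@dataclass(frozen=True)
class Indicator:
    """Hypothetical record holding the five fields that describe an indicator."""
    name: str                                      # identifies the meaning of the indicator
    type: Literal["quantitative", "qualitative"]   # nature of its value
    definition: str                                # nature and aim of the indicator
    evidence: str                                  # instrument used to obtain its value
    standard: str                                  # desirable quality used for comparison


# Illustrative instance; the evidence and standard texts are assumed wording.
doctorate = Indicator(
    name="Level of studies",
    type="quantitative",
    definition="% teachers who are PhD.",
    evidence="Teacher survey (assumed instrument)",
    standard="At least 70% of teaching staff (assumed wording)",
)

print(doctorate.name, "-", doctorate.type)
```

Making the record immutable (`frozen=True`) is a design choice of this sketch, so that an indicator definition cannot be changed by accident once it has been registered.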

4.3. Fulfillment Levels

- If there are associated regulations, the regulations are used to establish the levels. For example, Ecuadorian regulations establish that universities must aspire to at least 70% of their teaching staff holding a doctorate, so the indicator “% of teaching staff with a doctorate” is assigned level 5 if it is greater than 70%, and the remaining levels are established by dividing the range from 0% to 70% into 4 intervals.

- If there is no regulation that allows the establishment of reference points, the levels are defined by dividing the whole range into 5 parts, when they are quantitative, or they correspond to the 5 levels of a Likert scale, when they are qualitative. For example, for the indicator “% of teachers using the virtual classroom as a means of communication with students”, the 5 fulfillment levels are established homogeneously, in steps of 20%. Another example is that of the qualitative indicator “level of satisfaction of students with the learning experience”, for which a Likert scale with 5 values is used, equivalent to the fulfillment levels.

- The model is hierarchical, so that attributes are evaluated according to their indicators, elements according to attributes and components according to elements. The fulfillment level of a hierarchical layer is the average of the lower layers. However, it is possible to establish a weighted average, so that the weight of each part is different. The determination of the weights is very dependent on each particular case and it could be a powerful strategy tool for the institution. In the standard model, though, a uniform weighting has been chosen.

- The simplification of the scale to 5 values is easier to interpret and allows us to establish a color code that will facilitate the graphic representation we are looking for, as we will see in Section 6.
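The rules above can be sketched in a few lines of code. This is a minimal illustration under stated assumptions, not the implementation used in the study: the function names are hypothetical, the four intervals below a regulatory threshold are assumed to be of equal width, and values lying exactly on an interval boundary are assumed to fall into the higher interval (except the threshold itself, which stays at level 4 because level 5 requires a value greater than the threshold).

```python
def level_with_threshold(value, threshold, n_levels=5):
    """Level when a regulation sets a reference point (e.g. 70%).

    Values above the threshold get the top level; the range [0, threshold]
    is split into n_levels - 1 intervals (assumed here to be equal).
    """
    if value > threshold:
        return n_levels
    width = threshold / (n_levels - 1)
    # The min() keeps value == threshold in the last interval below the top level.
    return min(n_levels - 1, int(value // width) + 1)


def level_uniform(value, lo=0.0, hi=100.0, n_levels=5):
    """Level when no regulation exists: the whole range is split evenly."""
    width = (hi - lo) / n_levels
    return min(n_levels, int((value - lo) // width) + 1)


def aggregate(levels, weights=None):
    """Weighted average of lower-layer levels; uniform weights by default."""
    if weights is None:
        weights = [1.0] * len(levels)
    return sum(l * w for l, w in zip(levels, weights)) / sum(weights)


print(level_with_threshold(75, 70))  # above the regulatory threshold
print(level_uniform(45))             # third 20% step
print(aggregate([5, 3, 4]))          # uniform weighting
print(aggregate([5, 3], [3, 1]))     # first part weighted three times as much
```

A qualitative indicator measured on a 5-value Likert scale needs no conversion in this sketch, since its value is already equivalent to a fulfillment level.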

5. Research Instruments

5.1. Collection Instruments

- Socio-demographic data (7 questions)

- Digital skills (2 questions)

- Use of the learning platform (1 question)

- Use of the virtual classroom (9 questions)

- Use of resources, learning activities, evaluation and collaborative work (6 questions)

- Learning experience (7 questions)

- Monitoring, feedback and training (7 questions)

- Quality of the virtual classroom (11 questions)

- Instructional design (1 question)

- Teacher training and updating (3 questions)

- Teaching and learning process supported by the virtual classroom (2 questions)

- Technological services provided by the institution for the operation of the virtual classroom (5 questions)

- Socio-demographic data (2 questions)

- Use of the LMS (1 question)

- Quality of instructional design (20 questions)

- Use of virtual classrooms (1 question)

- Digital skills (4 questions)

- Teacher training and updating (3 questions)

- Technological services provided by the institution for the operation of the virtual classroom (1 question)

- Recommendations (1 question)

- Training of LMS managers, collecting data on training hours.

- Characteristics of the technological infrastructure, in order to know, among other issues, the availability of the platform, the bandwidth, the security policies, the accessibility of the platform, the software update policies or the contingency plans.

- Training of teachers and students, to know the percentage of teachers and students trained in the use of learning support technologies and training programs.

- User support, collecting data on resolved incidents and response time.

- Use of the virtual classroom, to know the teachers and students who really use the LMS.

5.2. Instruments Validation and Redesign

- Relevance: The correspondence between the objectives and the items in the instrument.

- Technical quality and representativeness: The adequacy of the questions to the cultural, social and educational level of the population to which the instrument is directed.

- Language quality and writing: Use of appropriate language, writing and spelling, and use of terms known to the respondent.

5.3. Data Collection

- The population, i.e., the recipients of the survey. In this case the population is made up of the students and teachers of the university analysed, for each of the surveys carried out.

- The chosen sample, that is, who from the entire population will answer the questionnaires. In our case it is a voluntary survey, so it is not possible to define a sample size a priori. This introduces the problem of the possible non-representativeness of the sample, either because of an insufficient size or because it is not a random sample.

- The way to send them the questionnaire: on paper, by e-mail, by means of an online form...

6. Dashboard

6.1. Dashboard Design

- It is an efficient and attractive visual presentation, combining text and graphics.

- It shows information needed to achieve a specific objective (evaluate the quality of an Open Learning course), and has complex, unstructured and tacit information from various sources (data collection tools). It shows a set of KPIs (the 9 elements), but also other additional information (the attributes and indicators).

- It fits on a single computer screen.

- It allows for updated information if required.

- The information can be considered as an aggregated summary of the whole Open Learning quality assessment.

- A heat map is a simple, concise, clear and intuitive display mechanism.

- It could be customized, showing more or fewer rings depending on the needs.

6.2. Dashboard Construction

- Collection of indicator data from surveys and interviews.

- Calculation of the fulfillment levels, based on the value of the indicators and the established standards, as indicated in Section 4.3.

- Calculation of the fulfillment levels of the attributes, as an average of the fulfillment levels of the indicators of that attribute, with rounding to the nearest integer value.

- Calculation of the fulfillment levels of the elements (sub-elements), as an average of the fulfillment levels of the attributes of that element (sub-element), with rounding to the nearest integer value.

- Calculation of the fulfillment levels of the components, as an average of the fulfillment levels of the elements of that component, with rounding to the nearest integer value.

- Assignment of a color level according to the fulfillment level. To do this:

- The hue depends on the component to which each element, attribute or indicator belongs: red for the human component, green for the methodological and technological component, blue for the process component.

- The saturation depends on the fulfillment level. Five levels of saturation are established, distributed in intervals of 20%, between 0% (white, minimum saturation) and 100% (maximum saturation).
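The aggregation and color assignment steps above can be sketched as follows. This is a hedged illustration rather than the code used to build the dashboard: the function and dictionary names are hypothetical, ties in the rounding are assumed to round half up, the hues are assumed to be pure red, green and blue (0°, 120° and 240°), and level k is assumed to map to a saturation of k × 20%, so that level 5 is fully saturated.

```python
import colorsys
import math

# Assumed hue (in degrees) for each component of the model.
HUES = {"human": 0.0, "methodology_technology": 120.0, "process": 240.0}


def rounded_mean(levels):
    """Average of lower-layer levels, rounded to the nearest integer.

    Ties are rounded half up (an assumption of this sketch); Python's
    built-in round() would round halves to the nearest even integer.
    """
    mean = sum(levels) / len(levels)
    return math.floor(mean + 0.5)


def level_color(component, level):
    """Hex color whose hue encodes the component and saturation the level."""
    hue = HUES[component] / 360.0
    saturation = level * 0.20  # assumed mapping: level 1 -> 20%, level 5 -> 100%
    r, g, b = colorsys.hsv_to_rgb(hue, saturation, 1.0)
    return "#{:02x}{:02x}{:02x}".format(round(r * 255), round(g * 255), round(b * 255))


attribute_level = rounded_mean([3, 4, 4])  # indicator levels of one attribute
print(attribute_level, level_color("human", attribute_level))
```

Keeping the brightness fixed at its maximum means that low fulfillment levels fade towards white, which matches the description of white as the minimum saturation.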

7. Case Study

8. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

Appendix A. Attributes and Indicators

| Attribute | Indicator | Description |
|---|---|---|
| Digital skills | Use of computer tools | % students who regularly use computer tools in their learning activities. |
| LMS training | Training | % students who have been trained in the use of LMS. |

| Attribute | Indicator | Description |
|---|---|---|
| Level of studies | Level of studies | % teachers who are PhD. |
| Digital skills | Basic notions | Average degree of use of basic learning resources in teaching practice. |
| Digital skills | Knowledge deepening | Average degree of use of knowledge deepening resources in teaching practice. |
| Digital skills | Knowledge generation | Average degree of use of knowledge generation resources in teaching practice. |
| LMS training | Training | % teachers who have been trained in the use of LMS. |
| LMS training | Training expectation | % teachers who would like to be trained in the use of digital tools and resources. |
| Contribution to transparency | Syllabus publication | % teachers who publish at least the subject syllabus on the institutional website. |

| Attribute | Indicator | Description |
|---|---|---|
| Training in LMS management | Training in LMS management | Average number of training hours of the LMS manager in the last year. |

References

- Anon. Cambridge Business English Dictionary; Cambridge University Press: Cambridge, UK, 2020.

- Caliskan, H. Open Learning. In Encyclopedia of the Sciences of Learning; Seel, N.M., Ed.; Springer US: Boston, MA, USA, 2012; pp. 2516–2518.

- Bates, T. Technology, e-Learning and Distance Education, 2nd ed.; RoutledgeFalmer Studies in Distance Education; OCLC: Ocm56493660; Routledge: London, UK; New York, NY, USA, 2005.

- Few, S. Information Dashboard Design: The Effective Visual Communication of Data, 1st ed.; OCLC: Ocm63676286; O’Reilly: Beijing, China; Cambridge, MA, USA, 2006.

- Moore, J.L.; Dickson-Deane, C.; Galyen, K. e-Learning, online learning, and distance learning environments: Are they the same? Internet High. Educ. 2011, 14, 129–135.

- Koper, R. Open Source and Open Standards. In Handbook of Research on Educational Communications and Technology, 3rd ed.; Spector, J.M., Ed.; OCLC: Ocn122715455; Lawrence Erlbaum Associates: New York, NY, USA, 2008; Volume 31.

- Pina, A.B. Blended learning. Conceptos básicos. Pixel-Bit. Rev. De Medios Y Educ. 2004, 23, 7–20.

- Llorens-Largo, F.; Molina-Carmona, R.; Compañ-Rosique, P.; Satorre-Cuerda, R. Technological Ecosystem for Open Education. In Frontiers in Artificial Intelligence and Applications; IOS Press: Amsterdam, The Netherlands, 2014; pp. 706–715.

- Ehlers, U.D. Open Learning Cultures: A Guide to Quality, Evaluation, and Assessment for Future Learning; Springer: New York, NY, USA, 2013.

- Vagarinho, J.P.; Llamas-Nistal, M. Quality in e-learning processes: State of art. In Proceedings of the 2012 International Symposium on Computers in Education (SIIE), Andorra la Vella, Andorra, 29–31 October 2012; pp. 1–6.

- Martínez-Caro, E.; Cegarra-Navarro, J.G.; Cepeda-Carrión, G. An application of the performance-evaluation model for e-learning quality in higher education. Total Qual. Manag. Bus. Excell. 2015, 26, 632–647.

- Huang, Y.M.; Chiu, P.S. The effectiveness of a meaningful learning-based evaluation model for context-aware mobile learning: Context-aware mobile learning evaluation model. Br. J. Educ. Technol. 2015, 46, 437–447.

- Chiu, P.S.; Pu, Y.H.; Kao, C.C.; Wu, T.T.; Huang, Y.M. An authentic learning based evaluation method for mobile learning in Higher Education. Innov. Educ. Teach. Int. 2018, 55, 336–347.

- Camacho Condo, A. Modelo de Acreditación de Accesibilidad en la Educación Virtual; Deliverable E3.2.1, European Union—Project ESVI-AL; European Union: Brussels, Belgium, 2013.

- Mejía-Madrid, G. El Proceso de Enseñanza Aprendizaje Apoyado en las Tecnologías de la Información: Modelo Para Evaluar la Calidad de Los Cursos b-Learning en Las Universidades. Ph.D. Thesis, Universidad de Alicante, Alicante, Spain, 2019.

- Martín Núñez, J.L. Aportes Para la Evaluación y Mejora de la Calidad en la Enseñanza Universitaria Basada en e-Learning. Ph.D. Thesis, Universidad de Alcalá, Alcalá de Henares, Spain, 2016.

- Santoveña Casal, S.M. Criterios de calidad para la evaluacion de cursos virtuales. Rev. Científica Electrónica De Educ. Y Comun. En La Soc. Del Conoc. 2004, 2, 18–36.

- Frydenberg, J. Quality Standards in eLearning: A matrix of analysis. Int. Rev. Res. Open Distrib. Learn. 2002, 3.

- Mejía, J.F.; López, D. Modelo de Calidad de E-learning para Instituciones de Educación Superior en Colombia. Form. Univ. 2016, 9, 59–72.

- Stefanovic, M.; Tadic, D.; Arsovski, S.; Arsovski, Z.; Aleksic, A. A Fuzzy Multicriteria Method for E-learning Quality Evaluation. Int. J. Eng. Educ. 2010, 26, 1200–1209.

- Tahereh, M.; Maryam, T.M.; Mahdiyeh, M.; Mahmood, K. Multi dimensional framework for qualitative evaluation in e-learning. In Proceedings of the 4th International Conference on e-Learning and e-Teaching, Shiraz, Iran, 13–14 February 2013; pp. 69–75.

- Militaru, T.L.; Suciu, G.; Todoran, G. The evaluation of the e-learning applications’ quality. In Proceedings of the 54th International Symposium ELMAR-2012, Zadar, Croatia, 12–14 September 2012; pp. 165–169.

- Chatterjee, C. Measurement of e-learning quality. In Proceedings of the 2016 3rd International Conference on Advanced Computing and Communication Systems (ICACCS), Coimbatore, India, 22–23 January 2016; pp. 1–4.

- Grigoraş, G.; Dănciulescu, D.; Sitnikov, C. Assessment Criteria of E-learning Environments Quality. Procedia Econ. Financ. 2014, 16, 40–46.

- Nanduri, S.; Babu, N.S.C.; Jain, S.; Sharma, V.; Garg, V.; Rajshekar, A.; Rangi, V. Quality Analytics Framework for E-learning Application Environment. In Proceedings of the 2012 IEEE Fourth International Conference on Technology for Education, Hyderabad, India, 18–20 July 2012; pp. 204–207.

- Skalka, J.; Švec, P.; Drlík, M. E-learning and Quality: The Quality Evaluation Model for E learning Courses. In Proceedings of the International Scientific Conference on Distance Learning in Applied Informatics, DIVAI 2012, Sturovo, Slovakia, 2–4 May 2012.

- Zhang, W.; Cheng, Y.L. Quality assurance in e-learning: PDPP evaluation model and its application. Int. Rev. Res. Open Distrib. Learn. 2012, 13, 66–82.

- Arora, R.; Chhabra, I. Extracting components and factors for quality evaluation of e-learning applications. In Proceedings of the 2014 Recent Advances in Engineering and Computational Sciences (RAECS), Chandigarh, India, 6–8 March 2014; pp. 1–5.

- Casanova, D.; Moreira, A.; Costa, N. Technology Enhanced Learning in Higher Education: Results from the design of a quality evaluation framework. Procedia Soc. Behav. Sci. 2011, 29, 893–902.

- Lim, K.C. Quality and Effectiveness of eLearning Courses—Some Experiences from Singapore. Spec. Issue Int. J. Comput. Internet Manag. 2010, 18, 11.1–11.6.

- Friesenbichler, M. E-learning as an enabler for quality in higher education. In Proceedings of the 2011 14th International Conference on Interactive Collaborative Learning, Piestany, Slovakia, 21–23 September 2011; pp. 652–655.

- D’Mello, D.A.; Achar, R.; Shruthi, M. A Quality of Service (QoS) model and learner centric selection mechanism for e-learning Web resources and services. In Proceedings of the 2012 World Congress on Information and Communication Technologies, Trivandrum, India, 30 October–2 November 2012; pp. 179–184.

- Tinker, R. E-Learning Quality: The Concord Model for Learning from a Distance. NASSP Bull. 2001, 85, 36–46.

- Alkhalaf, S.; Nguyen, A.T.A.; Drew, S.; Jones, V. Measuring the Information Quality of e-Learning Systems in KSA: Attitudes and Perceptions of Learners. In Robot Intelligence Technology and Applications 2012; Kim, J.H., Matson, E.T., Myung, H., Xu, P., Eds.; Springer: Berlin/Heidelberg, Germany, 2013; Volume 208, pp. 787–791.

- Marković, S.; Jovanović, N. Learning style as a factor which affects the quality of e-learning. Artif. Intell. Rev. 2012, 38, 303–312.

- Aissaoui, K.; Azizi, M. Improvement of the quality of development process of E-learning and M-learning systems. Int. J. Appl. Eng. Res. 2016, 11, 2474–2477.

- Hoffmann, M.H.W.; Bonnaud, O. Quality management for e-learning: Why must it be different from industrial and commercial quality management? In Proceedings of the 2012 International Conference on Information Technology Based Higher Education and Training (ITHET), Istanbul, Turkey, 21–23 June 2012; pp. 1–7.

- Eckerson, W.W. Performance Dashboards: Measuring, Monitoring, and Managing Your Business, 2nd ed.; Wiley: New York, NY, USA, 2010.

- Chowdhary, P.; Palpanas, T.; Pinel, F.; Chen, S.K.; Wu, F.Y. Model-Driven Dashboards for Business Performance Reporting. In Proceedings of the 2006 10th IEEE International Enterprise Distributed Object Computing Conference (EDOC’06), Hong Kong, China, 16–20 October 2006; pp. 374–386.

- Kintz, M. A semantic dashboard description language for a process-oriented dashboard design methodology. In Proceedings of the 2nd International Workshop on Model-Based Interactive Ubiquitous Systems, Modiquitous 2012, Copenhagen, Denmark, 25 June 2012; pp. 31–36.

- Brath, R.; Peters, M. Dashboard Design: Why Design is Important. DM Rev. 2004, 85, 1011285-1.

- Keck, I.R.; Ross, R.J. Exploring customer specific KPI selection strategies for an adaptive time critical user interface. In Proceedings of the 19th International Conference on Intelligent User Interfaces, Haifa, Israel, 24–27 February 2014; pp. 341–346.

- Molina-Carmona, R.; Llorens-Largo, F.; Fernández-Martínez, A. Data-Driven Indicator Classification and Selection for Dynamic Dashboards: The Case of Spanish Universities. In Proceedings of the EUNIS (European University Information Systems), Paris, France, 5–8 June 2018; p. 10.

- Molina-Carmona, R.; Villagrá-Arnedo, C.; Compañ-Rosique, P.; Gallego-Duran, F.J.; Satorre-Cuerda, R.; Llorens-Largo, F. Technological Ecosystem Maps for IT Governance: Application to a Higher Education Institution. In Open Source Solutions for Knowledge Management and Technological Ecosystems; Garcia-Peñalvo, F.J., García-Holgado, A., Jennex, M., Eds.; Advances in Knowledge Acquisition, Transfer, and Management; IGI Global: Hershey, PA, USA, 2017; pp. 50–80.

- ISO. ISO 9001:2015(es)—Sistemas de Gestión de la Calidad. Norma, International Organization for Standardization; ISO: Geneva, Switzerland, 2015.

- Biggs, J. What do inventories of students’ learning processes really measure? A theoretical review and clarification. Br. J. Educ. Psychol. 1993, 63, 3–19.

- Kitchenham, B.A.; Pfleeger, S.L. Personal Opinion Surveys. In Guide to Advanced Empirical Software Engineering; Shull, F., Singer, J., Sjøberg, D.I.K., Eds.; OCLC: 1027138267; Springer: London, UK, 2010; Volume 3.

| Component | Element | Description |
|---|---|---|
| Human (Red) | Student | Learning recipient and input to the system. |
| Human (Red) | Teacher | Who guides and creates the learning atmosphere using different methods and techniques. |
| Human (Red) | LMS manager | Who provides the management and administration services of the learning platform. |
| Methodology and Technology (Green) | Instructional design | Academic activity devoted to designing and planning resources and learning activities. The instructional design corresponds to the ADDIE model. |
| Methodology and Technology (Green) | LMS | Software platform that manages learning, where resources and activities are located. |
| Methodology and Technology (Green) | Helpdesk | Institutional service offered to students and teachers for the use, management and training of the LMS. |
| Process (Blue) | Process | Interaction process of students, teachers and managers with each resource. It has 6 sub-processes, the result of combining each element of the human component with each element of the methodological and technological component. |
| Process (Blue) | Result | Output of the teaching learning process in which a student with knowledge i ends up with knowledge j, where j > i. For evaluation, Kirkpatrick’s 4 levels of assessment are taken: reaction, learning, knowledge transfer and impact. |
| Process (Blue) | Feedback | Improvement actions that imply a feedback to the system. |

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Mejía-Madrid, G.; Llorens-Largo, F.; Molina-Carmona, R. Dashboard for Evaluating the Quality of Open Learning Courses. Sustainability 2020, 12, 3941. https://doi.org/10.3390/su12093941
