User Participation Behavior in Crowdsourcing Platforms: Impact of Information Signaling Theory

Abstract

1. Introduction

2. Theoretical Background and Hypotheses

2.1. Theoretical Background

2.1.1. Crowdsourcing Platforms

2.1.2. Information Asymmetry and Trust

2.2. Hypotheses

2.3. Theoretical Framework

3. Material and Methods

3.1. Research Context and Data Collection

3.2. Variables and Overall Approach

3.2.1. Variables

3.2.2. Overall Approach

4. Empirical Models and Data Results

4.1. Adoption of Independent Variables and User Participation Behavior

4.2. Mediating Effect Analysis

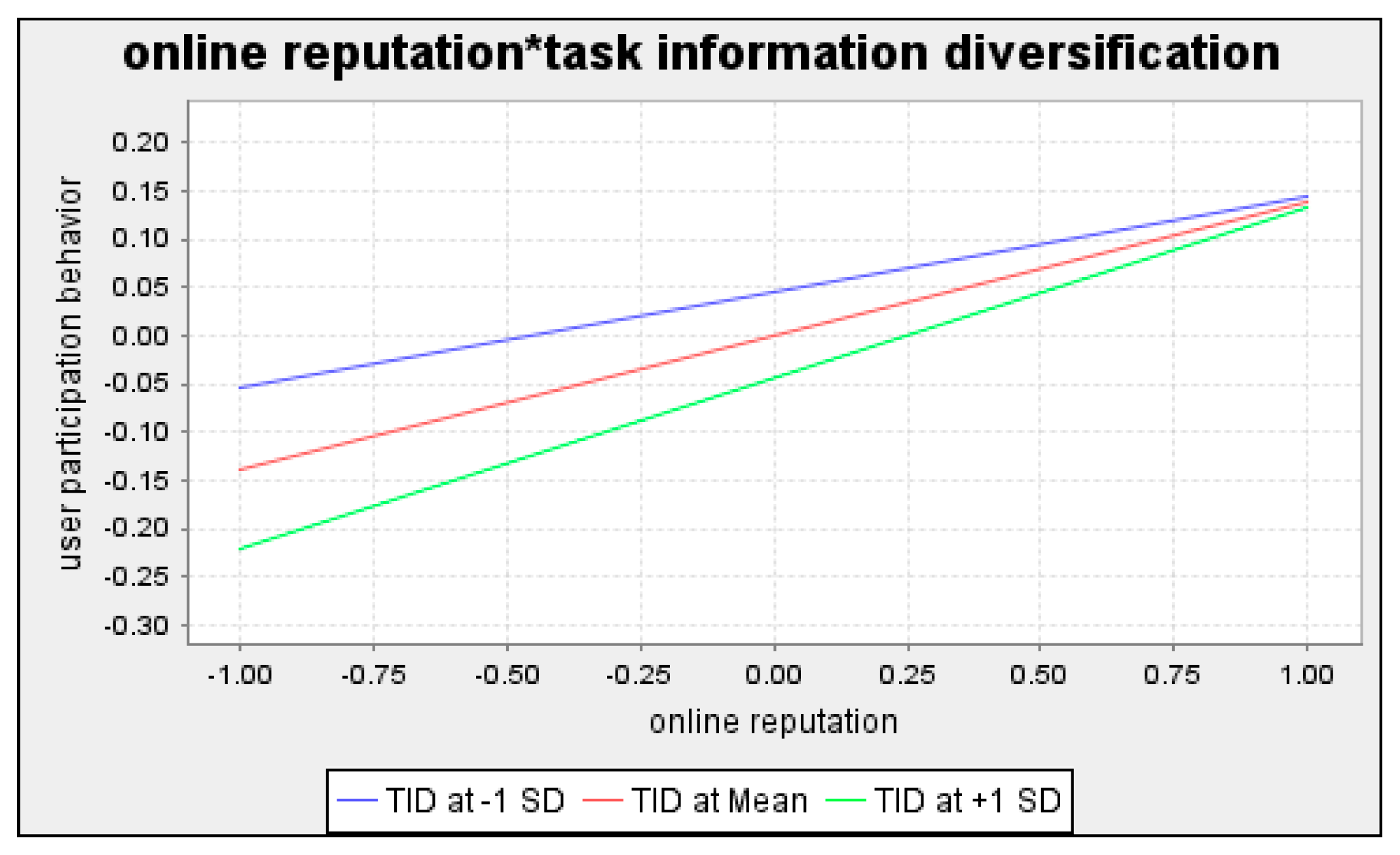

4.3. Moderating Effect Analysis

5. Discussion

5.1. Conclusions

5.2. Contributions

5.2.1. Theoretical Contributions

5.2.2. Practical Implications

5.3. Limitations and Future Research

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest


| Task ID | Task Model | Online Reputation | Salary Comparison | Interpersonal Trust | User Participation Behavior | Task Information Diversification | Task Information Overload | Number of Visitors | Duration of the Task |
|---|---|---|---|---|---|---|---|---|---|
| 1078 | Multiperson reward | 9 | 0.418 | 4 | 0.112 | 0 | 0 | 322 | 10 |
| 1833 | Piece-rate reward | 9 | 0.731 | 5 | 0.151 | 1 | 0 | 696 | 10 |
| 16170 | Single reward | 4 | 0.754 | 9 | 0.041 | 2 | 0 | 977 | 7 |
| 26128 | Employment task | 4 | 1.009 | 8 | 0.015 | 6 | 0 | 1104 | 15 |
| 94836 | Tendering task | 9 | 0.300 | 7 | 0.005 | 0 | 2557 | 6303 | 1 |

| Variable Type | Constructs | Variable Definitions | Measurement Methods |
|---|---|---|---|
| Independent variables | Online reputation (OR) | Credit | Credit rating of the seeker on the seeker’s homepage |
| | Salary comparison (SC) | Task similarity–salary difference | The ratio of the task price to the average price of similar tasks |
| Mediating variables | Interpersonal trust (IT) | Credit diversification | The number of solvers from different credit ratings of all solvers |
| Dependent variables | User participation behavior (UPB) | Bid ratio | The ratio of the number of solvers for a task to the number of visitors for a task |
| Moderating variables | Task information diversification (TID) | Number of attachment contents | The number of attachment contents, which represents other forms in addition to the text on the task release homepage |
| | Task information overload (TIO) | Bytes of attachment text | The bytes of attachment text on the task release homepage |
| Control variables | Number of visitors (NV) | Number of visitors | The number of visitors for a task |
| | Duration of the task (DT) | Duration of the task | The number of days from task release to acceptance of payment |
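
The two ratio-based measures defined in the table above (salary comparison and bid ratio) can be illustrated with a minimal sketch. The record fields (`category`, `price`, `solvers`, `visitors`), the use of a task category to decide which tasks count as "similar", and the inclusion of the task itself in the category average are all assumptions made for illustration; the source does not specify them.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class Task:
    """Hypothetical raw task record; every field name is an assumption."""
    task_id: int
    category: str   # assumed proxy for "similar tasks"
    price: float
    solvers: int
    visitors: int


def bid_ratio(task: Task) -> float:
    """UPB: number of solvers divided by number of visitors."""
    return task.solvers / task.visitors if task.visitors else 0.0


def salary_comparison(tasks: list[Task]) -> dict[int, float]:
    """SC: task price divided by the mean price of tasks in the same category.

    Assumption: the task itself is included in the category mean.
    """
    prices = defaultdict(list)
    for t in tasks:
        prices[t.category].append(t.price)
    means = {c: sum(p) / len(p) for c, p in prices.items()}
    return {t.task_id: t.price / means[t.category] for t in tasks}


# Made-up records for illustration only; not data from the study.
tasks = [
    Task(1, "logo design", 100.0, 12, 300),
    Task(2, "logo design", 300.0, 30, 400),
    Task(3, "translation", 50.0, 5, 100),
]
sc = salary_comparison(tasks)
upb = {t.task_id: bid_ratio(t) for t in tasks}
print(sc, upb)
```

An SC value above 1 means the task pays more than comparable tasks on average, and a value below 1 means it pays less.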

| Variable | Obs | Min | Median | Mean | Max | Std. Dev. |
|---|---|---|---|---|---|---|
| User participation behavior | 28,887 | 0.000 | 0.145 | 0.266 | 10.000 | 0.343 |
| Online reputation | 28,887 | 3.010 | 9.542 | 9.254 | 10.000 | 1.162 |
| Salary comparison | 28,887 | 0.000 | 1.085 | 1.085 | 9.189 | 0.682 |
| Interpersonal trust | 28,887 | 3.010 | 9.031 | 8.311 | 10.000 | 1.593 |
| Task information diversification | 28,887 | 0.000 | 0.000 | 0.715 | 10.000 | 1.347 |
| Task information overload | 28,887 | 0.000 | 0.000 | 0.366 | 10.000 | 1.174 |
| Number of visitors | 28,887 | 0.786 | 4.520 | 4.533 | 10.000 | 0.515 |
| Duration of the task | 28,887 | 0.000 | 4.177 | 4.068 | 10.000 | 1.520 |

| Variables | 1 | 2 | 3 | 4 | 5 | 6 | 7 |
|---|---|---|---|---|---|---|---|
| 1. UPB | | | | | | | |
| 2. OR | 0.031 *** | | | | | | |
| 3. SC | 0.13 *** | −0.22 *** | | | | | |
| 4. IT | 0.229 *** | −0.027 *** | 0.231 *** | | | | |
| 5. TID | −0.042 *** | 0.005 | 0.004 | −0.096 *** | | | |
| 6. TIO | −0.1 *** | −0.032 *** | −0.003 | −0.065 *** | 0.006 | | |
| 7. NV | −0.321 *** | 0.176 *** | 0.067 *** | 0.087 *** | −0.053 *** | 0.083 *** | |
| 8. DT | 0.129 *** | −0.054 *** | 0.214 *** | 0.385 *** | 0.036 *** | −0.031 *** | 0.083 *** |

| | OLS:1 | OLS:2 |
|---|---|---|
| Dependent variable | UPB | UPB |
| Constant | 0.901 *** (0.021) | 1.104 *** (0.017) |
| Control variables | | |
| NV | −0.235 *** (0.004) | −0.226 *** (0.004) |
| DT | 0.037 *** (0.001) | 0.029 *** (0.001) |
| Independent variables | | |
| OR | 0.03 *** (0.002) | |
| SC | | 0.063 *** (0.003) |
| Model summary | | |
| No. of Obs. | 28,887 | 28,887 |
| Adj-R² | 0.1369 | 0.1417 |
| R² | 0.137 | 0.1418 |
| F | 1528.69 *** | 1591.21 *** |
| Mean VIF | 1.03 | 1.04 |

| | OLS:1 | OLS:3 | OLS:4 | OLS:2 | OLS:5 | OLS:6 |
|---|---|---|---|---|---|---|
| Dependent variable | UPB | IT | UPB | UPB | IT | UPB |
| Constant | 0.901 *** (0.021) | 6.075 *** (0.097) | 0.593 *** (0.022) | 1.104 *** (0.017) | 5.76 *** (0.077) | 0.834 *** (0.018) |
| Control variables | | | | | | |
| NV | −0.235 *** (0.004) | 0.182 *** (0.017) | −0.244 *** (0.004) | −0.226 *** (0.004) | 0.149 *** (0.017) | −0.233 *** (0.004) |
| DT | 0.037 *** (0.001) | 0.398 *** (0.006) | 0.017 *** (0.001) | 0.029 *** (0.001) | 0.365 *** (0.006) | 0.012 *** (0.001) |
| Independent variables | | | | | | |
| OR | 0.03 *** (0.002) | −0.022 *** (0.008) | 0.031 *** (0.002) | | | |
| SC | | | | 0.063 *** (0.003) | 0.358 *** (0.013) | 0.046 *** (0.003) |
| Mediating variable | | | | | | |
| IT | | | 0.051 *** (0.001) | | | 0.047 *** (0.001) |
| Model summary | | | | | | |
| No. of Obs. | 28,887 | 28,887 | 28,887 | 28,887 | 28,887 | 28,887 |
| Adj-R² | 0.1369 | 0.1516 | 0.184 | 0.1417 | 0.1737 | 0.1809 |
| R² | 0.137 | 0.1517 | 0.1841 | 0.1418 | 0.1738 | 0.181 |
| F | 1528.69 *** | 1721.08 *** | 1629 *** | 1591.21 *** | 2025.33 *** | 1596.26 *** |
| Mean VIF | 1.03 | 1.03 | 1.11 | 1.04 | 1.04 | 1.13 |

| | Total Effect | Z Value | Significance (p < 0.05) | Direct Effect | Z Value | Significance (p < 0.05) | Indirect Effect | Z Value | Significance (p < 0.05) | Hypothesis Is Supported |
|---|---|---|---|---|---|---|---|---|---|---|
| Hypothesis 2 | 0.030 | 18.282 | YES | 0.031 | 19.504 | YES | −0.001 | −2.930 | YES | NO |
| Hypothesis 4 | 0.063 | 22.315 | YES | 0.046 | 16.496 | YES | 0.017 | 22.361 | YES | YES |
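
The effect decomposition in this table can be checked against the regression coefficients reported for OLS:3 to OLS:6. The sketch below multiplies the path from each independent variable to IT by the path from IT to UPB to get the indirect effect, then adds the direct effect to get the total. The Sobel-type z statistic is an assumption: the source does not state which test produced the reported Z values, and the inputs here are rounded table entries.

```python
import math

# Coefficients and standard errors copied from the regression tables.
# a: independent variable -> IT (OLS:3 for OR, OLS:5 for SC)
# b: IT -> UPB (OLS:4 for OR, OLS:6 for SC)
# direct: independent variable -> UPB with IT included (OLS:4 / OLS:6)
paths = {
    "OR": {"a": -0.022, "se_a": 0.008, "b": 0.051, "se_b": 0.001, "direct": 0.031},
    "SC": {"a": 0.358, "se_a": 0.013, "b": 0.047, "se_b": 0.001, "direct": 0.046},
}


def decompose(p: dict) -> dict:
    """Return indirect effect (a*b), total effect, and a Sobel-type z."""
    indirect = p["a"] * p["b"]
    total = p["direct"] + indirect
    # Sobel standard error of a*b (assumed test, not confirmed by the source).
    se = math.sqrt(p["b"] ** 2 * p["se_a"] ** 2 + p["a"] ** 2 * p["se_b"] ** 2)
    return {"indirect": indirect, "total": total, "sobel_z": indirect / se}


results = {name: decompose(p) for name, p in paths.items()}
for name, r in results.items():
    print(name, round(r["indirect"], 3), round(r["total"], 3), round(r["sobel_z"], 2))
```

Rounded to three decimals, the indirect and total effects agree with the table entries. The z statistics need not match the reported Z values exactly, since they are computed from rounded coefficients under an assumed test. For OR, the indirect effect is negative while the direct effect is positive, which is consistent with Hypothesis 2 being marked as not supported in this table.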

| | Direct Effect | 95% Confidence Interval of the Direct Effect | t Value | Significance (p < 0.05) | Indirect Effect | 95% Confidence Interval of the Indirect Effect | t Value | Significance (p < 0.05) | Hypothesis Is Supported |
|---|---|---|---|---|---|---|---|---|---|
| Hypothesis 2 | 0.143 | (0.131, 0.153) | 25.843 | YES | 0.005 | (0.003, 0.008) | 4.157 | YES | YES |
| Hypothesis 4 | 0.173 | (0.162, 0.185) | 29.718 | YES | 0.049 | (0.045, 0.053) | 23.436 | YES | YES |

| | OLS:1 | OLS:7 | OLS:8 | OLS:2 | OLS:9 | OLS:10 |
|---|---|---|---|---|---|---|
| Dependent variable | UPB | UPB | UPB | UPB | UPB | UPB |
| Constant | 0.901 *** (0.021) | 0.92 *** (0.021) | 0.948 *** (0.022) | 1.104 *** (0.017) | 1.125 *** (0.017) | 1.13 *** (0.017) |
| Control variables | | | | | | |
| NV | −0.235 *** (0.004) | −0.237 *** (0.004) | −0.236 *** (0.004) | −0.226 *** (0.004) | −0.229 *** (0.004) | −0.229 *** (0.004) |
| DT | 0.037 *** (0.001) | 0.038 *** (0.001) | 0.038 *** (0.001) | 0.029 *** (0.001) | 0.03 *** (0.001) | 0.03 *** (0.001) |
| Independent variables | | | | | | |
| OR | 0.03 *** (0.002) | 0.03 *** (0.002) | 0.027 *** (0.002) | | | |
| SC | | | | 0.063 *** (0.003) | 0.063 *** (0.003) | 0.059 *** (0.003) |
| Moderator variable | | | | | | |
| TID | | −0.017 *** (0.001) | −0.087 *** (0.013) | | −0.017 *** (0.001) | −0.022 *** (0.003) |
| Interactions | | | | | | |
| OR × TID | | | 0.008 *** (0.001) | | | |
| SC × TID | | | | | | 0.005 *** (0.002) |
| Model summary | | | | | | |
| No. of Obs. | 28,887 | 28,887 | 28,887 | 28,887 | 28,887 | 28,887 |
| Adj-R² | 0.1369 | 0.1414 | 0.1422 | 0.1417 | 0.1459 | 0.1461 |
| R² | 0.137 | 0.1415 | 0.1423 | 0.1418 | 0.146 | 0.1462 |
| F | 1528.69 *** | 1189.95 *** | 958.34 *** | 1591.21 *** | 1234.91 *** | 989.19 *** |
| Mean VIF | 1.03 | 1.03 | 38.11 | 1.04 | 1.03 | 2.26 |
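
To read the interaction terms in this table, it can help to compute the conditional effect of each independent variable at a few moderator levels. The sketch below uses the OLS:8 and OLS:10 coefficients; the helper name `conditional_effect` and the TID levels 0, 1, and 2 are illustrative assumptions, expressed on whatever scale the regression used.

```python
def conditional_effect(main: float, interaction: float, moderator: float) -> float:
    """Marginal effect of a predictor on UPB at a given moderator value.

    In a model y = ... + main * x + interaction * x * m, the effect of x
    on y at moderator level m is main + interaction * m.
    """
    return main + interaction * moderator


# Coefficients from OLS:8 (OR, OR x TID) and OLS:10 (SC, SC x TID).
OR_MAIN, OR_X_TID = 0.027, 0.008
SC_MAIN, SC_X_TID = 0.059, 0.005

# Illustrative moderator levels (assumed, not taken from the source).
for tid in (0, 1, 2):
    or_effect = conditional_effect(OR_MAIN, OR_X_TID, tid)
    sc_effect = conditional_effect(SC_MAIN, SC_X_TID, tid)
    print(f"TID={tid}: OR effect={or_effect:.3f}, SC effect={sc_effect:.3f}")
```

Because both interaction coefficients are positive, the estimated effects of OR and SC on UPB grow as TID increases. The high mean VIF for OLS:8 (38.11) is the kind of value that commonly arises when an uncentered product term is entered alongside its components.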

| | OLS:1 | OLS:11 | OLS:12 | OLS:2 | OLS:13 | OLS:14 |
|---|---|---|---|---|---|---|
| Dependent variable | UPB | UPB | UPB | UPB | UPB | UPB |
| Constant | 0.901 *** (0.021) | 0.901 *** (0.021) | 0.881 *** (0.022) | 1.104 *** (0.017) | 1.096 *** (0.017) | 1.091 *** (0.017) |
| Control variables | | | | | | |
| NV | −0.235 *** (0.004) | −0.231 *** (0.004) | −0.231 *** (0.004) | −0.226 *** (0.004) | −0.223 *** (0.004) | −0.223 *** (0.004) |
| DT | 0.037 *** (0.001) | 0.036 *** (0.001) | 0.036 *** (0.001) | 0.029 *** (0.001) | 0.029 *** (0.001) | 0.029 *** (0.001) |
| Independent variables | | | | | | |
| OR | 0.03 *** (0.002) | 0.029 *** (0.002) | 0.031 *** (0.002) | | | |
| SC | | | | 0.063 *** (0.003) | 0.063 *** (0.003) | 0.068 *** (0.003) |
| Moderator variable | | | | | | |
| TIO | | −0.019 *** (0.002) | 0.029 *** (0.012) | | −0.02 *** (0.002) | −0.008 *** (0.003) |
| Interactions | | | | | | |
| OR × TIO | | | −0.005 *** (0.001) | | | |
| SC × TIO | | | | | | −0.011 *** (0.002) |
| Model summary | | | | | | |
| No. of Obs. | 28,887 | 28,887 | 28,887 | 28,887 | 28,887 | 28,887 |
| Adj-R² | 0.1369 | 0.1409 | 0.1414 | 0.1417 | 0.1464 | 0.1472 |
| R² | 0.137 | 0.141 | 0.1415 | 0.1418 | 0.1465 | 0.1474 |
| F | 1528.69 *** | 1185.49 *** | 952.29 *** | 1591.21 *** | 1239.25 ** | 998.38 *** |
| Mean VIF | 1.03 | 1.03 | 21.89 | 1.04 | 1.03 | 1.84 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Gao, S.; Jin, X.; Zhang, Y. User Participation Behavior in Crowdsourcing Platforms: Impact of Information Signaling Theory. Sustainability 2021, 13, 6290. https://doi.org/10.3390/su13116290
