Product Service System Configuration Based on a PCA-QPSO-SVM Model

Abstract

1. Introduction

2. Literature Review

2.1. PSS Design

2.2. PSS Configuration

2.3. PSS Configuration Optimization

- (1) A genetic algorithm (GA) is a computational model that simulates natural selection and the genetic mechanisms of Darwinian biological evolution; it searches for the optimal solution by mimicking the process of natural evolution. GA is often combined with SVM to optimize the SVM parameters. Huang et al. [47] proposed a GA-SVM model to quantify the contributions of climate change and human activities to changes in vegetation coverage, in which the GA optimized the penalty (cost) parameter, the kernel function parameter, and the ε value of the loss function of the SVM. Chen et al. [48] proposed a Raman spectroscopy technique based on GA-SVM for the rapid and effective screening of human papillomavirus, using the GA to optimize the penalty factor and kernel function parameter of the SVM and thereby improve model accuracy. Li et al. [49] used a GA-SVM model to identify and classify flip-chip defects.

- (2) Grid search (GS) is an exhaustive search method: it loops through every combination of the candidate values of several parameters and takes the best-performing combination as the optimal parameters. GS is a common method for optimizing SVM parameters. Lv et al. [50] used PSO-SVM and GS-SVM to predict the corrosion rate of steel cross-sections. Tan et al. [51] combined the successive projections algorithm (SPA) with GS-SVM to classify apple samples with different degrees of bruising. Kong et al. [52] used a GS-SVM model to evaluate the eutrophication status of coastal waters.

- (3) Particle swarm optimization (PSO) is an optimization algorithm that simulates the foraging behavior of bird flocks. In each iteration, every particle updates its velocity and position according to the best position it has found so far and the best position found by the whole swarm. PSO is widely used to optimize SVM parameters. García Nieto et al. [53] proposed a hybrid PSO-optimized SVM model to predict a successful growth cycle of Spirulina. Liu et al. [54] developed a PSO-SVM model to predict daily PM2.5 grades. Bonah et al. [55] combined Vis-NIR hyperspectral imaging with pixel-wise analysis and a novel CARS-PSO-SVM model to classify foodborne bacterial pathogens.
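To make the grid-search idea in (2) concrete, the following Python sketch scores every (C, σ) pair on a grid and keeps the best one. It is an illustration under stated assumptions, not any cited author's code: the log-spaced grids and the function `toy_score` are hypothetical stand-ins, and in an actual GS-SVM the score would be the cross-validation accuracy of an SVM trained with each pair.

```python
import math
from itertools import product
from typing import Callable, Iterable, Optional, Tuple


def grid_search(
    score: Callable[[float, float], float],
    c_grid: Iterable[float],
    sigma_grid: Iterable[float],
) -> Tuple[Optional[float], Optional[float], float]:
    """Evaluate every (C, sigma) combination and return (best_C, best_sigma, best_score)."""
    best_c, best_sigma, best_score = None, None, -math.inf
    for c, sigma in product(c_grid, sigma_grid):
        s = score(c, sigma)  # in GS-SVM: mean CV accuracy of an SVM trained with (c, sigma)
        if s > best_score:
            best_c, best_sigma, best_score = c, sigma, s
    return best_c, best_sigma, best_score


def toy_score(c: float, sigma: float) -> float:
    """Hypothetical stand-in for CV accuracy, peaking at C = 8, sigma = 0.5."""
    return -((math.log2(c) - 3) ** 2) - (math.log2(sigma) + 1) ** 2


# Hypothetical log-spaced (powers of two) grids
C_GRID = [2.0 ** k for k in range(-5, 11)]
SIGMA_GRID = [2.0 ** k for k in range(-5, 6)]

if __name__ == "__main__":
    print(grid_search(toy_score, C_GRID, SIGMA_GRID))
```

The cost grows with the product of the grid sizes, which is why the heuristic searches in (1) and (3) are often preferred when the grid is fine.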

3. Research Framework

- (1) Data preparation and preprocessing

- (2) Reduction of the requirement dimension

- (3) Construction of the QPSO-SVM model

- (4) Prediction of the PSS configuration scheme

4. Construction of a PCA-QPSO-SVM Model

4.1. Principal Component Analysis

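- Step 1: Input the dataset $D = \{x_1, x_2, \ldots, x_m\}$ and the dimension $d'$ of the low-dimensional space.

- Step 2: Center all samples: $x_i \leftarrow x_i - \frac{1}{m}\sum_{j=1}^{m} x_j$.

- Step 3: Calculate the sample covariance matrix $\frac{1}{m}\mathbf{X}\mathbf{X}^{\mathrm{T}}$ of the centered data $\mathbf{X} = (x_1, x_2, \ldots, x_m)$ and perform its eigenvalue decomposition.

- Step 4: Take the eigenvectors $w_1, w_2, \ldots, w_{d'}$ corresponding to the $d'$ largest eigenvalues; they form the projection matrix $\mathbf{W} = (w_1, w_2, \ldots, w_{d'})$.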
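The four PCA steps above can be sketched with NumPy as follows. This is a minimal illustration, not the authors' code. The text stores samples as the columns of X, whereas the sketch stores them as rows, so the covariance is computed as XᵀX/(m − 1); the constant factor does not change the eigenvectors. The random 100 × 11 input in the usage block is hypothetical data shaped like the coded requirement samples (CN1 to CN11), which the case study reduces to two components, X1 and X2.

```python
import numpy as np


def pca(D: np.ndarray, d_prime: int) -> tuple[np.ndarray, np.ndarray]:
    """Reduce an (m samples x n features) array D to d_prime dimensions.

    Returns (Z, W): Z is the (m x d_prime) projected data and
    W = (w_1, ..., w_d') is the (n x d_prime) projection matrix.
    """
    D = np.asarray(D, dtype=float)
    # Step 2: center every sample by subtracting the mean sample
    X = D - D.mean(axis=0)
    # Step 3: sample covariance matrix (n x n) and its eigen-decomposition;
    # eigh suits symmetric matrices and returns eigenvalues in ascending order
    cov = X.T @ X / (X.shape[0] - 1)
    eigvals, eigvecs = np.linalg.eigh(cov)
    # Step 4: keep the eigenvectors of the d_prime largest eigenvalues
    top = np.argsort(eigvals)[::-1][:d_prime]
    W = eigvecs[:, top]
    return X @ W, W


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    # Hypothetical data: 100 samples with 11 integer-coded features in {-2, ..., 2}
    D = rng.integers(-2, 3, size=(100, 11))
    Z, W = pca(D, d_prime=2)
    print(Z.shape, W.shape)  # (100, 2) (11, 2)
```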

4.2. Quantum Particle Swarm Optimization Algorithm

4.3. Support Vector Machine

4.4. Optimization of the SVM Parameters

- Step 1: Use a PCA algorithm to reduce the dimension of dataset Q to get a new dataset Q’.

- Step 2: Determine the initial parameters of QPSO, such as the number of particles, the search range of the parameters, and the contraction–expansion coefficient α.

- Step 3: Set the fitness function of QPSO. In this paper, the fitness function is the mean SVM cross-validation (CV) accuracy, so its value represents the classification accuracy of the model. Each particle's position encodes a candidate parameter pair (C, σ), where C is the penalty factor and σ is the Gaussian kernel parameter. By evaluating the fitness function at each iteration, the personal best position pbest of each particle and the global best position gbest of the swarm are updated.

- Step 4: Calculate the mean best position mbest of the swarm (the average of all particles' pbest positions) and update the position of each particle.

- Step 5: Check the termination condition. If the maximum number of iterations has been reached, the search ends; otherwise, return to Step 3.

- Step 6: Substitute the optimal parameters (C, σ) into the SVM model and use it for prediction.
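Steps 2 to 5 can be sketched in Python as below. This is an illustrative implementation of the standard QPSO update, not the authors' code: each particle is a candidate (C, σ) pair, mbest is the mean of all pbest positions, and each coordinate is resampled around a local attractor lying between the particle's pbest and gbest. The defaults mirror the parameter table (50 particles, 50 iterations, α = 0.6, range [0.01, 15]); `toy_fitness` is a hypothetical stand-in for the SVM cross-validation accuracy, which in practice would require training an SVM at every evaluation.

```python
import math
import random
from typing import Callable, List, Tuple


def qpso(
    fitness: Callable[[List[float]], float],
    dim: int = 2,
    n_particles: int = 50,
    max_iter: int = 50,
    alpha: float = 0.6,
    lower: float = 0.01,
    upper: float = 15.0,
    seed: int = 0,
) -> Tuple[List[float], float]:
    """Maximize `fitness` over [lower, upper]^dim with quantum-behaved PSO."""
    rng = random.Random(seed)
    # Step 2: random initial positions inside the search range
    x = [[rng.uniform(lower, upper) for _ in range(dim)] for _ in range(n_particles)]
    pbest = [p[:] for p in x]
    pbest_fit = [fitness(p) for p in x]
    g = max(range(n_particles), key=lambda i: pbest_fit[i])
    gbest, gbest_fit = pbest[g][:], pbest_fit[g]

    for _ in range(max_iter):  # Step 5: stop after max_iter iterations
        # Step 4: mean best position = average of all personal best positions
        mbest = [sum(p[d] for p in pbest) / n_particles for d in range(dim)]
        for i in range(n_particles):
            for d in range(dim):
                phi = rng.random()
                # Step 4 (cont.): local attractor between pbest_i and gbest
                attractor = phi * pbest[i][d] + (1.0 - phi) * gbest[d]
                u = 1.0 - rng.random()  # u in (0, 1], so log(1/u) is finite
                step = alpha * abs(mbest[d] - x[i][d]) * math.log(1.0 / u)
                new = attractor + step if rng.random() < 0.5 else attractor - step
                x[i][d] = min(max(new, lower), upper)  # clip to the search range
            # Step 3: evaluate the fitness and update pbest and gbest
            f = fitness(x[i])
            if f > pbest_fit[i]:
                pbest[i], pbest_fit[i] = x[i][:], f
                if f > gbest_fit:
                    gbest, gbest_fit = x[i][:], f
    return gbest, gbest_fit


def toy_fitness(params: List[float]) -> float:
    """Hypothetical stand-in for mean CV accuracy, maximal at (C, sigma) = (4, 2)."""
    c, sigma = params
    return -((c - 4.0) ** 2 + (sigma - 2.0) ** 2)


if __name__ == "__main__":
    best, best_fit = qpso(toy_fitness)
    print(best, best_fit)
```

Unlike standard PSO, this update has no velocity term; α alone controls how widely particles sample around their attractors.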

5. PSS Configuration Based on the PCA-QPSO-SVM Model

5.1. Data Collection and Processing

5.2. Construction of the PCA-QPSO-SVM Model for PSS Configuration

- Step 1: Determine the product modules and service modules, then combine their instances to form different PSS configurations. Collect ‘requirements–configuration’ samples from the relevant historical data to construct the model.

- Step 2: Reduce the dimension of the requirement features by using the PCA algorithm. QPSO is then used to find the best Gaussian kernel parameter σ and penalty factor C, with the k-fold cross-validation (CV) accuracy as the fitness. In k-fold CV, the training set is divided into k subsets with an equal number of samples; each subset in turn serves as the testing set while the remaining k − 1 subsets form the training set, and the k resulting accuracies are averaged.

- Step 3: Construct the multi-class SVM model with the best parameter combination (C, σ) and evaluate it on the testing set. Once a reliable classification model has been obtained, the PSS configuration can be predicted by inputting new customer requirements.
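The k-fold CV procedure in Step 2 can be sketched as follows. The fold splitting follows the description above; the classifier is passed in as a function (in the paper it would be the multi-class SVM trained with a candidate (C, σ)). The 1-nearest-neighbour function `nearest_neighbour` is a hypothetical stand-in used only to keep the sketch self-contained, not the model of the paper.

```python
import random
from typing import Callable, Hashable, List, Sequence

Vector = Sequence[float]
FitPredict = Callable[[List[Vector], List[Hashable], List[Vector]], List[Hashable]]


def k_fold_indices(n: int, k: int, seed: int = 0) -> List[List[int]]:
    """Shuffle sample indices 0..n-1 and split them into k folds of (near-)equal size."""
    idx = list(range(n))
    random.Random(seed).shuffle(idx)
    return [idx[f::k] for f in range(k)]


def cv_accuracy(X: Sequence[Vector], y: Sequence[Hashable], k: int,
                fit_predict: FitPredict) -> float:
    """Mean accuracy over k folds; every fold serves as the testing set exactly once."""
    accuracies = []
    for test in k_fold_indices(len(y), k):
        held_out = set(test)
        train = [i for i in range(len(y)) if i not in held_out]  # the other k-1 folds
        preds = fit_predict([X[i] for i in train], [y[i] for i in train],
                            [X[i] for i in test])
        correct = sum(p == y[i] for p, i in zip(preds, test))
        accuracies.append(correct / len(test))
    return sum(accuracies) / k


def nearest_neighbour(train_X, train_y, test_X):
    """Hypothetical stand-in classifier (1-NN), used here instead of an SVM."""
    def sq_dist(a, b):
        return sum((ai - bi) ** 2 for ai, bi in zip(a, b))
    return [train_y[min(range(len(train_X)), key=lambda j: sq_dist(train_X[j], x))]
            for x in test_X]


if __name__ == "__main__":
    # Two well-separated hypothetical clusters with labels 1 and 2
    X_demo = [(0.01 * i, 0.0) for i in range(10)] + [(10 + 0.01 * i, 10.0) for i in range(10)]
    y_demo = [1] * 10 + [2] * 10
    print(cv_accuracy(X_demo, y_demo, k=5, fit_predict=nearest_neighbour))  # 1.0
```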

6. Case Study

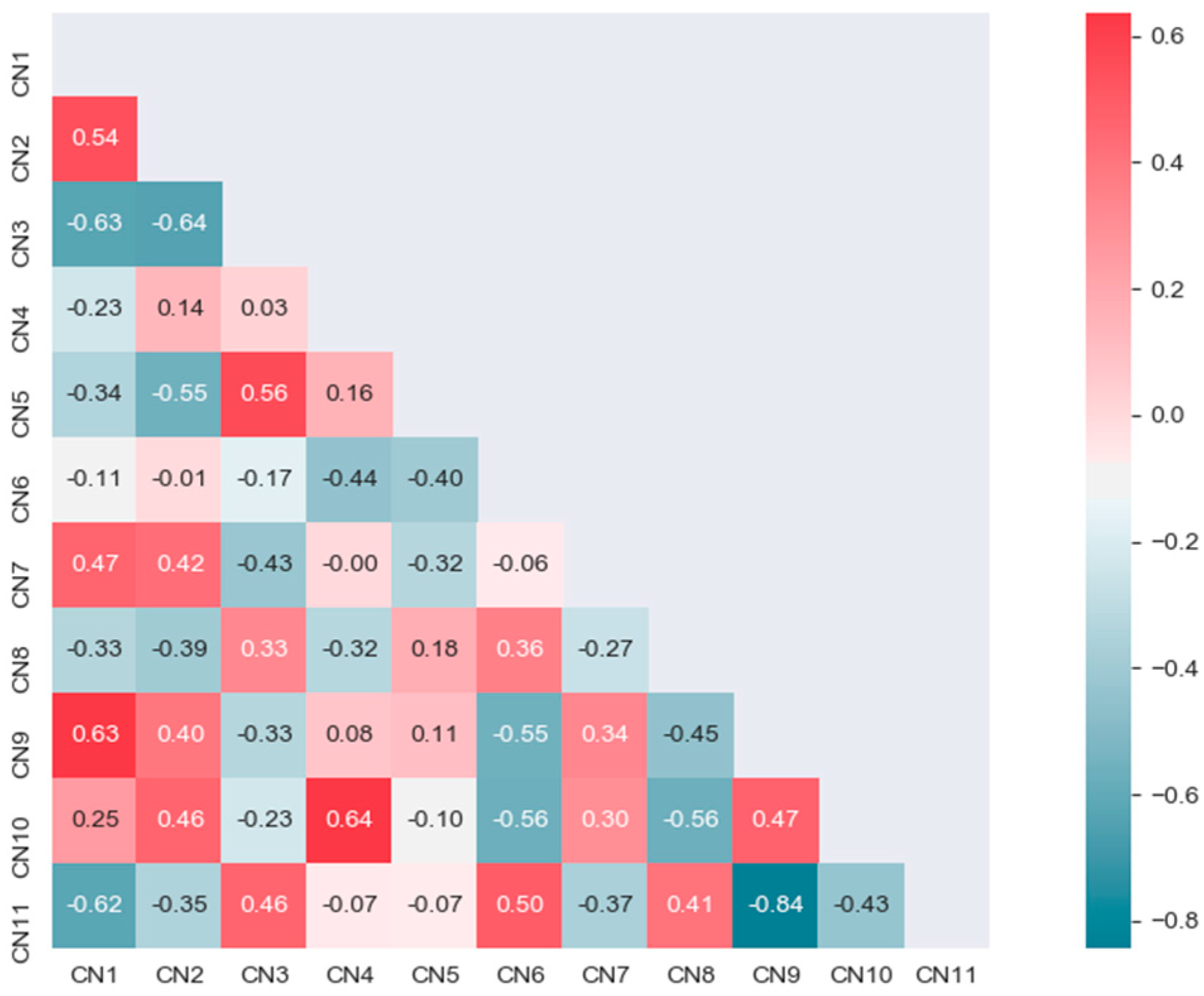

6.1. Data Coding and Feature Analysis

6.2. PCA-QPSO-SVM Model Construction for PSS Configuration

6.2.1. Dimension Reduction of Requirement Features

6.2.2. QPSO-SVM Model Construction and Parameter Setting

6.3. Prediction and Comparative Analysis of PCA-QPSO-SVM Model

6.4. Discussion of Results

7. Conclusions and Future Research

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- [1] Matschewsky, J.; Kambanou, M.L.; Sakao, T. Designing and providing integrated product-service systems – challenges, opportunities and solutions resulting from prescriptive approaches in two industrial companies. Int. J. Prod. Res. 2018, 56, 2150–2168.

- [2] Haber, N.; Fargnoli, M. Designing product-service systems: A review towards a unified approach. In Proceedings of the 7th International Conference on Industrial Engineering and Operations Management (IEOM), Rabat, Morocco, 11–13 April 2017; pp. 817–837.

- [3] Shimomura, Y.; Nemoto, Y.; Kimita, K. A Method for Analysing Conceptual Design Process of Product-Service System. CIRP Ann. Manuf. Technol. 2015, 64, 145–148.

- [4] Schweitzer, E.; Aurich, J.C. Continuous Improvement of Industrial Product–service Systems. CIRP J. Manuf. Sci. Technol. 2010, 3, 158–164.

- [5] Song, W.; Chan, F.T.S. Multi-objective configuration optimization for product-extension service. J. Manuf. Syst. 2015, 37, 113–125.

- [6] Zhang, Z.; Chai, N.; Ostrosi, E.; Shang, Y. Extraction of association rules in the schematic design of product service system based on Pareto-MODGDFA. Comput. Ind. Eng. 2019, 129, 392–403.

- [7] Belkadi, F.; Colledani, M.; Urgo, M.; Bernard, A.; Colombo, G.; Borzi, G.; Ascheri, A. Modular design of production systems tailored to regional market requirements: A Frugal Innovation perspective. IFAC-Pap. 2018, 51, 96–101.

- [8] Rennpferdt, C.; Greve, E.; Krause, D. The Impact of Modular Product Architectures in PSS Design: A systematic Literature Review. Procedia CIRP 2019, 84, 290–295.

- [9] Geng, X.; Jin, Y.; Zhang, Y. Result-oriented PSS Modular Design Method based on FDSM. Procedia CIRP 2019, 83, 610–615.

- [10] Xuanyuan, S.; Jiang, Z.; Patil, L.; Li, Y.; Li, Z. Multi-objective optimization of product configuration. In Proceedings of the ASME 2008 International Design Engineering Technical Conferences and Computers and Information in Engineering Conference, Brooklyn, NY, USA, 3–6 August 2008; pp. 961–968.

- [11] Sheng, Z.; Xu, T.; Song, J. Configuration design of product service system for CNC machine tools. Adv. Mech. Eng. 2015, 2, 222–231.

- [12] Dong, M.; Yang, D.; Su, L. Ontology-based service product configuration system modeling and development. Expert Syst. Appl. 2011, 38, 11770–11786.

- [13] Shen, J.; Wang, L.; Sun, Y. Configuration of Product Extension Services in Servitisation Using an Ontology-based Approach. Int. J. Prod. Res. 2012, 50, 6469–6488.

- [14] Zhou, Y.; Liu, W.; Chai, N.; Fan, B.; Zhang, Z. Base type selection of product service system based on convolutional neural network. Procedia CIRP 2019, 83, 601–605.

- [15] Wei, W.; Fan, W.; Li, Z. Multi-objective optimization and evaluation method of modular product configuration design scheme. Int. J. Adv. Manuf. Technol. 2014, 75, 1527–1536.

- [16] Demidova, L.A.; Egin, M.M.; Tishkin, R.V. A Self-tuning Multiobjective Genetic Algorithm with Application in the SVM Classification. Procedia Comput. Sci. 2019, 150, 503–510.

- [17] Pławiak, P.; Abdar, M.; Acharya, U.R. Application of new deep genetic cascade ensemble of SVM classifiers to predict the Australian credit scoring. Appl. Soft Comput. 2019, 84, 105740.

- [18] Sun, L.; Zou, B.; Fu, S.; Chen, J.; Wang, F. Speech emotion recognition based on DNN-decision tree SVM model. Speech Commun. 2019, 115, 29–37.

- [19] Guyon, I.; Gunn, S.; Nikravesh, M. Feature Extraction: Foundations and Applications; Springer Science & Business Media: Berlin/Heidelberg, Germany, 2008.

- [20] Asante-Okyere, S.; Shen, C.; Ziggah, Y.Y.; Rulegeya, M.M.; Zhu, X. Principal component analysis (PCA) based hybrid models for the accurate estimation of reservoir water saturation. Comput. Geosci. UK 2020, 145, 104555.

- [21] Cao, S.; Hu, Z.; Luo, X.; Wang, H. Research on fault diagnosis technology of centrifugal pump blade crack based on PCA and GMM. Measurement 2021, 173, 108558.

- [22] Henry, Y.Y.S.; Aldrich, C.; Zabiri, H. Detection and severity identification of control valve stiction in industrial loops using integrated partially retrained CNN-PCA frameworks. Chemom. Intell. Lab. Syst. 2020, 206, 104143.

- [23] Lu, S.; Sun, C.; Lu, Z. An improved quantum-behaved particle-swarm optimization method for short-term combined economic emission hydrothermal scheduling. Energy Convers. Manag. 2010, 51, 561–571.

- [24] Chen, M.; Ruan, J.; Xi, D. Micro grid scheduling optimization based on quantum particle swarm optimization (QPSO) algorithm. In Proceedings of the 2018 Chinese Control and Decision Conference (CCDC), Shenyang, China, 9–11 June 2018; pp. 6470–6475.

- [25] Zhu, S.; Luo, P.; Yang, Y.; Lu, Q.; Chen, Q. Optimal dispatch for grid-connecting microgrid considering shiftable and adjustable loads. In Proceedings of the IECON 2017 – 43rd Annual Conference of the IEEE Industrial Electronics Society, Beijing, China, 29 October–1 November 2017; pp. 5575–5580.

- [26] Baines, T.S.; Lightfoot, H.W.; Evans, S.; Neely, A.; Greenough, R.; Peppard, J.; Alcock, J.R. State-of-the-art in product-service systems. Proc. Inst. Mech. Eng. Part B: J. Eng. Manuf. 2007, 221, 1543–1552.

- [27] Durugbo, C.; Tiwari, A.; Alcock, J.R. A review of information flow diagrammatic models for product-service systems. Int. J. Adv. Manuf. Technol. 2011, 52, 1193–1208.

- [28] Chiu, M.C.; Kuo, M.Y.; Kuo, T.C. A systematic methodology to develop business model for a product service system. Int. J. Indus. Eng. 2015, 22, 369–381.

- [29] Fargnoli, M.; Haber, N.; Sakao, T. PSS modularisation: A customer-driven integrated approach. Int. J. Prod. Res. 2019, 57, 4061–4077.

- [30] Lee, C.H.; Chen, C.H.; Trappey, A.J. A structural service innovation approach for designing smart product service systems: Case study of smart beauty service. Adv. Eng. Inform. 2019, 40, 154–167.

- [31] Wang, Z.; Chen, C.H.; Zheng, P.; Li, X.; Khoo, L.P. A novel data-driven graph-based requirement elicitation framework in the smart product-service system context. Adv. Eng. Inform. 2019, 42, 100983.

- [32] Aurich, J.C.; Wolf, N.; Siener, M.; Schweitzer, E. Configuration of product-service systems. J. Manuf. Technol. Manag. 2009, 20, 591–605.

- [33] Haber, N.; Fargnoli, M. Sustainable product-service systems customization: A case study research in the medical equipment sector. Sustainability 2021, 13, 6624.

- [34] Yu, L.; Wang, L.; Yu, J. Identification of Product Definition Patterns in Mass Customization Using a Learning-Based Hybrid Approach. Int. J. Adv. Manuf. Technol. 2008, 38, 1061–1074.

- [35] Shen, J.; Wang, L. Configuration Rules Acquisition for Product Extension Services using Local Cluster Neural Network and Rulex Algorithm. In Proceedings of the 2010 International Conference on Artificial Intelligence and Computational Intelligence, Sanya, China, 23–24 October 2010; pp. 196–199.

- [36] Ahlawat, S.; Choudhary, A. Hybrid CNN-SVM Classifier for Handwritten Digit Recognition. Procedia Comput. Sci. 2020, 167, 2554–2560.

- [37] Viloria, A.; Herazo-Beltran, Y.; Cabrera, D.; Pineda, O.B. Diabetes Diagnostic Prediction Using Vector Support Machines. Procedia Comput. Sci. 2020, 170, 376–381.

- [38] Zhou, T.; Thung, K.H.; Liu, M.; Shi, F.; Zhang, C.; Shen, D. Multi-modal latent space inducing ensemble SVM classifier for early dementia diagnosis with neuroimaging data. Med. Image Anal. 2020, 60, 101630.

- [39] Shao, M.; Wang, X.; Bu, Z.; Chen, X.; Wang, Y. Prediction of energy consumption in hotel buildings via support vector machines. Sustain. Cities Soc. 2020, 57, 102128.

- [40] Zhang, K.; Chen, J.; Zhang, T.; Zhou, Z. A Compact Convolutional Neural Network Augmented with Multiscale Feature Extraction of Acquired Monitoring Data for Mechanical Intelligent Fault Diagnosis. J. Manuf. Syst. 2020, 55, 273–284.

- [41] Kontonatsios, G.; Spencer, S.; Matthew, P.; Korkontzelos, I. Using a neural network-based feature extraction method to facilitate citation screening for systematic reviews. Expert Syst. Appl. X 2020, 6, 100030.

- [42] Xiao, G.; Li, J.; Chen, Y.; Li, K. MalFCS: An effective malware classification framework with automated feature extraction based on deep convolutional neural networks. J. Parallel Distrib. Comput. 2020, 141, 49–58.

- [43] Zhang, D.; Zou, L.; Zhou, X.; He, F. Integrating feature selection and feature extraction methods with deep learning to predict clinical outcome of breast cancer. IEEE Access 2018, 6, 28936–28944.

- [44] Ratnasari, N.R.; Susanto, A.; Soesanti, I. Thoracic X-ray features extraction using thresholding-based ROI template and PCA-based features selection for lung TB classification purposes. In Proceedings of the 2013 3rd International Conference on Instrumentation, Communications, Information Technology and Biomedical Engineering (ICICI-BME), Bandung, Indonesia, 7–8 November 2013.

- [45] Ma, J.; Yuan, Y. Dimension reduction of image deep feature using PCA. J. Vis. Commun. Image Represent. 2019, 63, 102578.

- [46] Negi, S.; Kumar, Y.; Mishra, V.M. Feature extraction and classification for EMG signals using linear discriminant analysis. In Proceedings of the 2016 2nd International Conference on Advances in Computing, Communication, & Automation (ICACCA), Bareilly, India, 30 September–1 October 2016.

- [47] Huang, S.; Zheng, X.; Ma, L.; Wang, H.; Huang, Q.; Leng, Q.; Meng, E.; Guo, Y. Quantitative contribution of climate change and human activities to vegetation cover variations based on GA-SVM model. J. Hydrol. 2020, 584, 124687.

- [48] Chen, C.; Wang, J.; Chen, C.; Tang, J.; Lv, X.; Ma, C. Rapid and efficient screening of human papillomavirus by Raman spectroscopy based on GA-SVM. Optik 2020, 210, 164514.

- [49] Li, K.; Wang, L.; Wu, J.; Zhang, Q.; Liao, G.; Su, L. Using GA-SVM for defect inspection of flip chips based on vibration signals. Microelectron. Reliab. 2018, 81, 159–166.

- [50] Lv, Y.; Wang, J.; Wang, J.; Xiong, C.; Zou, L.; Li, L.; Li, D. Steel corrosion prediction based on support vector machines. Chaos Solitons Fractals 2020, 136, 109807.

- [51] Tan, W.; Sun, L.; Yang, F.; Che, W.; Ye, D.; Zhang, D.; Zou, B. Study on bruising degree classification of apples using hyperspectral imaging and GS-SVM. Optik 2018, 154, 581–592.

- [52] Kong, X.; Sun, Y.; Su, R.; Shi, X. Real-time eutrophication status evaluation of coastal waters using support vector machine with grid search algorithm. Mar. Pollut. Bull. 2017, 119, 307–319.

- [53] Nieto, P.J.G.; Gonzalo, E.G.; Fernández, J.R.A.; Muñiz, C.D. A hybrid PSO optimized SVM-based model for predicting a successful growth cycle of the Spirulina platensis from raceway experiments data. J. Comput. Appl. Math. 2016, 291, 293–303.

- [54] Liu, W.; Guo, G.; Chen, F.; Chen, Y. Meteorological pattern analysis assisted daily PM2.5 grades prediction using SVM optimized by PSO algorithm. Atmos. Pollut. Res. 2019, 10, 1482–1491.

- [55] Bonah, E.; Huang, X.; Yi, R.; Aheto, J.H.; Yu, S. Vis-NIR hyperspectral imaging for the classification of bacterial foodborne pathogens based on pixel-wise analysis and a novel CARS-PSO-SVM model. Infrared Phys. Technol. 2020, 105, 103220.

- [56] Ch, S.; Anand, N.; Panigrahi, B.K.; Mathur, S. Streamflow forecasting by SVM with quantum behaved particle swarm optimization. Neurocomputing 2013, 101, 18–23.

- [57] Li, B.; Li, D.; Zhang, Z.; Yang, S.; Wang, F. Slope stability analysis based on quantum-behaved particle swarm optimization and least squares support vector machine. Appl. Math. Model. 2015, 39, 5253–5264.

- [58] Vapnik, V.N. The Nature of Statistical Learning Theory; Springer: New York, NY, USA, 2000.

- [59] Long, H.J.; Wang, L.Y.; Shen, J.; Wu, M.X.; Jiang, Z.B. Product service system configuration based on support vector machine considering customer perception. Int. J. Prod. Res. 2013, 51, 5450–5468.

- [60] Kennedy, J.; Eberhart, R. Particle Swarm Optimization. In Proceedings of the ICNN’95 – International Conference on Neural Networks, Perth, WA, Australia, 27 November–1 December 1995; pp. 1942–1948.

- [61] Cortes, C.; Vapnik, V. Support-Vector Networks. Mach. Learn. 1995, 20, 273–297.

| Feature | Name | Option | Code |
|---|---|---|---|
| CN1 | Environmental protection | {L, ML, M, MH, H} | {−2, −1, 0, 1, 2} |
| CN2 | Stability | {L, ML, M, MH, H} | {−2, −1, 0, 1, 2} |
| CN3 | Intelligence | {L, ML, M, MH, H} | {−2, −1, 0, 1, 2} |
| CN4 | Simplicity | {L, ML, M, MH, H} | {−2, −1, 0, 1, 2} |
| CN5 | Convenience | {L, ML, M, MH, H} | {−2, −1, 0, 1, 2} |
| CN6 | Adaptability | {L, ML, M, MH, H} | {−2, −1, 0, 1, 2} |
| CN7 | Reliability | {L, ML, M, MH, H} | {−2, −1, 0, 1, 2} |
| CN8 | Comfort | {L, ML, M, MH, H} | {−2, −1, 0, 1, 2} |
| CN9 | Energy saving | {L, ML, M, MH, H} | {−2, −1, 0, 1, 2} |
| CN10 | Safety | {L, ML, M, MH, H} | {−2, −1, 0, 1, 2} |
| CN11 | Heat dissipation | {L, ML, M, MH, H} | {−2, −1, 0, 1, 2} |
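The coding in the table above maps each five-level linguistic rating onto an integer from −2 to 2. A minimal sketch of that lookup follows, assuming ratings are supplied per feature code; the helper name `encode_requirements` is hypothetical. With the ratings chosen in the usage block, it reproduces the input vector of sample 01 in the sample table.

```python
from typing import Dict, List

# Five-level linguistic scale and its numeric code, as listed in the table
LEVEL_CODE: Dict[str, int] = {"L": -2, "ML": -1, "M": 0, "MH": 1, "H": 2}

# Requirement features in input order CN1..CN11
FEATURES: List[str] = [f"CN{i}" for i in range(1, 12)]


def encode_requirements(ratings: Dict[str, str]) -> List[int]:
    """Convert {feature code: linguistic level} into the numeric input vector CN1..CN11."""
    missing = [f for f in FEATURES if f not in ratings]
    if missing:
        raise ValueError(f"missing ratings for {missing}")
    return [LEVEL_CODE[ratings[f]] for f in FEATURES]


if __name__ == "__main__":
    # Linguistic ratings that correspond to sample 01 of the sample table
    levels = ["M", "M", "H", "MH", "H", "ML", "MH", "MH", "H", "MH", "ML"]
    print(encode_requirements(dict(zip(FEATURES, levels))))
    # [0, 0, 2, 1, 2, -1, 1, 1, 2, 1, -1]
```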

| Product module | Instance | Code |
|---|---|---|
| Compressor | Permanent magnet synchronous frequency conversion screw type | A1 |
| | Photovoltaic direct-drive frequency conversion centrifugal | A2 |
| | DC frequency conversion | A3 |
| | Permanent magnet synchronous frequency conversion centrifugal | A4 |
| Condenser | Water-cooled condenser | B1 |
| | Air-cooled condenser | B2 |
| | Evaporative condenser | B3 |
| Evaporator | Horizontal evaporator | C1 |
| | Vertical tube evaporator | C2 |
| Throttling parts | Capillary | D1 |
| | Throttle | D2 |
| Fan | Axial fan | E1 |
| | Centrifugal fan | E2 |
| Reservoir | Unidirectional | F1 |
| | Bidirectional | F2 |
| | Vertical | F3 |
| | Horizontal | F4 |
| Filter drier | Loose filling dry filter | G1 |
| | Block filter | G2 |
| | Compact bead dryer filter | G3 |
| Cooling tower | Dry cooling tower | H1 |
| | Temperature cooling tower | H2 |

| Service module | Instance | Code |
|---|---|---|
| Recycling service | Home inspection | I1 |
| | High-price recycling | I2 |
| | Cash transaction | I3 |
| Maintenance service | Annual maintenance | J1 |
| | Quarterly maintenance | J2 |
| | Monthly maintenance | J3 |
| Spare parts service | Original parts supply | K1 |
| | Non-original parts supply | K2 |
| | Replacement of faulty spare parts | K3 |
| | Spare parts upgrade | K4 |
| Installation service | Remote installation and debugging | L1 |
| | On-site installation and commissioning | L2 |
| | Fully commissioned installation and commissioning | L3 |
| Control technology service | Adaptive location and weather | M1 |
| | Self-regulation of demand | M2 |
| | Predictive self-diagnosis | M3 |
| Cleaning service | Duct cleaning | N1 |
| | Parts cleaning | N2 |
| | Cooling tower cleaning | N3 |
| | Condenser cleaning | N4 |

| Sample | CN1 | CN2 | CN3 | CN4 | CN5 | CN6 | CN7 | CN8 | CN9 | CN10 | CN11 | Output |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 01 | 0 | 0 | 2 | 1 | 2 | −1 | 1 | 1 | 2 | 1 | −1 | 1 |
| 02 | 2 | 2 | −1 | −1 | −1 | 1 | 2 | 1 | 2 | 1 | −1 | 3 |
| 03 | 1 | 1 | 1 | 2 | 0 | 0 | 2 | 1 | 2 | 2 | 0 | 5 |
| 04 | 1 | 1 | 0 | 2 | 0 | −1 | 2 | 0 | 1 | 2 | −1 | 2 |
| 05 | −1 | 1 | 2 | 1 | 1 | 2 | 1 | 2 | 0 | −1 | 2 | 4 |
| … | … | … | … | … | … | … | … | … | … | … | … | … |
| 96 | 0 | −1 | 1 | 1 | 2 | −1 | 0 | 0 | 2 | 1 | −1 | 1 |
| 97 | 0 | 0 | 2 | 0 | 2 | −1 | 2 | 1 | 2 | 0 | −1 | 1 |
| 98 | 0 | 2 | 0 | 2 | 1 | 0 | 2 | 1 | 1 | 2 | −2 | 2 |
| 99 | 1 | 2 | 1 | 2 | 1 | 0 | 2 | 1 | 2 | 2 | −1 | 2 |
| 100 | 1 | 2 | 2 | 1 | 0 | −1 | 1 | 1 | 1 | 2 | 1 | 5 |

| Sample | X1 | X2 | Output |
|---|---|---|---|
| 01 | −0.224301033 | −2.404274949 | 1 |
| 02 | −1.799516865 | 3.14492011 | 3 |
| 03 | −0.986306315 | −0.290209173 | 5 |
| 04 | −1.898471273 | −0.422895036 | 2 |
| 05 | 3.882774024 | 0.580798351 | 4 |
| … | … | … | … |
| 96 | −0.270004463 | −2.44421515 | 1 |
| 97 | 0.094105412 | −1.824376829 | 1 |
| 98 | −1.867236441 | −0.250669022 | 2 |
| 99 | −1.777916303 | −0.443148983 | 2 |
| 100 | −0.14995178 | −0.555739606 | 5 |

| Parameter | Setting |
|---|---|
| Number of particles | 50 |
| Particle dimension | 2 |
| Maximum number of iterations | 50 |
| Contraction–expansion coefficient α | 0.6 |
| Upper bound of parameter range | 15 |
| Lower bound of parameter range | 0.01 |
| Fitness function | 2-fold CV classification accuracy |
| Algorithm stop condition | Number of iterations > 50 |

| Model | PCA-QPSO-SVM | PCA-PSO-SVM | PSO-SVM | GA-SVM | GS-SVM |
|---|---|---|---|---|---|
| Number of test samples | 25 | 25 | 25 | 25 | 25 |
| Number of errors | 0 | 2 | 9 | 9 | 10 |
| Test accuracy | 100% | 92% | 64% | 64% | 60% |
| Mean squared error | 0 | 1.0 | 1.04 | 1.56 | 3.32 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Cui, Z.; Geng, X. Product Service System Configuration Based on a PCA-QPSO-SVM Model. Sustainability 2021, 13, 9450. https://doi.org/10.3390/su13169450


Chicago/Turabian style: Cui, Zhaoyi, and Xiuli Geng. 2021. "Product Service System Configuration Based on a PCA-QPSO-SVM Model" Sustainability 13, no. 16: 9450. https://doi.org/10.3390/su13169450
