Review on Lane Detection and Tracking Algorithms of Advanced Driver Assistance System

Abstract

1. Introduction

1.1. Objectives and Scope of the Study

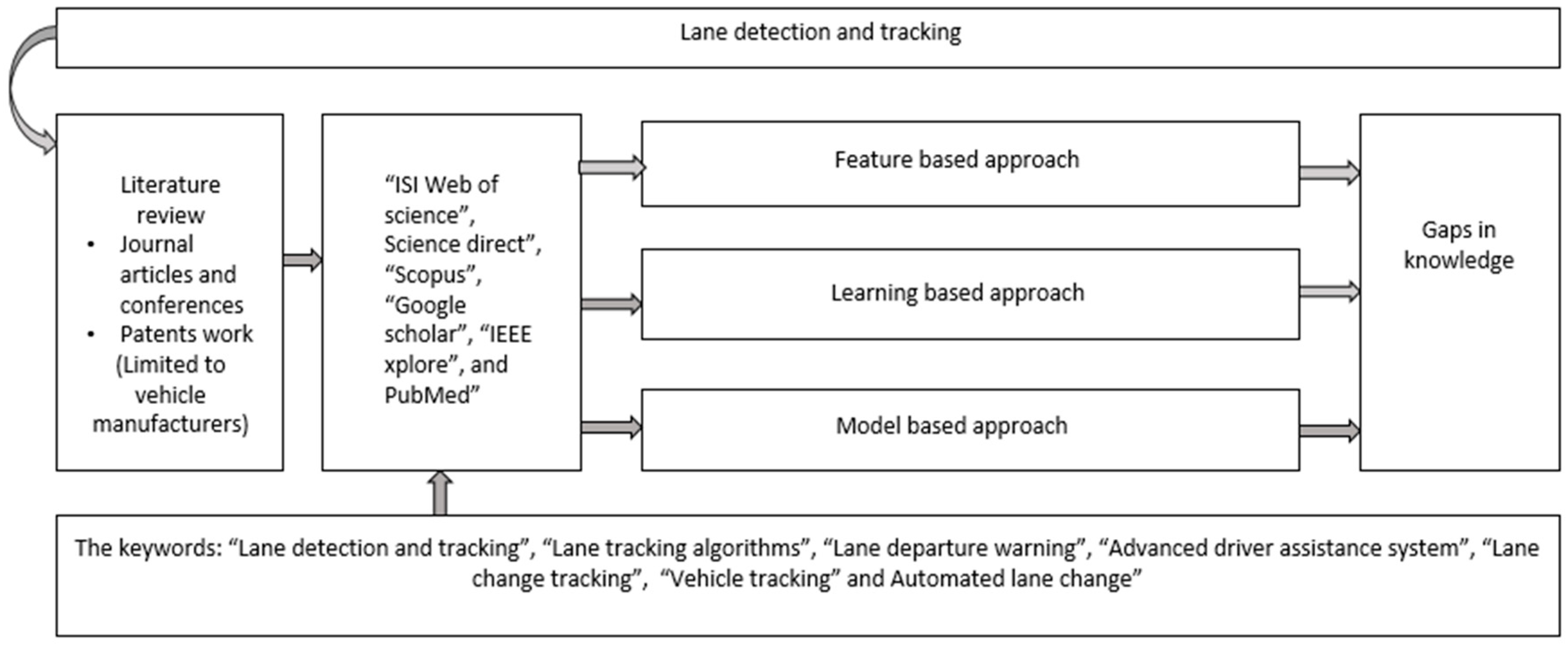

2. Methodology

3. Literature Review

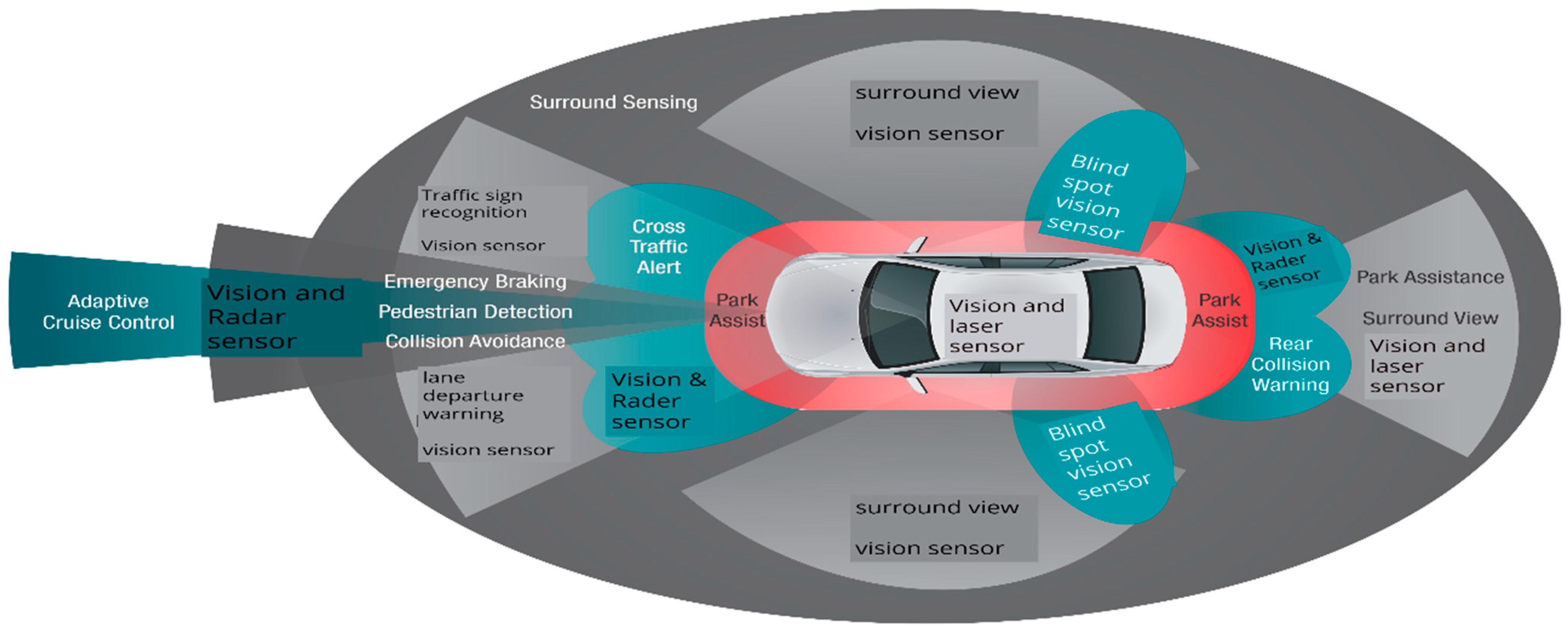

3.1. Sensors Used in the ADAS

3.1.1. LASER Based Sensors

3.1.2. RADAR Based Sensors

3.1.3. Vision-Based Sensors

3.2. Lane Detection and Tracking Algorithms

3.2.1. Features-Based Approach (Image and Sensor-Based Lane Detection and Tracking)

- Obtain the camera and 2D LIDAR data.

- Segment the LIDAR data into groups of objects based on the distance between points.

- Map the groups or objects onto the camera data.

- Identify the pixels of the groups or objects in the camera data. A rectangular region of interest is formed, straight lines are drawn from the camera location to the corners of the region of interest, and a convex polygon algorithm determines the background and occluded regions of the image.

- Apply lane detection to the binary image to detect the lanes. The proposed approach [40] shows better accuracy than traditional methods for distances below 9 m.
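The segmentation step of this pipeline, grouping 2D LIDAR returns by the distance between neighboring points, can be illustrated with a short Python sketch. It is not the implementation of [40]: the 0.5 m gap threshold, the helper names `scan_to_points`, `segment_scan` and `bounding_box`, and the scan format (ranges at evenly spaced angles) are hypothetical, and the projection of each box into the camera image, which requires calibrated camera/LIDAR extrinsics, is left out.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float]


def scan_to_points(ranges: List[float], angle_min: float, angle_step: float) -> List[Point]:
    """Convert a 2D LIDAR scan (polar ranges in metres) to Cartesian (x, y) points."""
    points: List[Point] = []
    for i, r in enumerate(ranges):
        if math.isfinite(r) and r > 0.0:  # skip invalid or missing returns
            theta = angle_min + i * angle_step
            points.append((r * math.cos(theta), r * math.sin(theta)))
    return points


def segment_scan(points: List[Point], gap_threshold: float = 0.5) -> List[List[Point]]:
    """Split consecutive scan points into groups wherever the Euclidean gap
    between neighbouring points exceeds gap_threshold (hypothetical value, metres)."""
    segments: List[List[Point]] = []
    current: List[Point] = []
    for p in points:
        if current and math.dist(current[-1], p) > gap_threshold:
            segments.append(current)
            current = []
        current.append(p)
    if current:
        segments.append(current)
    return segments


def bounding_box(segment: List[Point]) -> Tuple[float, float, float, float]:
    """Axis-aligned (x_min, y_min, x_max, y_max) box of one group; a rectangle
    like this is what would be projected into the image as a region of interest."""
    xs = [p[0] for p in segment]
    ys = [p[1] for p in segment]
    return min(xs), min(ys), max(xs), max(ys)
```

For example, `segment_scan([(0.0, 0.0), (0.1, 0.0), (2.0, 0.0)])` yields two groups, because the 1.9 m jump exceeds the threshold. A fixed gap is the simplest choice; an adaptive threshold that grows with range is a common refinement, since point spacing increases with distance.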

3.2.2. Model-Based Approach (Robust Lane Detection and Tracking)

3.2.3. Learning-Based Approach (Predictive Controller Lane Detection and Tracking)

| Methods | Steps | Tool Used | Data Used | Methods Classification | Remarks |
|---|---|---|---|---|---|
| Image and sensor-based lane detection and tracking | | | Sensor values | Feature-based approach | Frequent calibration is required for accurate decision making in a complex environment. |
| Predictive controller for lane detection and tracking | | Machine learning technique (e.g., neural networks) | Data obtained from the controller | Learning-based approach | Reinforcement learning with a model predictive controller could be a better choice to avoid false lane detection. |
| Robust lane detection and tracking | | Based on robust lane detection model algorithms | Real-time | Model-based approach | Provides better results in different environmental conditions. Camera quality plays an important role in determining lane markings. |

| Sources | Data (Simulation / Real) | Method Used | Advantages | Drawbacks | Results | Tool Used | Future Prospects | Data | Reason for Drawbacks |
|---|---|---|---|---|---|---|---|---|---|
| [24] | Y | Inverse perspective mapping is applied to convert the image to a bird's-eye view. | Minimal error and quick lane detection. | Performance drops when driving in a tunnel because of fluctuating lighting conditions. | Lane detection error of 5%, cross-track error of 25% and lane detection time of 11 ms. | Fisheye dashcam, inertial measurement unit and ARM processor-based computer | Enhancing the algorithm for complex road scenarios and low-light conditions. | Data obtained using a model car running at 1 m/s | Performance drops when the vehicle drives in a tunnel or on roads without proper lighting. The complex environment creates unnecessary tilt, causing some inaccuracy in lane detection. |
| [25] | Y | Kinematic motion model to determine the lane with minimal vehicle parameters. | No need to parameterize the vehicle with variables such as cornering stiffness and inertia. The lane can be predicted for around 3 s without camera input. | Suitability of the algorithm for different environmental situations has not been considered. | Lateral error of 0.15 m in the absence of camera images. | Mobileye camera, CarSim, MATLAB/Simulink and dSPACE AutoBox | Testing the fault-tolerant model in a real vehicle. | Test vehicle | ---- |
| [26] | Y | Inverse perspective mapping to create a bird's-eye view of the environment. | Improved lane detection accuracy, in the range of 86% to 96% for different road types. | Performance under different vehicle speeds and inclement weather conditions was not considered. | The algorithm requires 0.8 s to process a frame; accuracy is higher when more than 59% of the lane markers are visible. | FireWire color camera, MATLAB | Real-time implementation of the work | Highways and streets around Atlanta | ---- |
| [27] | Y / Y | Hough transform to extract line segments and a convolutional neural network-based classifier to determine the confidence of each line segment. | Tolerant to noise. | Performance on the custom dataset drops compared with the Caltech dataset. | Accuracy greater than 95% for urban scenarios; 72% to 86% in the custom setup. | OV10650 camera and Epson G320 IMU | Performance improvement is a future consideration. | Caltech dataset and custom dataset | Device specification and calibration play an important role in capturing the lane. |
| [28] | Y | Feature-line-pairs (FLP) with a Kalman filter for road detection. | Faster lane detection, suitable for real-time environments. | Suitability under different environmental conditions remains to be tested. | Around 4 ms to detect the edge pixels, 80 ms to detect all FLPs and 1 ms to extract the road model with Kalman filter tracking. | C++; camera and Matrox Meteor RGB/PPB digitizer | Robust tracking and improved performance in dense urban traffic. | Test robot | ---- |
| [29] | Y | Dual thresholding for pre-processing; edges are detected by a single-direction gradient operator and a noise filter removes noise. | Insensitive to headlights, rear lights, cars and road contour signs. | Detects only straight lanes at night. | Detection of straight lanes. | Camera with RGB channels | ---- | Custom dataset | Suitability for different types of roads at night remains to be studied. |
| [30] | Y | Region-of-interest determination and conversion to a binary image via adaptive thresholding. | Better accuracy. | The algorithm needs changes before it is suitable for daytime lane detection. | 90% accuracy at night on isolated highways. | FireWire S400 camera and MATLAB | Geometric transformation of the image and intensity normalization to increase accuracy. | Custom dataset | The constraints and assumptions do not suit daytime conditions. |
| [31] | Y | Canny edge detector to detect the lane edges. | The Hough transform improves the output of the lane tracker. | ---- | The proposed system shows better performance. | Raspberry Pi-based robot with camera and sensors | Simulating the method on a Raspberry Pi-based robot with a monocular camera and RADAR-based sensors to determine the distance to neighboring vehicles. | Custom data | ---- |
| [32] | Y | Video processing technique to determine lane illumination changes in the region of interest. | ---- | ---- | Robust performance | Vision-based vehicle | Determining lane illumination changes in the region of interest for curved roads | Simulator | ---- |
| [33] | Y / Y | Colour-based lane detection and representative line extraction. | Better accuracy in the daytime. | The algorithm needs changes to be tested in different scenarios. | Lane detection rate above 93%. | MATLAB | Testing the algorithm at night. | Custom data | Unwanted noise reduces the performance of the algorithm. |
| [34] | Y | Hardware architecture for detecting straight lane lines using the Hough transform. | Better accuracy under occlusion and poor line painting. | Computational complexity and high cost of the Hough transform (HT). | Tested on various roads such as urban streets and highways, with a detection rate of 92%. | Virtex-5 ML505 platform | Testing under different weather conditions. | Custom | ---- |
| [35] | Y | Lane detection based on a circular-arc or parabolic geometric model. | The video sensor improves lane marking performance. | Performance drops when entering a tunnel. | Experiments on different road scenes gave better results. | Maps, video sensors, GPS | Testing the method with previously available data. | Custom | Low illumination |
| [36] | Y | Hierarchical lane detection system for structured and unstructured roads. | Quick lane detection. | ---- | Lane detection accuracy of 97%. | MATLAB | Testing on isolated highways and urban roads. | ---- | |
| [37] | Y | LIDAR sensor-based road boundary detection and tracking for structured and unstructured roads. | Detects accurate lane boundaries regardless of road type. | Lane boundaries are difficult to track on unstructured roads because of low contrast and arbitrary road shapes. | Road boundary detection accuracy of 95% for structured roads and 92% for unstructured roads. | Test vehicle with LIDAR, GPS and IMU | Testing with RADAR-based and vision-based sensors. | Custom data | Low contrast and arbitrary road shape |
| [38] | Y | Detection of pedestrian lanes without lane markings under different illumination conditions. | Robust pedestrian lane detection in unstructured environments. | More challenging in indoor and outdoor environments. | Lane detection accuracy of 95%. | MATLAB | Extension to structured roads with different speed limits. | New custom dataset of 2000 images | Complex environment |
| [39] | Y / Y | Improved Hough transform that pre-processes road images with different light intensities and converts them to a polar-angle constraint area. | Robust performance on campus roads without lane markings. | Performance drops at low light intensity. | ---- | Test vehicle and MATLAB | ---- | Custom data | Low illumination |
| [40] | Y | Lane detection based on camera and 2D LIDAR input data. | Computational and experimental results show that the method significantly increases accuracy. | ---- | Better accuracy than traditional methods for distances below 9 m. | Software-based analysis and MATLAB | Testing with RADAR and vision-based sensor data. | Fusion of camera and 2D LIDAR data | ---- |
| [41] | Y | Deep learning-based detection of lanes, objects and free space. | The NVIDIA SDK (software development kit) has built-in options for object detection, lane detection and free-space detection. | A monocular camera with an advanced driver assistance system is costly. | Lane detection takes 6 to 9 ms. | C++ and NVIDIA DRIVE PX 2 platform | Complex road scenarios with varying, high light intensity. | KITTI | ---- |
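Several of the methods above ([27], [31], [34], [39]) rely on the Hough transform to extract straight line segments from edge pixels. The voting idea can be sketched in plain Python as follows. This is an illustration with hypothetical default parameters (`theta_steps`, `rho_resolution`, `min_votes`), not the implementation of any cited work; a production system would typically call an optimized routine such as OpenCV's `cv2.HoughLines` on a Canny edge map.

```python
import math
from collections import Counter
from typing import Iterable, List, Tuple


def hough_lines(edge_points: Iterable[Tuple[int, int]],
                theta_steps: int = 180,
                rho_resolution: float = 1.0,
                min_votes: int = 10) -> List[Tuple[float, float, int]]:
    """Vote in (rho, theta) space, where rho = x*cos(theta) + y*sin(theta).

    Every edge pixel votes once for each quantized theta in [0, pi).
    Returns (rho, theta, votes) for accumulator cells with at least
    min_votes votes, strongest first.
    """
    thetas = [math.pi * k / theta_steps for k in range(theta_steps)]
    trig = [(math.cos(t), math.sin(t)) for t in thetas]
    accumulator: Counter = Counter()
    for x, y in edge_points:
        for k, (c, s) in enumerate(trig):
            rho_bin = round((x * c + y * s) / rho_resolution)
            accumulator[(rho_bin, k)] += 1
    peaks = [(rho_bin * rho_resolution, thetas[k], votes)
             for (rho_bin, k), votes in accumulator.items()
             if votes >= min_votes]
    peaks.sort(key=lambda p: p[2], reverse=True)
    return peaks
```

For twenty edge pixels on the vertical line x = 5, the cell (rho = 5, theta = 0) collects all twenty votes. The (rho, theta) parameterization is used instead of slope/intercept because it stays bounded for near-vertical lane markings, which are common in forward-facing camera images.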

| Sources | Data (Simulation / Real) | Method | Advantages | Drawbacks | Result | Tool Used | Future Prospects | Data | Reason for Drawback |
|---|---|---|---|---|---|---|---|---|---|
| [42] | Y | Gradient cue, color cue and line clustering are used to verify the lane markings. | Works better under different weather conditions such as rainy and snowy environments. | Suitability for multi-lane detection and lane curvature remains to be studied. | Better results in all conditions except rain during the day. | C++ and OpenCV on Ubuntu; hardware: dual ARM Cortex-A9 processors | ---- | 48 video clips from the USA and Korea | The road environment is not always predictable, which leads to false detection. |
| [43] | Y | Lanes are extracted from the captured image, and the random sample consensus (RANSAC) algorithm is used to eliminate lane detection errors. | Multi-lane detection even with poor lane markings; no prior knowledge of the lane is required. | Urban driving quality has to be improved on the Cordova 2 sequence, where the curb of the sidewalk is perceived as a lane. | Evaluated on the Caltech lane dataset, consisting of four urban driving scenarios (Cordova 1, Cordova 2, Washington 1 and Washington 2) with a total of 1224 frames containing 4172 lane markings. | MATLAB | Real-time implementation of the proposed algorithm | Data from South Korean roads and the Caltech dataset | IMU sensors could be incorporated to avoid false lane detection. |
| [44] | Y / Y | A rectangular detection region is formed on the image, lane edge points are extracted by thresholding, and a modified Bresenham line voting space is used to detect lane segments. | Robust lane detection with a monocular camera on roads with proper lane markings. | Performance drops when the road is not flat. | On Cordova 2, the false detection rate is higher, around 38%. The algorithm performs better on different road geometries such as straight, curved, polyline and complex. | Software-based analysis on the Caltech dataset for different urban driving scenarios; hardware implementation on the Tuyou autonomous vehicle | ---- | Caltech and custom-made dataset | False detections occurred because of difficulties in image capture. More training or additional sensors for live data collection would help mitigate them. |
| [45] | Y | Vanishing points are detected from a voting map, a distinct property of lane colour yields illumination-invariant lane markers, and the main lane is found by clustering. | The overall method processes each frame within 33 ms. | Computational complexity needs to be reduced by using the vanishing point and an adaptive ROI for every frame. | Average lane detection rate of 93% under various illumination conditions. | Software-based analysis | Testing in the daytime under inclement weather conditions. | Custom real-time data | ---- |
| [46] | Y | Sharp-curve lane detection from the input image based on hyperbola fitting: the image is converted to grayscale and features (left edge, right edge and lane extreme points) are calculated. | Better accuracy for sharp-curve lanes. | Suitability for different road geometries remains to be studied. | Lane detection accuracy of around 97%, with an average detection time of 20 ms. | Custom-made simulator, C/C++ and Visual Studio | ---- | Custom data | ---- |
| [47] | Y | Vanishing point detection for unstructured roads. | Accurate and robust performance on unstructured roads. | Robust vanishing points are difficult to obtain for lane detection in unstructured scenes. | Vanishing point accuracy ranges from 80.9% to 93.6% across scenarios. | Unmanned ground vehicle and mobile robot | Extension to structured roads in different scenarios. | Custom data | Complex background interference and unclear road markings. |
| [48] | Y | Lane detection using Gaussian-distribution random sample consensus (G-RANSAC), a ridge detector to extract lane-point features and an adaptable neural network to remove noise. | Better results in the presence of vehicle shadows and minimal illumination. | ---- | Tested under illumination conditions ranging from normal, intense, normal and poor, with lane detection accuracies of 95%, 92%, 91% and 90%, respectively. | Software-based analysis | Testing at different times, such as day and night. | Test vehicle | ---- |
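RANSAC, used in [43] and (as the G-RANSAC variant) in [48], fits a lane model while ignoring outlier edge points. The plain-RANSAC sketch below is illustrative only; it is not the G-RANSAC of [48], and the straight-line model `x = a*y + b`, the 2-pixel tolerance, the iteration count and the function names are hypothetical choices.

```python
import random
from typing import List, Optional, Tuple

Point = Tuple[float, float]  # (x, y) in image pixels


def fit_line(p: Point, q: Point) -> Optional[Tuple[float, float]]:
    """Line x = a*y + b through two points (x as a function of image row y,
    which suits near-vertical lane markings). None if both share a row."""
    (x1, y1), (x2, y2) = p, q
    if y1 == y2:
        return None
    a = (x2 - x1) / (y2 - y1)
    return a, x1 - a * y1


def ransac_lane(points: List[Point], iterations: int = 200,
                inlier_tol: float = 2.0,
                seed: int = 0) -> Tuple[Optional[Tuple[float, float]], List[Point]]:
    """Repeatedly fit a line to two random points and keep the model with the
    most inliers (points within inlier_tol pixels horizontally)."""
    rng = random.Random(seed)
    best_model: Optional[Tuple[float, float]] = None
    best_inliers: List[Point] = []
    for _ in range(iterations):
        model = fit_line(*rng.sample(points, 2))
        if model is None:
            continue
        a, b = model
        inliers = [(x, y) for x, y in points if abs(x - (a * y + b)) <= inlier_tol]
        if len(inliers) > len(best_inliers):
            best_model, best_inliers = model, inliers
    return best_model, best_inliers
```

With twenty points on `x = 0.5*y + 10` plus three far-off outliers, the returned model is `(0.5, 10.0)` with the twenty points as inliers. In practice the final model is usually refitted by least squares on the inliers, and curved lanes call for a higher-order model in place of `fit_line`.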

| Sources | Data (Simulation / Real) | Method Used | Advantages | Drawbacks | Result | Tool Used | Future Prospects | Data | Reason for Drawbacks |
|---|---|---|---|---|---|---|---|---|---|
| [49] | Y | Inverse perspective mapping is applied to convert the image to a bird's-eye view. | Quick lane detection. | Performance drops because of fluctuating lighting conditions. | Lane detection error of 5%, cross-track error of 25% and lane detection time of 11 ms. | Fisheye dashcam, inertial measurement unit and ARM processor-based computer | Enhancing the algorithm for complex road scenarios and low-light conditions. | Data obtained using a model car running at 1 m/s | The complex environment creates unnecessary tilt, causing some inaccuracy in lane detection. |
| [50] | Y | Deep reinforcement learning is used for decision making during lane changes, with a reward based on parameters such as traffic efficiency. | Cooperative decision making with a reward function comparing the delay of the vehicle and of traffic. | Validation is needed to check the accuracy of the lane-changing algorithm in heterogeneous environments. | Performance is fine-tuned based on cooperation in both accident and non-accident scenarios. | Custom-made simulator | Dynamic selection of the cooperation coefficient under different traffic scenarios | Newell car-following model | ---- |
| [51] | Y | Reinforcement learning-based decision making using a Q-function approximator. | Decision making with a reward function comprising yaw rate, yaw acceleration and lane-changing time. | More testing is needed to check the suitability of the approximator function under different real-time conditions. | The reward functions help the system learn the lane better. | Custom-made simulator | Testing the approach under different road geometries and traffic conditions; testing the feasibility of reinforcement learning with fuzzy logic for image input and controller action based on the current situation. | Custom | More parameters could be considered for the reward function. |
| [52] | Y | Probabilistic prediction for complex driving scenarios. | Deterministic and probabilistic prediction of the traffic of other vehicles improves robustness. | Analyzing the efficiency of the system under real-time noise is challenging. | More robust decision making than the deterministic method, with a lower probability of collision. | MATLAB/Simulink and CarSim; real-time setup: Hyundai-Kia Motors K7, Mobileye camera system, MicroAutoBox II, Delphi radars, IBEO laser scanner | Testing under different scenarios | Custom dataset collected with a test vehicle | The algorithm must be modified to suit real-time monitoring. |
| [53] | Y | A pixel hierarchy captures the occurrence of lane markings, which are detected with a boosting algorithm and tracked with a particle filter. | Lanes are detected without prior knowledge of the road model or vehicle speed. | Using the vehicle's inertial sensors, GPS information and a geometry model could further improve performance under different environmental conditions. | Improved performance by using support vector machines and artificial neural networks on the image. | Machine with a 4 GHz processor, processing 240 × 320 images at approximately 15 frames per second | Testing the efficiency of the algorithm with a Kalman filter. | Custom data | Sensor calibration needs to be maintained. |
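The inverse perspective mapping used in [49] (and [24], [26]) can be reduced, under the usual flat-road assumption, to a planar homography estimated from four image-to-ground point correspondences. The sketch below solves the standard eight-unknown linear system directly; the four point pairs in the example are hypothetical calibration values, and in practice OpenCV's `cv2.getPerspectiveTransform` and `cv2.warpPerspective` would compute the matrix and warp whole images.

```python
from typing import List, Sequence, Tuple

Point = Tuple[float, float]
Matrix = List[List[float]]


def solve(A: Matrix, b: List[float]) -> List[float]:
    """Solve A x = b by Gaussian elimination with partial pivoting."""
    n = len(A)
    M = [row[:] + [b[i]] for i, row in enumerate(A)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(M[r][col]))
        if abs(M[pivot][col]) < 1e-12:
            raise ValueError("degenerate point configuration")
        M[col], M[pivot] = M[pivot], M[col]
        for r in range(col + 1, n):
            f = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= f * M[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):  # back substitution
        x[r] = (M[r][n] - sum(M[r][c] * x[c] for c in range(r + 1, n))) / M[r][r]
    return x


def homography(src: Sequence[Point], dst: Sequence[Point]) -> Matrix:
    """3x3 homography H (with H[2][2] = 1) mapping four src points onto four dst points.
    Each pair gives u*(h6*x + h7*y + 1) = h0*x + h1*y + h2, and likewise for v."""
    A: Matrix = []
    b: List[float] = []
    for (x, y), (u, v) in zip(src, dst):
        A.append([x, y, 1.0, 0.0, 0.0, 0.0, -u * x, -u * y])
        b.append(u)
        A.append([0.0, 0.0, 0.0, x, y, 1.0, -v * x, -v * y])
        b.append(v)
    h = solve(A, b)
    return [h[0:3], h[3:6], h[6:8] + [1.0]]


def warp_point(H: Matrix, p: Point) -> Point:
    """Map an image point into the bird's-eye view (projective division by w)."""
    x, y = p
    w = H[2][0] * x + H[2][1] * y + H[2][2]
    return ((H[0][0] * x + H[0][1] * y + H[0][2]) / w,
            (H[1][0] * x + H[1][1] * y + H[1][2]) / w)
```

As a usage example, mapping the road trapezoid `(300, 450), (500, 450), (700, 700), (100, 700)` onto the rectangle `(100, 0), (300, 0), (300, 600), (100, 600)` makes both converging lane edges vertical: any image point on the left edge, such as `(200, 575)`, lands at x ≈ 100 in the bird's-eye view. This is also why IPM is sensitive to camera tilt, as noted for [24] and [49]: the homography is only valid while the road plane stays where the calibration assumed it.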

- Frequent calibration is required for accurate decision making in a complex environment.

- Reinforcement learning with the model predictive control could be a better choice to avoid false lane detection.

- Model-based approaches (robust lane detection and tracking) provide better results in different environmental conditions. Camera quality plays an important role in determining lane markings.

- The algorithm’s performance depends on the type of filter used, and the Kalman filter is mostly used for lane tracking.

- In a vision-based system, image smoothing is the initial stage of lane detection and tracking and plays a vital role in increasing system performance.

- External disturbances (weather conditions, vision quality, shadows and glare) and internal disturbances (too narrow, too wide or unclear lane markings) degrade algorithm performance.

- The majority of researchers (>90%) have used custom datasets for research.

- Monocular, stereo and infrared cameras have been used to capture images and videos. The algorithm’s accuracy depends on the type of camera used, and a stereo camera gives better performance than a monocular camera.

- Lane markers can be occluded by a nearby vehicle during overtaking.

- There is an abrupt change in illumination as the vehicle gets out of a tunnel. Sudden changes in illumination affect the image quality and drop the system performance.

- The results show that the lane detection and tracking efficiency rate under dry and light rain conditions is near 99% in most scenarios. However, the efficiency of lane marking detection is significantly affected by heavy rain conditions.

- It has been seen that the performance of the system drops due to unclear and degraded lane markings.

- An IMU (inertial measurement unit) and GPS are examples of sensors that help improve the distance-measurement performance of RADAR and LIDAR.

- One of the biggest problems with today’s ADAS is that changes in environmental and weather conditions have a major effect on the system’s performance.
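Since the Kalman filter is the most common lane tracker in the surveyed work, a minimal sketch may help make the predict/update cycle concrete. It tracks a single lane parameter, the lateral offset of a lane marking, with a constant-velocity model and a white-noise-acceleration process model. The class name, the 20 fps frame period and the noise values `q` and `r` are hypothetical tuning assumptions, not values from any cited paper; real trackers usually filter several lane-model parameters jointly.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class LaneOffsetKalman:
    """Constant-velocity Kalman filter for one lane parameter (hypothetical:
    lateral offset of a lane marking in metres), measured once per frame."""
    dt: float = 0.05   # frame period in s (assumed 20 fps)
    q: float = 0.01    # process-noise intensity (hypothetical tuning value)
    r: float = 0.04    # measurement variance in m^2 (hypothetical)
    x: List[float] = field(default_factory=lambda: [0.0, 0.0])  # [offset, offset rate]
    P: List[List[float]] = field(default_factory=lambda: [[1.0, 0.0], [0.0, 1.0]])

    def predict(self) -> float:
        dt, q = self.dt, self.q
        # State prediction x <- F x, with F = [[1, dt], [0, 1]].
        self.x = [self.x[0] + dt * self.x[1], self.x[1]]
        (p00, p01), (p10, p11) = self.P
        # Covariance prediction P <- F P F^T + Q (white-noise-acceleration Q).
        self.P = [
            [p00 + dt * (p10 + p01) + dt * dt * p11 + q * dt ** 3 / 3,
             p01 + dt * p11 + q * dt ** 2 / 2],
            [p10 + dt * p11 + q * dt ** 2 / 2, p11 + q * dt],
        ]
        return self.x[0]

    def update(self, z: float) -> float:
        (p00, p01), (p10, p11) = self.P
        s = p00 + self.r              # innovation variance, with H = [1, 0]
        k0, k1 = p00 / s, p10 / s     # Kalman gain
        innovation = z - self.x[0]
        self.x = [self.x[0] + k0 * innovation, self.x[1] + k1 * innovation]
        # Covariance update P <- (I - K H) P.
        self.P = [[(1 - k0) * p00, (1 - k0) * p01],
                  [p10 - k1 * p00, p11 - k1 * p01]]
        return self.x[0]
```

Each frame, `predict()` is called first and `update(z)` is called only when the detector returns a measurement; skipping `update` during a missed detection lets the filter coast on its prediction, which is one way trackers bridge short occlusions such as an overtaking vehicle hiding the markers.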

3.3. Patented Works

- By following the method of image and sensor-based lane detection, separate courses are calculated for precisely two of the lane markings to be tracked, with a set of binary parameters indicating the allocation of the determined offset values to one of the two separate courses [54].

- By following the robust lane detection and tracking method, a most probable hypothesis is calculated after a fixed number of computing cycles, namely the one for which the difference between the predicted courses of the lane markings to be tracked and the courses of the recognized lane markings is lowest [55].

- A parametric estimation method, in particular a maximum likelihood method, is used to assign the calculated offset values to each of the separate courses of the lane markings to be tracked [56].

- Only the two lane markers that correspond to the left and right boundaries of the vehicle’s own lane are used in the tracking procedure [57].

- The positive and negative ratios of the extracted characteristics of the frame are used to assess the system’s correctness. The degree of accuracy is enhanced by including the judgment in all extracted frames [58].

- In the present calculation cycle, the lane change assistance system calculates a target control amount that includes a feed-forward control term using the target curvature of the track for changing the host vehicle’s lane [59].

- Signals from mounted sensors are analyzed in additional detail to determine whether a collision between the host vehicle and any other vehicle is likely to occur, so that action can be taken to avoid the accident [60].

- Two kinds of issues are often seen and corrected in dewarped perspective images: a stretching effect at the periphery of a wide-angle image dewarped by rectilinear projection, and duplicate images of objects in the area where the left and right camera views overlap [61].

- The object identification system examines the pixels in order to identify objects that have not previously been identified in the 3D environment [62].
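The patent abstracts do not specify the likelihood model behind the maximum likelihood assignment of [56]. Purely as an illustration, the assignment step could look like the sketch below, assuming Gaussian measurement noise around each course's predicted offset and a hypothetical 3-sigma gate that discards implausible measurements. The course names `left` and `right` mirror the restriction to the two boundaries of the ego lane in [57]; the function names and numbers are hypothetical.

```python
import math
from typing import Dict, List, Optional


def gaussian_log_likelihood(z: float, mean: float, var: float) -> float:
    """Log of the normal density N(z; mean, var)."""
    return -0.5 * (math.log(2 * math.pi * var) + (z - mean) ** 2 / var)


def assign_offsets(offsets: List[float],
                   predicted: Dict[str, float],
                   variances: Dict[str, float],
                   gate: float = 3.0) -> Dict[str, List[float]]:
    """Assign each measured lateral offset (m) to the course with the highest
    likelihood; offsets farther than `gate` standard deviations from every
    predicted course are discarded as clutter."""
    assignment: Dict[str, List[float]] = {name: [] for name in predicted}
    for z in offsets:
        best: Optional[str] = None
        best_ll = -math.inf
        for name, mean in predicted.items():
            var = variances[name]
            if abs(z - mean) > gate * math.sqrt(var):
                continue  # outside the validation gate of this course
            ll = gaussian_log_likelihood(z, mean, var)
            if ll > best_ll:
                best, best_ll = name, ll
        if best is not None:
            assignment[best].append(z)
    return assignment
```

For example, with predicted offsets of -1.75 m and +1.75 m and a variance of 0.04 m² for both courses, the measurements `[-1.7, 1.8, 0.1, -1.9]` are assigned as `{"left": [-1.7, -1.9], "right": [1.8]}`, while 0.1 m falls outside both gates and is dropped. A per-measurement indicator of which course won plays the role of the binary allocation parameters described for [54].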

4. Discussion

5. Conclusions

- lane detection and tracking under different complex geometric road design models, e.g., hyperbola and clothoid,

- achieving high reliability for detecting and tracking the lane under different weather conditions and at different speeds, and

- lane detection and tracking for unstructured roads.
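For reference, the clothoid road model mentioned above is commonly approximated by a third-order polynomial, valid for small heading angles, in which curvature changes linearly with distance. The sketch below evaluates that approximation. The parameter names and the sampling helper are hypothetical, and the look-ahead distance `x` is treated as longitudinal distance, which is itself part of the small-angle assumption.

```python
from typing import List, Tuple


def clothoid_lateral_offset(x: float, y0: float, heading: float,
                            c0: float, c1: float) -> float:
    """Lateral position (m) of a lane boundary at look-ahead distance x (m),
    using the cubic small-angle approximation of a clothoid:
        y(x) ~= y0 + heading*x + c0*x**2/2 + c1*x**3/6
    y0: offset at x = 0 (m), heading: relative heading angle (rad),
    c0: curvature (1/m), c1: curvature rate (1/m^2)."""
    return y0 + heading * x + c0 * x ** 2 / 2 + c1 * x ** 3 / 6


def clothoid_curvature(x: float, c0: float, c1: float) -> float:
    """Clothoid curvature grows linearly with distance: c(x) = c0 + c1*x."""
    return c0 + c1 * x


def sample_boundary(y0: float, heading: float, c0: float, c1: float,
                    max_dist: float = 40.0, step: float = 10.0) -> List[Tuple[float, float]]:
    """Sample (x, y) points of the modelled boundary from 0 to max_dist metres."""
    n = int(max_dist // step)
    return [(k * step, clothoid_lateral_offset(k * step, y0, heading, c0, c1))
            for k in range(n + 1)]
```

For instance, a constant curvature of 0.002 1/m (a 500 m radius) with zero offset and heading shifts the boundary by 0.1 m at 10 m ahead. The four parameters (`y0`, `heading`, `c0`, `c1`) form a compact state that a tracker such as a Kalman filter can estimate frame to frame.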

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Nilsson, N.J. Shakey the Robot; SRI International: Menlo Park, CA, USA, 1984.

- Tsugawa, S.; Yatabe, T.; Hirose, T.; Matsumoto, S. An Automobile with Artificial Intelligence. In Proceedings of the 6th International Joint Conference on Artificial Intelligence, Tokyo, Japan, 20 August 1979.

- Blackman, C.P. The ROVA and MARDI projects. In Proceedings of the IEEE Colloquium on Advanced Robotic Initiatives in the UK, London, UK, 17 April 1991; pp. 5/1–5/3.

- Thorpe, C.; Hebert, M.; Kanade, T.; Shafer, S. Toward autonomous driving: The CMU Navlab. II. Architecture and systems. IEEE Expert 1991, 6, 44–52.

- Horowitz, R.; Varaiya, P. Control design of an automated highway system. Proc. IEEE 2000, 88, 913–925.

- Pomerleau, D.A.; Jochem, T. Rapidly Adapting Machine Vision for Automated Vehicle Steering. IEEE Expert 1996, 11, 19–27.

- Parent, M. Advanced Urban Transport: Automation Is on the Way. IEEE Intell. Syst. 2007, 22, 9–11.

- Lari, A.Z.; Douma, F.; Onyiah, I. Self-Driving Vehicles and Policy Implications: Current Status of Autonomous Vehicle Development and Minnesota Policy Implications. Minn. J. Law Sci. Technol. 2015, 16, 735.

- Urmson, C. Green Lights for Our Self-Driving Vehicle Prototypes. Available online: https://blog.google/alphabet/self-driving-vehicle-prototypes-on-road/ (accessed on 30 September 2021).

- Campisi, T.; Severino, A.; Al-Rashid, M.A.; Pau, G. The Development of the Smart Cities in the Connected and Autonomous Vehicles (CAVs) Era: From Mobility Patterns to Scaling in Cities. Infrastructures 2021, 6, 100.

- Severino, A.; Curto, S.; Barberi, S.; Arena, F.; Pau, G. Autonomous Vehicles: An Analysis both on Their Distinctiveness and the Potential Impact on Urban Transport Systems. Appl. Sci. 2021, 11, 3604.

- Aly, M. Real time Detection of Lane Markers in Urban Streets. In Proceedings of the 2008 IEEE Intelligent Vehicles Symposium, Eindhoven, The Netherlands, 4–6 June 2008; pp. 7–12.

- Bar Hillel, A.; Lerner, R.; Levi, D.; Raz, G. Recent progress in road and lane detection: A survey. Mach. Vis. Appl. 2014, 25, 727–745.

- Ying, Z.; Li, G.; Zang, X.; Wang, R.; Wang, W. A Novel Shadow-Free Feature Extractor for Real-Time Road Detection. In Proceedings of the 24th ACM International Conference on Multimedia, Amsterdam, The Netherlands, 15–19 October 2016.

- Jothilashimi, S.; Gudivada, V. Machine Learning Based Approach. 2016. Available online: https://www.sciencedirect.com/topics/computer-science/machine-learning-based-approach (accessed on 20 August 2021).

- Zhou, S.; Jiang, Y.; Xi, J.; Gong, J.; Xiong, G.; Chen, H. A novel lane detection based on geometrical model and Gabor filter. In Proceedings of the 2010 IEEE Intelligent Vehicles Symposium, La Jolla, CA, USA, 21–24 June 2010; pp. 59–64.

- Zhao, H.; Teng, Z.; Kim, H.; Kang, D. Annealed Particle Filter Algorithm Used for Lane Detection and Tracking. J. Autom. Control Eng. 2013, 1, 31–35.

- Paula, M.B.; Jung, C.R. Real-Time Detection and Classification of Road Lane Markings. In Proceedings of the 2013 XXVI Conference on Graphics, Patterns and Images, Arequipa, Peru, 5–8 August 2013.

- Kukkala, V.K.; Tunnell, J.; Pasricha, S.; Bradley, T. Advanced Driver-Assistance Systems: A Path toward Autonomous Vehicles. IEEE Consum. Electron. Mag. 2018, 7, 18–25.

- Yenkanchi, S. Multi Sensor Data Fusion for Autonomous Vehicles; University of Windsor: Windsor, ON, Canada, 2016.

- Synopsys.com. What Is ADAS (Advanced Driver Assistance Systems)? Overview of ADAS Applications | Synopsys. 2021. Available online: https://www.synopsys.com/automotive/what-is-adas.html (accessed on 12 October 2021).

- McCall, J.C.; Trivedi, M.M. Video-based lane estimation and tracking for driver assistance: Survey, system, and evaluation. IEEE Trans. Intell. Transp. Syst. 2006, 7, 20–37.

- Veit, T.; Tarel, J.; Nicolle, P.; Charbonnier, P. Evaluation of Road Marking Feature Extraction. In Proceedings of the 2008 11th International IEEE Conference on Intelligent Transportation Systems, Beijing, China, 12–15 October 2008; pp. 174–181.

- Kuo, C.Y.; Lu, Y.R.; Yang, S.M. On the Image Sensor Processing for Lane Detection and Control in Vehicle Lane Keeping Systems. Sensors 2019, 19, 1665.

- Kang, C.M.; Lee, S.H.; Kee, S.C.; Chung, C.C. Kinematics-based Fault-tolerant Techniques: Lane Prediction for an Autonomous Lane Keeping System. Int. J. Control Autom. Syst. 2018, 16, 1293–1302.

- Borkar, A.; Hayes, M.; Smith, M.T. Robust lane detection and tracking with RANSAC and Kalman filter. In Proceedings of the 2009 16th IEEE International Conference on Image Processing (ICIP), Cairo, Egypt, 7–10 November 2009; pp. 3261–3264.

- Sun, Y.; Li, J.; Sun, Z. Multi-Stage Hough Space Calculation for Lane Markings Detection via IMU and Vision Fusion. Sensors 2019, 19, 2305.

- Lu, J.; Yang, M.; Wang, H.; Zhang, B. Vision-based real-time road detection in urban traffic. In Real-Time Imaging VI; Proc. SPIE 4666; SPIE: Bellingham, WA, USA, 2002.

- Zhang, X.; Shi, Z. Study on lane boundary detection in night scene. In Proceedings of the 2009 IEEE Intelligent Vehicles Symposium, Xi’an, China, 3–5 June 2009; pp. 538–541.

- Borkar, A.; Hayes, M.; Smith, M.T.; Pankanti, S. A layered approach to robust lane detection at night. In Proceedings of the 2009 IEEE Workshop on Computational Intelligence in Vehicles and Vehicular Systems, Nashville, TN, USA, 30 March–2 April 2009; pp. 51–57.

- Priyadharshini, P.; Niketha, P.; Saantha Lakshmi, K.; Sharmila, S.; Divya, R. Advances in Vision based Lane Detection Algorithm Based on Reliable Lane Markings. In Proceedings of the 2019 5th International Conference on Advanced Computing & Communication Systems (ICACCS), Coimbatore, India, 15–16 March 2019; pp. 880–885.

- Hong, G.-S.; Kim, B.-G.; Dorra, D.P.; Roy, P.P. A Survey of Real-time Road Detection Techniques Using Visual Color Sensor. J. Multimed. Inf. Syst. 2018, 5, 9–14.

- Park, H. Implementation of Lane Detection Algorithm for Self-driving Vehicles Using Tensor Flow. In International Conference on Innovative Mobile and Internet Services in Ubiquitous Computing; Springer: Cham, Switzerland, 2018; pp. 438–447.

- El Hajjouji, I.; Mars, S.; Asrih, Z.; Mourabit, A.E. A novel FPGA implementation of Hough Transform for straight lane detection. Eng. Sci. Technol. Int. J. 2020, 23, 274–280.

- Samadzadegan, F.; Sarafraz, A.; Tabibi, M. Automatic Lane Detection in Image Sequences for Vision-based Navigation Purposes. ISPRS Image Eng. Vis. Metrol. 2006. Available online: https://www.semanticscholar.org/paper/Automatic-Lane-Detection-in-Image-Sequences-for-Samadzadegan-Sarafraz/55f0683190eb6cb21bf52c5f64b443c6437b38ea (accessed on 12 August 2021).

- Cheng, H.-Y.; Yu, C.-C.; Tseng, C.-C.; Fan, K.-C.; Hwang, J.-N.; Jeng, B.-S. Environment classification and hierarchical lane detection for structured and unstructured roads. IET Comput. Vis. 2010, 4, 37–49.

- Han, J.; Kim, D.; Lee, M.; Sunwoo, M. Road boundary detection and tracking for structured and unstructured roads using a 2D lidar sensor. Int. J. Automot. Technol. 2014, 15, 611–623.

- Le, M.C.; Phung, S.L.; Bouzerdoum, A. Lane Detection in Unstructured Environments for Autonomous Navigation Systems. In Asian Conference on Computer Vision; Cremers, D., Reid, I., Saito, H., Yang, M.H., Eds.; Springer: Cham, Switzerland, 2015.

- Wang, J.; Ma, H.; Zhang, X.; Liu, X. Detection of Lane Lines on Both Sides of Road Based on Monocular Camera. In Proceedings of the 2018 IEEE International Conference on Mechatronics and Automation (ICMA), Changchun, China, 5–8 August 2018; pp. 1134–1139.

- Yeniaydin, Y.; Schmidt, K.W. Sensor Fusion of a Camera and 2D LIDAR for Lane Detection. In Proceedings of the 2019 27th Signal Processing and Communications Applications Conference (SIU), Sivas, Turkey, 24–26 April 2019; pp. 1–4.

- Kemsaram, N.; Das, A.; Dubbelman, G. An Integrated Framework for Autonomous Driving: Object Detection, Lane Detection, and Free Space Detection. In Proceedings of the 2019 Third World Conference on Smart Trends in Systems Security and Sustainability (WorldS4), London, UK, 30–31 July 2019; pp. 260–265.

- Lee, C.; Moon, J.-H. Robust Lane Detection and Tracking for Real-Time Applications. IEEE Trans. Intell. Transp. Syst. 2018, 19, 1–6.

- Son, Y.; Lee, E.S.; Kum, D. Robust multi-lane detection and tracking using adaptive threshold and lane classification. Mach. Vis. Appl. 2018, 30, 111–124.

- Li, Q.; Zhou, J.; Li, B.; Guo, Y.; Xiao, J. Robust Lane-Detection Method for Low-Speed Environments. Sensors 2018, 18, 4274.

- Son, J.; Yoo, H.; Kim, S.; Sohn, K. Real-time illumination invariant lane detection for lane departure warning system. Expert Syst. Appl. 2014, 42.

- Chae, H.; Jeong, Y.; Kim, S.; Lee, H.; Park, J.; Yi, K. Design and Vehicle Implementation of Autonomous Lane Change Algorithm based on Probabilistic Prediction. In Proceedings of the 2018 21st International Conference on Intelligent Transportation Systems (ITSC), Maui, HI, USA, 4–7 November 2018; pp. 2845–2852. [Google Scholar] [CrossRef]

- Chen, P.R.; Lo, S.Y.; Hang, H.M.; Chan, S.W.; Lin, J.J. Efficient Road Lane Marking Detection with Deep Learning. In Proceedings of the 2018 IEEE 23rd International Conference on Digital Signal Processing (DSP), Shanghai, China, 19–21 November 2018; pp. 1–5. [Google Scholar]

- Lu, Z.; Xu, Y.; Shan, X. A lane detection method based on the ridge detector and regional G-RANSAC. Sensors 2019, 19, 4028. [Google Scholar] [CrossRef] [Green Version]

- Bian, Y.; Ding, J.; Hu, M.; Xu, Q.; Wang, J.; Li, K. An Advanced Lane-Keeping Assistance System with Switchable Assistance Modes. IEEE Trans. Intell. Transp. Syst. 2019, 21, 385–396. [Google Scholar] [CrossRef]

- Wang, G.; Hu, J.; Li, Z.; Li, L. Cooperative Lane Changing via Deep Reinforcement Learning. arXiv 2019, arXiv:1906.08662. [Google Scholar]

- Wang, P.; Chan, C.Y.; de La Fortelle, A. A reinforcement learning based approach for automated lane change maneuvers. In Proceedings of the 2018 IEEE Intelligent Vehicles Symposium (IV), Changshu, China, 26–30 June 2018; pp. 1379–1384. [Google Scholar]

- Suh, J.; Chae, H.; Yi, K. Stochastic model-predictive control for lane change decision of automated driving vehicles. IEEE Trans. Veh. Technol. 2018, 67, 4771–4782. [Google Scholar] [CrossRef]

- Gopalan, R.; Hong, T.; Shneier, M.; Chellappa, R. A learning approach towards detection and tracking of lane markings. IEEE Trans. Intell. Transp. Syst. 2012, 13, 1088–1098. [Google Scholar] [CrossRef]

- Mueter, M.; Zhao, K. Method for Lane Detection. US20170068862A1. 2015. Available online: https://patents.google.com/patent/US20170068862A1/en (accessed on 12 August 2021).

- Joshi, A. Method for Generating Accurate Lane Level Maps. US9384394B2. 2013. Available online: https://patents.google.com/patent/US9384394B2/en (accessed on 12 August 2021).

- Kawazoe, H. Lane Tracking Control System for Vehicle. US20020095246A1. 2001. Available online: https://patents.google.com/patent/US20020095246 (accessed on 12 August 2021).

- Iisaka, A. Lane Detection Sensor and Navigation System Employing the Same. EP1143398A3. 1996. Available online: https://patents.google.com/patent/EP1143398A3/en (accessed on 12 August 2021).

- Zhitong, H.; Yuefeng, Z. Vehicle Detecting Method Based on Multi-Target Tracking and Cascade Classifier Combination. CN105205500A. 2015. Available online: https://patents.google.com/patent/CN105205500A/en (accessed on 12 August 2021).

- Fujii, S. Steering Support Device. JP6589941B2. 2019. Available online: https://patentimages.storage.googleapis.com/0b/d0/ff/978af5acfb7b35/JP6589941B2.pdf (accessed on 12 August 2021).

- Gurghian, A.; Koduri, T.; Nariyambut Murali, V.; Carey, K. Lane Detection Systems and Methods. US10336326B2. 2016. Available online: https://patents.google.com/patent/US10336326B2/en (accessed on 12 August 2021).

- Zhang, W.; Wang, J.; Lybecker, K.; Piasecki, J.; Litkouhi, B.B.; Frakes, R. Enhanced Perspective View Generation in a Front Curb Viewing System. US9834143B2. 2014. Available online: https://patents.google.com/patent/US9834143B2/en (accessed on 12 August 2021).

- Vallespi-Gonzalez, C. Object Detection for an Autonomous Vehicle. US20170323179A1. 2016. Available online: https://patents.google.com/patent/US20170323179A1/en (accessed on 12 August 2021).

- CULane Dataset. Available online: https://xingangpan.github.io/projects/CULane.html (accessed on 13 April 2020).

- Caltech Pedestrian Detection Benchmark. Available online: http://www.vision.caltech.edu/Image_Datasets/CaltechPedestrians/ (accessed on 13 April 2020).

- Lee, E. Digital Image Media Lab. Diml.yonsei.ac.kr. 2020. Available online: http://diml.yonsei.ac.kr/dataset/ (accessed on 13 April 2020).

- Cvlibs.net. The KITTI Vision Benchmark Suite. Available online: http://www.cvlibs.net/datasets/kitti/ (accessed on 27 April 2020).

- Tusimple/Tusimple-Benchmark. Available online: https://github.com/TuSimple/tusimple-benchmark/tree/master/doc/velocity_estimation (accessed on 15 April 2020).

- Romera, E.; Luis, M.; Arroyo, L. Need Data for Driver Behavior Analysis? Presenting the Public UAH-Drive Set. In Proceedings of the 2016 IEEE 19th International Conference on Intelligent Transportation Systems, Rio de Janeiro, Brazil, 1–4 November 2016.

- BDD100K Dataset. Available online: https://mc.ai/bdd100k-dataset/ (accessed on 2 April 2020).

- Kumar, A.M.; Simon, P. Review of Lane Detection and Tracking Algorithms in Advanced Driver Assistance System. Int. J. Comput. Sci. Inf. Technol. 2015, 7, 65–78.

- Hamed, T.; Kremer, S. Computer and Information Security Handbook, 3rd ed.; Elsevier: Amsterdam, The Netherlands, 2017; p. 114.

- Precision and Recall. Available online: https://en.wikipedia.org/wiki/Precision_and_recall (accessed on 13 January 2021).

- Fiorentini, N.; Losa, M. Long-Term-Based Road Blackspot Screening Procedures by Machine Learning Algorithms. Sustainability 2020, 12, 5972.

- Wu, S.J.; Chiang, H.H.; Perng, J.W.; Chen, C.J.; Wu, B.F.; Lee, T.T. The heterogeneous systems integration design and implementation for lane keeping on a vehicle. IEEE Trans. Intell. Transp. Syst. 2008, 9, 246–263.

- Liu, H.; Li, X. Sharp Curve Lane Detection for Autonomous Driving. Comput. Sci. Eng. 2019, 21, 80–95.

- Han, J.; Yang, Z.; Hu, G.; Zhang, T.; Song, J. Accurate and robust vanishing point detection method in unstructured road scenes. J. Intell. Robot. Syst. 2019, 94, 143–158.

- Tominaga, K.; Takeuchi, Y.; Tomoki, U.; Kameoka, S.; Kitano, H.; Quirynen, R.; Berntorp, K.; Di Cairano, S. GNSS Based Lane Keeping Assist System via Model Predictive Control. 2019. Available online: https://doi.org/10.4271/2019-01-0685 (accessed on 9 September 2021).

- Chen, Z.; Liu, Q.; Lian, C. PointLaneNet: Efficient end-to-end CNNs for Accurate Real-Time Lane Detection. In Proceedings of the 2019 IEEE Intelligent Vehicles Symposium (IV), Paris, France, 9–12 June 2019; pp. 2563–2568.

- Feng, Y.; Rong-ben, W.; Rong-hui, Z. Research on Road Recognition Algorithm Based on Structure Environment for ITS. In Proceedings of the 2008 ISECS International Colloquium on Computing, Communication, Control, and Management, Guangzhou, China, 3–4 August 2008; pp. 84–87.

- Nieuwenhuijsen, J.; de Almeida Correia, G.H.; Milakis, D.; van Arem, B.; van Daalen, E. Towards a quantitative method to analyze the long-term innovation diffusion of automated vehicles technology using system dynamics. Transp. Res. Part C Emerg. Technol. 2018, 86, 300–327.

- Stasinopoulos, P.; Shiwakoti, N.; Beining, M. Use-stage life cycle greenhouse gas emissions of the transition to an autonomous vehicle fleet: A System Dynamics approach. J. Clean. Prod. 2021, 278, 123447.

| Type of Sensors | Relative Velocity | Measured Distance | Strengths | Weaknesses | Application | Opportunities | Threats | Perceived Energy | Recognizing Vehicle |
|---|---|---|---|---|---|---|---|---|---|
| LASER based sensors | Derivative of range | Time of flight | Reliable for automatic car parking and collision mitigation | Vulnerable to dirty lenses and reduced target reflectivity | Collision warning, assisted automatic parking | Gives warning for excessive load or strain | Failure due to inclement weather | Emitted laser light, 600–1000 nm wavelength | Resolved via spatial segmentation and motion |
| RADAR based sensors | Frequency | Time of flight | Suitable for collision mitigation and adaptive cruise control | Vulnerable to, and sometimes fails in, extreme weather conditions | Collision warning, assisted automatic parking | Better accuracy and requires no attention | Inappropriate for, and difficult to implement by, non-professionals | Emitted radio signal wave (millimeter wavelength) | Resolved via tracking |
| Vision based sensors | Derivative of range | Model parameter | Readily available and affordable in the automobile sector | Vulnerable to extreme weather conditions and sometimes fails to work at night | Collision warning, assisted automatic parking | Low cost, passive non-invasive sensors and low operating power | Less effective in bad weather, complex illumination and shadows | Ambient visible light | Resolved via motion and appearance |
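
The distance and relative-velocity columns above rest on standard relations: time-of-flight ranging (range = c·t/2, since the pulse travels out and back), relative velocity as the time derivative of range, and, for RADAR, relative velocity read from the Doppler frequency shift (v = f_d·λ/2). The Python sketch below illustrates these relations under idealized assumptions (no noise, a monostatic sensor, propagation at the speed of light in vacuum); all function names are hypothetical and do not come from any cited system.

```python
# Minimal sketch under idealized assumptions (no noise, monostatic sensor,
# propagation at the speed of light); all names are hypothetical.

SPEED_OF_LIGHT_M_S = 299_792_458.0


def range_from_time_of_flight(round_trip_s: float) -> float:
    """Range to the target from the round-trip time of flight (LASER or RADAR).

    The pulse travels to the target and back, so the one-way range is half
    of the total path length.
    """
    return SPEED_OF_LIGHT_M_S * round_trip_s / 2.0


def relative_velocity_from_ranges(ranges_m: list[float], dt_s: float) -> list[float]:
    """Relative velocity as the derivative of range, approximated by finite
    differences of consecutive range samples taken dt_s seconds apart.

    Negative values mean the range is shrinking (the target is closing in).
    """
    return [(r1 - r0) / dt_s for r0, r1 in zip(ranges_m, ranges_m[1:])]


def closing_speed_from_doppler(doppler_shift_hz: float, wavelength_m: float) -> float:
    """Radial closing speed from the RADAR Doppler shift, v = f_d * lambda / 2.

    Positive values mean the target is approaching.
    """
    return doppler_shift_hz * wavelength_m / 2.0


if __name__ == "__main__":
    print(range_from_time_of_flight(200e-9))  # roughly 30 m
    print(relative_velocity_from_ranges([30.0, 29.5, 29.0], 0.1))
    print(closing_speed_from_doppler(1000.0, 0.004))
```

Note the opposite sign conventions: the finite-difference velocity is negative for an approaching target, while the Doppler helper reports a positive closing speed.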

| Country | Patent No. | Assignee | Method | Key Finding | Approach | Inventor |
|---|---|---|---|---|---|---|
| USA | US20170068862A1 | Aptiv Technologies Ltd. | Camera-based vision driver assistance system. | State estimation and separate progression. | Feature based approach | Mirko Mueter, Kun Zhao |
| USA | US9384394B2 | Toyota Motor Corporation | Generates accurate lane estimates using coarse map information and LIDAR sensors. | Centre of the lane and multiple lanes. | Model based approach | Avdhut Joshi and Michael James |
| USA | US20020095246A1 | Nissan Motor Co., Ltd. | The controller detects lanes and controls the steering angle when the vehicle moves out of the desired track. | Measures the output signal. | Learning based approach | Hiroshi Kawazoe |
| Europe | EP1143398A3 | Panasonic Corporation | Proposed an extraction method using the Hough transform to detect lanes on the opposite side of the road. | Determines the maximum accumulator value. | Feature based approach | Atsushi Iisaka, Mamoru Kaneko and Nobuhiko Yasui |
| China | CN105205500A | Beijing University of Posts and Telecommunications | Uses computer graphics and vision-based technology with multi-target filtering and classifier training. | Combines multi-target tracking with a cascade classifier for high detection processing speed. | Model based approach | Zhitong, H. and Yuefeng, Z. |
| Japan | JP6589941B2 | Not available | Developed a steering assist device for lane detection and tracking under periphery monitoring. | Identifies the relative position of the host vehicle and its relation to the lane. | Model based approach | Shota Fujii |
| USA | US10336326B2 | Ford Global Technologies LLC | Proposed a deep learning-based lane detection method using a front-facing camera. | Extracts features of lane boundaries with a front-mounted camera. | Feature based approach | Alexandru Mihai Gurghian, Tejaswi Koduri, Vidya Nariyambut Murali, Kyle J. Carey |
| USA | US9834143B2 | GM Global Technology Operations LLC | Produces an improved perspective view using a new camera imaging surface model and other distortion-correction techniques. | Improves the front perspective view of the vehicle for lane detection and tracking. | Feature based approach | Wende Zhang, Jinsong Wang, Kent S. Lybecker, Jeffrey S. Piasecki, Bakhtiar Brian Litkouhi, Ryan M. Frakes |
| USA | US20170323179A1 | Uber Technologies Inc. | Uses sensor-fusion data processing for surrounding object detection and lane detection. | Generates 3D environmental data through sensor fusion to guide the autonomous vehicle. | Learning based approach | Carlos Vallespi-Gonzalez |
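
The EP1143398A3 entry describes lane extraction with the Hough transform by determining the maximum accumulator value. The Python sketch below shows that generic voting idea, not the patented procedure: it assumes the lane-marking pixels are already available as (x, y) edge points and uses the normal form x·cos θ + y·sin θ = ρ with 1° angle bins and integer ρ bins. `hough_strongest_line` is a hypothetical name.

```python
import math
from collections import Counter


def hough_strongest_line(points, theta_steps=180):
    """Return (rho, theta_deg, votes) of the strongest straight line.

    Each edge point (x, y) votes once per angle bin theta in [0, 180) degrees
    for the line x*cos(theta) + y*sin(theta) = rho, with rho rounded to the
    nearest integer pixel. The accumulator cell with the most votes wins.
    """
    accumulator = Counter()
    for x, y in points:
        for step in range(theta_steps):
            theta = math.pi * step / theta_steps
            rho = round(x * math.cos(theta) + y * math.sin(theta))
            accumulator[(rho, step)] += 1
    if not accumulator:
        raise ValueError("no edge points supplied")
    (rho, step), votes = accumulator.most_common(1)[0]
    return rho, 180.0 * step / theta_steps, votes


if __name__ == "__main__":
    # Hypothetical edge pixels of a vertical lane marking at column x = 5.
    marking = [(5, y) for y in range(100)]
    print(hough_strongest_line(marking))
```

A sparse `Counter` keeps the sketch short; a production implementation would typically use a dense 2D array over the (ρ, θ) grid and search for several local maxima, one per lane boundary.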

| Dataset | Features | Strength | Weakness |
|---|---|---|---|
| CULane [63] | 55 h of video; 133,235 extracted frames: 88,880 training, 9675 validation and 34,680 test frames. | Occluded or unseen lane markings are annotated manually with cubic splines. | Only four lane markings are annotated; others are not. |
| Caltech [64] | 10 h of 640 × 480 video of regular traffic in an urban environment; 250,000 frames with 350,000 bounding boxes, annotated with occlusion and temporal information. | The entire dataset is annotated; training data (set 00–set 05) and testing data (set 06–set 10) are provided, each set 1 GB. | Not applicable to all road geometries and weather conditions. |
| Custom data (collected with a test vehicle) | Not applicable | Available according to the requirements | Time-consuming and highly expensive |
| DIML [65] | Multimodal dataset: Sony Cyber-shot DSC-RX100 camera, 5 different photometric variation pairs. RGB-D dataset: more than 200 indoor/outdoor scenes, with RGB-D frames obtained from Kinect v2 and ZED stereo cameras. Lane dataset: 470 video sequences of downtown and urban roads. Emotion recognition dataset (CAER): more than 13,000 videos and 13,000 annotated videos. CoVieW18 dataset: untrimmed video samples, 90,000 YouTube video URLs. | Different scenarios are covered, such as traffic jams, pedestrians and obstacles. | Data for different weather conditions and for lanes without markings are missing. |
| KITTI [66] | Stereo, optical flow, visual odometry and other benchmarks; an object detection dataset with monocular images and bounding boxes: 7481 training images and 7518 test images. | Evaluates orientation estimation and bird's eye view detection; applicable to real-time object detection and 3D tracking. Evaluation metrics are provided. | Only 15 cars and 30 pedestrians were considered while capturing images. Applicable to rural and highway roads. |
| TuSimple [67] | Training: 3222 annotated vehicles at 20 frames per second in 1074 clips from 25 videos. Testing: 269 video clips. Supplementary data: 5066 images with vehicle position and velocity marked by range sensors. | Lane detection challenge, velocity estimation challenge and ground truths are provided. | A calibration file for lane detection is not provided. |
| UAH [68] | Raw real-time data: Raw-GPS, RAW-Accelerometers. Processed data as continuous variables: pro lane detection, pro vehicle detection and pro OpenStreetMap data. Processed data as events: events list lane changes and events inertial. Semantic information: semantic final and semantic online. | More than 500 min of naturalistic driving with processed semantic information are provided. | Limited accessibility to the research community |
| BDD100K [69] | 100,000 videos totalling more than 1000 h; road object detection, drivable area, segmentation and full-frame semantic segmentation. | IMU data, timestamps and localization are included in the dataset. | Unstructured roads are not covered. |
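
CULane annotates occluded or unseen lane markings with cubic splines. To illustrate how a spline can bridge an occluded gap, the Python sketch below fits a natural cubic spline (zero second derivative at both ends, one common choice; the dataset's exact spline settings are not stated here) through visible lane points, treating the column x as a function of the image row y. The function name and any sample coordinates are hypothetical.

```python
import bisect


def natural_cubic_spline(ys, xs):
    """Return a function x(y) that interpolates the knots (ys[i], xs[i]).

    ys: strictly increasing image rows of visible lane points.
    xs: lane-marking column at each row.
    Natural boundary conditions: second derivative is zero at both ends.
    Outside [ys[0], ys[-1]] the end segments' cubics are used, which is an
    extrapolation and should be treated with caution.
    """
    n = len(ys) - 1  # number of spline segments
    if n < 1 or len(xs) != len(ys):
        raise ValueError("need at least two knots and equal-length inputs")
    h = [ys[i + 1] - ys[i] for i in range(n)]
    if any(step <= 0 for step in h):
        raise ValueError("ys must be strictly increasing")

    # Second derivatives m[0..n]; the natural spline fixes m[0] = m[n] = 0.
    m = [0.0] * (n + 1)
    if n > 1:
        # Tridiagonal system for the interior m[1..n-1]:
        # h[i-1]*m[i-1] + 2*(h[i-1]+h[i])*m[i] + h[i]*m[i+1] = rhs[i]
        sub = [h[i - 1] for i in range(1, n)]
        diag = [2.0 * (h[i - 1] + h[i]) for i in range(1, n)]
        sup = [h[i] for i in range(1, n)]
        rhs = [6.0 * ((xs[i + 1] - xs[i]) / h[i] - (xs[i] - xs[i - 1]) / h[i - 1])
               for i in range(1, n)]
        # Thomas algorithm: forward elimination ...
        for k in range(1, n - 1):
            w = sub[k] / diag[k - 1]
            diag[k] -= w * sup[k - 1]
            rhs[k] -= w * rhs[k - 1]
        # ... then back substitution.
        interior = [0.0] * (n - 1)
        interior[-1] = rhs[-1] / diag[-1]
        for k in range(n - 3, -1, -1):
            interior[k] = (rhs[k] - sup[k] * interior[k + 1]) / diag[k]
        m[1:n] = interior

    def evaluate(y):
        # Segment index i such that ys[i] <= y < ys[i+1], clamped to the ends.
        i = min(max(bisect.bisect_right(ys, y) - 1, 0), n - 1)
        left, right, step = ys[i + 1] - y, y - ys[i], h[i]
        return (m[i] * left ** 3 / (6.0 * step)
                + m[i + 1] * right ** 3 / (6.0 * step)
                + (xs[i] / step - m[i] * step / 6.0) * left
                + (xs[i + 1] / step - m[i + 1] * step / 6.0) * right)

    return evaluate
```

For example, with hypothetical visible points at rows 300, 340, 420 and 460, `natural_cubic_spline([300, 340, 420, 460], [512.0, 498.0, 476.0, 468.0])(380)` estimates the hidden marking's column at row 380. Parameterizing by row keeps the spline single-valued for near-vertical lane markings.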

| Possibility | Ground-truth condition | Algorithm output |
|---|---|---|
| True positive | Ground truth exists | When the algorithm detects lane markers. |
| False positive | No ground truth exists | When the algorithm detects lane markers. |
| False negative | Ground truth exists in the image | When the algorithm does not detect lane markers. |
| True negative | No ground truth exists in the image | When the algorithm does not detect anything. |
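
The four outcomes above can be tallied mechanically. The Python sketch below assumes a simplified frame-level evaluation in which each frame is reduced to two booleans (is a ground-truth lane marker present, and did the algorithm report one); practical benchmarks usually match individual markers using a distance or overlap threshold, which is not modeled here. The function names are hypothetical.

```python
from collections import Counter


def classify_frame(ground_truth_present: bool, lane_detected: bool) -> str:
    """Return "TP", "FP", "FN" or "TN" for one frame, following the table."""
    if ground_truth_present:
        return "TP" if lane_detected else "FN"
    return "FP" if lane_detected else "TN"


def tally_outcomes(frames) -> dict[str, int]:
    """Count outcomes over an iterable of (ground_truth_present, lane_detected) pairs."""
    counts = Counter({"TP": 0, "FP": 0, "FN": 0, "TN": 0})
    for ground_truth_present, lane_detected in frames:
        counts[classify_frame(ground_truth_present, lane_detected)] += 1
    return dict(counts)
```

Pre-seeding the counter with zeros guarantees that all four keys are present even when an outcome never occurs.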

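| Sr. no | Metrics | Formula * |
|---|---|---|
| 1. | Accuracy (A) | $A = \frac{TP + TN}{TP + TN + FP + FN}$ |
| 2. | Detection rate (DR) | $DR = \frac{TP}{TP + FN}$ |
| 3. | False positive rate (FPR) | $FPR = \frac{FP}{FP + TN}$ |
| 4. | False negative rate (FNR) | $FNR = \frac{FN}{TP + FN}$ |
| 5. | True negative rate (TNR) | $TNR = \frac{TN}{TN + FP}$ |
| 6. | Precision (P) | $P = \frac{TP}{TP + FP}$ |
| 7. | F-measure (F) | $F = \frac{2 \cdot P \cdot DR}{P + DR}$ |
| 8. | Error rate (E) | $E = \frac{FP + FN}{TP + TN + FP + FN}$ |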
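
Given the four counts TP, FP, FN and TN, every metric listed in the table follows directly from its standard definition (for example, detection rate = TP/(TP + FN)). The Python sketch below computes them; returning 0.0 for a zero denominator is an assumption made here for robustness and is not specified by the source, and `lane_metrics` is a hypothetical name.

```python
def _ratio(numerator: float, denominator: float) -> float:
    # Assumption: report 0.0 when a metric is undefined (zero denominator).
    return numerator / denominator if denominator else 0.0


def lane_metrics(tp: int, fp: int, fn: int, tn: int) -> dict[str, float]:
    """Evaluation metrics from the confusion counts TP, FP, FN and TN."""
    total = tp + fp + fn + tn
    detection_rate = _ratio(tp, tp + fn)  # also called recall or sensitivity
    precision = _ratio(tp, tp + fp)
    return {
        "accuracy": _ratio(tp + tn, total),
        "detection_rate": detection_rate,
        "false_positive_rate": _ratio(fp, fp + tn),
        "false_negative_rate": _ratio(fn, tp + fn),
        "true_negative_rate": _ratio(tn, tn + fp),
        "precision": precision,
        # Harmonic mean of precision and detection rate.
        "f_measure": _ratio(2 * precision * detection_rate, precision + detection_rate),
        "error_rate": _ratio(fp + fn, total),
    }
```

For example, with TP = 80, FP = 10, FN = 20 and TN = 90, accuracy is 0.85 and the error rate is 0.15; for any non-empty evaluation the two sum to 1, and FPR + TNR = 1 likewise.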

| Methods | Strength | Weakness | Opportunities | Threats |
|---|---|---|---|---|
| Feature based approach | Feature extraction is used to determine false lane markings | Time-consuming | Better performance in optimization | Less effective under complex illumination and shadows |
| Learning based approach | Easy and reliable method | Lane mismatching | Computationally more efficient | Performance drops in inclement weather |
| Model based approach | Camera quality improves system performance | Expensive and time-consuming | Robust performance for lane detection model | Sensor fusion systems are difficult to mount for complex geometries |

| Sources | Road geometry: straight | Road geometry: clothoid | Road geometry: hyperbola | Pavement marking: structured | Pavement marking: unstructured | Weather condition: day | Weather condition: night | Weather condition: rain | Speed |
|---|---|---|---|---|---|---|---|---|---|
| [26] Borkar et al. (2009) | √ | -- | √ | √ | -- | -- | -- | -- | -- |
| [28] Lu et al. (2002) | √ | -- | -- | √ | -- | √ | -- | -- | -- |
| [29] Zhang & Shi (2009) | √ | -- | -- | √ | -- | -- | √ | -- | -- |
| [32] Hong et al. (2018) | √ | -- | -- | √ | -- | √ | -- | -- | -- |
| [33] Park, H. (2018) | √ | -- | -- | √ | -- | √ | -- | -- | Low (40 km/h) & high (80 km/h) |
| [34] El Hajjouji et al. (2020) | √ | -- | -- | √ | -- | √ | √ | -- | 120 km/h |
| [35] Samadzadegan et al. (2006) | -- | -- | √ | √ | -- | √ | √ | -- | -- |
| [36] Cheng et al. (2010) | √ | √ | -- | √ | -- | √ | -- | -- | -- |
| [40] Yeniaydin et al. (2019) | √ | -- | √ | -- | √ | √ | -- | -- | -- |
| [41] Kemsaram et al. (2019) | √ | -- | √ | -- | √ | -- | -- | -- | -- |
| [43] Son et al. (2019) | √ | -- | √ | √ | -- | √ | -- | -- | |
| [47] Chen et al. (2018) | √ | √ | -- | √ | -- | √ | -- | -- | -- |
| [52] Suh et al. (2018) | √ | -- | √ | √ | -- | √ | -- | -- | 60–80 km/h |
| [53] Gopalan et al. (2012) | √ | -- | √ | √ | √ | √ | -- | -- | -- |
| [74] Wu et al. (2008) | √ | -- | -- | √ | -- | √ | -- | -- | 40 km/h |
| [75] Liu & Li (2019) | √ | -- | √ | √ | -- | √ | √ | √ | -- |
| [76] Han et al. (2019) | √ | -- | -- | √ | √ | √ | -- | -- | 30–50 km/h |
| [77] Tominaga et al. (2019) | -- | -- | -- | √ | -- | √ | -- | -- | 80 km/h |
| [78] Chen, Z. et al. (2019) | √ | -- | √ | √ | -- | -- | -- | -- | -- |
| [79] Feng et al. (2008) | √ | -- | √ | √ | -- | √ | √ | √ | 120 km/h |
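
The road-geometry columns refer to the lane shape each method models. For orientation, the Python sketch below writes out common textbook forms of the three shapes: a straight line; the third-order approximation of a clothoid, whose curvature varies linearly with arc length (κ(s) = c0 + c1·s), valid for small heading angles; and the hyperbola model in image coordinates, u = k/(v - h) + b·(v - h) + c with h the horizon row, which follows from projecting a constant-curvature lane on a flat road through a pinhole camera. These forms and all parameter names are assumptions for illustration; the cited sources may parameterize their models differently.

```python
def straight_lane(y: float, x0: float, slope: float) -> float:
    """Straight lane: lateral position x = x0 + slope * y."""
    return x0 + slope * y


def clothoid_lane(s: float, offset: float, heading: float, c0: float, c1: float) -> float:
    """Lateral offset at arc length s for a clothoid lane with curvature
    kappa(s) = c0 + c1 * s, using the third-order approximation
    offset + heading*s + c0*s**2/2 + c1*s**3/6 (heading in radians, small-angle).
    """
    return offset + heading * s + c0 * s ** 2 / 2.0 + c1 * s ** 3 / 6.0


def hyperbola_lane(v: float, k: float, b: float, c: float, horizon_v: float) -> float:
    """Image column u of the lane at image row v for the hyperbola model
    u = k / (v - h) + b * (v - h) + c, where h is the horizon row.
    Only rows below the horizon (v > h) are valid.
    """
    dv = v - horizon_v
    if dv <= 0:
        raise ValueError("row v must lie below the horizon row")
    return k / dv + b * dv + c
```

With k = 0 the hyperbola model degenerates to a straight line in the image, and with c0 = c1 = 0 the clothoid reduces to a straight lane, which is why straight-road methods can be viewed as special cases of the curved models.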

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Waykole, S.; Shiwakoti, N.; Stasinopoulos, P. Review on Lane Detection and Tracking Algorithms of Advanced Driver Assistance System. Sustainability 2021, 13, 11417. https://doi.org/10.3390/su132011417
