Reviewer Experience vs. Expertise: Which Matters More for Good Course Reviews in Online Learning?

Abstract

1. Introduction

2. Literature Review

2.1. Online Learning and Learning Feedback

2.2. The Roles of Experience vs. Expertise in Task Performance

2.3. Online Customer Reviews

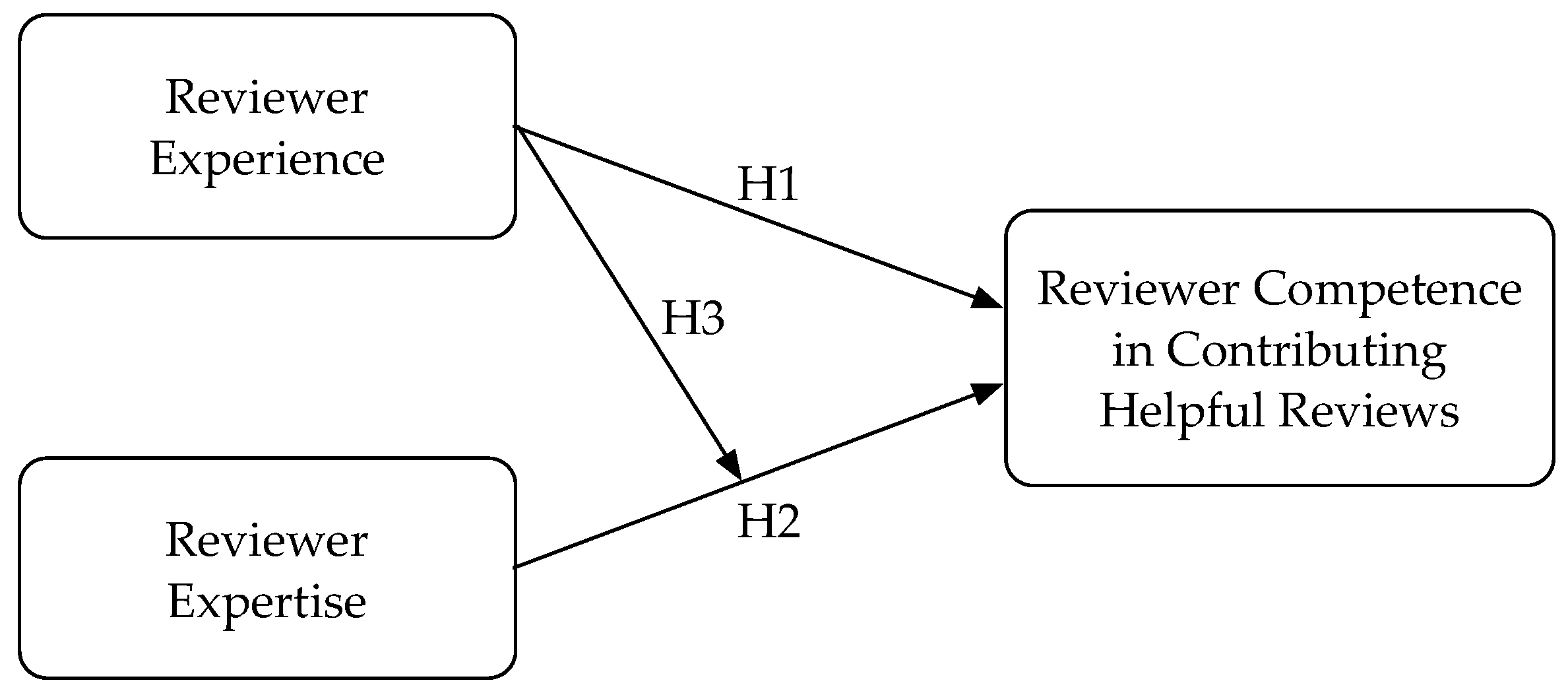

3. Hypotheses

4. Methodology

4.1. Data

- All course reviews that have received at least one helpfulness vote [53].

- First course review of each reviewer, because it does not allow for a reviewer expertise measurement.

- New course reviews that were posted within 60 days of data retrieval; this is to ensure a good measure of review helpfulness [64] (i.e., it takes time for an online course review to accrue helpfulness votes).
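
One plausible reading of these criteria, consistent with the sample minima reported in the descriptive statistics (RevHelp of at least 1, Experience of at least 1, RevAge of at least 60 days), is that the analysis sample keeps reviews with at least one helpfulness vote and drops each reviewer's first review and any review younger than 60 days at retrieval. The sketch below encodes that reading only; the `Review` record, its field names, and the `in_sample` helper are hypothetical and are not the authors' data schema or code.

```python
from dataclasses import dataclass
from datetime import date

MIN_AGE_DAYS = 60  # reviews posted within 60 days of retrieval are dropped


@dataclass
class Review:
    # Hypothetical record layout, for illustration only.
    reviewer_id: str
    posted_on: date
    helpful_votes: int   # helpfulness votes received by this review
    prior_reviews: int   # reviews the same reviewer posted before this one


def in_sample(review: Review, retrieved_on: date) -> bool:
    """Return True if the review passes all three sample criteria (assumed reading)."""
    age_days = (retrieved_on - review.posted_on).days
    has_votes = review.helpful_votes >= 1          # at least one helpfulness vote
    not_first_review = review.prior_reviews >= 1   # expertise needs earlier reviews
    old_enough = age_days >= MIN_AGE_DAYS          # time to accrue votes
    return has_votes and not_first_review and old_enough
```

A call such as `in_sample(review, retrieved_on=date(2021, 6, 1))` would then be applied to every scraped review before any variables are computed.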

4.2. Variables

4.3. Empirical Model

$$\ldots + \gamma\alpha_i + \delta\varphi_i + \theta\omega_i + \varepsilon_i$$

5. Descriptive Data Analysis

6. Results and Analysis

6.1. The Effects of Reviewer Experience and Reviewer Expertise on Review Helpfulness

6.2. Robustness Checks

7. Discussion

7.1. Theoretical Implications

7.2. Practical Implications

7.3. Limitations and Further Research

8. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. Almatrafi, O.; Johri, A.; Rangwala, H. Needle in a haystack: Identifying learner posts that require urgent response in MOOC discussion forums. Comput. Educ. 2018, 118, 1–9.

2. Mandernach, B.J.; Dailey-Hebert, A.; Donnelli-Sallee, E. Frequency and time investment of instructors’ participation in threaded discussions in the online classroom. J. Interact. Online Learn. 2007, 6, 1–9.

3. Cha, H.; So, H.J. Integration of formal, non-formal and informal learning through MOOCs. In Radical Solutions and Open Science: An Open Approach to Boost Higher Education; Burgos, D., Ed.; Springer: Singapore, 2020; pp. 135–158.

4. Gutiérrez-Santiuste, E.; Gámiz-Sánchez, V.M.; Gutiérrez-Pérez, J. MOOC & B-learning: Students’ Barriers and Satisfaction in Formal and Non-formal Learning Environments. J. Interact. Online Learn. 2015, 13, 88–111.

5. Harvey, L.; Green, D. Defining quality. Assess. Eval. High. Educ. 1993, 18, 9–34.

6. Geng, S.; Niu, B.; Feng, Y.; Huang, M. Understanding the focal points and sentiment of learners in MOOC reviews: A machine learning and SC-LIWC-based approach. Br. J. Educ. Technol. 2020, 51, 1785–1803.

7. Fan, J.; Jiang, Y.; Liu, Y.; Zhou, Y. Interpretable MOOC recommendation: A multi-attention network for personalized learning behavior analysis. Internet Res. 2021, in press.

8. Li, L.; Johnson, J.; Aarhus, W.; Shah, D. Key factors in MOOC pedagogy based on NLP sentiment analysis of learner reviews: What makes a hit. Comput. Educ. 2021, 176, 104354.

9. Cunningham-Nelson, S.; Laundon, M.; Cathcart, A. Beyond satisfaction scores: Visualising student comments for whole-of-course evaluation. Assess. Eval. High. Educ. 2021, 46, 685–700.

10. Decius, J.; Schaper, N.; Seifert, A. Informal workplace learning: Development and validation of a measure. Hum. Resour. Dev. Q. 2019, 30, 495–535.

11. Morris, R.; Perry, T.; Wardle, L. Formative assessment and feedback for learning in higher education: A systematic review. Rev. Educ. 2021, 9, e3292.

12. Deng, W.; Yi, M.; Lu, Y. Vote or not? How various information cues affect helpfulness voting of online reviews. Online Inf. Rev. 2020, 44, 787–803.

13. Yin, D.; Mitra, S.; Zhang, H. When do consumers value positive vs. negative reviews? An empirical investigation of confirmation bias in online word of mouth. Inf. Syst. Res. 2016, 27, 131–144.

14. Wu, P.F. In search of negativity bias: An empirical study of perceived helpfulness of online reviews. Psychol. Mark. 2013, 30, 971–984.

15. Jacoby, J.; Troutman, T.; Kuss, A.; Mazursky, D. Experience and expertise in complex decision making. ACR North Am. Adv. 1986, 13, 469–472.

16. Braunsberger, K.; Munch, J.M. Source expertise versus experience effects in hospital advertising. J. Serv. Mark. 1998, 12, 23–38.

17. Bonner, S.E.; Lewis, B.L. Determinants of auditor expertise. J. Account. Res. 1990, 28, 1–20.

18. Banerjee, S.; Bhattacharyya, S.; Bose, I. Whose online reviews to trust? Understanding reviewer trustworthiness and its impact on business. Decis. Support Syst. 2017, 96, 17–26.

19. Huang, A.H.; Chen, K.; Yen, D.C.; Tran, T.P. A study of factors that contribute to online review helpfulness. Comput. Hum. Behav. 2015, 48, 17–27.

20. Han, M. Examining the Effect of Reviewer Expertise and Personality on Reviewer Satisfaction: An Empirical Study of TripAdvisor. Comput. Hum. Behav. 2021, 114, 106567.

21. Werquin, P. Recognising Non-Formal and Informal Learning: Outcomes, Policies and Practices; OECD Publishing: Berlin, Germany, 2010.

22. O’Riordan, T.; Millard, D.E.; Schulz, J. Is critical thinking happening? Testing content analysis schemes applied to MOOC discussion forums. Comput. Appl. Eng. Educ. 2021, 29, 690–709.

23. Faulconer, E.; Griffith, J.C.; Frank, H. If at first you do not succeed: Student behavior when provided feedforward with multiple trials for online summative assessments. Teach. High. Educ. 2021, 26, 586–601.

24. Latifi, S.; Gierl, M. Automated scoring of junior and senior high essays using Coh-Metrix features: Implications for large-scale language testing. Lang. Test. 2021, 38, 62–85.

25. Noroozi, O.; Hatami, J.; Latifi, S.; Fardanesh, H. The effects of argumentation training in online peer feedback environment on process and outcomes of learning. J. Educ. Sci. 2019, 26, 71–88.

26. Alturkistani, A.; Lam, C.; Foley, K.; Stenfors, T.; Blum, E.R.; Van Velthoven, M.H.; Meinert, E. Massive open online course evaluation methods: Systematic review. J. Med. Internet Res. 2020, 22, e13851.

27. Glaser, R.; Chi, M.T.; Farr, M.J. (Eds.) The Nature of Expertise; Lawrence Erlbaum Associates: Mahwah, NJ, USA, 1988.

28. Torraco, R.J.; Swanson, R.A. The Strategic Roles of Human Resource Development. Hum. Resour. Plan. 1995, 18, 10–21.

29. Herling, R.W. Operational definitions of expertise and competence. Adv. Dev. Hum. Resour. 2000, 2, 8–21.

30. Honoré-Chedozeau, C.; Desmas, M.; Ballester, J.; Parr, W.V.; Chollet, S. Representation of wine and beer: Influence of expertise. Curr. Opin. Food Sci. 2019, 27, 104–114.

31. Leone, L.; Desimoni, M.; Chirumbolo, A. Interest and expertise moderate the relationship between right-wing attitudes, ideological self-placement and voting. Eur. J. Personal. 2014, 28, 2–13.

32. Reuber, A.R.; Fischer, E.M. Entrepreneurs’ experience, expertise, and the performance of technology-based firms. IEEE Trans. Eng. Manag. 1994, 41, 365–374.

33. Reuber, R. Management experience and management expertise. Decis. Support Syst. 1997, 21, 51–60.

34. Faraj, S.; Sproull, L. Coordinating expertise in software development teams. Manag. Sci. 2000, 46, 1554–1568.

35. Martínez-Ferrero, J.; García-Sánchez, I.M.; Ruiz-Barbadillo, E. The quality of sustainability assurance reports: The expertise and experience of assurance providers as determinants. Bus. Strategy Environ. 2018, 27, 1181–1196.

36. Craciun, G.; Zhou, W.; Shan, Z. Discrete emotions effects on electronic word-of-mouth helpfulness: The moderating role of reviewer gender and contextual emotional tone. Decis. Support Syst. 2020, 130, 113226.

37. Shin, S.; Du, Q.; Ma, Y.; Fan, W.; Xiang, Z. Moderating Effects of Rating on Text and Helpfulness in Online Hotel Reviews: An Analytical Approach. J. Hosp. Mark. Manag. 2021, 30, 159–177.

38. Li, H.; Qi, R.; Liu, H.; Meng, F.; Zhang, Z. Can time soften your opinion? The influence of consumer experience: Valence and review device type on restaurant evaluation. Int. J. Hosp. Manag. 2021, 92, 102729.

39. Bigné, E.; Zanfardini, M.; Andreu, L. How online reviews of destination responsibility influence tourists’ evaluations: An exploratory study of mountain tourism. J. Sustain. Tour. 2020, 28, 686–704.

40. Deng, T. Investigating the effects of textual reviews from consumers and critics on movie sales. Online Inf. Rev. 2020, 44, 1245–1265.

41. Ismagilova, E.; Dwivedi, Y.K.; Slade, E.; Williams, M.D. Electronic Word of Mouth (eWoM) in The Marketing Context; Springer: New York, NY, USA, 2017.

42. Stats Proving the Value of Customer Reviews, According to ReviewTrackers. Available online: https://www.reviewtrackers.com/reports/customer-reviews-stats (accessed on 2 November 2021).

43. Wang, F.; Karimi, S. This product works well (for me): The impact of first-person singular pronouns on online review helpfulness. J. Bus. Res. 2019, 104, 283–294.

44. Berger, J.; Milkman, K.L. What makes online content viral? J. Mark. Res. 2012, 49, 192–205.

45. Mudambi, S.M.; Schuff, D. What makes a helpful online review? A study of customer reviews on Amazon.com. MIS Q. 2010, 34, 185–200.

46. Salehan, M.; Kim, D.J. Predicting the performance of online consumer reviews: A sentiment mining approach to big data analytics. Decis. Support Syst. 2016, 81, 30–40.

47. Craciun, G.; Moore, K. Credibility of negative online product reviews: Reviewer gender, reputation and emotion effects. Comput. Hum. Behav. 2019, 97, 104–115.

48. Forman, C.; Ghose, A.; Wiesenfeld, B. Examining the relationship between reviews and sales: The role of reviewer identity disclosure in electronic markets. Inf. Syst. Res. 2008, 19, 291–313.

49. Karimi, S.; Wang, F. Online review helpfulness: Impact of reviewer profile image. Decis. Support Syst. 2017, 96, 39–48.

50. Naujoks, A.; Benkenstein, M. Who is behind the message? The power of expert reviews on eWoM platforms. Electron. Commer. Res. Appl. 2020, 44, 101015.

51. Wu, X.; Jin, L.; Xu, Q. Expertise makes perfect: How the variance of a reviewer’s historical ratings influences the persuasiveness of online reviews. J. Retail. 2021, 97, 238–250.

52. Mathwick, C.; Mosteller, J. Online reviewer engagement: A typology based on reviewer motivations. J. Serv. Res. 2017, 20, 204–218.

53. Choi, H.S.; Leon, S. An empirical investigation of online review helpfulness: A big data perspective. Decis. Support Syst. 2020, 139, 113403.

54. Lee, M.; Jeong, M.; Lee, J. Roles of negative emotions in customers’ perceived helpfulness of hotel reviews on a user-generated review website: A text mining approach. Int. J. Contemp. Hosp. Manag. 2017, 29, 762–783.

55. Baek, H.; Ahn, J.H.; Choi, Y. Helpfulness of online consumer reviews: Readers’ objectives and review cues. Int. J. Electron. Commer. 2012, 17, 99–126.

56. Zhu, L.; Yin, G.; He, W. Is this opinion leader’s review useful? Peripheral cues for online review helpfulness. J. Electron. Commer. Res. 2014, 15, 267–280.

57. Racherla, P.; Friske, W. Perceived ‘usefulness’ of online consumer reviews: An exploratory investigation across three services categories. Electron. Commer. Res. Appl. 2012, 11, 548–559.

58. Schmidt, F.L.; Hunter, J. General mental ability in the world of work: Occupational attainment and job performance. J. Personal. Soc. Psychol. 2004, 86, 162–173.

59. Boshuizen, H.P.; Gruber, H.; Strasser, J. Knowledge restructuring through case processing: The key to generalise expertise development theory across domains? Educ. Res. Rev. 2020, 29, 100310.

60. Corrigan, J.A.; Slomp, D.H. Articulating a sociocognitive construct of writing expertise for the digital age. J. Writ. Anal. 2021, 5, 142–195.

61. Schriver, K. What We Know about Expertise in Professional Communication. In Past, Present, and Future Contributions of Cognitive Writing Research to Cognitive Psychology; Berninger, V.W., Ed.; Psychology Press: New York, NY, USA, 2012; pp. 275–312.

62. Kellogg, R.T. Professional writing expertise. In The Cambridge Handbook of Expertise and Expert Performance; Ericsson, K.A., Charness, N., Feltovich, P.J., Hoffman, R.R., Eds.; Cambridge University Press: Cambridge, UK, 2006; pp. 389–402.

63. Earley, P.C.; Lee, C.; Hanson, L.A. Joint moderating effects of job experience and task component complexity: Relations among goal setting, task strategies, and performance. J. Organ. Behav. 1990, 11, 3–15.

64. Kuan, K.K.; Hui, K.L.; Prasarnphanich, P.; Lai, H.Y. What makes a review voted? An empirical investigation of review voting in online review systems. J. Assoc. Inf. Syst. 2015, 16, 48–71.

65. Fink, L.; Rosenfeld, L.; Ravid, G. Longer online reviews are not necessarily better. Int. J. Inf. Manag. 2018, 39, 30–37.

66. Guo, D.; Zhao, Y.; Zhang, L.; Wen, X.; Yin, C. Conformity feedback in an online review helpfulness evaluation task leads to less negative feedback-related negativity amplitudes and more positive P300 amplitudes. J. Neurosci. Psychol. Econ. 2019, 12, 73–87.

67. Liang, S.; Schuckert, M.; Law, R. How to improve the stated helpfulness of hotel reviews? A multilevel approach. Int. J. Contemp. Hosp. Manag. 2019, 31, 953–977.

68. Hausman, J.; Hall, B.H.; Griliches, Z. Econometric models for count data with an application to the patents-R&D relationship. Econometrica 1984, 52, 909–938.

| Effects | Reviewer Experience | Reviewer Expertise | Interaction of the Two |
|---|---|---|---|
| Review quality effects (experience and expertise as reviewer competence dimensions) | Hypothesized positive (This research) | Hypothesized positive (This research) | Hypothesized positive (This research) |
| Source cue effects (affecting readers’ perception of review helpfulness) | Mixed effects [18,19,57] | Positive effect [20,50] | None |

| Variables | Definitions |
|---|---|
| Dependent Variable | |
| RevHelp | Helpfulness of a course review to review readers. It is operationalized by the number of helpfulness votes that a course review has received. |
| Independent Variables | |
| Experience | Reviewer experience in writing course reviews. It is operationalized by the total number of course reviews that a reviewer has posted before the one under study. |
| Expertise | Reviewer expertise in writing helpful reviews. It is operationalized by the average number of helpfulness votes per review received by a reviewer on reviews posted before the one under study. |
| Control Variables | |
| RevExtremity | A dummy variable indicating the extremity of a course review. The value of the variable takes 1 for a review rating of 1 or 5, and 0 otherwise. |
| RevPositivity | A dummy variable indicating the positivity of a course review. The value of the variable takes 1 for a review rating of 4 or 5, and 0 otherwise. |
| RevLength | Length of a course review. It is operationalized as the number of Chinese characters in the textual comment of a course review. |
| RevInconsist | Review score inconsistency. It refers to the extent to which a review rating differs from the average rating of a course. It is operationalized by the absolute value of the difference between a review rating and the average rating of all previous reviews of a course. |
| RevAge | Review age. It indicates how long ago a course review was posted. It is operationalized by the number of days between the posting date of a review and the data retrieval date. |
| RevHour | Indicates the hour of the day at which a course review was posted. |
| RevDoM | Indicates the day of the month on which a course review was posted. |
| RevDoW | Indicates the day of the week on which a course review was posted. |
| RevMonth | Indicates the month in which a course review was posted. |
| RevYear | Indicates the year in which a course review was posted. |
| CrsDiversity | Indicates the diversity of course categories for which a reviewer has posted reviews. It is operationalized by the number of course categories for which a reviewer has posted course reviews. |
| CrsPopul | Indicates the popularity of a course. It is operationalized by the number of reviews for a course. |
| CrsSatisf | Indicates learners’ overall satisfaction with a course. It is operationalized by the average rating of all reviews for a course. |
| CrsType | A set of dummy variables indicating the type of a course as specified by the MOOC platform, including general course, general basic course, special basic course, special course, etc. |
| CrsCategory | A set of dummy variables indicating the category of a course as specified by the MOOC platform, including agriculture, medicine, history, philosophy, engineering, pedagogy, literature, law, science, management, economics, art, etc. |
| CrsProvider | A set of dummy variables indicating the college or university that provides a course. |
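
The operational definitions of the reviewer and review variables can be illustrated with a short sketch. It follows the wording of the definitions (counts and averages over reviews posted before the one under study) but is not the authors' code; all function names and the list-based inputs are hypothetical, and the inputs are assumed to be non-empty because the analysis concerns reviewers with at least one earlier review.

```python
from statistics import mean


def experience(prior_votes: list[int]) -> int:
    """Experience: number of reviews the reviewer posted before the focal one."""
    return len(prior_votes)


def expertise(prior_votes: list[int]) -> float:
    """Expertise: average helpfulness votes per earlier review.

    `prior_votes` holds the vote count of each earlier review (assumed non-empty).
    """
    return mean(prior_votes)


def rev_extremity(rating: int) -> int:
    """RevExtremity: 1 for a rating of 1 or 5, 0 otherwise."""
    return 1 if rating in (1, 5) else 0


def rev_positivity(rating: int) -> int:
    """RevPositivity: 1 for a rating of 4 or 5, 0 otherwise."""
    return 1 if rating in (4, 5) else 0


def rev_inconsist(rating: int, previous_course_ratings: list[int]) -> float:
    """RevInconsist: |rating - mean of all previous ratings of the course|.

    Assumes the course already has at least one earlier rating.
    """
    return abs(rating - mean(previous_course_ratings))
```

For a reviewer whose three earlier reviews received 2, 0, and 4 votes, `experience` gives 3 and `expertise` gives 2, which shows how the two measures can diverge: a prolific reviewer is not necessarily one whose reviews attract votes.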

| Variables | No. of Obs. | Mean | Std. Dev. | Min. | Max. |
|---|---|---|---|---|---|
| RevHelp | 39,114 | 2.986 | 13.581 | 1 | 1445 |
| Experience | 39,114 | 7.192 | 35.886 | 1 | 807 |
| Expertise | 39,114 | 1.242 | 10.685 | 0 | 1195 |
| RevExtremity | 39,114 | 0.901 | 0.299 | 0 | 1 |
| RevPositivity | 39,114 | 0.945 | 0.228 | 0 | 1 |
| RevLength | 39,114 | 29.720 | 43.675 | 5 | 500 |
| RevInconsist | 39,114 | 0.391 | 0.608 | 0 | 3.950 |
| RevAge | 39,114 | 524.551 | 254.394 | 60 | 1105 |
| CrsDiversity | 39,114 | 4.611 | 8.095 | 1 | 72 |
| CrsPopul | 39,114 | 777.247 | 1932.504 | 1 | 28,063 |
| CrsSatisf | 39,114 | 4.760 | 0.160 | 2.538 | 5 |

| Variables | (1) | (2) | (3) | (4) |
|---|---|---|---|---|
| Experience | 0.001 ** (0.000) | 0.001 * (0.000) | 0.001 * (0.000) | |
| Expertise | 0.011 *** (0.001) | 0.011 *** (0.001) | 0.011 *** (0.001) | |
| Expertise | 0.000 (0.000) | | | |
| High-Low | 0.036 ** (0.016) | | | |
| Low-High | 0.273 *** (0.018) | | | |
| High-High | 0.333 *** (0.030) | | | |
| CrsDiversity | −0.002 * (0.001) | −0.002 * (0.001) | −0.002 * (0.001) | −0.002 *** (0.001) |
| RevExtremity | 0.147 *** (0.019) | 0.145 *** (0.019) | 0.137 *** (0.019) | |
| RevPositivity | −0.368 *** (0.049) | −0.368 *** (0.049) | −0.408 *** (0.049) | |
| Log#RevLength | 0.380 *** (0.006) | 0.380 *** (0.006) | 0.375 *** (0.006) | |
| RevInconsist | 0.113 *** (0.019) | 0.111 *** (0.019) | 0.086 *** (0.019) | |
| Log#RevAge | −0.210 *** (0.038) | −0.211 *** (0.038) | −0.210 *** (0.038) | −0.234 *** (0.038) |
| Log#CrsPopul | 0.123 *** (0.006) | 0.123 *** (0.006) | 0.123 *** (0.006) | 0.123 *** (0.006) |
| CrsSatisf | −0.082 ** (0.040) | −0.082 ** (0.040) | −0.082 ** (0.040) | −0.097 ** (0.039) |
| lnalpha | −0.536 *** (0.010) | −0.536 *** (0.010) | −0.536 *** (0.010) | −0.531 *** (0.010) |
| Review timing FE a | Y | Y | Y | Y |
| Course FE b | Y | Y | Y | Y |
| # observations | 39,114 | 39,114 | 39,114 | 39,114 |
| Log likelihood | −78,160 | −78,159 | −78,160 | −78,232 |
| AIC | 157,286 | 157,287 | 157,286 | 157,431 |
| BIC | 161,427 | 161,437 | 161,427 | 161,581 |
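
The `lnalpha` row and the count-data reference [68] suggest that these estimates come from a negative binomial regression with a log link, although the extracted text does not state this explicitly. Under that assumption, a coefficient β corresponds to a multiplicative change of exp(β) in the expected number of helpfulness votes. The sketch below converts a few of the reported coefficients into percent changes; the helper name `pct_change` is illustrative, and the interpretation holds only if the log-link assumption is correct.

```python
import math


def pct_change(beta: float) -> float:
    """Percent change in expected votes per one-unit increase (log link assumed)."""
    return (math.exp(beta) - 1.0) * 100.0


# Coefficients copied from the regression table above.
reported = {
    "Experience": 0.001,
    "Expertise": 0.011,
    "High-Low": 0.036,
    "Low-High": 0.273,
    "High-High": 0.333,
}

for name, beta in reported.items():
    print(f"{name}: {pct_change(beta):+.1f}%")

# Dispersion parameter implied by lnalpha = -0.536 (assuming the usual
# negative binomial parameterization Var(y) = mu + alpha * mu**2).
alpha = math.exp(-0.536)
print(f"alpha = {alpha:.3f}")
```

Read this way, the gap between the Expertise and Experience coefficients is easier to see on the outcome scale than in the raw estimates.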

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Du, Z.; Wang, F.; Wang, S. Reviewer Experience vs. Expertise: Which Matters More for Good Course Reviews in Online Learning? Sustainability 2021, 13, 12230. https://doi.org/10.3390/su132112230
