Assessment of Online Deliberative Quality: New Indicators Using Network Analysis and Time-Series Analysis

Abstract

1. Introduction

- How can the quality of online deliberation be monitored on government-run platforms?

- What new indicators can support such monitoring by applying network analysis and time-series analysis?

- How can the new monitoring indicators help to develop more resilient governance practices?

2. Theoretical Background of Deliberative Quality Indicators

2.1. Concept of Online Deliberation and Past Measurement Efforts

2.2. New Online Deliberative Quality Indicators

3. Empirical Case: OmaStadi Participatory Budgeting Project

- Proposal: Residents initiate proposals.

- Screening: City experts screen all proposals and mark each as either impossible (ei mahdollinen) or possible (mahdollinen). A proposal labeled “impossible” does not proceed any further.

- Co-creation: Residents and experts jointly combine several “possible” proposals (ehdotukset) into plans (suunnitelmat) based on shared traits and relevance.

- Cost estimates: City experts estimate the budget for each plan, and the plans are prepared for a popular vote.

- Voting: Citizens vote for their preferred plans online or offline.

- Implementation: Plans selected in the vote are implemented in the following year.

4. Materials and Methods

4.1. Data Collection

4.2. Methods: Network Analysis and Time-Series Analysis

4.2.1. Participation Dimension

4.2.2. Deliberation Dimension

5. Results

5.1. Participation Rate

5.2. Activeness

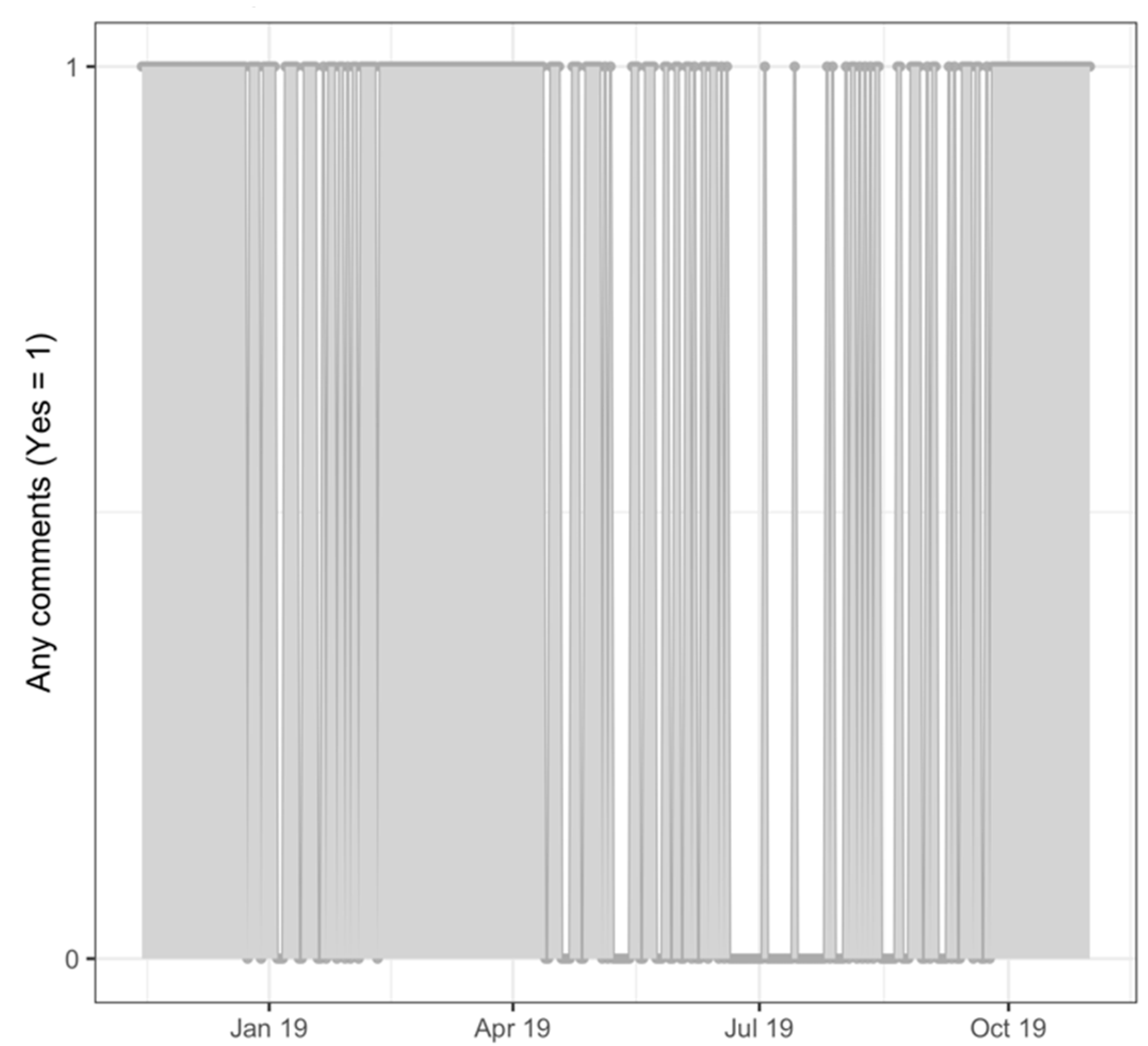

5.3. Continuity

5.4. Responsiveness

5.5. Inter-Linkedness

5.6. Commitment

6. Discussion

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Indicator | Description | Measurement |
|---|---|---|
| Participation dimension (volume of deliberation) | | |
| Participation rate | The proportion of residents who registered with an online deliberative system | # total IDs/population |
| Activeness | A longitudinal change in active commentators, proposals, and comments | # active IDs/# total IDs; (two-sided) moving average |
| Continuity | The extent of consistency in participation | # active days/# entire days |
| Deliberation dimension (interaction in deliberation) | | |
| Responsiveness | The proportion of replies in online comments | # replies/# comments |
| Inter-linkedness | Interactive patterns among actors and proposals | Network properties |
| Commitment | Variability of the degree of engagement | Degree distribution |
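
The ratio-based measurements in the table above can be illustrated with a minimal Python sketch. It is not the authors' implementation: the record layout `(commenter_id, day, is_reply)`, the toy values, and the helper names `ratio` and `centered_moving_average` are all assumptions made for illustration, and the choice to truncate the moving-average window at the series edges is one option among several.

```python
from datetime import date, timedelta


def ratio(numerator, denominator):
    """Return numerator / denominator, or 0.0 when the denominator is zero."""
    return numerator / denominator if denominator else 0.0


def centered_moving_average(values, window=3):
    """Two-sided moving average; the window is truncated at the series edges."""
    if window < 1 or window % 2 == 0:
        raise ValueError("window must be a positive odd integer")
    half = window // 2
    smoothed = []
    for i in range(len(values)):
        lo, hi = max(0, i - half), min(len(values), i + half + 1)
        smoothed.append(sum(values[lo:hi]) / (hi - lo))
    return smoothed


# Hypothetical toy records: (commenter_id, day, is_reply)
comments = [
    ("u1", date(2020, 1, 1), False),
    ("u2", date(2020, 1, 1), True),
    ("u1", date(2020, 1, 3), True),
    ("u3", date(2020, 1, 4), False),
]
total_ids = 10               # hypothetical number of registered IDs
population = 1000            # hypothetical resident population
start = date(2020, 1, 1)     # hypothetical first day of the observation period
period_days = 5              # hypothetical length of the period in days

# Participation rate: # total IDs / population
participation_rate = ratio(total_ids, population)

# Continuity: # active days / # entire days
active_days = {day for _, day, _ in comments}
continuity = ratio(len(active_days), period_days)

# Responsiveness: # replies / # comments
replies = sum(1 for _, _, is_reply in comments if is_reply)
responsiveness = ratio(replies, len(comments))

# Activeness: daily # active IDs / # total IDs, then a two-sided moving average
daily_active = []
for offset in range(period_days):
    day = start + timedelta(days=offset)
    ids_today = {uid for uid, d, _ in comments if d == day}
    daily_active.append(ratio(len(ids_today), total_ids))
activeness = centered_moving_average(daily_active, window=3)
```

Keeping every indicator as a plain ratio makes the values comparable across districts or periods of different size; the smoothing step only affects how the activeness series is read over time.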

| Statistic | Southeast | Central | Total |
|---|---|---|---|
| % of active commentators | 13.8% (n = 192) | 16.8% (n = 232) | 1385 |
| % of active proposals and plans | 9.6% (n = 100) | 12% (n = 125) | 1040 |
| % of comments | 11.1% (n = 354) | 11.6% (n = 368) | 3188 |
| Mean number of comments per commentator | 1.60 | 1.43 | 1.96 |
| Mean number of comments per proposal | 3.07 | 2.66 | 2.61 |

| Indicator | Description | Usefulness for Deliberative Quality Assessment |
|---|---|---|
| Participation dimension (volume of deliberation) | | |
| Participation rate | The proportion of residents who registered with an online deliberative system | Representativeness |
| Activeness | A longitudinal change in active commentators, proposals, and comments | Activeness |
| Continuity | The extent of consistency in participation | Consistency |
| Deliberation dimension (interaction in deliberation) | | |
| Responsiveness | The proportion of replies in online comments | Reciprocity |
| Inter-linkedness | Interactive patterns among actors and proposals | Structural property |
| Commitment | Variability of the degree of engagement | Equal involvement |
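
As a rough illustration of how the commitment indicator could be read from a degree distribution, the sketch below treats commenting as a bipartite network of commentators and proposals. The edge list, the identifiers, and the function `degree_distribution` are hypothetical, and collapsing repeated commentator-proposal pairs into a single tie is an assumption; the source does not state how repeated comments are weighted.

```python
from collections import Counter

# Hypothetical edge list: (commenter_id, proposal_id), one entry per comment
edges = [
    ("u1", "p1"),
    ("u1", "p2"),
    ("u2", "p1"),
    ("u3", "p1"),
    ("u1", "p1"),  # repeated pair, collapsed below (an assumption)
]


def degree_distribution(edge_list, side):
    """Map each degree to the number of nodes having it.

    side=0 counts commentators (distinct proposals commented on);
    side=1 counts proposals (distinct commentators attracted).
    """
    unique_edges = set(edge_list)
    degrees = Counter(edge[side] for edge in unique_edges)
    return dict(sorted(Counter(degrees.values()).items()))


commenter_dist = degree_distribution(edges, side=0)
proposal_dist = degree_distribution(edges, side=1)
```

A distribution concentrated at degree 1 with a long tail would suggest that a few actors or proposals account for most of the interaction, which is the kind of unequal involvement the commitment indicator is meant to surface.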

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Shin, B.; Rask, M. Assessment of Online Deliberative Quality: New Indicators Using Network Analysis and Time-Series Analysis. Sustainability 2021, 13, 1187. https://doi.org/10.3390/su13031187
