Sustaining User Experience in a Smart System in the Retail Industry

Abstract

1. Introduction

2. Conceptual Background

2.1. SST

2.2. Computer Vision

2.3. Unmanned Retail

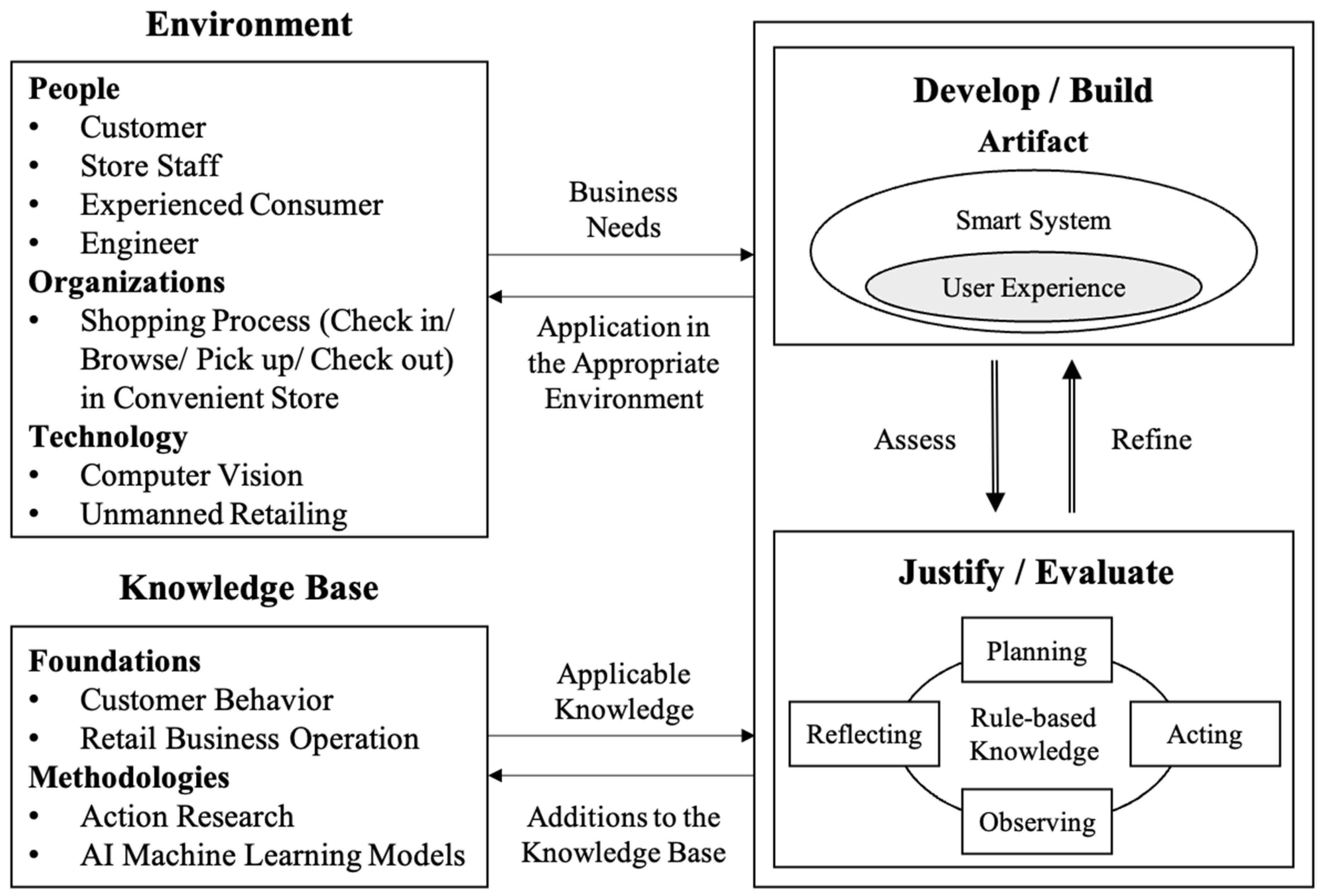

3. Methodology and Research Design

3.1. System Design

3.1.1. Shopping App

3.1.2. Global Tracking

3.1.3. Item Recognition

3.1.4. Person–Item Association

3.1.5. Inventory Management

3.2. Rule-Based Knowledge Design

3.2.1. Preliminary Diagnosis

3.2.2. Action Planning

- Check in: A customer checks in through a mobile app (QR code). The turnstile opens after a successful account validation.

- Browse: The customer passes through the turnstile and starts browsing the products. Many customers can be identified and tracked in the store simultaneously.

- Pick up: If a customer grabs a product, the product is added to their virtual basket. Conversely, if they place the product back on the shelf, it is removed from the virtual basket.

- Check out: A customer completes the purchase, passes the door line on the way out, and is billed for the picked-up products.
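
The four steps above can be read as a small state model around a virtual basket. The following is a minimal sketch under stated assumptions, not the system's actual implementation: the class name `ShoppingSession`, the `account_id` field, and the price table are hypothetical and introduced only for illustration.

```python
from collections import Counter
from dataclasses import dataclass, field
from typing import Mapping


@dataclass
class ShoppingSession:
    """One customer's visit, keyed by a hypothetical app account id."""
    account_id: str
    checked_in: bool = False
    basket: Counter = field(default_factory=Counter)

    def check_in(self, account_is_valid: bool) -> bool:
        # The turnstile opens only after a successful account validation.
        self.checked_in = account_is_valid
        return self.checked_in

    def pick_up(self, product: str) -> None:
        # Grabbing a product adds it to the virtual basket.
        self._require_checked_in()
        self.basket[product] += 1

    def put_back(self, product: str) -> None:
        # Placing a product back on the shelf removes it from the basket.
        self._require_checked_in()
        if self.basket[product] > 0:
            self.basket[product] -= 1
        if self.basket[product] == 0:
            del self.basket[product]

    def check_out(self, prices: Mapping[str, float]) -> float:
        # Passing the door line bills the customer for what is still held.
        self._require_checked_in()
        total = sum(prices[p] * n for p, n in self.basket.items())
        self.checked_in = False
        return total

    def _require_checked_in(self) -> None:
        if not self.checked_in:
            raise RuntimeError("customer has not checked in")


# Usage with made-up products and prices (assumptions, not study data).
session = ShoppingSession("demo-account")
session.check_in(account_is_valid=True)
session.pick_up("tea")
session.pick_up("tea")
session.pick_up("snack")
session.put_back("snack")
total = session.check_out({"tea": 2.0, "snack": 1.5})
```

Keeping the basket as a counter means that picking up several items of the same product needs no special handling.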

3.2.3. Action Taking

3.2.4. Evaluation

4. Reflection Learning

4.1. Check In

- Most customers were permitted to enter the store by using the mobile app and following the rules in sequence. Apart from children and a couple, few customers were identified as sharing one mobile app. However, the case in which a customer does not own a mobile phone should also be considered in the future.

- If the system could not autonomously identify a customer’s characteristic through computer vision technology, the characteristic was regarded as abnormal.

- Computer vision could precisely capture and identify each customer’s characteristics. The key factor was that each customer stopped in front of the turnstile while scanning the mobile app.

4.2. Browse

- We observed almost no irregular behavior, such as stealing or eating food in the store, because the customers were already aware of the in-store surveillance operation before entering.

- Putting a selected product back in place, or leaving it elsewhere in the store, was considered regular behavior. From the stakeholders' perspective, promotions of other products present alternatives, so a customer may prefer to replace the original product after picking it up.

- Store staff were expected to put the products back in order within a specified time. Thus, the autonomous store still involved manual operation for disordered products.

4.3. Pick Up

- Based on customer experience, a product was regarded as “selected” when a customer picked it up and put it into the cart or passed it to another person. At this moment, the person–item association was recognized. The product was then recognized as “sold” if it continued to remain in a specific customer’s cart.

- We found that pick-up behavior could not be recognized from a single customer action; other sequential actions, such as putting products in a cart, exchanging products on the shelf, or handing products over to other people, also had to be considered.

- The customers browsed products by looking at them rather than touching them because of the in-store surveillance operation.

- Customer behaviors were observed to be more complicated in the pick-up step because customers spent more time selecting among different products and making decisions.

4.4. Check Out

- In this system learning journey, each product was regarded as “paid” when it crossed the checkout line in association with a customer. The system synchronized the receipt service and inventory operation after the customer passed the checkout line.

- We found that a few consumers went back into the store through the checkout line within 5 min and made repeated attempts to repurchase or replace products. This behavior was regarded as abnormal. However, from the perspective of in-store staff, a product was regarded as sold once it crossed the checkout line, even if it was later put back in place.

- Product quality may degrade if consumers' replacing behavior is not appropriately controlled. Some quality issues related to sold products cannot be solved in the real environment. Manually issued refunds are suggested as an alternative.
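
The checkout observations above can be sketched as a classifier for checkout-line crossings. This is only an illustration: the 5 min window comes from the observation reported above, but treating it as a fixed threshold, and the function name, direction values, and labels, are assumptions made here.

```python
from datetime import datetime, timedelta
from typing import Optional

# Assumption: the 5 min observation is used as a hard re-entry window.
REENTRY_WINDOW = timedelta(minutes=5)


def classify_line_crossing(direction: str, when: datetime,
                           last_exit: Optional[datetime]) -> str:
    """Label a checkout-line crossing (labels are hypothetical)."""
    if direction == "out":
        # Products crossing the line with the customer count as paid.
        return "paid"
    if last_exit is not None and when - last_exit <= REENTRY_WINDOW:
        # Walking back in shortly after leaving was regarded as abnormal.
        return "abnormal_reentry"
    return "new_visit"


# Usage with made-up timestamps.
t0 = datetime(2021, 1, 1, 12, 0)
first = classify_line_crossing("out", t0, None)
quick_return = classify_line_crossing("in", t0 + timedelta(minutes=3), t0)
late_return = classify_line_crossing("in", t0 + timedelta(minutes=10), t0)
```

An "abnormal_reentry" label would be a natural point to hand over to staff, for example for a manually issued refund.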

5. Future Opportunities

5.1. Understanding Complicated Behavior

5.2. Diverse Retail Environment

5.3. Autonomous Store Maturity

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest


| Role | Responsibility | Number |
|---|---|---|
| Store staff | To interpret the possible intention of shoppers in the shopping journey and provide operation experience in service, such as product offering, space design, and Stock Keeping Unit management | 2 |
| Experienced consumer | To share in-store shopping experience and possible reactions in the browsing, selecting, exchange, and checkout processes | 3 |
| Engineer | To provide technical practicability in product display, shopper characteristics, camera setting, and computing capability | 2 |

| Confidence Level (CL) | Occurrence Number | Response Strategy |
|---|---|---|
| 1 | <10 | Human-assisted |
| 2 | 11–100 | Human-assisted |
| 3 | 101–500 | Self-identified |
| 4 | 501–1000 | Self-identified |
| 5 | >1001 | Self-identified |
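
The mapping from occurrence number to confidence level and response strategy can be sketched as a simple lookup. The table leaves occurrence counts of exactly 10 and 1001 unassigned; the sketch below assumes that 10 falls into CL 1 and 1001 into CL 5. Both choices, and the function names, are assumptions made here rather than part of the original rule design.

```python
# Upper bounds of CL 1 to CL 4; anything above the last bound is CL 5.
# Assumption: 10 is treated as CL 1 and 1001 as CL 5 (the table omits both).
CL_UPPER_BOUNDS = (10, 100, 500, 1000)


def confidence_level(occurrences: int) -> int:
    """Map how often a behavior was observed to a confidence level."""
    if occurrences < 0:
        raise ValueError("occurrences must be non-negative")
    for level, upper in enumerate(CL_UPPER_BOUNDS, start=1):
        if occurrences <= upper:
            return level
    return 5


def response_strategy(level: int) -> str:
    """CL 1 and 2 need human assistance; CL 3 to 5 are self-identified."""
    return "Human-assisted" if level <= 2 else "Self-identified"


# Usage with made-up occurrence counts.
strategies = {n: response_strategy(confidence_level(n)) for n in (5, 50, 300)}
```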

| | Stage I | Stage II | Stage III |
|---|---|---|---|
| Test Period (Day) | 1 | 3 | 4 |
| Rule Number | 8 | 8 | 4 |
| Evaluation | Converge to a common view to obtain pre-defined rules | Identify and increment rules by detecting customer behaviors | Continue recognizing rules to improve the system’s learning performance |

| Step | Rules | Type | CL in Stage I | CL in Stage II | CL in Stage III |
|---|---|---|---|---|---|
| Check in | If a customer is alone, they use the mobile app to be permitted to enter the store. | Normal | 1 | 5 | 5 |
| Check in | If customers are wearing similar clothes, they are identified separately and considered overlapping in the store. | Normal | - | 2 | 3 |
| Check in | If a group of friends has only one app, the app account owner identifies each person wanting to enter with their app and all actions are linked to the same account. | Normal | 1 | 2 | 3 |
| Check in | If an employee is at work, they can be identified and permitted to enter the store through the mobile app but cannot shop. | Normal | - | 1 | 2 |
| Check in | If a customer enters with a kid in the stroller or on the shoulder, they are identified and tracked using the same account. | Normal | - | 1 | 2 |
| Check in | If a customer’s face is not visible, they are identified by their other body features instead. | Abnormal | - | 1 | 2 |
| Browse | If a customer picks up and then puts back a product, the product is removed from the virtual basket. | Normal | 1 | 5 | 5 |
| Browse | If a product is grabbed at one place and then put back at another place in the store, it is tracked. The product is removed from the customer’s virtual basket. | Normal | 1 | 3 | 4 |
| Browse | If a customer is trying to steal or exchange fake products in an irregular behavior, they are identified to be tracked and annotated to the mobile app. | Abnormal | - | - | 1 |
| Browse | If a customer eats a product and then puts back the packaging on the shelf, they are identified to be charged and annotated to the mobile app. | Abnormal | - | - | 1 |
| Pick up | If a product is grabbed by a customer, it is added to their virtual basket. | Normal | 1 | 5 | 5 |
| Pick up | If two or more items of the same product are grabbed by a customer, these are added to their virtual basket. | Normal | - | 1 | 3 |
| Pick up | If the product is grabbed and validated in the customer’s hand or bag, it is added to their virtual basket. | Normal | 1 | 5 | 5 |
| Pick up | If a customer passes on a product to another customer, it is identified as a transfer action and updated in the virtual basket if they are using different accounts. | Normal | - | 1 | 2 |
| Pick up | If a customer grabs a product but not with his hands, this product is identified to be added to their virtual basket. | Abnormal | - | - | 1 |
| Pick up | If a customer picks up a product lying on the floor and puts it back on the shelf, this product is not added to their virtual basket. | Abnormal | - | 1 | 2 |
| Pick up | If a customer enters the store with a product that is also sold in the store, it is recognized while they are entering the store. | Abnormal | - | 1 | 3 |
| Check out | If a customer passes through the store exit line, they are detected and recognized as leaving the store. | Normal | 1 | 5 | 5 |
| Check out | If a customer passes through the store exit line, they can receive an invoice on the mobile app with the correct shopping item details and price within 3 min. | Normal | 1 | 5 | 5 |
| Check out | If a customer passes through the store exit line and turns back to the exit line immediately, their shopping process is identified as ongoing. | Abnormal | - | - | 1 |
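
As a consistency check, the confidence levels in the rule table can be encoded as data and used to count how many rules first appear in each stage. This is a sketch with hypothetical names (`RULE_CLS`, `stage_introduced`); it assumes that a "-" entry means the rule had not yet been identified in that stage, and it encodes such entries as `None`.

```python
from collections import Counter

# CL per rule in Stages I, II, III, in table order; None stands for "-".
RULE_CLS = {
    "Check in": [(1, 5, 5), (None, 2, 3), (1, 2, 3),
                 (None, 1, 2), (None, 1, 2), (None, 1, 2)],
    "Browse": [(1, 5, 5), (1, 3, 4), (None, None, 1), (None, None, 1)],
    "Pick up": [(1, 5, 5), (None, 1, 3), (1, 5, 5), (None, 1, 2),
                (None, None, 1), (None, 1, 2), (None, 1, 3)],
    "Check out": [(1, 5, 5), (1, 5, 5), (None, None, 1)],
}


def stage_introduced(cls):
    """Return the first stage (1 to 3) in which a rule has a CL value."""
    return next(i for i, cl in enumerate(cls, start=1) if cl is not None)


# Number of rules first identified in each stage.
new_rules_per_stage = Counter(
    stage_introduced(cls) for rows in RULE_CLS.values() for cls in rows
)
```

Under that reading, the counts come out as 8, 8, and 4 rules for Stages I, II, and III, which agrees with the Rule Number row of the stage table.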

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Chen, S.-C.; Shang, S.S.C. Sustaining User Experience in a Smart System in the Retail Industry. Sustainability 2021, 13, 5090. https://doi.org/10.3390/su13095090
