Human-Centered Artificial Intelligence: The Superlative Approach to Achieve Sustainable Development Goals in the Fourth Industrial Revolution

Abstract

1. Introduction

2. Fourth Industrial Revolution, Artificial Intelligence, Sustainable Development, and the Global Goals

2.1. The Fourth Industrial Revolution (4IR)

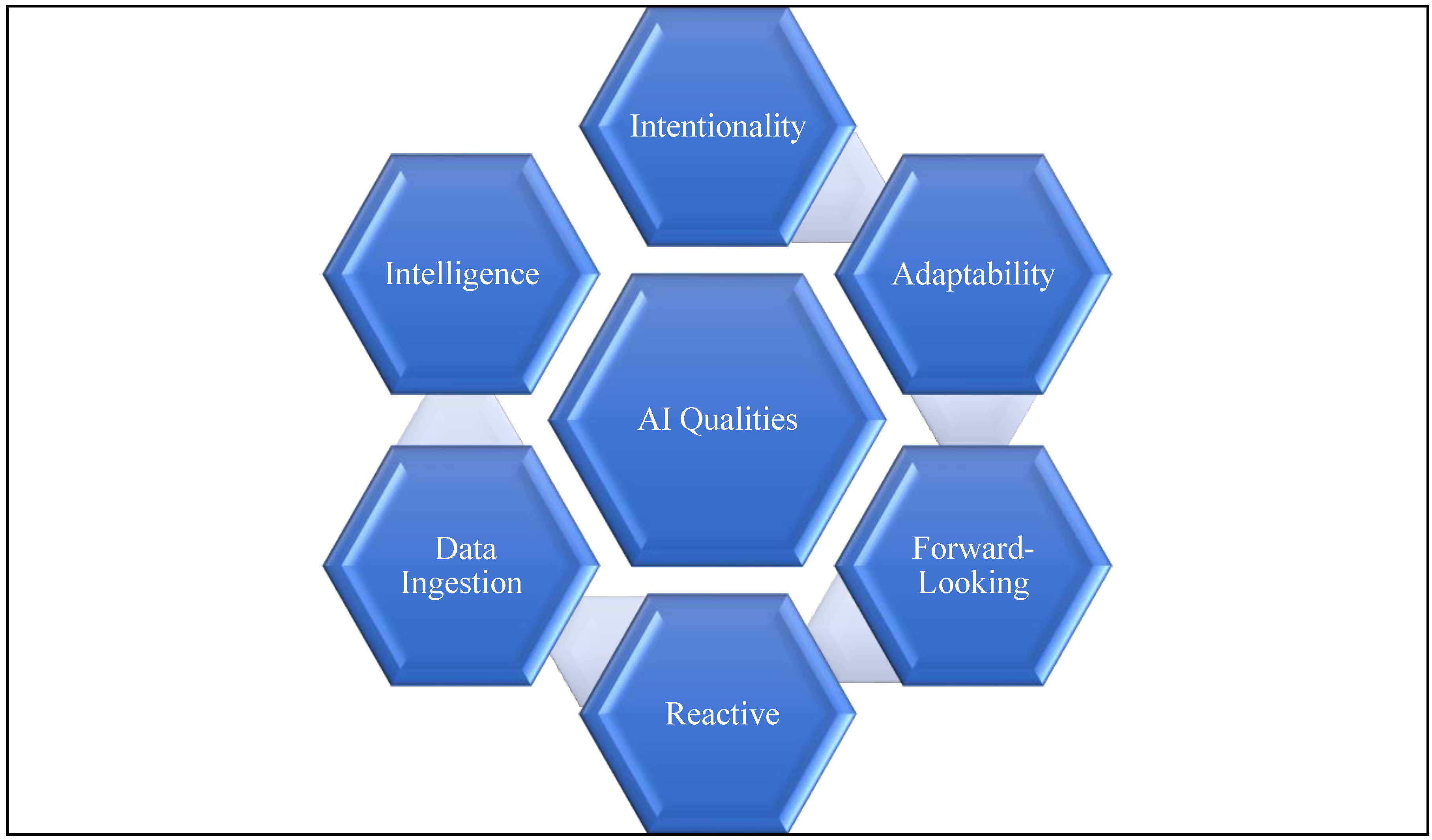

2.2. The Background and Definition of Artificial Intelligence

2.3. The Three Main Groups of AI

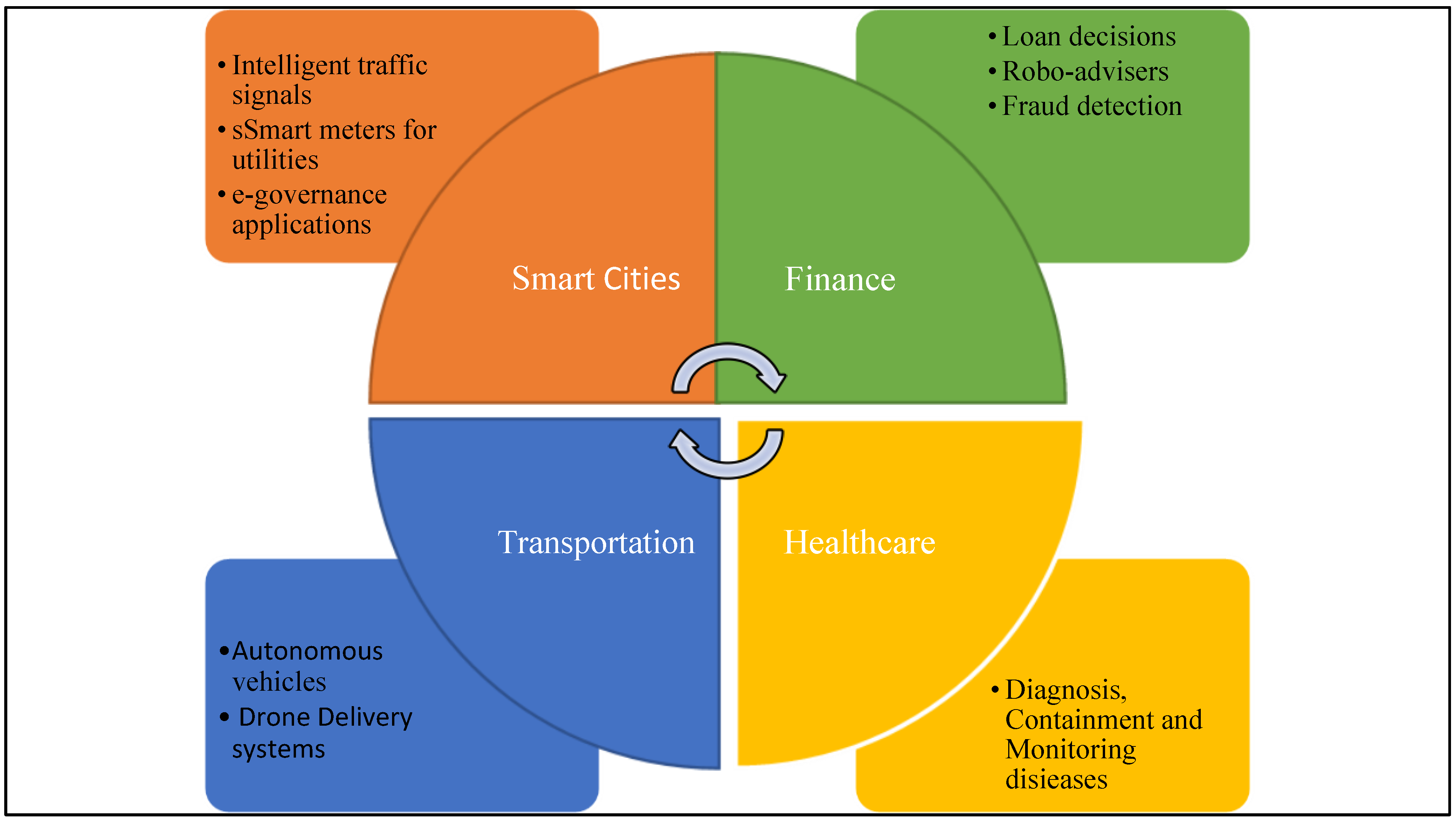

2.4. Applications of AI in Real-Life Situations

2.5. Research in Artificial Intelligence

2.6. Sustainable Development and the Global Goals

2.7. The Global Goals

3. Empirical Literature Review

4. Research Methodology

5. Results and Discussion

5.1. The Meaning of Human-Centred AI

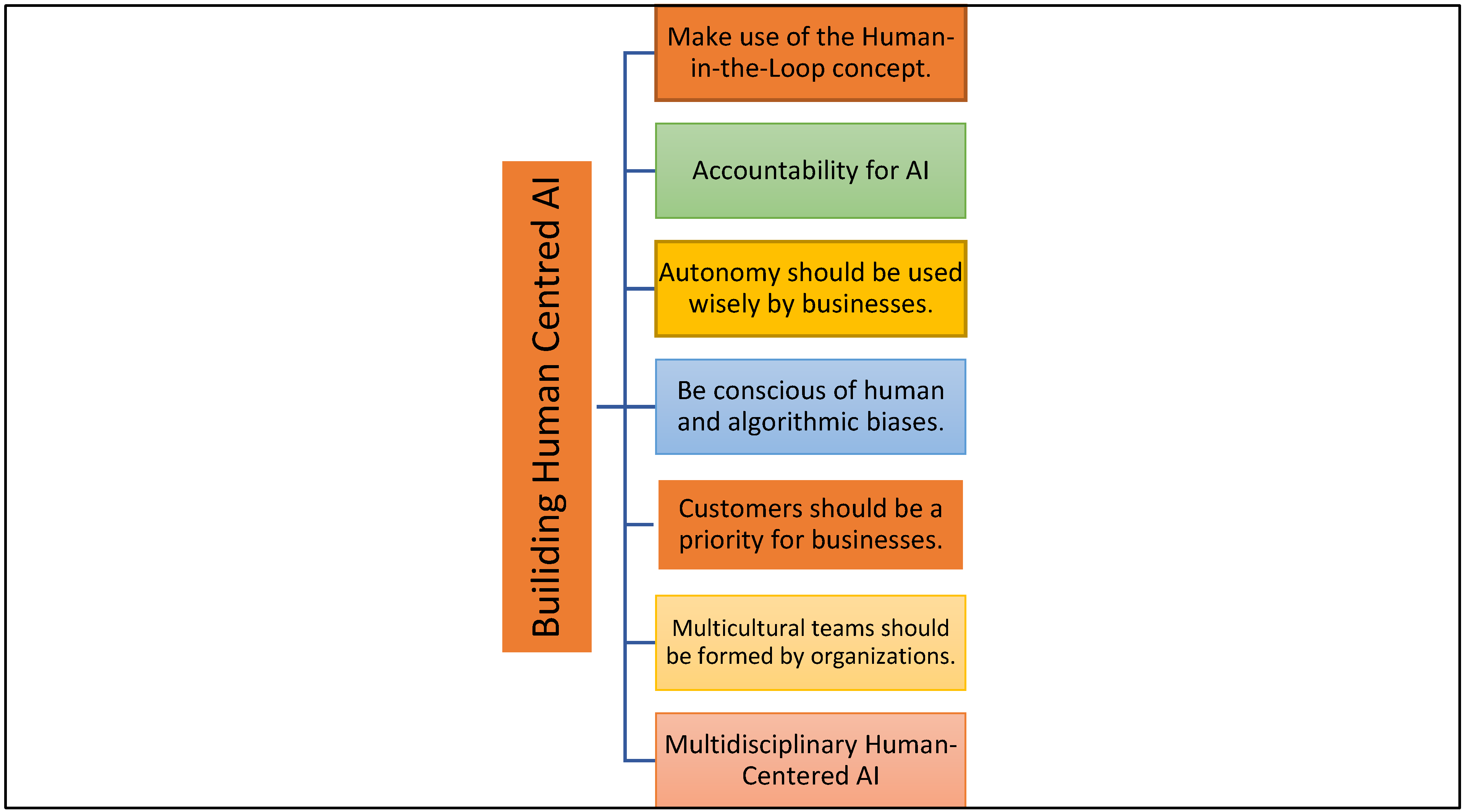

5.2. How to Create AI That Is Human-Centred

6. The Policy Recommendations for Human-Centred AI to Assist in the Attainment of the SDGs

6.1. Increasing Governments’ Investment in AI

6.2. Addressing Data and Algorithm Biases

6.3. Resolving Data Access Issues

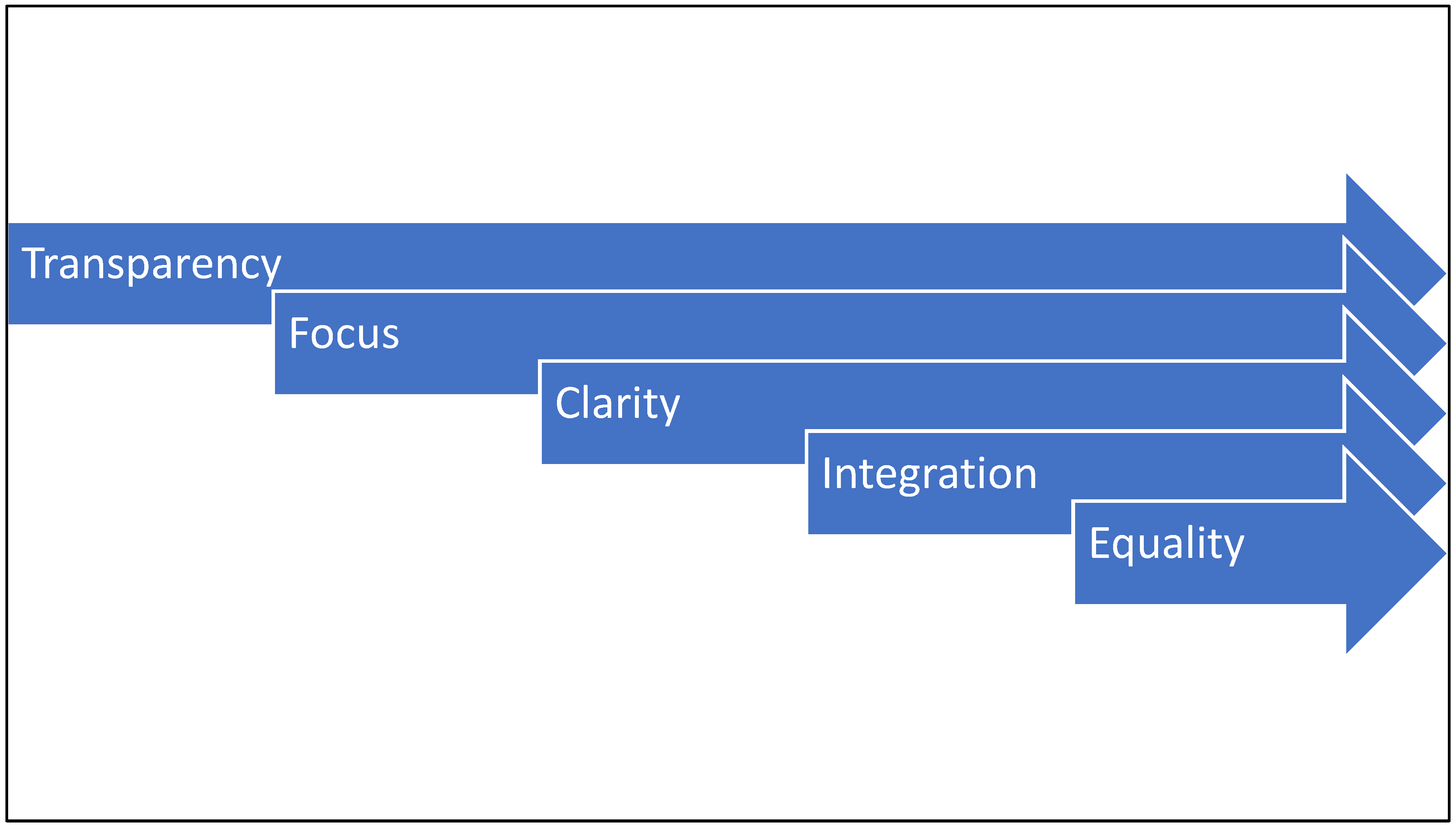

6.4. Addressing Concerns of AI Ethics and Transparency

6.5. Maintaining Mechanisms for Human Oversight and Control

7. Conclusions and Policy Recommendations

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Rank | Institution | Location | Share 2015–2019 | Count 2015–2019 | International Articles (%) |
|---|---|---|---|---|---|
| 1 | Harvard University | United States of America (USA) | 331.08 | 937 | 57.0% |
| 2 | Stanford University | United States of America (USA) | 257.90 | 629 | 54.4% |
| 3 | Massachusetts Institute of Technology (MIT) | United States of America (USA) | 209.04 | 620 | 59.4% |
| 4 | Max Planck Society | Germany | 167.98 | 628 | 83.0% |
| 5 | University of Oxford | United Kingdom (UK) | 132.34 | 495 | 85.3% |
| 6 | University of Cambridge | United Kingdom (UK) | 130.68 | 485 | 84.9% |
| 7 | Chinese Academy of Sciences (CAS) | China | 130.00 | 492 | 73.2% |
| 8 | UCL | United Kingdom (UK) | 129.70 | 415 | 77.1% |
| 9 | Columbia University in the City of New York (CU) | United States of America (USA) | 127.56 | 386 | 61.9% |
| 10 | National Institutes of Health (NIH) | United States of America (USA) | 122.69 | 302 | 52.0% |

| Rank | Institution | Location | Share 2015–2019 | Count 2015–2019 | International Articles (%) |
|---|---|---|---|---|---|
| 90 | Cold Spring Harbor Laboratory (CSHL) | United States of America (USA) | 23.15 | 54 | 44.4% |
| 91 | Dartmouth College | United States of America (USA) | 22.89 | 53 | 45.3% |
| 92 | Purdue University | United States of America (USA) | 22.78 | 98 | 74.5% |
| 93 | Carnegie Mellon University (CMU) | United States of America (USA) | 22.77 | 99 | 58.6% |
| 94 | Utrecht University (UU) | Netherlands | 22.61 | 87 | 83.9% |
| 95 | Mount Sinai Health System (MSHS) | United States of America (USA) | 22.38 | 108 | 63.9% |
| 96 | Fudan University | China | 22.14 | 77 | 72.7% |
| 97 | National Institute for Nuclear Physics (INFN) | Italy | 22.14 | 233 | 97.0% |
| 98 | Tel Aviv University (TAU) | Israel | 22.05 | 137 | 86.9% |
| 99 | National University of Singapore (NUS) | Singapore | 21.81 | 84 | 85.7% |
| 100 | University of Science and Technology of China (USTC) | China | 21.50 | 119 | 78.2% |
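The tables above report a Share and a Count for each institution over 2015–2019. Assuming these columns follow the Nature Index conventions (an assumption, since the tables do not name their source), Count adds 1 for every article with at least one author from the institution, while Share splits each article's single unit of credit among its authors. The minimal Python sketch below, using hypothetical names (`TOP10`, `rank_by`, `share_per_article`), re-ranks the top ten by each column and computes Share divided by Count, i.e. the average fraction of authorship credit per counted article.

```python
from typing import List, Tuple

# (institution, share 2015-2019, count 2015-2019), transcribed from the top-10 table above.
# Assumes Nature Index-style definitions of Share and Count (not stated in the source tables).
Row = Tuple[str, float, int]
TOP10: List[Row] = [
    ("Harvard University", 331.08, 937),
    ("Stanford University", 257.90, 629),
    ("Massachusetts Institute of Technology (MIT)", 209.04, 620),
    ("Max Planck Society", 167.98, 628),
    ("University of Oxford", 132.34, 495),
    ("University of Cambridge", 130.68, 485),
    ("Chinese Academy of Sciences (CAS)", 130.00, 492),
    ("UCL", 129.70, 415),
    ("Columbia University in the City of New York (CU)", 127.56, 386),
    ("National Institutes of Health (NIH)", 122.69, 302),
]

SHARE, COUNT = 1, 2  # column positions within each row


def rank_by(rows: List[Row], column: int) -> List[str]:
    """Return institution names ordered from highest to lowest value in `column`."""
    return [row[0] for row in sorted(rows, key=lambda row: row[column], reverse=True)]


def share_per_article(row: Row) -> float:
    """Average authorship fraction credited to the institution per counted article.

    Under the assumed definitions, Share <= Count, so the result lies in (0, 1].
    """
    _, share, count = row
    return share / count


if __name__ == "__main__":
    print("Ranked by Share:", rank_by(TOP10, SHARE))
    print("Ranked by Count:", rank_by(TOP10, COUNT))
    for row in TOP10:
        print(f"{row[0]}: {share_per_article(row):.3f}")
```

Ranking by Count rather than Share swaps MIT with the Max Planck Society and the University of Cambridge with the Chinese Academy of Sciences, so the ordering in the tables above reflects Share rather than raw article counts.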

| Journal Articles | Reports | Media Articles | Others |
|---|---|---|---|
| 55 | 25 | 30 | 20 |
| Journal articles published from 2000 onwards were used. Earlier work was also considered, but the focus was mainly on 2000 onwards. Publishers included Springer Nature, the Multidisciplinary Digital Publishing Institute (MDPI), Elsevier, and the Institute of Electrical and Electronics Engineers (IEEE), among others. | Reports from the United Nations, the World Bank, the World Economic Forum, and the Organisation for Economic Co-operation and Development (OECD), among others, were also considered in the study. | Media articles were also considered, mainly from countries such as the United States of America, South Africa, and the United Kingdom, among others. | Various other documents were consulted to develop the ideas that shaped the direction of the study. |
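The counts in the table above can be tallied to show the composition of the material reviewed. This minimal Python sketch, with a hypothetical `composition` helper, sums the four categories and expresses each as a percentage of the total; it assumes the four columns are mutually exclusive, so that no source is counted in more than one category.

```python
from typing import Dict

# Source counts per category, transcribed from the table above.
# Assumes each source appears in exactly one category.
SOURCES: Dict[str, int] = {
    "Journal Articles": 55,
    "Reports": 25,
    "Media Articles": 30,
    "Others": 20,
}


def composition(counts: Dict[str, int]) -> Dict[str, float]:
    """Return each category's share of the total as a percentage, rounded to one decimal place."""
    total = sum(counts.values())
    return {name: round(100 * n / total, 1) for name, n in counts.items()}


if __name__ == "__main__":
    print("Total sources:", sum(SOURCES.values()))
    for name, pct in composition(SOURCES).items():
        print(f"{name}: {pct}%")
```

Under that assumption the review draws on 130 sources in total, with journal articles making up roughly 42% and reports roughly 19%.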

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Mhlanga, D. Human-Centered Artificial Intelligence: The Superlative Approach to Achieve Sustainable Development Goals in the Fourth Industrial Revolution. Sustainability 2022, 14, 7804. https://doi.org/10.3390/su14137804
