A Vision-Based Collision Monitoring System for Proximity of Construction Workers to Trucks Enhanced by Posture-Dependent Perception and Truck Bodies’ Occupied Space

Abstract

1. Introduction

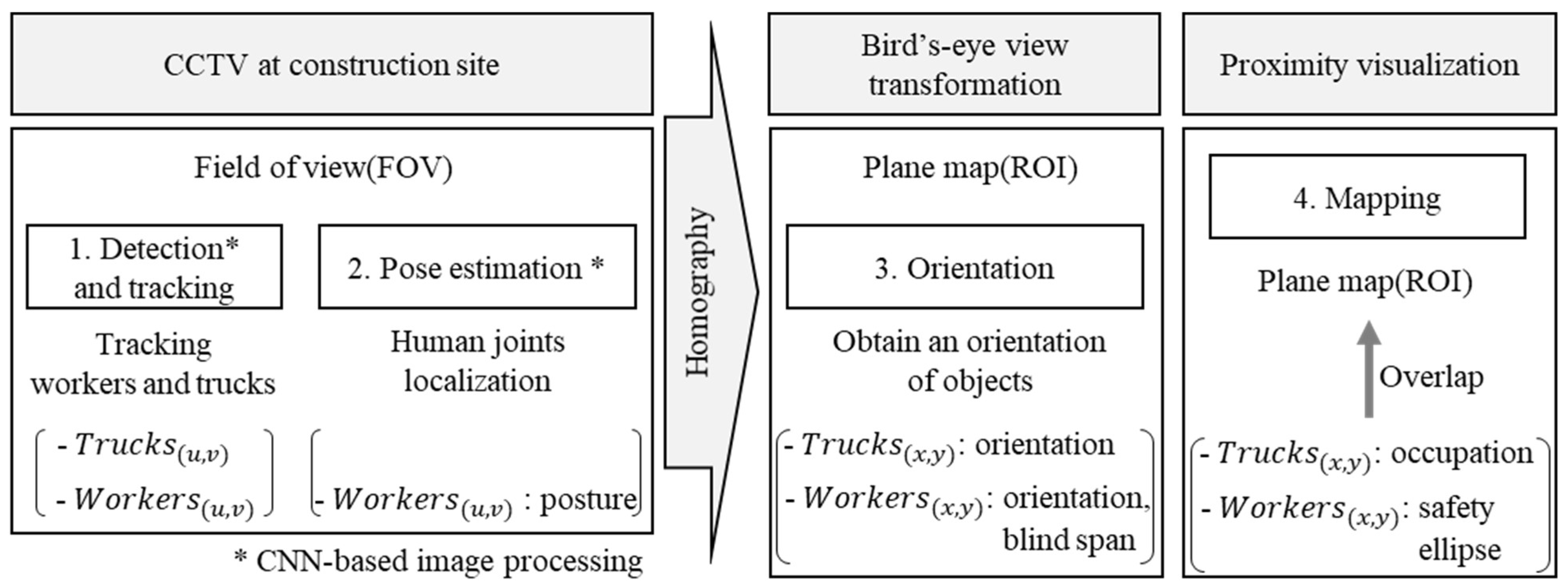

2. Methodology

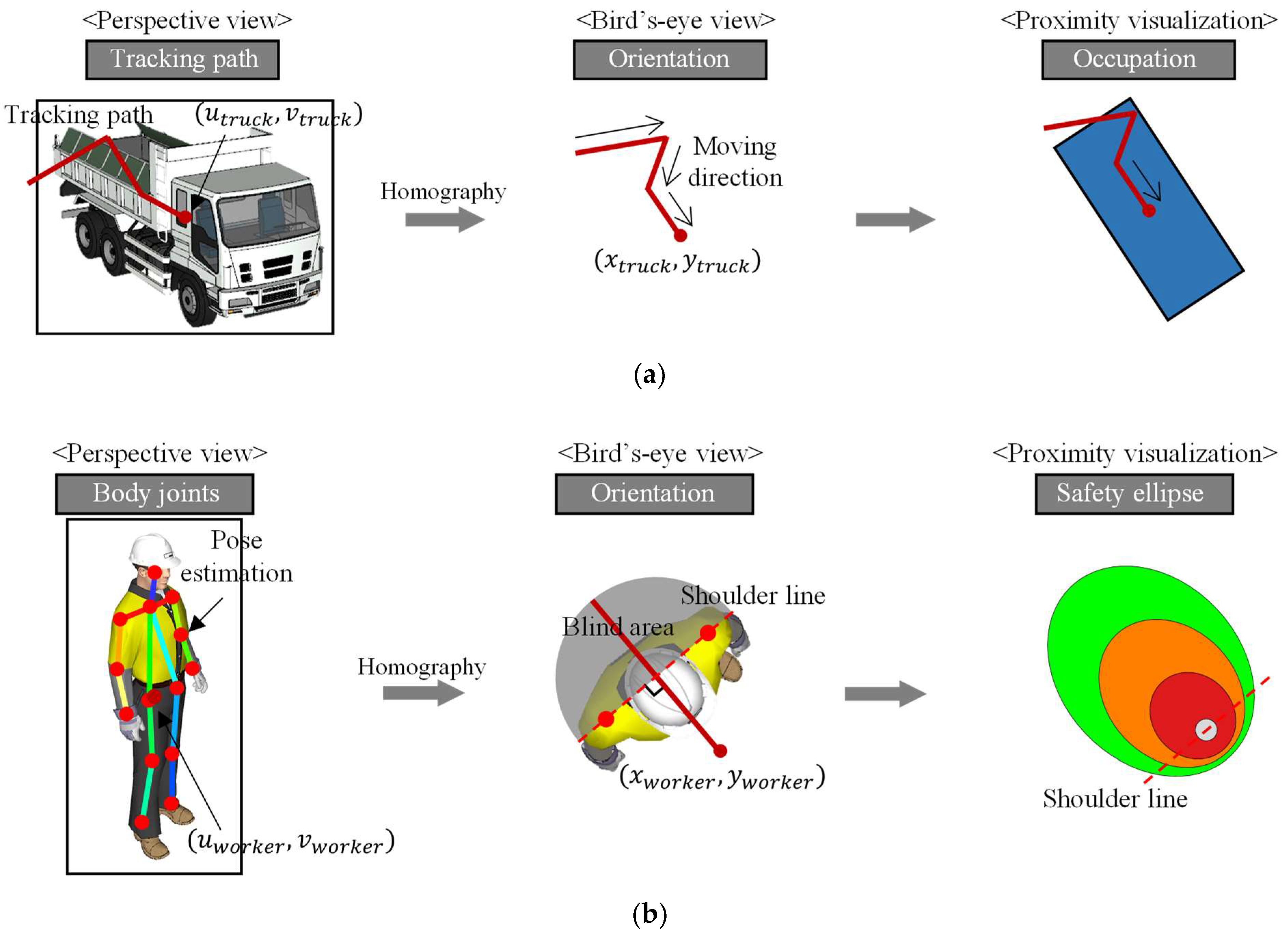

2.1. Vision-Based Proximity Visualization

2.2. Object Detection and Tracking

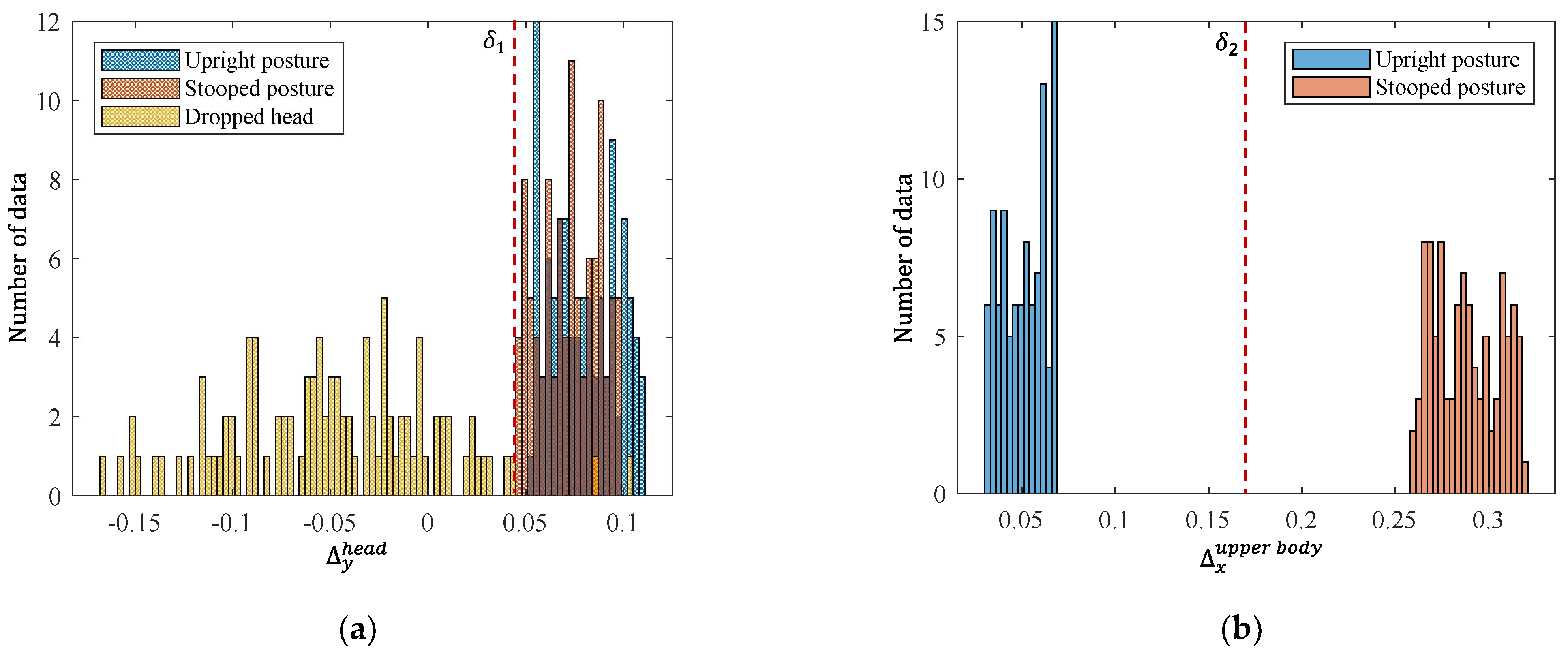

2.3. Posture Determination

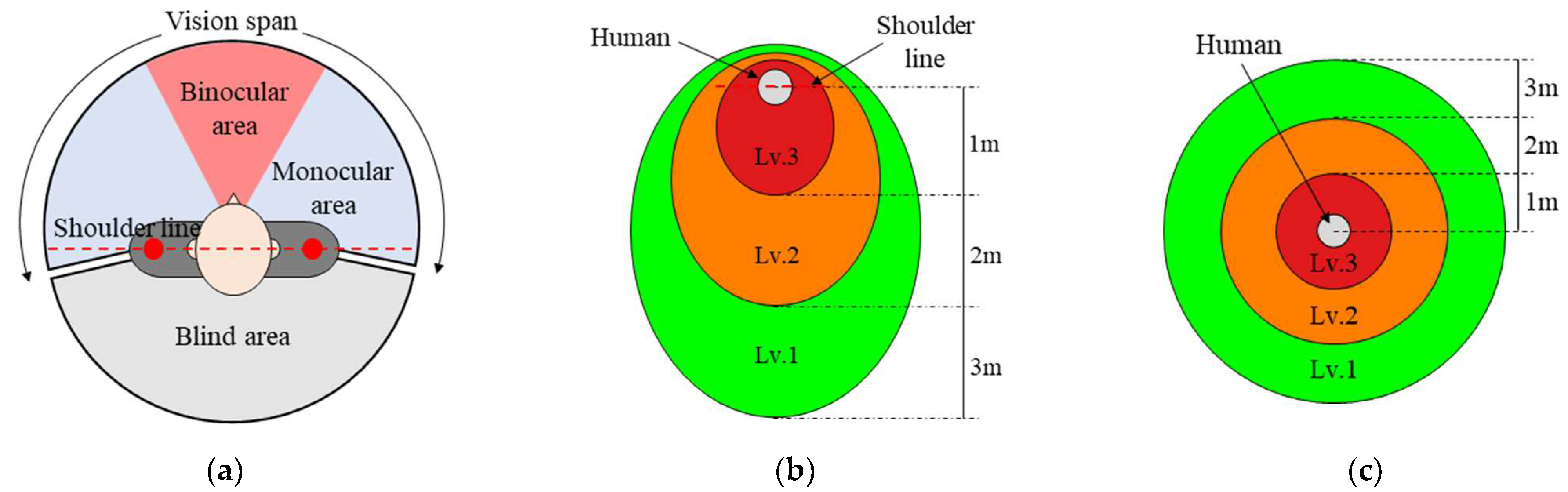

2.4. Perception-Based Safety Ellipse

2.5. Plane-Map Generation

3. Results

3.1. Posture and Orientation

3.2. Image Processing

3.3. Results in Sequential Images

4. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

| Posture | Upright Posture | | Stooped Posture | | Dropped Head | |
|---|---|---|---|---|---|---|
| Image | | | | | | |
| Key | Image 372.jpg | Image 1653.jpg | Image2462.jpg | Image2795.jpg | Image619.jpg | Image811.jpg |
| Value | 0.1316 | 0.1573 | 0.1307 | 0.1611 | 0.0492 | −0.1527 |
| | 0.0381 | 0.0674 | 0.3209 | 0.2652 | 0.1592 | 0.1017 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Shin, Y.-S.; Kim, J. A Vision-Based Collision Monitoring System for Proximity of Construction Workers to Trucks Enhanced by Posture-Dependent Perception and Truck Bodies’ Occupied Space. Sustainability 2022, 14, 7934. https://doi.org/10.3390/su14137934
