A Multidimensional Evaluation of Technology-Enabled Assessment Methods during Online Education in Developing Countries

Abstract

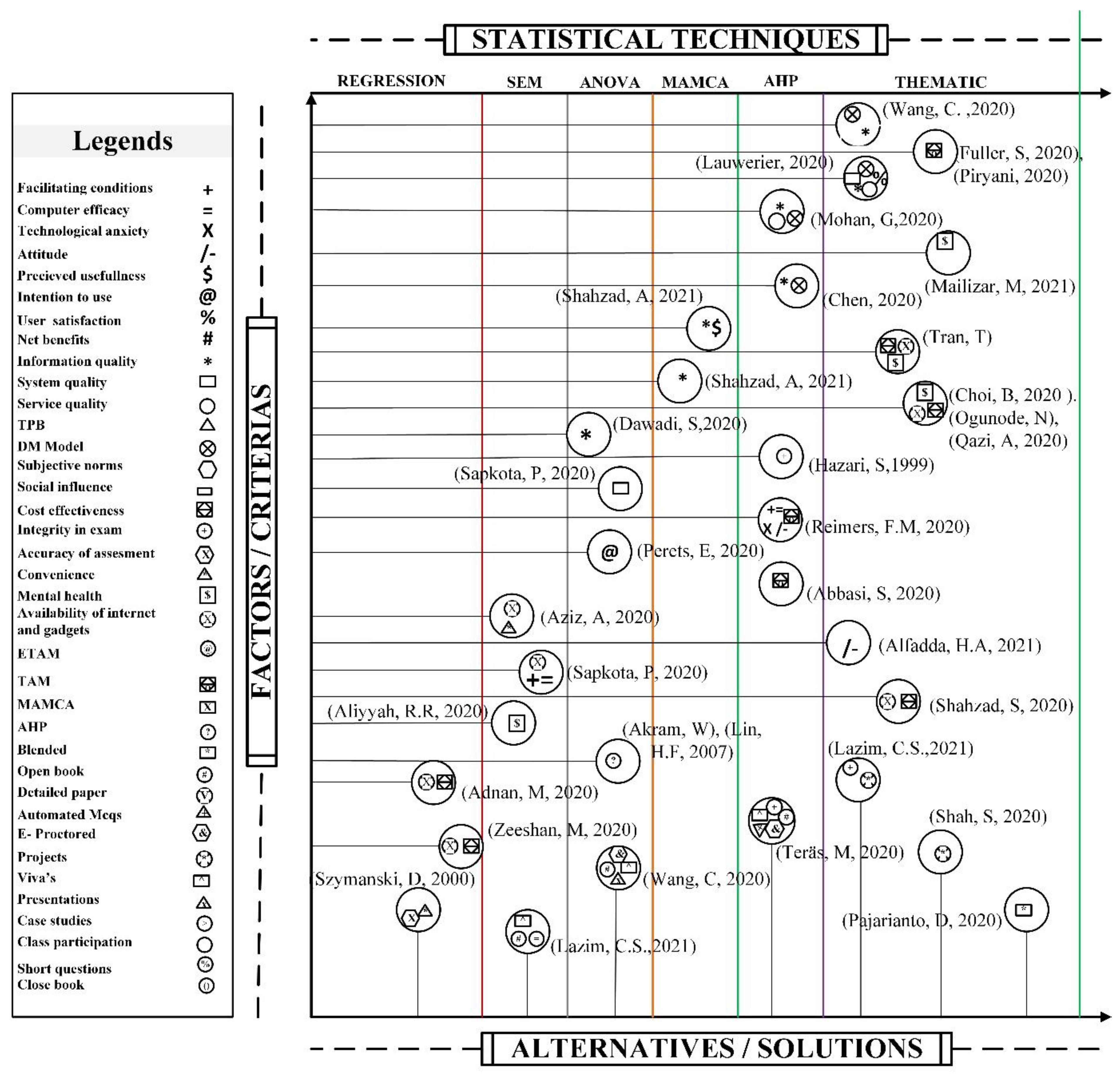

1. Introduction

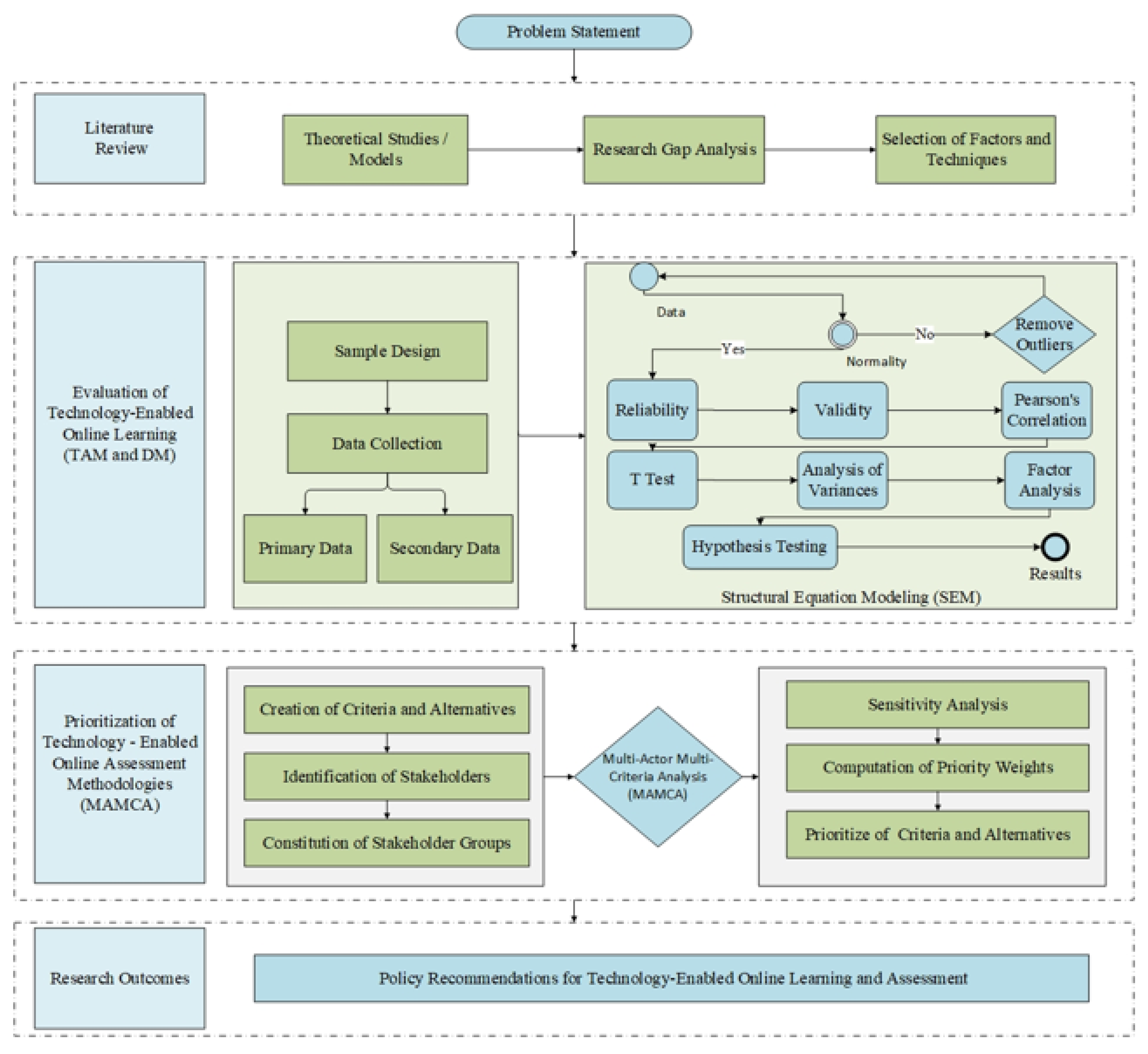

2. Materials and Methods

2.1. Phase I: Evaluation of Technology-Enabled Online Learning Using TAM and DM

Questionnaire Development and Participants of the Study

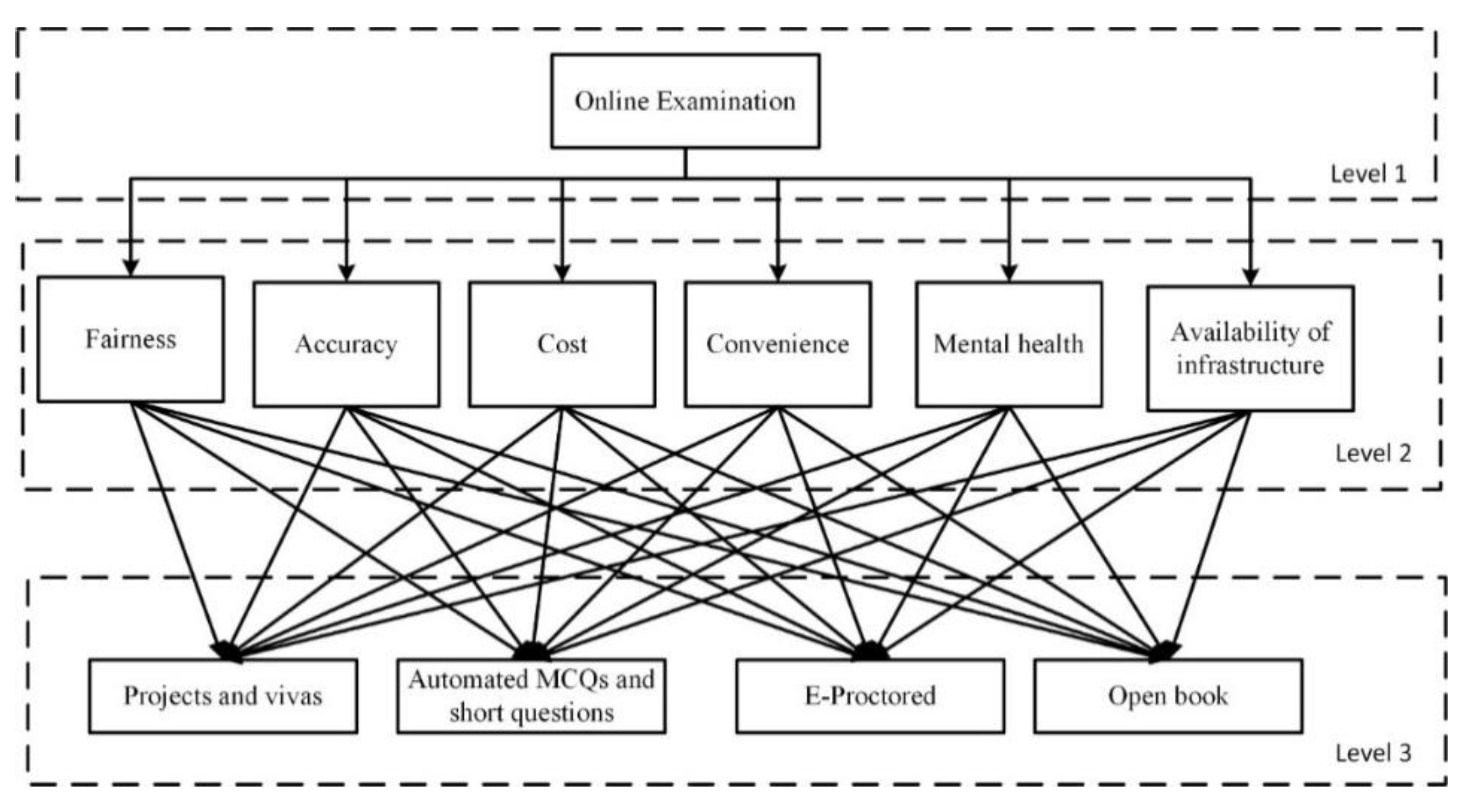

2.2. Phase II: Prioritization of Technology-Enabled Online Assessment Methodologies Using Multi-Actor Multi-Criteria Analysis (MAMCA)

2.3. Linkage of the Two Phases

3. Results and Discussion

3.1. Evaluation of Technology-Enabled Online Learning

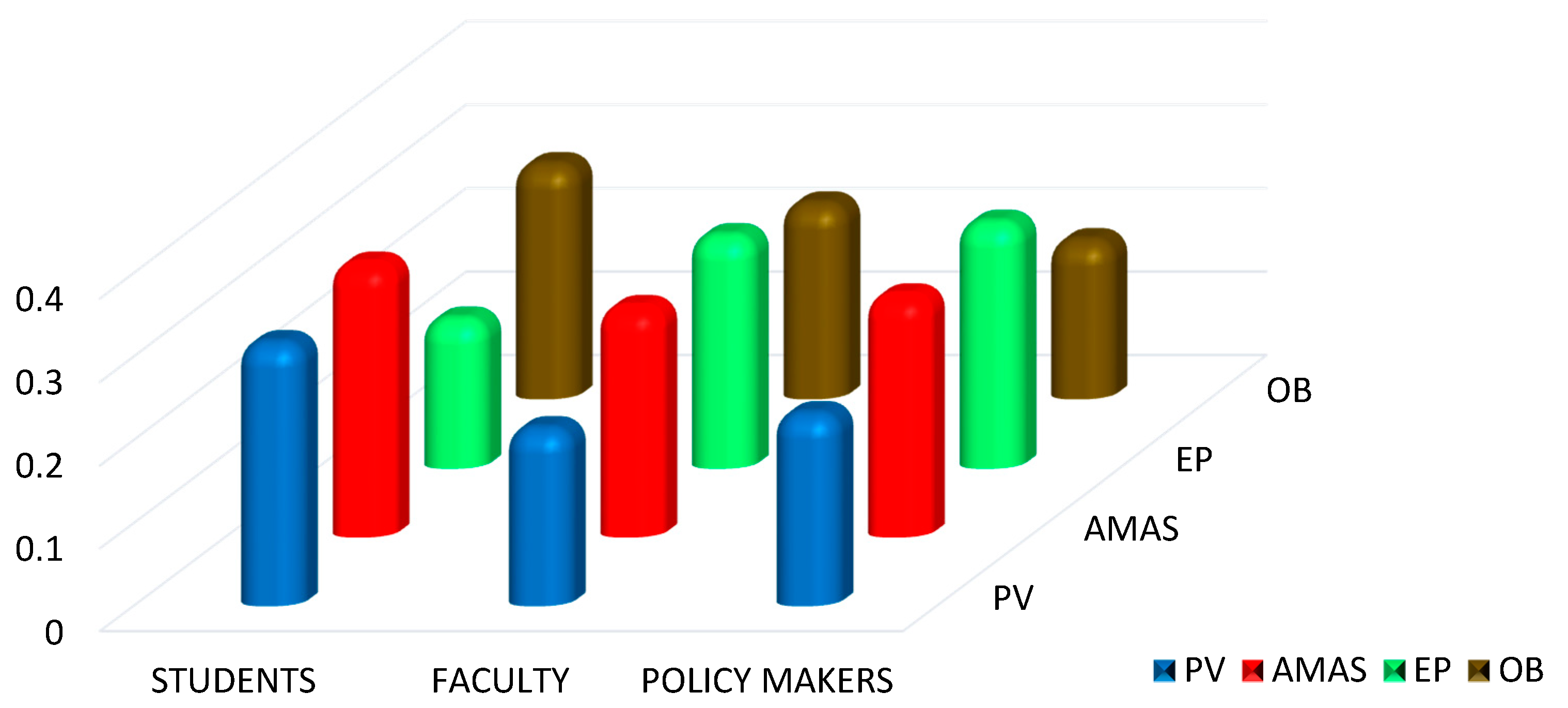

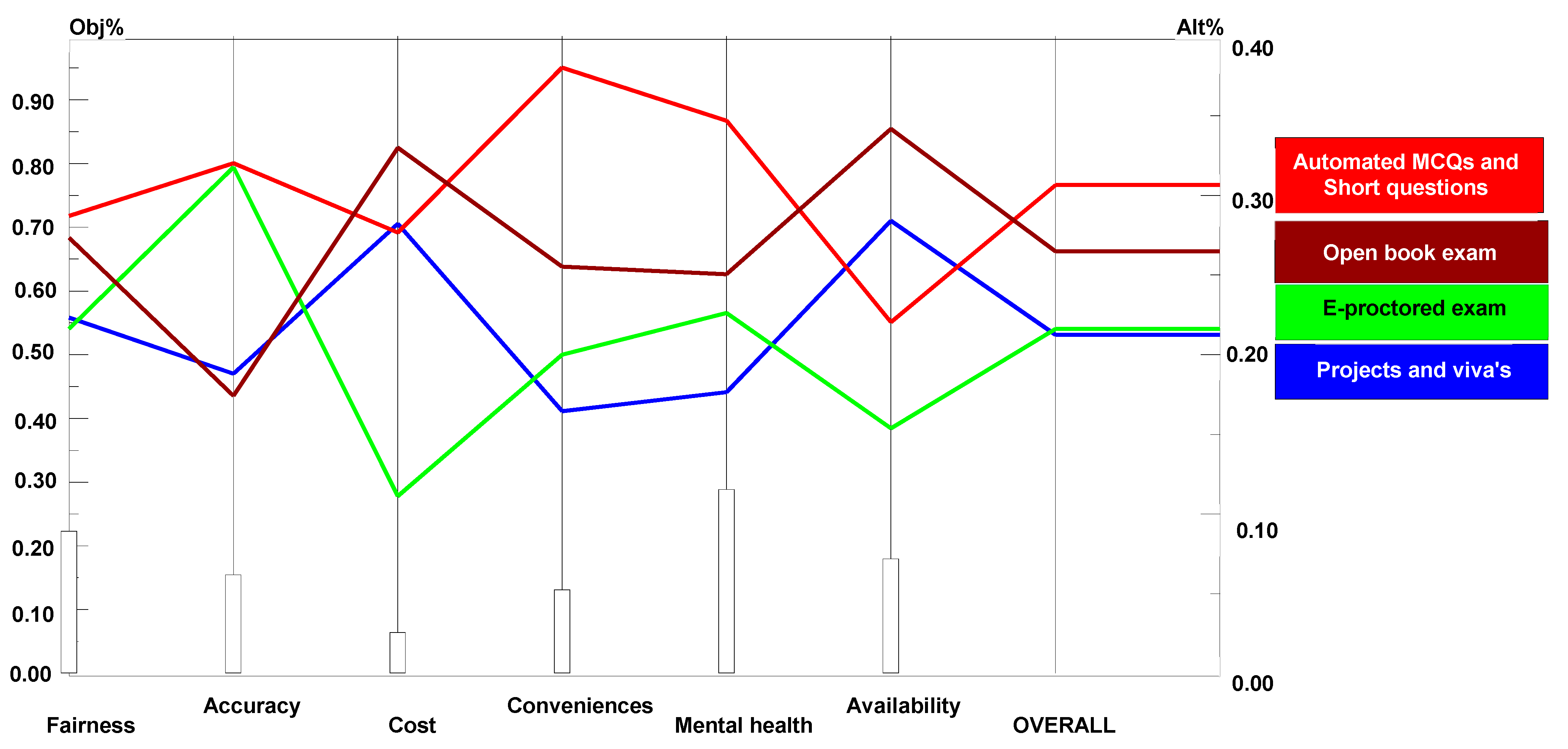

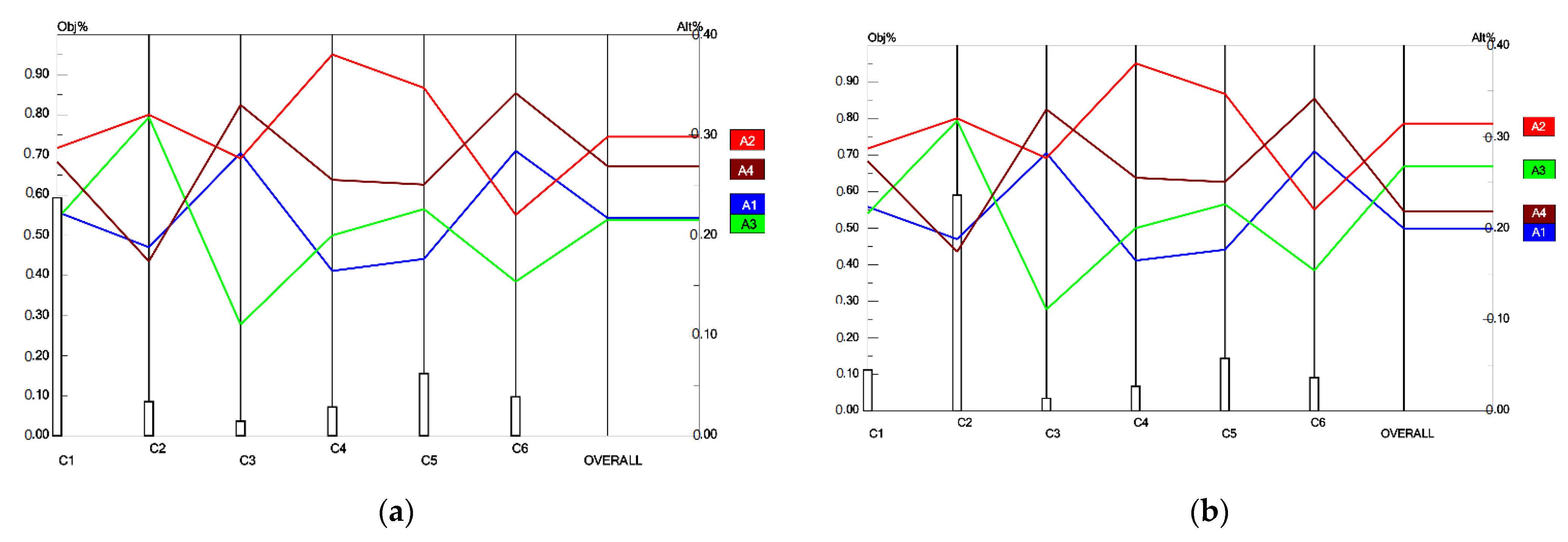

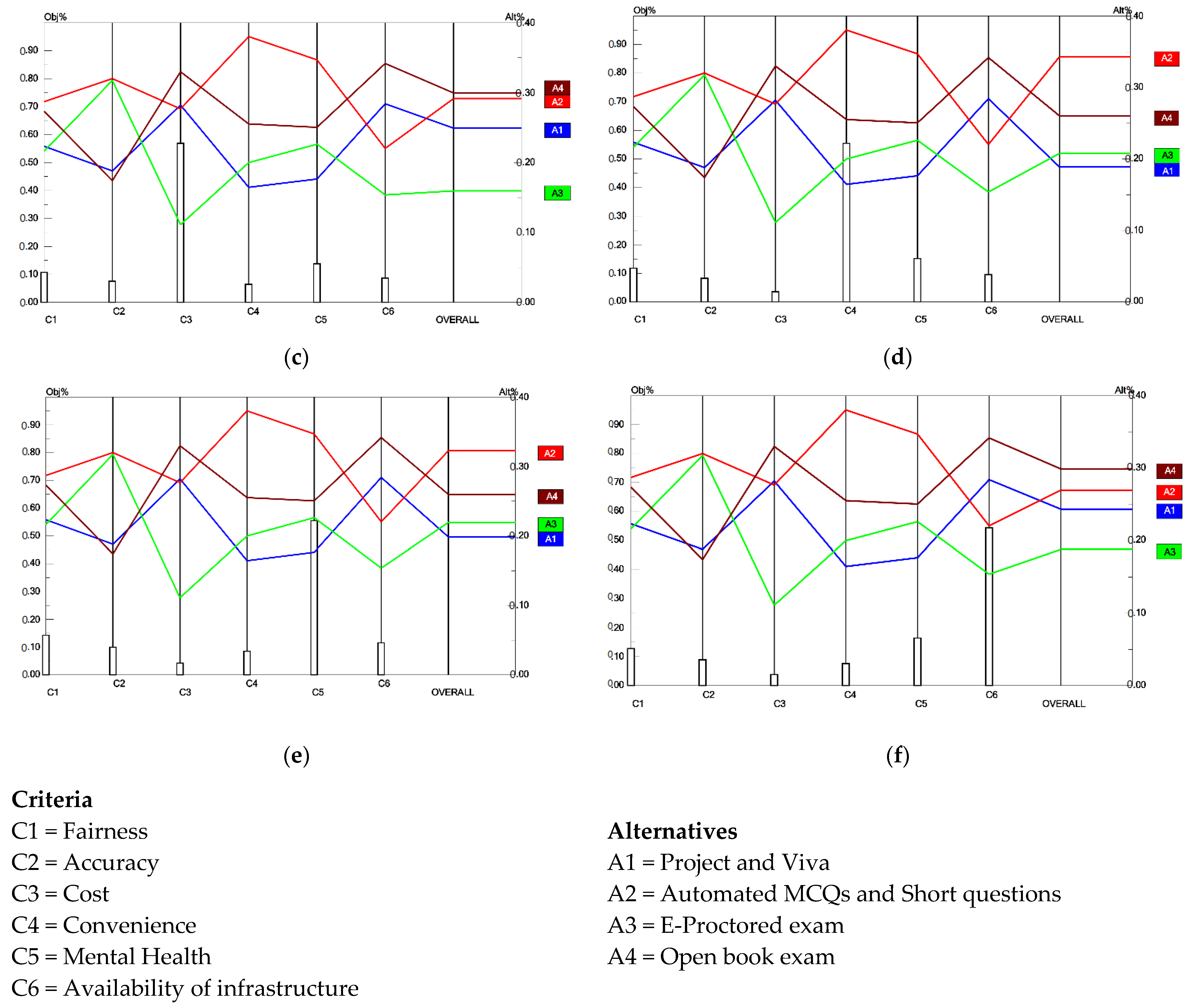

3.2. Prioritization of Technology-Enabled Online Assessment Methodologies

3.3. Analysing the Effect of Priority Variations

4. Conclusions and Recommendations

4.1. Policy Implication

- This research provides a multidimensional set of results that can serve as flexible policy guidance for local and international education policymakers when setting stakeholder priorities for launching online education in developing countries.

- Whenever online education becomes the only option for continuing education worldwide, an efficient system is needed that accounts for all relevant factors and meets the needs of all stakeholders. This study can inform the development of an effective recommendation system for online teaching and exam conduct.

- After weighing the evaluation criteria, stakeholders ranked Automated MCQs/Short Questions and Open Book exams as the top two modes of examination.

4.2. Limitations and Recommendations

- The results were obtained by solving the established models with data specific to the current scenario; political instability and financial uncertainty can change the model inputs and, consequently, the outcomes. While the second phase includes all three stakeholder groups, the first phase is limited to students. In addition, the opinions of the three stakeholder groups were weighted equally in the aggregate results, although the differences in their individual priorities are clearly highlighted and discussed.
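The equal-weighting choice described above can be made concrete with a small sketch. It assumes that each stakeholder group's analysis has already produced one overall score per assessment alternative, and it forms the aggregate as a weighted sum of those group-level scores. The group names (`group_a` to `group_c`), alternative labels (`alt_1` to `alt_3`), and all scores are hypothetical placeholders, not the study's data or its exact aggregation procedure.

```python
from typing import Dict, List

# Hypothetical group-level results (NOT the study's data): each stakeholder
# group's overall score for each placeholder assessment alternative.
GROUP_SCORES: Dict[str, Dict[str, float]] = {
    "group_a": {"alt_1": 0.50, "alt_2": 0.30, "alt_3": 0.20},
    "group_b": {"alt_1": 0.25, "alt_2": 0.45, "alt_3": 0.30},
    "group_c": {"alt_1": 0.40, "alt_2": 0.35, "alt_3": 0.25},
}


def aggregate(group_scores: Dict[str, Dict[str, float]],
              stakeholder_weights: Dict[str, float]) -> Dict[str, float]:
    """Weighted sum of group-level scores; stakeholder weights are normalised to sum to 1."""
    total = sum(stakeholder_weights[g] for g in group_scores)
    if total <= 0:
        raise ValueError("stakeholder weights must sum to a positive value")
    alternatives = next(iter(group_scores.values())).keys()
    return {
        alt: sum(stakeholder_weights[g] / total * group_scores[g][alt] for g in group_scores)
        for alt in alternatives
    }


def rank(agg: Dict[str, float]) -> List[str]:
    """Alternatives ordered from highest to lowest aggregate score."""
    return sorted(agg, key=agg.get, reverse=True)


# Equal weighting of the three groups.
equal = aggregate(GROUP_SCORES, {"group_a": 1, "group_b": 1, "group_c": 1})
# A priority variation: group_b counts three times as much as the others.
shifted = aggregate(GROUP_SCORES, {"group_a": 1, "group_b": 3, "group_c": 1})

print(rank(equal))    # ['alt_1', 'alt_2', 'alt_3']
print(rank(shifted))  # ['alt_2', 'alt_1', 'alt_3']
```

With these made-up numbers, giving one group more weight reverses the order of the top two alternatives, which is the kind of sensitivity that a priority-variation analysis is meant to expose.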

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. United Nations. Policy Brief: Education during COVID-19 and Beyond. 2020. Available online: https://www.un.org/development/desa/dspd/wp-content/uploads/sites/22/2020/08/sg_policy_brief_covid-19_and_education_august_2020.pdf (accessed on 20 May 2022).

2. Sapkota, P.P.; Narayangarh, C. Determining Factors of the Use of E-Learning during COVID-19 Lockdown among the College Students of Nepal: A Cross-Sectional Study; A Mini Research Report; Balkumari College: Chitwan, Nepal, 2020.

3. Nguyen, T. The effectiveness of online learning: Beyond no significant difference and future horizons. MERLOT J. Online Learn. Teach. 2015, 11, 309–319.

4. Shahzad, A.; Hassan, R.; Aremu, A.Y.; Hussain, A.; Lodhi, R.N. Effects of COVID-19 in E-learning on higher education institution students: The group comparison between male and female. Qual. Quant. 2020, 55, 805–826.

5. Scholtz, B.; Kapeso, M. An m-learning framework for ERP systems in higher education. Interact. Technol. Smart Educ. 2014, 11, 287–301.

6. Oyedotun, T.D. Sudden change of pedagogy in education driven by COVID-19: Perspectives and evaluation from a developing country. Res. Glob. 2020, 2, 100029.

7. Di Vaio, A.; Palladino, R.; Hassan, R.; Escobar, O. Artificial intelligence and business models in the sustainable development goals perspective: A systematic literature review. J. Bus. Res. 2020, 121, 283–314.

8. Hamadi, M.; El-Den, J.; Azam, S.; Sriratanaviriyakul, N. Integrating social media as cooperative learning tool in higher education classrooms: An empirical study. J. King Saud Univ.-Comput. Inf. Sci. 2021, 34, 3722–3731.

9. Rapanta, C.; Botturi, L.; Goodyear, P.; Guàrdia, L.; Koole, M. Online university teaching during and after the COVID-19 crisis: Refocusing teacher presence and learning activity. Postdigital Sci. Educ. 2020, 2, 923–945.

10. Lazim, C.S.L.M.; Ismail, N.D.B.; Tazilah, M.D.A.K. Application of technology acceptance model (TAM) towards online learning during COVID-19 pandemic: Accounting students perspective. Int. J. Bus. Econ. Law 2021, 24, 13–20.

11. Daradoumis, T.; Rodriguez-Ardura, I.; Faulin, J.; Juan, A.; Xhafa, F.; Lopez, F.J.M. Customer Relationship Management applied to higher education: Developing an e-monitoring system to improve relationships in electronic learning environments. Int. J. Serv. Technol. Manag. 2010, 14, 103–125.

12. Almaiah, M.A.; Al-Khasawneh, A.; Althunibat, A. Exploring the critical challenges and factors influencing the E-learning system usage during COVID-19 pandemic. Educ. Inf. Technol. 2020, 25, 5261–5280.

13. Tănase, F.-D.; Demyen, S.; Manciu, V.-C.; Tănase, A.-C. Online Education in the COVID-19 Pandemic—Premise for Economic Competitiveness Growth? Sustainability 2022, 14, 3503.

14. Qazi, A.; Naseer, K.; Qazi, J.; AlSalman, H.; Naseem, U.; Yang, S.; Hardaker, G.; Gumaei, A. Conventional to online education during COVID-19 pandemic: Do develop and underdeveloped nations cope alike. Child. Youth Serv. Rev. 2020, 119, 105582.

15. Ismail, A.; Kuppusamy, K. Web accessibility investigation and identification of major issues of higher education websites with statistical measures: A case study of college websites. J. King Saud Univ.-Comput. Inf. Sci. 2019, 34, 901–911.

16. Almaiah, M.A.; Al Mulhem, A. A conceptual framework for determining the success factors of e-learning system implementation using Delphi technique. J. Theor. Appl. Inf. Technol. 2018, 96, 5962–5976.

17. Ang, L.; Buttle, F. Customer retention management processes: A quantitative study. Eur. J. Mark. 2006, 40, 83–99.

18. Gibson, S.G.; Harris, M.L.; Colaric, S.M. Technology acceptance in an academic context: Faculty acceptance of online education. J. Educ. Bus. 2008, 83, 355–359.

19. Abbasi, S.; Ayoob, T.; Malik, A.; Memon, S.I. Perceptions of students regarding E-learning during COVID-19 at a private medical college. Pak. J. Med. Sci. 2020, 36, S57–S61.

20. Alfadda, H.A.; Mahdi, H.S. Measuring students’ use of zoom application in language course based on the technology acceptance model (TAM). J. Psycholinguist. Res. 2021, 50, 883–900.

21. Reimers, F.M.; Schleicher, A. A Framework to Guide an Education Response to the COVID-19 Pandemic of 2020; OECD: Paris, France, 2020.

22. DeLone, W.H.; McLean, E.R. Information systems success: The quest for the dependent variable. Inf. Syst. Res. 1992, 3, 60–95.

23. DeLone, W.H.; McLean, E.R. The DeLone and McLean model of information systems success: A ten-year update. J. Manag. Inf. Syst. 2003, 19, 9–30.

24. Ng, H.H.; Tan, H.H. An annotated checklist of the non-native freshwater fish species in the reservoirs of Singapore. COSMOS 2010, 6, 95–116.

25. Petter, S.; Delone, W.; McLean, E.R. Information Systems Success: The Quest for the Independent Variables. J. Manag. Inf. Syst. 2013, 29, 7–62.

26. Chen, T.; Peng, L.; Yin, X.; Rong, J.; Yang, J.; Cong, G. Analysis of User Satisfaction with Online Education Platforms in China during the COVID-19 Pandemic. Healthcare 2020, 8, 200.

27. Dawadi, S.; Giri, R.A.; Simkhada, P. Impact of COVID-19 on the Education Sector in Nepal: Challenges and Coping Strategies. 2020; 16p. Available online: https://files.eric.ed.gov/fulltext/ED609894.pdf (accessed on 18 April 2022).

28. Lauwerier, T. Reactions to COVID-19 from International Cooperation in Education: Between Continuities and Unexpected Changes. Blog edu‘C’oop. 2020. Available online: https://archive-ouverte.unige.ch/unige:138268 (accessed on 18 April 2022).

29. Mohan, G.; Mccoy, S.; Carroll, E.; Mihut, G.; Lyons, S.; Domhnaill, C.M. Learning for All? Second-Level Education in Ireland during COVID-19. ESRI Survey and Statistical Report Series 92. 2020. Available online: https://www.researchgate.net/profile/Selina-Mccoy/publication/342453663_Learning_For_All_Second-Level_Education_in_Ireland_During_COVID-19/links/5ef52ec4458515505072782b/Learning-For-All-Second-Level-Education-in-Ireland-During-COVID-19.pdf (accessed on 19 April 2022).

30. Teräs, M.; Suoranta, J.; Teräs, H.; Curcher, M. Post-COVID-19 education and education technology ‘solutionism’: A seller’s market. Postdigital Sci. Educ. 2020, 2, 863–878.

31. Perets, E.A.; Chabeda, D.; Gong, A.Z.; Huang, X.; Fung, T.S.; Ng, K.Y.; Bathgate, M.; Yan, E.C.Y. Impact of the Emergency Transition to Remote Teaching on Student Engagement in a Non-STEM Undergraduate Chemistry Course in the Time of COVID-19. J. Chem. Educ. 2020, 97, 2439–2447.

32. Hoodbhoy, P. Cheating on Online Exams. Dawn, 23 January 2021.

33. Star, W. HEC Allows Universities to Hold Online Exams after Days-Long Protests by Students. Dawn, 27 January 2021.

34. HEC. HEC Policy Guidance Series on COVID-19. Guidance on Assessments and Examinations; Policy Guidance No. 6; HEC: Islamabad, Pakistan, 2020.

35. Szymanski, D.M.; Hise, R.T. E-satisfaction: An initial examination. J. Retail. 2000, 76, 309–322.

36. Güldeş, M.; Gürcan, F.; Atici, U.; Şahin, C. A fuzzy multi-criteria decision-making method for selection of criteria for an e-learning platform. Eur. J. Sci. Technol. 2022, 797–806.

37. Şahin, S.; Kargın, A.; Yücel, M. Hausdorff Measures on Generalized Set Valued Neutrosophic Quadruple Numbers and Decision Making Applications for Adequacy of Online Education. Neutrosophic Sets Syst. 2021, 40, 86–116.

38. Xu, X.; Xie, J.; Wang, H.; Lin, M. Online education satisfaction assessment based on cloud model and fuzzy TOPSIS. Appl. Intell. 2022.

39. Nanath, K.; Sajjad, A.; Kaitheri, S. Decision-making system for higher education university selection: Comparison of priorities pre-and post-COVID-19. J. Appl. Res. High. Educ. 2021, 14, 347–365.

40. Creswell, J.W. A Concise Introduction to Mixed Methods Research; SAGE Publications: London, UK, 2021.

41. Cheung, R.; Vogel, D. Predicting user acceptance of collaborative technologies: An extension of the technology acceptance model for e-learning. Comput. Educ. 2013, 63, 160–175.

42. Tosuntaş, Ş.B.; Karadağ, E.; Orhan, S. The factors affecting acceptance and use of interactive whiteboard within the scope of FATIH project: A structural equation model based on the Unified Theory of acceptance and use of technology. Comput. Educ. 2015, 81, 169–178.

43. Chu, T.-H.; Chen, Y.-Y. With good we become good: Understanding e-learning adoption by the theory of planned behavior and group influences. Comput. Educ. 2016, 92, 37–52.

44. Zogheib, B.; Rabaa’I, A.; Zogheib, S.; Elsaheli, A. University Student Perceptions of Technology Use in Mathematics Learning. J. Inf. Technol. Educ. Res. 2015, 14, 417–438.

45. Kisanjara, S.; Tossy, T.M.; Sife, A.S.; Msanjila, S.S. An integrated model for measuring the impacts of e-learning on students’ achievement in developing countries. Int. J. Educ. Dev. Using Inf. Commun. Technol. 2017, 13, 109–127.

46. Ramli, N.H.H.; Alavi, M.; Mehrinezhad, S.A.; Ahmadi, A. Academic Stress and Self-Regulation among University Students in Malaysia: Mediator Role of Mindfulness. Behav. Sci. 2018, 8, 12.

47. Pajarianto, D. Study from home in the middle of the COVID-19 pandemic: Analysis of religiosity, teacher, and parents support against academic stress. Talent. Dev. Excell. 2020, 12, 1791–1807.

48. Bilal, M.; Ali, M.K.; Qazi, U.; Hussain, S.; Jahanzaib, M.; Wasim, A. A multifaceted evaluation of hybrid energy policies: The case of sustainable alternatives in special Economic Zones of the China Pakistan Economic Corridor (CPEC). Sustain. Energy Technol. Assess. 2022, 52, 101958.

49. Hasnain, S.; Ali, M.K.; Akhter, J.; Ahmed, B.; Abbas, N. Selection of an Industrial Boiler for a Soda Ash Plant using Analytic Hierarchy Process and TOPSIS Approaches. Case Stud. Therm. Eng. 2020, 19, 100636.

50. Ahmad, T.; Ali, M.K.; Malik, K.A.; Jahanzaib, M. Sustaining Power Production in Hydropower Stations of Developing Countries. Sustain. Energy Technol. Assess. 2020, 37, 100637.

51. Akram, W.; Adeel, S.; Tabassum, M.; Jiang, Y.; Chandio, A.; Yasmin, I. Scenario Analysis and Proposed Plan for Pakistan Universities–COVID–19: Application of Design Thinking Model; Cambridge Open Engage: Cambridge, UK, 2020.

52. AlQudah, A.A. Accepting Moodle by academic staff at the University of Jordan: Applying and extending TAM in technical support factors. Eur. Sci. J. 2014, 10, 183–200.

53. Hair, J.F.; Risher, J.J.; Sarstedt, M.; Ringle, C.M. When to use and how to report the results of PLS-SEM. Eur. Bus. Rev. 2019, 31, 2–24.

54. DeVellis, R.F. Scale Development: Theory and Applications, 3rd ed.; Sage Publications: Thousand Oaks, CA, USA, 2016; Volume 26.

55. Ozili, P.K. The Acceptable R-Square in Empirical Modeling for Social Science Research. 2022. Available online: https://ssrn.com/abstract=4128165 (accessed on 19 April 2022).

56. Shahrabi, M.A.; Ahaninjan, A.; Nourbakhsh, H.; Ashlubolagh, M.A.; Abdolmaleki, J.; Mohamadi, M. Assessing psychometric reliability and validity of Technology Acceptance Model (TAM) among faculty members at Shahid Beheshti University. Manag. Sci. Lett. 2013, 3, 2295–2300.

57. Choi, B.; Jegatheeswaran, L.; Minocha, A.; AlHilani, M.; Nakhoul, M.; Mutengesa, E. The impact of the COVID-19 pandemic on final year medical students in the United Kingdom: A national survey. BMC Med. Educ. 2020, 20, 206.

58. Hasan, L.F.; Elmetwaly, A.; Zulkarnain, S. Make the educational decisions using the analytical hierarchy process AHP in the light of the corona pandemic. Eng. Appl. Artif. Intell. 2020, 2, 1–13.

59. Mailizar, M.; Burg, D.; Maulina, S. Examining university students’ behavioural intention to use e-learning during the COVID-19 pandemic: An extended TAM model. Educ. Inf. Technol. 2021, 26, 7057–7077.

60. Shah, S.; Diwan, S.; Kohan, L.; Rosenblum, D.; Gharibo, C.; Soin, A.; Sulindro, A.; Nguyen, Q.; Provenzano, D.A. The technological impact of COVID-19 on the future of education and health care delivery. Pain Physician 2020, 23, S367–S380.

61. Siraj, H.H.; Salam, A.; Roslan, R.; Hasan, N.A.; Jin, T.H.; Othman, M.N. Stress and Its Association with the Academic Performance of Undergraduate Fourth Year Medical Students at Universiti Kebangsaan Malaysia. IIUM Med. J. Malays. 2014, 13.

62. Wang, C.; Zhao, H. The impact of COVID-19 on anxiety in Chinese university students. Front. Psychol. 2020, 11, 1168.

63. Kaden, U. COVID-19 School Closure-Related Changes to the Professional Life of a K–12 Teacher. Educ. Sci. 2020, 10, 165.

64. Fuller, S.; Vaporciyan, A.; Dearani, J.A.; Stulak, J.M.; Romano, J.C. COVID-19 Disruption in Cardiothoracic Surgical Training: An Opportunity to Enhance Education. Ann. Thorac. Surg. 2020, 110, 1443–1446.

65. Basnet, S.; Basnet, H.B.; Bhattarai, D.K. Challenges and Opportunities of Online Education during COVID-19 Situation in Nepal. Rupantaran Multidiscip. J. 2021, 5, 89–99.

66. Tran, T.; Hoang, A.-D.; Nguyen, Y.-C.; Nguyen, L.-C.; Ta, N.-T.; Pham, Q.-H.; Pham, C.-X.; Le, Q.-A.; Dinh, V.-H.; Nguyen, T.-T. Toward Sustainable Learning during School Suspension: Socioeconomic, Occupational Aspirations, and Learning Behavior of Vietnamese Students during COVID-19. Sustainability 2020, 12, 4195.

67. Aziz, A.; Sohail, M. A bumpy road to online teaching: Impact of COVID-19 on medical education. Ann. King Edw. Med. Univ. 2020, 26, 181–186.

68. Bisht, R.K.; Jasola, S.; Bisht, I.P. Acceptability and challenges of online higher education in the era of COVID-19: A study of students’ perspective. Asian Educ. Dev. Stud. 2020, 11, 401–414.

69. Clark, R.A.; Jones, D. A comparison of traditional and online formats in a public speaking course. Commun. Educ. 2001, 50, 109–124.

70. James, R. Tertiary student attitudes to invigilated, online summative examinations. Int. J. Educ. Technol. High. Educ. 2016, 13, 19.

71. Muthuprasad, T.; Aiswarya, S.; Aditya, K.; Jha, G.K. Students’ perception and preference for online education in India during COVID-19 pandemic. Soc. Sci. Humanit. Open 2021, 3, 100101.

72. Hazari, S.; Schnorr, D. Leveraging student feedback to improve teaching in web-based courses. Journal 1999, 26, 30–38.

73. Muhammad, A.; Shaikh, A.; Naveed, Q.N.; Qureshi, M.R.N. Factors Affecting Academic Integrity in E-Learning of Saudi Arabian Universities. An Investigation Using Delphi and AHP. IEEE Access 2020, 8, 16259–16268.

74. Ogunode, N.J. Impact of COVID-19 on Private Secondary School Teachers in FCT, Abuja, Nigeria. Electron. Res. J. Behav. Sci. 2020, 3, 72–83.

75. Adnan, M.; Anwar, K. Online Learning amid the COVID-19 Pandemic: Students’ Perspectives. J. Pedagog. Sociol. Psychol. 2020, 2, 45–51.

76. Zeeshan, M.; Chaudhry, A.G.; Khan, S.E. Pandemic preparedness and techno stress among faculty of DAIs in COVID-19. SJESR 2020, 3, 383–396.

77. Cassidy, S. Assessing ‘inexperienced’ students’ ability to self-assess: Exploring links with learning style and academic personal control. Assess. Eval. High. Educ. 2007, 32, 313–330.

78. García, P.; Amandi, A.; Schiaffino, S.; Campo, M. Evaluating Bayesian networks’ precision for detecting students’ learning styles. Comput. Educ. 2007, 49, 794–808.

79. Huang, Y.-M.; Lin, Y.-T.; Cheng, S.-C. An adaptive testing system for supporting versatile educational assessment. Comput. Educ. 2009, 52, 53–67.

80. Lin, H.-F. An application of fuzzy AHP for evaluating course website quality. Comput. Educ. 2010, 54, 877–888.

81. Rasmitadila, R.; Aliyyah, R.R.; Rachmadtullah, R.; Samsudin, A.; Syaodih, E.; Nurtanto, M.; Tambunan, A.R.S. The Perceptions of Primary School Teachers of Online Learning during the COVID-19 Pandemic Period: A Case Study in Indonesia. J. Ethn. Cult. Stud. 2020, 7, 90–109.

82. Durbach, I. Scenario planning in the analytic hierarchy process. Futures Foresight Sci. 2019, 1, e1668.

| Factors | Abbreviation | Relationship | Hypothesis |
|---|---|---|---|
| Attitude | ATT | ATT -> E | H1: Attitude has an impact on the evaluation of technology-enabled online learning. |
| Computer Efficacy | CE | CE -> E | H2: Computer Efficacy has an impact on the evaluation of technology-enabled online learning. |
| Facilitating Conditions | FC | FC -> E | H3: Facilitating Conditions have an impact on the evaluation of technology-enabled online learning. |
| Information Quality | IQ | IQ -> IU | H4: Information Quality has an impact on Intention to Use. |
| | | IQ -> US | H5: Information Quality has an impact on User Satisfaction. |
| Intention to Use | IU | IU -> E | H6: Intention to Use has an impact on the evaluation of technology-enabled online learning. |
| Service Quality | SQ | SQ -> IU | H7: Service Quality has an impact on Intention to Use. |
| | | SQ -> US | H8: Service Quality has an impact on User Satisfaction. |
| System Quality | SYQ | SYQ -> IU | H9: System Quality has an impact on Intention to Use. |
| | | SYQ -> US | H10: System Quality has an impact on User Satisfaction. |
| Technological Anxiety | TA | TA -> E | H11: Technological Anxiety has an impact on the evaluation of technology-enabled online learning. |
| User Satisfaction | US | US -> E | H12: User Satisfaction has an impact on the evaluation of technology-enabled online learning. |
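In a PLS-SEM structural model, a construct that receives at least one hypothesised path is endogenous, and R² is reported only for endogenous constructs. The sketch below encodes H1 to H12 from the table as (predictor, outcome) pairs and derives both groups; the helper names `predictors` and `exogenous` are illustrative, not part of any PLS-SEM package. Under these paths only IU, US, and E are endogenous, which is consistent with the constructs that carry an R² value in the measurement model.

```python
from typing import Dict, List, Tuple

# Hypothesised structural paths H1-H12 as (predictor, outcome).
PATHS: Dict[str, Tuple[str, str]] = {
    "H1": ("ATT", "E"), "H2": ("CE", "E"), "H3": ("FC", "E"),
    "H4": ("IQ", "IU"), "H5": ("IQ", "US"), "H6": ("IU", "E"),
    "H7": ("SQ", "IU"), "H8": ("SQ", "US"), "H9": ("SYQ", "IU"),
    "H10": ("SYQ", "US"), "H11": ("TA", "E"), "H12": ("US", "E"),
}


def predictors(paths: Dict[str, Tuple[str, str]]) -> Dict[str, List[str]]:
    """Map each endogenous construct to the sorted list of constructs predicting it."""
    result: Dict[str, List[str]] = {}
    for src, dst in paths.values():
        result.setdefault(dst, []).append(src)
    return {dst: sorted(srcs) for dst, srcs in result.items()}


def exogenous(paths: Dict[str, Tuple[str, str]]) -> List[str]:
    """Constructs that predict others but are never predicted themselves."""
    sources = {src for src, _ in paths.values()}
    targets = {dst for _, dst in paths.values()}
    return sorted(sources - targets)


endogenous = sorted(predictors(PATHS))
print(endogenous)        # ['E', 'IU', 'US']
print(exogenous(PATHS))  # ['ATT', 'CE', 'FC', 'IQ', 'SQ', 'SYQ', 'TA']
```

IU and US are both outcomes (of IQ, SQ, and SYQ) and predictors (of E), so they act as intermediate constructs in the model.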

| Variables | Indicators | Frequency | Percentage (%) |
|---|---|---|---|
| Gender | Female | 262 | 31.3 |
| | Male | 575 | 68.7 |
| Institute type | Public | 681 | 81.4 |
| | Private | 156 | 18.6 |
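As a quick arithmetic check, the percentages in the respondent profile follow directly from the frequencies, and both variables sum to the same total of 837 respondents. The dictionary layout and the `percentages` helper are illustrative only.

```python
from typing import Dict, Tuple

# Frequencies from the respondent-profile table.
PROFILE: Dict[str, Dict[str, int]] = {
    "Gender": {"Female": 262, "Male": 575},
    "Institute type": {"Public": 681, "Private": 156},
}


def percentages(counts: Dict[str, int]) -> Tuple[int, Dict[str, float]]:
    """Return the group total and each category's share in percent, rounded to one decimal."""
    n = sum(counts.values())
    return n, {k: round(100 * v / n, 1) for k, v in counts.items()}


for variable, counts in PROFILE.items():
    n, shares = percentages(counts)
    print(variable, n, shares)
# Gender 837 {'Female': 31.3, 'Male': 68.7}
# Institute type 837 {'Public': 81.4, 'Private': 18.6}
```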

| Constructs | Indicators | Loadings (>0.50) | Cronbach’s Alpha (0.7–0.88) | R² | Composite Reliability (>0.82) | Average Variance Extracted (>0.50) |
|---|---|---|---|---|---|---|
| ATT | ATT1 | 0.842 | 0.72 | | 0.82 | 0.54 |
| | ATT2 | 0.578 | | | | |
| | ATT3 | 0.647 | | | | |
| | ATT4 | 0.837 | | | | |
| TA | TA1 | 0.909 | 0.7 | | 0.86 | 0.75 |
| | TA2 | 0.823 | | | | |
| CE | CE1 | 0.912 | 0.82 | | 0.92 | 0.85 |
| | CE2 | 0.929 | | | | |
| FC | FC1 | 0.892 | 0.7 | | 0.87 | 0.77 |
| | FC2 | 0.861 | | | | |
| IQ | IQ1 | 0.860 | 0.73 | | 0.88 | 0.78 |
| | IQ2 | 0.910 | | | | |
| SYQ | SYQ1 | 0.898 | 0.7 | | 0.87 | 0.76 |
| | SYQ2 | 0.850 | | | | |
| SQ | SQ1 | 0.849 | 0.83 | | 0.9 | 0.74 |
| | SQ2 | 0.885 | | | | |
| | SQ3 | 0.854 | | | | |
| IU | IU1 | 0.865 | 0.72 | 0.291 | 0.88 | 0.78 |
| | IU2 | 0.900 | | | | |
| US | US1 | 0.914 | 0.88 | 0.729 | 0.92 | 0.74 |
| | US2 | 0.838 | | | | |
| | US3 | 0.916 | | | | |
| | US4 | 0.766 | | | | |
| E | E1 | 0.886 | 0.85 | 0.879 | 0.9 | 0.69 |
| | E2 | 0.781 | | | | |
| | E3 | 0.820 | | | | |
| | E4 | 0.828 | | | | |
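Composite reliability and AVE can be recomputed from the standardised loadings with the conventional formulas used in PLS-SEM reporting: CR = (Σλ)² / ((Σλ)² + Σ(1 − λ²)) and AVE = Σλ²/k for a construct with k indicators. The sketch below assumes these conventional definitions (that the study's software computed them the same way is an assumption); with the loadings from the table, it reproduces the reported CR and AVE values to two decimals. Cronbach's alpha cannot be recovered this way, because it needs the item correlations rather than the loadings.

```python
from typing import Dict, List

# Standardised outer loadings from the measurement-model table.
LOADINGS: Dict[str, List[float]] = {
    "ATT": [0.842, 0.578, 0.647, 0.837],
    "TA": [0.909, 0.823],
    "CE": [0.912, 0.929],
    "FC": [0.892, 0.861],
    "IQ": [0.860, 0.910],
    "SYQ": [0.898, 0.850],
    "SQ": [0.849, 0.885, 0.854],
    "IU": [0.865, 0.900],
    "US": [0.914, 0.838, 0.916, 0.766],
    "E": [0.886, 0.781, 0.820, 0.828],
}


def composite_reliability(loadings: List[float]) -> float:
    """CR = (sum of loadings)^2 / ((sum of loadings)^2 + sum of indicator error variances)."""
    squared_sum = sum(loadings) ** 2
    error_variance = sum(1 - x ** 2 for x in loadings)
    return squared_sum / (squared_sum + error_variance)


def average_variance_extracted(loadings: List[float]) -> float:
    """AVE = mean of the squared standardised loadings."""
    return sum(x ** 2 for x in loadings) / len(loadings)


for construct, lam in LOADINGS.items():
    cr = composite_reliability(lam)
    ave = average_variance_extracted(lam)
    print(f"{construct}: CR={cr:.2f} AVE={ave:.2f}")
```

For ATT, for example, Σλ = 2.904 and Σλ² ≈ 2.162, giving CR ≈ 0.82 and AVE ≈ 0.54; the lower ATT2 and ATT3 loadings are what keep this construct's AVE close to the 0.50 threshold.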

| Hypothesis | Relationship | Std. Beta | Std. Error | t-Value | Decision | p-Value |
|---|---|---|---|---|---|---|
| H1 | ATT -> E | 0.07 | 0.03 | 2.44 | Supported | 0.02 |
| H2 | CE -> E | 0.08 | 0.03 | 2.5 | Supported | 0.01 |
| H3 | FC -> E | 0.11 | 0.03 | 3.6 | Supported | <0.01 |
| H4 | IQ -> IU | 0.17 | 0.05 | 3.26 | Supported | <0.01 |
| H5 | IQ -> US | 0.21 | 0.03 | 6.07 | Supported | <0.01 |
| H6 | IU -> E | 0.05 | 0.03 | 2.03 | Supported | 0.04 |
| H7 | SQ -> IU | 0.2 | 0.06 | 3.52 | Supported | <0.01 |
| H8 | SQ -> US | 0.29 | 0.04 | 7.25 | Supported | <0.01 |
| H9 | SYQ -> IU | 0.22 | 0.05 | 4.32 | Supported | <0.01 |
| H10 | SYQ -> US | 0.45 | 0.03 | 13.25 | Supported | <0.01 |
| H11 | TA -> E | 0.02 | 0.02 | 1.13 | Not Supported | 0.26 |
| H12 | US -> E | 0.65 | 0.03 | 20.4 | Supported | <0.01 |
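The Decision column follows from comparing each t-value against the two-tailed 5% level. A minimal sketch, assuming a standard-normal approximation to the bootstrap t-distribution, converts each reported t-value into a two-tailed p-value with p = erfc(|t|/√2) and applies α = 0.05. Because the t-values are rounded and the reported p-values were presumably obtained by bootstrapping, the approximation can differ in the second decimal place (H1 gives about 0.015 against the reported 0.02), but every decision matches the table.

```python
import math
from typing import Dict

# t-values from the structural-model table.
T_VALUES: Dict[str, float] = {
    "H1": 2.44, "H2": 2.5, "H3": 3.6, "H4": 3.26, "H5": 6.07, "H6": 2.03,
    "H7": 3.52, "H8": 7.25, "H9": 4.32, "H10": 13.25, "H11": 1.13, "H12": 20.4,
}
ALPHA = 0.05  # two-tailed significance level


def two_tailed_p(t: float) -> float:
    """Two-tailed p-value under a standard-normal approximation: 2 * (1 - Phi(|t|))."""
    return math.erfc(abs(t) / math.sqrt(2))


p_values = {h: two_tailed_p(t) for h, t in T_VALUES.items()}
decisions = {h: "Supported" if p < ALPHA else "Not Supported" for h, p in p_values.items()}

for h in T_VALUES:
    print(f"{h}: p~{p_values[h]:.3f} -> {decisions[h]}")
```

Equivalently, a path is supported at the 5% level whenever |t| exceeds about 1.96; H11 (t = 1.13) is the only path that falls short.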

| Factors | Description | Rationale | Supporting Evidence from Previous Studies on Education |
|---|---|---|---|
| Mental Health | Effect of online assessments on the mental health of students and faculty. | Because students and faculty are not yet accustomed to online assessments, such assessments may seriously affect their mental health and performance. | [46,47,57,58,59,60,61,62] |
| Cost | All types of cost associated with the different modes of conducting online exams. | The system must be cost-effective to be sustainable. Weaker financial circumstances make cost especially critical in developing countries. | [12,16,17,58,63,64,65,66] |
| Convenience | The comfort level of students and faculty while online exams are conducted. | Convenience affects both learning and assessment performance. | [67,68,69,70,71] |
| Integrity and Fairness | Maintaining exam integrity and preventing the use of unfair means. | Integrity is a crucial parameter in the conduct of any exam. | [32,33,72,73] |
| Accuracy | The adopted method must assess students’ learning accurately. | Accuracy is among the most important factors in selecting any assessment method. | [66,74,75,76,77,78,79,80] |
| Availability of Infrastructure | Availability of facilities such as internet connectivity, personal computers, proctoring systems, and other necessary devices. | Online exams cannot be conducted without sufficient technical infrastructure. | [2,12,16,56,57,58,81] |
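To show how the six criteria above can feed a ranking for one stakeholder group, the sketch below uses a plain weighted sum: each criterion weight multiplies an alternative's performance score, and the products are added. The weighted sum is a simplification chosen for illustration and may not be the weighting method used in the study. The weights, the 1 to 9 performance scores (oriented so that higher is always better, e.g., a cheaper method scores higher on Cost), and the alternative labels `alt_1` and `alt_2` are all hypothetical.

```python
from typing import Dict, List

# The six evaluation criteria from the table above.
CRITERIA: List[str] = [
    "Mental Health",
    "Cost",
    "Convenience",
    "Integrity and Fairness",
    "Accuracy",
    "Availability of Infrastructure",
]


def weighted_score(weights: Dict[str, float], performance: Dict[str, float]) -> float:
    """Weighted-sum score of one alternative for one stakeholder group.

    Weights are normalised to sum to 1; performance scores are assumed to be
    oriented so that a higher value is better on every criterion.
    """
    missing = [c for c in CRITERIA if c not in weights or c not in performance]
    if missing:
        raise KeyError(f"missing criteria: {missing}")
    total = sum(weights[c] for c in CRITERIA)
    return sum(weights[c] / total * performance[c] for c in CRITERIA)


# Hypothetical weights for ONE stakeholder group (not the study's data).
group_weights = {
    "Mental Health": 0.15, "Cost": 0.15, "Convenience": 0.10,
    "Integrity and Fairness": 0.25, "Accuracy": 0.20,
    "Availability of Infrastructure": 0.15,
}

# Hypothetical 1-9 performance scores for two placeholder alternatives.
performance = {
    "alt_1": {"Mental Health": 6, "Cost": 7, "Convenience": 8,
              "Integrity and Fairness": 5, "Accuracy": 6,
              "Availability of Infrastructure": 7},
    "alt_2": {"Mental Health": 7, "Cost": 8, "Convenience": 7,
              "Integrity and Fairness": 4, "Accuracy": 5,
              "Availability of Infrastructure": 8},
}

scores = {alt: weighted_score(group_weights, perf) for alt, perf in performance.items()}
ranking = sorted(scores, key=scores.get, reverse=True)
print(scores, ranking)  # alt_1 ≈ 6.25, alt_2 ≈ 6.15; alt_1 ranks first
```

Repeating this per stakeholder group, each with its own weights, yields the group-level scores that a multi-actor analysis then compares or aggregates.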

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Khattak, A.S.; Ali, M.K.; Al Awadh, M. A Multidimensional Evaluation of Technology-Enabled Assessment Methods during Online Education in Developing Countries. Sustainability 2022, 14, 10387. https://doi.org/10.3390/su141610387

Khattak AS, Ali MK, Al Awadh M. A Multidimensional Evaluation of Technology-Enabled Assessment Methods during Online Education in Developing Countries. Sustainability. 2022; 14(16):10387. https://doi.org/10.3390/su141610387

Chicago/Turabian Style: Khattak, Ambreen Sultana, Muhammad Khurram Ali, and Mohammed Al Awadh. 2022. "A Multidimensional Evaluation of Technology-Enabled Assessment Methods during Online Education in Developing Countries" Sustainability 14, no. 16: 10387. https://doi.org/10.3390/su141610387

APA Style: Khattak, A. S., Ali, M. K., & Al Awadh, M. (2022). A Multidimensional Evaluation of Technology-Enabled Assessment Methods during Online Education in Developing Countries. Sustainability, 14(16), 10387. https://doi.org/10.3390/su141610387