Computer-Based Scaffolding for Sustainable Project-Based Learning: Impact on High- and Low-Achieving Students

Abstract

1. Introduction

1.1. Challenges in Implementing PjBL

1.2. High- and Low-Achieving Students in PjBL

1.3. Scaffolding in Learning with Complex Real-World Projects

1.4. Affective Experiences in PjBL

1.5. Summary of Existing Studies

1.6. The Present Study

- RQ1: What are the academic achievement and affective experiences acquired by students from the PjBL course with computer-based scaffolding?

- RQ2: Do high-, medium-, and low-achieving students differ in their academic achievement and affective experiences acquired from the PjBL course with computer-based scaffolding? If so, what are the differences?

2. Methods

2.1. Participants

2.2. Measures and Instruments

2.3. Learning Task

2.4. Online Learning Environment with Computer-Based Scaffolding

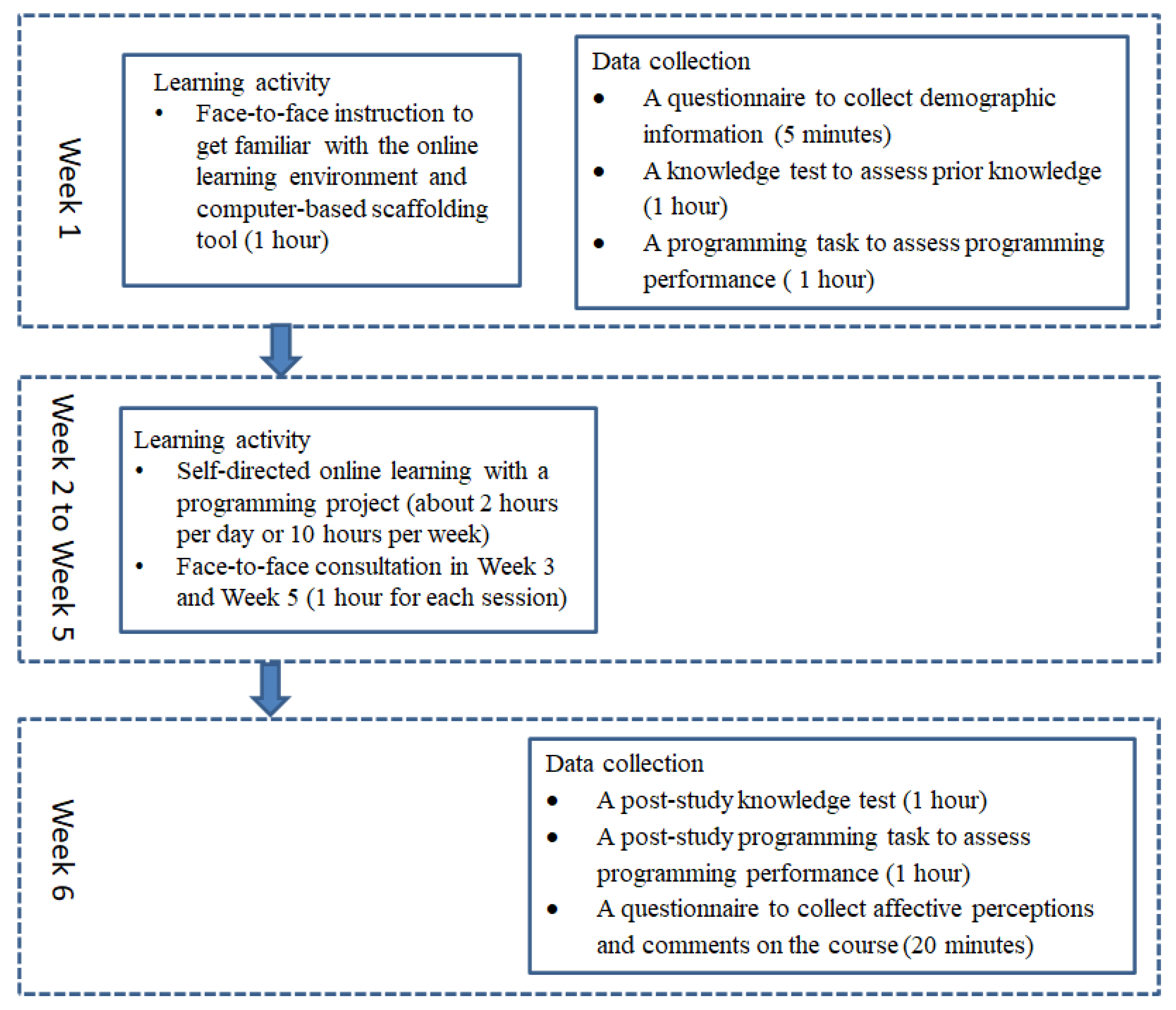

2.5. Procedure

2.6. Data Analysis

3. Results

3.1. Learning Outcomes and Affective Experiences of All Participants

3.2. Differences in Learning Outcomes between High-, Medium-, and Low-Achievers

3.3. Differences in Affective Experiences between High-, Medium-, and Low-Achievers

3.4. Student Comments on the PjBL Course

4. Discussion

4.1. Learning Outcomes, Affective Experiences, and Comments of All Participants

4.2. Differences in Learning Outcomes between High-, Medium-, and Low-Achieving Students

4.3. Differences in Affective Experiences and Comments between High-, Medium-, and Low-Achieving Students

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| 4C/ID | Four-component instructional design |

| ANOVA | Analysis of variance |

| CSS | Cascading style sheets |

| HTML | HyperText markup language |

| PjBL | Project-based learning |

| SD | Standard deviation |

| SQL | Structured query language |

| STEM | Science, technology, engineering, and mathematics |

Appendix A

| Aspect | Description * | Score Range |

|---|---|---|

| Correctness | 10—Correct solution specifications/program code and results consistent with problem requirements. 5—Partial solution specifications/program code and/or some results. 0—No solution specifications/program code or results inconsistent with problem requirements. | 0 to 10 |

| Efficiency | 10—Most algorithms, data structures, control structures, and language constructs are appropriate. 5—Program accomplishes its task but lacks coherence in choice of either data and/or control structures. 0—Program solution lacks coherence in choice of both data and control structures. | 0 to 10 |

| Reliability | 10—Program functions properly under all test cases. Works for and responds to all valid inputs. 5—Program functions under limited test cases. Only works for valid inputs but fails to respond to invalid inputs. 0—Program fails under most test cases. | 0 to 10 |

| Readability | 10—Program code includes clear documentation (comments, meaningful identifiers, indentation to clarify logical structure) and user instructions. 5—Program code lacks clear documentation and/or user instructions. 0—Program code is totally incoherent. | 0 to 10 |

Appendix B

| Aspect | | Description * | Score Range |
|---|---|---|---|
| Problem understanding | Project requirements | 10—Project requirements are clearly and correctly stated. All elements are identified. 5—Project requirements are partially stated. Some elements are not identified. Some statements are incorrect or irrelevant. 0—No relevant project requirements are identified. | 0 to 10 |
| Problem understanding | Project goals | 10—Project goals are clearly and correctly stated. All elements are identified. 5—Project goals are partially stated. Some elements are not identified. Some statements are incorrect or irrelevant. 0—No relevant project goals are identified. | 0 to 10 |
| Modular design | Functional modules | 10—Detailed and clear planning of the solution, with complete and appropriate functional modules. 5—Partially correct planning of the solution, with incomplete or inappropriate functional modules. 0—No appropriate or relevant modules proposed for the solution plan. | 0 to 10 |
| Modular design | Relationships between functional modules | 10—Detailed and appropriate relationships between functional modules. 5—Partially correct or incomplete relationships between functional modules. 0—No or completely inappropriate relationships between functional modules. | 0 to 10 |
| Process design | Module decomposition | 10—Complete and appropriate decomposition of functional modules in the process design. 5—Partially correct or incomplete decomposition of functional modules in the process design. 0—No or completely inappropriate decomposition of functional modules in the process design. | 0 to 10 |
| Process design | Process organization | 10—Complete and appropriate organization of the process. 5—Partially correct or incomplete organization of the process. 0—No or completely inappropriate organization of the process. | 0 to 10 |

| Measures | Cohen’s Kappa Coefficient | p |

|---|---|---|

| Pre-study knowledge tests | 0.820 | <0.001 |

| Post-study knowledge tests | 0.865 | <0.001 |

| Pre-study product quality | 0.835 | <0.001 |

| Post-study product quality | 0.889 | <0.001 |

| Pre-study thinking skills | ||

| in problem understanding | 0.852 | <0.001 |

| in modular design | 0.852 | <0.001 |

| in process design | 0.900 | <0.001 |

| Post-study thinking skills | ||

| in problem understanding | 0.871 | <0.001 |

| in modular design | 0.894 | <0.001 |

| in process design | 0.874 | <0.001 |
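The coefficients in the table above are Cohen's kappa values for agreement between raters. As a minimal sketch of how such a coefficient is computed, the function below takes two raters' categorical scores for the same items. The example ratings are hypothetical (they use the 0/5/10 rubric levels from the appendices purely for illustration) and are not the study's data; the function name is also an assumption.

```python
from collections import Counter


def cohens_kappa(rater_a, rater_b):
    """Cohen's kappa for two raters who scored the same items categorically."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("Both raters must score the same non-empty set of items.")
    n = len(rater_a)
    # Observed agreement: share of items given the same category by both raters.
    observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    # Chance agreement: product of the raters' marginal proportions per category.
    counts_a, counts_b = Counter(rater_a), Counter(rater_b)
    expected = sum(counts_a[c] * counts_b[c] for c in counts_a) / (n * n)
    if expected == 1.0:
        return 1.0  # both raters used a single identical category throughout
    return (observed - expected) / (1.0 - expected)


# Hypothetical rubric levels (0, 5, 10) from two raters for ten submissions.
scores_rater_1 = [10, 5, 5, 0, 10, 5, 0, 10, 5, 5]
scores_rater_2 = [10, 5, 5, 0, 10, 5, 0, 5, 5, 5]
kappa = cohens_kappa(scores_rater_1, scores_rater_2)
print(f"kappa = {kappa:.3f}")
```

In this illustration the raters agree on nine of ten items (observed agreement 0.90) against a chance agreement of 0.40, which gives a kappa of about 0.83.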

| Variable | Min | Max | Mean | SD |

|---|---|---|---|---|

| Pre-study knowledge | 10 | 94 | 40.2 | 19.45 |

| Post-study knowledge | 28 | 88 | 55.87 | 18.14 |

| Pre-study thinking skills | ||||

| in problem understanding | 5 | 20 | 13.2 | 4.05 |

| in modular design | 5 | 20 | 12.72 | 3.93 |

| in process design | 5 | 20 | 12.49 | 3.65 |

| Post-study thinking skills | ||||

| in problem understanding | 10 | 20 | 15.96 | 3.29 |

| in modular design | 10 | 20 | 15.5 | 3.31 |

| in process design | 10 | 20 | 15.7 | 3.25 |

| Pre-study product quality | 9 | 30 | 17.58 | 5.39 |

| Post-study product quality | 10 | 40 | 23.82 | 8.63 |

| Affective experiences | ||||

| Interest/Enjoyment | 2.33 | 5 | 3.97 | 0.66 |

| Perceived competence | 2.25 | 5 | 3.86 | 0.69 |

| Effort/Importance | 2 | 5 | 3.95 | 0.72 |

| Pressure/Tension | 1 | 5 | 2.84 | 1.12 |

| Value/Usefulness | 3 | 5 | 4.22 | 0.46 |

| Variable | Pre-Study Mean | Pre-Study SD | Post-Study Mean | Post-Study SD | t | p | d |
|---|---|---|---|---|---|---|---|
| Subject knowledge | 40.20 | 19.45 | 55.87 | 18.14 | −10.39 | <0.001 | −1.25 |
| Product quality | 17.58 | 5.39 | 23.82 | 8.63 | −6.20 | <0.001 | −0.75 |

| Variable | Pre-Study Mean | Pre-Study SD | Post-Study Mean | Post-Study SD | t | p | d |
|---|---|---|---|---|---|---|---|
| Problem Understanding | 13.20 | 4.05 | 15.96 | 3.29 | −5.91 | <0.001 | −0.71 |
| Modular Design | 12.72 | 3.93 | 15.50 | 3.31 | −6.44 | <0.001 | −0.78 |
| Process Design | 12.49 | 3.65 | 15.70 | 3.25 | −7.75 | <0.001 | −0.93 |
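The effect sizes in the pre-study/post-study comparisons above can be related to the t statistics with a short calculation. The sketch below assumes n = 69 paired observations (the total sample size given in the student-comment tables) and the paired-design convention d = t / sqrt(n). That convention reproduces the reported values to two decimals, but it is an assumption and not confirmed as the authors' formula.

```python
import math

# Assumption: all 69 participants contributed to each paired comparison.
N_PARTICIPANTS = 69

# (variable, reported t, reported d) copied from the paired-comparison tables.
REPORTED = [
    ("Subject knowledge", -10.39, -1.25),
    ("Product quality", -6.20, -0.75),
    ("Problem understanding", -5.91, -0.71),
    ("Modular design", -6.44, -0.78),
    ("Process design", -7.75, -0.93),
]


def paired_cohens_d(t_value: float, n: int) -> float:
    """Effect size for a paired-samples t-test, using d = t / sqrt(n)."""
    return t_value / math.sqrt(n)


for name, t_value, d_reported in REPORTED:
    d = paired_cohens_d(t_value, N_PARTICIPANTS)
    print(f"{name:22s} t = {t_value:7.2f}   d = {d:6.2f}   (reported {d_reported:.2f})")
```

The negative signs reflect the pre-minus-post direction of the difference, so a negative d corresponds to an increase from pre-study to post-study.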

| Affective experiences | Mean | SD |

|---|---|---|

| Interest/Enjoyment | 3.97 | 0.66 |

| Perceived Competence | 3.86 | 0.69 |

| Effort/Importance | 3.95 | 0.72 |

| Value/Usefulness | 4.22 | 0.46 |

| Pressure/Tension | 2.84 | 1.12 |

| Subject Knowledge | Low-Achieving Group Mean | Low-Achieving Group SD | Medium-Achieving Group Mean | Medium-Achieving Group SD | High-Achieving Group Mean | High-Achieving Group SD | F | Scheffe’s Post Hoc |
|---|---|---|---|---|---|---|---|---|
| Pre-test | 18.40 | 4.41 | 37.90 | 8.01 | 65.74 | 10.19 | 171.88 *** | High > Medium; High > Low; Medium > Low |
| Post-test | 43.43 | 15.37 | 50.29 | 12.12 | 77.21 | 8.38 | 41.46 *** | High > Medium; High > Low |
| Gain score | 25.24 | 13.23 | 12.39 | 9.38 | 11.47 | 11.72 | 9.61 *** | Low > Medium; Low > High |
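A one-way ANOVA F statistic like those in the table above can be approximated from group summary statistics alone. The sketch below assumes group sizes of 19, 31, and 19 (taken from the student-comment tables) and uses the rounded pre-test means and SDs from the table, so the result only approximates the reported F of 171.88. The helper names are hypothetical.

```python
from typing import NamedTuple, Sequence


class Group(NamedTuple):
    n: int       # group size
    mean: float  # group mean
    sd: float    # sample standard deviation


def anova_f_from_summary(groups: Sequence[Group]) -> float:
    """One-way ANOVA F statistic computed from per-group n, mean, and SD."""
    total_n = sum(g.n for g in groups)
    grand_mean = sum(g.n * g.mean for g in groups) / total_n
    # Between-group sum of squares: spread of group means around the grand mean.
    ss_between = sum(g.n * (g.mean - grand_mean) ** 2 for g in groups)
    # Within-group sum of squares, recovered from each group's sample variance.
    ss_within = sum((g.n - 1) * g.sd ** 2 for g in groups)
    df_between = len(groups) - 1
    df_within = total_n - len(groups)
    return (ss_between / df_between) / (ss_within / df_within)


# Pre-test subject knowledge: low-, medium-, and high-achieving groups.
pre_test = [
    Group(n=19, mean=18.40, sd=4.41),
    Group(n=31, mean=37.90, sd=8.01),
    Group(n=19, mean=65.74, sd=10.19),
]
f_pre = anova_f_from_summary(pre_test)
print(f"F(2, 66) = {f_pre:.2f}  (reported 171.88)")
```

Because the inputs are rounded to two decimals, small differences from the reported statistics are expected. The Scheffé post hoc comparisons are not reproduced here.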

| Product Quality | Low-Achieving Group Mean | Low-Achieving Group SD | Medium-Achieving Group Mean | Medium-Achieving Group SD | High-Achieving Group Mean | High-Achieving Group SD | F | Scheffe’s Post Hoc |
|---|---|---|---|---|---|---|---|---|
| Pre-study | 14.47 | 3.26 | 17.06 | 4.46 | 21.53 | 6.24 | 10.82 *** | High > Medium; High > Low |
| Post-study | 23.74 | 8.96 | 20.18 | 7.67 | 29.84 | 6.47 | 9.16 *** | High > Medium |
| Gain score | 9.26 | 9.98 | 3.11 | 7.16 | 8.31 | 6.93 | 4.40 * | Low > Medium |

| Thinking Skills | Low-Achieving Group Mean | Low-Achieving Group SD | Medium-Achieving Group Mean | Medium-Achieving Group SD | High-Achieving Group Mean | High-Achieving Group SD | F | Scheffe’s Post Hoc |
|---|---|---|---|---|---|---|---|---|
| Pre-test | | | | | | | | |
| - Problem Understanding | 10.84 | 3.55 | 12.90 | 3.69 | 16.03 | 3.51 | 10.02 *** | High > Medium; High > Low |
| - Modular Design | 10.21 | 3.55 | 12.84 | 3.56 | 15.03 | 3.47 | 8.83 *** | High > Low; Medium > Low |
| - Process Design | 10.05 | 3.03 | 12.19 | 2.95 | 15.40 | 3.39 | 14.41 *** | High > Medium; High > Low |
| Post-test | | | | | | | | |
| - Problem Understanding | 16.32 | 3.28 | 14.63 | 3.29 | 17.79 | 2.35 | 6.46 ** | High > Medium |
| - Modular Design | 15.32 | 3.43 | 14.28 | 3.04 | 17.68 | 2.58 | 7.44 ** | High > Medium |
| - Process Design | 15.97 | 2.91 | 14.26 | 3.17 | 17.79 | 2.51 | 8.66 *** | High > Medium |
| Gain score | | | | | | | | |
| - Problem Understanding | 5.47 | 4.74 | 1.73 | 2.70 | 1.76 | 3.45 | 7.56 ** | Low > Medium; Low > High |
| - Modular Design | 5.11 | 3.48 | 1.44 | 3.08 | 2.66 | 3.47 | 7.26 ** | Low > Medium |
| - Process Design | 5.92 | 3.24 | 2.06 | 2.77 | 2.39 | 3.31 | 10.33 *** | Low > Medium; Low > High |

| Affective Experiences | Low-Achieving Group Mean | Low-Achieving Group SD | Medium-Achieving Group Mean | Medium-Achieving Group SD | High-Achieving Group Mean | High-Achieving Group SD | F |
|---|---|---|---|---|---|---|---|
| Interest/Enjoyment | 4.05 | 0.59 | 3.92 | 0.62 | 3.95 | 0.81 | 0.22 |
| Perceived Competence | 4.09 | 0.57 | 3.72 | 0.74 | 3.86 | 0.69 | 1.78 |
| Effort/Importance | 4.28 | 0.69 | 3.83 | 0.69 | 3.81 | 0.72 | 3.01 |
| Value/Usefulness | 4.37 | 0.45 | 4.19 | 0.49 | 4.13 | 0.40 | 1.39 |
| Pressure/Tension | 2.68 | 1.14 | 3.11 | 1.25 | 2.54 | 0.80 | 1.76 |

| Theme | Illustrative Example | Low-Achieving Group (n = 19), frequency K (%) | Medium-Achieving Group (n = 31), frequency K (%) | High-Achieving Group (n = 19), frequency K (%) | Total (n = 69), frequency K (%) |
|---|---|---|---|---|---|
| Self-regulated learning | The system allowed me to manage my learning process in an autonomous way. | 14 (74%) | 18 (58%) | 12 (63%) | 44 (64%) |
| Problem-solving ability | My programming problem-solving ability has been improved significantly. | 10 (53%) | 17 (55%) | 10 (53%) | 37 (54%) |
| Scaffolding for learning | The scaffolding provided in the system is very helpful. | 10 (53%) | 17 (55%) | 8 (42%) | 35 (51%) |
| Knowledge acquisition | The course helped me to gain a deeper and systematic understanding of programming knowledge. | 12 (63%) | 10 (23%) | 7 (37%) | 29 (42%) |
| Knowledge-practice integration | The course let me master programming knowledge and skills through a practical project. | 8 (42%) | 10 (33%) | 5 (26%) | 23 (33%) |
| Motivation for learning | My interest in learning computer programming has been greatly improved. | 7 (37%) | 9 (29%) | 6 (32%) | 22 (32%) |
| Learning resource | There are abundant multimedia learning resources in the system. | 7 (37%) | 7 (23%) | 4 (21%) | 18 (26%) |
| Reflective learning | The learning record in the system helped me to find out my problems. | 2 (11%) | 8 (26%) | 7 (37%) | 17 (25%) |

| Theme | Illustrative Example | Low-Achieving Group (n = 19), frequency K (%) | Medium-Achieving Group (n = 31), frequency K (%) | High-Achieving Group (n = 19), frequency K (%) | Total (n = 69), frequency K (%) |
|---|---|---|---|---|---|
| Technical problems | There are some bugs in the system. | 8 (42%) | 14 (45%) | 7 (37%) | 29 (42%) |
| Teacher-student interaction | The interaction with the teacher is insufficient during the learning program. | 7 (37%) | 7 (23%) | 3 (16%) | 17 (25%) |
| Learning difficulty | The project is difficult to me in some extent. | 3 (16%) | 8 (26%) | 4 (21%) | 15 (22%) |
| Learning time | I need more time to work on the programming project. | 4 (21%) | 6 (19%) | 2 (11%) | 12 (17%) |
| Supervision | Sometimes it is hard to focus on learning due to inadequate supervision by the teacher. | 3 (16%) | 4 (13%) | 2 (11%) | 9 (13%) |
| Boring | The learning environment is a bit boring. | 1 (5%) | 3 (10%) | 1 (5%) | 5 (7%) |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Peng, J.; Yuan, B.; Sun, M.; Jiang, M.; Wang, M. Computer-Based Scaffolding for Sustainable Project-Based Learning: Impact on High- and Low-Achieving Students. Sustainability 2022, 14, 12907. https://doi.org/10.3390/su141912907
