Environmentally Friendly Concrete Compressive Strength Prediction Using Hybrid Machine Learning

Abstract

1. Introduction

2. Dataset

3. Machine Learning

3.1. CatBoost Regressor

3.2. Extra Trees Regressor

3.3. Gradient Boosting Regressor

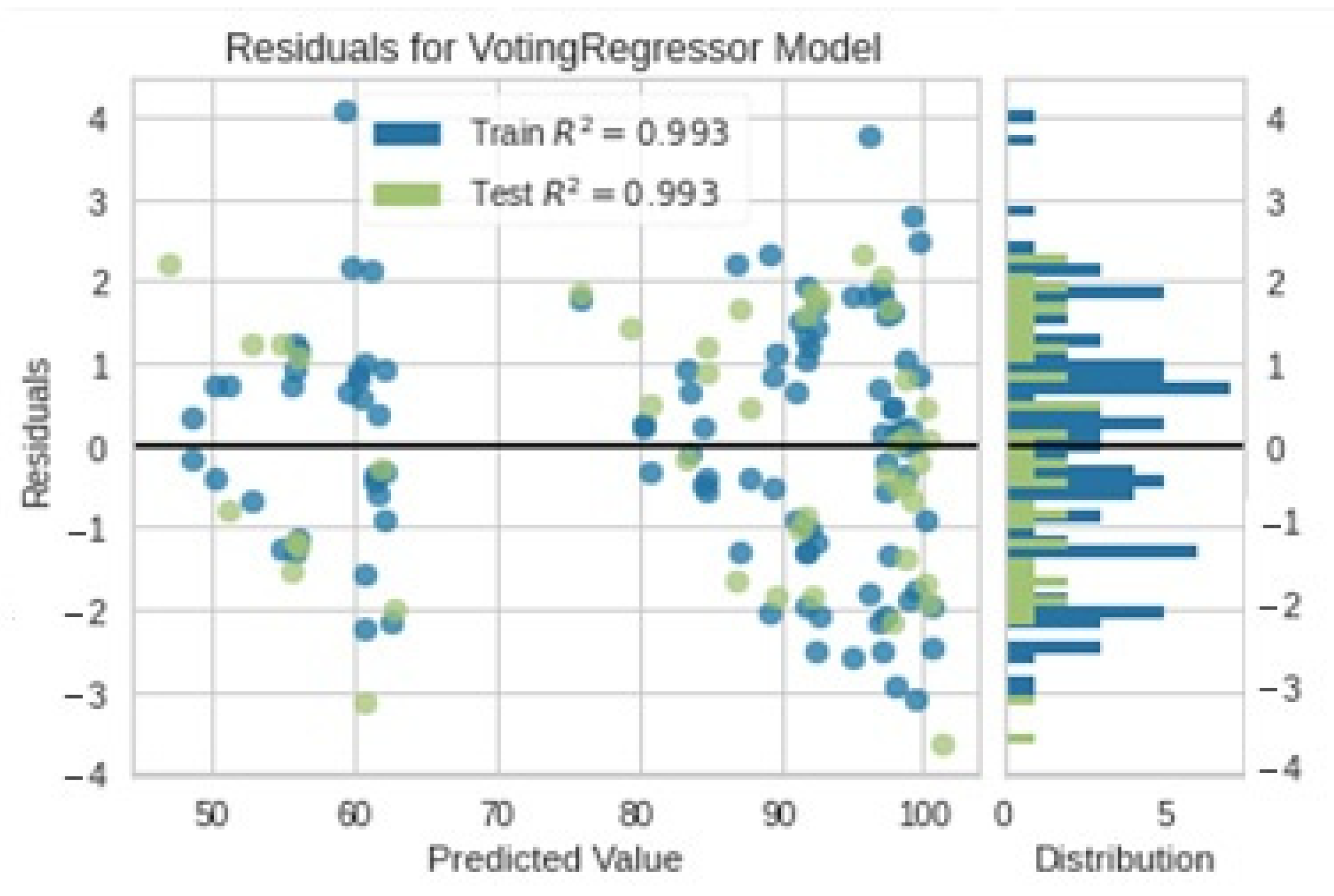

3.4. Hybrid Model

3.5. Cross-Validation Using K-Fold
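The ten-fold result tables in this article (folds 0 to 9) imply k = 10. In k-fold cross-validation, the data are split into k folds. Each fold is held out once for testing while the model is trained on the remaining k - 1 folds. The sketch below illustrates this partitioning in plain Python. It is not the authors' code: the function name `k_fold_indices`, the shuffling step, and the fixed `seed` are assumptions made for this example.

```python
import random
from typing import Iterator, List, Tuple

def k_fold_indices(n_samples: int, k: int = 10, shuffle: bool = True,
                   seed: int = 0) -> Iterator[Tuple[List[int], List[int]]]:
    """Yield (train_indices, test_indices) for each of the k folds.

    Every sample index appears in exactly one test fold; a model would be
    trained on the train indices and scored on the test indices of each fold.
    """
    if not 2 <= k <= n_samples:
        raise ValueError("k must satisfy 2 <= k <= n_samples")
    indices = list(range(n_samples))
    if shuffle:
        # Fixed seed so the split is reproducible (an assumption of this sketch).
        random.Random(seed).shuffle(indices)
    # The first n_samples % k folds receive one extra sample.
    base, extra = divmod(n_samples, k)
    start = 0
    for fold in range(k):
        size = base + (1 if fold < extra else 0)
        test = indices[start:start + size]
        held_out = set(test)
        train = [i for i in indices if i not in held_out]
        yield train, test
        start += size

if __name__ == "__main__":
    sizes = [len(test) for _, test in k_fold_indices(147, k=10)]
    print(sizes)  # [15, 15, 15, 15, 15, 15, 15, 14, 14, 14]
```

Appendix A lists 147 mixes. With 147 samples and k = 10, seven folds hold 15 samples and three hold 14, which is the same fold-size rule used by scikit-learn's `KFold`.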

3.6. Feature Scaling
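This section does not specify which scaler was applied. As an assumption for illustration only, the sketch below uses z-score standardization, (x - mean) / std. The mean and standard deviation are fitted on the training rows only, so no information from a test fold leaks into preprocessing. The sample rows are the four input features (FA, SF, MK, Bacillus bacteria) of a few mixes from Appendix A. The helper names `fit_standardizer` and `standardize` are hypothetical.

```python
from statistics import fmean, pstdev
from typing import List, Sequence, Tuple

Row = Sequence[float]

def fit_standardizer(train_rows: Sequence[Row]) -> Tuple[List[float], List[float]]:
    """Return per-feature means and population standard deviations of the training rows."""
    columns = list(zip(*train_rows))
    means = [fmean(col) for col in columns]
    # A constant column would give a zero std; use 1.0 so it is only centred, never divided by zero.
    stds = [pstdev(col) or 1.0 for col in columns]
    return means, stds

def standardize(rows: Sequence[Row], means: Sequence[float], stds: Sequence[float]) -> List[List[float]]:
    """Apply (x - mean) / std column-wise, using statistics fitted on the training rows."""
    return [[(x - m) / s for x, m, s in zip(row, means, stds)] for row in rows]

# FA (%), SF (%), MK (%), Bacillus bacteria (mL/L) for four mixes from Appendix A.
train = [[30, 0, 0, 0], [15, 10, 5, 12.5], [17, 5, 8, 25], [12, 10, 8, 37.5]]
# A held-out mix is scaled with the training statistics, never refitted on itself.
test = [[20, 5, 0, 79.5]]

means, stds = fit_standardizer(train)
train_scaled = standardize(train, means, stds)
test_scaled = standardize(test, means, stds)
print([round(m, 2) for m in means])  # [18.5, 6.25, 5.25, 18.75]
```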

4. Experiment and Results

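- The mean absolute error (MAE) is the average, over the data set, of the absolute differences between the original and predicted values.

- The mean squared error (MSE) is calculated by squaring each difference between the original and predicted values and then averaging these squares over the data set.

- The root mean squared error (RMSE) is the square root of the MSE. It expresses the error in the same units as the target (MPa).

- The coefficient of determination (R2) [67] represents how closely the predicted values fit the original values. It normally lies between 0 and 1, although it can be negative when a model fits worse than simply predicting the mean. Higher values indicate a better model.

- The mean absolute percentage error (MAPE) is the average of the absolute differences between the original and predicted values, each divided by the corresponding original value.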
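These definitions translate directly into code. The following minimal plain-Python sketch is not taken from the authors' code. It computes the five metrics above plus the RMSLE (root mean squared logarithmic error), which also appears in the results tables. Two assumptions are worth flagging. First, MAPE is returned as a fraction rather than a percentage, which appears consistent with the scale of the tabulated values (for example 0.0254). Second, RMSLE is taken as the RMSE of log(1 + y). The original values in the example are f’c entries from Appendix A; the predicted strengths are hypothetical.

```python
import math
from typing import Dict, Sequence

def regression_metrics(y_true: Sequence[float], y_pred: Sequence[float]) -> Dict[str, float]:
    """MAE, MSE, RMSE, R2, RMSLE and MAPE for paired original/predicted values."""
    if len(y_true) != len(y_pred) or len(y_true) == 0:
        raise ValueError("y_true and y_pred must be non-empty and of equal length")
    n = len(y_true)
    errors = [t - p for t, p in zip(y_true, y_pred)]

    mae = sum(abs(e) for e in errors) / n
    # Square each error first, then average (not the square of the average error).
    mse = sum(e * e for e in errors) / n
    rmse = math.sqrt(mse)

    mean_true = sum(y_true) / n
    ss_res = sum(e * e for e in errors)
    ss_tot = sum((t - mean_true) ** 2 for t in y_true)
    if ss_tot == 0:
        raise ValueError("R2 is undefined when all original values are equal")
    r2 = 1.0 - ss_res / ss_tot

    # log1p(x) = log(1 + x); requires values greater than -1 (strengths are positive).
    rmsle = math.sqrt(sum((math.log1p(p) - math.log1p(t)) ** 2
                          for t, p in zip(y_true, y_pred)) / n)
    # Returned as a fraction, not a percentage; undefined if an original value is zero.
    mape = sum(abs(e) / abs(t) for e, t in zip(errors, y_true)) / n

    return {"MAE": mae, "MSE": mse, "RMSE": rmse, "R2": r2, "RMSLE": rmsle, "MAPE": mape}

if __name__ == "__main__":
    original = [44.87, 74.02, 59.06, 97.97]   # f'c (MPa) from Appendix A
    predicted = [46.0, 72.5, 60.1, 96.0]      # hypothetical model output
    for name, value in regression_metrics(original, predicted).items():
        print(f"{name}: {value:.4f}")
```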

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Appendix A

| Reference | FA (%) | SF (%) | MK (%) | Bacillus Bacteria (mL/L) | f’c (MPa) |
|---|---|---|---|---|---|
|  | 30 | 0 | 0 | 0 | 44.87 |
|  | 30 | 0 | 0 | 12.5 | 48.39 |
|  | 30 | 0 | 0 | 25 | 49.44 |
|  | 30 | 0 | 0 | 37.5 | 50.46 |
|  | 30 | 0 | 0 | 50 | 51.48 |
|  | 30 | 0 | 0 | 62.5 | 53.71 |
|  | 30 | 0 | 0 | 75 | 54.82 |
|  | 30 | 0 | 0 | 87.5 | 54.96 |
|  | 30 | 0 | 0 | 100 | 55.1 |
|  | 30 | 0 | 0 | 112.5 | 54.91 |
|  | 30 | 0 | 0 | 125 | 54.73 |
|  | 30 | 0 | 0 | 12.5 | 48.91 |
|  | 30 | 0 | 0 | 23 | 50.65 |
|  | 30 | 0 | 0 | 37.5 | 51.99 |
|  | 30 | 0 | 0 | 50 | 53.42 |
|  | 30 | 0 | 0 | 62.5 | 56.19 |
|  | 30 | 0 | 0 | 75 | 57.06 |
|  | 30 | 0 | 0 | 87.5 | 57.2 |
|  | 30 | 0 | 0 | 100 | 57.33 |
|  | 30 | 0 | 0 | 112.5 | 57.27 |
|  | 30 | 0 | 0 | 125 | 57.13 |
|  | 15 | 10 | 5 | 0 | 74.02 |
|  | 15 | 10 | 5 | 12.5 | 80.07 |
|  | 15 | 10 | 5 | 25 | 82.46 |
|  | 15 | 10 | 5 | 37.5 | 83.53 |
|  | 15 | 10 | 5 | 50 | 84.6 |
|  | 15 | 10 | 5 | 62.5 | 88.43 |
|  | 15 | 10 | 5 | 75 | 89.7 |
|  | 15 | 10 | 5 | 87.5 | 90.26 |
|  | 15 | 10 | 5 | 100 | 90.82 |
|  | 15 | 10 | 5 | 112.5 | 90.61 |
|  | 15 | 10 | 5 | 125 | 90.09 |
|  | 15 | 10 | 5 | 12.5 | 80.11 |
|  | 15 | 10 | 5 | 25 | 83.57 |
|  | 15 | 10 | 5 | 37.5 | 85.12 |
|  | 15 | 10 | 5 | 50 | 88.45 |
|  | 15 | 10 | 5 | 62.5 | 91.39 |
|  | 15 | 10 | 5 | 75 | 92.23 |
|  | 15 | 10 | 5 | 87.5 | 93.97 |
|  | 15 | 10 | 5 | 100 | 94.67 |
|  | 15 | 10 | 5 | 112.5 | 93.48 |
|  | 15 | 10 | 5 | 125 | 92.97 |
|  | 17 | 5 | 8 | 0 | 74.07 |
|  | 17 | 5 | 8 | 12.5 | 81.19 |
|  | 17 | 5 | 8 | 25 | 83.01 |
|  | 17 | 5 | 8 | 37.5 | 83.97 |
|  | 17 | 5 | 8 | 50 | 85.31 |
|  | 17 | 5 | 8 | 62.5 | 86.83 |
|  | 17 | 5 | 8 | 75 | 90.51 |
|  | 17 | 5 | 8 | 87.5 | 90.73 |
|  | 17 | 5 | 8 | 100 | 90.95 |
|  | 17 | 5 | 8 | 112.5 | 90.86 |
|  | 17 | 5 | 8 | 125 | 90.44 |
|  | 17 | 5 | 8 | 12.5 | 80.37 |
|  | 17 | 5 | 8 | 25 | 83.76 |
|  | 17 | 5 | 8 | 37.5 | 85.39 |
|  | 17 | 5 | 8 | 50 | 88.27 |
|  | 17 | 5 | 8 | 62.5 | 91.17 |
|  | 17 | 5 | 8 | 75 | 92.05 |
|  | 17 | 5 | 8 | 87.5 | 93.07 |
|  | 17 | 5 | 8 | 100 | 94.89 |
|  | 17 | 5 | 8 | 112.5 | 93.11 |
|  | 17 | 5 | 8 | 125 | 92.63 |
|  | 12 | 10 | 8 | 0 | 77.97 |
|  | 12 | 10 | 8 | 12.5 | 84.37 |
|  | 12 | 10 | 8 | 25 | 87.4 |
|  | 12 | 10 | 8 | 37.5 | 88.62 |
|  | 12 | 10 | 8 | 50 | 89.83 |
|  | 12 | 10 | 8 | 62.5 | 93.09 |
|  | 12 | 10 | 8 | 75 | 94.45 |
|  | 12 | 10 | 8 | 87.5 | 95.26 |
|  | 12 | 10 | 8 | 100 | 96.06 |
|  | 12 | 10 | 8 | 112.5 | 95.79 |
|  | 12 | 10 | 8 | 125 | 94.97 |
|  | 12 | 10 | 8 | 12.5 | 85.07 |
|  | 12 | 10 | 8 | 25 | 88.24 |
|  | 12 | 10 | 8 | 37.5 | 89.99 |
|  | 12 | 10 | 8 | 50 | 93.74 |
|  | 12 | 10 | 8 | 62.5 | 97.53 |
|  | 12 | 10 | 8 | 75 | 98.09 |
|  | 12 | 10 | 8 | 87.5 | 99.59 |
|  | 12 | 10 | 8 | 100 | 99.87 |
|  | 12 | 10 | 8 | 112.5 | 99.47 |
|  | 12 | 10 | 8 | 125 | 99.03 |
| Authors | 20 | 5 | 0 | 79.5 | 59.06 |
|  | 20 | 5 | 0 | 39.3 | 61.32 |
|  | 20 | 5 | 0 | 45.3 | 62.11 |
|  | 20 | 5 | 0 | 79.7 | 57.59 |
|  | 20 | 5 | 0 | 52.5 | 59.17 |
|  | 20 | 5 | 0 | 37.8 | 61.88 |
|  | 20 | 5 | 0 | 84.1 | 63.8 |
|  | 20 | 5 | 0 | 64.3 | 63.12 |
|  | 20 | 5 | 0 | 93.7 | 59.39 |
|  | 20 | 5 | 0 | 59.7 | 64.71 |
|  | 20 | 5 | 0 | 43.1 | 62.33 |
|  | 20 | 5 | 0 | 132.5 | 59.96 |
|  | 20 | 5 | 0 | 80.9 | 63.01 |
|  | 20 | 5 | 0 | 83.6 | 55.21 |
|  | 20 | 5 | 0 | 37.7 | 61.77 |
|  | 20 | 5 | 0 | 63.3 | 62.45 |
|  | 20 | 5 | 0 | 59.1 | 61.32 |
|  | 20 | 5 | 0 | 56.3 | 64.93 |
|  | 20 | 5 | 0 | 95.5 | 62.22 |
|  | 20 | 5 | 0 | 70.4 | 59.85 |
|  | 20 | 5 | 0 | 91 | 59.39 |
|  | 20 | 10 | 5 | 29 | 97.97 |
|  | 20 | 10 | 5 | 92.3 | 98.99 |
|  | 20 | 10 | 5 | 46.4 | 99.89 |
|  | 20 | 10 | 5 | 99.8 | 92.44 |
|  | 20 | 10 | 5 | 67.3 | 102.61 |
|  | 20 | 10 | 5 | 58.1 | 96.96 |
|  | 20 | 10 | 5 | 34.4 | 97.52 |
|  | 20 | 10 | 5 | 48.8 | 98.76 |
|  | 20 | 10 | 5 | 64.2 | 97.41 |
|  | 20 | 10 | 5 | 61.2 | 97.75 |
|  | 20 | 10 | 5 | 95.9 | 96.16 |
|  | 20 | 10 | 5 | 77.9 | 95.83 |
|  | 20 | 10 | 5 | 86.7 | 98.09 |
|  | 20 | 10 | 5 | 59.9 | 94.92 |
|  | 20 | 10 | 5 | 45.3 | 99.1 |
|  | 20 | 10 | 5 | 71.5 | 99.1 |
|  | 20 | 10 | 5 | 104.5 | 101.02 |
|  | 20 | 10 | 5 | 85.4 | 100.12 |
|  | 20 | 10 | 5 | 69.3 | 101.25 |
|  | 20 | 10 | 5 | 63.9 | 97.41 |
|  | 20 | 10 | 5 | 88 | 97.97 |
|  | 25 | 5 | 8 | 42.1 | 101 |
|  | 25 | 5 | 8 | 66.2 | 97.72 |
|  | 25 | 5 | 8 | 49.1 | 96.48 |
|  | 25 | 5 | 8 | 52.5 | 103.14 |
|  | 25 | 5 | 8 | 41.5 | 98.85 |
|  | 25 | 5 | 8 | 32.9 | 100.21 |
|  | 25 | 5 | 8 | 73.8 | 99.87 |
|  | 25 | 5 | 8 | 49.1 | 102.24 |
|  | 25 | 5 | 8 | 111.2 | 98.74 |
|  | 25 | 5 | 8 | 14.1 | 93.43 |
|  | 25 | 5 | 8 | 30.7 | 102.58 |
|  | 25 | 5 | 8 | 95.2 | 98.4 |
|  | 25 | 5 | 8 | 51.2 | 99.64 |
|  | 25 | 5 | 8 | 58.5 | 97.95 |
|  | 25 | 5 | 8 | 46 | 104.84 |
|  | 25 | 5 | 8 | 71.5 | 99.41 |
|  | 25 | 5 | 8 | 80.5 | 101.79 |
|  | 25 | 5 | 8 | 59.5 | 98.62 |
|  | 25 | 5 | 8 | 16.5 | 100.77 |
|  | 25 | 5 | 8 | 44.9 | 97.15 |
|  | 25 | 5 | 8 | 58.3 | 98.74 |

References

- Kaloop, M.R.; Samui, P.; Iqbal, M.; Hu, J.W. Soft Computing Approaches towards Tensile Strength Estimation of GFRP Rebars Subjected to Alkaline-Concrete Environment. Case Stud. Constr. Mater. 2022, 16, e00955.

- Kaloop, M.R.; Gabr, A.R.; El-Badawy, S.M.; Arisha, A.; Shwally, S.; Hu, J.W. Predicting Resilient Modulus of Recycled Concrete and Clay Masonry Blends for Pavement Applications Using Soft Computing Techniques. Front. Struct. Civ. Eng. 2019, 13, 1379–1392.

- Kaloop, M.R.; Kumar, D.; Samui, P.; Hu, J.W.; Kim, D. Compressive Strength Prediction of High-Performance Concrete Using Gradient Tree Boosting Machine. Constr. Build. Mater. 2020, 264, 120198.

- Kaloop, M.R.; Roy, B.; Chaurasia, K.; Kim, S.-M.; Jang, H.-M.; Hu, J.-W.; Abdelwahed, B.S. Shear Strength Estimation of Reinforced Concrete Deep Beams Using a Novel Hybrid Metaheuristic Optimized SVR Models. Sustainability 2022, 14, 5238.

- Das, S.; Mansouri, I.; Choudhury, S.; Gandomi, A.H.; Hu, J.W. A Prediction Model for the Calculation of Effective Stiffness Ratios of Reinforced Concrete Columns. Materials 2021, 14, 1792.

- Mansouri, I.; Ozbakkaloglu, T.; Kisi, O.; Xie, T. Predicting Behavior of FRP-Confined Concrete Using Neuro Fuzzy, Neural Network, Multivariate Adaptive Regression Splines and M5 Model Tree Techniques. Mater. Struct. 2016, 49, 4319–4334.

- Mansouri, I.; Gholampour, A.; Kisi, O.; Ozbakkaloglu, T. Evaluation of Peak and Residual Conditions of Actively Confined Concrete Using Neuro-Fuzzy and Neural Computing Techniques. Neural Comput. Appl. 2018, 29, 873–888.

- Gholampour, A.; Mansouri, I.; Kisi, O.; Ozbakkaloglu, T. Evaluation of Mechanical Properties of Concretes Containing Coarse Recycled Concrete Aggregates Using Multivariate Adaptive Regression Splines (MARS), M5 Model Tree (M5Tree), and Least Squares Support Vector Regression (LSSVR) Models. Neural Comput. Appl. 2020, 32, 295–308.

- Shariati, M.; Mafipour, M.S.; Mehrabi, P.; Ahmadi, M.; Wakil, K.; Trung, N.T.; Toghroli, A. Prediction of Concrete Strength in Presence of Furnace Slag and Fly Ash Using Hybrid ANN-GA (Artificial Neural Network-Genetic Algorithm). Smart Struct. Syst. 2020, 25, 183–195.

- Shariati, M.; Mafipour, M.S.; Haido, J.H.; Yousif, S.T.; Toghroli, A.; Trung, N.T.; Shariati, A. Identification of the Most Influencing Parameters on the Properties of Corroded Concrete Beams Using an Adaptive Neuro-Fuzzy Inference System (ANFIS). Comput. Concr. 2020, 25, 83–94.

- Pazouki, G.; Golafshani, E.M.; Behnood, A. Predicting the Compressive Strength of Self-Compacting Concrete Containing Class F Fly Ash Using Metaheuristic Radial Basis Function Neural Network. Struct. Concr. 2022, 23, 1191–1213.

- Mohammadi Golafshani, E.; Arashpour, M.; Behnood, A. Predicting the Compressive Strength of Green Concretes Using Harris Hawks Optimization-Based Data-Driven Methods. Constr. Build. Mater. 2022, 318, 125944.

- Shahmansouri, A.A.; Nematzadeh, M.; Behnood, A. Mechanical Properties of GGBFS-Based Geopolymer Concrete Incorporating Natural Zeolite and Silica Fume with an Optimum Design Using Response Surface Method. J. Build. Eng. 2021, 36, 102138.

- John, S.K.; Cascardi, A.; Nadir, Y.; Aiello, M.A.; Girija, K. A New Artificial Neural Network Model for the Prediction of the Effect of Molar Ratios on Compressive Strength of Fly Ash-Slag Geopolymer Mortar. Adv. Civ. Eng. 2021, 2021, 6662347.

- Aprianti, S.E. A Huge Number of Artificial Waste Material Can Be Supplementary Cementitious Material (SCM) for Concrete Production—A Review Part II. J. Clean. Prod. 2017, 142, 4178–4194.

- Akbar, A.; Farooq, F.; Shafique, M.; Aslam, F.; Alyousef, R.; Alabduljabbar, H. Sugarcane Bagasse Ash-Based Engineered Geopolymer Mortar Incorporating Propylene Fibers. J. Build. Eng. 2021, 33, 101492.

- Jain, M.; Dwivedi, A. Fly Ash—Waste Management and Overview: A Review. Recent Res. Sci. Technol. 2014, 2014, 6.

- Rafieizonooz, M.; Mirza, J.; Salim, M.R.; Hussin, M.W.; Khankhaje, E. Investigation of Coal Bottom Ash and Fly Ash in Concrete as Replacement for Sand and Cement. Constr. Build. Mater. 2016, 116, 15–24.

- Abdulkareem, O.A.; Mustafa Al Bakri, A.M.; Kamarudin, H.; Khairul Nizar, I.; Saif, A.A. Effects of Elevated Temperatures on the Thermal Behavior and Mechanical Performance of Fly Ash Geopolymer Paste, Mortar and Lightweight Concrete. Constr. Build. Mater. 2014, 50, 377–387.

- Khan, M.A.; Zafar, A.; Akbar, A.; Javed, M.F.; Mosavi, A. Application of Gene Expression Programming (GEP) for the Prediction of Compressive Strength of Geopolymer Concrete. Materials 2021, 14, 1106.

- Ghazali, N.; Muthusamy, K.; Wan Ahmad, S. Utilization of Fly Ash in Construction. IOP Conf. Ser.: Mater. Sci. Eng. 2019, 601, 012023.

- Farooq, F.; Akbar, A.; Khushnood, R.A.; Muhammad, W.L.B.; Rehman, S.K.U.; Javed, M.F. Experimental Investigation of Hybrid Carbon Nanotubes and Graphite Nanoplatelets on Rheology, Shrinkage, Mechanical, and Microstructure of SCCM. Materials 2020, 13, 230.

- Liew, K.M.; Akbar, A. The Recent Progress of Recycled Steel Fiber Reinforced Concrete. Constr. Build. Mater. 2020, 232, 117232.

- Gagg, C.R. Cement and Concrete as an Engineering Material: An Historic Appraisal and Case Study Analysis. Eng. Fail. Anal. 2014, 40, 114–140.

- Mehta, P.K. Greening of the Concrete Industry for Sustainable Development. Concr. Int. 2002, 24, 23–28.

- Wongsa, A.; Siriwattanakarn, A.; Nuaklong, P.; Sata, V.; Sukontasukkul, P.; Chindaprasirt, P. Use of Recycled Aggregates in Pressed Fly Ash Geopolymer Concrete. Environ. Prog. Sustain. Energy 2020, 39, e13327.

- Javed, M.F.; Amin, M.N.; Shah, M.I.; Khan, K.; Iftikhar, B.; Farooq, F.; Aslam, F.; Alyousef, R.; Alabduljabbar, H. Applications of Gene Expression Programming and Regression Techniques for Estimating Compressive Strength of Bagasse Ash Based Concrete. Crystals 2020, 10, 737.

- Nour, A.I.; Güneyisi, E.M. Prediction Model on Compressive Strength of Recycled Aggregate Concrete Filled Steel Tube Columns. Compos. Part B Eng. 2019, 173, 106938.

- Shahmansouri, A.A.; Akbarzadeh Bengar, H.; Ghanbari, S. Compressive Strength Prediction of Eco-Efficient GGBS-Based Geopolymer Concrete Using GEP Method. J. Build. Eng. 2020, 31, 101326.

- IPCC; Intergovernmental Panel on Climate Change Working Group III. Carbon Dioxide Capture and Storage: Special Report of the Intergovernmental Panel on Climate Change. Available online: https://books.google.com/books?hl=en&lr=&id=HWgRvPUgyvQC&oi=fnd&pg=PA58&ots=WIoyaGdsz6&sig=vZMFpF_AnR9sKSx60fFDyb225dg#v=onepage&q&f=false (accessed on 9 August 2022).

- Ávalos-Rendón, T.L.; Chelala, E.A.P.; Mendoza Escobedo, C.J.; Figueroa, I.A.; Lara, V.H.; Palacios-Romero, L.M. Synthesis of Belite Cements at Low Temperature from Silica Fume and Natural Commercial Zeolite. Mater. Sci. Eng. B 2018, 229, 79–85.

- Pacheco-Torgal, F.; Abdollahnejad, Z.; Camões, A.F.; Jamshidi, M.; Ding, Y. Durability of Alkali-Activated Binders: A Clear Advantage over Portland Cement or an Unproven Issue? Constr. Build. Mater. 2012, 30, 400–405.

- Samimi, K.; Kamali-Bernard, S.; Akbar Maghsoudi, A.; Maghsoudi, M.; Siad, H. Influence of Pumice and Zeolite on Compressive Strength, Transport Properties and Resistance to Chloride Penetration of High Strength Self-Compacting Concretes. Constr. Build. Mater. 2017, 151, 292–311.

- Shahmansouri, A.A.; Yazdani, M.; Ghanbari, S.; Akbarzadeh Bengar, H.; Jafari, A.; Farrokh Ghatte, H. Artificial Neural Network Model to Predict the Compressive Strength of Eco-Friendly Geopolymer Concrete Incorporating Silica Fume and Natural Zeolite. J. Clean. Prod. 2021, 279, 123697.

- Wu, Y.; Li, S. Damage Degree Evaluation of Masonry Using Optimized SVM-Based Acoustic Emission Monitoring and Rate Process Theory. Measurement 2022, 190, 110729.

- Fan, X.; Li, S.; Tian, L. Chaotic Characteristic Identification for Carbon Price and an Multi-Layer Perceptron Network Prediction Model. Expert Syst. Appl. 2015, 42, 3945–3952.

- Wu, Y.; Zhou, Y. Prediction and Feature Analysis of Punching Shear Strength of Two-Way Reinforced Concrete Slabs Using Optimized Machine Learning Algorithm and Shapley Additive Explanations. Mech. Adv. Mater. Struct. 2022, 1–11.

- Wu, Y.; Zhou, Y. Splitting Tensile Strength Prediction of Sustainable High-Performance Concrete Using Machine Learning Techniques. Environ. Sci. Pollut. Res. 2022, 1–12.

- Han, B.; Wu, Y.; Liu, L. Prediction and Uncertainty Quantification of Compressive Strength of High-Strength Concrete Using Optimized Machine Learning Algorithms. Struct. Concr. 2022, 1–14.

- Wu, Y.; Zhou, Y. Hybrid Machine Learning Model and Shapley Additive Explanations for Compressive Strength of Sustainable Concrete. Constr. Build. Mater. 2022, 330, 127298.

- Zhu, B.; Shi, X.; Chevallier, J.; Wang, P.; Wei, Y.-M. An Adaptive Multiscale Ensemble Learning Paradigm for Nonstationary and Nonlinear Energy Price Time Series Forecasting. J. Forecast. 2016, 35, 633–651.

- Patel, J.; Shah, S.; Thakkar, P.; Kotecha, K. Predicting Stock Market Index Using Fusion of Machine Learning Techniques. Expert Syst. Appl. 2015, 42, 2162–2172.

- Dou, Z.; Sun, Y.; Zhang, Y.; Wang, T.; Wu, C.; Fan, S. Regional Manufacturing Industry Demand Forecasting: A Deep Learning Approach. Appl. Sci. 2021, 11, 6199.

- Britto, J.; Muthuraj, M.P. Prediction of Compressive Strength of Bacteria Incorporated Geopolymer Concrete by Using ANN and MARS. Struct. Eng. Mech. 2019, 70, 671.

- Mansouri, I.; Ostovari, M.; Awoyera, P.O.; Hu, J.W. Predictive Modeling of the Compressive Strength of Bacteria-Incorporated Geopolymer Concrete Using a Gene Expression Programming Approach. Comput. Concr. 2021, 27, 319–332.

- Paruthi, S.; Husain, A.; Alam, P.; Husain Khan, A.; Abul Hasan, M.; Magbool, H.M. A Review on Material Mix Proportion and Strength Influence Parameters of Geopolymer Concrete: Application of ANN Model for GPC Strength Prediction. Constr. Build. Mater. 2022, 356, 129253.

- Patankar, S.V.; Ghugal, Y.M.; Jamkar, S.S. Effect of Concentration of Sodium Hydroxide and Degree of Heat Curing on Fly Ash-Based Geopolymer Mortar. Indian J. Mater. Sci. 2014, 2014, 938789.

- Patankar, S.V.; Ghugal, Y.M.; Jamkar, S.S. Mix Design of Fly Ash Based Geopolymer Concrete. In Advances in Structural Engineering: Materials, Volume Three; Springer: Berlin/Heidelberg, Germany, 2015; pp. 1619–1634.

- Khater, H.M. Effect of Silica Fume on the Characterization of the Geopolymer Materials. Int. J. Adv. Struct. Eng. 2013, 5, 12.

- Jayarajan, G.; Arivalagan, S. Study of Geopolymer Based Bacterial Concrete. Int. J. Civ. Eng. 2019, 6, 30–33.

- Dorogush, A.V.; Ershov, V.; Gulin, A. CatBoost: Gradient Boosting with Categorical Features Support. arXiv 2018, arXiv:1810.11363.

- Diao, L.; Niu, D.; Zang, Z.; Chen, C. Short-Term Weather Forecast Based on Wavelet Denoising and Catboost. In Proceedings of the 2019 Chinese Control Conference (CCC), Guangzhou, China, 27–30 July 2019; pp. 3760–3764.

- Jhaveri, S.; Khedkar, I.; Kantharia, Y.; Jaswal, S. Success Prediction Using Random Forest, CatBoost, XGBoost and AdaBoost for Kickstarter Campaigns. In Proceedings of the 2019 3rd International Conference on Computing Methodologies and Communication (ICCMC), Erode, India, 27–29 March 2019; pp. 1170–1173.

- Liu, W.; Deng, K.; Zhang, X.; Cheng, Y.; Zheng, Z.; Jiang, F.; Peng, J. A Semi-Supervised Tri-CatBoost Method for Driving Style Recognition. Symmetry 2020, 12, 336.

- Li, M.F.; Gao, Y. Cen Diabetes Prediction Method Based on CatBoost Algorithm. Comput. Syst. Appl. 2019, 28, 215–218.

- Dhananjay, B.; Sivaraman, J. Analysis and Classification of Heart Rate Using CatBoost Feature Ranking Model. Biomed. Signal Process. Control 2021, 68, 102610.

- Geurts, P.; Ernst, D.; Wehenkel, L. Extremely Randomized Trees. Mach. Learn. 2006, 63, 3–42.

- Hameed, M.M.; Alomar, M.K.; Khaleel, F.; Al-Ansari, N. An Extra Tree Regression Model for Discharge Coefficient Prediction: Novel, Practical Applications in the Hydraulic Sector and Future Research Directions. Math. Probl. Eng. 2021, 2021, 7001710.

- Sharafati, A.; Asadollah, S.B.H.S.; Hosseinzadeh, M. The Potential of New Ensemble Machine Learning Models for Effluent Quality Parameters Prediction and Related Uncertainty. Process Saf. Environ. Prot. 2020, 140, 68–78.

- Breiman, L. Random Forests. Mach. Learn. 2001, 45, 5–32.

- Mishra, G.; Sehgal, D.; Valadi, J.K. Quantitative Structure Activity Relationship Study of the Anti-Hepatitis Peptides Employing Random Forests and Extra-Trees Regressors. Bioinformation 2017, 13, 60–62.

- John, V.; Liu, Z.; Guo, C.; Mita, S.; Kidono, K. Real-Time Lane Estimation Using Deep Features and Extra Trees Regression. In Image and Video Technology; Lecture Notes in Computer Science; Springer: Berlin/Heidelberg, Germany, 2016; Volume 9431, pp. 721–733.

- Friedman, J.H. Greedy Function Approximation: A Gradient Boosting Machine. Ann. Statist. 2001, 29, 1189–1232.

- Dahiya, N.; Saini, B.; Chalak, H.D. Gradient Boosting-Based Regression Modelling for Estimating the Time Period of the Irregular Precast Concrete Structural System with Cross Bracing. J. King Saud Univ.-Eng. Sci. 2021.

- Kapoor, N.R.; Kumar, A.; Kumar, A.; Kumar, A.; Mohammed, M.A.; Kumar, K.; Kadry, S.; Lim, S. Machine Learning-Based CO2 Prediction for Office Room: A Pilot Study. Wirel. Commun. Mob. Comput. 2022, 2022, 9404807.

- Kumar, A.; Arora, H.C.; Kapoor, N.R.; Mohammed, M.A.; Kumar, K.; Majumdar, A.; Thinnukool, O. Compressive Strength Prediction of Lightweight Concrete: Machine Learning Models. Sustainability 2022, 14, 2404.

- Ambe, K.; Suzuki, M.; Ashikaga, T.; Tohkin, M. Development of Quantitative Model of a Local Lymph Node Assay for Evaluating Skin Sensitization Potency Applying Machine Learning CatBoost. Regul. Toxicol. Pharmacol. 2021, 125, 105019.

- Wang, J.; Sun, X.; Cheng, Q.; Cui, Q. An Innovative Random Forest-Based Nonlinear Ensemble Paradigm of Improved Feature Extraction and Deep Learning for Carbon Price Forecasting. Sci. Total Environ. 2021, 762, 143099.

- Namdarpour, F.; Mesbah, M.; Gandomi, A.H.; Assemi, B. Using Genetic Programming on GPS Trajectories for Travel Mode Detection. IET Intell. Transp. Syst. 2022, 16, 99–113.

- Asteris, P.G.; Gavriilaki, E.; Touloumenidou, T.; Koravou, E.E.; Koutra, M.; Papayanni, P.G.; Pouleres, A.; Karali, V.; Lemonis, M.E.; Mamou, A.; et al. Genetic Prediction of ICU Hospitalization and Mortality in COVID-19 Patients Using Artificial Neural Networks. J. Cell. Mol. Med. 2022, 26, 1445–1455.

- Naser, M.Z.; Alavi, A.H. Error Metrics and Performance Fitness Indicators for Artificial Intelligence and Machine Learning in Engineering and Sciences. Archit. Struct. Constr. 2021, 1, 1–19.

- Atkinson, A.; Riani, M. Robust Diagnostic Regression Analysis; Springer: New York, NY, USA, 2000; ISBN 978-1-4612-7027-0.

- Buitinck, L.; Louppe, G.; Blondel, M.; Pedregosa, F.; Mueller, A.; Grisel, O.; Niculae, V.; Prettenhofer, P.; Grobler, A.G.; Layton, R.; et al. API Design for Machine Learning Software: Experiences from the Scikit-Learn Project. Available online: https://scikit-learn.org/stable/modules/generated/sklearn.manifold.TSNE.html (accessed on 9 August 2022).

| Model | MAE | MSE | RMSE | R2 | RMSLE | MAPE |
|---|---|---|---|---|---|---|
| CatBoost Regressor | 2.1116 | 7.2175 | 2.629 | 0.9565 | 0.0312 | 0.0254 |
| Extra Trees Regressor | 2.1126 | 8.1478 | 2.7518 | 0.9558 | 0.0333 | 0.0257 |
| Gradient Boosting Regressor | 2.175 | 7.6915 | 2.7279 | 0.9528 | 0.0327 | 0.0264 |

| Fold | MAE | MSE | RMSE | R2 | RMSLE | MAPE |
|---|---|---|---|---|---|---|
| 0 | 1.8879 | 4.6918 | 2.1661 | 0.9847 | 0.0282 | 0.0243 |
| 1 | 1.7773 | 6.0005 | 2.4496 | 0.9779 | 0.0312 | 0.0228 |
| 2 | 1.575 | 3.9003 | 1.9749 | 0.9868 | 0.0212 | 0.018 |
| 3 | 1.2351 | 2.0828 | 1.4432 | 0.994 | 0.0191 | 0.0171 |
| 4 | 1.3525 | 3.1442 | 1.7732 | 0.9906 | 0.0201 | 0.0166 |
| 5 | 1.9193 | 6.8454 | 2.6164 | 0.9648 | 0.0315 | 0.0241 |
| 6 | 1.5786 | 4.3335 | 2.0817 | 0.9847 | 0.0326 | 0.0236 |
| 7 | 2.802 | 11.0342 | 3.3218 | 0.961 | 0.0392 | 0.0332 |
| 8 | 2.0567 | 5.8858 | 2.4261 | 0.8381 | 0.0253 | 0.0217 |
| 9 | 2.6954 | 9.5568 | 3.0914 | 0.9686 | 0.0369 | 0.0313 |
| Mean | 1.888 | 5.7475 | 2.3344 | 0.9651 | 0.0285 | 0.0233 |
| Std | 0.4935 | 2.6549 | 0.5459 | 0.0436 | 0.0066 | 0.0053 |
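The Mean and Std rows summarize the ten fold scores above them. As an arithmetic check, the sketch below recomputes them for the MAE column of the table above using Python's `statistics` module. The reported Std value is reproduced by the population standard deviation (dividing by k = 10), so that convention is assumed here. This check is not the authors' code.

```python
from statistics import fmean, pstdev, stdev

# MAE values for folds 0-9, copied from the ten-fold table above.
fold_mae = [1.8879, 1.7773, 1.575, 1.2351, 1.3525, 1.9193, 1.5786, 2.802, 2.0567, 2.6954]

mean_mae = fmean(fold_mae)
std_pop = pstdev(fold_mae)    # population std: divides by k
std_sample = stdev(fold_mae)  # sample std: divides by k - 1, shown for comparison

print(f"Mean {mean_mae:.4f}  Std (population) {std_pop:.4f}  Std (sample) {std_sample:.4f}")
# prints: Mean 1.8880  Std (population) 0.4935  Std (sample) 0.5202
```

The same two calls apply to any other column. The sample form gives a noticeably larger value (about 0.52), so the convention matters when reproducing such tables.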

| Fold | MAE | MSE | RMSE | R2 | RMSLE | MAPE |
|---|---|---|---|---|---|---|
| 0 | 1.613 | 3.8801 | 1.9698 | 0.9874 | 0.0253 | 0.0206 |
| 1 | 2.7107 | 11.4503 | 3.3838 | 0.9578 | 0.0438 | 0.0358 |
| 2 | 1.3623 | 3.6744 | 1.9169 | 0.9876 | 0.0205 | 0.0155 |
| 3 | 1.7642 | 6.0952 | 2.4688 | 0.9824 | 0.032 | 0.0239 |
| 4 | 1.8849 | 4.8217 | 2.1958 | 0.9857 | 0.0284 | 0.0244 |
| 5 | 1.8805 | 6.0462 | 2.4589 | 0.9689 | 0.0282 | 0.023 |
| 6 | 1.574 | 4.8332 | 2.1984 | 0.9829 | 0.0344 | 0.0236 |
| 7 | 2.4592 | 7.8659 | 2.8046 | 0.9722 | 0.0307 | 0.0281 |
| 8 | 1.9959 | 5.5419 | 2.3541 | 0.8476 | 0.0247 | 0.0211 |
| 9 | 2.7254 | 9.2016 | 3.0334 | 0.9698 | 0.0349 | 0.0315 |
| Mean | 1.997 | 6.3411 | 2.4785 | 0.9642 | 0.0303 | 0.0248 |
| Std | 0.4542 | 2.3484 | 0.4452 | 0.04 | 0.0062 | 0.0055 |

| Fold | MAE | MSE | RMSE | R2 | RMSLE | MAPE |
|---|---|---|---|---|---|---|
| 0 | 1.6887 | 4.0168 | 2.0042 | 0.9869 | 0.0247 | 0.0211 |
| 1 | 2.0354 | 6.4656 | 2.5428 | 0.9762 | 0.0326 | 0.0265 |
| 2 | 1.7395 | 5.4694 | 2.3387 | 0.9815 | 0.0262 | 0.0209 |
| 3 | 1.425 | 2.9556 | 1.7192 | 0.9915 | 0.0219 | 0.019 |
| 4 | 1.8397 | 4.6379 | 2.1536 | 0.9862 | 0.025 | 0.0224 |
| 5 | 1.9379 | 7.1815 | 2.6798 | 0.963 | 0.0333 | 0.0245 |
| 6 | 1.3919 | 5.0369 | 2.2443 | 0.9822 | 0.0369 | 0.0222 |
| 7 | 2.9093 | 16.3499 | 4.0435 | 0.9422 | 0.0423 | 0.0313 |
| 8 | 1.7562 | 5.5063 | 2.3466 | 0.8486 | 0.0245 | 0.0185 |
| 9 | 3.0088 | 10.4196 | 3.2279 | 0.9658 | 0.0409 | 0.0363 |
| Mean | 1.9733 | 6.804 | 2.5301 | 0.9624 | 0.0308 | 0.0243 |
| Std | 0.5285 | 3.719 | 0.6347 | 0.0404 | 0.007 | 0.0054 |

| Fold | MAE | MSE | RMSE | R2 | RMSLE | MAPE |
|---|---|---|---|---|---|---|
| 0 | 1.6699 | 9.6212 | 2.2936 | 0.987 | 0.0234 | 0.0133 |
| 1 | 2.1309 | 13.8944 | 2.6276 | 0.995 | 0.0166 | 0.0175 |
| 2 | 1.9022 | 5.3901 | 2.0845 | 0.997 | 0.0325 | 0.0172 |
| 3 | 1.6091 | 7.2350 | 1.9028 | 0.993 | 0.0295 | 0.0159 |
| 4 | 1.8274 | 8.2654 | 1.6877 | 0.981 | 0.0312 | 0.0265 |
| 5 | 2.0111 | 6.3820 | 1.0339 | 0.985 | 0.0194 | 0.0270 |
| 6 | 1.4661 | 6.0591 | 2.9916 | 0.997 | 0.0263 | 0.0187 |
| 7 | 1.5413 | 7.0150 | 2.7520 | 0.952 | 0.0289 | 0.0226 |
| 8 | 1.8261 | 4.2140 | 2.1107 | 0.994 | 0.0264 | 0.0228 |
| 9 | 1.5823 | 8.6375 | 3.0620 | 0.976 | 0.0252 | 0.0149 |
| Mean | 1.7567 | 7.6714 | 2.2546 | 0.99 | 0.0259 | 0.0197 |
| Std | 0.2176 | 2.7050 | 0.6296 | 0.013818 | 0.0050 | 0.0048 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Mansouri, E.; Manfredi, M.; Hu, J.-W. Environmentally Friendly Concrete Compressive Strength Prediction Using Hybrid Machine Learning. Sustainability 2022, 14, 12990. https://doi.org/10.3390/su142012990
