Examining the Effects of Artificial Intelligence on Elementary Students’ Mathematics Achievement: A Meta-Analysis

Abstract

1. Introduction

2. Literature Review

2.1. AI in Education

2.2. Review of Previous Meta-Analyses

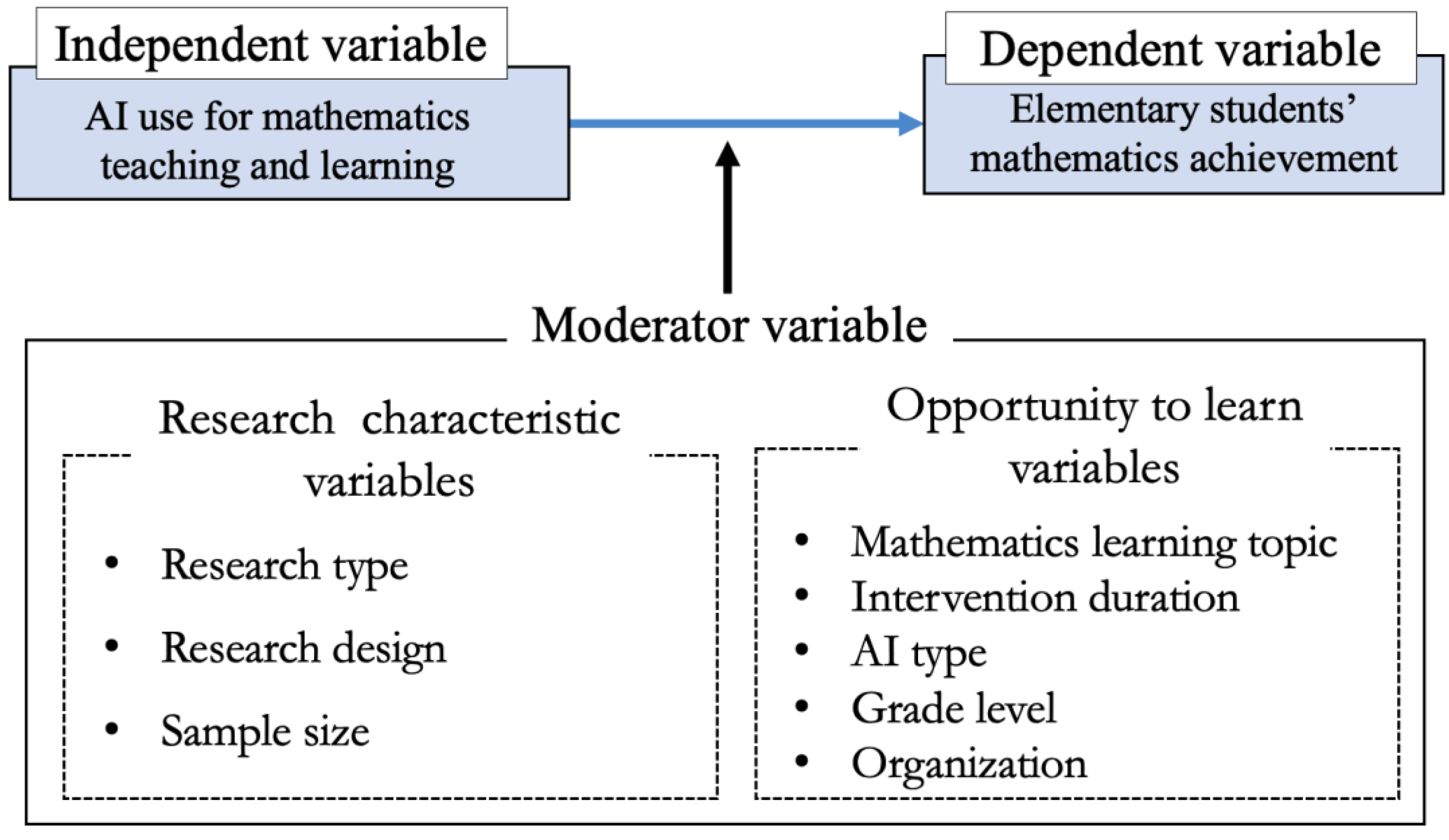

2.3. Analytical Framework

2.4. The Present Study

3. Methods

3.1. Article-Selection Process

3.2. Coding Procedure

3.3. Data Analysis

4. Results

4.1. Overall Effect Size of AI on Mathematics Achievement

4.2. Publication Bias

4.3. Moderator Analysis

4.3.1. Mathematics Learning Topic

4.3.2. Intervention Duration

4.3.3. AI Type

4.3.4. Grade Level

4.3.5. Organization

5. Discussion

6. Limitations

7. Conclusions and Implications

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. Zafari, M.; Bazargani, J.S.; Sadeghi-Niaraki, A.; Choi, S.-M. Artificial intelligence applications in K-12 education: A systematic literature review. IEEE Access 2022, 10, 61905–61921.

2. Pedro, F.; Subosa, M.; Rivas, A.; Valverde, P. Artificial Intelligence in Education: Challenges and Opportunities for Sustainable Development; UNESCO: Paris, France, 2019.

3. Ahmad, S.F.; Rahmat, M.K.; Mubarik, M.S.; Alam, M.M.; Hyder, S.I. Artificial intelligence and its role in education. Sustainability 2021, 13, 12902.

4. Paek, S.; Kim, N. Analysis of worldwide research trends on the impact of artificial intelligence in education. Sustainability 2021, 13, 7941.

5. Zheng, L.; Niu, J.; Zhong, L.; Gyasi, J.F. The effectiveness of artificial intelligence on learning achievement and learning perception: A meta-analysis. Interact. Learn. Environ. 2021, 1–15.

6. Zhang, K.; Aslan, A.B. AI technologies for education: Recent research & future directions. Compu. Edu. 2021, 2, 100025.

7. Zawacki-Richter, O.; Marín, V.I.; Bond, M.; Gouverneur, F. Systematic review of research on artificial intelligence applications in higher education: Where are the educators? Int. J. Educ. Technol. High. Ed. 2019, 16, 1–27.

8. Rittle-Johnson, B.; Koedinger, K. Iterating between lessons on concepts and procedures can improve mathematics knowledge. Brit. J. Educ. Psychol. 2009, 79, 483–500.

9. Moltudal, S.; Høydal, K.; Krumsvik, R.J. Glimpses into real-life introduction of adaptive learning technology: A mixed methods research approach to personalised pupil learning. Design. Learn. 2020, 12, 13–28.

10. González-Calero, J.A.; Cózar, R.; Villena, R.; Merino, J.M. The development of mental rotation abilities through robotics-based instruction: An experience mediated by gender. Brit. J. Educ. Technol. 2019, 50, 3198–3213.

11. OECD. Artificial Intelligence in Society; OECD Publishing: Paris, France, 2019.

12. Hwang, G.-J.; Sung, H.-Y.; Chang, S.-C.; Huang, X.-C. A fuzzy expert system-based adaptive learning approach to improving students’ learning performances by considering affective and cognitive factors. Compu. Edu. Art. Intel. 2020, 1, 100003.

13. Chen, L.; Chen, P.; Lin, Z. Artificial intelligence in education: A review. IEEE Access 2020, 8, 75264–75278.

14. Mou, X. Artificial intelligence: Investment trends and selected industry uses. Int. Finance. Corp. 2019, 8, 1–8.

15. Chen, X.; Xie, H.; Hwang, G.-J. A multi-perspective study on artificial intelligence in education: Grants, conferences, journals, software tools, institutions, and researchers. Compu. Edu. 2020, 1, 100005.

16. González-Calatayud, V.; Prendes-Espinosa, P.; Roig-Vila, R. Artificial intelligence for student assessment: A systematic review. Appl. Sci. 2021, 11, 5467.

17. Zhang, Y.; Zhu, Y. Effects of educational robotics on the creativity and problem-solving skills of K-12 students: A meta-analysis. Edu. Stud. 2022, 1–19.

18. Namoun, A.; Alshanqiti, A. Predicting student performance using data mining and learning analytics techniques: A systematic literature review. Appl. Sci. 2020, 11, 237.

19. Ma, W.; Adesope, O.O.; Nesbit, J.C.; Liu, Q. Intelligent tutoring systems and learning outcomes: A meta-analysis. J. Educ. Psychol. 2014, 106, 901–918.

20. Hwang, G.-J.; Tu, Y.-F. Roles and research trends of artificial intelligence in mathematics education: A bibliometric mapping analysis and systematic review. Mathematics 2021, 9, 584.

21. Gamoran, A.; Hannigan, E.C. Algebra for everyone? Benefits of college-preparatory mathematics for students with diverse abilities in early secondary school. Educ. Eval. Policy. 2000, 22, 241–254.

22. OECD. PISA 2018 Assessment and Analytical Framework; OECD Publishing: Paris, France, 2019.

23. Moses, R.P.; Cobb, C.E. Radical Equations: Math Literacy and Civil Rights; Beacon Press: Boston, MA, USA, 2001.

24. UN. Transforming Our World: The 2030 Agenda for Sustainable Development; UN: New York, NY, USA, 2015.

25. Steenbergen-Hu, S.; Cooper, H. A meta-analysis of the effectiveness of intelligent tutoring systems on K–12 students’ mathematical learning. J. Edu. Psyc. 2013, 105, 970–987.

26. OECD. PISA 2012 Assessment and Analytical Framework; OECD Publishing: Paris, France, 2013.

27. Carroll, J.B. A model of school learning. Teach. Coll. Rec. 1963, 64, 1–9.

28. Steenbergen-Hu, S.; Cooper, H. A meta-analysis of the effectiveness of intelligent tutoring systems on college students’ academic learning. J. Edu. Psyc. 2014, 106, 331–347.

29. Little, C.W.; Lonigan, C.J.; Phillips, B.M. Differential patterns of growth in reading and math skills during elementary school. J. Educ. Psychol. 2021, 113, 462–476.

30. Hascoët, M.; Giaconi, V.; Jamain, L. Family socioeconomic status and parental expectations affect mathematics achievement in a national sample of Chilean students. Int. J. Behav. Dev. 2021, 45, 122–132.

31. McCarthy, J.; Minsky, M.L.; Rochester, N.; Shannon, C.E. A proposal for the Dartmouth summer research project on artificial intelligence, August 31, 1955. AI Mag. 2006, 27, 12–14.

32. Akgun, S.; Greenhow, C. Artificial intelligence in education: Addressing ethical challenges in K-12 settings. AI Ethics 2021, 2, 431–440.

33. Baker, T.; Smith, L.; Anissa, N. Educ-AI-Tion Rebooted? Exploring the Future of Artificial Intelligence in Schools and Colleges; Nesta: London, UK, 2019.

34. Limna, P.; Jakwatanatham, S.; Siripipattanakul, S.; Kaewpuang, P.; Sriboonruang, P. A review of artificial intelligence (AI) in education during the digital era. Adv. Know. Execu. 2022, 1, 1–9.

35. Lameras, P.; Arnab, S. Power to the teachers: An exploratory review on artificial intelligence in education. Information 2021, 13, 14.

36. Shabbir, J.; Anwer, T. Artificial intelligence and its role in near future. J. Latex. Class. 2018, 14, 1–10.

37. Mohamed, M.Z.; Hidayat, R.; Suhaizi, N.N.; Mahmud, M.K.H.; Baharuddin, S.N. Artificial intelligence in mathematics education: A systematic literature review. Int. Elect. J. Math. Edu. 2022, 17, em0694.

38. Fang, Y.; Ren, Z.; Hu, X.; Graesser, A.C. A meta-analysis of the effectiveness of ALEKS on learning. Edu. Psyc. 2019, 39, 1278–1292.

39. Fanchamps, N.L.J.A.; Slangen, L.; Hennissen, P.; Specht, M. The influence of SRA programming on algorithmic thinking and self-efficacy using Lego robotics in two types of instruction. Int. J. Technol. Des. Educ. 2021, 31, 203–222.

40. Bush, S.B. Software-based intervention with digital manipulatives to support student conceptual understandings of fractions. Brit. J. Educ. Technol. 2021, 52, 2299–2318.

41. Vanbecelaere, S.; Cornillie, F.; Sasanguie, D.; Reynvoet, B.; Depaepe, F. The effectiveness of an adaptive digital educational game for the training of early numerical abilities in terms of cognitive, noncognitive and efficiency outcomes. Brit. J. Educ. Technol. 2021, 52, 112–124.

42. Chu, H.-C.; Chen, J.-M.; Kuo, F.-R.; Yang, S.-M. Development of an adaptive game-based diagnostic and remedial learning system based on the concept-effect model for improving learning achievements in mathematics. Edu. Technol. Soc. 2021, 24, 36–53.

43. Crowley, K. The Impact of Adaptive Learning on Mathematics Achievement; New Jersey City University: Jersey City, NJ, USA, 2018.

44. Francis, K.; Rothschuh, S.; Poscente, D.; Davis, B. Malleability of spatial reasoning with short-term and long-term robotics interventions. Technol. Know. Learn. 2022, 27, 927–956.

45. Hoorn, J.F.; Huang, I.S.; Konijn, E.A.; van Buuren, L. Robot tutoring of multiplication: Over one-third learning gain for most, learning loss for some. Robotics 2021, 10, 16.

46. Hillmayr, D.; Ziernwald, L.; Reinhold, F.; Hofer, S.I.; Reiss, K.M. The potential of digital tools to enhance mathematics and science learning in secondary schools: A context-specific meta-analysis. Compu. Edu. 2020, 153, 103897.

47. Lin, R.; Zhang, Q.; Xi, L.; Chu, J. Exploring the effectiveness and moderators of artificial intelligence in the classroom: A meta-analysis. In Resilience and Future of Smart Learning; Yang, J., Kinshuk, D.L., Tlili, A., Chang, M., Popescu, E., Burgos, D., Altınay, Z., Eds.; Springer: New York, NY, USA, 2022; pp. 61–66.

48. Athanasiou, L.; Mikropoulos, T.A.; Mavridis, D. Robotics interventions for improving educational outcomes: A meta-analysis. In Technology and Innovation in Learning, Teaching and Education; Tsitouridou, M., Diniz, J.A., Mikropoulos, T.A., Eds.; Springer: New York, NY, USA, 2019; pp. 91–102.

49. Liao, Y.-k.C.; Chen, Y.-H. Effects of integrating computer technology into mathematics instruction on elementary schoolers’ academic achievement: A meta-analysis of one-hundred and sixty-four studies from Taiwan. In Proceedings of the E-Learn: World Conference on E-Learning in Corporate, Government, Healthcare and Higher Education, Las Vegas, NV, USA, 15 October 2018.

50. Stevens, F.I. The need to expand the opportunity to learn conceptual framework: Should students, parents, and school resources be included? In Proceedings of the Annual Meeting of the American Educational Research Association, New York, NY, USA, 8–12 April 1996.

51. Brewer, D.; Stasz, C. Enhancing Opportunity to Learn Measures in NCES Data; RAND: Santa Monica, CA, USA, 1996.

52. Bozkurt, A.; Karadeniz, A.; Baneres, D.; Guerrero-Roldán, A.E.; Rodríguez, M.E. Artificial intelligence and reflections from educational landscape: A review of AI studies in half a century. Sustainability 2021, 13, 800.

53. Egert, F.; Fukkink, R.G.; Eckhardt, A.G. Impact of in-service professional development programs for early childhood teachers on quality ratings and child outcomes: A meta-analysis. Rev. Educ. Res. 2018, 88, 401–433.

54. Grbich, C. Qualitative Data Analysis: An Introduction; Sage: London, UK, 2012.

55. Strauss, A.; Corbin, J. Basics of Qualitative Research; Sage: New York, NY, USA, 1990.

56. Pai, K.-C.; Kuo, B.-C.; Liao, C.-H.; Liu, Y.-M. An application of Chinese dialogue-based intelligent tutoring system in remedial instruction for mathematics learning. Edu. Psyc. 2021, 41, 137–152.

57. Borenstein, M.; Hedges, L.V.; Higgins, J.P.; Rothstein, H.R. Introduction to Meta-Analysis; John Wiley & Sons: New York, NY, USA, 2021.

58. Hedges, L.V. Distribution theory for Glass’s estimator of effect size and related estimators. J. Educ. Stat. 1981, 6, 107–128.

59. Christopoulos, A.; Kajasilta, H.; Salakoski, T.; Laakso, M.-J. Limits and virtues of educational technology in elementary school mathematics. J. Edu. Technol. 2020, 49, 59–81.

60. Chu, Y.-S.; Yang, H.-C.; Tseng, S.-S.; Yang, C.-C. Implementation of a model-tracing-based learning diagnosis system to promote elementary students’ learning in mathematics. Edu. Technol. Soc. 2014, 17, 347–357.

61. Hou, X.; Nguyen, H.A.; Richey, J.E.; Harpstead, E.; Hammer, J.; McLaren, B.M. Assessing the effects of open models of learning and enjoyment in a digital learning game. Int. J. Artif. Intel. Edu. 2022, 32, 120–150.

62. Julià, C.; Antolí, J. Spatial ability learning through educational robotics. Int. J. Technol. Des. Educ. 2016, 26, 185–203.

63. Laughlin, S.R. Robotics: Assessing Its Role in Improving Mathematics Skills for Grades 4 to 5. Doctoral Dissertation, Capella University, Minneapolis, MN, USA, 2013.

64. Lindh, J.; Holgersson, T. Does Lego training stimulate pupils’ ability to solve logical problems? Compu. Educ. 2007, 49, 1097–1111.

65. Ortiz, A.M. Fifth Grade Students’ Understanding of Ratio and Proportion in an Engineering Robotics Program; Tufts University: Medford, MA, USA, 2010.

66. Rau, M.A.; Aleven, V.; Rummel, N.; Pardos, Z. How should intelligent tutoring systems sequence multiple graphical representations of fractions? A multi-methods study. Int. J. Artif. Intel. Edu. 2014, 24, 125–161.

67. Rau, M.A.; Aleven, V.; Rummel, N. Making connections among multiple graphical representations of fractions: Sense-making competencies enhance perceptual fluency, but not vice versa. Instr. Sci. 2017, 45, 331–357.

68. Ruan, S.S. Smart Tutoring through Conversational Interfaces; Stanford University: Stanford, CA, USA, 2021.

69. Lampert, M. Teaching Problems and the Problems of Teaching; Yale University Press: New Haven, CT, USA, 2001.

70. Papert, S. What’s the big idea? Toward a pedagogy of idea power. IBM. Syst. J. 2000, 39, 720–729.

71. NCTM. Principles to Actions: Ensuring Mathematical Success for All; NCTM: Reston, VA, USA, 2014.

72. Dyer, E.B.; Sherin, M.G. Instructional reasoning about interpretations of student thinking that supports responsive teaching in secondary mathematics. ZDM 2016, 48, 69–82.

73. Stockero, S.L.; Van Zoest, L.R.; Freeburn, B.; Peterson, B.E.; Leatham, K.R. Teachers’ responses to instances of student mathematical thinking with varied potential to support student learning. Math. Ed. Res. J. 2020, 34, 165–187.

74. Noss, R.; Clayson, J. Reconstructing constructionism. Constr. Found. 2015, 10, 285–288.

75. Kaoropthai, C.; Natakuatoong, O.; Cooharojananone, N. An intelligent diagnostic framework: A scaffolding tool to resolve academic reading problems of Thai first-year university students. Compu. Edu. 2019, 128, 132–144.

| AI-Related Terms | Mathematics-Education-Related Terms | Elementary-Education-Related Terms |
|---|---|---|
| “artificial intelligence” “deep learning” “machine learning” “chatbot” “robot”* “intelligent tutor”* “automated tutor”* “neural network”* “expert system” “intelligent system” “intelligent agent”* “virtual learning” “natural language processing” | “mathematics” “math” “geometry” “arithmetic” “addition” “subtraction” “multiplication” “division” “fraction” “decimal” | “elementary” “primary” “Grade 1” to “Grade 6” “first grade” to “sixth grade” “child”* |
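The table lists the search terms but does not say how they were combined. A common pattern, assumed here rather than taken from the paper, is to join the terms within each column with OR and the three columns with AND, reading the trailing * as a truncation wildcard. The Python sketch below builds such a query string; `or_group` and `build_query` are hypothetical helpers, and bibliographic databases differ in their quoting and wildcard syntax, so the output would need adapting to any specific database.

```python
# Hypothetical sketch: combine the three term groups from the search-terms table
# into one Boolean query. OR-within-group / AND-across-groups and the placement of
# the "*" truncation wildcard are assumptions, not the paper's documented syntax.

AI_TERMS = [
    "artificial intelligence", "deep learning", "machine learning", "chatbot",
    "robot*", "intelligent tutor*", "automated tutor*", "neural network*",
    "expert system", "intelligent system", "intelligent agent*",
    "virtual learning", "natural language processing",
]
MATH_TERMS = [
    "mathematics", "math", "geometry", "arithmetic", "addition",
    "subtraction", "multiplication", "division", "fraction", "decimal",
]
ORDINALS = ["first", "second", "third", "fourth", "fifth", "sixth"]
# Expand the ranges "Grade 1" to "Grade 6" and "first grade" to "sixth grade".
ELEMENTARY_TERMS = (
    ["elementary", "primary"]
    + [f"Grade {n}" for n in range(1, 7)]
    + [f"{word} grade" for word in ORDINALS]
    + ["child*"]
)


def or_group(terms: list[str]) -> str:
    """Quote each term, keep a trailing * outside the quotes, and join with OR."""
    parts = []
    for term in terms:
        if term.endswith("*"):
            parts.append(f'"{term[:-1]}"*')  # truncation wildcard on the stem
        else:
            parts.append(f'"{term}"')
    return "(" + " OR ".join(parts) + ")"


def build_query(*groups: list[str]) -> str:
    """AND the OR-groups so that a record must match every concept."""
    return " AND ".join(or_group(group) for group in groups)


if __name__ == "__main__":
    print(build_query(AI_TERMS, MATH_TERMS, ELEMENTARY_TERMS))
```

Keeping the wildcard outside the quotes mirrors the notation used in the table (for example “robot”*).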

| Dimension | Variable | Sub-Category |
|---|---|---|
| Research characteristics | Research type | Journal paper, Dissertation |
| | Research design | Experimental study, Non-experimental study |
| | Sample size | 1–40, 41–80, 81–120, Over 120 |
| Opportunity to learn | Mathematics learning topic | Determining areas, Arithmetic, Decimal numbers, Finding patterns, Fractions, Multiplication, Ratio and proportion, Spatial reasoning |
| | Intervention duration | 1–5 h, 6–10 h, Over 10 h |
| | AI type | ALS (adaptive learning system), ITS (intelligent tutoring system), Robotics |
| | Grade level | Grade 1 to 6 and mixed grade |
| | Organization | Group work, Individual learning |
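The continuous moderators were coded into the bins listed above. The Python sketch below encodes those bins; `sample_size_bin` and `duration_bin` are hypothetical names, and the handling of values that fall between published bins (for example 5.5 h) is an assumption. Applied to the sample sizes and durations listed in the coded-studies table, these rules reproduce the subgroup sizes reported in the moderator tables: K = 9, 12, 6, and 3 for sample size, and K = 17, 5, and 3 for intervention duration.

```python
# Hypothetical sketch of the coding scheme's bins for sample size and
# intervention duration. Values between published bins (e.g., 5.5 h) are
# assigned by "<=" cut-offs, which is an assumption.
from collections import Counter


def sample_size_bin(n: int) -> str:
    """Map a sample size to its coding-scheme category."""
    if n < 1:
        raise ValueError("sample size must be at least 1")
    if n <= 40:
        return "1–40"
    if n <= 80:
        return "41–80"
    if n <= 120:
        return "81–120"
    return "Over 120"


def duration_bin(hours: float) -> str:
    """Map an intervention duration in hours to its coding-scheme category."""
    if hours <= 5:
        return "1–5 h"
    if hours <= 10:
        return "6–10 h"
    return "Over 10 h"


# Values in the row order of the coded-studies table. None marks a duration
# that is not reported; 31 and 51 are stand-ins for "Over 30" and "Over 50".
SAMPLE_SIZES = [297, 100, 124, 116, 62, 62, 37, 74, 68, 75, 53, 109, 109, 21, 46,
                46, 331, 40, 30, 89, 89, 57, 57, 57, 32, 37, 13, 13, 18, 68]
DURATIONS = [10, 8, 1, 2, 9, 9, 31, 2, 2, None, 3, 2, 2, 8, None,
             None, 51, 4, 15, 3.5, 3.5, 5, 5, 5, None, None, 2, 2, 1, 3]

if __name__ == "__main__":
    print(Counter(sample_size_bin(n) for n in SAMPLE_SIZES))
    print(Counter(duration_bin(h) for h in DURATIONS if h is not None))
```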

| Moderator Variable | Subgroup | K | g | SE | 95% CI LL | 95% CI UL | Between-Groups Effect |
|---|---|---|---|---|---|---|---|
| Research type | Journal | 26 | 0.368* | 0.065 | 0.242 | 0.495 | Q_B = 0.708, p = 0.400 |
| | Dissertation | 4 | 0.194 | 0.197 | −0.193 | 0.581 | |
| Research design | Experimental study | 16 | 0.284* | 0.086 | 0.115 | 0.453 | Q_B = 1.253, p = 0.263 |
| | Non-experimental study | 14 | 0.422* | 0.088 | 0.249 | 0.594 | |
| Sample size | 1–40 | 9 | 0.545* | 0.130 | 0.290 | 0.801 | Q_B = 2.858, p = 0.414 |
| | 41–80 | 12 | 0.290* | 0.099 | 0.096 | 0.484 | |
| | 81–120 | 6 | 0.288* | 0.140 | 0.013 | 0.562 | |
| | More than 120 | 3 | 0.310 | 0.180 | −0.043 | 0.662 | |

Note. * p < 0.05. K = number of effect sizes; LL and UL = lower and upper limits of the 95% confidence interval; Q_B = between-groups Q statistic.
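Each row's confidence limits and significance marker follow from g and SE under a normal approximation. The limits are g ± 1.96·SE, and the marker appears when that interval excludes zero, which is equivalent to a two-sided z-test with p < 0.05. The Python sketch below uses hypothetical helpers `ci95` and `two_sided_p`; it reproduces the Journal and Dissertation rows to within the rounding of the reported g and SE.

```python
# Sketch: how the 95% CI limits and the significance marker relate to g and SE,
# assuming a normal approximation. Small discrepancies against the table come
# from rounding of the reported g and SE.
import math

Z_95 = 1.959963984540054  # two-sided 95% critical value of the standard normal


def ci95(g: float, se: float) -> tuple[float, float]:
    """Lower and upper 95% confidence limits for an effect size."""
    return g - Z_95 * se, g + Z_95 * se


def two_sided_p(g: float, se: float) -> float:
    """Two-sided p-value of the z-test of H0: effect = 0."""
    z = abs(g / se)
    return math.erfc(z / math.sqrt(2))


if __name__ == "__main__":
    for label, g, se in [("Journal", 0.368, 0.065), ("Dissertation", 0.194, 0.197)]:
        lo, hi = ci95(g, se)
        p = two_sided_p(g, se)
        flag = "*" if p < 0.05 else ""
        print(f"{label}: g = {g:.3f}{flag}, 95% CI [{lo:.3f}, {hi:.3f}], p = {p:.3f}")
```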

| Study | g | SE | Type and Design | Sample Size | Learning Topic | Duration (hours) | AI Type | Grade Level | Organization |
|---|---|---|---|---|---|---|---|---|---|
| Bush [40] | 0.315 | 0.128 | J-EX | 297 | Fractions | 10 | ALS | 4, 5 | Individual |
| Christopoulos et al. [59] | 0.060 | 0.200 | J-EX | 100 | Arithmetic | 8 | ALS | 3 | Individual |
| Chu et al. [60] | 0.545 | 0.183 | J-EX | 124 | Fractions | 1 | ITS | 5 | Individual |
| Chu et al. [42] | 0.777 | 0.193 | J-EX | 116 | Fractions | 2 | ALS | 3 | Individual |
| Fanchamps et al. [39]-(1) | 0.194 | 0.187 | J-Non | 62 | Finding patterns | 9 | Robotics | 5, 6 | Group |
| Fanchamps et al. [39]-(2) | 0.09 | 0.174 | J-Non | 62 | Finding patterns | 9 | Robotics | 5, 6 | Group |
| Francis et al. [44] | 0.959 | 0.199 | J-Non | 37 | Spatial reasoning | Over 30 | Robotics | 4 | Group |
| González-Calero et al. [10]-(1) | 0.118 | 0.242 | J-EX | 74 | Spatial reasoning | 2 | Robotics | 3 | Group |
| González-Calero et al. [10]-(2) | 0.636 | 0.249 | J-EX | 68 | Spatial reasoning | 2 | Robotics | 3 | Group |
| Hoorn et al. [45] | 0.83 | 0.134 | J-Non | 75 | Multiplication | – | Robotics | – | Individual |
| Hou et al. [61] | 0.554 | 0.223 | J-Non | 53 | Decimal numbers | 3 | ALS | 5, 6 | Individual |
| Hwang et al. [12]-(1) | −0.013 | 0.192 | J-EX | 109 | Determining areas | 2 | ALS | 5 | Individual |
| Hwang et al. [12]-(2) | 0.418 | 0.194 | J-EX | 109 | Determining areas | 2 | ALS | 5 | Individual |
| Julià and Antolí [62] | 0.613 | 0.451 | J-EX | 21 | Spatial reasoning | 8 | Robotics | 6 | Group |
| Laughlin [63]-(1) | −0.158 | 0.295 | D-EX | 46 | – | – | Robotics | 4 | Group |
| Laughlin [63]-(2) | 0.198 | 0.296 | D-EX | 46 | – | – | Robotics | 5 | Group |
| Lindh and Holgersson [64] | 0.114 | 0.110 | J-EX | 331 | – | Over 50 | Robotics | 5 | Group |
| Moltudal et al. [9] | 0.577 | 0.171 | J-Non | 40 | – | 4 | ALS | 5, 6, 7 | Individual |
| Ortiz [65] | 0.394 | 0.369 | D-EX | 30 | Ratio and proportion | 15 | Robotics | 5 | Group |
| Pai et al. [56]-(1) | 0.303 | 0.213 | J-EX | 89 | Arithmetic | 3.5 | ITS | 5 | Individual |
| Pai et al. [56]-(2) | 0.169 | 0.212 | J-EX | 89 | Arithmetic | 3.5 | ITS | 5 | Individual |
| Rau et al. [66]-(1) | 0.216 | 0.128 | J-Non | 57 | Fractions | 5 | ITS | 4, 5 | Individual |
| Rau et al. [66]-(2) | 0.304 | 0.143 | J-Non | 57 | Fractions | 5 | ITS | 4, 5 | Individual |
| Rau et al. [66]-(3) | 0.136 | 0.138 | J-Non | 57 | Fractions | 5 | ITS | 4, 5 | Individual |
| Rau et al. [67]-(1) | −0.182 | 0.178 | J-Non | 32 | Fractions | – | ITS | 3, 4, 5 | Individual |
| Rau et al. [67]-(2) | 0.169 | 0.166 | J-Non | 37 | Fractions | – | ITS | 3, 4, 5 | Individual |
| Rittle-Johnson and Koedinger [8]-(1) | 1.639 | 0.443 | J-Non | 13 | Decimal numbers | 2 | ITS | 6 | Individual |
| Rittle-Johnson and Koedinger [8]-(2) | 2.002 | 0.513 | J-Non | 13 | Decimal numbers | 2 | ITS | 6 | Individual |
| Ruan [68] | 0.354 | 0.245 | D-Non | 18 | – | 1 | ITS | 3, 4, 5 | Individual |
| Vanbecelaere et al. [41] | 0.185 | 0.243 | J-EX | 68 | Arithmetic | 3 | ALS | 1 | Individual |

Note. Type and Design and Sample Size are research characteristics; Learning Topic through Organization are opportunity-to-learn variables. J = journal paper; D = dissertation; EX = experimental study; Non = non-experimental study; – = not reported. Suffixes -(1), -(2), and -(3) mark separate effect sizes from the same study.
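The g and SE columns above are the inputs to the pooled and subgroup estimates. This section does not state which random-effects estimator was used, so the Python sketch below applies the DerSimonian–Laird estimator only as a common default. `dersimonian_laird` is a hypothetical helper, and effect sizes from the same study (suffixes -(1), -(2), ...) are treated as independent here, which may differ from how the paper handled them. Its output therefore need not match the paper's reported estimates.

```python
# Sketch of DerSimonian-Laird random-effects pooling over the g and SE columns.
# The choice of estimator and the independence of same-study effect sizes are
# assumptions; this is not presented as the paper's exact procedure.
import math


def dersimonian_laird(effects: list[float], ses: list[float]) -> tuple[float, float, float, float]:
    """Return (pooled effect, its SE, tau^2, Cochran's Q) under a random-effects model."""
    if len(effects) != len(ses) or len(effects) < 2:
        raise ValueError("need at least two effect sizes with matching SEs")
    w = [1.0 / se**2 for se in ses]  # fixed-effect (inverse-variance) weights
    sw = sum(w)
    fixed = sum(wi * yi for wi, yi in zip(w, effects)) / sw
    q = sum(wi * (yi - fixed) ** 2 for wi, yi in zip(w, effects))  # Cochran's Q
    df = len(effects) - 1
    c = sw - sum(wi**2 for wi in w) / sw
    tau2 = max(0.0, (q - df) / c)  # between-study variance, truncated at zero
    w_star = [1.0 / (se**2 + tau2) for se in ses]  # random-effects weights
    pooled = sum(wi * yi for wi, yi in zip(w_star, effects)) / sum(w_star)
    se_pooled = math.sqrt(1.0 / sum(w_star))
    return pooled, se_pooled, tau2, q


# g and SE in the row order of the coded-studies table.
G = [0.315, 0.060, 0.545, 0.777, 0.194, 0.09, 0.959, 0.118, 0.636, 0.83,
     0.554, -0.013, 0.418, 0.613, -0.158, 0.198, 0.114, 0.577, 0.394, 0.303,
     0.169, 0.216, 0.304, 0.136, -0.182, 0.169, 1.639, 2.002, 0.354, 0.185]
SE = [0.128, 0.200, 0.183, 0.193, 0.187, 0.174, 0.199, 0.242, 0.249, 0.134,
      0.223, 0.192, 0.194, 0.451, 0.295, 0.296, 0.110, 0.171, 0.369, 0.213,
      0.212, 0.128, 0.143, 0.138, 0.178, 0.166, 0.443, 0.513, 0.245, 0.243]

if __name__ == "__main__":
    pooled, se, tau2, q = dersimonian_laird(G, SE)
    print(f"g = {pooled:.3f} (SE {se:.3f}), tau^2 = {tau2:.3f}, Q = {q:.2f}")
```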

| Moderator Variable | Subgroup | K | g | SE | 95% CI LL | 95% CI UL | Between-Groups Effect |
|---|---|---|---|---|---|---|---|
| Mathematics learning topic | Determining areas | 2 | 0.201 | 0.204 | −0.199 | 0.602 | Q_B = 18.895, p = 0.009 |
| | Arithmetic | 4 | 0.177 | 0.179 | −0.130 | 0.571 | |
| | Decimal numbers | 3 | 1.062* | 0.237 | 0.604 | 1.534 | |
| | Finding patterns | 2 | 0.140 | 0.199 | −0.249 | 0.530 | |
| | Fractions | 8 | 0.276* | 0.094 | 0.092 | 0.461 | |
| | Multiplication | 1 | 0.830* | 0.254 | 0.333 | 1.327 | |
| | Ratio and proportion | 1 | 0.394 | 0.427 | −0.443 | 1.231 | |
| | Spatial reasoning | 4 | 0.600* | 0.170 | 0.265 | 0.933 | |
| Intervention duration | 1–5 h | 17 | 0.488* | 0.100 | 0.291 | 0.684 | Q_B = 2.330, p = 0.408 |
| | 6–10 h | 5 | 0.210 | 0.155 | −0.093 | 0.514 | |
| | Over 10 h | 3 | 0.463* | 0.200 | 0.071 | 0.856 | |
| AI type | ALS | 8 | 0.362* | 0.117 | 0.133 | 0.591 | Q_B = 0.057, p = 0.972 |
| | ITS | 11 | 0.333* | 0.110 | 0.130 | 0.536 | |
| | Robotics | 11 | 0.366* | 0.107 | 0.155 | 0.576 | |
| Grade level | 1st Grade | 1 | 0.185 | 0.303 | −0.408 | 0.779 | Q_B = 16.688, p = 0.005 |
| | 3rd Grade | 4 | 0.403* | 0.142 | 0.124 | 0.681 | |
| | 4th Grade | 2 | 0.539* | 0.212 | 0.123 | 0.956 | |
| | 5th Grade | 8 | 0.251* | 0.097 | 0.060 | 0.441 | |
| | 6th Grade | 3 | 1.378* | 0.289 | 0.812 | 1.945 | |
| | Mixed | 11 | 0.240* | 0.074 | 0.094 | 0.386 | |
| Organization | Group work | 10 | 0.298* | 0.113 | 0.076 | 0.520 | Q_B = 0.325, p = 0.569 |
| | Individual learning | 20 | 0.375* | 0.074 | 0.230 | 0.520 | |

Note. * p < 0.05. K = number of effect sizes; LL and UL = lower and upper limits of the 95% confidence interval; Q_B = between-groups Q statistic.
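The between-groups column can be approximately reproduced from the subgroup rows alone. Assuming inverse-variance weights w = 1/SE² for each subgroup, Q_B = Σ w_j (g_j − ḡ)², where ḡ is the weighted mean of the subgroup estimates, and Q_B is referred to a chi-square distribution with (number of subgroups − 1) degrees of freedom. The Python sketch below uses hypothetical helpers `q_between` and `chi2_sf` (a closed-form chi-square tail for integer df, so no third-party statistics package is needed). On the research-type rows it gives Q_B ≈ 0.70 and p ≈ 0.40, close to the reported 0.708 and 0.400; the small gap is consistent with rounding of the published g and SE.

```python
# Sketch: between-groups Q statistic from subgroup summaries and its chi-square
# p-value. Inverse-variance weights w = 1/SE^2 are an assumption about how the
# reported Q_B values were obtained.
import math


def chi2_sf(x: float, df: int) -> float:
    """Upper-tail probability P(X > x) of a chi-square variable with integer df."""
    if df < 1:
        raise ValueError("df must be a positive integer")
    if x <= 0:
        return 1.0
    half = x / 2.0
    if df % 2 == 0:
        # Even df: exp(-x/2) * sum_{k=0}^{df/2-1} (x/2)^k / k!
        term = total = 1.0
        for k in range(1, df // 2):
            term *= half / k
            total += term
        return math.exp(-half) * total
    # Odd df: erfc(sqrt(x/2)) plus sqrt(2/pi) * exp(-x/2) * sum over
    # j = 1..(df-1)/2 of x^((2j-1)/2) / (1 * 3 * ... * (2j-1)).
    total = math.erfc(math.sqrt(half))
    term = math.sqrt(2.0 * x / math.pi) * math.exp(-half)  # j = 1 term
    for j in range(1, (df - 1) // 2 + 1):
        total += term
        term *= x / (2 * j + 1)  # advance to the next term of the series
    return total


def q_between(estimates: list[float], ses: list[float]) -> tuple[float, int, float]:
    """Return (Q_B, df, p) for subgroup effect sizes and their standard errors."""
    weights = [1.0 / se**2 for se in ses]
    grand = sum(w * g for w, g in zip(weights, estimates)) / sum(weights)
    q = sum(w * (g - grand) ** 2 for w, g in zip(weights, estimates))
    df = len(estimates) - 1
    return q, df, chi2_sf(q, df)


if __name__ == "__main__":
    # Research-type rows: Journal vs Dissertation.
    q, df, p = q_between([0.368, 0.194], [0.065, 0.197])
    print(f"Q_B = {q:.3f}, df = {df}, p = {p:.3f}")
```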

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Hwang, S. Examining the Effects of Artificial Intelligence on Elementary Students’ Mathematics Achievement: A Meta-Analysis. Sustainability 2022, 14, 13185. https://doi.org/10.3390/su142013185
