1. Introduction

With their high flexibility, low cost, high reliability, and ability to take off, land, and hover freely, quad-rotor helicopters (typical small UAVs) are widely used in fields such as rescue [1], plant protection [2], aerial photography [3], and transportation [4]. For example, quad-rotor helicopters applied to farmland can detect and spray crops while hovering at ultralow altitude, which is more flexible and efficient than manual work. On the one hand, a quad-rotor helicopter has four input channels and six degrees of freedom; it is an underactuated system with nonlinear and strongly coupled dynamics. On the other hand, the external environment during flight is uncertain: wind, load changes, obstacles, and other factors affect the control of the UAV, so the UAV must be highly adaptive and robust [1,2,3,4,5,6]. In summary, studying and designing the steady-state control of quad-rotor helicopters has great scientific and practical value [7,8,9,10]. In the maritime industry, UAVs usually collaborate with unmanned marine vehicles (UMVs) [11,12] to execute complex tasks. Stable flight is therefore very important for UAVs, especially in uncertain environments.

At present, the widely used quad-rotor helicopter attitude control methods mainly include PID control [13,14,15], sliding mode control [16,17,18], backstepping control [19,20,21], neural network control [22,23], and active disturbance rejection control (ADRC) [24,25,26,27]. PID control is composed of proportional, integral, and differential parts. It uses the deviation to eliminate the deviation and has a simple structure and easy parameter adjustment, but inaccurate input data and parameters have a great impact on the results. Reference [28] proposes a nonlinear PID control method that effectively enhances the robustness of the quad-rotor helicopter system. Sliding mode control is a typical variable structure control with good disturbance rejection for uncertain complex systems. However, because the structure is not fixed, chattering may occur when the state trajectory reaches the sliding surface. Reference [29] proposes a second-order sliding mode controller applied to the height tracking of a quad-rotor helicopter, and experiments show that it effectively reduces chattering. Backstepping control uses the idea of divide and conquer: it divides a high-order complex system into low-order simple subsystems and is suitable for systems with uncertain parameters. As the order of the system increases, however, the complexity of the division also increases. Reference [30] proposes a multi-UAV cooperative formation method based on backstepping control; simulation experiments show that the UAVs converge to the final trajectory faster. Neural network control has a powerful learning ability and can learn in complex, uncertain, and nonlinear situations, but compared with other algorithms it consumes additional computing resources. Reference [31] proposes an intelligent control algorithm based on an RBF neural network, which enhances the anti-interference ability of the quad-rotor helicopter. Active disturbance rejection control does not depend on the mathematical model of the controlled object. It mainly consists of a tracking differentiator (TD), an extended state observer (ESO), and a nonlinear state error feedback controller (NSEFC). Its disadvantage is that manual parameter tuning is more complicated than for PID control. Reference [32] proposes a dual closed-loop quad-rotor helicopter attitude control algorithm in which the outer loop adopts PID control and the inner loop adopts active disturbance rejection control.

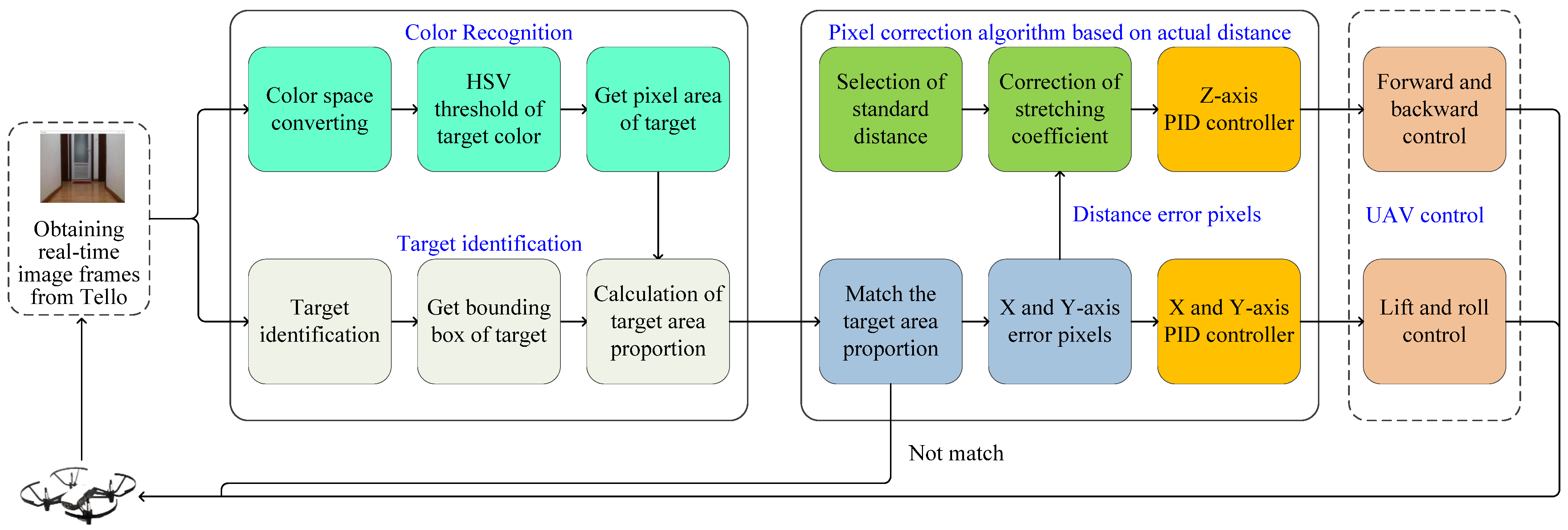

In this paper, a method based on computer vision is proposed for a quad-rotor helicopter to maintain a stable flight state after being disturbed by external forces. First, the system terminal collects the video stream returned by the quad-rotor helicopter and uses color recognition and target detection algorithms to determine the bounding box of the object. Second, the pixel distance between the image frame center and the geometric center of the target is calculated, and a stretching coefficient is introduced to correct the pixel distance deviation at different distances. With the stretching coefficient method, the proportion of the frame occupied by the target at different distances is converted into depth information using a priori information, so the relative distance between the UAV and the target can be obtained from image frames collected by a monocular camera. This information guides the UAV control and improves its accuracy. Finally, a PID algorithm is used to control the UAV so that it remains stable after external force interference.

2. System Design

In order to implement stable flight of a quad-rotor helicopter, this paper combines color recognition and target detection, and proposes a pixel distance correction algorithm based on a stretching ratio coefficient together with PID control, as Figure 1 shows.

Color recognition is implemented in the HSV color space, and target color matching with HSV thresholds is used to obtain the geometric center of the target. The pixel distance correction algorithm based on actual distance includes the calculation of the pixel distance and target area proportion, the selection of the standard distance, and the calculation of the stretching ratio and stretching coefficient. Based on the error information of the last frame, PID control is used to control the forward/backward, up/down, and roll motion of the UAV.

The stretching coefficient can effectively correct the input error data. Two factors affect the result of PID control: the PID control parameters and the input error data. According to the predesigned standard distance, the stretching coefficient corrects the error data and makes the control of the distance between the UAV and the object more accurate.

3. Function Implementation of System

3.1. Image Processing Combining Target Identification and Color Recognition

The UAV collects the video stream through the equipped front camera, and the system terminal processes the returned image. By identifying the fixed target, the UAV can fly stably near the geometric center of the target. During the flight, the images captured by the UAV will appear blurry. Therefore, the color recognition algorithm is used to optimize the target identification to improve the accuracy. The main process of identification is as follows.

Select the target and color to be recognized. Targets are identified using the YOLOv3 algorithm, which accurately and quickly identifies the bounding box of a specific target. The target color is identified after transformation to the HSV color space. Compared with the RGB color model, the HSV color model better reflects the specific color information of objects. The conversion from RGB color space to HSV color space is done by calling the cv2.cvtColor() method with the parameter cv2.COLOR_BGR2HSV.

After a specific color is identified, there may be other impurity areas with the same or similar color as the target in the image frame, but usually these have a small area. In order to avoid these areas being misidentified as the target, the region with the largest area in the identified color is selected for contour extraction, the cv2.findContours() method is used to find the contour, and the max() method is called to select the contour with the largest area.

Combining the results of color recognition and target recognition, calculate the ratio of the white area of the binary image that lies inside the rectangular box from target recognition to the area of that rectangular box. If the ratio is larger than the preset ratio pr, the detection is kept; in this way, target detection combined with color recognition screens out more accurate targets.
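The ratio test described above can be sketched in a few lines. This is a minimal illustration, not the paper's Algorithm 1: the function names, the plain list-of-lists mask, and the default `pr=0.5` are assumptions made for the example. In practice the mask would come from HSV thresholding and the box from the detector.

```python
def mask_ratio_in_box(mask, box):
    """Fraction of white (non-zero) mask pixels inside a bounding box.

    mask: 2D list of 0/255 values (rows of pixels) from color thresholding.
    box:  (x, y, w, h) bounding box from the detector, in pixels.
    """
    x, y, w, h = box
    if w <= 0 or h <= 0:
        return 0.0
    white = sum(
        1
        for row in mask[y:y + h]
        for value in row[x:x + w]
        if value
    )
    return white / (w * h)


def accept_detection(mask, box, pr=0.5):
    """Keep a detection only if its color ratio exceeds the preset ratio pr.

    pr=0.5 is a hypothetical default chosen for illustration.
    """
    return mask_ratio_in_box(mask, box) > pr


# Toy 4x4 mask with a 2x2 white block in the middle.
toy_mask = [
    [0, 0, 0, 0],
    [0, 255, 255, 0],
    [0, 255, 255, 0],
    [0, 0, 0, 0],
]
tight_box_ok = accept_detection(toy_mask, (1, 1, 2, 2))   # box hugs the color
loose_box_ok = accept_detection(toy_mask, (0, 0, 4, 4))   # box is mostly background
```

A tight box around the colored region passes the test, while a box that is mostly background is rejected.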

The specific process of the algorithm is shown in Algorithm 1.

| Algorithm 1: Combining object detection and color recognition. |

Input:

|

3.2. UAV Pixel Distance Correction Algorithm

3.2.1. Pixel Distance Calculation

After obtaining the geometric center of the maximum contour, in order to determine the deviation degree between the current position of the UAV and the geometric center of the target, the deviation distance between the current position of the UAV and the X axis or Y axis directions should be calculated, respectively.

By default, the center of the image frame is taken as the position of the UAV, so the actual deviation distance can be converted into pixel deviation distance between the center coordinates of the image frame and the geometric center of the target.

In order to measure the stability of the UAV near the target point and to facilitate data analysis, in addition to the pixel distance deviations along the X axis and Y axis, the straight-line pixel distance between the two coordinates is also introduced to characterize the overall distance deviation between the UAV and the geometric center of the target. It can be calculated as $d = \sqrt{d_x^2 + d_y^2}$, where $d_x$ and $d_y$ are the pixel distance deviations along the X axis and Y axis.
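As a small worked example of the deviation calculation, the sketch below computes the per-axis deviations and the straight-line pixel distance. The function name is hypothetical, and the sign convention (target center minus frame center) is an assumption chosen to match the sign discussion in Section 4.1.

```python
import math


def pixel_deviation(target_center, frame_size):
    """Return (dx, dy, d): per-axis and straight-line pixel deviations.

    target_center: (x, y) geometric center of the target, in pixels.
    frame_size:    (width, height) of the image frame, in pixels.
    The frame center is taken as the position of the UAV.
    """
    cx, cy = target_center
    width, height = frame_size
    dx = cx - width / 2    # assumed convention: positive when target is right of center
    dy = cy - height / 2   # image Y grows downward: positive when target is below center
    return dx, dy, math.hypot(dx, dy)


# Example with a 480 x 480 frame and a target centered at (270, 280).
dx, dy, d = pixel_deviation((270, 280), (480, 480))
```

Here the deviations are 30 and 40 pixels, so the straight-line distance is 50 pixels.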

After obtaining the pixel distance deviation of the X axis and Y axis, there exists the problem of enlarging or shrinking the pixel distance caused by different actual distances between the UAV and the target plane. To address this problem, the pixel distance correction method is proposed as below.

As shown in Figure 2, the distance from the geometric center of the target to the center of the image is d in both image frames, but the target area in the right frame is larger, which means the UAV is closer to the target plane, so the adjustment speed of the UAV should be smaller. The UAV speed (up and down, left and right) has a linear positive correlation with the pixel distance, and the pixel distance d in the two images is the same; therefore, the speed control command issued to the UAV would be exactly the same, which is inconsistent with the actual situation and needs to be corrected. Thus, when the UAV is closer to the target plane, the pixel distance should be reduced proportionally.

The pixel distance correction algorithm is composed of standard distance selection, obtaining the stretching ratio and stretching coefficient, and calculating the stretching coefficient from the area proportion.

3.2.2. Standard Distance Selection

As shown in Figure 3, suppose the actual distance between the UAV and the geometric center of the target is $D$ (that is, the UAV needs to fly the same distance to the right in each case). When the distance between the UAV and the plane where the target is located is $l_1$, $l_2$, and $l_3$, the pixel distance between the center of the UAV image frame and the geometric center of the target is $x_1$, $x_2$, and $x_3$, respectively. It can be seen from Figure 3 that $l_1 < l_2 < l_3$ and $x_3 < x_2 < x_1$. Therefore, for the same horizontal displacement, the closer the UAV is to the plane where the target is, the greater the change in pixel distance in the image frame.

Taking the X axis as an example, when the UAV is at different distances from the target plane along the same central axis, the pixel distances along the X axis form the sequence listed in Formula (1).

$$X_n = \{x_0, x_1, x_2, \ldots, x_n\} \quad (1)$$

Analysis of Figure 3 shows that the pixel distance decreases as the UAV moves away from the target plane. When the UAV is infinitely far away from the target plane, the pixel distance between the center of the UAV image frame and the target line is consistent with the actual distance. However, since the distance between the UAV and the target plane cannot be infinite in practice, a suitable distance that is as far as possible should be selected as the standard distance length, and $x_0$ in the pixel distance sequence is taken as the standard distance.

3.2.3. Stretching Ratio and Stretching Coefficient

Because the pixel length corresponding to the same physical length differs with distance, the pixel length is small when the distance is far and large when the distance is close. This change is called stretching with respect to the standard length. The ratio between the pixel length at a given distance and the standard distance length is called the stretching ratio, which is described by Formula (2).

$$k_n = \frac{x_n}{x_0} \quad (2)$$

Therefore, by dividing the pixel distance sequence $X_n$ by $x_0$, the corresponding stretching ratio sequence of the X axis at different distances is obtained, as in Formula (3).

$$K_x = \frac{X_n}{x_0} = \left\{1, \frac{x_1}{x_0}, \frac{x_2}{x_0}, \ldots, \frac{x_n}{x_0}\right\} \quad (3)$$

The sequence reflects the stretching ratio compared to standard distance length at different distances, so the distance can be modified to standard distance through dividing pixel length at different distances by stretching ratio at the same distance.

The same is true for the stretching ratio along the Y axis. The pixel distances along the X axis and Y axis can be obtained from the pixel distance $d$ by trigonometric operations, and then divided by the corresponding stretching ratio at the same distance to obtain the corrected pixel distances along the X axis and Y axis, as described by Formula (4).

$$x' = \frac{x}{k_x}, \qquad y' = \frac{y}{k_y} \quad (4)$$

where $x'$ and $y'$ represent the pixel distances along the X axis and Y axis after correction; $x$ and $y$ are the pixel distances measured at the current distance; and $k_x$ and $k_y$ are the stretching ratios of the X axis and Y axis at that distance.
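The stretching ratio idea can be illustrated numerically. In this sketch the function names and the sample pixel distances are hypothetical; it assumes only what the text states, namely that each pixel distance is divided by the pixel distance at the standard distance, and that a measured deviation is divided by the stretching ratio for the same distance.

```python
def stretch_ratios(pixel_distances, standard_index=0):
    """Divide every pixel distance by the one measured at the standard distance."""
    x0 = pixel_distances[standard_index]
    if x0 == 0:
        raise ValueError("standard pixel distance must be non-zero")
    return [x / x0 for x in pixel_distances]


def correct_pixel_distance(dx, dy, kx, ky):
    """Map measured deviations back to their standard-distance equivalents."""
    return dx / kx, dy / ky


# Hypothetical pixel distances for the same physical offset, measured at the
# standard distance first and then at two closer distances.
ratios = stretch_ratios([40, 60, 80])

# A 60-pixel X deviation seen at the second distance corresponds to 40 pixels
# at the standard distance.
corrected = correct_pixel_distance(60, 30, ratios[1], ratios[1])
```

Feeding the corrected values to the controller makes the same physical offset produce the same command regardless of how close the UAV is to the target plane.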

3.2.4. Calculating the Stretching Coefficient by Combining Target Area with Area Proportion

Assume that the targets are rectangles of unknown size, and that the height and width of the image obtained by the UAV camera are $H$ pixels and $W$ pixels, respectively. Similarly, the side lengths of the rectangle in the image are $w$ pixels and $h$ pixels along the X axis and Y axis, respectively.

Then, the total number of pixels in the image is $H \times W$, and the total number of pixels occupied by the target is $w \times h$. Supposing the targets are squares and the UAV image frame is also set to a square, then $H = W$ and $w = h$. According to Section 3.2.2 and Section 3.2.3, the standard distance length $x_0$ is selected in the one-dimensional direction, and the stretching ratio sequence can be obtained as shown in Formula (5).

$S_0$ represents the area of the target corresponding to the standard distance length $x_0$, and $S_n$ represents the pixel area of the target in the UAV image frame at different distances.

If the distance points are sampled densely enough to meet the accuracy requirement, the discrete distance sequence can be regarded as a continuous function, and the stretching ratio function can be calculated by Formula (6), where $f(d)$ represents the functional relationship between the distance $d$ (unit: meters) from the UAV to the target and the area (unit: square pixels) of the target in the image, and $k$ is called the stretching coefficient.

Considering the possible change of the set image frame size and target size, a new variable p is introduced to reduce unnecessary calculation and enhance the universality of Formula (6). Here p represents the ratio of the target area to the whole image frame, and the stretching ratio formula can be transformed into Formula (7).

Therefore, this formula can adapt to different image frames and target points, and determine the stretching coefficient.
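One way to see how an area proportion can yield a stretching coefficient is sketched below. It assumes a square target, so that the pixel area scales with the square of the pixel length and the coefficient becomes the square root of the ratio between the current area proportion and the proportion measured at the standard distance. This is an illustrative reading under that assumption, and the exact form of Formula (7) may differ; the function names and the standard proportion `p_standard` are hypothetical.

```python
import math


def area_proportion(target_area_px, frame_width, frame_height):
    """Ratio of the target's pixel area to the whole image frame."""
    return target_area_px / (frame_width * frame_height)


def stretching_coefficient(p, p_standard):
    """Assumed form: k = sqrt(p / p_standard) for a square target.

    p:          current area proportion of the target.
    p_standard: area proportion measured at the chosen standard distance.
    """
    if p <= 0 or p_standard <= 0:
        raise ValueError("area proportions must be positive")
    return math.sqrt(p / p_standard)


# Hypothetical numbers: a 96 x 96 pixel target in a 480 x 480 frame, with a
# standard-distance proportion of 0.01.
p = area_proportion(96 * 96, 480, 480)
k = stretching_coefficient(p, 0.01)
```

Because only proportions enter the calculation, the same code applies to other frame sizes and target sizes once `p_standard` has been measured.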

4. Velocity Control of the UAV Based on PID

PID is a commonly used control method in process control. It is composed of three parts: proportional, integral, and derivative. PID control has the advantages of effective negative feedback and simple parameter adjustment, and it is mostly used in closed-loop systems with automatic correction. Position-type PID can be described by Formula (8).

$$u(t) = K_P \left[ e(t) + \frac{1}{T_I} \int_0^t e(\tau)\,d\tau + T_D \frac{de(t)}{dt} \right] \quad (8)$$

where $u(t)$ represents the output of the system, i.e., the PID output as a function of time; $K_P$ is the proportional gain; $e(t)$ represents the deviation, i.e., the difference between the set value and the actual value as a function of time; $T_I$ is the integration time; and $T_D$ is the differential time. Expanding Formula (8) gives Formula (9).

$$u(t) = K_P\,e(t) + K_I \int_0^t e(\tau)\,d\tau + K_D \frac{de(t)}{dt}, \qquad K_I = \frac{K_P}{T_I}, \quad K_D = K_P T_D \quad (9)$$

For the UAV, the proportional part determines the response speed. If it is too large, the UAV overshoots the target; if it is too small, the UAV does not reach the target for lack of power.

The integral part can compensate when the proportional part is too small, but it can only correct small deviations. For the position-type PID formula, the integral gradually increases as time accumulates, so upper and lower bounds must be set.

The differential part can prevent the UAV from overshooting the target. If it is too small, the UAV still overshoots; if it is too large, the UAV becomes sensitive to error and jitters at high frequency near the target.
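The three parts and the bounded integral can be combined in a short discrete-time sketch. It is not the paper's implementation: the class name, the `integral_limit` parameter, and the rectangular integration rule are assumptions made for the illustration.

```python
class PositionPID:
    """Discrete position-type PID with a clamped integral term."""

    def __init__(self, kp, ki, kd, integral_limit=100.0):
        self.kp = kp
        self.ki = ki
        self.kd = kd
        self.integral_limit = integral_limit  # assumed bound on the accumulated error
        self.integral = 0.0
        self.prev_error = None

    def update(self, error, dt=1.0):
        """Return the control output for the current error sample."""
        # Accumulate the error, then clamp it to the upper and lower bounds.
        self.integral += error * dt
        self.integral = max(-self.integral_limit,
                            min(self.integral_limit, self.integral))
        # No derivative contribution on the first sample.
        if self.prev_error is None:
            derivative = 0.0
        else:
            derivative = (error - self.prev_error) / dt
        self.prev_error = error
        return self.kp * error + self.ki * self.integral + self.kd * derivative


# Example with the coefficients reported at the end of Section 5.2.3.
pid = PositionPID(kp=0.05, ki=0.035, kd=0.2, integral_limit=100.0)
first = pid.update(100.0)   # integral reaches the bound, no derivative yet
second = pid.update(50.0)   # integral stays clamped, derivative is negative
```

In the second step the shrinking error makes the derivative term negative, which counteracts the proportional push and illustrates how the differential part limits overshoot.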

4.1. Symbolic Control of the UAV Roll, Ascent and Descent Speed

In the DJITelloPy project, velocity is a vector, and its positive and negative values indicate direction. In a Cartesian three-dimensional coordinate system, the signs for each axis and their corresponding velocity directions are shown in Table 1.

Since the coordinate system of the computer image differs from the Cartesian coordinate system, and considering the relative motion between the UAV and the target, the schematic diagram and transformation relationship are shown in Figure 4 and Table 2.

The red dotted line divides the UAV's real-time image into four regions of equal area: Area 1, Area 2, Area 3, and Area 4. Area 5, Area 6, Area 7, and Area 8 are the four dotted lines inside the image, and the red dot is the center of the image. P1, P2, P3, P4, P5, P6, P7, and P8 are different positions of the identified bullseye. In the calculation, the coordinates of the image frame center are subtracted from the corresponding geometric center coordinates of the target:

$$d_x = x_c - \frac{W}{2}, \qquad d_y = y_c - \frac{H}{2} \quad (10)$$

where $d_x$ and $d_y$ represent the pixel distances along the X axis and Y axis, respectively; $x_c$ and $y_c$ represent the horizontal and vertical coordinates of the geometric center of the target, respectively; and $W$ and $H$ represent the width and height of the image, respectively.

Table 2 shows that the sign of the X axis pixel distance is the same as the sign of the left and right motion, and the sign of the Y axis pixel distance is opposite to the sign of the up and down motion. Therefore, after PID control is used to calculate the speeds in the program, the up and down speed is negated, and the other values remain unchanged.
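The sign handling can be sketched as a small helper that turns the two PID outputs into integer velocity commands. The function name and the clamping range of -100 to 100 are assumptions for the example; the resulting pair would typically be passed as the `left_right_velocity` and `up_down_velocity` arguments of DJITelloPy's `send_rc_control`.

```python
def velocity_commands(pid_x_output, pid_y_output, limit=100):
    """Convert PID outputs into (left_right, up_down) velocity commands.

    The X output keeps its sign; the Y output is negated because the image
    Y axis points downward while positive up/down velocity means "up".
    """
    def clamp(value):
        return max(-limit, min(limit, value))

    left_right = clamp(int(round(pid_x_output)))
    up_down = clamp(-int(round(pid_y_output)))
    return left_right, up_down


# Target to the right of and below the frame center: move right and down.
cmd = velocity_commands(12.4, 30.6)

# Very large outputs are limited to the allowed range.
saturated = velocity_commands(-250, -250)
```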

4.2. UAV Roll, Lifting Speed Size Control

The pixel distance is corrected before PID control. During UAV control, the distance between the UAV and the target plane does not need to be measured accurately. Instead, the proportion of the image frame occupied by the target's pixel area is calculated. It is therefore only necessary to measure the target area proportion at the distance to be kept; relative distance control between the UAV and the target plane can then be maintained. This method keeps the relative distance between the UAV and the target even when the target size is unknown, as shown in Table 3 (the UAV screen is set to 480 × 480 pixels):

Then, the area of the target point is obtained, and after calculating the area proportion P, the stretching ratio coefficient between the UAV and the target plane at the current distance can be calculated by Formula (11).

The larger the object, the greater the recognition distance. Taking a small object, an apple, as an example and testing at a longer distance (150–275 cm), the target area in the image and its proportion are obtained, as Table 4 shows. The experimental results show that the method is also applicable in this case.

4.3. UAV Forward and Backward Speed Size Control

Repeated flight tests showed that when the forward or backward velocity of the UAV is larger than 15 cm/s, it generates a larger pitch angle (Figure 5) and causes a sudden jump in pixel distance, which affects the results of the PID formulas used for UAV control. To avoid this situation, the maximum forward or backward velocity threshold is set to 10.

At the same time, relative distance control method is used to control the forward and backward speed of the UAV. Thus, it is not necessary to obtain an accurate distance between the UAV and the target plane, but it is necessary to maintain a stable distance between the UAV and the target plane by controlling the proportion of the target area in the image frame within a certain range. The specific process of the algorithm is shown in Algorithm 2.

| Algorithm 2: UAV controlling algorithm |

Input:

Output: |
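The relative distance idea can be sketched as follows. This is not Algorithm 2 itself: it assumes a simple proportional rule on the area proportion, with a dead band between two hypothetical bounds `p_low` and `p_high` and a hypothetical `gain`, and it applies the maximum speed of 10 stated above.

```python
def forward_backward_speed(p, p_low, p_high, gain, max_speed=10):
    """Return a forward (+) or backward (-) speed from the area proportion p.

    p_low, p_high: assumed bounds of the acceptable area proportion.
    gain:          assumed proportional gain from proportion error to speed.
    """
    if p_low <= p <= p_high:
        return 0  # target size is in range: hold the current distance
    # Too small a target means the UAV is too far away, so fly forward;
    # too large a target means it is too close, so fly backward.
    nearest_bound = p_low if p < p_low else p_high
    speed = int(round(gain * (nearest_bound - p)))
    return max(-max_speed, min(max_speed, speed))


too_far = forward_backward_speed(0.02, 0.03, 0.05, gain=1000)
in_range = forward_backward_speed(0.04, 0.03, 0.05, gain=1000)
too_close = forward_backward_speed(0.20, 0.03, 0.05, gain=1000)
```

The clamp keeps the pitch angle small, which is the reason the text gives for limiting the forward and backward speed.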

5. Experiment

5.1. Experimental Platform Tello

Tello is equipped with a visual positioning system and an integrated flight control system for stable hovering and flight. The Tello front camera can take 5-megapixel photos and 720p HD video, and electronic image stabilization makes the pictures more stable. A photograph of the Tello is shown in Figure 6.

5.2. Experiment to Determine the Parameters of the PID Formula

5.2.1. Determining KP

By adjusting different values of proportionality coefficient KP and observing motion state of the UAV, the appropriate proportionality coefficient KP value of the UAV response speed is determined, so that UAV has sufficient response speed and no strong shock when moving.

The flight duration of each experiment is about 80 s. Once the image was obtained, the distance between the center of the UAV image frame and the center of the target was recorded. The experimental data are shown in Figure 7, where the horizontal axis is the UAV flight time (unit: s) and the vertical axis is the straight-line pixel distance between the center of the UAV image frame and the center of the target (unit: number of pixels).

By analyzing the experimental data, it can be seen that when the KP value is too large (KP > 0.1), the UAV power is too large and vibration is easily generated, so that the UAV flies out of the range in which the target is identifiable. The target can then no longer be identified, and less than 80 s of experimental data is recorded. The value of KP (Figure 7a–d) was then determined by the bisection method in the experiment. After comparison, with KP = 0.06 the UAV has sufficient power of moderate magnitude.

5.2.2. Determining KD

After basically determining the range of proportion coefficient KP, the differential coefficient KD is added to the joint experiment, and KD can also be adjusted to fine-tune KP to finally achieve the effect of steady-state.

When there are only two parameters, KP and KD, the proportional part provides the appropriate response speed of the UAV, and the differential part complements the difference. Eventually, after some time t, the proportional and differential contributions balance for the given KP and KD.

At this time, the steady-state error will be generated, and the influence caused by proportion P and the influence caused by differential D on UAV will cancel each other. Therefore, the experimental effect expected to be achieved in this part of the experiment is that the linear pixel distance between Tello UAV and geometric center of the target point will maintain a stable distance.

At the same time, the average error is calculated to measure the stability of the distance. For a set of experimental pixel distance samples $d_1, d_2, \ldots, d_n$, the average error is computed relative to the sample mean, where $\bar{e}$ is the average error of the experimental samples in this group and $\bar{d}$ is the average of the experimental samples in this group.
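A simple way to compute such a stability measure is sketched below. It assumes the average error is the mean absolute deviation of the samples from their mean; the function name and the sample values are hypothetical, and the paper's exact definition may differ.

```python
def average_error(samples):
    """Mean absolute deviation of pixel-distance samples from their mean."""
    if not samples:
        raise ValueError("at least one sample is required")
    mean = sum(samples) / len(samples)
    return sum(abs(s - mean) for s in samples) / len(samples)


# Hypothetical pixel distances recorded after the UAV has settled near the target.
err = average_error([10, 20, 30, 40])
```

A smaller value means the pixel distance stays closer to its mean, i.e., the UAV hovers more steadily.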

This group of experiments focuses on the stability of the pixel distance between the UAV and the target after the target has been found, so the flight data after 20 s (by which time the UAV is flying around the target) is selected as the sample to calculate the average error. The calculated average errors and parameter values are shown in Figure 8. All horizontal axes represent time (unit: s), and all vertical axes represent the straight-line pixel distance (unit: number of pixels) between the UAV image frame center and the geometric center of the target.

The proportion coefficient KP = 0.06 was determined from the previous set of experiments, and KD = 0.5 was initially set. The experimental results (Figure 8a) show that under this parameter combination, the response speed of the UAV is slow and the oscillation amplitude is large. When the differential coefficient is adjusted to KD = 0.25 and KD = 0.13, respectively (Figure 8b,c), the UAV pixel distance becomes increasingly stable, so the proportion coefficient KP = 0.06 is kept unchanged while the differential coefficient KD is progressively reduced. Comparing the average error of each experiment shows that with KP = 0.06 and KD = 0.13, the UAV flight is the most stable and the average error is the smallest.

5.2.3. Determining KI

Once a steady-state error appears, the integral coefficient KI is added to eliminate it, so that the image frame center finally coincides with the geometric center of the target.

Keeping KP = 0.06 and KD = 0.13 and setting KI = 0.01 (Figure 9a) has a great influence on the steady-state control of the UAV: the UAV produces large oscillations near the target, which indicates that the differential coefficient KD set at this time is too small and insensitive to the error, so the values of KP and KD are increased in experiment (b) (Figure 9b). The mean value of the pixel distance decreased, but oscillation still occurred after the target object was identified, indicating that the integral did not make up the steady-state error in time. Therefore, the integral coefficient KI was increased in Figure 9c,d, and KI achieved a more obvious effect in experiment (d). Keeping KI = 0.03, the values of KP and KD were further adjusted in Figure 9e–g, so that the UAV responds faster after identifying the target while maintaining a small average error. Finally, in Figure 9h, the reaction speed and average error of the UAV are better than those of the previous experiments, so the UAV flight control is considered to reach a locally optimal solution under the parameters of experiment (h). The PID coefficients KP = 0.05, KI = 0.035, and KD = 0.2 are thus determined.

5.3. External Force Disturbance Test of UAV

The experimental design of the steady-state algorithm for the UAV is as follows:

- (1) A small wooden block is suspended under the UAV by a string to simulate the aircraft under load;

- (2) A red apple is placed on a chair to serve as the object to be recognized by the UAV;

- (3) After the UAV takes off and hovers in a stable state, the string is pulled manually to simulate disturbance of the UAV by external forces.

The whole experiment was divided into four stages. The scene diagram and system terminal processing diagram of the takeoff stage are shown in Figure 10a,b. The UAV is in a stable state, and the center of the image frame coincides with the geometric center of the object. The scene diagram and the system terminal processing diagram of the predisturbance stage are shown in Figure 10c,d. External interference on the UAV is simulated by manually pulling the string. The scene diagram and the system terminal processing diagram of the disturbance stage are shown in Figure 10e,f. The UAV is pulled to the right, so the center of the target object shifts to the left and the UAV is in the disturbed state. The scene diagram and system terminal processing diagram of the post-disturbance stage are shown in Figure 10g,h. The UAV is restored to the stable state through the pixel correction algorithm, which is consistent with the takeoff state.

5.4. Perturbation Experiment of X, Y, Z Axes

This test simulated how stable the UAV remains in a harsh environment of strong wind with variable direction. After takeoff, once the UAV had reached a steady state, forces of varying direction were applied to it at different times. Meanwhile, the X, Y, and Z axis accelerations, the straight-line pixel distance to the target, and the area of the minimum circumscribed rectangle were recorded; the record length is 120 s.

A string is attached to the bottom of the UAV. While the UAV is in a stable hovering state, external force is applied to it by pulling the string to induce a disturbed state. The acceleration of the UAV can be obtained in real time during flight, and the weight of the UAV is known. According to Newton's second law, $F = ma$, the direction and magnitude of the external disturbance on the UAV can therefore be calculated from its mass and acceleration.
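The force calculation is a direct application of Newton's second law with a unit conversion. In the sketch below the function name is hypothetical, and the default mass of 0.08 kg is an assumption inferred from the force and acceleration pairs reported in the following subsections (for example, about 19.36 × 10⁻² N at 242 cm/s²).

```python
def disturbance_force(accel_cm_s2, mass_kg=0.08):
    """Return the force in newtons for an acceleration given in cm/s^2.

    mass_kg=0.08 is an assumed UAV mass, inferred from the reported data.
    The sign of the result follows the sign of the acceleration.
    """
    accel_m_s2 = accel_cm_s2 / 100.0  # convert cm/s^2 to m/s^2
    return mass_kg * accel_m_s2


# An acceleration of -242 cm/s^2 corresponds to about -0.1936 N.
force_x = disturbance_force(-242)
```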

5.4.1. X Axis Perturbation Experiment

In this experiment, the direction of force applied to the UAV was the X axis direction of the UAV coordinate system. At the same time, the acceleration of the UAV in the X axis direction and the size of the target pixel area were recorded, and the target area identified by the UAV was controlled in the range of 7500–10,000 units.

The results are shown in Figure 11. In this experiment, the UAV was perturbed three times, at 28.1 s, 48.4 s, and 68.9 s.

Observing the experimental results, it can be seen that a force of about 19.36 × 10⁻² N is applied to the UAV at t = 28.1 s, producing an acceleration of −242 cm/s² in the X axis direction. The disturbance has a great impact on the UAV, changing the target area by about 3000 units, and the UAV returns to a stable state after about 10 s.

At t = 48.4 s, a force of about 9.6 × 10⁻² N is applied to the UAV, producing an acceleration of −120 cm/s² in the X axis direction. This is a smaller disturbance, which changes the target area by about 5000 units. After about 12 s, the UAV basically returns to a stable state.

At t = 68.9 s, a force of about 6.88 × 10⁻² N is applied to the UAV, the weakest of the three disturbances, resulting in an acceleration of 86 cm/s² in the X axis direction. However, because the acceleration direction of this external force is different, the target area changes by nearly 14,000 units, and the UAV finally returns to stability after about 6 s.

When setting the forward and backward speed of the UAV, the factors that have inconsistent effects on target area change rate are considered, so the backward speed of the UAV is greater than the forward speed to compensate for area loss, so as to balance the recovery ability of the UAV in the X axis direction.

5.4.2. Y Axis Perturbation Experiment

In this experiment, the force applied to the UAV was always along the Y axis of the UAV coordinate system.

The results are shown in Figure 12. In this experiment, the UAV was perturbed three times, at 31.5 s, 49.3 s, and 69.9 s.

Observing the experimental results, it can be seen that a force of about 6.96 × 10⁻² N is applied to the UAV at t = 31.6 s, producing an acceleration of −87 cm/s² in the Y axis direction, which has little impact on the UAV. The pixel offset along the Y axis reaches 66 units, and the UAV returns to a stable state after 7 s.

At t = 49.3 s and t = 69.9 s, the force applied to the UAV is more than 9.5 × 10⁻² N. The maximum disturbance occurs at t = 49.3 s, where the resulting Y axis acceleration reaches −186 cm/s² and the Y axis pixel distance is shifted by 160 units. After being disturbed at t = 49.3 s and t = 69.9 s, the UAV returned to the stable state in about 10 s.

5.4.3. Z Axis Perturbation Experiment

Under this experiment, the direction of force applied to UAV is all the Z axis direction of the UAV coordinate system.

The results are shown in

Figure 13: In this experiment, the UAV was perturbed for totally three times at 37.3 s, 58.8 s, and 76.6 s, respectively.

By observing the experimental results, it can be seen that the Z axis acceleration has an initial value (the value is about −1000), so the relative acceleration is used to calculate the force of the UAV in the Z axis direction.

At t = 37.3 s, a small disturbance is applied to UAV, and UAV returns to a stable state after about 5 s.

At t = 58.8 s, the maximum disturbance of this experiment is applied to the UAV: the force is about 14.88 × 10⁻² N, the Z axis pixel offset is 163 units, and the UAV returns to a stable state after about 10 s.

At t = 76.6 s, a force of 12.96 × 10⁻² N is applied to the UAV, producing an acceleration of −162 cm/s² along the Z axis and a large disturbance to the UAV. The UAV returns to a stable state after about 8 s.
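The force and acceleration pairs reported in these experiments are consistent with Newton's second law, F = m·a, for a mass of about 0.08 kg (for example, 12.96 × 10⁻² N / 1.62 m/s² = 0.08 kg). The following minimal sketch shows that calculation, including the baseline subtraction used for the Z axis. The mass is inferred from the reported numbers rather than stated here, and treating the Z axis baseline as being in cm/s² is likewise an assumption; the function name is hypothetical.

```python
# Mass inferred from the reported force/acceleration pairs (assumption).
ASSUMED_MASS_KG = 0.08


def force_from_acceleration(accel_cm_s2: float,
                            baseline_cm_s2: float = 0.0,
                            mass_kg: float = ASSUMED_MASS_KG) -> float:
    """Estimate the disturbance force in newtons from a measured acceleration.

    The baseline (the reading at rest) is subtracted first, so that only the
    relative acceleration caused by the disturbance contributes to the force.
    """
    relative_cm_s2 = accel_cm_s2 - baseline_cm_s2
    return mass_kg * relative_cm_s2 / 100.0  # cm/s^2 -> m/s^2


# X axis: 86 cm/s^2 with no baseline offset.
print(force_from_acceleration(86.0))
# Z axis: a raw reading of -1162 with an assumed baseline of -1000
# gives a relative acceleration of -162 cm/s^2.
print(force_from_acceleration(-1162.0, baseline_cm_s2=-1000.0))
```

The sign of the result follows the sign of the relative acceleration; the magnitudes correspond to the forces quoted in the text.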

6. Discussion

In recent years, small UAVs have been applied ever more widely, for example in aerial photography, rescue operations, exploration and logistics. Because steady-state flight directly determines how well a flight mission is completed, the steady-state control of UAVs has great research prospects and value. However, wind and other factors affect the control of UAVs, so stable flight is very important, especially in uncertain environments. Compared with other sensors such as radar and laser range finders, cameras are more common, so an algorithm that relies only on a camera is suitable for more UAV platforms.

The essence of this method is to map a 3D object with depth information onto a 2D plane and to make corrections according to the object center. In the experiments of this paper, only the roll angle is verified.

As shown in Figure 14, when the UAV is in a stable state under different yaw, roll and pitch angles, the geometric center of the target object projected onto the image frame of the UAV camera remains unchanged. When the UAV is disturbed by an external force, the geometric center of the object shifts in the image frame, and the correction algorithm returns the UAV to its original state. The same approach also applies to other attitudes of the UAV (yaw and pitch angle control).
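The idea of correcting a pixel offset for the distance to the target can be sketched as follows. This is a minimal illustration under a pinhole-camera assumption, in which the apparent width of the target is inversely proportional to its distance (similar triangles); it is not the exact correction formula of this paper. The names `PID`, `distance_scale` and `corrected_command`, the reference width and the gains are all hypothetical.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class PID:
    """Textbook PID controller; the gains used below are illustrative only."""
    kp: float
    ki: float
    kd: float
    integral: float = 0.0
    prev_error: Optional[float] = None

    def update(self, error: float, dt: float) -> float:
        self.integral += error * dt
        if self.prev_error is None:
            derivative = 0.0  # no previous sample on the first call
        else:
            derivative = (error - self.prev_error) / dt
        self.prev_error = error
        return self.kp * error + self.ki * self.integral + self.kd * derivative


def distance_scale(target_width_px: float, reference_width_px: float) -> float:
    """Ratio d / d_ref from similar triangles (apparent width ~ 1 / distance)."""
    if target_width_px <= 0 or reference_width_px <= 0:
        raise ValueError("widths must be positive")
    return reference_width_px / target_width_px


def corrected_command(pid: PID, pixel_error: float, target_width_px: float,
                      reference_width_px: float, dt: float) -> float:
    """Scale the PID output so equal pixel errors at different distances
    produce commands proportional to the real lateral offset."""
    scale = distance_scale(target_width_px, reference_width_px)
    return pid.update(pixel_error, dt) * scale


# The target appears half as wide as at the reference distance, so it is
# assumed to be twice as far away and the command is doubled.
pid = PID(kp=0.5, ki=0.0, kd=0.0)
print(corrected_command(pid, pixel_error=40.0, target_width_px=100.0,
                        reference_width_px=200.0, dt=0.1))
```

Scaling the controller output rather than retuning the gains keeps a single set of PID parameters valid over a range of target distances, which is the motivation for a correction step of this kind.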

The proposed algorithm relies only on the image frames provided by the front camera of the UAV to achieve steady flight. It is therefore suitable for any UAV platform equipped with a front camera.

7. Conclusions

In addition to the pixel distance between the target center and the center of the UAV frame in the two-dimensional image plane, the proposed method also accounts for the varying distance between the UAV and the target in three-dimensional space. The similar-triangle principle is used to stretch the pixel distance so as to achieve accurate control of the UAV. In this paper, color recognition is combined with target detection to analyze the frames returned by the UAV to the terminal and identify a specific target, and a pixel distance correction algorithm is proposed to modify the results of the PID control algorithm, which solves the problem of inaccurate UAV control caused by varying distance from the target. By analyzing the results of the PID control algorithm and the pixel distance correction algorithm and sending control commands to the UAV in a closed loop, the system achieves steady-state control of the UAV and gives it a certain ability to resist external interference. Finally, disturbance experiments are conducted to test the anti-interference ability of the UAV, and the results show that the proposed algorithm can be used for steady-state control of UAVs in uncertain environments.

Limitations: The proposed method achieves steady-state control of a UAV by identifying objects with a visual sensor. When the object is occluded or far away, or when the ambient light is weak, the tracking of the object by the UAV is greatly degraded, and the stable state of the UAV is affected accordingly.

Author Contributions

Conceptualization, R.T. and L.N.; methodology, J.W., R.T. and L.N.; software, R.T.; validation, R.T. and L.N.; formal analysis, R.T.; investigation, R.T. and L.N.; resources, R.T.; data curation, R.T.; writing—original draft preparation, R.T. and J.W.; writing—review and editing, J.W. and L.N.; visualization, R.T. and L.N.; supervision, J.W.; project administration, J.W.; funding acquisition, J.W. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the Scientific Research Project of Tianjin Education Commission (2020KJ026) and the Open Fund Project of the Information Security Evaluation Center of the Civil Aviation University of China (ISECCA-202007).

Informed Consent Statement

Not applicable.

Data Availability Statement

Not applicable.

Acknowledgments

The authors thank Jan Vitek, Ales Plsek, and Lei Zhao for their help while the first author studied in the S3 lab at Purdue University as a visiting scholar from September 2010 to September 2012. We also thank the anonymous reviewers for their valuable comments.

Conflicts of Interest

The authors declare no conflict of interest.

References

1. Mishra, B.; Garg, D.; Narang, P.; Mishra, V. Drone-surveillance for search and rescue in natural disaster. Comput. Commun. 2020, 156, 1–10.

2. Wang, S.; Han, Y.; Chen, J.; Zhang, Z.; Liu, X. Active disturbance rejection control of UAV attitude based on iterative learning control. Chin. J. Aeronaut. 2020, 41, 324112–324125.

3. Cao, Y.; Cheng, X.; Cheng, J. Concentrated Coverage Path Planning Algorithm of UAV Formation for Aerial Photography. IEEE Sens. J. 2022, 22, 11098–11111.

4. Li, X.; Tan, J.; Liu, A.; Vijayakumar, P.; Kumar, N.; Alazab, M. A Novel UAV-Enabled Data Collection Scheme for Intelligent Transportation System Through UAV Speed Control. IEEE Trans. Intell. Transp. Syst. 2020, 22, 2100–2110.

5. Qian, L.; Liu, H. Path-Following Control of a Quadrotor UAV with a Cable-Suspended Payload Under Wind Disturbances. IEEE Trans. Ind. Electron. 2020, 67, 2021–2029.

6. Jia, Z.; Yu, J.; Mei, Y.; Chen, Y.; Shen, Y.; Ai, X. Integral backstepping sliding mode control for quadrotor helicopter under external uncertain disturbances. Aerosp. Sci. Technol. 2017, 68, 299–307.

7. Wu, Y.; Ding, Z.; Xu, C.; Gao, F. External Forces Resilient Safe Motion Planning for Quadrotor. IEEE Robot. Autom. Lett. 2021, 6, 8506–8513.

8. Hoang, V.T.; Phung, M.D.; Ha, Q.P. Adaptive twisting sliding mode control for quadrotor unmanned aerial vehicles. In Proceedings of the 2017 11th Asian Control Conference (ASCC), Gold Coast, Australia, 17–20 December 2017.

9. Labbadi, M.; Cherkaoui, M.; Houm, Y.E.; Guisser, M.H. Modeling and Robust Integral Sliding Mode Control for a Quadrotor Unmanned Aerial Vehicle. In Proceedings of the 2018 6th International Renewable and Sustainable Energy Conference (IRSEC), Rabat, Morocco, 5–8 December 2018.

10. Perozzi, G.; Efimov, D.; Biannic, J.; Planckaert, L. Trajectory tracking for a quadrotor under wind perturbations: Sliding mode control with state-dependent gains. J. Frankl. Inst. 2018, 355, 4809–4838.

11. Hao, L.; Zhang, H.; Guo, G.; Li, H. Quantized Sliding Mode Control of Unmanned Marine Vehicles: Various Thruster Faults Tolerated with a Unified Model. IEEE Trans. Syst. Man Cybern. Syst. 2021, 51, 2012–2026.

12. He, W.; Zhang, J.; Li, H.; Liu, S.; Wang, Y.; Lv, B.; Wei, J. Optimal thermal management of server cooling system based cooling tower under different ambient temperatures. Appl. Therm. Eng. 2022, 207, 118176–118185.

13. Mac, T.; Copot, C.; Keyser, R.D.; Ionescu, C.M. The development of an autonomous navigation system with optimal control of an UAV in partly unknown indoor environment. Mechatronics 2018, 49, 187–196.

14. Okasha, M.; Kralev, J.; Islam, M. Design and Experimental Comparison of PID, LQR and MPC Stabilizing Controllers for Parrot Mambo Mini-Drone. Aerospace 2022, 9, 298.

15. Yoon, J.; Doh, J. Optimal PID control for hovering stabilization of quadcopter using long short term memory. Adv. Eng. Inform. 2022, 53, 101679–101690.

16. Maqsood, H.; Qu, Y. Nonlinear Disturbance Observer Based Sliding Mode Control of Quadrotor Helicopter. J. Electr. Eng. Technol. 2020, 15, 1453–1461.

17. Labbadi, M.; Cherkaoui, M. Robust Adaptive Global Time-varying Sliding-mode Control for Finite-time Tracker Design of Quadrotor Drone Subjected to Gaussian Random Parametric Uncertainties and Disturbances. Int. J. Control. Autom. Syst. 2021, 19, 2213–2223.

18. Pal, M.; Das, S.; Kumar, R.; Das, S.; Banerjee, S.; Shekhar, S.; Ghosh, S. Terminal Sliding Mode Control (TSMC) based Cooperative Load Transportation using Multiple Drones. In Proceedings of the 2022 IEEE International IOT, Electronics and Mechatronics Conference (IEMTRONICS), Toronto, ON, Canada, 1–4 June 2022.

19. Saif, A.; Aliyu, A.; Dhaifallah, M.; Elshafei, M. Decentralized Backstepping Control of a Quadrotor with Tilted-rotor under Wind Gusts. Int. J. Control. Autom. Syst. 2018, 16, 2458–2472.

20. Saibi, A.; Boushaki, R.; Belaidi, H. Backstepping Control of Drone. Eng. Proc. 2022, 14, 4–12.

21. Al Younes, Y.; Barczyk, M. A Backstepping Approach to Nonlinear Model Predictive Horizon for Optimal Trajectory Planning. Robotics 2022, 11, 87.

22. Bellahcene, Z.; Bouhamida, M.; Denai, M.; Assali, K. Adaptive neural network-based robust H∞ tracking control of a quadrotor UAV under wind disturbances. Int. J. Autom. Control. 2021, 15, 28–57.

23. Madruga, S.; Tavares, A.; Luiz, S.; Nascimento, T.; Lima, A. Aerodynamic Effects Compensation on Multi-Rotor UAVs Based on a Neural Network Control Allocation Approach. IEEE/CAA J. Autom. Sin. 2022, 9, 295–312.

24. Cai, Z.; Lou, J.; Zhao, J.; Wu, K.; Liu, N.; Wang, Y. Quadrotor trajectory tracking and obstacle avoidance by chaotic grey wolf optimization-based active disturbance rejection control. Mech. Syst. Signal Process. 2019, 128, 636–654.

25. AlAli, A.; Fareh, R.; Sinan, S.; Bettayeb, M. Control of Quadcopter Drone Based on Fractional Active Disturbances Rejection Control. In Proceedings of the 2021 14th International Conference on Developments in eSystems Engineering (DeSE), Sharjah, United Arab Emirates, 7–10 December 2021.

26. Li, H.; Wang, J.; Han, C.; Zhou, M.; Dong, Z. Leader-follower formation control of multiple UAVs based on ADRC: experiment research. In Proceedings of the 2021 4th IEEE International Conference on Industrial Cyber-Physical Systems (ICPS), Victoria, BC, Canada, 10–12 May 2021.

27. Soto, S.M.O.; Cacace, J.; Ruggiero, F.; Lippiello, V. Active Disturbance Rejection Control for the Robust Flight of a Passively Tilted Hexarotor. Drones 2022, 6, 258.

28. Najm, A.; Ibraheem, I. Nonlinear PID controller design for a 6-DOF UAV quadrotor system. Eng. Sci. Technol. 2019, 22, 1087–1097.

29. Muñoz, F.; González-Hernández, I.; Salazar, S.; Espinoza, E.; Lozano, R. Second order sliding mode controllers for altitude control of a quadrotor UAS: Real-time implementation in outdoor environments. Neurocomputing 2017, 233, 61–71.

30. Liang, Z.; Yi, L.; Shida, X.; Han, F. Multiple UAVs cooperative formation forming control based on back-stepping-like approach. J. Syst. Eng. Electron. 2018, 29, 816–822.

31. Wang, B.; Jahanshahi, H.; Volos, C.; Bekiros, S.; Khan, M.A.; Agarwal, P.; Aly, A.A. A New RBF Neural Network-Based Fault-Tolerant Active Control for Fractional Time-Delayed Systems. Electronics 2021, 10, 1501.

32. Yang, H.; Cheng, L.; Xia, Y.; Yuan, Y. Active Disturbance Rejection Attitude Control for a Dual Closed-Loop Quadrotor Under Gust Wind. IEEE Trans. Control. Syst. Technol. 2018, 26, 1400–1405.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).