1. Introduction

Given China’s limited land resources, rapid urbanization poses a serious threat to the country’s land-resource security. At the same time, rapid urbanization is increasing the intensity of land use, and the over-exploitation of land is having a significant negative impact on the ecological environment, producing adverse effects such as severe haze, urban heat islands, and the degradation of natural urban ecosystem services [1]. In the context of rapid urbanization, accurate and rapid urban-land-use mapping is a prerequisite for studying land-resource issues and provides a basis for decision-making in land-use management, urban management, and sustainable development. Currently, land-use classification relies mostly on optical images, but this approach is susceptible to cloud and haze disturbances and leads to classification errors in urban environments with high soil moisture [2,3]. Previous studies report that overall accuracy decreases by 10–20% when about 50% of an optical image is covered by cloud, that adding SAR data can increase overall accuracy by about 5%, and that machine-learning classification accuracy is low, mostly below 85%, when optical images are disturbed by soil moisture, cloud cover, and haze [4,5,6]. Synthetic aperture radar (SAR) is an active remote-sensing technique that operates in the microwave range, so it can penetrate clouds and haze and detect the geometric and dielectric properties of surface objects. In addition, the backscatter coefficient of SAR data can differentiate between land-use types [7]. Therefore, synergistic active-passive remote-sensing data, with their all-day, all-weather, comprehensive coverage and high acquisition frequency, can improve urban-land-use classification and rapid mapping.
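SAR backscatter coefficients (σ⁰) are commonly converted from linear power units to decibels before they are used as classification features. The sketch below is a minimal illustration under stated assumptions, not this study's preprocessing: it converts σ⁰ to dB and derives a VH/VV cross-polarization ratio. The ratio feature, the noise floor, and the function names are hypothetical choices for illustration.

```python
import math


def to_db(sigma0_linear: float, floor: float = 1e-6) -> float:
    """Convert a linear backscatter coefficient to decibels (10 * log10)."""
    # Clamp to a small positive floor (assumed value) so zero pixels do not give -inf.
    return 10.0 * math.log10(max(sigma0_linear, floor))


def vh_vv_ratio_db(vh_linear: float, vv_linear: float) -> float:
    """Hypothetical extra feature: cross-/co-polarization ratio, i.e. VH_dB - VV_dB."""
    return to_db(vh_linear) - to_db(vv_linear)


# Hypothetical linear sigma0 values for a single pixel.
vv, vh = 0.1, 0.01
print(round(to_db(vv), 2), round(to_db(vh), 2), round(vh_vv_ratio_db(vh, vv), 2))
# -10.0 -20.0 -10.0
```

Working in dB turns multiplicative speckle-like variation into additive offsets, which is why dB values are the usual input to both classical and deep-learning classifiers.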

Currently, medium-resolution (10–100 m) satellite imagery is widely used for land-use mapping [8,9,10]; for example, Landsat TM, ETM+, and Sentinel-2 multispectral images have been used to produce global land-use classification maps at 30 m and 10 m resolution [11]. Sentinel-1 and Sentinel-2 are new-generation satellite platforms that are widely used in land-use studies because their data are free and easily accessible. Compared with the Landsat series, they offer higher resolution and a shorter revisit cycle and can provide more refined land-use classification maps. The 13 bands of Sentinel-2 MSI data provide rich feature information, and Sentinel-2 MSI data have demonstrated their value in area extraction, land-use monitoring, and mangrove extent mapping. However, Sentinel-2 MSI data are limited by weather conditions such as clouds, smog, and haze, under which classification accuracy decreases too much to meet application requirements. By contrast, SAR data can be acquired during the rainy season, when cloud cover is severe or soil moisture is high, without being affected by these conditions [12,13,14,15]. In addition, Sentinel-1 SAR data have been used for flood monitoring, crop yield estimation, and waterbody extraction [16,17], and these results support the use of SAR data for land-use classification.

When clouds, smoke, and haze associated with high surface humidity obstruct the use of optical imagery in a study area, SAR data become an alternative [18,19,20]. Numerous studies have combined optical and radar data for land-use classification in non-urban areas and achieved improved results. Walker et al. [21] combined SAR data with Landsat optical imagery for land-use mapping of the Brazilian Amazon and verified the feasibility of combining SAR data with optical imagery for large-scale forest mapping. Colson et al. [22] combined Sentinel-1 SAR and Sentinel-2 MSI data to monitor the summer 2015 wildfire in Sierra de Gata, Spain, and to study the resulting soil erosion. Heckel et al. [23] mapped forest cover in South Africa and Thuringia by fusing Sentinel-1 SAR and Sentinel-2 MSI data and demonstrated the potential of synergistically combined active and passive remote-sensing data for mapping forest cover on a global scale. Although these studies verified the usefulness of combined active and passive remote-sensing data in land-use studies, they targeted large non-urban regions. Land-use classification in urban areas with Sentinel-1 SAR data remains challenging because urban-land-use classes are complex. Therefore, the fusion of Sentinel-1 SAR and Sentinel-2 MSI data for urban-land-use classification merits further investigation.
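Pixel-level fusion of Sentinel-1 and Sentinel-2 data is often performed by stacking co-registered bands into a single feature cube. The NumPy sketch below assumes both rasters have already been resampled to a common 10 m grid; the two SAR channels (VV and VH in dB) and ten MSI bands are illustrative assumptions, and the per-band standardization is one common choice rather than the procedure of the studies cited above.

```python
import numpy as np


def stack_sar_optical(sar: np.ndarray, msi: np.ndarray) -> np.ndarray:
    """Pixel-level fusion by band stacking.

    sar: (H, W, C_sar) array, e.g. VV and VH backscatter in dB (assumed).
    msi: (H, W, C_msi) array of Sentinel-2 reflectances (assumed 0-1 scale).
    Both rasters are assumed to be co-registered on the same grid already.
    """
    if sar.shape[:2] != msi.shape[:2]:
        raise ValueError("SAR and MSI rasters must share the same height and width")
    fused = np.concatenate([sar, msi], axis=-1).astype(np.float64)
    # Standardize each band so dB values and reflectances share a comparable scale.
    mean = fused.mean(axis=(0, 1), keepdims=True)
    std = fused.std(axis=(0, 1), keepdims=True)
    return (fused - mean) / np.where(std > 0, std, 1.0)


rng = np.random.default_rng(0)
sar = rng.normal(-12.0, 3.0, size=(64, 64, 2))   # hypothetical VV, VH in dB
msi = rng.uniform(0.0, 0.5, size=(64, 64, 10))   # hypothetical 10 MSI bands
fused = stack_sar_optical(sar, msi)
print(fused.shape)  # (64, 64, 12)
```

Without standardization, SAR channels around -12 dB and reflectances below 1 would differ by an order of magnitude, which can slow or bias gradient-based training.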

Machine learning is widely used in remote-sensing image classification because it can identify feature information within an automated framework. Many scholars have combined remote-sensing data with machine-learning algorithms for land-use classification. For example, Zhang et al. [24] used the random forest algorithm to classify farmland in China and Canada by combining texture features and spectral indices and verified that machine-learning algorithms with integrated spectral indices improve land-use classification accuracy. Mandal et al. [25] used a support vector machine (SVM) algorithm to classify crops in the Vijayawada region of India and, in a comparison with the decision tree, K-nearest neighbor, and random forest algorithms, found the SVM to be superior. However, such machine-learning methods extract only shallow spectral features from remote-sensing data, which prevents their classification accuracy from meeting production requirements. In addition, scene segmentation models based on Vision Transformers have attracted significant attention in urban-land-use classification research because of their accurate classification and high stability, but they often require numerous labeled samples, which demands considerable time, manpower, and material resources and thus cannot meet the demand for rapid urban-land-use mapping [26,27].
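Texture features of the kind used by Zhang et al. [24], and the GLCM features derived from SAR data in the Conclusions, are typically computed from a gray-level co-occurrence matrix. The pure-Python sketch below handles one pixel offset and assumes the image has already been quantized to integer gray levels in [0, levels); the choice of contrast, homogeneity, and angular second moment as outputs is illustrative.

```python
def glcm(image, levels, dx=1, dy=0, symmetric=True):
    """Normalized gray-level co-occurrence matrix for a single pixel offset (dx, dy)."""
    counts = [[0] * levels for _ in range(levels)]
    rows, cols = len(image), len(image[0])
    for r in range(rows):
        for c in range(cols):
            r2, c2 = r + dy, c + dx
            if 0 <= r2 < rows and 0 <= c2 < cols:
                i, j = image[r][c], image[r2][c2]
                counts[i][j] += 1
                if symmetric:
                    counts[j][i] += 1  # count the pair in both directions
    total = sum(map(sum, counts))
    return [[v / total for v in row] for row in counts]


def texture_features(p):
    """Haralick-style contrast, homogeneity, and angular second moment (ASM)."""
    n = len(p)
    pairs = [(i, j) for i in range(n) for j in range(n)]
    contrast = sum(p[i][j] * (i - j) ** 2 for i, j in pairs)
    homogeneity = sum(p[i][j] / (1 + (i - j) ** 2) for i, j in pairs)
    asm = sum(p[i][j] ** 2 for i, j in pairs)
    return {"contrast": contrast, "homogeneity": homogeneity, "asm": asm}


# A 4 x 4 window quantized to 4 gray levels, horizontal neighbor offset.
window = [[0, 0, 1, 1], [0, 0, 1, 1], [0, 2, 2, 2], [2, 2, 3, 3]]
features = texture_features(glcm(window, levels=4))
print(round(features["contrast"], 4))  # 0.5833
```

In practice these features are computed in a sliding window around every pixel and appended to the feature stack, one band per feature and offset.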

The emergence of deep-learning algorithms, represented by convolutional neural networks (CNNs), has provided new approaches to remote-sensing image classification [28]. By leveraging their powerful feature-extraction and feature-representation capabilities, these algorithms have achieved remarkable results in land-use classification. For example, Zhao et al. [29] compared the classification performance of five deep-learning models on time-series Sentinel-2 images, and a one-dimensional CNN was used successfully to map crops with higher accuracy. Lee et al. [30] proposed a two-dimensional CNN (2D-CNN) structure that adds residual connections to the 2D-CNN. Ji et al. [31] classified crops by using a three-dimensional CNN (3D-CNN) and spatiotemporal remote-sensing data; the 3D-CNN enhanced feature extraction through an active-learning strategy and produced more accurate classification results than 2D-CNNs. Compared with machine-learning methods, these deep-learning methods overcome defects of traditional classification algorithms such as poor robustness and weak extraction of discriminative features. However, because of their structure, CNNs are prone to vanishing gradients and feature loss, which leads to inadequate extraction of information from the data. Therefore, a classification model is needed that can fully exploit the spatial and spectral information of remote-sensing data.
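The difference between 2D and 3D convolution mentioned above is easiest to see through output shapes. In this naive NumPy sketch (single kernel, no padding, stride 1, written as cross-correlation as in most deep-learning frameworks), a 2D kernel spans all bands and collapses the spectral axis, whereas a 3D kernel also slides along the bands and keeps a spectral dimension. The 9 × 9 patch size and 15 input bands are hypothetical values.

```python
import numpy as np


def conv2d_valid(x: np.ndarray, k: np.ndarray) -> np.ndarray:
    """Single-kernel 2D convolution over a (H, W, C) patch; the kernel covers all C bands."""
    H, W, C = x.shape
    kh, kw, kc = k.shape
    if kc != C:
        raise ValueError("a 2D kernel must span every input band")
    out = np.empty((H - kh + 1, W - kw + 1))
    for i in range(out.shape[0]):
        for j in range(out.shape[1]):
            out[i, j] = np.sum(x[i:i + kh, j:j + kw, :] * k)
    return out


def conv3d_valid(x: np.ndarray, k: np.ndarray) -> np.ndarray:
    """Single-kernel 3D convolution: the kernel also slides along the spectral axis."""
    H, W, C = x.shape
    kh, kw, kd = k.shape
    out = np.empty((H - kh + 1, W - kw + 1, C - kd + 1))
    for i in range(out.shape[0]):
        for j in range(out.shape[1]):
            for d in range(out.shape[2]):
                out[i, j, d] = np.sum(x[i:i + kh, j:j + kw, d:d + kd] * k)
    return out


patch = np.random.default_rng(0).normal(size=(9, 9, 15))  # hypothetical fused-data patch
print(conv2d_valid(patch, np.ones((3, 3, 15))).shape)  # (7, 7)
print(conv3d_valid(patch, np.ones((3, 3, 7))).shape)   # (7, 7, 9)
```

Hybrid designs typically apply 3D convolutions first to learn joint spectral-spatial features and then 2D convolutions on the result, which keeps the parameter count lower than an all-3D network.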

In summary, combining SAR data and optical images has significant potential for urban-land-use classification. However, machine-learning and deep-learning methods have rarely been trained with combined active-passive remote-sensing data [32,33,34,35]; most studies use optical images alone, so the effectiveness of these methods with fused data requires further validation. Moreover, for land-use-classification applications, medium-resolution images from satellite sensors such as Landsat or Sentinel are desirable because they offer improved resolution, broad spatial coverage, and free and easy access [8,9,10], making them an excellent choice for land-use-classification studies.

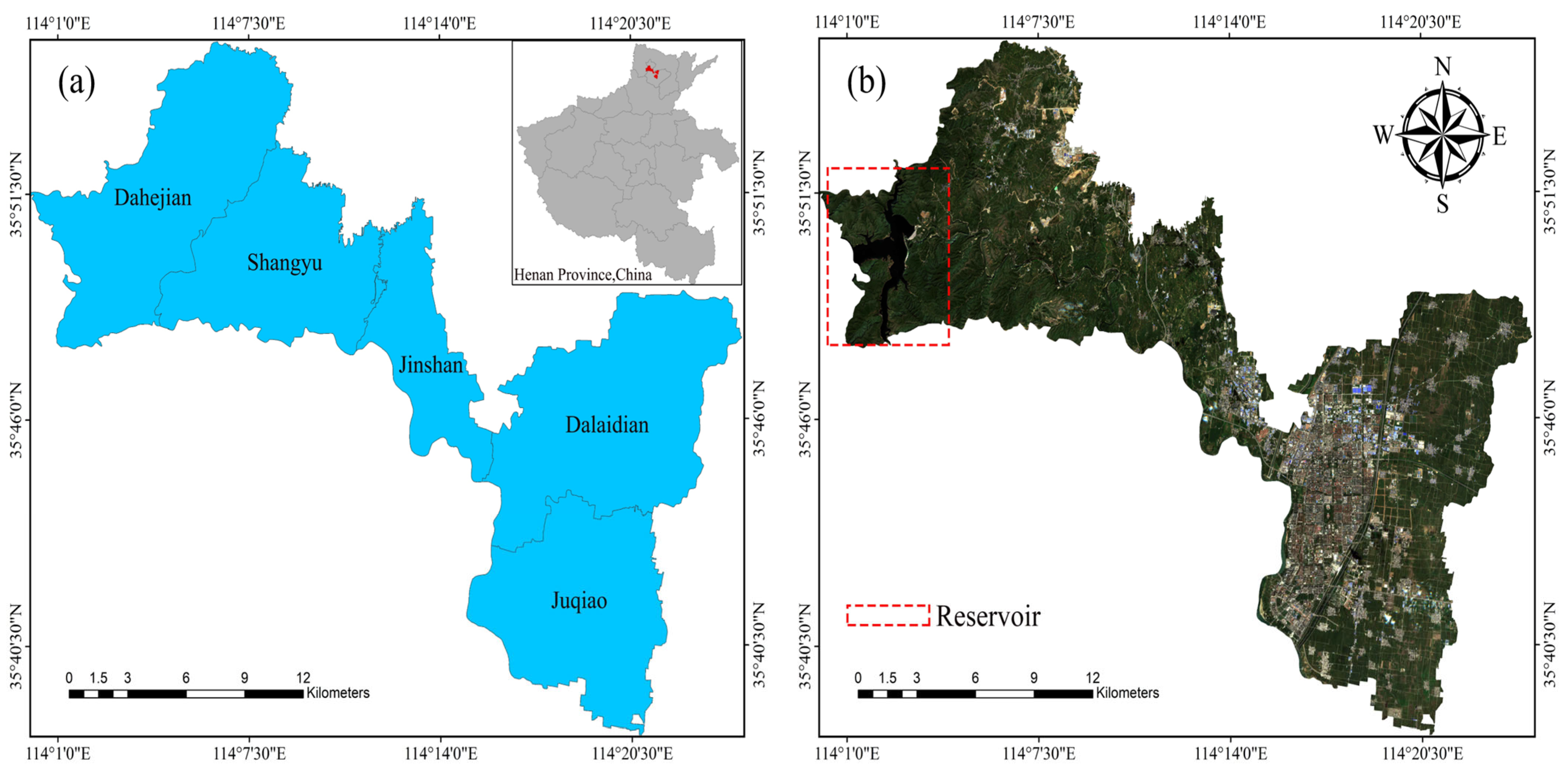

We thus aim to overcome two problems that lead to poor land-use classification: classification errors in optical images caused by cloud cover and haze, and the information loss and overfitting of CNNs in urban environments with high surface-soil moisture. Combining active and passive remote-sensing data, we construct a hybrid CNN with multiple attention mechanisms and residual structures that avoids losing feature information as the network deepens and thereby fully exploits the spatial and spectral information in active and passive remote-sensing data for land-use classification. Using Qibin District, Henan Province, China, as the study area, we propose a multi-attention module hybrid CNN (MAM-HybridNet) for land-use classification based on combined Sentinel-1A SAR and Sentinel-2B MSI data. We explore the potential of fused SAR and MS data for urban-land-use classification by verifying the effectiveness of the proposed CNN and producing land-use classification maps that provide a decision-making basis for urban development, the maintenance of ecological services, and land-use policy. The main contributions of this study are as follows:

A land-use classification model (MAM-HybridNet) that combines 2D- and 3D-CNNs with a multi-attention mechanism is proposed.

A new residual spatial and spectral attention module is introduced to extract deep discriminative features from remote-sensing images.

The enhanced vegetation index (EVI), normalized difference built-up index (NDBI), and modified normalized difference water index (MNDWI) are added to fused Sentinel-1A SAR and Sentinel-2B MSI data to explore their effect on land-use classification.

The land-use classification performance of the proposed model is compared with that of commonly used machine-learning and deep-learning methods.
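The three spectral indices listed above have standard definitions in terms of surface reflectance. The sketch below assumes the common Sentinel-2 band mapping (B2 blue, B3 green, B4 red, B8 NIR, B11 SWIR-1) and reflectances scaled to 0–1; which bands this study used, and how the 20 m SWIR band was resampled, are not stated here. Because the functions are plain arithmetic, they also work elementwise on NumPy arrays, so their outputs can be appended to a fused SAR-MS stack as extra bands.

```python
def evi(blue, red, nir):
    """Enhanced Vegetation Index (reflectances on a 0-1 scale)."""
    return 2.5 * (nir - red) / (nir + 6.0 * red - 7.5 * blue + 1.0)


def ndbi(nir, swir):
    """Normalized Difference Built-up Index: built-up surfaces reflect more in SWIR than NIR."""
    return (swir - nir) / (swir + nir)


def mndwi(green, swir):
    """Modified Normalized Difference Water Index: water is bright in green and dark in SWIR."""
    return (green - swir) / (green + swir)


# Hypothetical reflectances for one vegetated pixel (assumed band mapping above).
px = {"B2": 0.05, "B3": 0.08, "B4": 0.06, "B8": 0.30, "B11": 0.15}
print(
    round(evi(px["B2"], px["B4"], px["B8"]), 3),
    round(ndbi(px["B8"], px["B11"]), 3),
    round(mndwi(px["B3"], px["B11"]), 3),
)
# 0.467 -0.333 -0.304
```

For this vegetated pixel, EVI is clearly positive while NDBI and MNDWI are negative, which is the kind of separation that helps distinguish vegetation, built-up land, and water.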

6. Conclusions

Accurate land-use classification is an important tool for monitoring urban development, protecting the urban environment, restoring urban ecology, and supporting the sustainable development of urban-land resources. This study proposes a hybrid CNN with a multi-attention module (MAM-HybridNet) that combines active-passive remote-sensing data and spectral indices to improve land-use classification accuracy in a typical city with high surface soil moisture, using Qibin District, Henan Province, as an example. The results indicate the following:

The least accurate classification is produced by SAR images alone, with overall accuracy (OA) = 79.53%, kappa coefficient (KC) = 0.73, and average accuracy (AA) = 77.83%. SAR images produce more accurate classifications for forest and water.

When MS data are used alone, land-use classification achieves OA = 93.48%, KC = 0.91, and AA = 92.86%. Combining SAR and MS data raises the OA to 95.10%, an improvement of 1.62 percentage points. When the deep information of SAR data (GLCM texture features) is also considered, the OA reaches 96.59%, an even greater improvement of 3.11 percentage points over MS data alone.

Combining the EVI, MNDWI, and NDBI spectral indices with SAR and MS data produces the most accurate classification results, with OA = 98.87%, KC = 0.98, and AA = 98.36%. Added as characteristic variables, the spectral indices improve the discrimination between feature classes and reduce the confusion between bare land and construction land and between forest and cultivated land.

Compared with KNN, SVM, 2D-CNN, 3D-CNN, and HybridSN, the proposed method produces the best classification results when “All bands data” are used as input, with OA = 98.87%. The standard deviation over ten experimental runs is ±0.53, which further demonstrates the accuracy and stability of the proposed MAM-HybridNet model. These results confirm that SAR data and spectral indices can improve the classification accuracy of optical images. The synergistic active-passive remote-sensing classification approach can be generalized to other typical urban areas with high surface soil moisture and with clouds, haze, and smog.
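The OA, KC, and AA values reported above are conventionally computed from a confusion matrix of validation pixels: OA is the fraction of correctly classified pixels, KC is Cohen's kappa coefficient, and AA is the mean of the per-class (producer's) accuracies. The minimal sketch below uses a hypothetical three-class matrix and assumes every class has at least one reference pixel.

```python
def accuracy_metrics(cm):
    """Return (OA, kappa, AA) for a square confusion matrix.

    cm[i][j] = number of validation pixels of reference class i predicted as class j.
    """
    n = len(cm)
    total = sum(sum(row) for row in cm)
    diag = sum(cm[i][i] for i in range(n))
    oa = diag / total
    row_sums = [sum(cm[i]) for i in range(n)]
    col_sums = [sum(cm[i][j] for i in range(n)) for j in range(n)]
    # Expected agreement by chance, from the row and column marginals.
    pe = sum(r * c for r, c in zip(row_sums, col_sums)) / total ** 2
    kappa = (oa - pe) / (1 - pe)
    # Average accuracy: mean of per-class producer's accuracy (recall).
    aa = sum(cm[i][i] / row_sums[i] for i in range(n)) / n
    return oa, kappa, aa


cm = [[50, 2, 3], [5, 40, 5], [0, 4, 41]]  # hypothetical validation counts
oa, kappa, aa = accuracy_metrics(cm)
print(round(oa, 4), round(kappa, 4), round(aa, 4))  # 0.8733 0.8096 0.8734
```

Kappa discounts agreement expected by chance, so it is lower than OA whenever class proportions are unbalanced, while AA weights every class equally regardless of its pixel count.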

In addition, the proposed MAM-HybridNet model has advantages over the other classification methods in feature extraction and feature representation, which further improves classifier performance and classification accuracy. Its 2D-CNN and 3D-CNN components compensate for the under-exploitation of spectral and spatial features, respectively, and the model overcomes the overfitting and feature-information loss of HybridSN networks. The proposed model can be extended to applications such as crop classification, vegetation-type classification, and flood monitoring. However, the model still has shortcomings: introducing the multi-attention mechanism and residual structure makes the network structure more complex. In future research, we therefore plan to continue improving the network structure to produce a lightweight model with optimal classification accuracy.
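The combination of attention and residual structure discussed above can be illustrated with a forward-pass sketch loosely modeled on the widely used CBAM-style pattern (channel attention followed by spatial attention). It is hypothetical and is not the MAM-HybridNet module itself: the weights are random placeholders, and the spatial branch averages the mean and max maps instead of applying a learned convolution. The identity shortcut shows how a residual structure preserves the input features while attention reweights them.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def spectral_attention(x, w1, w2):
    """Channel (spectral) attention: reweight bands from globally pooled statistics.

    x: (H, W, C); w1: (C, C // r) and w2: (C // r, C) are hypothetical learned weights.
    """
    pooled = x.mean(axis=(0, 1))            # (C,) global average pooling
    hidden = np.maximum(pooled @ w1, 0.0)   # bottleneck layer with ReLU
    weights = sigmoid(hidden @ w2)          # (C,) band weights in (0, 1)
    return x * weights                      # broadcast over H and W


def spatial_attention(x):
    """Spatial attention: reweight pixels from per-pixel channel statistics.

    Simplification (assumption): mean and max maps are averaged, not convolved.
    """
    avg_map = x.mean(axis=-1)
    max_map = x.max(axis=-1)
    weights = sigmoid(0.5 * (avg_map + max_map))  # (H, W) pixel weights in (0, 1)
    return x * weights[..., None]


def residual_attention_block(x, w1, w2):
    """Spectral then spatial attention, plus an identity shortcut."""
    attended = spatial_attention(spectral_attention(x, w1, w2))
    return x + attended  # the shortcut passes the original features through unchanged


rng = np.random.default_rng(0)
C, r = 16, 4
x = rng.normal(size=(11, 11, C))
w1 = rng.normal(scale=0.1, size=(C, C // r))
w2 = rng.normal(scale=0.1, size=(C // r, C))
y = residual_attention_block(x, w1, w2)
print(y.shape)  # (11, 11, 16)
```

Because the shortcut adds the input back, gradients can flow through the identity path even when the attention weights are small, which is the usual motivation for pairing attention with residual connections in deeper networks.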