The Ethical Governance for the Vulnerability of Care Robots: Interactive-Distance-Oriented Flexible Design

Abstract

1. Introduction

1.1. Application and Ethical Issues of Care Robots in Elderly Health Management

1.2. The Problem of Human-Machine Relationships Created by Information-Assisted Guidance

2. Materials and Methods

2.1. Vulnerability Brought by the Dualistic Values of Mind and Body

2.2. Ethical Issues of Care Due to Vulnerability: The Intimacy Dilemma and the Assistance Dilemma

2.2.1. Assistance Dilemma

2.2.2. Intimacy Dilemma

3. Results

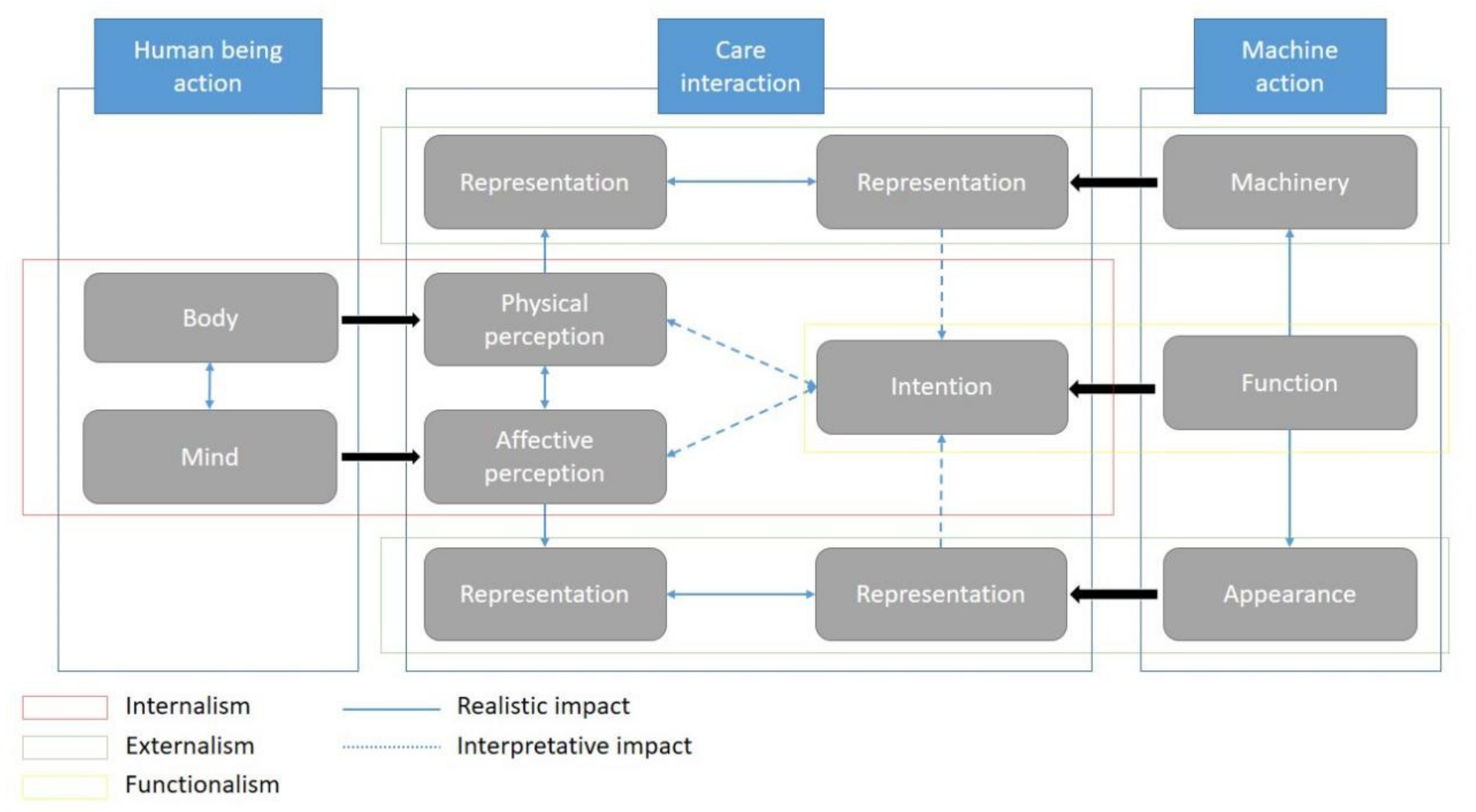

3.1. Beyond the Binary Values of the Human-Machine Caring Relationship

3.2. Analysis: Three Approaches to Anti-Vulnerability

3.2.1. Functionalism: Socially Assistive Robot Designs

3.2.2. Internalism: Virtue Ethics Design

3.2.3. Externalism: Value-Sensitive Design (VSD)

3.3. Interactive-Distance-Oriented Flexible Design

3.3.1. Three Interactive Distances and Their Hermeneutic Hierarchies

- Intention distance: Translating functions into intentions moves beyond reasoning solely from the perspective of either users or designers, and in particular addresses the problem of deceptive caring intentions;

- Representational distance: It is the most immediate aspect of interaction and is shaped by a fundamental tension in care ethics, namely the tension between intimacy and effectiveness;

- Interpretation distance: It is the most ambiguous and most often misunderstood aspect. There are two kinds of interpretation distance: functional and perceptual. The functional interpretation distance describes the distance between the internal initiation of a function and its external realization in a caring interaction. It is a flexible adjustment space whose range and emphasis vary with the type of robot. For example, when a care robot reminds an elderly person to take their medicine on time, the function is initiated internally when the machine's speech function is triggered at the scheduled time, and it is realized externally when the machine announces, by voice and by on-screen text, that medication is due, so that the elderly person hears and sees the reminder. The time difference between these two moments is influenced by how convenient the machine's function is and how clearly it expresses the reminder.
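To make the medication example concrete, the functional interpretation distance can be pictured as a difference between timestamps. The sketch below is illustrative only and is not part of the authors' framework: the `ReminderTrace` record, its fields, and the split into an "output delay" (trigger to first voice or text output, reflecting the convenience of the function) and an "uptake delay" (trigger to the user's acknowledgement, reflecting clarity of expression) are hypothetical assumptions.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import Optional, Tuple


@dataclass
class ReminderTrace:
    """Hypothetical log of one medication reminder (illustrative assumption)."""
    triggered_at: datetime                      # internal initiation: speech function woken on schedule
    spoken_at: Optional[datetime] = None        # voice prompt played
    displayed_at: Optional[datetime] = None     # text prompt shown on screen
    acknowledged_at: Optional[datetime] = None  # user confirms having heard/seen it


def interpretation_distances(
    trace: ReminderTrace,
) -> Tuple[Optional[timedelta], Optional[timedelta]]:
    """Return (output_delay, uptake_delay) for one reminder.

    output_delay: trigger -> earliest external output (voice or text).
    uptake_delay: trigger -> user acknowledgement.
    Either value is None when the corresponding event was never logged.
    """
    outputs = [t for t in (trace.spoken_at, trace.displayed_at) if t is not None]
    output_delay = min(outputs) - trace.triggered_at if outputs else None
    uptake_delay = (
        trace.acknowledged_at - trace.triggered_at
        if trace.acknowledged_at is not None
        else None
    )
    return output_delay, uptake_delay


if __name__ == "__main__":
    t0 = datetime(2022, 2, 1, 8, 0, 0)  # scheduled reminder time (made-up example)
    trace = ReminderTrace(
        triggered_at=t0,
        spoken_at=t0 + timedelta(seconds=2),
        displayed_at=t0 + timedelta(seconds=1),
        acknowledged_at=t0 + timedelta(seconds=45),
    )
    out, up = interpretation_distances(trace)
    print(out, up)  # 0:00:01 0:00:45
```

Separating the two delays is one possible design choice: a fast output with a slow acknowledgement would suggest that the reminder is convenient to trigger but not clearly expressed, which is the kind of adjustment space the paragraph above describes.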

3.3.2. The Flexible Design of Care Robots

1. Distance
    - (1) Keep a distance for interaction
    - (2) Physical barrier
2. Diversity
    - (1) Anti-anthropomorphic appearance
    - (2) Autonomy
3. Transparency
    - (1) Explicability
    - (2) Symmetry

4. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Zhang, Z.; Zhang, C.; Li, X. The Ethical Governance for the Vulnerability of Care Robots: Interactive-Distance-Oriented Flexible Design. Sustainability 2022, 14, 2303. https://doi.org/10.3390/su14042303