Development and Validation of a Questionnaire to Measure Digital Skills of Chinese Undergraduates

Abstract

1. Introduction

2. Literature Review

3. Research Questions

- (1) What is the internal factor structure of the questionnaire for estimating the digital skills of Chinese undergraduates?

- (2) What are the reliability, content validity, and construct validity of the questionnaire for estimating the digital skills of Chinese undergraduates?

4. Methods

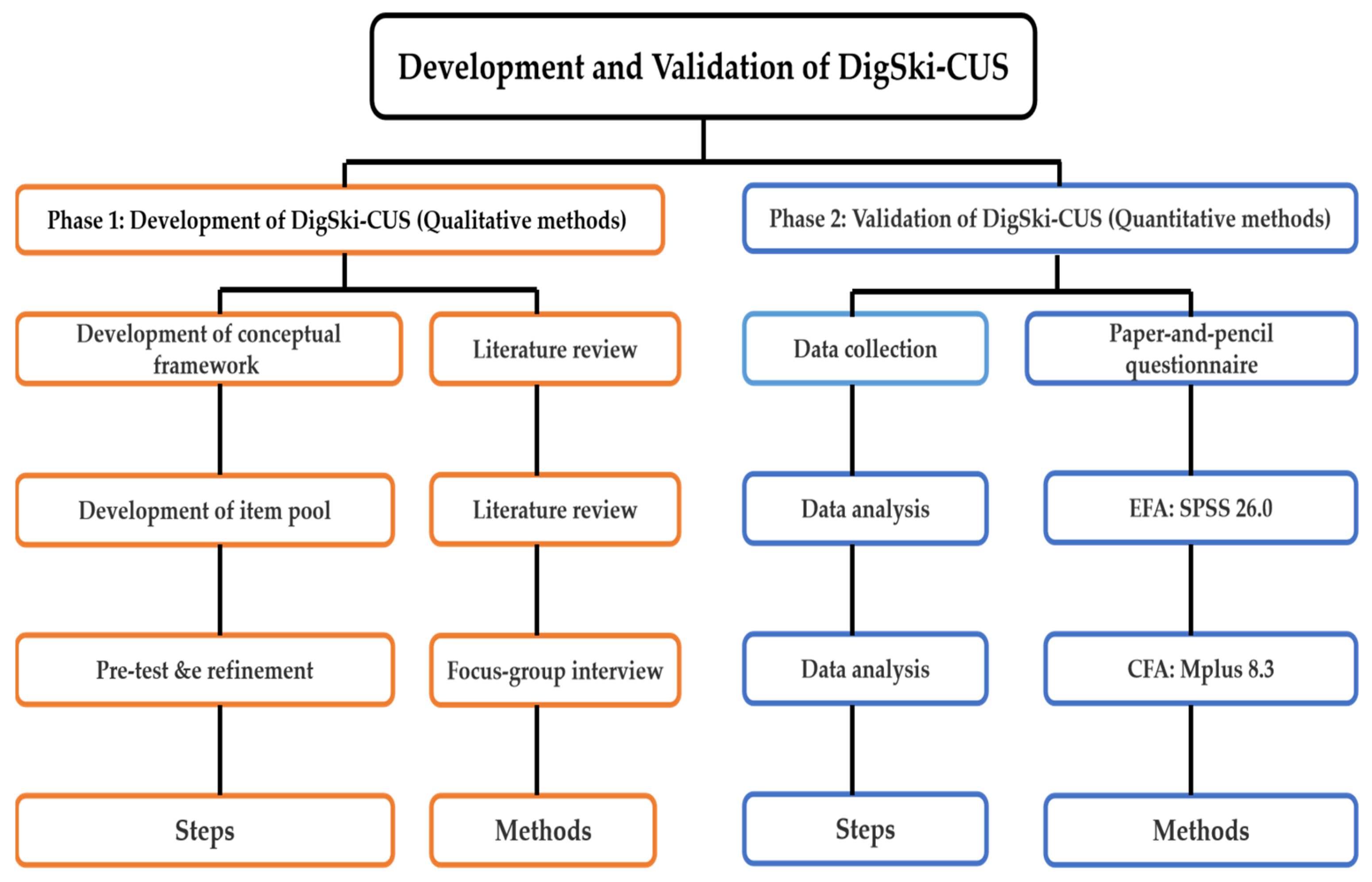

4.1. Research Design and Methods

4.2. Ethical Considerations

4.3. Procedure

4.4. Sample

4.5. Questionnaire Development

4.6. Questionnaire Testing and Refinement

4.7. Data Analysis

5. Results

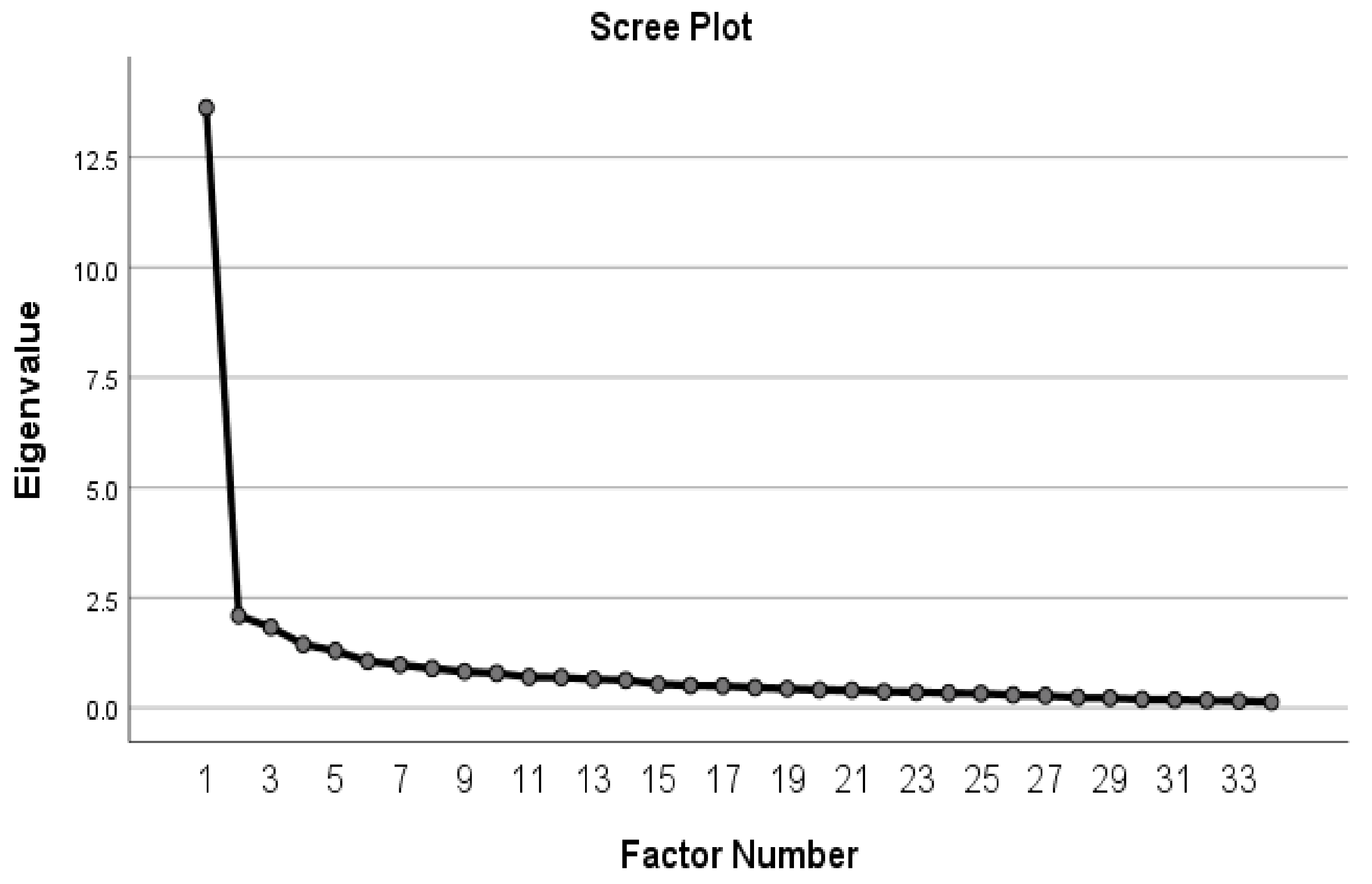

5.1. Results of EFA

5.2. Results of CFA

5.2.1. Model Goodness-of-Fit

5.2.2. Convergent Validity

5.2.3. Discriminant Validity

6. Discussion

7. Limitations and Future Work

8. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Hargittai, E.; Shaw, A. Digitally savvy citizenship: The role of internet skills and engagement in young adults’ political participation around the 2008 presidential election. J. Broadcasting Electron. Media 2013, 57, 115–134.

- Kahne, J.; Bowyer, B. Can media literacy increase digital engagement in politics? Learn. Media Technol. 2019, 44, 211–224.

- Janschitz, G.; Penker, M. How digital are ‘digital natives’ actually? Developing an instrument to measure the degree of digitalisation of university students—The DDS-Index. Tools Instrum. 2022, 153, 127–159.

- Hernández-Martín, A.; Martín-del-Pozo, M.; Iglesias-Rodríguez, A. Pre-adolescents’ digital competences in the area of safety. Does frequency of social media use mean safer and more knowledgeable digital usage? Educ. Inf. Technol. 2021, 26, 1043–1067.

- Eshet, Y. Digital literacy: A conceptual framework for survival skills in the digital era. J. Educ. Multimed. Hypermedia 2004, 13, 93–106.

- Van Deursen, A.J.A.M.; Van Dijk, J.A.G.M. Internet skills levels increase, but gaps widen: A longitudinal cross-sectional analysis (2010–2013) among the Dutch population. Inf. Commun. Soc. 2015, 18, 782–797.

- OECD. Students, Computers and Learning: Making the Connection. PISA, OECD. 2015. Available online: https://doi.org/10.1787/9789264239555-en (accessed on 20 January 2020).

- Radovanovic, D.; Hogan, B.; Lalic, D. Overcoming digital divides in higher education: Digital literacy beyond Facebook. New Media Soc. 2015, 17, 1733–1749.

- Li, X.J.; Hu, R.J. Developing and validating the digital skills scale for school children (DSS-SC). Inf. Commun. Soc. 2018.

- Liu, Q.T.; Wu, L.X.; Zhang, S.; Mao, G. Research of a model for teachers’ digital ability standard. China Educ. Technol. 2015, 340, 14–19. (In Chinese)

- Peled, Y. Pre-service teachers’ self-perception of digital literacy: The case of Israel. Educ. Inf. Technol. 2021, 26, 2879–2896.

- Peart, M.T.; Gutierrez-Esteban, P.; Cubo-Delgado, S. Development of the digital and socio-civic skills (DIGSOC) questionnaire. Educ. Tech. Res. Dev. 2020, 68, 3327–3351.

- Correa, T. Digital skills and social media use: How internet skills are related to different types of Facebook use among ‘digital natives’. Inf. Commun. Soc. 2016, 19, 1095–1107.

- Handley, F. Developing digital skills and literacies in UK higher education: Recent developments and a case study of the digital literacies framework at the University of Brighton, UK. Publications 2018, 48, 109–126.

- Greene, J.A.; Yu, S.B.; Copeland, D.Z. Measuring critical components of digital literacy and their relationships with learning. Comput. Educ. 2014, 76, 55–69.

- Mohammadyari, S.; Singh, H. Understanding the effect of e-learning on individual performance: The role of digital literacy. Comput. Educ. 2015, 82, 11–25.

- Burgos-Videla, C.G.; Castillo Rojas, W.A.; López Meneses, E.; Martínez, J. Digital Competence Analysis of University Students Using Latent Classes. Educ. Sci. 2021, 11, 385.

- Ferrari, A. DIGCOMP: A Framework for Developing and Understanding Digital Competence in Europe; Joint Research Centre Reports; Publications Office of the European Union: Luxembourg, 2013.

- Janssen, J.; Stoyanov, S.; Ferrari, A.; Punie, Y.; Pannekeet, K.; Sloep, P. Experts’ views on digital competence: Commonalities and differences. Comput. Educ. 2013, 68, 473–481.

- List, A. Defining digital literacy development: An examination of pre-service teachers’ beliefs. Comput. Educ. 2019, 138, 146–158.

- UNESCO. A Policy Review: Building Digital Citizenship in Asia Pacific through Safe, Effective and Responsible Use of ICT; UNESCO Asia and Pacific Regional Bureau for Education: Bangkok, Thailand, 2016.

- Gorsuch, R.L. Factor Analysis, 2nd ed.; Lawrence Erlbaum Associates: Hillsdale, NJ, USA, 1983.

- Henson, R.K.; Capraro, R.M.; Capraro, M.M. Reporting practices and use of exploratory factor analyses in educational research journals: Errors and explanation. Res. Sch. 2004, 11, 61–72.

- Thompson, B.; Daniel, L. Factor analytic evidence for the construct validity of scores: A historical overview and some guidelines. Educ. Psychol. Meas. 1996, 56, 197–208.

- van Laar, E.; van Deursen, A.J.A.M.; van Dijk, J.A.G.M.; de Haan, J. The relation between 21st-century skills and digital skills: A systematic literature review. Comput. Hum. Behav. 2017, 72, 577–588.

- Gilster, P. Digital Literacy; Wiley: New York, NY, USA, 1997.

- Ng, W. Can we teach digital natives digital literacy? Comput. Educ. 2012, 59, 1065–1078.

- American Library Association. Digital Literacy, Libraries, and Public Policy: Report of the Office for Information Technology Policy’s Digital Literacy Taskforce. 2013. Available online: https://www.atalm.org/sites/default/files/Digital%20Literacy,%20Libraries,%20and%20Public%20Policy.pdf (accessed on 8 February 2022).

- European Commission. Recommendation 2009/625/CE of the Commission, 20 August 2009, on Media Literacy in Digital Environments for Audio-Visual Industries and Entrepreneur and Knowledge Society; European Commission Press: Brussels, Belgium, 2009.

- Vuorikari, R.; Punie, Y.; Carretero Gomez, S.; Van den Brande, G. DigComp 2.0: The Digital Competence Framework for Citizens. Update Phase 1: The Conceptual Reference Model; Publications Office of the European Union: Luxembourg, 2016.

- Adorjan, M.; Ricciardelli, R. Student perspectives towards school responses to cyber-risk and safety: The presumption of the prudent digital citizen. Learn. Media Technol. 2019, 44, 430–442.

- Johnston, N. The shift towards digital literacy in Australian university libraries: Developing a digital literacy framework. J. Aust. Libr. Inf. Assoc. 2020, 69, 93–101.

- Mengual-Andrés, S.; Roig-Vila, R.; Mira, J.B. Delphi study for the design and validation of a questionnaire about digital competences in higher education. Int. J. Educ. Technol. High. Educ. 2016, 13, 12–23.

- Rolstad, S.; Adler, J.; Rydén, A. Response burden and questionnaire length: Is shorter better? A review and meta-analysis. Value Health 2011, 14, 1101–1108.

- Chen, C.W. Developing EFL students’ digital empathy through video production. System 2018, 77, 50–57.

- Petousi, V.; Sifaki, E. Contextualizing harm in the framework of research misconduct. Findings from discourse analysis of scientific publications. Int. J. Sustain. Dev. 2020, 23, 149–174.

- Hair, J.F., Jr.; Black, W.C.; Babin, B.J.; Anderson, R.E. Multivariate Data Analysis; Cengage: Boston, MA, USA, 2018.

- MacCallum, R.C.; Roznowski, M.; Mar, C.; Reith, J.V. Alternative strategies for cross-validation of covariance structure models. Multivar. Behav. Res. 1994, 29, 1–32.

- Jomeen, J.; Martin, C.R. Confirmation of an occluded anxiety component within the Edinburgh Postnatal Depression Scale (EPDS) during early pregnancy. J. Reprod. Infant. Psychol. 2005, 23, 143–154.

- Hu, L.T.; Bentler, P.M. Cutoff criteria for fit indexes in covariance structure analysis: Conventional criteria versus new alternatives. Struct. Equ. Modeling 1999, 6, 1–55.

- Schumacker, R.E.; Lomax, R.G. A Beginner’s Guide to Structural Equation Modeling; Lawrence Erlbaum: Mahwah, NJ, USA, 1996.

- Prudon, P. Confirmatory factor analysis as a tool in research using questionnaires: A critique. Compr. Psychol. 2015, 4, 10.

- Streiner, D.L. Figuring out factors: The use and misuse of factor analysis. Can. J. Psychiatry 1994, 39, 135–140.

- Howard, M.C. A review of exploratory factor analysis decisions and overview of current practices: What we are doing and how can we improve? Int. J. Hum. Comput. Interact. 2016, 32, 51–62.

- Martin, C.R.; Tweed, A.E.; Metcalfe, M.S. A psychometric evaluation of the hospital anxiety and depression scale in patients diagnosed with end-stage renal disease. Br. J. Clin. Psychol. 2004, 43, 51–64.

- Carter, S.R. Using confirmatory factor analysis to manage discriminant validity issues in social pharmacy research. Int. J. Clin. Pharm. 2016, 38, 731–737.

- Fornell, C.; Larcker, D.F. Evaluating structural equation models with unobservable variables and measurement error. J. Mark. Res. 1981, 18, 39–50.

| Dimensions / Item No. | Measuring Items | Literature Sources |
|---|---|---|
| Information skills | | |
| 1 | I have apps that keep me up to date with news. | Li & Hu [9]; Peart et al. [12] |
| 2 | I am able to search for and access information in digital environments. | |
| 3 | I can use different tools to store and manage information. | |
| 4 | I am able to search for information that I need on the Internet. | |
| 5 | I can understand the information that I get from the Internet. | |
| 6 | I consider only the most important information on the Internet. | |
| 7 | I skillfully use digital software to complete learning tasks. | Based on the Code for the Construction of Digital Campuses in Colleges and Universities (for Trial Implementation) (2021) |
| 8 | I can complete digital content that meets the minimum requirements of learning tasks. | |
| 9 | I can create and edit digital content with higher standards according to the requirements of work or study. | |
| 10 | I am able to use digital means to clearly express my views to others. | |
| 11 | I am able to use digital means to cooperate with others to complete tasks. | |
| 12 | I am able to use digital means to solve problems encountered in my study. | |
| 13 | I am able to use digital means to detect plagiarism of content that I created. | |
| Communication skills | | |
| 14 | I can communicate with others in digital environments. | Li & Hu [9]; Peart et al. [12] |
| 15 | I know how to communicate with others through different digital means. | |
| 16 | I know how to communicate with others in different ways (e.g., images, texts, videos ...). | |
| 17 | I communicate my ideas to people whom I know in digital environments. | |
| 18 | I share information and content with other people via digital tools or websites. | |
| Creation skills | | |
| 19 | I know different ways to create and edit digital content (e.g., videos, photographs, texts, animations...). | Li & Hu [9]; Peart et al. [12] |
| 20 | I am able to accurately present what I want to deliver in digital environments. | |
| 21 | I can transform information and organize it in different formats. | |
| Digital safety skills | | |
| 22 | I am careful and try to ensure that my messages do not irritate others. | Li & Hu [9]; Peart et al. [12] |
| 23 | I am careful with my personal information. | |
| 24 | I am careful with the information of other people. | |
| 25 | I avoid having arguments with others in digital environments. | |
| 26 | I am able to identify harmful behaviors that can affect me on social networks. | |
| 27 | I avoid behaviors that are harmful on social networks. | |
| 28 | Before doing a digital activity (e.g., upload a photo, comment ...), I think about the possible consequences. | |
| 37 | When sharing digital information, I am able to protect my privacy and security. | |
| 38 | I reflect on whether a digital environment is safe. | |
| Digital empathy skills | | |
| 29 | I am able to put myself in other people’s shoes in digital environments. | Li & Hu [9]; Peart et al. [12] |
| 30 | I am willing to help other people in digital environments. | |
| 31 | I am able to use digital technologies to exercise my citizenship. | |
| 32 | I respect other people in digital environments. | |
| 33 | I take into account the opinion of others in digital environments. | |
| 34 | I get informed before commenting on a topic. | |
| 35 | I am able to restrict my behavior based on the qualities of netizens. | |
| 36 | When forwarding information, I consider whether the information source is reliable. | |

| Items | Factor 1 | Factor 2 | Factor 3 | Factor 4 | Factor 5 | Factor 6 |
|---|---|---|---|---|---|---|
| 32 | 0.833 *** | | | | | |
| 33 | 0.713 *** | | | | | |
| 29 | 0.593 *** | | | | | |
| 35 | 0.466 *** | | | | | |
| 31 | 0.397 *** | | | | | |
| 34 | 0.383 *** | | | | | |
| 30 | 0.328 *** | | | | | |
| 4 | | 0.883 *** | | | | |
| 1 | | 0.823 *** | | | | |
| 2 | | 0.724 *** | | | | |
| 3 | | 0.621 *** | | | | |
| 5 | | 0.592 *** | | | | |
| 9 | | | 0.658 *** | | | |
| 7 | | | 0.643 *** | | | |
| 13 | | | 0.514 *** | | | |
| 11 | | | 0.511 *** | | | |
| 8 | | | 0.442 *** | | | |
| 12 | | | 0.441 *** | | | |
| 26 | | | | 0.774 *** | | |
| 28 | | | | 0.697 *** | | |
| 25 | | | | 0.587 *** | | |
| 27 | | | | 0.587 *** | | |
| 23 | | | | 0.434 *** | | |
| 37 | | | | 0.397 *** | | |
| 38 | | | | 0.388 *** | | |
| 22 | | | | 0.313 *** | | |
| 16 | | | | | 0.716 *** | |
| 15 | | | | | 0.569 *** | |
| 14 | | | | | 0.510 *** | |
| 17 | | | | | 0.502 *** | |
| 21 | | | | | | 0.615 *** |
| 20 | | | | | | 0.562 *** |
| 19 | | | | | | 0.350 *** |

| Factors | Items | Cronbach’s α |
|---|---|---|
| Use of digital means | 9, 7, 13, 11, 8, 12 | 0.854 |
| Access to and management of digital content | 4, 1, 2, 3, 5 | 0.874 |
| Communication of digital content | 15, 14, 16, 17 | 0.784 |
| Creation of digital content | 20, 19 | 0.844 |
| Digital empathy | 32, 33, 29, 35, 31, 34, 30 | 0.888 |
| Digital safety | 26, 28, 25, 27, 23, 37, 38, 22 | 0.866 |
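
Cronbach’s α for a factor can be computed from the item variances and the variance of the summed scale, α = k/(k − 1) · (1 − Σσᵢ² / σ²_total), where k is the number of items. The sketch below assumes a respondents × items matrix of numeric (e.g., Likert-type) scores; the function name `cronbach_alpha` and the six-respondent toy matrix are hypothetical, since the raw responses behind the α values above are not part of this section.

```python
import numpy as np


def cronbach_alpha(scores) -> float:
    """Cronbach's alpha for a (respondents x items) matrix of item scores."""
    x = np.asarray(scores, dtype=float)
    if x.ndim != 2 or x.shape[1] < 2:
        raise ValueError("expected a 2-D array with at least two item columns")
    k = x.shape[1]
    item_variances = x.var(axis=0, ddof=1)      # sample variance of each item
    total_variance = x.sum(axis=1).var(ddof=1)  # sample variance of the summed scale
    return k / (k - 1) * (1.0 - item_variances.sum() / total_variance)


# Hypothetical 5-point responses of six respondents to a three-item factor
toy = [
    [4, 4, 5],
    [3, 3, 4],
    [5, 5, 5],
    [2, 3, 2],
    [4, 3, 4],
    [1, 2, 1],
]
print(round(cronbach_alpha(toy), 3))  # prints 0.944
```

Because the same `ddof` is applied to the item variances and the total variance, it cancels in the ratio, so sample and population variances yield the same α.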

| Factors | Items | χ² | df | χ²/df | RMSEA | CFI | TLI |
|---|---|---|---|---|---|---|---|
| Use of digital means | 7, 8, 9, 12, 13 | 11.142 | 5 | 2.228 | 0.073 | 0.981 | 0.962 |
| Access to and management of digital content | 1, 2, 3, 4, 5 | 10.320 | 5 | 2.064 | 0.068 | 0.989 | 0.978 |
| Communication of digital content | 14, 15, 16 | 0.000 | 0 | 0.000 | 0.000 | 1.000 | 1.000 |
| Creation of digital content | 19, 20, 21 | 0.000 | 0 | 0.000 | 0.000 | 1.000 | 1.000 |
| Digital empathy | 29, 30, 32, 33, 34 | 10.914 | 5 | 2.182 | 0.072 | 0.989 | 0.978 |
| Digital safety | 23, 25, 26, 27, 28, 37 | 13.594 | 9 | 1.510 | 0.047 | 0.989 | 0.982 |
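
The χ²/df column is simply χ² divided by df, and the RMSEA point estimate is commonly computed as √(max(χ² − df, 0) / (df · (N − 1))). The sketch below recomputes χ²/df from the table values; the helper names are hypothetical, and `n` in `rmsea` is a placeholder for the CFA sample size, which this section does not restate. For the two three-item factors df = 0, so each one-factor model is just-identified: it reproduces the observed covariances exactly, χ²/df is undefined, and χ² = 0 with CFI = TLI = 1 carries no information about fit.

```python
import math
from typing import Optional


def chi2_df_ratio(chi2: float, df: int) -> Optional[float]:
    """Normed chi-square (chi2 / df); None for a just-identified model (df = 0)."""
    return None if df == 0 else chi2 / df


def rmsea(chi2: float, df: int, n: int) -> Optional[float]:
    """RMSEA point estimate sqrt(max(chi2 - df, 0) / (df * (n - 1)))."""
    if df == 0:
        return None
    return math.sqrt(max(chi2 - df, 0.0) / (df * (n - 1)))


# (chi-square, df) pairs copied from the CFA fit table above
FITS = {
    "Use of digital means": (11.142, 5),
    "Access to and management of digital content": (10.320, 5),
    "Communication of digital content": (0.000, 0),
    "Creation of digital content": (0.000, 0),
    "Digital empathy": (10.914, 5),
    "Digital safety": (13.594, 9),
}

for factor, (chi2, df) in FITS.items():
    ratio = chi2_df_ratio(chi2, df)
    label = "undefined (df = 0)" if ratio is None else f"{ratio:.3f}"
    print(f"{factor}: chi2/df = {label}")
```

Some SEM programs divide by N rather than N − 1 in the RMSEA formula, so the third decimal can differ slightly between programs.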

| Factors | Items | AVE | CR |
|---|---|---|---|
| Use of digital means | 7, 8, 9, 12, 13 | 0.458 | 0.808 |
| Access to and management of digital content | 1, 2, 3, 4, 5 | 0.553 | 0.859 |
| Communication of digital content | 14, 15, 16 | 0.534 | 0.770 |
| Creation of digital content | 19, 20, 21 | 0.628 | 0.835 |
| Digital empathy | 29, 30, 32, 33, 34 | 0.575 | 0.870 |
| Digital safety | 23, 25, 26, 27, 28, 37 | 0.455 | 0.832 |
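
AVE and CR are conventionally computed from a factor’s standardized CFA loadings λ: AVE is the mean of λ², and CR = (Σλ)² / ((Σλ)² + Σ(1 − λ²)), taking 1 − λ² as each item’s error variance. The standardized CFA loadings are not reported in this section, so the sketch below uses hypothetical loadings; the function names are illustrative only.

```python
import math
from typing import Sequence


def ave(loadings: Sequence[float]) -> float:
    """Average variance extracted: mean of the squared standardized loadings."""
    return sum(l * l for l in loadings) / len(loadings)


def composite_reliability(loadings: Sequence[float]) -> float:
    """CR = (sum of loadings)^2 / ((sum of loadings)^2 + sum of error variances)."""
    s = sum(loadings)
    error = sum(1.0 - l * l for l in loadings)  # error variance of item = 1 - lambda^2
    return s * s / (s * s + error)


# Hypothetical case: three items with equal standardized loadings of sqrt(0.628)
equal = [math.sqrt(0.628)] * 3
print(f"AVE = {ave(equal):.3f}, CR = {composite_reliability(equal):.3f}")
# prints AVE = 0.628, CR = 0.835

# Hypothetical unequal loadings
print(f"AVE = {ave([0.8, 0.7, 0.6]):.3f}, CR = {composite_reliability([0.8, 0.7, 0.6]):.3f}")
```

The first case shows that three equal loadings of √0.628 give exactly the AVE and CR listed for creation of digital content; the actual loadings need not be equal, since many loading patterns share the same AVE.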

| Factors | AVE | Use of Digital Means | Access to and Management of Digital Content | Communication of Digital Content | Creation of Digital Content | Digital Empathy | Digital Safety |
|---|---|---|---|---|---|---|---|
| Use of digital means | 0.458 | 0.677 | | | | | |
| Access to and management of digital content | 0.553 | 0.452 | 0.744 | | | | |
| Communication of digital content | 0.534 | 0.503 | 0.721 | 0.731 | | | |
| Creation of digital content | 0.628 | 0.618 | 0.542 | 0.630 | 0.792 | | |
| Digital empathy | 0.575 | 0.640 | 0.466 | 0.528 | 0.463 | 0.758 | |
| Digital safety | 0.455 | 0.554 | 0.560 | 0.648 | 0.627 | 0.588 | 0.675 |
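
Each diagonal entry of the table above equals √AVE of its factor (for example, √0.458 ≈ 0.677). Reading the off-diagonal entries as inter-factor correlations, which is the usual Fornell–Larcker layout but an assumption here, the criterion asks that every correlation be smaller than √AVE of both factors involved. The sketch below checks this with the table values; the short factor labels and the helper name are hypothetical.

```python
import math

FACTORS = ["Use", "Access", "Communication", "Creation", "Empathy", "Safety"]
AVE_VALUES = [0.458, 0.553, 0.534, 0.628, 0.575, 0.455]

# Lower-triangular entries of the discriminant validity table above
CORR = {
    ("Access", "Use"): 0.452,
    ("Communication", "Use"): 0.503,
    ("Communication", "Access"): 0.721,
    ("Creation", "Use"): 0.618,
    ("Creation", "Access"): 0.542,
    ("Creation", "Communication"): 0.630,
    ("Empathy", "Use"): 0.640,
    ("Empathy", "Access"): 0.466,
    ("Empathy", "Communication"): 0.528,
    ("Empathy", "Creation"): 0.463,
    ("Safety", "Use"): 0.554,
    ("Safety", "Access"): 0.560,
    ("Safety", "Communication"): 0.648,
    ("Safety", "Creation"): 0.627,
    ("Safety", "Empathy"): 0.588,
}

sqrt_ave = {f: math.sqrt(a) for f, a in zip(FACTORS, AVE_VALUES)}


def fornell_larcker_violations(corr, roots):
    """Return factor pairs whose correlation is not below sqrt(AVE) of both factors."""
    return [
        (a, b, r) for (a, b), r in corr.items()
        if r >= min(roots[a], roots[b])
    ]


for f in FACTORS:
    print(f"{f}: sqrt(AVE) = {sqrt_ave[f]:.3f}")
print("violations:", fornell_larcker_violations(CORR, sqrt_ave))  # prints violations: []
```

The tightest margins are communication versus access (0.721 against √0.534 ≈ 0.731) and safety versus communication (0.648 against √0.455 ≈ 0.675).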

| Dimensions | Factors | Items | Factor loading |
|---|---|---|---|
| Information skills | Access to and management of digital content | 1. I have apps that keep me up to date with news. | 0.823 |
| | | 2. I am able to search for and access information in digital environments. | 0.724 |
| | | 3. I can use different tools to store and manage information. | 0.621 |
| | | 4. I am able to search for information that I need on the Internet. | 0.883 |
| | | 5. I can understand the information that I get from the Internet. | 0.592 |
| | Use of digital means | 7. I skillfully use digital software to complete learning tasks. | 0.643 |
| | | 8. I can complete digital content that meets the minimum requirements of learning tasks. | 0.442 |
| | | 9. I can create and edit digital content with higher standards according to the requirements of work or study. | 0.658 |
| | | 12. I am able to use digital means to solve problems encountered in my study. | 0.441 |
| | | 13. I am able to use digital means to detect plagiarism of content that I created. | 0.514 |
| Communication skills | Communication of digital content | 14. I can communicate with others in digital environments. | 0.510 |
| | | 15. I know how to communicate with others through different digital means. | 0.569 |
| | | 16. I know how to communicate with others in different ways (e.g., images, texts, videos ...). | 0.716 |
| Creation skills | Creation of digital content | 19. I know different ways to create and edit digital content (e.g., videos, photographs, texts, animations...). | 0.350 |
| | | 20. I am able to accurately present what I want to deliver in digital environments. | 0.562 |
| | | 21. I can transform information and organize it in different formats. | 0.615 |
| Digital safety skills | Digital safety | 23. I am careful with my personal information. | 0.434 |
| | | 25. I avoid having arguments with others in digital environments. | 0.587 |
| | | 26. I am able to identify harmful behaviors that can affect me on social networks. | 0.774 |
| | | 27. I avoid behaviors that are harmful on social networks. | 0.587 |
| | | 28. Before doing a digital activity (e.g., upload a photo, comment ...), I think about the possible consequences. | 0.697 |
| | | 37. When sharing digital information, I am able to protect my privacy and security. | 0.397 |
| Digital empathy skills | Digital empathy | 29. I am able to put myself in other people’s shoes in digital environments. | 0.593 |
| | | 30. I am willing to help other people in digital environments. | 0.328 |
| | | 32. I respect other people in digital environments. | 0.833 |
| | | 33. I take into account the opinion of others in digital environments. | 0.713 |
| | | 34. I get informed before commenting on a topic. | 0.383 |
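
The final instrument keeps 27 items in six factors. A scoring rule is not given in this section; the sketch below assumes, purely for illustration, that each factor score is the mean of its item responses. The mapping is copied from the table above, while `subscale_scores` and the example respondent are hypothetical.

```python
from statistics import mean
from typing import Dict, List, Mapping

# Item numbers per factor in the final 27-item version (from the table above)
FINAL_FACTORS: Dict[str, List[int]] = {
    "Access to and management of digital content": [1, 2, 3, 4, 5],
    "Use of digital means": [7, 8, 9, 12, 13],
    "Communication of digital content": [14, 15, 16],
    "Creation of digital content": [19, 20, 21],
    "Digital safety": [23, 25, 26, 27, 28, 37],
    "Digital empathy": [29, 30, 32, 33, 34],
}


def subscale_scores(responses: Mapping[int, float]) -> Dict[str, float]:
    """Mean response per factor (assumed scoring rule); KeyError if an item is missing."""
    scores = {}
    for factor, items in FINAL_FACTORS.items():
        missing = [i for i in items if i not in responses]
        if missing:
            raise KeyError(f"{factor}: no response for item(s) {missing}")
        scores[factor] = mean(responses[i] for i in items)
    return scores


# Hypothetical respondent: answers 4 on every item except item 13 (answered 2)
example = {item: 4 for items in FINAL_FACTORS.values() for item in items}
example[13] = 2
for factor, score in subscale_scores(example).items():
    print(f"{factor}: {score:.2f}")
```

Raising on a missing item, rather than averaging whatever is present, keeps incomplete responses visible; a study could instead adopt an explicit missing-data rule.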

| Factors | Items | Cronbach’s α |
|---|---|---|
| Access to and management of digital content | 4, 1, 2, 3, 5 | 0.874 |
| Use of digital means | 9, 7, 13, 8, 12 | 0.817 |
| Communication of digital content | 15, 14, 16 | 0.778 |
| Creation of digital content | 21, 20, 19 | 0.844 |
| Digital safety | 26, 28, 25, 27, 23, 37 | 0.852 |
| Digital empathy | 32, 33, 29, 34, 30 | 0.861 |
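
Comparing the item sets at each stage shows how the 38-item pool was reduced. The sketch below copies the item groups from the EFA loading table (factor names follow the α table for the EFA solution) and from the final α table, then lists items absent from the EFA solution and items dropped afterwards. It only records which items differ; the reasons for each removal are not stated here, and the helper name is hypothetical.

```python
# Item groups copied from the EFA loading table and the final alpha table
EFA = {
    "Digital empathy": {32, 33, 29, 35, 31, 34, 30},
    "Access to and management of digital content": {4, 1, 2, 3, 5},
    "Use of digital means": {9, 7, 13, 11, 8, 12},
    "Digital safety": {26, 28, 25, 27, 23, 37, 38, 22},
    "Communication of digital content": {16, 15, 14, 17},
    "Creation of digital content": {21, 20, 19},
}
FINAL = {
    "Digital empathy": {32, 33, 29, 34, 30},
    "Access to and management of digital content": {4, 1, 2, 3, 5},
    "Use of digital means": {9, 7, 13, 8, 12},
    "Digital safety": {26, 28, 25, 27, 23, 37},
    "Communication of digital content": {15, 14, 16},
    "Creation of digital content": {21, 20, 19},
}
POOL = set(range(1, 39))  # initial items 1-38


def dropped_items(before, after):
    """Per factor, the sorted items present in `before` but not in `after`."""
    return {f: sorted(before[f] - after[f]) for f in before if before[f] - after[f]}


efa_items = set().union(*EFA.values())
final_items = set().union(*FINAL.values())
print("Not retained by EFA:", sorted(POOL - efa_items))  # [6, 10, 18, 24, 36]
print("Dropped after EFA:", dropped_items(EFA, FINAL))
print("Final item count:", len(final_items))  # 27
```

The output lists items 31 and 35 (digital empathy), 11 (use of digital means), 22 and 38 (digital safety), and 17 (communication of digital content) as dropped between the EFA solution and the final version.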

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Fan, C.; Wang, J. Development and Validation of a Questionnaire to Measure Digital Skills of Chinese Undergraduates. Sustainability 2022, 14, 3539. https://doi.org/10.3390/su14063539


Chicago/Turabian Style: Fan, Cunying, and Juan Wang. 2022. "Development and Validation of a Questionnaire to Measure Digital Skills of Chinese Undergraduates" Sustainability 14, no. 6: 3539. https://doi.org/10.3390/su14063539
