The Impact of Artificial Intelligence on Sustainable Development in Electronic Markets

Abstract

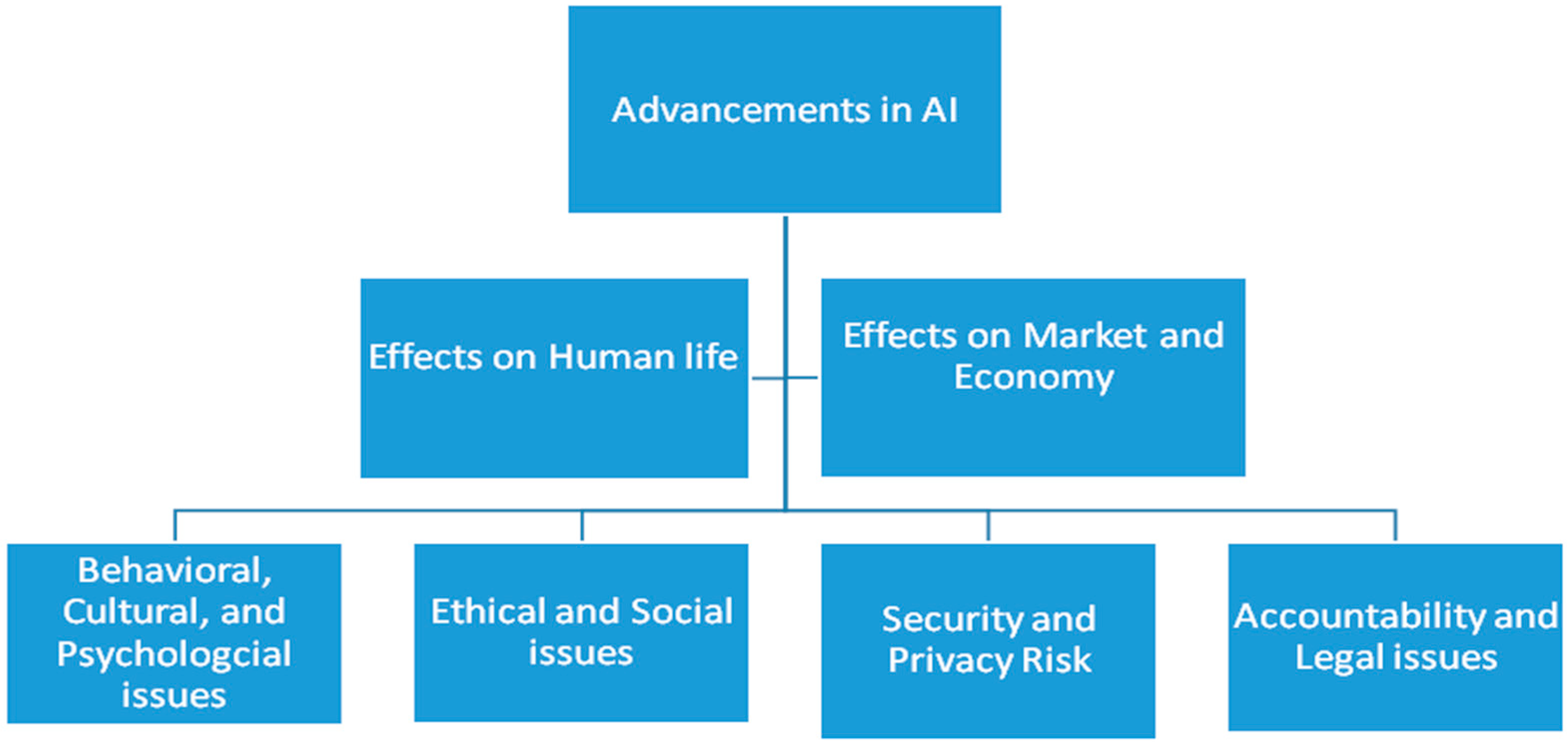

1. Introduction

2. Literature Review

2.1. Behavioral, Cultural, and Psychological Issues

2.2. Ethical and Social Issues

2.3. AI Effects on Market and Economy

2.4. Security and Privacy Risk

2.5. Accountability and Legal Issues

3. Materials and Methods

Selection of Studies

4. Results and Discussion

5. Theoretical and Practical Implications

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

| Identified Variables | Main Challenges/Issues Discussed in Literature | Authors Discussing These Variables |
|---|---|---|
| Behavioral, psychological, and cultural factors | | |
| Ethical and social issues | | |
| Security and privacy issues | | |
| Accountability and legal issues | | |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Thamik, H.; Wu, J. The Impact of Artificial Intelligence on Sustainable Development in Electronic Markets. Sustainability 2022, 14, 3568. https://doi.org/10.3390/su14063568