1. Introduction

A financial audit is an objective examination of an organisation’s financial health that assesses whether its financial statements are a true representation of its financial state [1]. An audit gives financial statements credibility, assures shareholders that the accounts are true and fair, and helps identify areas where a company may need to improve [2]. Motives for manipulating financial accounts and fraudulent reporting could include inflating the reported profitability of the business, reducing tax obligations and exaggerating performance in response to managerial pressure [1]. Recent lapses in corporate governance and manipulations of financial accounts in the private (e.g., Steinhoff and Tongaat Hulett) and public (e.g., state capture) sectors in South Africa have highlighted the need for more robust mechanisms for auditing the financial statements of entities, particularly in a developing economy [3,4].

Auditing requires a great deal of knowledge and judgment [5]. However, it also includes repetitive and time-consuming tasks, which can be automated using artificial intelligence (AI) and machine learning algorithms [4,5]. Machine learning is a branch of AI that allows for the development of non-linear models of various tasks and is commonly divided into two subsets: supervised and unsupervised learning. Supervised learning uses labelled data, meaning inputs have known outputs (the focus of this work), whereas in unsupervised learning there are no labels [1,4,6]. In unsupervised learning, the algorithm has to discover the patterns in the data on its own. Machine learning algorithms are used to analyse data, uncover patterns and provide forecasts that can form part of a decision support system [6].

Institutions must constantly enhance their systems for fraud detection. Several studies have used machine learning in recent years to address this problem, with the goal of providing auditors with tools that improve the quality and speed of the auditing process [7,8]. The financial health of institutions is an ongoing risk and an area of concern that calls for intervention. This motivates the present study, in which audit opinions are modelled, as doing so may help establish measures to improve the financial well-being of a municipality. There are four types of audit opinion an entity could receive: unqualified, qualified, disclaimer and adverse [9]. These opinions indicate the level of confidence one can have in the financial statements, and we discuss them in further detail in Section 3.

An organisation’s financial statements are more likely to be free of fraud if they receive a clean audit opinion, which links audit outcomes to fraud. Craja et al. [10] utilise deep learning for the detection of fraud in financial statements using financial ratios and textual data from the financial statements. The authors also address how machine learning can help prevent fraud by flagging which entities are likely to commit it. Fraudulent financial reporting is deliberate or careless conduct that results in materially misleading financial statements [11]. There has been an increase in fraudulent financial reporting over the past decades [4]. As a result, fraud has become a concern in the business world, and various stakeholders have been trying to understand it and to better detect or predict it. Humans cannot process big data effectively and in a timely manner, which is where machine learning, and AI more generally, come into play. AI has made it easier for auditors to identify areas of risk and to execute numerous other tasks more efficiently [4]. AI uses algorithms to identify patterns and anomalies in datasets, allowing stakeholders to analyse and model large, complex datasets at speed. This allows auditors to complete repetitive tasks faster, gain better insights and operate more efficiently and effectively. Auditing firms already use machine learning in their processes; an example is Deloitte’s Argus, a machine learning tool capable of reading contracts and other documents [6].

Contributions: With this study, we contribute to the ongoing body of research on how auditing can be made easier with the use of machine learning. This is particularly important in South Africa, where little research has been conducted, especially in the local government sphere. Our work expands on that of Mongwe and Malan [1] and Mongwe et al. [12] in that we present a first-in-the-literature comparison of various machine learning algorithms and feature selection techniques for the task of analysing the audit outcomes of South African municipalities. The results of this study could prove useful to the various users of financial statements, particularly as we also highlight which variables are important in discriminating between financial statements with qualified and unqualified audit opinions.

The rest of the paper is arranged as follows. Section 2 reviews the related literature. Section 3 outlines the methods, models, data and performance metrics used for comparison. Section 4 presents the results of applying the proposed methods to the prediction of audit outcomes. Finally, Section 5 concludes the paper and outlines future research directions.

2. Literature Review

Our study is most similar to that of Liou [13], who compares three data mining techniques to identify the best one for detecting fraud risk. In that work, the performance of logistic regression, artificial neural network (ANN) and decision tree models in the detection of Financial Statement Fraud (FSF) and in business failure prediction is compared. Fifty-two financial ratios, selected based on profitability and liquidity criteria, are used as inputs to the three models [14]. Step-wise logistic regression feature selection was performed on the financial ratios to identify the most significant features for building the logistic regression model; only 25 of the 52 features were found to be significant. The logistic regression model outperformed the other models in the detection of fraudulent financial statements, with an accuracy of 99%. In bankruptcy prediction, the decision tree performed best, with an accuracy of 98.15%. The ANN performed worst, which could be because all 52 features were used (no feature selection) and the training dataset was not large enough [14].

Another similar study was conducted by Kirkos et al. [15], who utilise Bayesian belief networks, neural networks and decision trees. The input data here are also financial ratios, but derived only from balance sheets and income statements. The models are compared based on prediction accuracy. The data were collected from 76 Greek manufacturing companies, and a total of 10 ratios were used. The Bayesian belief network model had the best performance in classifying the companies, with a prediction accuracy of 90.3%, compared with 80% for the neural network and 73.6% for the decision tree model.

Persons et al. [16] find, using publicly available data, that capital turnover, financial leverage, asset composition and firm size are significant factors in fraudulent financial reporting; their sample had 103 non-fraud firms for the fraud year sample and 100 non-fraud firms for the preceding year sample. They used a step-wise logistic regression model, which outperformed the naive strategy of classifying all firms as non-fraudulent.

Green and Choi [17] use neural networks to classify firms and investigate whether they can be used for fraud detection; their results indicate that neural networks have significant potential as a fraud detection tool. Yao et al. [18] propose a model for FSF detection that combines feature selection and machine learning; random forest outperforms the other four models they use for FSF detection.

Kaminski et al. [11] and Mongwe and Malan [1] study whether financial ratios can detect fraudulent financial reporting. Kaminski et al. [11] use 16 statistically significant ratios and discriminant analysis, which misclassified fraud firms between 58% and 98% of the time. On the other hand, Mongwe and Malan [1] use self-organising maps and k-means clustering and find that the ratios are useful for financial statement fraud detection in local governments in South Africa. Carcello and Nagy [19] examine the likelihood of fraudulent financial reporting using a logistic regression in which the log-odds of fraud are linear in 10 features. They find that fraudulent reporting is more likely in the first few years of a client–auditor relationship and find no evidence that it becomes more likely over longer relationships.

Mongwe et al. [12] utilise a Bayesian framework for the prediction of South African audit outcomes using financial ratios as inputs. The authors consider a Bayesian logistic regression model with automatic relevance determination, trained using the Metropolis Adjusted Langevin Algorithm, Metropolis-Hastings [20], the No-U-Turn Sampler and Separable Shadow Hamiltonian Hybrid Monte Carlo [21] Markov chain Monte Carlo algorithms. Their results highlight, in an automatic fashion, various financial ratios that are relevant for modelling audit outcomes.

Craja et al. [10] use a deep learning approach to detect fraud in financial statements from financial ratios and textual data. The authors use a hierarchical attention network to extract features from the text of the financial reports. Their results reveal that incorporating the text data improves fraud detection performance compared with using the financial ratios alone. Jan [22], on the other hand, deploys a deep recurrent neural network (RNN) and a deep long short-term memory (LSTM) network to construct a financial statement fraud detection model using data from listed companies in Taiwan. The results show that the LSTM model outperforms the RNN model on this dataset.

3. Methodology

In this section, we briefly describe the data used, the feature selection methods applied, the models fitted to the data and the performance measures used to compare the models and select the best one for predicting audit outcomes.

3.1. Data Description

It is crucial to note that the fraudulent samples analysed in the literature comprise only reported fraud [4,11]. Fraudulent instances that are never discovered are not available for research, and neither are instances that are discovered within the entity, corrected internally and never revealed to the public [11].

For this study, we use the same data that Mongwe and Malan [1] used to analyse the usefulness of financial ratios in detecting fraud in financial statements. The dataset was retrieved from the South African National Treasury website [1,23], https://municipaldata.treasury.gov.za/ (last accessed on 8 August 2021). A sample of 1560 observations was obtained from the audited financial statements of South African municipalities, along with their audit outcomes, over the period 2012 to 2018. The audit opinions that the Auditor General of South Africa can issue on the financial statements of municipalities can be summarised as follows [4,9,12]:

Clean or unqualified audit opinion—The financial statements contain no material misstatements. Note that this does not necessarily mean there was no fraud.

Qualified audit opinion—The financial statements contain material misstatements in specific amounts, or there is insufficient evidence to conclude that the amounts are not materially misstated.

Adverse audit opinion—The financial statements contain material misstatements. This however does not necessarily mean that there was fraud present.

Disclaimer audit opinion—The municipality provided insufficient evidence in the form of documentation on which to base an audit opinion.
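Because the models in this study estimate the probability of an unqualified opinion, the four opinion types are reduced to a binary target. A minimal sketch of that encoding follows, assuming the qualified, adverse and disclaimer opinions are pooled into a single "not unqualified" class. The label strings and the function names `encode_opinion` and `encode_all` are hypothetical; the raw Treasury data may spell the opinions differently.

```python
from typing import List

# Hypothetical label strings for the four opinion types described above.
OPINIONS = {"unqualified", "qualified", "adverse", "disclaimer"}


def encode_opinion(opinion: str) -> int:
    """Return 1 for an unqualified (clean) opinion and 0 for any other opinion."""
    label = opinion.strip().lower()
    if label not in OPINIONS:
        raise ValueError(f"unknown audit opinion: {opinion!r}")
    return 1 if label == "unqualified" else 0


def encode_all(opinions: List[str]) -> List[int]:
    """Encode a column of opinions as the 0/1 target used for classification."""
    return [encode_opinion(o) for o in opinions]


print(encode_all(["Unqualified", "qualified", " Disclaimer ", "adverse"]))  # [1, 0, 0, 0]
```

Raising on unknown labels, rather than silently mapping them to 0, makes spelling mismatches in the raw data visible.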

We use the same variables as Mongwe and Malan [1] and Mongwe et al. [12]; their five-number summaries are reported in [1,12], and full descriptions are given below:

Debt to Community Wealth/Equity—Ratio of debt to the community equity. The ratio is used to evaluate a municipality’s financial leverage.

Capital Expenditure to Total Expenditure—Ratio of capital expenditure to total expenditure.

Impairment of PPE, IP and IA—Impairment of Property, Plant and Equipment (PPE) and Investment Property (IP) and Intangible Assets (IAs).

Repairs and Maintenance as a percentage of PPE+IP—The ratio measures the level of repairs and maintenance relative to assets.

Debt to Total Operating Revenue—The ratio indicates the level of total borrowings in relation to total operating revenue.

Current Ratio—The ratio is used to assess the municipality’s ability to pay back short-term commitments with short-term assets.

Capital Cost to Total Operating Expenditure—The ratio indicates the cost of servicing debt relative to overall expenditure.

Net Operating Surplus Margin—The ratio assesses the extent to which the entity generates operating surpluses.

Remuneration to Total Operating Expenditure—The ratio measures the extent of remuneration of the entity’s staff to total operating expenditure.

Contracted Services to Total Operating Expenditure—This ratio measures how much of total expenditure is spent on contracted services.

Own Source Revenue to Total Operating Revenue—The ratio measures the extent to which the municipality’s total capital expenditure is funded through internally generated funds and borrowings.

Net Surplus/Deficit Water—This ratio measures the extent to which the municipality generates surplus or deficit in rendering water service.

Net Surplus/Deficit Electricity—This ratio measures the extent to which the municipality generates surplus or deficit in rendering electricity service.
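Several of these ratios are simple quotients of statement line items. The sketch below computes three of them, assuming simplified textbook definitions: current ratio as current assets over current liabilities, debt to total operating revenue as total borrowings over operating revenue, and net operating surplus margin as operating revenue minus operating expenditure, over operating revenue. The National Treasury's exact formulas may adjust the numerators and denominators, and the `Statement` field names are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Statement:
    # Hypothetical line items (same currency unit) for one municipality-year.
    current_assets: float
    current_liabilities: float
    total_borrowings: float
    operating_revenue: float
    operating_expenditure: float


def current_ratio(s: Statement) -> float:
    # Above 1 means short-term assets exceed short-term commitments.
    return s.current_assets / s.current_liabilities


def debt_to_operating_revenue(s: Statement) -> float:
    # Borrowings relative to the revenue the entity generates from operations.
    return s.total_borrowings / s.operating_revenue


def net_operating_surplus_margin(s: Statement) -> float:
    # Share of operating revenue left over after operating expenditure.
    return (s.operating_revenue - s.operating_expenditure) / s.operating_revenue


example = Statement(current_assets=150.0, current_liabilities=100.0,
                    total_borrowings=80.0, operating_revenue=400.0,
                    operating_expenditure=360.0)
print(current_ratio(example))                 # 1.5
print(debt_to_operating_revenue(example))     # 0.2
print(net_operating_surplus_margin(example))  # 0.1
```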

3.2. Data Pre-Processing

The data are split into a training set and a test set. The training set is used to train the models and the test set to evaluate the performance of the trained and validated models. In this study, 70% of the data are assigned to the training set and 30% to the test set, with the split made according to time.
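A chronological 70/30 split can be sketched as follows, assuming each observation carries its financial year; `time_split` is a hypothetical helper, not the code used in this study. Sorting by year before cutting keeps every test observation at least as recent as every training observation, so the evaluation mimics predicting later audit outcomes from earlier ones. A cut that falls inside a year places that year in both sets; cutting at a year boundary is an alternative, and the text does not state which was used.

```python
from typing import List, Sequence, Tuple, TypeVar

T = TypeVar("T")


def time_split(rows: Sequence[Tuple[int, T]], train_frac: float = 0.7
               ) -> Tuple[List[Tuple[int, T]], List[Tuple[int, T]]]:
    """Split (year, record) pairs chronologically.

    The earliest `train_frac` share of observations goes to the training set and
    the rest to the test set; sorting is stable, so rows within a year keep
    their input order.
    """
    ordered = sorted(rows, key=lambda r: r[0])
    cut = round(train_frac * len(ordered))
    return ordered[:cut], ordered[cut:]


# Hypothetical municipality-year records.
years = [2018, 2012, 2016, 2013, 2017, 2014, 2015, 2012, 2018, 2013]
rows = [(y, f"obs{i}") for i, y in enumerate(years)]
train, test = time_split(rows)
print(len(train), len(test))                               # 7 3
print(max(y for y, _ in train), min(y for y, _ in test))   # 2016 2017
```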

Feature selection is an essential part of data pre-processing, as it reduces the number of input variables while keeping enough to describe the data efficiently. It can further improve the predictive accuracy of models and simplifies the data, making them easier to model with machine learning techniques, which suffer from the curse of dimensionality [24]. Feature selection identifies relevant and irrelevant features and removes the latter. For example, when two features in the data are highly correlated, it makes more sense to use only one of them, as it sufficiently describes the data. With feature selection, we seek to eliminate redundant variables that offer no extra information [25]. We consider three feature selection approaches: correlation analysis, step-wise regression and random forest.

Correlation analysis is a statistical technique used to analyse the linear relationship between each feature and the output variable. Only those features strongly related to the output are selected for model training. Correlation analysis yields a correlation coefficient $r \in [-1, 1]$, where:

$r = -1$ indicates a perfect negative linear relationship;

$r = 0$ indicates that there is no linear relationship between the two variables;

$r = 1$ indicates a perfect positive linear relationship.
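The coefficient can be computed directly from the data. The sketch below implements Pearson's $r$ and, because Section 4 switches to Spearman's coefficient after the data fail a normality test, Spearman's $\rho$ as Pearson's $r$ computed on average ranks. Significance testing is omitted; in R, `cor.test(x, y, method = "spearman")` reports both the coefficient and a p-value. The function names are our own.

```python
import math
from typing import List, Sequence


def pearson(x: Sequence[float], y: Sequence[float]) -> float:
    """Pearson correlation coefficient r in [-1, 1]."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    if sxx == 0 or syy == 0:
        raise ValueError("correlation is undefined for a constant variable")
    return sxy / math.sqrt(sxx * syy)


def ranks(values: Sequence[float]) -> List[float]:
    """1-based ranks; tied values share the mean of the ranks they occupy."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    r = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg = (i + j) / 2 + 1  # mean of positions i..j, shifted to 1-based ranks
        for k in range(i, j + 1):
            r[order[k]] = avg
        i = j + 1
    return r


def spearman(x: Sequence[float], y: Sequence[float]) -> float:
    """Spearman's rho: Pearson correlation of the ranks (captures monotone trends)."""
    return pearson(ranks(x), ranks(y))


x = [1.0, 2.0, 3.0, 4.0]
y = [1.0, 4.0, 9.0, 16.0]   # monotone but non-linear in x
print(spearman(x, y))       # 1.0
print(pearson(x, y) < 1.0)  # True
```

With a 0/1 audit outcome as `y`, Pearson's $r$ reduces to the point-biserial correlation.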

Random forest uses a collection of independently grown decision trees whose results are aggregated; for feature selection, the trees effectively vote on which features are important. Each tree is trained on a bootstrap sample. A standard feature of random forests is the variable importance measure, which estimates the importance of each feature; variables are selected on the basis of their importance in predicting the outcome variable [26]. From a dataset with $N$ observations and $p$ features, each tree in the forest draws $N$ observations with replacement from the whole dataset. At every node, the tree randomly selects a subset of $m < p$ of the $p$ features, and the best split feature and split point are chosen using an impurity measure. The node is split into two daughter nodes, and this repeats until the entire tree is grown. For a particular tree, the observations excluded from its bootstrap sample constitute the out-of-bag (OOB) samples, which are used to construct variable importance measures [26,27]. A prediction accuracy is obtained for a tree on its OOB samples; then the values of a feature of interest, feature $j$, are randomly permuted, and a new prediction accuracy is determined. The difference in prediction accuracy before and after the permutation gives the importance of feature $j$ for that single tree [26,27]. The variable importance of the feature for the forest is then obtained by averaging these importance values over all trees, and this is done for every feature. A feature with a low importance score barely affects the predictive ability of the trees on OOB samples, whereas a feature with a high importance score greatly affects the trees’ ability to correctly predict the class of OOB samples [27]. The importance scores take into account interactions between attributes. Attributes can alternatively be ranked by how much they reduce node impurity. The algorithm is easy to implement and tune.
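The permutation step can be sketched as follows. To keep the example self-contained, a hypothetical stub classifier (`stub_tree`) stands in for a trained tree, and a small made-up dataset stands in for its OOB samples; `permutation_importance` and `forest_importance` are illustrative names. Implementations differ in details: R's `randomForest` package, for instance, by default divides the mean decrease in accuracy by its standard error.

```python
import random
from typing import Callable, List, Sequence, Tuple

Row = List[float]
Model = Callable[[Row], int]


def accuracy(model: Model, X: Sequence[Row], y: Sequence[int]) -> float:
    """Share of rows the model classifies correctly."""
    return sum(model(row) == label for row, label in zip(X, y)) / len(y)


def permutation_importance(model: Model, X_oob: Sequence[Row], y_oob: Sequence[int],
                           j: int, n_repeats: int = 10, seed: int = 0) -> float:
    """Mean drop in OOB accuracy after randomly permuting column j."""
    rng = random.Random(seed)
    base = accuracy(model, X_oob, y_oob)
    drops = []
    for _ in range(n_repeats):
        column = [row[j] for row in X_oob]
        rng.shuffle(column)
        # Replace only column j; every other feature keeps its original values.
        X_perm = [list(row[:j]) + [v] + list(row[j + 1:]) for row, v in zip(X_oob, column)]
        drops.append(base - accuracy(model, X_perm, y_oob))
    return sum(drops) / n_repeats


def forest_importance(trees: Sequence[Tuple[Model, Sequence[Row], Sequence[int]]],
                      j: int) -> float:
    """Average the per-tree importances of feature j; each tree uses its own OOB rows."""
    return sum(permutation_importance(t, X, y, j) for t, X, y in trees) / len(trees)


def stub_tree(row: Row) -> int:
    """Hypothetical fitted tree: predicts 1 when feature 0 exceeds 0.5, ignores feature 1."""
    return int(row[0] > 0.5)


X_oob = [[0.1, 5.0], [0.9, 3.0], [0.2, 1.0], [0.8, 7.0], [0.3, 2.0],
         [0.7, 4.0], [0.4, 6.0], [0.6, 8.0], [0.05, 9.0], [0.95, 0.5]]
y_oob = [0, 1, 0, 1, 0, 1, 0, 1, 0, 1]
trees = [(stub_tree, X_oob, y_oob)]
print(forest_importance(trees, 1))      # 0.0: permuting an unused feature changes nothing
print(forest_importance(trees, 0) > 0)  # True
```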

Step-wise regression analysis is a statistical technique that iteratively searches for features that significantly affect the output variable. A variable is added if it maximises the coefficient of determination or minimises the error sum of squares. In step-wise forward selection, variables are added as long as their partial correlation with the output is significant, and once a variable enters, it cannot leave. Similarly, in step-wise backward selection, once a variable leaves, it cannot re-enter the equation [28].

Forward selection begins with an empty model and adds variables one at a time, each time choosing the most statistically significant variable, until no statistically significant variables remain. Backward elimination begins with all variables in the model and drops the least statistically significant variable one at a time until every variable remaining in the equation is statistically significant. Backward elimination is not ideal when there are many variables, and it is problematic when the number of variables exceeds the number of observations, since the full model cannot then be fitted [28]. A combination of the two methods (which is what is used in this study) allows the model to add or drop features at any step. This technique does not account for multicollinearity, which means it might choose features that do not directly affect the output but are related to ones that do [29].
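The bidirectional search can be sketched as follows. This is a criterion-based variant: instead of significance tests, each candidate subset is scored by a function to be minimised, such as the Akaike information criterion (AIC) that R's `step()` uses by default. The `toy_score` function and its gain values are made up purely to exercise the search; in practice, the score would come from refitting the regression on each candidate subset.

```python
from typing import Callable, FrozenSet, Iterable, Set


def stepwise_select(features: Iterable[str],
                    score: Callable[[FrozenSet[str]], float],
                    max_steps: int = 100) -> Set[str]:
    """Bidirectional step-wise selection (lower score is better).

    Starts from the empty model; at each step it evaluates adding every excluded
    feature and dropping every included one, takes the single best move, and
    stops when no move lowers the score.
    """
    pool = list(features)
    current: FrozenSet[str] = frozenset()
    current_score = score(current)
    for _ in range(max_steps):
        candidates = [current | {f} for f in pool if f not in current]
        candidates += [current - {f} for f in current]
        if not candidates:
            break
        best = min(candidates, key=score)
        best_score = score(best)
        if best_score >= current_score:
            break  # no addition or removal improves the criterion
        current, current_score = best, best_score
    return set(current)


# Made-up reduction in lack of fit attributable to each feature.
GAIN = {"current_ratio": 10.0, "debt_to_revenue": 6.0, "noise": 1.0}


def toy_score(subset: FrozenSet[str]) -> float:
    """Hypothetical AIC-like score: lack of fit plus a penalty of 2 per feature."""
    return 100.0 - sum(GAIN[f] for f in subset) + 2 * len(subset)


print(sorted(stepwise_select(GAIN, toy_score)))  # ['current_ratio', 'debt_to_revenue']
```

Here `noise` is rejected because its gain of 1 does not cover the penalty of 2 for adding a feature.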

3.3. Models

We model the audit outcome variable using decision trees, artificial neural networks and logistic regression. The aim is to classify a municipality’s financial statements correctly using these models. This section presents more information about each of the models considered in this manuscript.

3.3.1. Decision Trees

A decision tree is a tree-shaped model that starts with a root node containing all the data, which is then split into child nodes. Each internal node represents a “test” on an attribute, and each branch represents an outcome of the test. The tree splits observations into mutually exclusive subgroups, and this splitting continues until terminal (leaf) nodes that do not split further are reached, or until no further split can produce statistically significant differences [15].
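The text does not specify which impurity measure the trees use. The Gini impurity, $1 - \sum_k p_k^2$ over the class proportions $p_k$ in a node, is a common choice and the default for classification in R's `rpart`. The sketch below scores every candidate threshold on one numeric feature by the size-weighted impurity of the two child nodes and keeps the lowest; `gini` and `best_split` are our own illustrative names.

```python
from typing import Dict, Sequence, Tuple


def gini(labels: Sequence[int]) -> float:
    """Gini impurity 1 - sum_k p_k^2 of a collection of class labels."""
    n = len(labels)
    if n == 0:
        return 0.0
    counts: Dict[int, int] = {}
    for c in labels:
        counts[c] = counts.get(c, 0) + 1
    return 1.0 - sum((k / n) ** 2 for k in counts.values())


def best_split(x: Sequence[float], y: Sequence[int]) -> Tuple[float, float]:
    """Best threshold on one feature; rows with x <= threshold go to the left child.

    Returns (threshold, weighted child impurity). If x has a single distinct
    value, no split exists and (nan, inf) is returned.
    """
    best = (float("nan"), float("inf"))
    for t in sorted(set(x))[:-1]:  # the largest value would leave the right child empty
        left = [c for v, c in zip(x, y) if v <= t]
        right = [c for v, c in zip(x, y) if v > t]
        weighted = (len(left) * gini(left) + len(right) * gini(right)) / len(y)
        if weighted < best[1]:
            best = (t, weighted)
    return best


# Hypothetical current ratios and 0/1 audit outcomes for four municipalities.
print(gini([0, 0, 1, 1]))                              # 0.5
print(best_split([0.8, 1.1, 1.6, 2.0], [0, 0, 1, 1]))  # (1.1, 0.0)
```

A full tree applies this search to every candidate feature at a node, takes the best, and recurses into both children.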

If the trained decision tree is too complex, it tends to overfit and has low predictive accuracy on unseen data. Methods such as pruning are then used to reduce the complexity of the trained tree, making it smaller, simpler, easier to interpret and often better at predicting. An advantage of decision trees is that they require neither feature selection prior to training nor scaling of the variables.

The decision tree that we considered in this paper had a maximum depth of 10 and pruning was applied to the tree to prevent over-fitting. These settings were used as they gave the best results on the validation dataset.

3.3.2. Artificial Neural Network

Artificial neural networks (ANNs) are inspired by the central nervous system of animals and are good at self-learning and self-organising [18]. ANNs are widely used in classification and clustering tasks. An ANN can learn complex non-linear relationships between explanatory and response variables implicitly; it is adaptive, capable of generating robust models and able to modify the classification process as new training weights are set [14]. The neurons in an ANN are arranged into layers: an input layer, zero or more hidden layers and an output layer, in that order [15].

As outlined by Green and Choi [17], an ANN is a knowledge induction technique that does not require rigid assumptions, such as the normality often assumed in other statistical techniques. In an ANN, data enter through the nodes of the input layer and pass to the hidden layer, if there is one; each input value is multiplied by its respective weight and the resulting products are summed, and the same operation is repeated from the hidden layer to the output layer. The sum, called the activation, is further processed by the signal transfer function to determine an output.

In this work, we use an ANN with one hidden layer of 10 neurons and the ReLU activation function. These settings were determined using cross-validation on the training dataset.
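The forward pass described above can be sketched for a network with one ReLU hidden layer. The sigmoid output unit, read as the probability of an unqualified opinion, is our assumption, and the weights for two inputs and two hidden neurons are made up for illustration; the fitted network has 10 hidden neurons and weights learned from the training data.

```python
import math
from typing import Sequence


def relu(z: float) -> float:
    return max(0.0, z)


def sigmoid(z: float) -> float:
    """Logistic function, written to avoid overflow for large |z|."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def forward(x: Sequence[float],
            W1: Sequence[Sequence[float]], b1: Sequence[float],
            w2: Sequence[float], b2: float) -> float:
    """One-hidden-layer network.

    Each hidden neuron k multiplies every input by its weight, sums the products,
    adds a bias and applies ReLU; the output neuron does the same with the hidden
    values and applies a sigmoid.
    """
    hidden = [relu(sum(w * xi for w, xi in zip(row, x)) + b) for row, b in zip(W1, b1)]
    activation = sum(w * h for w, h in zip(w2, hidden)) + b2
    return sigmoid(activation)


# Made-up weights: 2 inputs, 2 hidden neurons.
W1 = [[1.0, -1.0], [0.5, 0.5]]
b1 = [0.0, 0.0]
w2 = [1.0, 2.0]
b2 = -3.0
print(forward([1.0, 2.0], W1, b1, w2, b2))  # 0.5 (hidden = [0.0, 1.5], activation = 0.0)
```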

3.3.3. Logistic Regression

Logistic regression is a generalised linear model [8]; it is a popular classification model for multivariate analysis and for modelling a binary dependent variable [30]. It is simple, easy to understand and efficient for binary classification problems with a linear decision boundary [18,31]. The model relates the log-odds of the outcome to the selected features:

$$\ln\left(\frac{p}{1-p}\right) = \beta_0 + \sum_{i=1}^{n} \beta_i x_i + \varepsilon,$$

where

$p$: the probability of an unqualified audit opinion;

$\beta_i$: the regression coefficient of independent variable $x_i$;

$x_i$: the $i$-th independent variable;

$n$: the number of independent variables that remain after feature selection;

$\beta_0$: the intercept term;

$\varepsilon$: the residual value.
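Given fitted coefficients, the log-odds equation can be inverted to obtain the predicted probability, $p = 1/(1 + e^{-(\beta_0 + \sum_i \beta_i x_i)})$; the residual term is not used at prediction time. A minimal sketch follows. The 0.5 classification threshold is an assumption, and in R the coefficients would typically come from `glm(..., family = binomial)`.

```python
import math
from typing import Sequence


def predict_proba(beta0: float, betas: Sequence[float], x: Sequence[float]) -> float:
    """Invert ln(p / (1 - p)) = beta0 + sum_i beta_i x_i for p."""
    if len(betas) != len(x):
        raise ValueError("need one coefficient per independent variable")
    eta = beta0 + sum(b * xi for b, xi in zip(betas, x))  # linear predictor (log-odds)
    if eta >= 0:
        return 1.0 / (1.0 + math.exp(-eta))
    e = math.exp(eta)  # algebraically equal form that avoids overflow for very negative eta
    return e / (1.0 + e)


def classify(p: float, threshold: float = 0.5) -> int:
    """1 = predicted unqualified opinion, 0 = any other opinion."""
    return int(p >= threshold)


# Log-odds of ln(3) correspond to odds of 3:1, i.e. p = 0.75.
p = predict_proba(math.log(3.0), [0.0], [1.0])
print(round(p, 6), classify(p))  # 0.75 1
```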

3.4. Performance Metrics

The measures used to compare the models are listed below; most of them are based on the confusion matrix in Table 1:

Precision: the proportion of cases predicted as positive that are actually positive, TP/(TP + FP). This is appropriate when the aim is to minimise false positives.

Recall/Sensitivity: the proportion of actual positives that are correctly classified as positive, TP/(TP + FN). This is appropriate when the aim is to minimise false negatives.

Specificity: the proportion of actual negatives that are correctly classified as negative, TN/(TN + FP).

The Receiver Operating Characteristic (ROC) curve: this summarises the performance of a binary classification model across decision thresholds by plotting the true positive rate (recall/sensitivity) on the y-axis against the false positive rate (1 − specificity) on the x-axis. It makes it easier to identify the best threshold for making a decision and is useful even when predicted probabilities are not properly calibrated. A model whose ROC curve lies above those of other models performs better. Because the true and false positive rates are each computed within a single class, the ROC curve is insensitive to changes in class distribution. As test accuracy improves, the area under the ROC curve (AUC-ROC) approaches 1.

Precision–Recall (PR) curve: this demonstrates the trade-off between precision and recall for various thresholds [32]. High precision means that few of the predicted positives are false positives, i.e., the model returns accurate results, and high recall means a low false negative rate (FNR), i.e., the model returns the majority of all positive results. A model with both high recall and high precision has a high area under the curve. High recall and low precision mean that the model returns many positive predictions, but most of them are incorrect when compared with the true outcomes. Low recall and high precision mean that the model returns very few positive predictions, but most of them are correct when compared with the true outcomes. An ideal model has high precision and high recall and returns many correctly labelled results. It should be noted that precision does not necessarily decrease as recall increases (https://scikit-learn.org/stable/auto_examples/model_selection/plot_precision_recall.html, last accessed: 21 September 2022).

AUC: the area under a curve, which measures the model’s ability to distinguish between classes; the higher the AUC, the better the model distinguishes between the classes. For the Precision–Recall curve, the area under the curve (AUC-PR) is a single number summarising the information in the curve [33]; each point on the curve corresponds to a different threshold value, and the curve does not depend on the number of true negatives (TNs).
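These summaries can be computed from predicted scores without plotting. In the sketch below, `confusion_metrics` evaluates the three ratios from confusion-matrix counts; `roc_auc` uses the fact that the AUC-ROC equals the probability that a randomly chosen positive is scored above a randomly chosen negative (ties count one half); and `average_precision` is one common step-wise estimate of the AUC-PR, the same formula as scikit-learn's `average_precision_score`. Other estimators, including those in R packages, interpolate the curve differently and may give slightly different values. The scores and labels in the usage lines are made up.

```python
from typing import Dict, Sequence


def confusion_metrics(tp: int, fp: int, tn: int, fn: int) -> Dict[str, float]:
    """Precision, recall (sensitivity) and specificity from confusion-matrix counts."""
    return {
        "precision": tp / (tp + fp),
        "recall": tp / (tp + fn),
        "specificity": tn / (tn + fp),
    }


def roc_auc(y_true: Sequence[int], scores: Sequence[float]) -> float:
    """AUC-ROC as the share of (positive, negative) pairs ranked correctly."""
    pos = [s for s, t in zip(scores, y_true) if t == 1]
    neg = [s for s, t in zip(scores, y_true) if t == 0]
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


def average_precision(y_true: Sequence[int], scores: Sequence[float]) -> float:
    """Step-wise AUC-PR: sum over thresholds of (recall gain) * precision."""
    pairs = sorted(zip(scores, y_true), key=lambda st: st[0], reverse=True)
    n_pos = sum(y_true)
    tp = fp = 0
    ap, prev_recall = 0.0, 0.0
    i = 0
    while i < len(pairs):
        threshold = pairs[i][0]
        # Lower the threshold past every observation tied at this score.
        while i < len(pairs) and pairs[i][0] == threshold:
            if pairs[i][1] == 1:
                tp += 1
            else:
                fp += 1
            i += 1
        recall = tp / n_pos
        ap += (recall - prev_recall) * (tp / (tp + fp))
        prev_recall = recall
    return ap


y = [1, 0, 1, 0]
s = [0.9, 0.8, 0.7, 0.1]
print(confusion_metrics(tp=8, fp=2, tn=6, fn=4))
print(roc_auc(y, s))                      # 0.75
print(round(average_precision(y, s), 4))  # 0.8333
```

The pairwise `roc_auc` is quadratic in the sample size, which is fine for illustration; production code would use a rank-based formula.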

4. Results and Discussion

This section presents the performance of the calibrated models under each feature selection method. To evaluate the algorithms comprehensively, we first discuss the performance of the three models under each feature selection scheme. We then outline which features are selected under each feature selection approach, thereby highlighting which variables are important for discriminating between qualified and unqualified financial statements. All results were produced using the R statistical programming language.

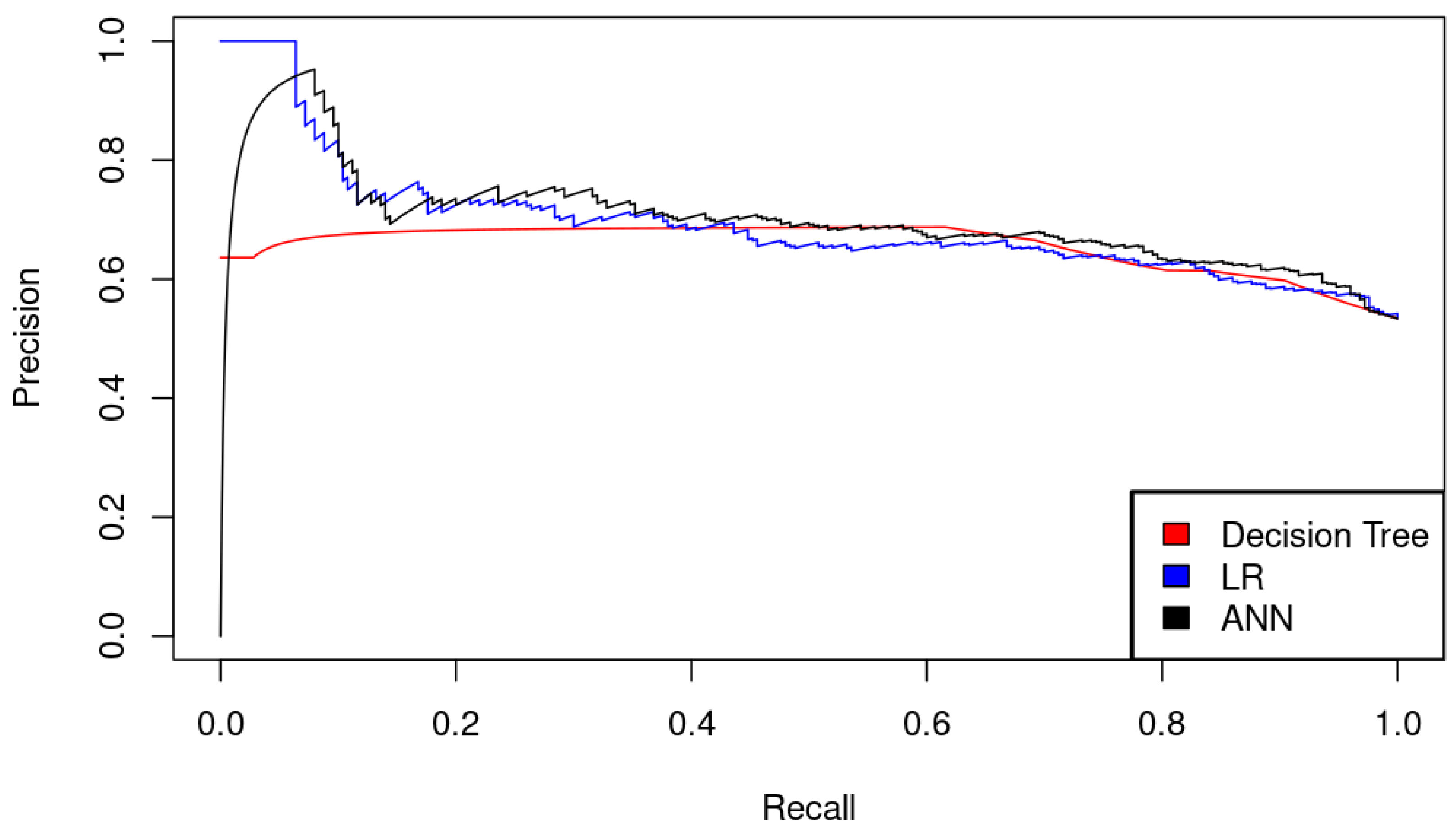

Tables 2 and 3 present the AUCs for the models, while Figures 1, 2 and 4–9 present the ROC and Precision–Recall curves across the various feature selection schemes, and Figure 3 shows the random forest variable importances. The results of the correlation analysis feature selection are given in Table 4. A feature is considered significant if the p-value of its correlation with the audit outcome is less than the chosen significance level $\alpha$. A p-value is the probability, computed under the null hypothesis, of obtaining a test statistic at least as extreme as the one observed; it is not the probability that the null hypothesis is true.

When all features are used, the ANN model performs best, with AUCs of 0.7089 and 0.7369 for the ROC and Precision–Recall curves, respectively, as shown in Tables 2 and 3. This is in line with Figures 1 and 2, in which the ANN curves are generally above the others. The ROC curve of the decision tree is above the other two in Figure 1; hence, judged by the ROC curves, the decision tree is the best-performing model.

Under random forest feature selection, the average of the features’ mean decrease in accuracy is 20.8698. The significant features are debt to community wealth/equity, debt to total operating revenue, current ratio, capital cost to total operating expenditure, net operating surplus margin, net surplus/deficit water and net surplus/deficit electricity, since their mean decrease in accuracy exceeds 20.8698, as seen in Figure 3.
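The selection rule applied here, keeping the features whose mean decrease in accuracy exceeds the average across all features, is easy to reproduce. In the sketch below, the importance values and feature names are made up for illustration; the actual values are those shown in Figure 3.

```python
from typing import Dict, List


def select_above_mean(importance: Dict[str, float]) -> List[str]:
    """Keep features whose importance is strictly greater than the mean importance."""
    threshold = sum(importance.values()) / len(importance)
    return [name for name, score in importance.items() if score > threshold]


# Hypothetical mean-decrease-in-accuracy values (not the results of this study).
scores = {"current_ratio": 30.0, "debt_to_revenue": 25.0,
          "capex_share": 10.0, "remuneration_share": 15.0}
print(select_above_mean(scores))  # ['current_ratio', 'debt_to_revenue']
```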

Figures 4 and 5 show that the decision tree performs best under the random forest feature selection scheme and is the only model that performs better with these features than with all features. Under the correlation analysis feature selection scheme, the data fail the normality test; hence, Spearman’s rank correlation coefficient is used.

Table 4 shows the hypothesis test outputs under the correlation analysis selection method, where the null hypothesis is that there is no correlation between a feature and the audit opinion and the alternative hypothesis is that there is a correlation.

From Table 4, we consider a feature significant if it has a p-value less than the significance level $\alpha$. The significant features are therefore repairs and maintenance as a percentage of PPE+IP, debt to total operating revenue, current ratio, net operating surplus margin, remuneration to total operating expenditure, contracted services to total operating expenditure, own source revenue to total operating revenue and net surplus/deficit electricity, which is consistent with the magnitudes of the absolute correlation coefficients between these features and the output.

Using the features selected by correlation analysis, the ANN model performs best, followed by the decision tree and lastly the logistic regression model; the ANN is better at distinguishing between municipalities with unqualified opinions and those without. Figures 6 and 7 show, however, that the three models perform similarly under this selection method.

The step-wise regression analysis feature selection approach yielded the current ratio, net operating surplus margin, capital expenditure to total expenditure, repairs and maintenance as a percentage of PPE+IP, debt to total operating revenue and capital cost to total operating expenditure as the significant variables in training the models.

From Figure 8, we observe that the ANN and logistic regression models perform better than the decision tree, with the ANN performing best; the same can be seen in Figure 9. The average area under the ROC curve, determined from Table 2, is 0.6733 for the decision tree, 0.6918 for the ANN and 0.6682 for the logistic regression, and the average area under the Precision–Recall curve, determined from Table 3, is 0.6673 for the decision tree, 0.7074 for the ANN and 0.6770 for the logistic regression. This implies that the ANN has the best overall performance under both curves; the decision tree is second best according to the ROC curve, whereas logistic regression is second best according to the Precision–Recall curve.

Our results show that step-wise regression selects variables that capture more of the variation in the data than those selected using random forest or correlation analysis. We further find that debt to total operating revenue, current ratio and net operating surplus margin are the most important variables in predicting audit outcomes, in line with the results of [1,12].

The key financial ratios identified by our analysis make economic sense. For instance, a high current ratio indicates that an entity has more current assets than current liabilities; such entities are in good financial standing and are therefore less prone to commit fraud. A high debt to total operating revenue ratio, on the other hand, indicates that an entity carries a large amount of debt relative to the revenue it generates, which raises the risk of fraud. Additionally, municipalities with large net operating surplus margins are both more financially successful and less prone to commit fraud. As a result, these variables may prove essential for different stakeholders when evaluating the financial stability of municipalities.