Cooperatives and the Use of Artificial Intelligence: A Critical View

Abstract

1. Introduction

2. Governance in Cooperatives

2.1. Main Features

- (i) voluntary and open membership;
- (ii) democratic member control;
- (iii) economic participation by members;
- (iv) autonomy and independence;
- (v) education, training, and information;
- (vi) cooperation among cooperatives; and
- (vii) concern for the community.

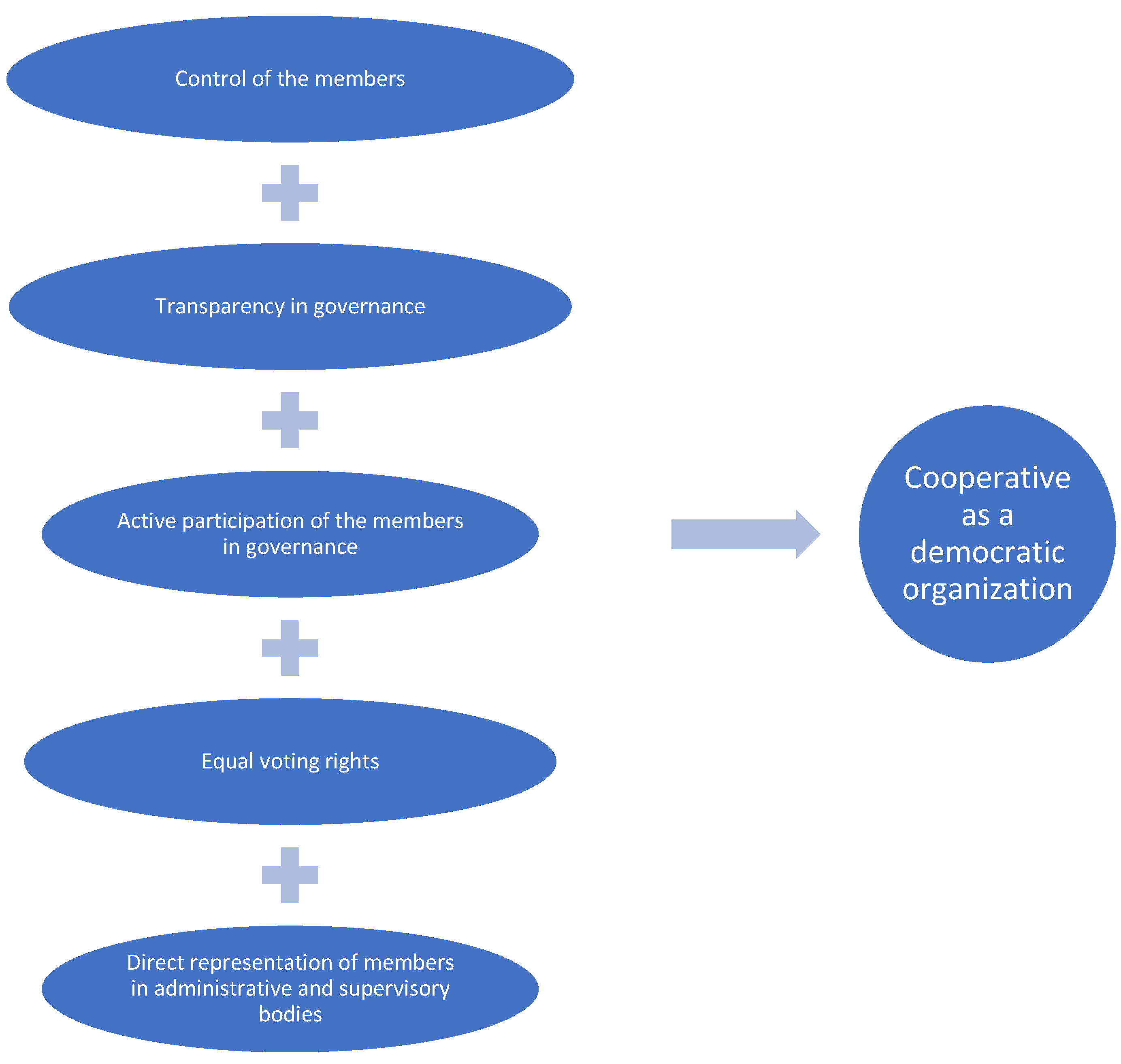

- (i) participatory governance, due to the principle of democratic control by members;
- (ii) oriented towards its members, following the mutualist aim of the cooperative;
- (iii) autonomous and independent, under the principle of autonomy and independence; and
- (iv) transparent, due to the members’ right to information enshrined in the PCC and the control and supervision of the Board of Directors by the General Assembly and Supervisory Board.

- (i) provide the members of the cooperative with knowledge about the cooperative’s principles and values, namely to induce them to participate actively in their cooperative, to deliberate properly at meetings, to elect their bodies consciously, and to monitor their performance;
- (ii) teach the leaders and managers of the cooperative to exercise appropriately the power they have been given, to preserve the trust placed in them by the other members, to obtain knowledge of the cooperative, and to show a level of competence in keeping with the responsibilities of their office;
- (iii) provide workers with the technical expertise needed for proper performance; and
- (iv) foster a sense of solidarity and cooperative responsibility in the cooperative’s community.

2.2. The Cooperative Governance Structures

- (i) the General Assembly;
- (ii) the Board of Directors; and
- (iii) the Supervisory Board.

- (i) the most important and decisive issues in the life of the cooperative fall within the remit of the general assembly (Article 38 of the PCC);
- (ii) the general assembly elects the members of the corporate bodies from among the collective of cooperators (Article 29(1) of the PCC); and
- (iii) the resolutions adopted by the general assembly, according to the law and the bylaws, are binding on all the other bodies of the cooperative and all its members (Article 33(1) of the PCC).

2.3. The Central Role of Members of the Cooperatives

2.4. Transparency in the Cooperatives’ Governance

3. Framing Artificial Intelligence in the Decision-Making Process

3.1. Digital Transformation and Digital Innovation

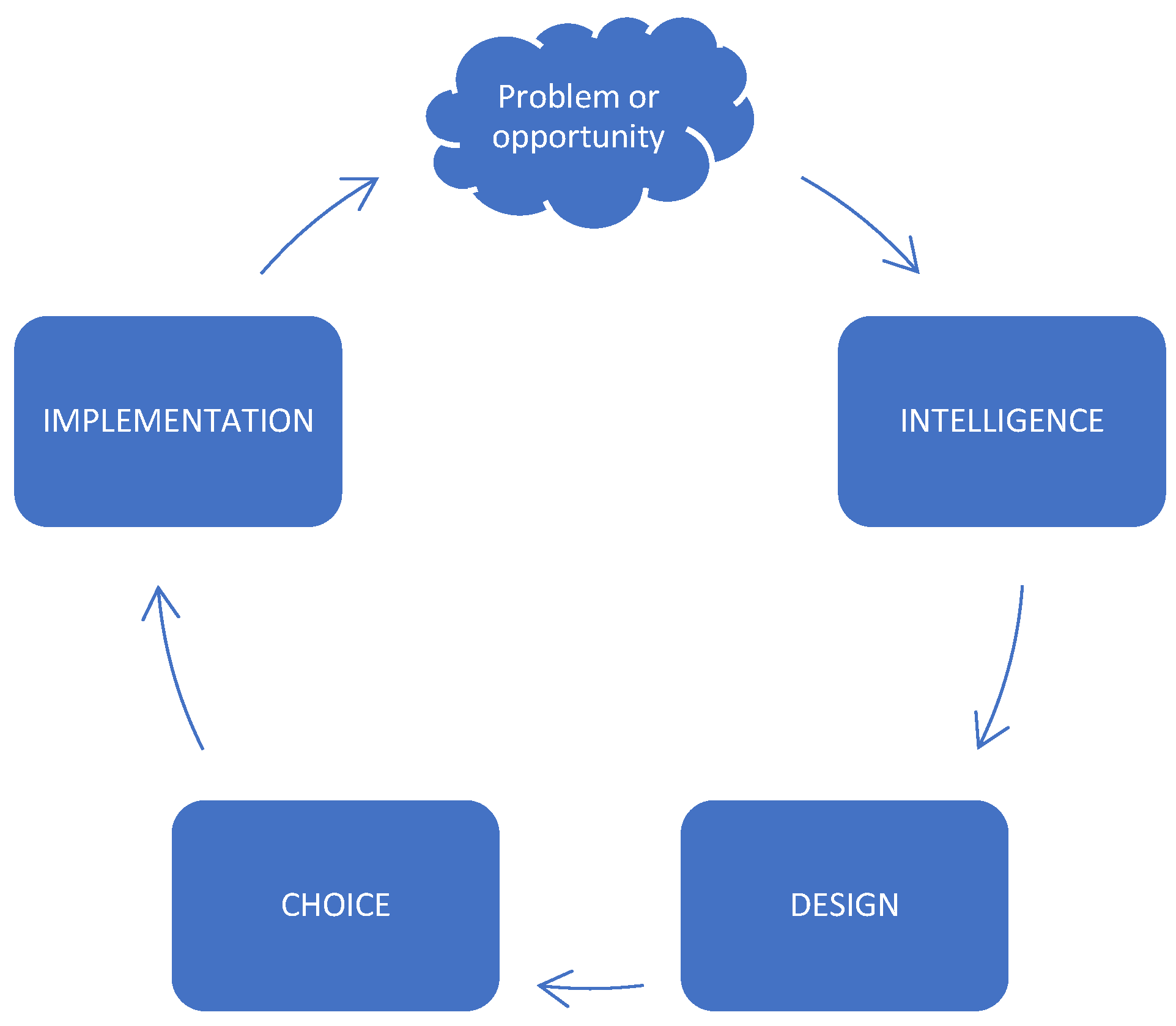

3.2. The Decision-Making Process

- (i) the Intelligence phase involves the profound examination of the environment, producing reports, queries, and comparisons;
- (ii) the Design phase involves creativity by developing and finding possible alternatives and solutions;
- (iii) the Selection phase involves the comparison and selection of one of the alternatives obtained in the previous phase; and
- (iv) the Implementation phase involves putting the selected solution into action and adapting it to a real-life situation.
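The four phases listed above can be read as a simple pipeline. The following is a minimal sketch only: the `Alternative` class, the function names, the budget filter, and the "benefit minus cost" criterion are all illustrative assumptions, not part of the article, and a real Design phase involves far more creativity than filtering a fixed list.

```python
from dataclasses import dataclass


@dataclass
class Alternative:
    # Hypothetical description of one possible course of action.
    name: str
    cost: float
    benefit: float


def intelligence(environment: dict) -> dict:
    """Intelligence phase: examine the environment and produce a report."""
    return {"budget": environment["budget"], "needs": sorted(environment["needs"])}


def design(report: dict, candidates: list[Alternative]) -> list[Alternative]:
    """Design phase (simplified): keep the alternatives that fit the reported budget."""
    return [a for a in candidates if a.cost <= report["budget"]]


def selection(alternatives: list[Alternative]) -> Alternative:
    """Selection phase: compare the alternatives and pick one (assumed criterion)."""
    return max(alternatives, key=lambda a: a.benefit - a.cost)


def implementation(choice: Alternative) -> str:
    """Implementation phase: put the selected alternative into action."""
    return f"Implementing: {choice.name}"


# Invented example data for a cooperative deciding how to spend a budget.
environment = {"budget": 100.0, "needs": ["storage", "training"]}
candidates = [
    Alternative("new warehouse", cost=150.0, benefit=300.0),
    Alternative("member training", cost=40.0, benefit=90.0),
    Alternative("shared storage", cost=80.0, benefit=120.0),
]

report = intelligence(environment)
feasible = design(report, candidates)
chosen = selection(feasible)
outcome = implementation(chosen)
print(outcome)
```

Keeping each phase as a separate function makes it visible which step a tool supports and which step remains a human judgement.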

- the data management subsystem allows access to a multiplicity of data sources, types and formats;

- the model management subsystem allows access to a multiplicity of capabilities with some suggestions or guidelines available;

- the user interface subsystem allows the users to access and control the DSS;

- the knowledge-based management subsystem allows access to various AI tools that provide intelligence to the other three components and mechanisms to solve problems directly.
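As an illustration of how the four subsystems above fit together, here is a toy sketch. All class and method names are hypothetical, the data are invented, and a single hand-written rule stands in for the AI tools of the knowledge-based subsystem; real DSS products are organised in many different ways.

```python
from statistics import mean


class DataManagement:
    """Data management subsystem: access to several named data sources."""

    def __init__(self, sources: dict[str, list[float]]):
        self.sources = sources

    def fetch(self, name: str) -> list[float]:
        return self.sources[name]


class ModelManagement:
    """Model management subsystem: a small catalogue of analytical capabilities."""

    def __init__(self):
        self.models = {"average": mean, "total": sum}

    def run(self, model: str, data: list[float]) -> float:
        return self.models[model](data)


class KnowledgeManagement:
    """Knowledge-based subsystem: one simple rule stands in for AI tools here."""

    def advise(self, value: float, threshold: float) -> str:
        return "review needed" if value < threshold else "within target"


class UserInterface:
    """User interface subsystem: the single entry point through which users query the DSS."""

    def __init__(self, data: DataManagement, models: ModelManagement,
                 knowledge: KnowledgeManagement):
        self.data, self.models, self.knowledge = data, models, knowledge

    def ask(self, source: str, model: str, threshold: float) -> str:
        value = self.models.run(model, self.data.fetch(source))
        advice = self.knowledge.advise(value, threshold)
        return f"{model} of {source} = {value:.1f} ({advice})"


dss = UserInterface(
    DataManagement({"monthly_sales": [10.0, 20.0, 30.0]}),
    ModelManagement(),
    KnowledgeManagement(),
)
answer = dss.ask("monthly_sales", "average", threshold=25.0)
print(answer)
```

In this sketch the system only produces advice; the decision itself stays with whoever reads the answer, which is the defining feature of a decision support system as opposed to an automated one.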

3.3. Artificial Intelligence

3.4. The Effects of Artificial Intelligence on Decision-Making

3.5. The European Union and the Standardisation of the Concept of Artificial Intelligence

4. Discussion

4.1. A Critical View on the Use of Artificial Intelligence in Cooperatives

- data dependency—distortion of past data, learning from “bad examples”, insufficient data, the unpredictability of human behaviours;

- the indispensability of human judgement;

- conflicts with human ethical standards;

- the incompleteness of the “legal environment” precludes a yes/no answer.
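The data-dependency concern in the first item can be made concrete with a very small audit of historical records. This is a sketch under stated assumptions: the record format (a group label plus a past positive or negative outcome), the function name `audit`, and the `min_records` threshold are all invented for illustration, and such a check can flag only the crudest problems of insufficient or skewed data.

```python
from collections import defaultdict


def audit(records: list[tuple[str, bool]], min_records: int = 3) -> dict[str, dict]:
    """Summarise past outcomes per group and flag groups with too little data."""
    counts = defaultdict(lambda: [0, 0])  # group -> [number of records, positive outcomes]
    for group, positive in records:
        counts[group][0] += 1
        counts[group][1] += int(positive)

    summary = {}
    for group, (n, positives) in counts.items():
        summary[group] = {
            "n": n,
            "positive_rate": positives / n,
            "insufficient": n < min_records,  # too few examples to learn from
        }
    return summary


# Invented historical decisions: (member group, whether the past outcome was positive).
history = [
    ("A", True), ("A", True), ("A", False), ("A", True),
    ("B", False), ("B", False),
]
result = audit(history)
for group, stats in result.items():
    print(group, stats)
```

A large gap in positive rates between groups, or a group flagged as insufficient, does not prove bias, but it is the kind of signal that calls for human judgement before any model is trained on the data.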

4.2. A Framework for the Use of Artificial Intelligence in Cooperatives

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Era | Technologies and Tools | Pros | Cons |
|---|---|---|---|
| 1943–1956 (the inception of AI) | | | |
| 1952–1969 (early enthusiasm, great expectations) | | | |
| 1966–1973 (a dose of reality) | | | |
| 1969–1986 (expert systems) | | | |
| 1986–present (the return of neural networks) | | | |
| 1987–present (probabilistic reasoning and ML) | | | |
| 2001–present (big data) | | | |
| 2011–present (deep learning) | | | |

| Type of System | Subtype | Use? | Comments |
|---|---|---|---|
| DSS | | Yes | The cooperative should create technical committees to advise on how the system works and how to choose the appropriate DSS system. These committees should be able to accompany the bodies in the decisions taken from these systems, keep the system capable, and identify and control possible biases in the data. |
| ADS | Black boxes (e.g., DL algorithms) | No | The steps to achieve a decision are unknown. There is a problem of transparency, an essential value in cooperatives (and any democratic organisation). |
| ADS | White boxes (e.g., trees or rules models) | Yes, with conditions | The use of ADS systems is inadvisable. Nevertheless, this use can be considered if it respects the principle of interpretability. We can foresee that these systems can be used in the following circumstances: that the decision taken is accompanied by the rules that led to that decision being taken and that there is, as with DSS systems, a technical committee that permanently accompanies the decisions taken automatically. The bodies are informed of the decisions with reports to ensure complete transparency. Additionally, it is fundamental to identify and control possible biases in the data. |
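The condition set out for white-box ADS, namely that each decision is accompanied by the rules that led to it and reported to the bodies, can be sketched as follows. The rules, thresholds, field names, and the `decide` and `report` functions are hypothetical examples and not taken from the article; they only illustrate what an interpretable, traceable decision might look like.

```python
from dataclasses import dataclass, field


@dataclass
class Decision:
    outcome: str
    trace: list[str] = field(default_factory=list)  # rules evaluated, with results


# Hypothetical rules as (description, predicate); all must pass for approval.
RULES = [
    ("member for at least 12 months", lambda r: r["months_as_member"] >= 12),
    ("requested amount within limit of 5000", lambda r: r["amount"] <= 5000),
]


def decide(request: dict) -> Decision:
    """Evaluate every rule and record which ones passed or failed."""
    trace, approved = [], True
    for description, predicate in RULES:
        ok = predicate(request)
        trace.append(f"{'PASS' if ok else 'FAIL'}: {description}")
        approved = approved and ok
    outcome = "approve" if approved else "refer to technical committee"
    return Decision(outcome, trace)


def report(decision: Decision) -> str:
    """Report for the cooperative's bodies: the outcome plus the rules behind it."""
    return "\n".join([f"Outcome: {decision.outcome}", *decision.trace])


decision = decide({"months_as_member": 6, "amount": 1000})
print(report(decision))
```

Because every rule is evaluated and logged, even when an earlier one already failed, the report shows the full reasoning and not only the first reason for refusal.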

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Ramos, M.E.; Azevedo, A.; Meira, D.; Curado Malta, M. Cooperatives and the Use of Artificial Intelligence: A Critical View. Sustainability 2023, 15, 329. https://doi.org/10.3390/su15010329
