E-Learning Behavior Categories and Influencing Factors of STEM Courses: A Case Study of the Open University Learning Analysis Dataset (OULAD)

Abstract

1. Introduction

2. Research Review

2.1. E-Learning Performance Prediction Methods

2.2. Influences on E-Learning Performance

2.3. E-Learning Behavior Classification Study

3. Materials and Methods

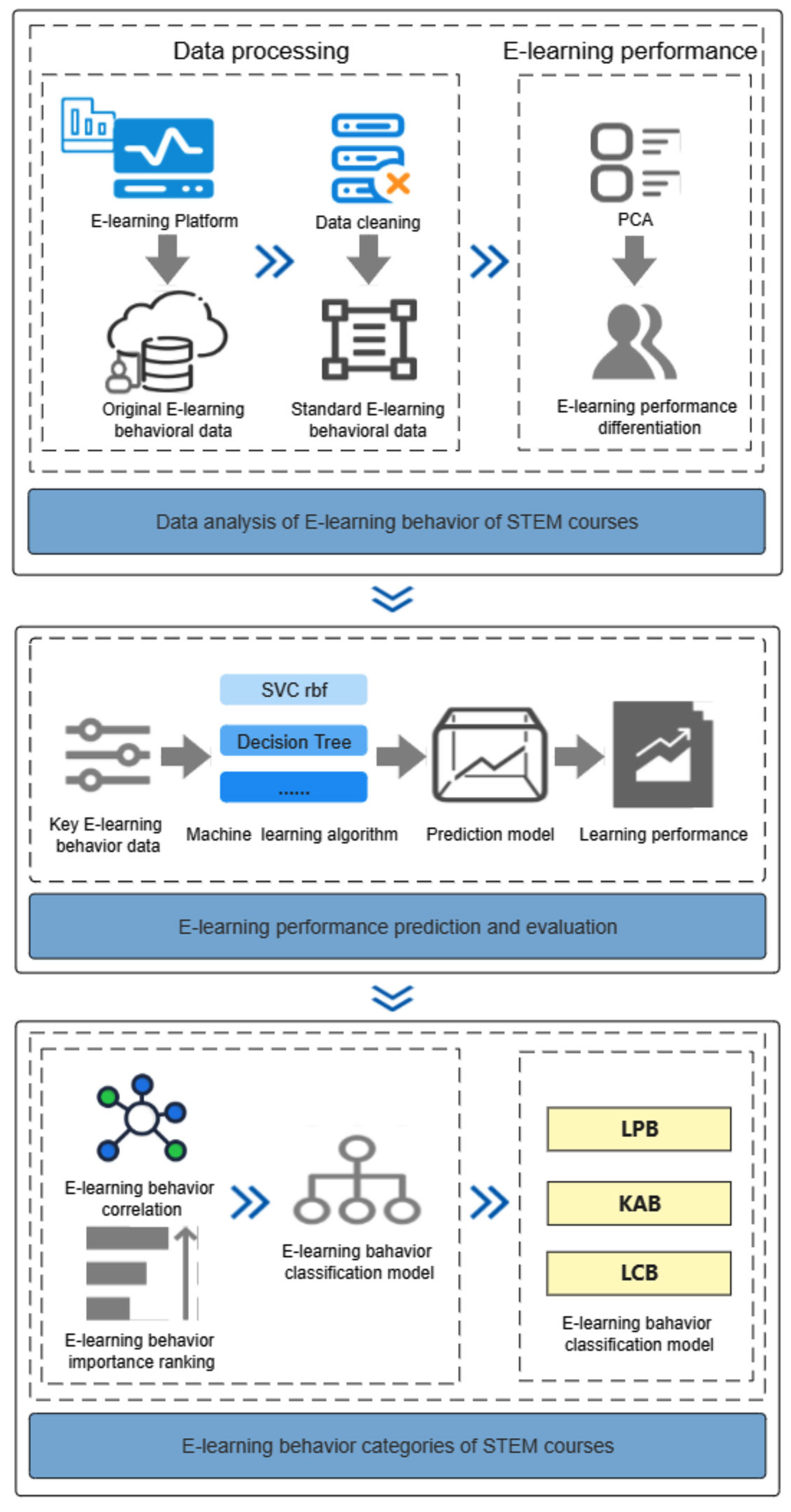

3.1. Research Design

3.2. Data Source

3.3. Data Preprocessing

3.4. Data Analysis

4. Results

4.1. Differentiation of E-Learning Performance of STEM Courses

4.2. Prediction and Evaluation of E-Learning Performance of STEM Courses

4.2.1. Experimental Scheme

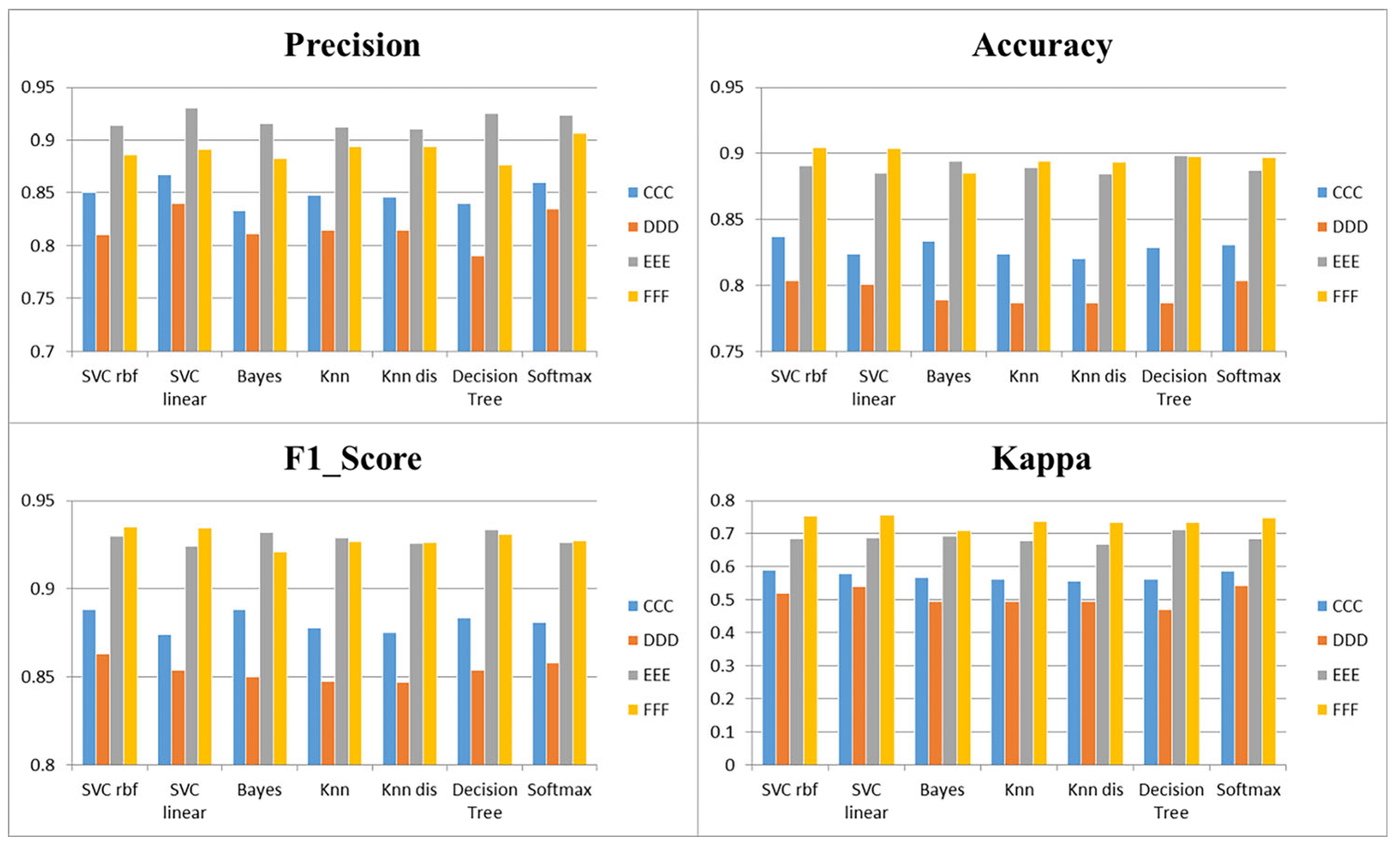

4.2.2. Experimental Results and Analysis

4.3. Learning Behavior Categories of E-Learning Performance of STEM Courses

4.3.1. Experimental Scheme

4.3.2. Experimental Results and Analysis

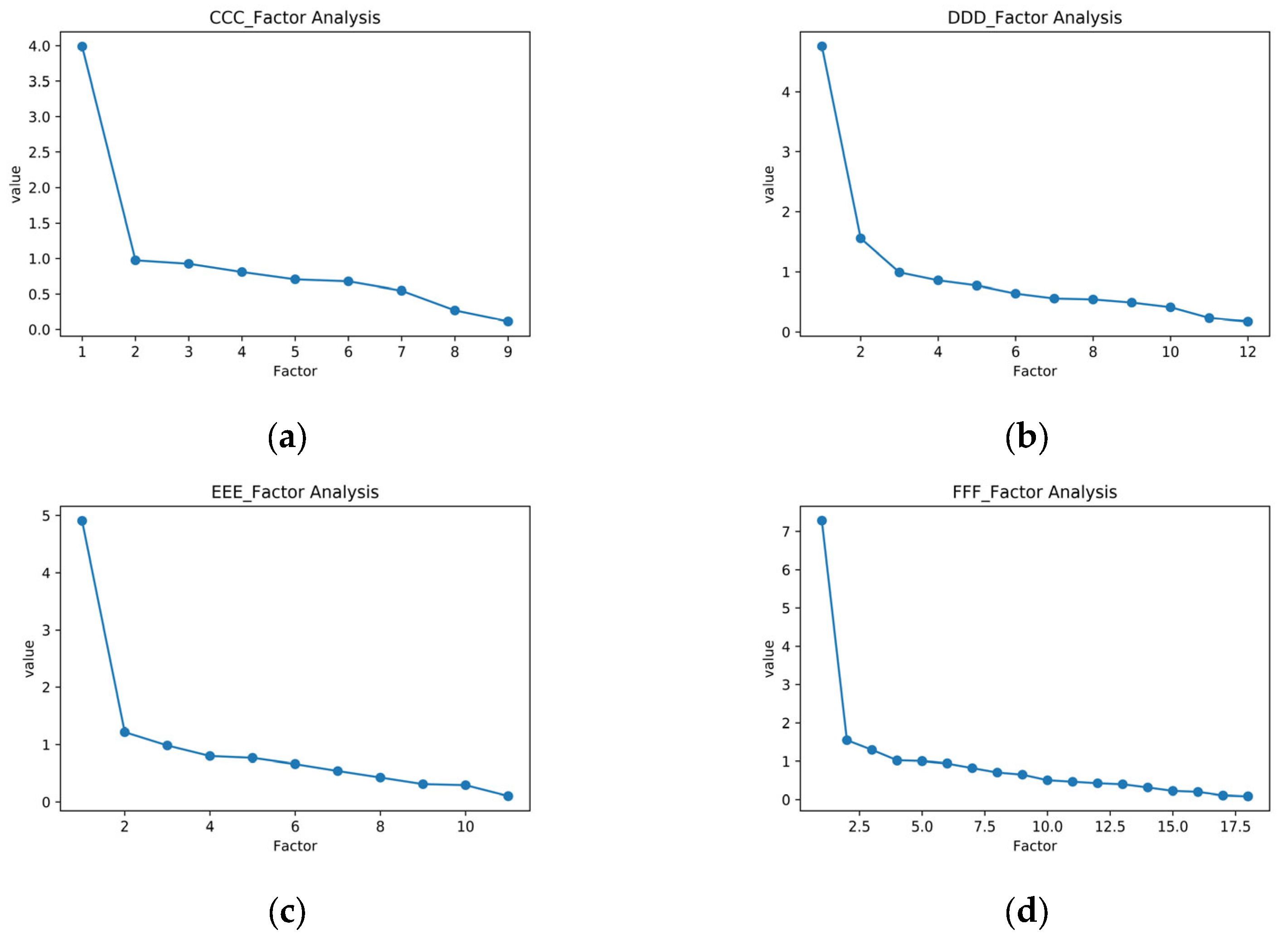

- Factorial Analysis

- 2.

- Importance Sequence of E-learning Behavior of STEM Courses

- 3.

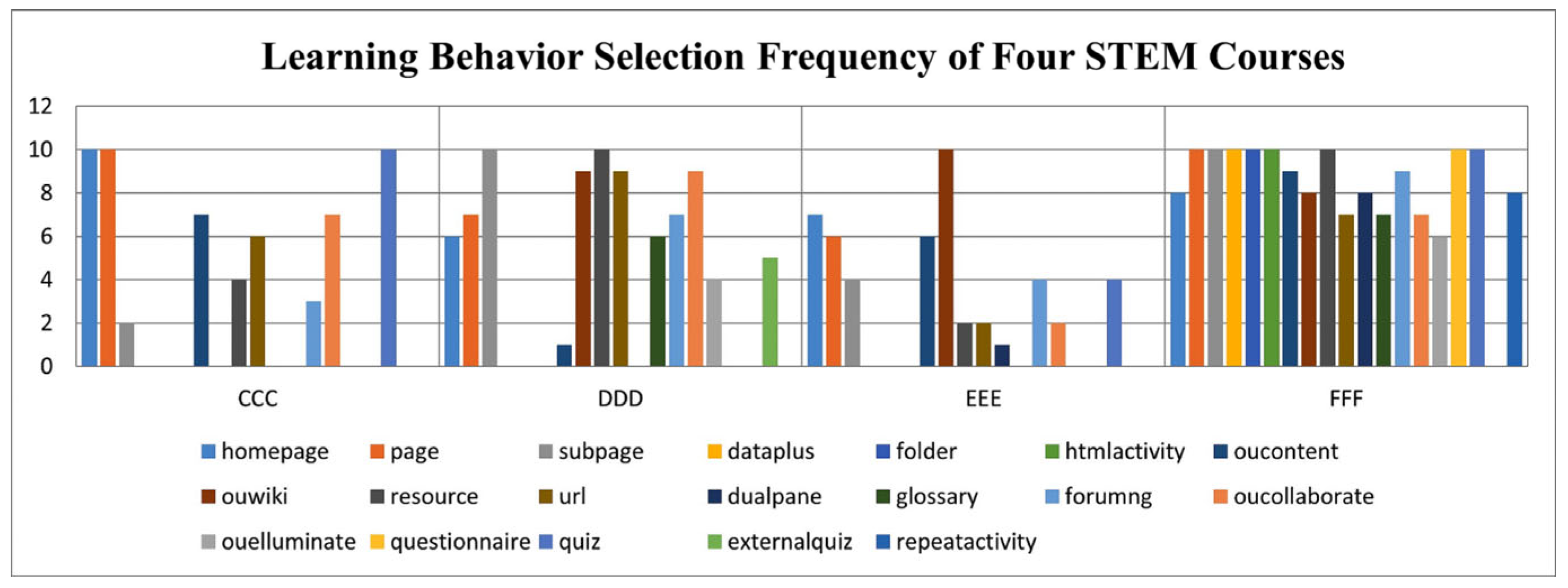

- Learning Behavior Categories of E-learning Performance of STEM Courses

5. Discussion

5.1. Learning Preparation Behavior

5.2. Knowledge Acquisition Behavior

5.3. Learning Consolidation Behavior

5.4. Interactive Learning Behavior

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

| Behavior Code | E-Learning Behavior | Explanation |
|---|---|---|
| HP | homepage | Access the main interface of the learning platform |
| PG | page | Access the course interface |
| SP | subpage | Access the course sub-interface |
| DA | dataplus | Supplementary data |
| FD | folder | Open folder |
| HA | htmlactivity | Web activity |
| CT | oucontent | Download platform resources |
| WK | ouwiki | Query with Wikipedia |
| RS | resource | Search platform resources |
| UR | url | Access course URL link |
| DU | dualpane | Access double window |
| GS | glossary | Access the glossary |
| FU | forumng | Participate in the course topic forum |
| CA | oucollaborate | Participate in collaborative exchange activities |
| EM | ouelluminate | Participate in simulation course seminars |
| QN | questionnaire | Participate in simulation course seminars |
| QZ | quiz | Test |
| EQ | externalquiz | Complete extracurricular quizzes |
| RP | repeatactivity | Repetitive activity |
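
The coding scheme in the table above can be read as a simple lookup from an activity-type string to its two-letter behavior code. The following is a minimal sketch of that lookup; the names `BEHAVIOR_CODES` and `encode_activity` are hypothetical and do not come from the paper, and the input is assumed to be a plain activity-type string such as `"quiz"`.

```python
from typing import Optional

# Activity type (middle column of the table) -> behavior code (first column).
BEHAVIOR_CODES = {
    "homepage": "HP",
    "page": "PG",
    "subpage": "SP",
    "dataplus": "DA",
    "folder": "FD",
    "htmlactivity": "HA",
    "oucontent": "CT",
    "ouwiki": "WK",
    "resource": "RS",
    "url": "UR",
    "dualpane": "DU",
    "glossary": "GS",
    "forumng": "FU",
    "oucollaborate": "CA",
    "ouelluminate": "EM",
    "questionnaire": "QN",
    "quiz": "QZ",
    "externalquiz": "EQ",
    "repeatactivity": "RP",
}


def encode_activity(activity_type: str) -> Optional[str]:
    """Return the behavior code for an activity type, or None if it is not in the table."""
    # Normalizing case and whitespace is an assumption about how raw logs might look.
    return BEHAVIOR_CODES.get(activity_type.strip().lower())
```

Activity types that are not listed in the table return `None`, so a caller can decide whether to drop or flag them.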

| Factor | CCC Course | DDD Course | EEE Course | FFF Course |
|---|---|---|---|---|
| HP | ✓ | ✓ | ✓ | ✓ |
| PG | ✓ | ✓ | ✓ | ✓ |
| SP | ✓ | ✓ | ✓ | ✓ |
| DA | ✓ | | | |
| FD | ✓ | | | |
| HA | ✓ | | | |
| CT | ✓ | ✓ | ✓ | ✓ |
| WK | ✓ | ✓ | ✓ | |
| RS | ✓ | ✓ | ✓ | ✓ |
| UR | ✓ | ✓ | ✓ | ✓ |
| DU | ✓ | ✓ | | |
| GS | ✓ | | | |
| FU | ✓ | ✓ | ✓ | ✓ |
| CA | ✓ | ✓ | ✓ | ✓ |
| EM | ✓ | ✓ | | |
| QN | ✓ | | | |
| QZ | ✓ | ✓ | ✓ | |
| EQ | ✓ | | | |
| RP | ✓ | | | |

| Learning Behavior Category | E-Learning Behavior | CCC Course | DDD Course | EEE Course | FFF Course |
|---|---|---|---|---|---|
| Learning preparation behavior (LPB) | HP | 10 | 6 | 7 | 8 |
| | PG | 10 | 7 | 6 | 10 |
| | SP | 2 | 10 | 4 | 10 |
| Knowledge acquisition behavior (KAB) | DA | 10 | | | |
| | FD | 10 | | | |
| | HA | 10 | | | |
| | CT | 7 | 1 | 6 | 9 |
| | WK | 9 | 10 | 8 | |
| | RS | 4 | 10 | 2 | 10 |
| | UR | 6 | 9 | 2 | 7 |
| | DU | 1 | 8 | | |
| | GS | 6 | 7 | | |
| Interactive learning behavior (ILB) | FU | 3 | 7 | 4 | 9 |
| | CA | 7 | 9 | 2 | 7 |
| | EM | 4 | 6 | | |
| Learning consolidation behavior (LCB) | QN | 10 | | | |
| | QZ | 10 | 4 | 10 | |
| | EQ | 5 | | | |
| | RP | 8 | | | |
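
The grouping in the table above assigns each behavior code to one of four categories (LPB, KAB, ILB, LCB). As an illustration only, the sketch below encodes that grouping and totals per-code click counts by category. The function name `clicks_per_category` and the input format (a dictionary from behavior code to click count) are assumptions made for this example, not something the paper specifies.

```python
# Category -> member behavior codes, as grouped in the table above.
CATEGORY_MEMBERS = {
    "LPB": ["HP", "PG", "SP"],
    "KAB": ["DA", "FD", "HA", "CT", "WK", "RS", "UR", "DU", "GS"],
    "ILB": ["FU", "CA", "EM"],
    "LCB": ["QN", "QZ", "EQ", "RP"],
}

# Inverted lookup: behavior code -> category.
CODE_TO_CATEGORY = {
    code: category
    for category, codes in CATEGORY_MEMBERS.items()
    for code in codes
}


def clicks_per_category(clicks_by_code):
    """Sum click counts per behavior category; codes outside the table are ignored."""
    totals = {category: 0 for category in CATEGORY_MEMBERS}
    for code, count in clicks_by_code.items():
        category = CODE_TO_CATEGORY.get(code)
        if category is not None:
            totals[category] += count
    return totals


# Hypothetical click counts for one learner (illustrative values, not from the dataset).
example = clicks_per_category({"HP": 5, "CT": 4, "FU": 3, "QZ": 2})
```

Every category appears in the result even when its total is zero, which keeps the output shape the same across courses that lack some behaviors.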

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Zhang, J.; Qiu, F.; Wu, W.; Wang, J.; Li, R.; Guan, M.; Huang, J. E-Learning Behavior Categories and Influencing Factors of STEM Courses: A Case Study of the Open University Learning Analysis Dataset (OULAD). Sustainability 2023, 15, 8235. https://doi.org/10.3390/su15108235
