E-Learning Readiness Assessment Using Machine Learning Methods

Abstract

1. Introduction

2. Related Works

3. Materials and Methods

3.1. ADKAR Model

- It considers the individual-level changes needed, such as the students’ awareness, desire, and ability to use e-learning platforms.

- It also addresses the organizational-level changes required, such as the availability of resources and support for e-learning implementation.

- It can be used to identify readiness gaps and develop a plan to address them.

3.2. ADKAR-Based Feature Selection via Random Forest and Decision Tree

3.2.1. Decision Tree-Based Variable Importance Identification

3.2.2. Identification of ADKAR Factors’ Importance Using RF

3.3. SHapley Additive exPlanations (SHAP) for Identifying the Importance of ADKAR Factors

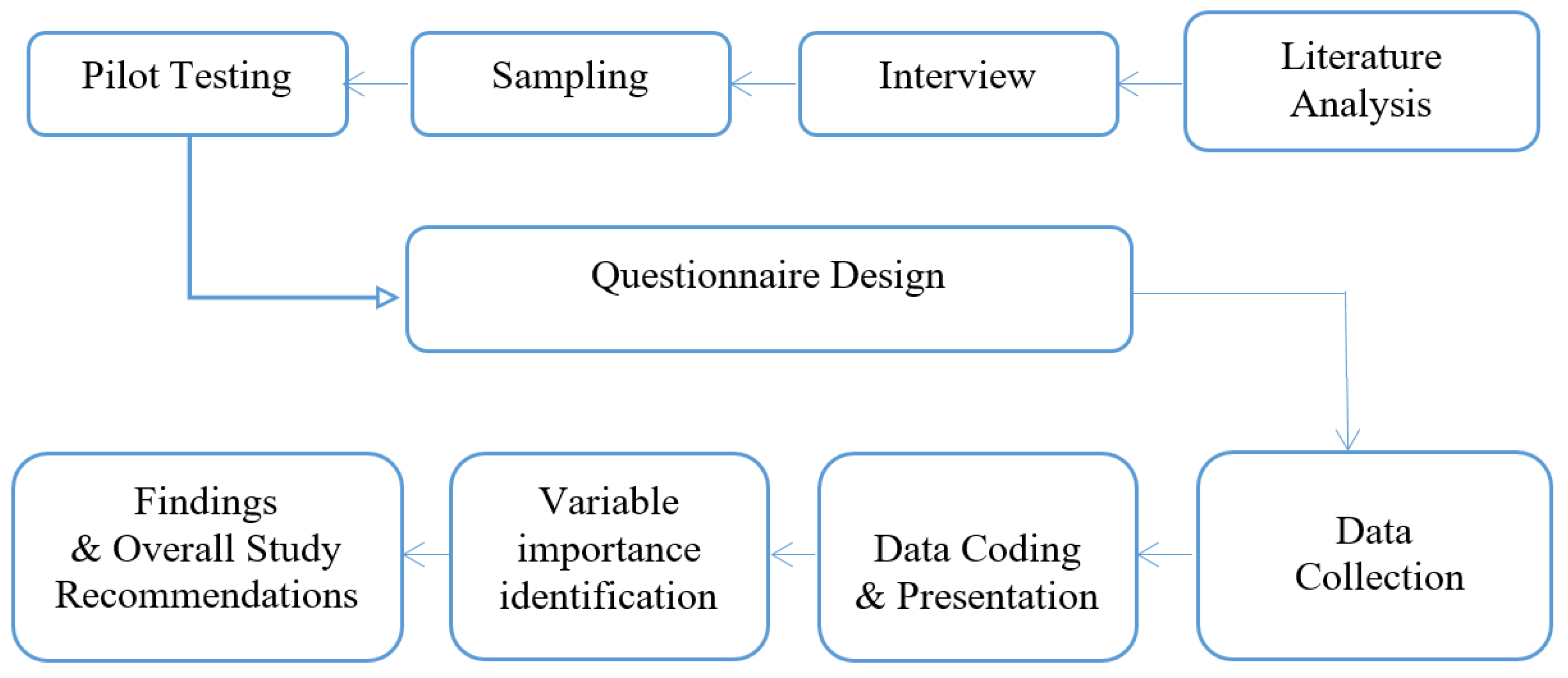

- Develop a questionnaire based on the ADKAR model and other relevant e-learning readiness (ELR) frameworks.

- Identify a representative sample of academic staff and students in the university.

- Administer the questionnaire to the identified participants in person or through online platforms.

- Collect and compile the responses from the participants.

- Use quantitative methods such as descriptive statistics and regression analysis to analyze the data.

- Apply random forest and decision tree algorithms to the ADKAR factors to identify the most important factors affecting ELR.

- Develop recommendations based on the study's findings that can be used to enhance ELR in Algerian universities, specifically at Tlemcen University.
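The document does not specify how the random forest and decision tree importances were computed. As one hypothetical illustration, the sketch below grows a single CART-style regression tree on per-participant ADKAR factor scores, treating readiness as a numeric target (an assumption), and credits each split's reduction in squared-error impurity to the feature it splits on. This mean-decrease-in-impurity score is the same impurity-based measure that scikit-learn exposes as `feature_importances_` for its tree models; a random forest averages it over many trees grown on bootstrap samples with random feature subsets, which this sketch omits. All function names and data are illustrative, not the authors' code.

```python
# Hypothetical sketch: impurity-based (mean decrease in impurity, MDI) variable
# importance from ONE CART-style regression tree, in pure Python.
# Not the authors' implementation; a random forest would average these scores
# over many bootstrapped trees grown on random feature subsets.
from typing import List, Optional, Tuple

Matrix = List[List[float]]


def node_sse(values: List[float]) -> float:
    """Squared-error impurity of a node: sum of squared deviations from the mean."""
    if not values:
        return 0.0
    mean = sum(values) / len(values)
    return sum((v - mean) ** 2 for v in values)


def best_split(X: Matrix, y: List[float], rows: List[int]) -> Optional[Tuple[int, float, float]]:
    """Return (feature index, threshold, impurity decrease) of the best split, or None."""
    parent = node_sse([y[r] for r in rows])
    best: Optional[Tuple[int, float, float]] = None
    for f in range(len(X[0])):
        # Candidate thresholds: every observed value except the largest,
        # so that both children are non-empty.
        for t in sorted({X[r][f] for r in rows})[:-1]:
            left = [y[r] for r in rows if X[r][f] <= t]
            right = [y[r] for r in rows if X[r][f] > t]
            gain = parent - node_sse(left) - node_sse(right)
            if best is None or gain > best[2]:
                best = (f, t, gain)
    return best


def tree_importance(X: Matrix, y: List[float], max_depth: int = 3, min_samples: int = 2) -> List[float]:
    """Grow a tree greedily, credit each split's impurity decrease to its
    feature, and normalise the totals so they sum to 1."""
    importance = [0.0] * len(X[0])

    def grow(rows: List[int], depth: int) -> None:
        if depth >= max_depth or len(rows) < min_samples:
            return
        split = best_split(X, y, rows)
        if split is None or split[2] <= 0:
            return
        f, t, gain = split
        importance[f] += gain
        grow([r for r in rows if X[r][f] <= t], depth + 1)
        grow([r for r in rows if X[r][f] > t], depth + 1)

    grow(list(range(len(y))), 0)
    total = sum(importance)
    return [v / total for v in importance] if total > 0 else importance


if __name__ == "__main__":
    # Hypothetical Likert-style scores: columns = [ability, desire]; target = readiness.
    X = [[1, 3], [2, 1], [3, 2], [4, 3], [5, 1]]
    y = [1, 2, 3, 4, 5]
    print(tree_importance(X, y))
```

In this toy data readiness equals the first column, so the importance concentrates on it; with real questionnaire data one would typically average over many trees (or use a library random forest) to reduce the variance of a single tree's ranking.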

4. Results and Discussions

4.1. Study Design

4.2. Study Model and Research Hypotheses

4.3. Data Collection

4.4. Sampling and Sample Size

4.5. Validity and Reliability

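- Step 1: Compute the score for each question by adding up the responses to that question across all participants: $S_j = \sum_{i=1}^{n} x_{ij}$, where $x_{ij}$ is the response of participant $i$ to question $j$.

- Step 2: Compute the total score for each participant by summing that participant's responses across all $k$ questions: $X_i = \sum_{j=1}^{k} x_{ij}$.

- Step 3: Calculate the mean and standard deviation of the total scores across all participants. To do so, we can use the following formulas:

  $$\bar{X} = \frac{1}{n}\sum_{i=1}^{n} X_i, \qquad s = \sqrt{\frac{1}{n-1}\sum_{i=1}^{n}\left(X_i - \bar{X}\right)^2},$$

  where $\bar{X}$ is the sample mean, $s$ is the sample standard deviation, $n$ is the sample size, and $X_i$ is the total score for participant $i$.

- Step 4: Calculate the variance of the responses to each item, $s_j^2$, and sum these item variances over all $k$ items in the survey.

- Step 5: Calculate the alpha coefficient using Equation (3):

  $$\alpha = \frac{k}{k-1}\left(1 - \frac{\sum_{j=1}^{k} s_j^2}{s^2}\right),$$

  where $k$ is the number of items and $s^2$ is the variance of the total scores from Step 3.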
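The five steps above can be condensed into a short function. The following is a minimal, hypothetical sketch in Python (the document does not name its software): it assumes a complete participants-by-items matrix with no missing answers and uses the sample variance (n - 1 denominator) throughout, matching the sample standard deviation of Step 3. The per-question sums of Step 1 enter only implicitly, through the item means inside each item variance. The function name and the example data are illustrative.

```python
# Minimal sketch of Cronbach's alpha for a participants-by-items matrix of
# Likert responses. Names and data are illustrative only.
from statistics import variance  # sample variance, n - 1 denominator
from typing import List


def cronbach_alpha(responses: List[List[float]]) -> float:
    """responses[i][j] is participant i's answer to item j."""
    n = len(responses)
    k = len(responses[0]) if responses else 0
    if n < 2 or k < 2:
        raise ValueError("need at least two participants and two items")
    # Step 2: total score of each participant across all items.
    totals = [sum(row) for row in responses]
    # Step 3: variance of the total scores (square of the sample standard deviation).
    total_var = variance(totals)
    if total_var == 0:
        raise ValueError("total scores have zero variance; alpha is undefined")
    # Step 4: variance of each item (column) across participants, summed over items.
    item_var_sum = sum(variance([row[j] for row in responses]) for j in range(k))
    # Step 5: Cronbach's alpha.
    return k / (k - 1) * (1 - item_var_sum / total_var)


if __name__ == "__main__":
    # Hypothetical data: 4 participants answering 3 five-point items.
    data = [[4, 5, 4], [3, 4, 3], [5, 5, 4], [2, 3, 2]]
    print(round(cronbach_alpha(data), 3))  # 0.975
```

Applied per construct (five items each), the same function would yield subscale coefficients of the kind reported for Awareness, Desire, Knowledge, Ability, and Reinforcement; applied to all 25 items, it gives the overall coefficient.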

Participants’ Characteristics

4.6. Data Analysis

- Figure 3 indicates a relatively high positive correlation (0.6645) between readiness and ability: students who report a higher level of ability also tend to report higher readiness for e-learning. This is plausible, because having the necessary skills and knowledge is a prerequisite for learning effectively through online platforms. In ADKAR terms, individuals who feel more capable of making the change also tend to feel more ready to make it. This is consistent with previous research showing that learners with a high level of technology literacy are more likely to succeed in e-learning environments.

- The second-highest correlation, 0.6282, was between readiness and knowledge, suggesting that sufficient knowledge of e-learning technologies and of the content being taught is also an essential factor in determining readiness. This highlights the importance of ensuring that learners have access to appropriate training and support to acquire the necessary knowledge and skills.

- The correlations between readiness and desire, awareness, and reinforcement were also significant but lower than for the other factors (Figure 3). This suggests that learners’ motivation to engage in e-learning, their awareness of the benefits of e-learning, and the support they receive from their peers and instructors also play a role in determining their readiness.

- Figure 3 also shows positive correlations among the five ADKAR factors themselves. The highest of these is between ability and desire (0.4731), which suggests that students who have the necessary skills for e-learning are more likely to be motivated to learn through online platforms. One possible explanation is that, as individuals gain confidence in using e-learning platforms, they become more interested in and motivated to use them to enhance their learning experiences. Conversely, individuals who strongly desire to use e-learning platforms may be more willing to invest the time and effort needed to develop the skills to use them effectively.

- The correlation of 0.3737 between ability and awareness indicates a moderate positive relationship between these two ADKAR factors (Figure 3). This may reflect that, as individuals become more proficient in using e-learning platforms, they also become more aware of the platforms' benefits and potential applications in their learning and work environments.
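The correlations discussed above can, in principle, be computed and ranked in a few lines. The sketch below assumes Pearson's product-moment coefficient calculated on per-participant factor scores (for example, the sum or mean of each factor's five items); the document does not state which coefficient or scoring underlies Figure 3, so both are assumptions, as are the function names and the toy data. It does not compute the p-values needed to judge statistical significance.

```python
# Sketch: Pearson correlation of each ADKAR factor score with a readiness score,
# ranked from highest to lowest r. Names and data are hypothetical.
from math import sqrt
from typing import Dict, List, Tuple


def pearson(x: List[float], y: List[float]) -> float:
    """Pearson's r between two equally long samples."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("samples must have equal length of at least 2")
    mx, my = sum(x) / len(x), sum(y) / len(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    if sxx == 0 or syy == 0:
        raise ValueError("correlation is undefined for a constant sample")
    return sxy / sqrt(sxx * syy)


def rank_by_correlation(target: List[float], factors: Dict[str, List[float]]) -> List[Tuple[str, float]]:
    """Correlate every factor with the target and sort by r, highest first."""
    scores = [(name, pearson(values, target)) for name, values in factors.items()]
    return sorted(scores, key=lambda item: item[1], reverse=True)


if __name__ == "__main__":
    readiness = [3, 4, 4, 5, 2, 4]
    factors = {
        "ability": [3, 4, 5, 5, 2, 4],
        "knowledge": [2, 4, 4, 4, 3, 5],
        "awareness": [4, 3, 4, 4, 4, 3],
    }
    for name, r in rank_by_correlation(readiness, factors):
        print(f"{name}: {r:.4f}")
```

Calling `pearson` on pairs of factors (for example, ability and desire) gives the inter-factor correlations in the same way.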

Feature Importance Identification

4.7. Discussion

5. Conclusions

- There is a need to increase awareness and knowledge of e-learning among faculty members and students through training and workshops.

- Universities should provide adequate resources and technical support to facilitate the adoption of e-learning.

- Universities should work on enhancing the design and development of e-learning courses to meet the needs and preferences of students.

- It is recommended that students be involved in the decision-making process and that their feedback on e-learning experiences be sought.

- Further research is needed to explore the impact of cultural and contextual factors on ELR in different regions and universities in Algeria.

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

| Variables | Cronbach’s Alpha | Number of Items | AVE (Average Variance Extracted) | CR (Composite Reliability) |
|---|---|---|---|---|
| Awareness | 0.835 | 5 | 0.580 | 0.879 |
| Desire | 0.808 | 5 | 0.575 | 0.880 |
| Knowledge | 0.792 | 5 | 0.576 | 0.864 |
| Ability | 0.680 | 5 | 0.555 | 0.859 |
| Reinforcement | 0.705 | 5 | 0.580 | 0.890 |
| Total | 0.886 | 25 | | |

| Characteristic | Category | Frequency | Percentage (%) |
|---|---|---|---|
| Gender | Male | 206 | 64.4 |
| | Female | 114 | 35.6 |
| | Total | 320 | 100 |
| Educational level | 1st year | 15 | 4.7 |
| | 2nd year | 141 | 44.1 |
| | Master’s | 3 | 0.9 |
| | Professor | 161 | 50.3 |

| 5-Point Likert Scale Response | Frequency | Percentage (%) |
|---|---|---|
| 2 | 9 | 2.8 |
| 3 | 25 | 7.8 |
| 4 | 235 | 73.4 |
| 5 | 51 | 15.9 |
| Total | 320 | 100 |

| Variables | Pearson |
|---|---|
| Gender | 0.841 |
| Education Level | 0.452 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Zine, M.; Harrou, F.; Terbeche, M.; Bellahcene, M.; Dairi, A.; Sun, Y. E-Learning Readiness Assessment Using Machine Learning Methods. Sustainability 2023, 15, 8924. https://doi.org/10.3390/su15118924
