4.1. Model Parameters

Taking a part of the data in the training set as the cross-validation set, the optimal parameters of each model were found with 5-fold cross-validation and a grid search algorithm.

Figure 6 illustrates the parameter-tuning process for the traditional ML and EL models. The prediction results of a traditional ML model (Lasso) are shown in Figure 6a. Figure 6(a1) is the scatter plot of the actual power (P) against the predicted power (P_pred) for Lasso, where Corr is the correlation coefficient; the closer it is to one, the better. The Corr of the model in this study was one, indicating a strong correlation.

Figure 6(a2) shows the scatter plot of the residuals between the actual and predicted power (P − P_pred) against the actual value (P), in which Std_resid represents the standard deviation of the residuals; the smaller it is, the more stable the model. There was a large deviation from the predicted value around P = 2500. In addition, Std_resid was 22.596, indicating that the model was unstable.

Figure 6(a3) shows the frequency distribution of the standardized residuals Z = (Resid − Mean_Resid)/Std_Resid. On the horizontal axis Z, Resid represents the residual between the predicted and actual power, Mean_Resid denotes the mean residual, and Std_Resid denotes the standard deviation of the residuals. A total of 212 samples had Z > 3, meaning that their residuals between P_pred and P deviated from the mean residual by more than three standard deviations. The smaller this count, the better, whereas a larger count indicates that some samples in the prediction have a large deviation. A count of 212 means that this model does not have a good tolerance for data with severe deviations.
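The standardized residual described above can be sketched in a few lines. This is only an illustration under stated assumptions: the toy data are hypothetical, the population standard deviation is used for Std_Resid, and samples are counted on both sides (|Z| > 3), whereas the text reports only Z > 3.

```python
from statistics import mean, pstdev

def standardized_residuals(actual, predicted):
    """Return Z = (Resid - Mean_Resid) / Std_Resid for each sample."""
    resid = [p - p_pred for p, p_pred in zip(actual, predicted)]
    mean_resid = mean(resid)
    std_resid = pstdev(resid)  # population standard deviation (assumption)
    return [(r - mean_resid) / std_resid for r in resid]

def count_outliers(actual, predicted, threshold=3.0):
    """Count samples whose standardized residual exceeds the threshold in magnitude."""
    zs = standardized_residuals(actual, predicted)
    return sum(abs(z) > threshold for z in zs)

# Hypothetical toy data: 19 perfectly predicted samples and one large miss.
actual = [100.0 + i for i in range(20)]
predicted = list(actual)
predicted[7] -= 50.0  # one sample under-predicted by 50 W

n_out = count_outliers(actual, predicted)
```

A model with fewer samples beyond the threshold is, in the sense used in this section, more tolerant of severely deviating data.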

Figure 6(a4) is the error bar diagram of the Lasso model parameter (alpha) against the evaluation indicator MSE (vertical axis: Score). As alpha increased, the score initially decreased and subsequently increased, and the variance of the model gradually increased. Similarly, the prediction results of an EL model (XGB) are shown in Figure 6b. Because the EL models have multiple parameters, the error bar graph is not displayed.

Tables S2 and S3 summarize the prediction results of the traditional ML and EL models, respectively. “Score” represents the model score, for which the scoring standard is the evaluation indicator MSE; CV_Mean is the mean MSE over the 5-fold cross-validation sets, and CV_Std is the corresponding standard deviation across the five folds.
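To make CV_Mean and CV_Std concrete, the following is a minimal sketch of a grid search with 5-fold cross-validation. It is not the procedure used in this study: to stay dependency-free it substitutes a one-feature ridge regression with a closed-form solution for the actual models, uses contiguous folds without shuffling, and runs on hypothetical data. All function names are illustrative.

```python
from statistics import mean, pstdev

def k_fold_indices(n_samples, k=5):
    """Split range(n_samples) into k contiguous folds of near-equal size."""
    sizes = [n_samples // k + (1 if i < n_samples % k else 0) for i in range(k)]
    folds, start = [], 0
    for size in sizes:
        folds.append(list(range(start, start + size)))
        start += size
    return folds

def fit_ridge_1d(xs, ys, alpha):
    """Closed-form slope of a one-feature ridge regression without intercept."""
    return sum(x * y for x, y in zip(xs, ys)) / (sum(x * x for x in xs) + alpha)

def mse(ys, preds):
    return mean([(y - p) ** 2 for y, p in zip(ys, preds)])

def grid_search_cv(xs, ys, alphas, k=5):
    """Return {alpha: (CV_Mean, CV_Std)} and the alpha with the lowest CV_Mean."""
    folds = k_fold_indices(len(xs), k)
    results = {}
    for alpha in alphas:
        scores = []
        for held_out in folds:
            held = set(held_out)
            train = [i for i in range(len(xs)) if i not in held]
            w = fit_ridge_1d([xs[i] for i in train], [ys[i] for i in train], alpha)
            scores.append(mse([ys[i] for i in held_out],
                              [w * xs[i] for i in held_out]))
        results[alpha] = (mean(scores), pstdev(scores))
    best_alpha = min(results, key=lambda a: results[a][0])
    return results, best_alpha

# Hypothetical noise-free data y = 2x, so the unpenalized fit should win.
xs = [float(i) for i in range(1, 21)]
ys = [2.0 * x for x in xs]
results, best_alpha = grid_search_cv(xs, ys, alphas=[0.0, 1.0, 10.0, 100.0])
```

A low CV_Mean indicates a small average error across folds, and a low CV_Std indicates that the error varies little from fold to fold, which is the sense of "stability" used here.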

Tables S2 and S3 show that the models based on traditional ML have poor stability, whereas the stability and tolerance for deviating data of the EL models are generally better. Among the EL models, XGB and AdaBoost show better stability. Tables S4 and S5 list the main parameters of the traditional ML and EL models obtained by cross-validation, respectively. Similarly, Table S6 shows the optimal parameters of the DL models.

4.2. Model Evaluation

After the parameters were tuned to their optimal values, each model was evaluated on the test set to assess its generalization ability.

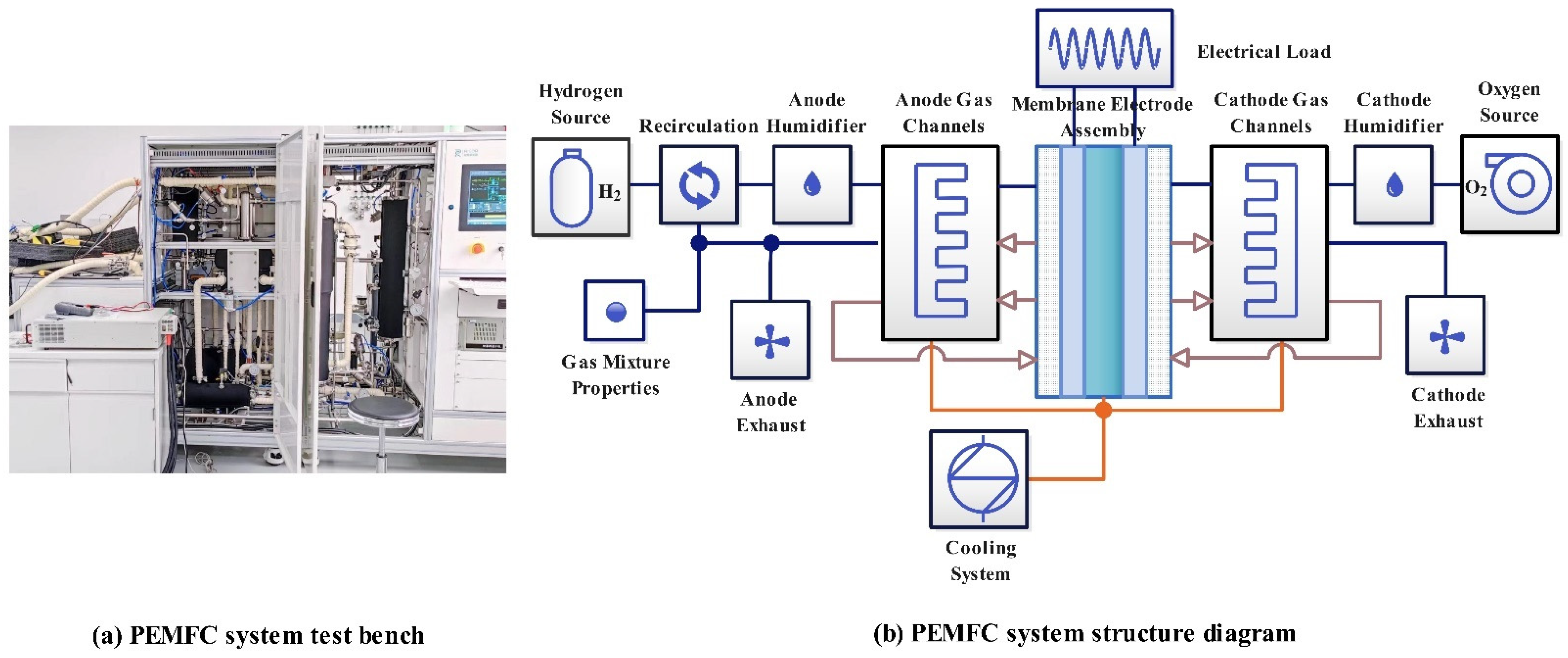

Table 8 summarizes the prediction results of the traditional ML methods. Large values of the evaluation indicators do not necessarily mean that the performance of a model is unsatisfactory; they should be interpreted in light of the actual situation. Because we used data measured on the PEMFC system test bench in the laboratory, as shown in Figure 1a, the power fluctuates within a range of 5 W under the influence of the various control systems and operating parameters. An error between the predicted and actual power within this range is therefore normal. Nevertheless, the traditional ML models have low prediction accuracy and are unsuitable for PEMFC power prediction in this study, because they are simple, have few parameters, and are poorly suited to large sample sizes.

Table 9 summarizes the prediction results of the EL models, among which XGB, an improved GBDT-based algorithm, performs best.

Table 10 summarizes the prediction results of the DL models. Among them, the CNN model has the highest accuracy: all six evaluation indicators are optimal, and its training time is the shortest, at only 0.7 s per iteration. Therefore, the CNN model predicts the power of the PEMFC best.

Figure 7a–c show the error curves of the CNN model, where “Epoch” represents the iteration cycle, “Loss function value” denotes the prediction error measured by the Huber loss function, “Test” is the test set error, and “Train” is the training set error. Using the parameters in Tables S4–S6, training was divided into three stages. In the first stage, a small learning rate would make the descent slow and require many iterations, which is time-consuming; therefore, we first set a larger learning rate so that the error decreases rapidly. In the second stage, once the error had been reduced to a certain range, a slightly smaller learning rate than in the first stage was used to prevent the model from skipping over the global optimum and falling into a local optimum. In the third stage, a larger learning rate would cause the error curve to fluctuate strongly around the global optimum, resulting in inaccurate predictions; therefore, a still smaller learning rate was used to obtain more accurate results.

Figure 7a shows the error curve of the first stage. The model parameters for this stage were set to learning_rate1 = 0.001, epochs1 = 1200, and weight_decay1 = 0.000000001.

Figure 7b shows the error curve of the second stage. The model parameters for this stage were set to learning_rate2 = 0.0006, epochs2 = 1200, and weight_decay2 = 0.0000000001.

Figure 7c shows the error curve of the third stage. The model parameters for this stage were set to learning_rate3 = 0.00006, epochs3 = 1500, and weight_decay3 = 0.0000000001. The figure shows that, as the number of iterations increased, the Huber loss reached its minimum at approximately 3900 cycles, with final errors of Train = 0.31 and Test = 0.56.
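The three-stage settings reported for Figure 7a–c can be written as a simple lookup, sketched below. The stage values are taken from the text; the assumption that the stages run back to back on the same model (which is consistent with the minimum near 1200 + 1200 + 1500 = 3900 cycles) and the helper name `settings_at` are illustrative, since the training loop itself is not given here.

```python
# Stage settings as reported for Figure 7a-c: (learning rate, epochs, weight decay).
STAGES = [
    (0.001, 1200, 1e-9),     # stage 1: larger learning rate for fast descent
    (0.0006, 1200, 1e-10),   # stage 2: slightly smaller learning rate
    (0.00006, 1500, 1e-10),  # stage 3: small learning rate for fine adjustment
]

def settings_at(epoch):
    """Return (stage number, learning rate, weight decay) for a global 0-based epoch."""
    start = 0
    for stage, (lr, n_epochs, weight_decay) in enumerate(STAGES, start=1):
        if epoch < start + n_epochs:
            return stage, lr, weight_decay
        start += n_epochs
    raise ValueError("epoch is beyond the last stage")

total_epochs = sum(n_epochs for _, n_epochs, _ in STAGES)
```

In a training loop, the optimizer's learning rate and weight decay would be reset from `settings_at(epoch)` whenever the stage number changes.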

Figure 7d shows the error between the power predicted by the CNN model and the actual power in the test set after training was completed. The error is low, indicating high fitting accuracy.

4.3. Model Results Discussion

This section discusses the parameters, prediction results, and applicability of the models.

Figure 6a–k show the parameter-tuning process for ML and EL; the results of the tuning process are given in Tables S2 and S3 (in Supplementary Material). Figure 7a–c show the CNN-based parameter-tuning process. The final optimal parameters of all algorithms are presented in Tables S4–S6. According to the model evaluation indices in Section 2.3, the smaller the values of MSE, RMSE, RAE, MAE, and MAPE, and the closer the value of R² to one, the better the performance of the model.
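For reference, the six indicators can be computed as in the sketch below. The definitions are the common textbook ones and are an assumption here; the exact formulas of Section 2.3 are not reproduced in this section. In particular, MAPE is returned as a fraction rather than a percentage, and RAE is taken relative to the mean of the actual values. The sample data are hypothetical.

```python
from math import sqrt

def regression_metrics(actual, predicted):
    """Compute MSE, RMSE, MAE, MAPE, RAE and R2 with common textbook definitions."""
    n = len(actual)
    mean_actual = sum(actual) / n
    abs_err = [abs(a - p) for a, p in zip(actual, predicted)]
    sq_err = [(a - p) ** 2 for a, p in zip(actual, predicted)]
    mse = sum(sq_err) / n
    return {
        "MSE": mse,
        "RMSE": sqrt(mse),
        "MAE": sum(abs_err) / n,
        # Fractional MAPE; requires all actual values to be nonzero.
        "MAPE": sum(e / abs(a) for e, a in zip(abs_err, actual)) / n,
        # Absolute error relative to that of always predicting the mean.
        "RAE": sum(abs_err) / sum(abs(a - mean_actual) for a in actual),
        "R2": 1.0 - sum(sq_err) / sum((a - mean_actual) ** 2 for a in actual),
    }

# Hypothetical power values in watts.
actual = [100.0, 200.0, 300.0, 400.0]
predicted = [110.0, 190.0, 310.0, 390.0]
metrics = regression_metrics(actual, predicted)
```

The five error indicators shrink toward zero and R2 approaches one as the predictions approach the actual values.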

(1) ML-based model discussion

Ridge uses L2 regularization and Lasso uses L1 regularization to mitigate overfitting. Elastic Net combines the two, using a weighted sum of the L1 and L2 penalties. LR, Ridge, and Lasso are essentially linear regression methods and are only applicable to linear relationships in the data. The data in this study were nonlinear, so these algorithms were not effective in separating signals from noise and had low prediction precision; therefore, they were not suitable for modeling.
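The relationship between the three penalties can be illustrated with a short sketch. The parameter names `alpha` and `l1_ratio` are assumptions chosen for illustration, and scaling conventions (for example, an extra factor of one half on the L2 term) differ between libraries.

```python
def l1_penalty(weights):
    """Lasso-style penalty: sum of absolute weights."""
    return sum(abs(w) for w in weights)

def l2_penalty(weights):
    """Ridge-style penalty: sum of squared weights."""
    return sum(w * w for w in weights)

def elastic_net_penalty(weights, alpha=1.0, l1_ratio=0.5):
    """Weighted mix of both penalties; l1_ratio=1 is pure L1, l1_ratio=0 is pure L2."""
    return alpha * (l1_ratio * l1_penalty(weights)
                    + (1.0 - l1_ratio) * l2_penalty(weights))

weights = [3.0, -4.0, 0.0]  # hypothetical coefficient vector
mixed = elastic_net_penalty(weights)
```

In each case the penalty is added to the data-fitting loss, so larger coefficients are discouraged; none of the penalties changes the fact that the fitted model is linear in the features.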

SVR resists overfitting on high-dimensional datasets and is therefore suitable for the multiple characteristic variables in this study. However, it is better suited to small datasets than to large ones. Owing to the considerable noise in the dataset in this study, SVR did not perform well. Moreover, the model is not easy to update when new data are added. Therefore, it was not suitable for modeling in this study.

KNN is computationally intensive, performs well on small datasets, and is not suitable for large datasets or for high-dimensional data such as those in this study. Moreover, the amount of data in this study was quite large, and KNN was not effective at separating signals from noise.

DT is fast in training and prediction, good at capturing nonlinear relationships, and good at handling the abnormal samples in our dataset. However, it is not suitable for small datasets, it is not easy to update when new data are added, and it may overfit. DT could not separate signals from noise in this study, and its prediction accuracy was average.

(2) EL-based model discussion

The structure of AdaBoost is simple, and we used DT as the weak learner. It is fast to train, has high accuracy, and is not prone to overfitting, but it is sensitive to abnormal samples. The abnormal samples in this study may have been given higher weights during the iterations, which affects the prediction accuracy of the final strong learner.

RF is an ensemble of multiple DTs. The model is randomized, noise-resistant, and fast to train in parallel. There were 10 features in this study, and the features with more possible split values tended to have a larger impact on the decisions of RF. The dataset was relatively noisy, and RF could not correctly handle the most difficult samples, so its performance was average.

GBR is computationally fast in the prediction phase, where the trees can be evaluated in parallel. We used DT as the weak learner, which makes GBR more robust and lets it automatically discover higher-order relationships between features. The model uses the Huber loss function, which is robust to outliers, and GBR generalizes relatively well to the densely distributed dataset. However, because each weak learner of GBR depends on the previous ones, training is difficult to parallelize, and the model does not perform as well as neural networks on high-dimensional sparse datasets (when handling numerical features).

XGB is an improved algorithm based on GBR, with improvements in three aspects: the algorithm itself, its operating efficiency, and its robustness. Table 9 and Table S5 show that XGB outperformed GBR, and it is the best of the EL algorithms for this prediction task.

(3) DL-based model discussion

The greater the number of layers and parameters in a neural network, the longer the training time. FFN is a fully connected neural network and therefore has more weight parameters. Compared with FFN, the sparse connections and weight-sharing strategy of the CNN reduce the complexity of the model and speed up training and prediction. The final prediction accuracy of the CNN was 0.96, and the correlation coefficient was 0.999999; both are higher than those obtained by FFN, and the prediction accuracy is the highest among all algorithms.
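The effect of sparse connections and weight sharing on model size can be seen by counting trainable parameters, as in the sketch below. The layer sizes are hypothetical and are not the architecture used in this study; they only illustrate why a convolutional layer needs fewer weights than a fully connected layer of comparable width.

```python
def dense_params(n_inputs, n_units):
    """Fully connected layer: one weight per input-unit pair plus one bias per unit."""
    return n_inputs * n_units + n_units

def conv1d_params(kernel_size, in_channels, out_channels):
    """1-D convolution: each filter shares its kernel weights across all positions."""
    return kernel_size * in_channels * out_channels + out_channels

# Hypothetical sizes: 20 input variables mapped to 64 units or 64 filters.
n_dense = dense_params(n_inputs=20, n_units=64)
n_conv = conv1d_params(kernel_size=3, in_channels=1, out_channels=64)
```

The convolutional count does not depend on the input length, because the same small kernel is reused at every position.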

(4) Performance comparison with previous methods

Table 11 compares the performance of the algorithm in this paper with state-of-the-art methods. Little literature on predicting PEMFC power with DL-based methods has appeared in recent years; Table 11 compares those DL-based methods with the method developed in this study.

In terms of the data source, most of the literature lacked actual PEMFC data, as most of the data were obtained from 3D modeling. This study used data measured on the PEMFC system test bench (3 kW) in the laboratory, which included 20 PEMFC characteristic variables, such as cell temperature, hydrogen and air flow, and hydrogen and air backpressure, to demonstrate the model’s reliability and applicability in real-world scenarios.

In terms of the algorithms compared, most of the literature compared only two or three algorithms to determine the optimal model for PEMFC power prediction, so the conclusions were not comprehensive enough. This paper complements previous work by comparing and optimizing more than ten mainstream conventional ML, EL, and DL methods to propose an optimal model for PEMFC power prediction, providing a strong basis for the selection and optimization of the model.

In terms of algorithm optimization, most of the literature did not use techniques to prevent overfitting, whereas this study used a dropout layer for this purpose. This study also used the Nadam optimization algorithm. As mentioned in the Optimization Model section, Nadam is an improvement on Adam; its stronger constraints on the learning rate can improve the performance of the optimization algorithm.

Most of the literature used the MSE loss function, whereas this work used the Huber loss function. As shown in the Structure of the Prediction Model section, Huber is very robust to abnormal samples and addresses the sensitivity of MSE to such samples. This work also chose the Swish activation function. As can be seen from the Optimization Model section, Swish avoids the drawback that the gradient of the ReLU function is zero for negative inputs. Most of the literature used the Tanh or Sigmoid activation function, whose gradients vanish prematurely in regions with large values, resulting in slow convergence of the model. The Swish function addresses these drawbacks.
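The two properties claimed above can be checked numerically with a small sketch. The threshold `delta = 1.0` for the Huber loss and the plain form of Swish, x · sigmoid(x), are assumptions, since the exact settings are given in other sections of the paper and not here.

```python
from math import exp

def huber(residual, delta=1.0):
    """Quadratic for |residual| <= delta, linear beyond it (delta is an assumption)."""
    a = abs(residual)
    return 0.5 * a * a if a <= delta else delta * (a - 0.5 * delta)

def squared_error(residual):
    """Half squared error, for comparison with the Huber loss."""
    return 0.5 * residual * residual

def sigmoid(x):
    return 1.0 / (1.0 + exp(-x))

def swish(x):
    """Swish activation in its plain form x * sigmoid(x)."""
    return x * sigmoid(x)

def swish_grad(x):
    """Derivative of x * sigmoid(x)."""
    s = sigmoid(x)
    return s + x * s * (1.0 - s)

def relu_grad(x):
    """Derivative of ReLU: zero for all negative inputs."""
    return 1.0 if x > 0 else 0.0

outlier_huber = huber(10.0)          # grows linearly with the residual
outlier_mse = squared_error(10.0)    # grows quadratically with the residual
neg_swish_grad = swish_grad(-1.0)    # nonzero for a negative input
neg_relu_grad = relu_grad(-1.0)      # zero for a negative input
```

For a residual of 10, the Huber loss is far smaller than the squared error, so a single abnormal sample dominates the total loss much less; for a negative input, Swish still passes a nonzero gradient where ReLU passes none.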

The CNN-based model in this paper has an R² of 0.9999 and a MAPE of 0.0004, which makes it the best-performing model among the methods compared in Table 11.